AI Model Validation vs. Robustness Testing in Healthcare

Post Summary

AI systems in healthcare must prove two things: they work as intended (validation) and they can handle unexpected changes (robustness testing). While validation evaluates accuracy, reliability, and safety for specific clinical tasks, robustness testing ensures models can perform consistently when conditions - like patient demographics, equipment, or data quality - change. Both are necessary to avoid risks like misdiagnoses and biased outcomes.

Key Points:

- Validation checks if an AI model performs its clinical job effectively and meets regulatory standards.

- Robustness testing identifies how well the model handles variations, errors, or adversarial inputs.

- Together, these practices reduce risks, improve patient safety, and build clinician confidence.

- Tools like Censinet RiskOps™ help integrate these processes into healthcare risk management systems.

Quick Overview:

- Validation focuses on clinical effectiveness and regulatory approval.

- Robustness testing ensures stability under changing or challenging conditions.

- Both require ongoing monitoring and cross-functional collaboration to maintain safety and reliability.

These practices are critical for ensuring AI systems in healthcare deliver safe, consistent, and effective results.

What Is AI Model Validation in Healthcare?

AI model validation in healthcare ensures that an AI system performs its intended clinical task accurately, reliably, and safely in its specific medical context [6][1]. Unlike general software testing or internal evaluations, validation focuses on determining whether the model is suitable for clinical use. This means confirming that it works effectively across real-world patient populations, workflows, and environments - not just on the data it was trained on [6][1].

Validation examines diagnostic metrics like sensitivity, specificity, and AUC, assesses reliability across different patient subgroups, and ensures the model aligns with its intended clinical purpose - whether for triage, diagnosis, or risk prediction. It also evaluates whether the model meets safety and regulatory standards required for clinical applications [1][4].

The difference between development and validation is crucial. Strong technical performance doesn’t always translate to clinical effectiveness. A model may excel in metrics but fail to improve outcomes or integrate safely into clinical workflows [1][3][6]. For instance, Epic’s sepsis prediction model missed nearly two-thirds of actual sepsis cases and generated numerous false positives when implemented at Michigan Medicine. This highlights why thorough, context-specific validation is critical before clinical use [7].

Validation not only supports patient safety but also identifies and addresses risks like misdiagnosis, inappropriate treatment recommendations, unequal performance across subgroups, and unsafe automation. Without proper validation, models can lead to errors, false positives, and performance issues in underrepresented groups, jeopardizing both safety and equity [7][2][3][6]. Tools like Censinet RiskOps™ can integrate validation documentation with risk management systems, providing ongoing oversight of AI solutions involving patient data, clinical applications, and connected devices [3][6].

This focus on validation lays the foundation for the different approaches used to ensure clinical applicability.

Types of AI Validation

Healthcare AI validation typically involves four key approaches, each playing a role in building evidence from algorithm performance to clinical outcomes. Together, they ensure the model is both effective and safe for real-world use.

- Internal validation: This step tests the model on held-out data from the same source used in development, such as through cross-validation or train-test splits. It helps detect overfitting and establishes baseline performance [6][1]. However, it doesn’t reveal issues like dataset shifts or hidden biases that may arise in other settings.

- External validation: Here, the model is tested on data from new sources - different institutions, geographies, devices, or time periods. This assesses its generalizability and ability to perform in varied care environments [1][4]. Increasingly, U.S. health systems are required to conduct local validation to confirm that a vendor’s model works with their specific EHR data, patient demographics, and workflows before clinical use [7].

- Technical validation: This approach focuses on performance metrics like ROC AUC, accuracy, precision-recall, calibration, and subgroup analyses [1][6]. For classification tasks, key metrics include sensitivity, specificity, and predictive values, often broken down by clinically relevant subgroups. For risk prediction or regression tasks, metrics like mean squared error and calibration plots are central [1]. The choice of metrics depends on the clinical context - life-threatening conditions might prioritize sensitivity, while specificity could be more important where false positives lead to invasive procedures.

- Clinical validation: Sometimes called clinical evaluation, this step determines whether the model improves real-world outcomes, workflows, or decision-making. It often involves randomized controlled trials, observational studies, or pilot testing in actual care settings [1][4]. Clinical validation examines impacts on patient outcomes, adverse events, clinician workload, and usability. Unlike earlier steps, it involves clinicians, regulators, and patients - not just data scientists and engineers.
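The classification metrics named under technical validation can be sketched in a few lines. This is a minimal illustration, not a validated implementation: the function names, the subgroup labels, and the toy data are all assumptions made for the example, and predictions are assumed to be already thresholded to 0/1.

```python
from collections import defaultdict


def confusion_counts(y_true, y_pred):
    """Count (tp, fp, tn, fn) for binary labels coded as 0/1."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1
        elif truth == 0 and pred == 1:
            fp += 1
        elif truth == 0 and pred == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def safe_div(numerator, denominator):
    """Return None instead of raising when a subgroup has no relevant cases."""
    return numerator / denominator if denominator else None


def diagnostic_metrics(y_true, y_pred):
    """Sensitivity, specificity, and predictive values from 0/1 predictions."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return {
        "sensitivity": safe_div(tp, tp + fn),
        "specificity": safe_div(tn, tn + fp),
        "ppv": safe_div(tp, tp + fp),
        "npv": safe_div(tn, tn + fn),
    }


def metrics_by_subgroup(y_true, y_pred, groups):
    """Break the same metrics down by a clinically relevant subgroup label."""
    buckets = defaultdict(lambda: ([], []))
    for truth, pred, group in zip(y_true, y_pred, groups):
        buckets[group][0].append(truth)
        buckets[group][1].append(pred)
    return {g: diagnostic_metrics(t, p) for g, (t, p) in buckets.items()}


# Fabricated toy data for illustration only (not from any study).
y_true = [1, 1, 1, 0, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0, 0, 0]
groups = ["under_65"] * 5 + ["65_plus"] * 5

overall = diagnostic_metrics(y_true, y_pred)
by_group = metrics_by_subgroup(y_true, y_pred, groups)
```

On this toy data, overall sensitivity is 0.6 but drops to 0.5 in the hypothetical `65_plus` group, which is the kind of gap a subgroup analysis is meant to surface.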

Regulatory and Governance Requirements

Validation isn’t just about reliability - it’s also a key part of meeting U.S. Food and Drug Administration (FDA) and institutional governance standards. The FDA views both analytical and clinical validation as critical for AI/ML-based Software as a Medical Device (SaMD), both in premarket submissions and in post-market updates to adaptive models [3][6].

The FDA requires models to consistently meet predefined performance targets using representative datasets and sound study designs. Validation must also demonstrate safety and effectiveness across intended populations and settings throughout the model’s lifecycle [3]. For higher-risk applications, such as autonomous diagnostic tools, the FDA expects more extensive validation, including prospective clinical studies. Decision-support tools, on the other hand, may rely on retrospective or observational data [3][4].

Hospitals and health systems also demand a thorough validation dossier. This includes details on the model’s intended use, data sources, validation methods, performance metrics (including subgroup analyses), comparisons with standard care, and limitations [4][6]. Additional documentation may cover clinical validation studies, workflow evaluations, cybersecurity assessments, and update policies [3][4].

Documentation and Evidence Requirements

Effective validation requires detailed documentation of data, methods, metrics, and limitations to meet regulatory and governance expectations [3][6][8].

For third-party AI solutions, healthcare organizations often require vendor-provided evidence aligned with FDA filings, along with responses to security and risk questionnaires. These materials should support integration into existing risk management systems [3][6]. Comprehensive documentation helps inform clinical decisions and ensures compliance with Joint Commission, CMS, and internal policies.

Validation processes should continue throughout the model’s lifecycle. This includes initial validation at deployment and re-validation when significant changes occur, such as model retraining, new data inputs, or deployment in new settings [3][6]. Validation evidence should be stored in structured repositories, ideally integrated with governance tools, to track versioned models, datasets, study protocols, and known risks [3][6]. For software-as-a-service models or continuously learning systems, hospitals need clear agreements on change control, re-validation triggers, and ongoing access to updated performance data [3][4].
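One way to make re-validation triggers concrete is to compare the configuration that was validated against what is currently deployed. The sketch below is illustrative only: `ValidationRecord`, its fields, and the trigger wording are hypothetical and would need to be mapped to an organization's own change-control policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidationRecord:
    """Hypothetical snapshot of what a validation study actually covered."""
    model_version: str
    input_fields: frozenset
    deployment_site: str


def revalidation_triggers(validated, current):
    """List the reasons (if any) the current deployment needs re-validation."""
    reasons = []
    if current.model_version != validated.model_version:
        reasons.append("model retrained or updated")
    if current.input_fields != validated.input_fields:
        reasons.append("data inputs changed")
    if current.deployment_site != validated.deployment_site:
        reasons.append("new deployment setting")
    return reasons


# Illustrative values only.
validated = ValidationRecord("1.0", frozenset({"heart_rate", "lactate"}), "site_a")
current = ValidationRecord("1.1", frozenset({"heart_rate", "lactate", "wbc"}), "site_a")

triggers = revalidation_triggers(validated, current)
```

An empty list means the deployed configuration still matches the validated one; any entry is a prompt for the governance process, not an automatic decision.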

What Is Robustness Testing of AI Models in Healthcare?

Robustness testing evaluates whether an AI model can maintain clinically safe performance when real-world conditions differ from its training environment. This includes changes in patient populations, data quality, workflows, or even deliberate attempts to manipulate inputs. Unlike standard accuracy tests on clean datasets, robustness testing pushes a model to its limits, asking questions like: What happens if imaging data come from a different scanner? What if lab values are noisy or missing? Can the system handle attempts to deceive it?

A model might excel during validation but fail in real-world scenarios. Its ability to handle distribution shifts, corrupted data, workflow changes, and adversarial attacks is critical for patient safety and system reliability. For instance, a radiology model could achieve a high AUC on a test set but lose sensitivity for certain patient groups when deployed in settings with different imaging protocols or demographics [2][7].

Robustness is a cornerstone of trustworthy AI in healthcare, standing alongside fairness and explainability. Frameworks like FUTURE-AI and the European Commission's ALTAI call for robustness and resilience against adversarial attacks in high-risk medical AI [2]. In the United States, healthcare organizations are incorporating robustness testing into pre-deployment evaluations, where privacy, bias, and robustness are assessed before AI systems are integrated into clinical workflows [3].

The focus of robustness testing extends beyond technical performance. It ensures the reliability of the entire AI system, including its data pipelines and user interactions, under the unpredictable conditions of real healthcare settings. This approach acknowledges that robustness depends not just on the model but also on how well it integrates with electronic health records (EHRs), medical devices, documentation practices, and clinical teams. Below, we’ll explore the key dimensions and methods that support effective robustness testing in healthcare.

Key Dimensions of Robustness

Healthcare organizations should evaluate robustness across several critical areas, each addressing specific risks to patient safety and operations:

- Data distribution shifts: This involves assessing how a model performs when patient demographics, disease prevalence, or care pathways differ from the training data. For example, models may falter when local demographics or clinical practices vary significantly, potentially leading to missed diagnoses or alert fatigue.

- Noise and missing data: Robustness to errors like measurement inaccuracies, transcription mistakes, or incomplete fields is essential. A reliable model should still make clinically sound predictions when working with imperfect data, a common scenario in U.S. EHR and device records.

- Adversarial or manipulated inputs: This dimension tests how a model reacts to deliberately altered inputs, such as subtly modified images or adjusted data fields. Metrics like robust accuracy (performance on manipulated inputs) and attack success rate (the percentage of adversarial attempts that cause errors) are used to gauge resilience [7][5].

- Environmental and workflow changes: Models must adapt to evolving clinical workflows, new lab panels, updated imaging protocols, or revised triage systems. These changes can challenge a model’s consistency as hospital systems upgrade or reconfigure their services [2][3].

- Subpopulation and site variability: Ensuring consistent performance across diverse patient groups - differing by age, race, ethnicity, comorbidities, or care settings - is crucial to avoid inequities in care. U.S. regulators and AI frameworks emphasize this alongside fairness and transparency [2][7].
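The two adversarial metrics mentioned above, robust accuracy and the success rate of adversarial attempts (commonly called attack success rate), can be computed from three aligned lists of labels. This is a sketch under one stated assumption: an "attempt" is counted only for inputs the model classified correctly before manipulation, which is a common convention but not the only one. The data is fabricated for illustration.

```python
def robust_accuracy(y_true, y_pred_adv):
    """Fraction of manipulated inputs that are still classified correctly."""
    correct = sum(1 for t, a in zip(y_true, y_pred_adv) if t == a)
    return correct / len(y_true)


def attack_success_rate(y_true, y_pred_clean, y_pred_adv):
    """Among inputs classified correctly when clean, the fraction that the
    manipulation turns into an error. Returns None if there are no attempts."""
    attempts = [
        (t, a)
        for t, c, a in zip(y_true, y_pred_clean, y_pred_adv)
        if c == t  # assumption: only clean-correct inputs count as attempts
    ]
    if not attempts:
        return None
    flipped = sum(1 for t, a in attempts if a != t)
    return flipped / len(attempts)


# Fabricated toy labels for illustration only.
y_true       = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred_clean = [1, 0, 1, 0, 0, 0, 1, 0]  # predictions on unmodified inputs
y_pred_adv   = [0, 0, 1, 0, 1, 0, 1, 0]  # predictions on manipulated inputs

rob_acc = robust_accuracy(y_true, y_pred_adv)
asr = attack_success_rate(y_true, y_pred_clean, y_pred_adv)
```

Here robust accuracy is 5/8 and two of the seven clean-correct inputs are flipped. How the manipulated inputs are generated is outside the scope of this sketch.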

Testing Methods for Robustness

Robustness testing combines stress-testing, local validation, and adversarial probing to identify potential failures before deployment.

- Stress-testing and local evaluation: This involves creating worst-case but realistic scenarios, such as high-noise lab results, incomplete patient histories, or unusual clinical presentations. Testing models with actual hospital data is crucial, as centralized benchmarks may not reflect local demographics or practices. For example, variables like vital signs can be programmatically altered to assess their impact on metrics like AUROC, sensitivity, and positive predictive value. Stratifying results by factors such as race, age, or comorbidities helps identify at-risk groups [1][2][7].

- Adversarial testing: This approach generates manipulated inputs designed to mislead the model without obvious changes visible to clinicians. Automated adversarial input generation and red teaming exercises are common techniques. For instance, subtle alterations in imaging data could lead to misclassification. A 2024 survey of cancer imaging AI stakeholders found that only 16.7% of technical respondents consistently tested for adversarial robustness [4].

- Failure mode cataloging: Documenting error-prone conditions - like rare disease presentations, overnight labs, or specific imaging devices - helps organizations create safeguards. These insights guide governance decisions and risk management strategies. Platforms like Censinet RiskOps™ streamline the documentation and tracking of robustness assessments, linking findings to enterprise risk controls and cybersecurity programs.
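The stress-testing idea of programmatically altering a vital sign and re-measuring performance can be sketched as follows. Everything here is an assumption for illustration: `toy_model` is a hypothetical rule standing in for a deployed model, the field names and thresholds are invented, and the records are fabricated. A systematic offset is used to mimic a miscalibrated device.

```python
def toy_model(record):
    """Hypothetical stand-in for a deployed model: flags high-risk patients."""
    return 1 if record["heart_rate"] >= 100 or record["temp_c"] >= 38.0 else 0


def sensitivity(records, labels, model):
    """Share of true positive cases that the model flags."""
    tp = fn = 0
    for record, label in zip(records, labels):
        if label == 1:
            if model(record) == 1:
                tp += 1
            else:
                fn += 1
    return tp / (tp + fn) if (tp + fn) else None


def shift_field(records, field, offset):
    """Return copies of the records with a constant offset added to one field."""
    return [{**r, field: r[field] + offset} for r in records]


def stress_test(records, labels, model, field, offsets):
    """Re-measure sensitivity under each perturbation level."""
    return {
        off: sensitivity(shift_field(records, field, off), labels, model)
        for off in offsets
    }


# Fabricated records for illustration only.
records = [
    {"heart_rate": 112, "temp_c": 37.2},
    {"heart_rate": 103, "temp_c": 37.0},
    {"heart_rate": 101, "temp_c": 38.4},
    {"heart_rate": 88, "temp_c": 38.6},
    {"heart_rate": 76, "temp_c": 36.8},
    {"heart_rate": 95, "temp_c": 37.1},
]
labels = [1, 1, 1, 1, 0, 0]

results = stress_test(records, labels, toy_model, "heart_rate", [0, -5, -15])
```

With this toy data, sensitivity falls from 1.0 to 0.75 to 0.5 as the heart-rate reading is biased downward by 0, 5, and 15 beats per minute. In practice the same loop would be repeated per subgroup and for other metrics such as positive predictive value.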

Robustness in Cybersecurity and Algorithmic Safety

Robustness testing also plays a critical role in cybersecurity and algorithmic safety. It identifies vulnerabilities that could compromise clinical decisions, expose sensitive data, or disrupt workflows. This testing informs measures like input validation, monitoring for unusual usage patterns, vendor risk assessments, and incident response plans [2][7][5]. For example, if adversarial testing reveals a diagnostic model’s sensitivity to small input changes, stricter data validation or human review for borderline cases might be implemented.

Tools like Censinet RiskOps™ enable healthcare organizations to map AI dependencies and assess risks related to cybersecurity, third-party interactions, and enterprise operations. By centralizing robustness evidence and fostering collaboration across clinical, IT, security, and compliance teams, such platforms ensure robustness testing becomes a core component of risk management, safeguarding patients, data, and clinical workflows.

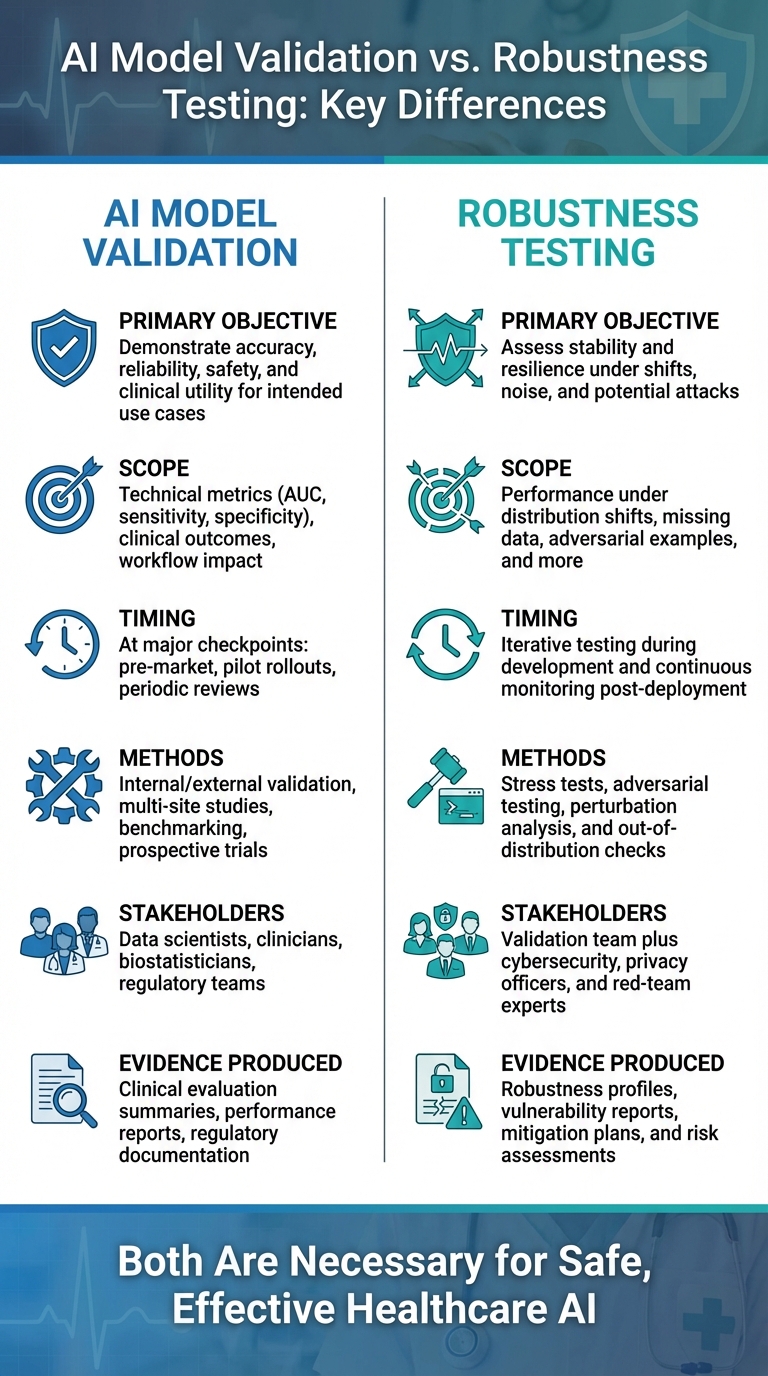

AI Model Validation vs. Robustness Testing: Key Differences


Although both validation and robustness testing evaluate AI models in healthcare, they address different objectives. Validation ensures clinical benefit, while robustness testing focuses on sustained performance amid change. In essence, validation verifies that the model is accurate, reliable, safe, and clinically effective for its intended use. On the other hand, robustness testing examines how the model performs when faced with data shifts, workflow changes, or even deliberate interference.

These distinctions influence how each process is applied throughout the AI lifecycle. Validation takes place at key milestones - such as before regulatory approval, during pilot deployments, and at scheduled re-evaluations - and produces performance metrics and safety reports. Robustness testing is more continuous: it runs iteratively during development and after deployment to catch threats like data drift or environmental changes, and its outputs, such as stress test results and mitigation strategies, feed directly into risk management. The teams involved also differ: validation typically includes data scientists, clinicians, biostatisticians, and regulatory experts, while robustness testing expands this group to include cybersecurity professionals, privacy officers, red-team specialists, and risk management teams.

Comparison Table: Validation vs. Robustness Testing

Here's a side-by-side breakdown of the key differences:

| Dimension | AI Model Validation | Robustness Testing |
|---|---|---|
| Primary objective | Demonstrate accuracy, reliability, safety, and clinical utility for intended use cases | Assess stability and resilience under shifts, noise, and potential attacks |
| Scope | Technical metrics (AUC, sensitivity, specificity), clinical outcomes, workflow impact | Performance under distribution shifts, missing data, adversarial examples, and more |
| Timing | At major checkpoints: pre-market, pilot rollouts, periodic reviews | Iterative testing during development and continuous monitoring post-deployment |
| Methods | Internal/external validation, multi-site studies, benchmarking, prospective trials | Stress tests, adversarial testing, perturbation analysis, and out-of-distribution checks |
| Stakeholders | Data scientists, clinicians, biostatisticians, regulatory teams | Validation team plus cybersecurity, privacy officers, and red-team experts |
| Evidence produced | Clinical evaluation summaries, performance reports, regulatory documentation | Robustness profiles, vulnerability reports, mitigation plans, and risk assessments |

Regulatory and Governance Implications

These differences hold significant weight in regulatory and governance contexts. U.S. regulations, particularly from the FDA, emphasize a total product lifecycle approach for AI/ML-enabled medical devices. This requires clear evidence of clinical performance, real-world monitoring, and change management. Validation artifacts - like external validation studies and subgroup performance reports - are pivotal for regulatory submissions and device approvals.

Robustness testing, while not always explicitly required, is equally crucial for maintaining safety in real-world conditions. It addresses challenges like adaptive algorithm behavior and cyber threats, ensuring performance remains stable across various scenarios. Frameworks such as ALTAI and FUTURE-AI highlight robustness as a core principle, alongside fairness and explainability. Tools like Censinet RiskOps™ integrate both validation and robustness findings into enterprise risk assessments, addressing both clinical and cybersecurity concerns across the AI lifecycle.

Why Both Are Necessary

A model can perform exceptionally during validation but fail in practice if robustness is overlooked. For instance, a study on AI validation practices in cancer imaging revealed that only 16.7% of respondents "always" checked for adversarial robustness [4]. This gap highlights a major risk: a diagnostic model might achieve high AUC scores on clean test data but falter when imaging protocols change, patient demographics shift, or data quality deteriorates.

Both validation and robustness testing are indispensable before deployment. Validation confirms the model’s clinical reliability for a specific purpose, while robustness testing prepares for real-world variables, such as diverse patient populations, different imaging equipment, or adversarial manipulation. Together, they ensure AI systems not only excel in controlled settings but also adapt safely to evolving conditions, safeguarding patients, workflows, and sensitive data.


Integrating Validation and Robustness Testing into Healthcare AI Governance

AI Governance Frameworks and Best Practices

Frameworks like the FDA's Total Product Lifecycle, FUTURE-AI, and ALTAI emphasize the need for continuous oversight of AI systems from their inception to retirement. These frameworks tie governance objectives directly to two critical areas: validation for clinical safety and robustness testing for resilience under real-world conditions.

To put these frameworks into action, healthcare organizations in the U.S. should establish clear governance goals linked to measurable outcomes. This involves defining risk-based requirements and implementing staged evaluations - such as sandbox trials, pilot tests, and scaled rollouts. At each stage, validation milestones and robustness testing protocols should be rigorously applied. A cross-functional AI review committee, including clinicians, data scientists, risk managers, compliance officers, and cybersecurity experts, should collaboratively assess validation results and robustness testing outcomes before granting production approval. This multidisciplinary review helps identify and mitigate risks early, before a model reaches patients.

Role of Risk Management Platforms

In addition to robust governance frameworks, specialized risk management platforms play a vital role in securing AI systems. For example, Censinet RiskOps™ centralizes critical data such as validation evidence, robustness test results, and risk assessments into an organized, auditable system. This platform simplifies the process by standardizing both internal and vendor-facing AI questionnaires, capturing essential details like validation methods, robustness strategies, and security measures.

Censinet RiskOps™ integrates seamlessly with procurement and security workflows, ensuring that AI solutions cannot move forward without comprehensive validation and robustness documentation. The platform also automates reminders for re-validation or robustness re-testing whenever software updates, data sources, or clinical indications change. As Terry Grogan, CISO at Tower Health, shared:

"Censinet RiskOps allowed 3 FTEs to go back to their real jobs! Now we do a lot more risk assessments with only 2 FTEs required."

This streamlined process not only enhances efficiency but also enables organizations to scale their AI governance efforts without needing to proportionally increase staff.

Continuous Monitoring and Algorithmovigilance

Validation and robustness testing aren’t "one-and-done" tasks - they’re part of an ongoing governance cycle. This is where algorithmovigilance - the continuous monitoring of AI performance - becomes essential. Real-time or near-real-time dashboards should track key performance metrics such as AUC, sensitivity, calibration drift, and alert override rates using current patient data. Automated drift detection can identify significant shifts in input distributions, patient demographics, or outcome prevalence that might undermine earlier validation results.
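Automated drift detection on an input distribution can be sketched with the Population Stability Index (PSI), one of several possible drift statistics and not a method the sources above prescribe. The bin edges, the alert threshold, and the age values below are assumptions for illustration; PSI thresholds in the 0.1 to 0.25 range are commonly quoted rules of thumb and should be tuned locally.

```python
import math


def bin_fractions(values, edges, eps=1e-6):
    """Fraction of values in each bin defined by sorted edges.
    Empty bins are floored at eps so the logarithm below stays defined."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(1 for e in edges if v >= e)  # index of the bin containing v
        counts[idx] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]


def population_stability_index(expected, actual, edges):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    e = bin_fractions(expected, edges)
    a = bin_fractions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Fabricated patient ages for illustration only.
baseline_ages = [34, 45, 52, 61, 67, 38, 49, 58, 72, 41]  # validation cohort
current_ages = [66, 71, 78, 59, 83, 69, 74, 62, 80, 55]   # recent patients
age_edges = [40, 50, 60, 70]

PSI_ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb threshold, tune locally

psi = population_stability_index(baseline_ages, current_ages, age_edges)
drift_detected = psi > PSI_ALERT_THRESHOLD
```

A flagged shift like this one, where recent patients skew much older than the validation cohort, does not prove the model is failing; it signals that earlier validation results may no longer apply and that a targeted re-check is warranted.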

Regularly re-running robustness test suites on updated datasets - including synthetic noise, altered inputs, and simulated documentation changes - helps ensure AI models remain resilient as clinical environments evolve. Governance committees should review these monitoring reports at set intervals, with the authority to adjust thresholds, limit indications, or even suspend models if performance or robustness falls below acceptable levels. All decisions and actions should be meticulously logged in the organization's AI risk management platform, creating a clear audit trail and ensuring compliance during regulatory reviews.

To round out the governance process, clinician feedback and incident reporting should be integrated. Combining technical metrics with frontline experiences ensures a more comprehensive approach to safety and reliability assessments.

Conclusion

Validating AI models ensures they fulfill their clinical purpose, proving they can perform their intended function and potentially enhance patient outcomes. On the other hand, robustness testing examines how these models handle varied, unpredictable real-world conditions [1][2].

Together, these practices play a critical role in protecting patient safety and maintaining clinician confidence in AI-powered healthcare. Relying on just one approach is risky. Validation alone might produce systems that excel in controlled settings but falter when faced with the complexity of diverse U.S. clinical environments. Conversely, focusing solely on robustness testing could lead to models that lack proven clinical effectiveness or fail to meet regulatory standards. Both validation and robustness testing are essential to ensure AI tools remain reliable and effective across the healthcare landscape.

Incorporating these practices into AI governance frameworks - such as the FDA's Total Product Lifecycle, FUTURE-AI, and ALTAI - is becoming increasingly important. U.S. healthcare organizations should establish cross-functional oversight committees, create clear validation and robustness protocols, and implement continuous monitoring systems (often called algorithmovigilance). These systems help track performance, detect issues like model drift, and prompt re-validation or updates as clinical environments change [2][3]. Such proactive oversight is essential to keep pace with the evolving needs of healthcare.

Beyond compliance, adopting these practices offers strategic advantages. Organizations that integrate robust validation, thorough robustness testing, and structured governance - using tools like Censinet RiskOps™, which centralize evidence and simplify compliance - are better positioned to meet regulatory demands, earn the trust of clinicians and patients, and achieve long-term clinical and financial success with AI. In essence, validation and robustness testing are not just regulatory necessities; they are key to fostering scalable, trustworthy innovation in healthcare AI.

FAQs

What is the difference between AI model validation and robustness testing in healthcare, and how do they work together?

AI model validation is all about making sure a model lives up to specific standards for accuracy, compliance, and performance before it’s put to use. On the flip side, robustness testing examines how well the model holds up when faced with unpredictable, real-world scenarios - like unusual data inputs or sudden changes in conditions.

In healthcare AI, these two processes go hand in hand. Validation ensures the model is dependable, while robustness testing checks if it can tackle the complexities of real-world applications. Together, they play a crucial role in minimizing risks, enhancing patient safety, and fostering confidence in AI-driven healthcare solutions.

What challenges make it difficult to ensure AI models stay reliable in real-world healthcare settings?

AI models face several hurdles when it comes to staying dependable in healthcare settings. One major challenge is dealing with the variability in real-world data. This variability makes it tough for models to generalize their predictions effectively. On top of that, rare or entirely new cases can push even the most thoroughly trained models to their limits.

Inconsistent data quality adds another layer of complexity. Patient records may be incomplete or contain errors, which can directly affect how well an AI system performs. There's also the challenge of keeping up with changes in clinical practices and healthcare environments. These shifting factors mean that AI models need constant monitoring and updates to ensure they remain accurate and relevant.

Why is ongoing monitoring essential for AI models in healthcare?

Monitoring healthcare AI models regularly is essential to promptly catch and fix any performance issues, biases, or errors that might put patient safety or data security at risk. This practice helps ensure these models remain accurate, dependable, and in sync with evolving clinical practices and regulatory requirements.

By keeping a close eye on AI systems, healthcare organizations can reduce the risks tied to outdated or potentially weak models. This proactive approach not only protects the quality of care but also reinforces trust in the technology.