Beyond Policy: Creating AI Governance That Adapts and Evolves

Post Summary

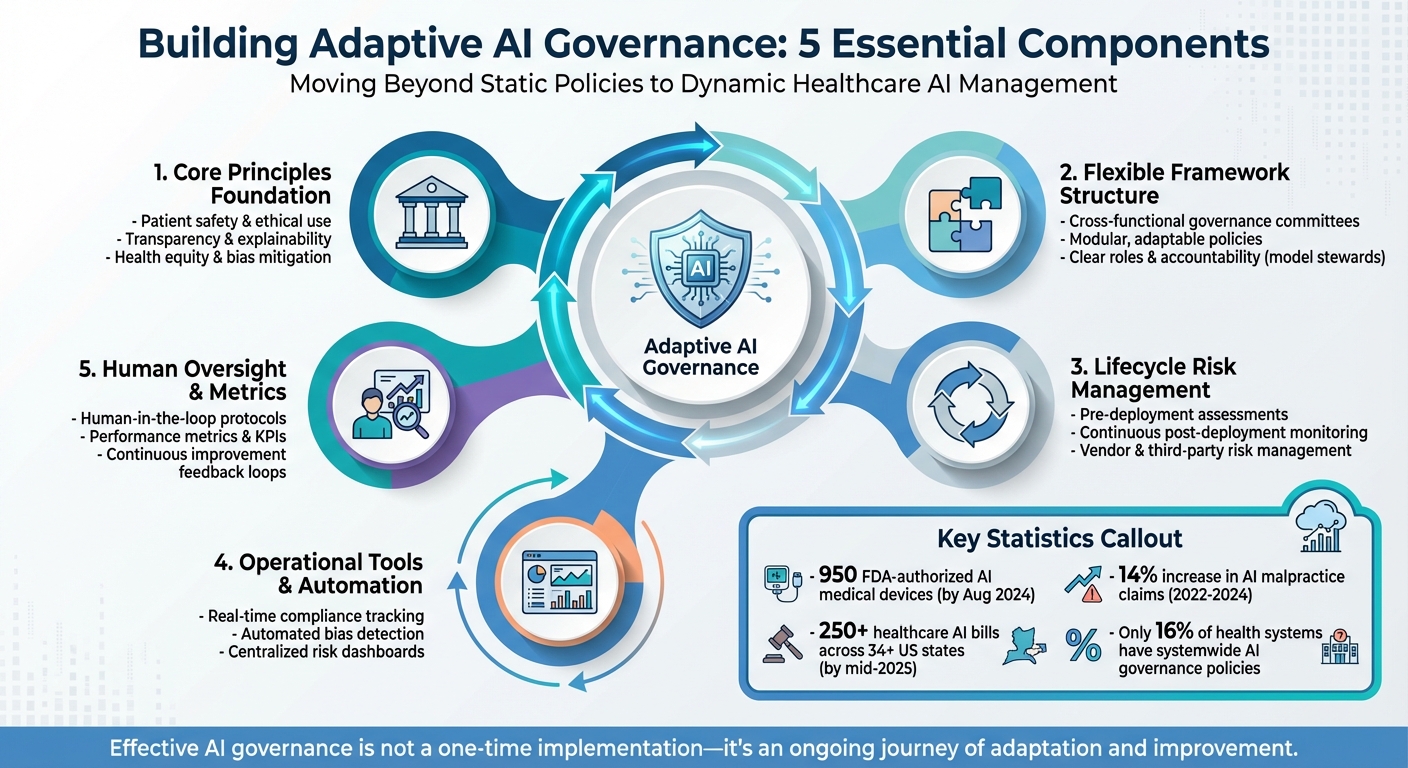

Healthcare organizations face a major challenge: AI systems change constantly, making traditional governance methods ineffective. Static policies can’t keep up with evolving AI risks, regulatory updates, or technological shifts. This is especially critical in healthcare, where patient safety, privacy, and equity are at stake.

To manage AI effectively, organizations need governance frameworks that:

- Prioritize patient safety and ethical use.

- Ensure transparency, explainability, and accountability.

- Include continuous monitoring and feedback loops.

- Assign clear roles and responsibilities for oversight.

- Use modular policies to adapt to specific needs and regulations.

Tools like Censinet RiskOps™ help automate monitoring, detect issues early, and integrate human oversight for better decision-making. A flexible approach ensures AI systems remain safe, compliant, and effective over time.

Five Core Components of an Adaptive AI Governance Framework in Healthcare

Core Principles of Evolving AI Governance

Creating an effective AI governance framework in healthcare requires a foundation rooted in principles that prioritize patient well-being, maintain trust, and adapt to advances in AI. These principles are essential for ensuring governance remains effective and responsive over time.

Patient-Centered Governance Priorities

At the heart of AI governance lies the commitment to patient safety, clinical effectiveness, and health equity. These priorities ensure AI systems deliver meaningful clinical benefits without causing harm. For example, The Permanente Medical Group (TPMG) implemented ambient AI scribes across 17 centers in Northern California between October 2023 and December 2024. This initiative involved 7,260 physicians, saved approximately 15,000 hours of documentation time, and logged over 2.5 million uses in a year. Importantly, the rollout was voluntary, required patient consent, did not retain raw audio, and adhered to strict privacy guidelines under ethics committee oversight[4].

Protecting sensitive patient data throughout the AI lifecycle is non-negotiable. Governance must also address health equity, ensuring AI systems do not worsen existing disparities or create new ones. This requires rigorous ethical oversight, including consultations and boards focused on identifying and addressing biases and inequities[2][4].

Transparency, Explainability, and Accountability

In addition to patient-focused priorities, AI systems in healthcare must be transparent and accountable. Clear decision-making processes and well-defined accountability are critical in such settings. As Jee Young Kim and colleagues from the Duke Institute for Health Innovation emphasize:

"Robust AI governance ensures that AI solutions are transparent, equitable, and aligned with ethical and regulatory standards. It mitigates risks, safeguards patient safety, and supports the responsible integration of AI into healthcare."[2]

Transparency fosters trust by providing clinicians, patients, and administrators with insights into how AI systems function. This includes explaining the data used, assumptions made, and system limitations. Many AI models operate as "black boxes", making it difficult to detect errors. Explainable AI (XAI) techniques are vital for helping users understand the reasoning behind AI decisions[2][4].
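To make explainability concrete, here is a minimal Python sketch of permutation importance. This is one simple, model-agnostic XAI technique among many; the post does not prescribe a specific method. The sketch shuffles one input feature at a time and measures how much accuracy drops. The function names, the toy model, and the data are hypothetical illustrations, not part of any vendor's tooling.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]
Model = Callable[[Row], int]


def accuracy(model: Model, X: List[Row], y: List[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[Row], y: List[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Mean accuracy drop when each feature column is shuffled on its own.

    A large drop means the model relies on that feature; a drop near zero
    means the feature barely influences its predictions.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by its shuffled value.
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row: Row) -> int:
    """Hypothetical model that only looks at feature 0."""
    return int(row[0] > 0.5)


X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [toy_model(row) for row in X]
importances = permutation_importance(toy_model, X, y)
print(importances)  # feature 1 scores 0.0 because the model ignores it
```

Because the toy model never reads the second feature, shuffling it cannot change any prediction, which is exactly the kind of signal a reviewer can use to check whether a model relies on the inputs it is supposed to.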

Accountability requires collaboration among diverse stakeholders. Policies should be written in plain language, clearly outlining roles, responsibilities, and decision-making processes. Cross-functional AI governance committees - including experts in legal, clinical, technical, and ethical domains - are essential for maintaining accountability across the organization[1][4].

Continuous Learning and Feedback Loops

AI systems are not static - they evolve, learn, and adapt to new data. As a result, governance must be an ongoing process. Regular monitoring and timely updates based on feedback, system performance, and regulatory changes are crucial for ensuring AI systems remain safe and effective[2][4][5].

A pilot project conducted in early 2024 highlighted the importance of continuous clinician feedback and real-time performance monitoring[4].

Neglecting continuous learning poses significant risks. For instance, model drift - when real-world data diverges from the data used to train the system - can degrade performance and jeopardize patient safety. Through constant monitoring, auditing, and governance updates, organizations can identify and address potential issues before they impact patients, ensuring long-term safety and reliability well beyond initial deployment[1][3].
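One common way to quantify model drift is the population stability index (PSI), which compares how a feature or score is distributed in training data versus production data. The sketch below is a minimal Python version under stated assumptions: the bin edges and sample data are hypothetical, and the 0.1 and 0.25 cut-offs in the comment are widely used rules of thumb rather than a clinical or regulatory standard.

```python
import bisect
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float],
                    eps: float = 1e-6) -> List[float]:
    """Share of values in each bin [edges[i], edges[i+1]).

    Out-of-range values are clamped into the first or last bin, and empty
    bins are floored at eps so the log term below stays finite.
    """
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               edges: Sequence[float]) -> float:
    """PSI = sum over bins of (a - e) * ln(a / e); 0.0 means identical binned shares."""
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Rule of thumb (assumption, not a regulatory threshold):
# < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 significant shift worth review.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]                       # hypothetical bins for a risk score
training_scores = [i / 100 for i in range(100)]            # spread evenly over [0, 1)
production_scores = [0.5 + i / 200 for i in range(100)]    # shifted toward high scores
print(population_stability_index(training_scores, training_scores, edges))    # 0.0
print(population_stability_index(training_scores, production_scores, edges))  # well above 0.25
```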

Building a Flexible AI Governance Framework

Creating a flexible AI governance framework requires a solid structure with clearly defined responsibilities, one that can adapt to evolving technologies, regulations, and risks. By establishing clear roles and crafting modular policies, healthcare organizations can build a framework that oversees AI systems effectively.

Establishing Governance Roles and Responsibilities

The first step in building effective AI governance is forming a cross-functional committee. This group should include representatives from clinical leadership, data science, IT, cybersecurity, compliance, legal, privacy, ethics, and operations. Each member brings a unique perspective:

- Data scientists evaluate model performance and fairness.

- Clinical leaders ensure AI systems align with ethical standards.

- Compliance officers monitor regulatory adherence.

- IT leaders oversee system integration and data security.

This committee should report to a higher-level body, like a Digital Health Committee, to maintain strategic alignment. Additionally, appointing "model stewards" for each high-impact AI system ensures clear ownership and accountability. These stewards play a vital role in maintaining oversight throughout the AI lifecycle, reinforcing principles like safety, equity, efficacy, security, and compliance.
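A model inventory is one simple way to make steward ownership checkable. The Python sketch below assumes a hypothetical inventory format (the `AISystem` fields and the system names are illustrative) and flags any high-impact system that has no named steward.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AISystem:
    """One entry in a hypothetical AI inventory kept by the governance committee."""
    name: str
    high_impact: bool
    steward: Optional[str] = None  # named owner accountable across the lifecycle
    reports_to: str = "Digital Health Committee"  # higher-level oversight body


def missing_stewards(inventory: List[AISystem]) -> List[str]:
    """Names of high-impact systems that still lack an assigned model steward."""
    return [s.name for s in inventory if s.high_impact and not s.steward]


inventory = [
    AISystem("ambient-scribe", high_impact=True, steward="CMIO office"),
    AISystem("sepsis-alert", high_impact=True),
    AISystem("scheduling-assistant", high_impact=False),
]
print(missing_stewards(inventory))  # ['sepsis-alert']
```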

Modular Policies for Scalability

Once roles are defined, modular policies allow organizations to update specific governance components - such as privacy, security, bias mitigation, or transparency - without overhauling the entire framework. This approach is especially critical in healthcare, where regulations can vary widely by state. For example, by mid-2025, over 250 healthcare AI-related bills had been introduced across more than 34 states in the U.S. [6]. Modular policies make it easier to respond to these state-specific requirements while supporting innovation and timely implementation of AI systems.
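The modular idea can be sketched as a baseline set of policy modules plus small per-state overlays that change only the settings a jurisdiction requires. In this Python sketch the module names, the settings, and the `STATE_X`/`STATE_Y` overlays are hypothetical placeholders, not summaries of any actual state law.

```python
from typing import Dict

Policy = Dict[str, Dict[str, object]]

# Hypothetical baseline modules; each can be revised and versioned on its own.
BASE_POLICY: Policy = {
    "privacy": {"retain_raw_audio": False, "patient_consent_required": True},
    "transparency": {"disclose_ai_use_to_patients": False},
    "bias_mitigation": {"subgroup_audit_interval_days": 180},
}

# Hypothetical state overlays listing only the settings a jurisdiction changes.
STATE_OVERLAYS: Dict[str, Policy] = {
    "STATE_X": {"transparency": {"disclose_ai_use_to_patients": True}},
    "STATE_Y": {"bias_mitigation": {"subgroup_audit_interval_days": 90}},
}


def effective_policy(state: str) -> Policy:
    """Apply one state's overlay to a copy of the baseline, module by module."""
    merged = {module: dict(settings) for module, settings in BASE_POLICY.items()}
    for module, overrides in STATE_OVERLAYS.get(state, {}).items():
        merged.setdefault(module, {}).update(overrides)
    return merged


print(effective_policy("STATE_X")["transparency"])  # {'disclose_ai_use_to_patients': True}
```

Because each module is merged independently, a new disclosure rule in one state touches only that state's `transparency` overlay; the baseline and every other state stay unchanged.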

Integrating Governance into Existing Risk Management Structures

For governance to remain effective and dynamic, it must integrate seamlessly with existing risk management systems. AI oversight functions best when woven into enterprise risk management (ERM), clinical governance, and IT security processes rather than existing as a standalone system. For example:

- Expanding the data governance frameworks already used in clinical research to include standards for AI training data can help ensure datasets are complete, representative, and checked for bias.

- Consolidating AI compliance with existing reporting structures centralizes oversight, improves accuracy, and enables real-time monitoring.

Embedding Risk Management Across the AI Lifecycle

To ensure AI systems operate safely and effectively, risk management must be integrated into every stage of their lifecycle, from initial design through ongoing operations. This is especially critical in healthcare, where risks can arise at multiple points: incomplete or biased training data, algorithms that exacerbate health disparities, opaque "black box" models, and implementation failures caused by poor planning or misuse [7]. Between 2022 and 2024, AI-related malpractice claims rose by 14% [8], underscoring the need for robust, proactive risk management throughout the process.

Pre-Deployment Risk Assessments

Before launching an AI system, conducting thorough risk assessments is essential. This includes activities like threat modeling, adversarial testing, and privacy impact reviews to identify potential vulnerabilities. For example, red-teaming exercises simulate adversarial scenarios to uncover weaknesses before systems are deployed [7]. These evaluations should begin early, during the planning stages, and include a detailed review of third-party AI partners and vendors [9][10]. Organizations must also assess the quality of training data, test for algorithmic bias across diverse demographic groups, and ensure the model’s interpretability. Laying this groundwork helps establish a solid foundation for ongoing oversight after deployment.
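Testing for algorithmic bias across demographic groups can start with something as simple as comparing sensitivity (true positive rate) between groups, sometimes called an equal-opportunity gap. This Python sketch shows one illustrative metric among many; the labels, predictions, and group names are hypothetical, and the acceptable gap is a local policy decision.

```python
from collections import defaultdict
from typing import Dict, Sequence


def true_positive_rates(y_true: Sequence[int], y_pred: Sequence[int],
                        groups: Sequence[str]) -> Dict[str, float]:
    """Sensitivity (true positives / actual positives) for each group.

    Groups with no actual positives are omitted, since their rate is undefined.
    """
    tp: Dict[str, int] = defaultdict(int)
    pos: Dict[str, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            pos[g] += 1
            tp[g] += int(p == 1)
    return {g: tp[g] / pos[g] for g in pos}


def max_tpr_gap(y_true: Sequence[int], y_pred: Sequence[int],
                groups: Sequence[str]) -> float:
    """Largest sensitivity difference between any two groups."""
    rates = true_positive_rates(y_true, y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical validation results for two demographic groups, A and B.
y_true = [1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 0, 1]
groups = ["A", "A", "A", "B", "B", "B", "A", "B"]
print(true_positive_rates(y_true, y_pred, groups))  # A catches every positive, B one in three
print(max_tpr_gap(y_true, y_pred, groups))
```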

Post-Deployment Monitoring and Incident Response

Once an AI system is operational, continuous monitoring becomes critical. Key performance metrics should be used to track issues like model drift, bias, and anomalies. Anjella Shirkhanloo, Contributor at IAPP, emphasizes:

"AI systems should be monitored throughout their life cycle, not just during initial deployment. This requires integrating real-time model auditing, bias detection and compliance drift tracking to flag anomalies before they lead to regulatory violations or reputational damage" [1].

Healthcare organizations should also implement a robust incident reporting system to log algorithm malfunctions, unexpected outcomes, or patient complaints tied to AI tools. This helps teams identify recurring issues and underlying weaknesses [10]. By integrating monitoring into existing incident response workflows, organizations can ensure timely resolutions. Additionally, this oversight extends to managing risks associated with third-party tools, which is a critical area of focus.
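A structured incident log makes recurring issues easier to spot. The sketch below assumes a hypothetical record format (tool, category, and a 1 to 5 severity scale) and surfaces tool and category pairs that keep recurring, plus any single high-severity incident that should escalate immediately.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Incident:
    tool: str      # AI tool involved
    category: str  # e.g. "malfunction", "unexpected_outcome", "patient_complaint"
    severity: int  # 1 (minor) to 5 (patient harm); the scale is an assumption


def recurring_issues(incidents: List[Incident],
                     min_count: int = 3) -> List[Tuple[str, str, int]]:
    """(tool, category, count) for pairs reported at least min_count times, most frequent first."""
    counts = Counter((i.tool, i.category) for i in incidents)
    return [(tool, cat, n) for (tool, cat), n in counts.most_common() if n >= min_count]


def needs_immediate_review(incidents: List[Incident], min_severity: int = 4) -> List[Incident]:
    """High-severity incidents escalate on their own, without waiting for a pattern."""
    return [i for i in incidents if i.severity >= min_severity]


# Hypothetical log entries.
log = [Incident("ambient-scribe", "unexpected_outcome", 2)] * 3 + [
    Incident("triage-model", "malfunction", 4)
]
print(recurring_issues(log))        # [('ambient-scribe', 'unexpected_outcome', 3)]
print(needs_immediate_review(log))  # the single triage-model malfunction
```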

Managing Vendor and Third-Party AI Risks

Third-party AI tools come with their own set of challenges, requiring careful management. During procurement, vendors should be evaluated for security, privacy, and compliance risks. Contracts must include clauses requiring transparency about model updates, training data, and regular audits [1][10]. Alarmingly, only 16% of health systems currently have governance policies addressing AI use and data access on a systemwide level [10], leaving many vulnerable to vendor-related risks. Beyond contractual safeguards, organizations need contingency plans for potential AI system failures. These should include data access protocols, alternative workflows, and communication strategies for when systems are offline or compromised [10]. Holding vendors accountable is essential to maintaining control over AI-driven clinical decisions that directly impact patient safety.
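The contractual and contingency safeguards described above can be tracked as an explicit checklist per vendor. In this Python sketch the item identifiers are hypothetical labels derived from this section, not terms from any standard contract template.

```python
from typing import Dict, Set

# Hypothetical labels for the safeguards named in this section.
REQUIRED_CONTRACT_CLAUSES = {
    "model_update_transparency",
    "training_data_transparency",
    "regular_audits",
}
REQUIRED_CONTINGENCY_ITEMS = {
    "data_access_protocol",
    "alternative_workflow",
    "communication_strategy",
}


def vendor_gaps(contract_clauses: Set[str], contingency_items: Set[str]) -> Dict[str, Set[str]]:
    """Required safeguards that a vendor engagement does not yet cover."""
    return {
        "contract": REQUIRED_CONTRACT_CLAUSES - contract_clauses,
        "contingency": REQUIRED_CONTINGENCY_ITEMS - contingency_items,
    }


gaps = vendor_gaps(
    contract_clauses={"regular_audits"},
    contingency_items={"data_access_protocol", "alternative_workflow", "communication_strategy"},
)
print(sorted(gaps["contract"]))  # ['model_update_transparency', 'training_data_transparency']
```

One possible policy is to treat two empty sets as a precondition for go-live; how strictly to enforce that is a procurement decision.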


Operationalizing Dynamic AI Governance

Turning governance frameworks into actionable practices demands a blend of automation, human oversight, and measurable outcomes. As AI becomes more prevalent [11], the question is no longer if organizations need governance but how they can manage these systems effectively. Static policies just don’t cut it in the face of AI’s rapid advancements. This is where operational tools and processes come into play, helping organizations maintain control and adapt in real time. Let’s explore how automation, human intervention, and metrics work together to bring dynamic AI governance to life.

Continuous Monitoring and Governance Automation

Automation shifts AI governance from a static, checkbox-style task into a dynamic, ongoing process. Platforms like Censinet RiskOps™ handle real-time audits, bias checks, and compliance tracking, ensuring governance stays proactive. These tools monitor predefined Key Performance Indicators (KPIs) to detect issues like model degradation over time [7]. When problems arise, findings are automatically routed to the right team members for review.
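The routing step can be illustrated with a small rule table that maps each KPI to a threshold and an owning team. This is a generic Python sketch, not Censinet's API or configuration format; the metric names, thresholds, and team names are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class KpiRule:
    metric: str
    threshold: float
    higher_is_worse: bool
    owner: str  # team that reviews a breach


# Hypothetical rule table; real thresholds would be set by the governance committee.
RULES = (
    KpiRule("auc", 0.80, higher_is_worse=False, owner="data-science"),
    KpiRule("psi", 0.25, higher_is_worse=True, owner="data-science"),
    KpiRule("tpr_gap", 0.05, higher_is_worse=True, owner="ethics-committee"),
    KpiRule("phi_access_anomalies", 0.0, higher_is_worse=True, owner="privacy-office"),
)


def route_breaches(readings: Dict[str, float],
                   rules: Sequence[KpiRule] = RULES) -> Dict[str, List[str]]:
    """Group breached metrics by the team that should review them."""
    routed: Dict[str, List[str]] = {}
    for rule in rules:
        value = readings.get(rule.metric)
        if value is None:
            continue  # metric not reported this cycle
        breached = value > rule.threshold if rule.higher_is_worse else value < rule.threshold
        if breached:
            routed.setdefault(rule.owner, []).append(rule.metric)
    return routed


print(route_breaches({"auc": 0.76, "psi": 0.31, "tpr_gap": 0.02, "phi_access_anomalies": 1}))
# {'data-science': ['auc', 'psi'], 'privacy-office': ['phi_access_anomalies']}
```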

Censinet AI serves as a central hub, consolidating policies, risks, and tasks into a single dashboard. This gives organizations the real-time visibility they need to address potential issues before they escalate [11]. By automating routine tasks, organizations can focus their resources on more complex challenges while maintaining control over their AI systems.

Integrating Human Oversight with Automation

Automation is powerful, but it’s not a substitute for human judgment. That’s why tools like Censinet AI incorporate a human-in-the-loop approach, ensuring that automation supports decision-making rather than replacing it entirely. Risk teams can configure rules and review processes to keep human oversight at the forefront.

This balance is especially critical in fields like healthcare, where 57% of organizations cite patient privacy and data security as their top AI concerns, and 49% worry about biases in AI-generated medical advice [11]. Protocols for AI usage help prevent over-reliance on automated recommendations [7].
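One way to keep human oversight at the forefront is a configurable gate that lets only the lowest-risk automated findings close without review. The Python sketch below is hypothetical (the severity scale, the `touches_patient_care` flag, and the default threshold are assumptions) and does not describe how any particular product implements human-in-the-loop review.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    AUTO_CLOSE = "auto_close"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class Finding:
    description: str
    severity: int               # 1 (low) to 5 (critical); scale is an assumption
    touches_patient_care: bool  # could the finding affect clinical decisions?


def triage(finding: Finding, auto_close_max_severity: int = 1) -> Action:
    """Route a finding; anything touching patient care always goes to a person.

    auto_close_max_severity is the rule a risk team would configure; the
    default of 1 lets only the lowest-severity, non-clinical findings through.
    """
    if finding.touches_patient_care or finding.severity > auto_close_max_severity:
        return Action.HUMAN_REVIEW
    return Action.AUTO_CLOSE


# Hypothetical findings.
print(triage(Finding("expired policy link", 1, False)).name)    # AUTO_CLOSE
print(triage(Finding("drift in sepsis score", 2, True)).name)   # HUMAN_REVIEW
```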

Cross-functional AI governance committees play a key role here. These committees should include representatives from legal, clinical operations, privacy, ethics, and IT teams [1]. One ethics-focused stakeholder highlighted the risks of neglecting human oversight:

"It was surprising to me that there is no ethics consultation when it comes to AI deployment. I think that inequities are going to be expected because we don't routinely collect race-based data, we don't routinely collect gender data. If you don't have the data of the people that you serve, the tool itself could be biased against groups of people, depending on where you got the algorithm from." [12]

This insight underscores the importance of deliberate and thoughtful human involvement in AI governance.

Using Metrics to Drive Governance Improvements

Metrics are the backbone of effective governance, offering early warnings and guiding ongoing improvements. For healthcare organizations, tracking performance indicators across multiple areas is crucial. These include model accuracy, drift, bias detection across demographic groups, incident frequency and severity, compliance violations, and vendor risk scores. Metrics that reveal trends like model drift or a spike in incidents [4][1][5] should trigger formal reviews by governance committees.
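A "spike in incidents" can be made operational with a simple control-chart style rule: flag the latest period if it exceeds the historical mean by k standard deviations. This Python sketch assumes weekly incident counts, k = 3 (a common statistical process control convention), and a floor on the standard deviation so that a perfectly flat history does not trigger on a single extra incident; all of these are tunable assumptions.

```python
import statistics
from typing import Sequence


def spike_detected(weekly_counts: Sequence[int], k: float = 3.0,
                   min_history: int = 4, sd_floor: float = 1.0) -> bool:
    """Flag the most recent week if it exceeds the baseline mean by k standard deviations.

    weekly_counts is ordered oldest first; the last value is the week under review.
    The k, min_history, and sd_floor defaults are illustrative assumptions.
    """
    history, latest = list(weekly_counts[:-1]), weekly_counts[-1]
    if len(history) < min_history:
        return False  # too little baseline data to judge
    mean = statistics.mean(history)
    # Floor the spread so a flat history does not flag a single extra incident.
    sd = max(statistics.pstdev(history), sd_floor)
    return latest > mean + k * sd


print(spike_detected([2, 3, 2, 3, 2, 12]))  # True: 12 is far above a baseline of about 2.4
print(spike_detected([2, 3, 2, 3, 2, 4]))   # False
```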

The rise in AI-related malpractice claims [8] further emphasizes the need for proactive monitoring. Platforms like Censinet RiskOps™ bring these metrics together in an easy-to-navigate dashboard, helping organizations identify patterns and make informed adjustments to their policies. By using data to refine governance frameworks, organizations can ensure their systems align with key principles like safety, equity, privacy, and security [2][4][3].

With the right combination of tools, oversight, and metrics, AI governance becomes not just a safeguard but a dynamic process that evolves alongside the technology it’s designed to manage.

Conclusion

AI governance in healthcare is a continuous journey, demanding flexibility and a forward-thinking approach. Static policies simply can't keep up with the dynamic nature of AI systems that evolve, adapt, and present challenges like model drift, regulatory updates, and third-party integrations. Instead of striving for instant perfection, organizations should focus on building systems that can learn, adjust, and improve over time.

Three core elements are key to moving forward: continuous improvement, cross-functional collaboration, and operational tools. Continuous monitoring helps detect performance issues and emerging biases early, before they become compliance or patient safety problems [1]. Collaboration among clinical, technical, legal, privacy, and ethics teams ensures decisions are well-rounded and grounded in diverse expertise. Both reflect the integrated strategies discussed earlier in this post.

Tools like Censinet RiskOps™ provide a practical way to tackle these challenges. With centralized dashboards for real-time risk tracking, automated workflows for critical findings, and integrated human oversight, such platforms enable organizations to scale their AI governance efforts while maintaining safety and accountability. Strong internal systems are only part of the picture, however; external regulatory change also demands frameworks that can adapt.

The regulatory landscape is evolving quickly. For instance, the EU AI Act entered into force in August 2024, with its obligations phasing in from 2025 through 2027, and by August 2024 the FDA had authorized 950 AI-enabled medical devices [2][4]. This makes adaptive governance not just a good practice but an essential one. Organizations that weave governance into their daily operations, establish ongoing review processes, and use tools tailored to healthcare's unique demands will be better equipped to manage AI risks effectively while unlocking AI's full potential.

FAQs

How can healthcare organizations keep AI systems safe, effective, and up-to-date?

Healthcare organizations can ensure their AI systems remain safe and effective by implementing flexible governance frameworks that grow alongside technological advancements and shifting clinical demands. These frameworks should focus on ongoing performance monitoring, regular audits to uncover and correct biases, and timely updates to models and policies to address challenges like model drift.

Some effective strategies include creating multidisciplinary oversight teams, leveraging automated tools to identify potential risks, and performing routine validation checks. By staying adaptable and proactive, organizations can keep their AI systems ethical, compliant, and in sync with the ever-evolving healthcare environment.

Why is human oversight essential for AI governance in healthcare?

Human oversight plays a key role in governing AI systems within healthcare, ensuring accountability, safety, and ethical practices. By engaging clinicians, compliance officers, and other essential stakeholders, organizations can thoroughly review, validate, and continuously monitor AI systems at every stage of their development and use. This approach helps mitigate risks like bias, errors, or unintended outcomes.

As AI models grow more advanced, human involvement becomes even more critical. It adds an essential layer of scrutiny, ensuring that the decisions these systems make adhere to clinical standards and ethical guidelines. Beyond that, it builds trust by promoting responsible and transparent use of AI technologies in healthcare environments.

How can modular policies help healthcare organizations adapt AI governance to state-specific regulations?

Modular policies give healthcare organizations the flexibility to tailor AI governance frameworks to the specific legal and regulatory demands of different states. By dividing policies into manageable components, organizations can adapt to state-specific laws - like disclosure rules or opt-out provisions - without needing to revamp their entire governance system.

This method not only helps ensure compliance with shifting state regulations but also encourages ethical AI practices. Additionally, it allows organizations to respond more quickly to new legal changes, strengthening trust and reducing risks across various regions.