Change Management in the AI Era: Preparing People for Intelligent Automation

Post Summary

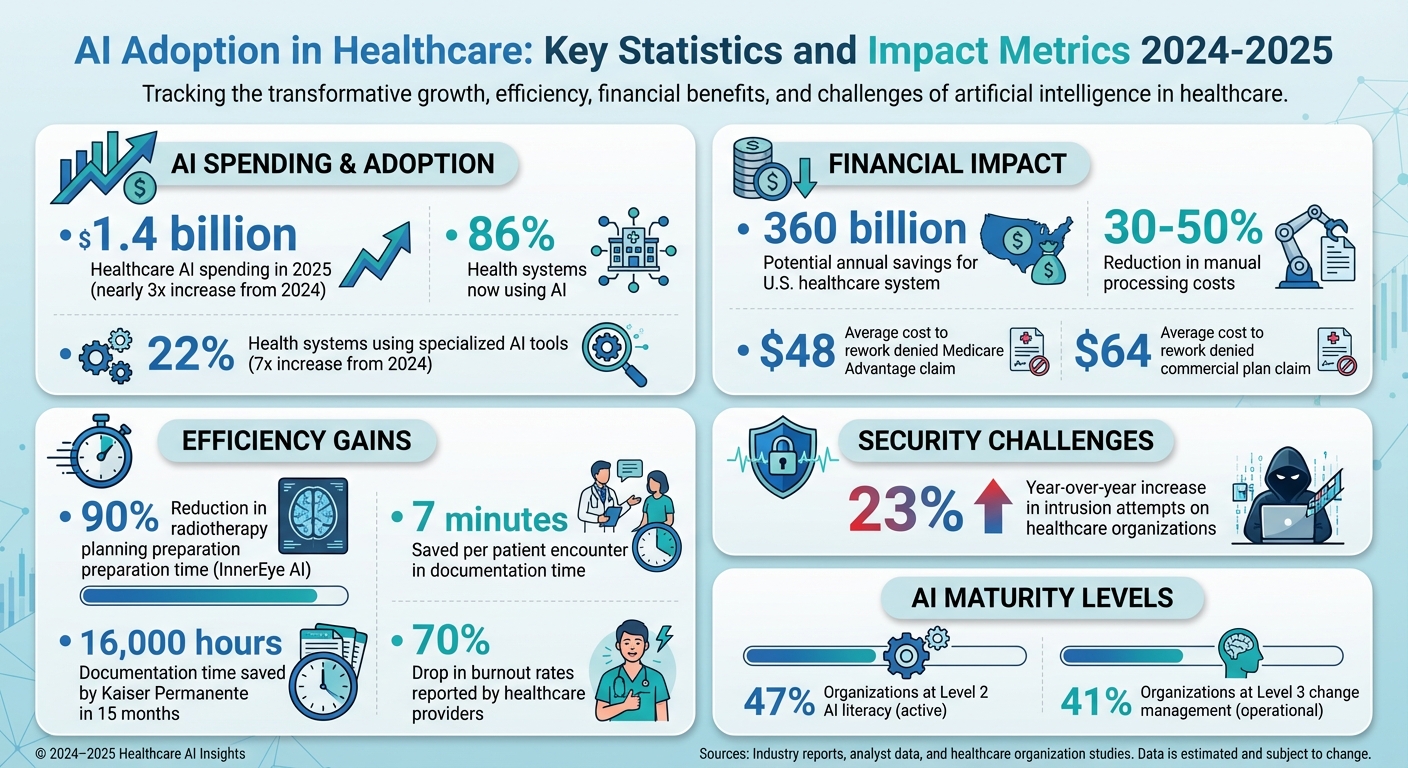

Healthcare is rapidly adopting AI, with spending in 2025 reaching $1.4 billion - nearly triple that of 2024. While 86% of health systems now use AI, the human challenges of integration often derail progress. Common issues include staff resistance due to job security fears, unclear leadership strategies, and trust gaps between clinicians and patients. Without structured change management, AI risks creating inefficiencies and fragmented systems.

Key takeaways:

- AI Adoption Stats: 22% of health systems now use specialized AI tools, a 7x increase from 2024.

- Challenges: Workflow disruptions, low adoption rates, and patient trust issues.

- Solutions: Use change management frameworks (e.g., Kotter's 8-Step Process), cross-functional teamwork, and transparent communication.

- Training: AI literacy programs and hands-on training are essential to prepare teams for new roles.

- Compliance: Align with regulatory frameworks like HIPAA and establish robust AI governance to ensure data security.

AI in healthcare can improve efficiency, reduce costs, and address workforce shortages if paired with a people-first approach, structured change management, and continuous feedback loops.

Figure: AI Adoption in Healthcare: Key Statistics and Impact Metrics 2024-2025

How Intelligent Automation Is Changing Healthcare

AI-Driven Changes in Healthcare Workflows

Intelligent automation is transforming the way healthcare organizations function, streamlining processes across clinical, operational, and administrative areas. By optimizing decision-making and driving efficiency, AI has the potential to save the U.S. healthcare system up to $360 billion annually [6][11].

These advancements are making a noticeable impact. AI-powered tools are easing administrative burdens, giving clinicians more time to focus on delivering personalized, high-quality care [1]. For instance, the cost to rework a denied insurance claim averages $48 for Medicare Advantage and $64 for commercial plans - a process that AI can help simplify and reduce [11].

Clinical workflows are also seeing major efficiency improvements. A great example is InnerEye, an AI-based open-source technology that cuts preparation time for radiotherapy planning in head, neck, and prostate cancer by as much as 90% [4]. Diagnostic processes are becoming more precise, with AI often matching or even surpassing human performance in image-based evaluations, which helps reduce diagnostic errors [4][11]. These advancements align with the Quadruple Aim in healthcare: enhancing population health, improving patient experiences, lowering costs, and supporting provider well-being [5].

AI is also making strides in cybersecurity and risk management, helping organizations stay compliant with patient privacy laws, data security protocols, and regulatory requirements, and address the data security challenges that arise during implementation [1][9][10]. Predictive analytics further enable early interventions, better resource allocation, and the prevention of adverse events [4][11]. Additionally, digital twin models allow for virtual testing of medical interventions, minimizing risks and improving outcomes [4].

While these advancements are reshaping healthcare, organizations must also tackle the human challenges that come with AI adoption.

Human Challenges in AI Adoption

As AI continues to evolve, integrating it into healthcare presents unique challenges tied to human factors. Unlike other industries, healthcare involves complex interactions, high stakes, strict regulations, and an unwavering focus on patient safety and care quality [9]. For AI to succeed, organizations need more than just technological readiness - they must also focus on effective change management, address human concerns, and establish strong governance frameworks [7][5][8][1].

Healthcare staff often face psychological and operational challenges when adapting to AI. Job security is a major concern, with many employees fearing that automation might replace their roles rather than enhance them. For clinicians, legal liability is another pressing issue, requiring clear guidelines on their responsibilities when using AI systems [9]. Building trust in AI solutions means ensuring transparency about how algorithms work, as well as their reliability, safety, and availability [9].

Another critical issue is the risk of bias in AI applications. To mitigate this, organizations need diverse teams working on AI development to ensure fairness and inclusivity in algorithm design [10]. Addressing these human challenges is just as important as the technological advancements AI brings to healthcare.

Strategies for Managing Change During AI Integration

Using Change Management Frameworks

When healthcare organizations integrate AI, leveraging established change management models can make the process smoother. For instance, Kotter's 8-Step Process is a popular choice in healthcare, referenced in 19 studies according to a systematic review. Similarly, Lewin's Unfreeze-Change-Refreeze model has been cited in 11 studies [7]. Another helpful framework is the ADKAR Model, which focuses on five key areas: Awareness, Desire, Knowledge, Ability, and Reinforcement. This model is particularly effective for managing both individual and organizational transitions [7].

These frameworks aren't just theoretical - they can be tailored to address challenges specific to AI. For example, a version of Kotter's model customized for clinical environments has demonstrated its practicality [7]. A benchmarking study of 24 healthcare systems using the AI Maturity Roadmap revealed that most organizations rated their AI progress as either level 2 ("active") or level 3 ("operational"). Specifically, 47% rated their AI literacy at level 2, while 41% assessed their change management at level 3 [14]. These findings highlight the importance of structured frameworks, especially for organizations still in the early stages of AI adoption.

Choosing a framework that considers not just the technology but also the people, processes, and operations involved is crucial. The PPTO framework (People, Process, Technology, and Operations) is particularly effective in healthcare settings. For example, Trillium Health Partners successfully used this approach by conducting stakeholder interviews and co-design workshops [12]. Once a solid framework is in place, fostering collaboration across teams becomes the next priority.

Building Collaboration Across Teams

While frameworks provide structure, collaboration drives success. Cross-functional teamwork - bringing together technical experts and healthcare professionals - is essential to ensure AI solutions are both technically robust and clinically relevant [10]. From data scientists and AI engineers to clinicians and administrators, involving all key players from the outset is critical. Having "super-users" or "role models" lead the way can bridge gaps between technical teams and frontline staff, making implementation more seamless [1][7][9].

Human oversight remains a cornerstone as automation grows. Tools like AI dashboards offer transparency into system performance, decision-making, and workflow impacts. These dashboards allow teams to monitor real-world outcomes, spot issues early, and make data-driven adjustments as needed [1][13]. This collaborative approach doesn't just improve implementation - it also helps ease resistance to change.

Reducing Resistance to Change

Resistance to AI is often rooted in fears about job displacement, loss of autonomy, or distrust of algorithms. Tackling these concerns head-on with clear, transparent communication is key [1][7][9]. Staff need to understand exactly how AI works, what it can and cannot do, and how it will impact their roles and workflows [1][5][7].

Engaging stakeholders early in the process is equally important. Creating open communication channels dedicated to change management allows staff to voice their concerns, ask questions, and receive honest feedback about the integration process [7].

Launching pilot programs can also reduce resistance. By starting small, organizations can demonstrate tangible benefits and build trust. These smaller-scale implementations allow staff to see AI in action, often showcasing quick wins like reduced administrative burdens rather than job losses [1]. As employees witness colleagues successfully using AI tools, skepticism tends to fade. Addressing these human concerns alongside technical deployment ensures teams are prepared for the shift toward intelligent automation.

Training Teams for AI-Driven Roles

Creating AI Literacy Programs

Healthcare professionals need a solid understanding of AI basics to use these tools effectively. AI literacy programs should cover foundational topics, such as what AI is, how it functions, and its various forms, including machine learning, deep learning, generative AI, and natural language processing. Additionally, staff must grasp the importance of data and computing power in building AI systems [15][16][17][18].

Beyond the basics, training should focus on how AI is applied in healthcare - from diagnostics and patient monitoring to hospital management, personalized medicine, clinical decision support, predictive analytics, digital therapeutics, and medical imaging. These programs should also prepare healthcare leaders and professionals to design, propose, and implement AI solutions while navigating the unique cultural, economic, and regulatory challenges of the healthcare industry [15][16].

The curriculum must also address ethical concerns, regulatory frameworks, data privacy, and how AI integrates into clinical workflows and decision-making [15][16][18][17]. By demystifying AI and presenting it as a supportive tool that enhances - rather than replaces - clinical expertise, these programs lay the groundwork for more practical, hands-on training [1].

Hands-On Training with AI Tools

Once the fundamentals are in place, practical training becomes essential to turn knowledge into actionable skills. Hands-on experience is key for successfully adopting AI in healthcare [19]. Simulations using platforms like Censinet RiskOps™ allow staff to practice with AI tools in a controlled environment, building confidence and proficiency before integrating these systems into their daily workflows.

Involving healthcare professionals early in the AI development and implementation process is equally critical [1][10]. By participating from the start, end-users can share insights about their specific workflows and challenges, ensuring that tools are more tailored to their needs. This approach not only makes training more relevant but also fosters a mindset of continuous learning, which is crucial for adapting to AI-driven changes.

Ongoing Learning and Professional Development

Since AI technologies evolve quickly, training can't be a one-and-done effort. Ongoing education is vital to keep up with new features, updated regulations, and emerging best practices [1][10]. This continuous learning complements the change management strategies discussed earlier. Regular skill assessments can help identify gaps, ensuring that staff remain proficient as AI tools are updated or expanded.

To support this, organizations should establish structured pathways for ongoing education. Options could include refresher courses, advanced certifications, or regular workshops, all aimed at keeping healthcare professionals ahead of the curve in an AI-driven environment.

Maintaining Compliance and Cybersecurity in AI Integration

As healthcare organizations embrace AI, ensuring strict compliance and robust data security remains a top priority.

Aligning AI with Regulatory Frameworks

On December 4, 2025, the U.S. Department of Health and Human Services unveiled a 21-page AI strategy, underscoring the importance of governance and risk management to build public trust. This strategy highlights key principles such as ethics, transparency, security, and adherence to civil rights and privacy laws in all AI applications [20]. As a result, AI governance is now a critical component of healthcare compliance programs [23].

Navigating the complex web of regulatory requirements is no small task. While HIPAA remains a cornerstone of data protection, healthcare organizations must also address newer, evolving guidelines to ensure their AI systems remain compliant. In a field where AI-driven decisions can directly impact patient outcomes and where safeguarding patient data is non-negotiable, it’s essential for teams to fully grasp the capabilities and limitations of AI within these regulatory frameworks. Once aligned with regulations, securing the data used in AI workflows becomes the next critical step to maintaining trust and operational integrity.

Protecting Data Security in AI Workflows

The cybersecurity landscape is becoming increasingly hostile. Healthcare organizations are now facing a 23% year-over-year rise in intrusion attempts, with AI tools making it easier for cybercriminals to exploit vulnerabilities [21]. This growing threat underscores the importance of implementing strong data and AI governance measures. Security teams play a pivotal role in defending against both conventional and AI-driven attacks.

To minimize risks, AI systems should be designed to access only the data necessary for their specific tasks [22]. Tools like Censinet RiskOps™ offer centralized oversight of protected health information (PHI), aggregating real-time risk data to quickly identify and address vulnerabilities. Establishing robust data security practices is a foundational step in building effective AI governance structures.
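As a rough illustration of the data-minimization idea above, the sketch below filters a patient record down to the fields a given AI task is permitted to read. It is a minimal sketch under stated assumptions, not a description of how any particular product works: the task names, field names, `TASK_ALLOWED_FIELDS`, and `minimize_record` are all hypothetical and exist only for this example.

```python
from typing import Any

# Hypothetical allow-list: the record fields each AI task may read.
# Task and field names are illustrative assumptions, not a real schema.
TASK_ALLOWED_FIELDS: dict[str, frozenset[str]] = {
    "appointment_scheduling": frozenset({"patient_id", "preferred_times", "clinic"}),
    "claims_coding": frozenset({"patient_id", "diagnosis_codes", "procedure_codes"}),
}


def minimize_record(record: dict[str, Any], task: str) -> dict[str, Any]:
    """Return a copy of `record` holding only the fields `task` may read."""
    if task not in TASK_ALLOWED_FIELDS:
        # Deny by default: a task without a registered policy gets no data.
        raise PermissionError(f"No data-access policy registered for task {task!r}")
    allowed = TASK_ALLOWED_FIELDS[task]
    return {key: value for key, value in record.items() if key in allowed}


# Made-up sample record, for illustration only.
sample_record = {
    "patient_id": "P-0001",
    "preferred_times": ["Mon AM", "Thu PM"],
    "clinic": "Cardiology",
    "diagnosis_codes": ["I10"],
    "clinical_notes": "free-text note",
}

# The scheduling task never sees diagnosis codes or clinical notes.
scheduling_view = minimize_record(sample_record, "appointment_scheduling")
print(scheduling_view)
```

The deny-by-default branch is a deliberate choice in this sketch: a new AI tool receives no PHI until someone has explicitly registered what it is allowed to see.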

Creating AI Governance Structures

A multidisciplinary AI Governance Board is crucial for overseeing AI activities within healthcare organizations [20][24][25][26]. This board should include representatives from diverse areas such as IT, cybersecurity, data management, privacy, legal, compliance, clinical operations, risk management, patient experience, equity, quality, and safety [20][24][26]. By bringing together these varied perspectives, the board ensures that AI decisions reflect technical, clinical, ethical, and regulatory considerations.

The board’s responsibilities extend beyond drafting policies. They guide significant AI-related decisions, oversee implementation, and ensure ongoing monitoring. Tools like Censinet RiskOps™ serve as the operational backbone for AI governance, directing risk assessments to the appropriate stakeholders and ensuring alignment with both internal policies and external regulations. This structured approach helps healthcare organizations navigate the complexities of AI integration while prioritizing security and compliance.

Measuring Success and Maintaining Continuous Improvement

For healthcare organizations, measuring the impact of AI is not just a box to check - it's a critical step to validate its value and guide ongoing improvements. Success metrics should encompass AI system performance, its influence on clinical and operational workflows, financial outcomes, and the experiences of both healthcare professionals and patients. Establishing a baseline before deploying AI is essential. Without understanding where you started, it’s impossible to prove ROI or identify meaningful progress. These metrics provide the foundation for refining workflows and fostering a forward-thinking culture, as explained below.

Setting Metrics for Success

To gauge success effectively, focus on key indicators like patient wait times, readmission rates, staff satisfaction, AI adoption rates, costs, maintenance expenses, and overall efficiency improvements. Blend these quantitative metrics with qualitative insights gathered through regular surveys and focus groups, always comparing the results against pre-implementation data [28].
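The comparison against pre-implementation data can be as simple as a percent change per metric. The following is a minimal sketch, assuming each metric is captured as a single number before and after deployment; the metric names and all values are invented for illustration and do not come from any study cited here.

```python
def percent_change(baseline: float, current: float) -> float:
    """Percent change from the pre-implementation baseline to the current value."""
    if baseline == 0:
        raise ValueError("Baseline must be non-zero to compute a percent change")
    return (current - baseline) * 100.0 / baseline


def compare_to_baseline(
    baseline: dict[str, float], current: dict[str, float]
) -> dict[str, float]:
    """Percent change for every metric present in both snapshots."""
    shared = baseline.keys() & current.keys()
    return {
        name: round(percent_change(baseline[name], current[name]), 1)
        for name in sorted(shared)
    }


# Invented example values; real figures would come from the organization's own data.
baseline_metrics = {
    "avg_wait_minutes": 40.0,
    "readmission_rate_pct": 16.0,
    "staff_satisfaction_score": 60.0,
}
current_metrics = {
    "avg_wait_minutes": 30.0,
    "readmission_rate_pct": 14.0,
    "staff_satisfaction_score": 72.0,
}

changes = compare_to_baseline(baseline_metrics, current_metrics)
for metric, change in changes.items():
    print(f"{metric}: {change:+.1f}%")
```

Whether a negative number is good news depends on the metric (lower wait times are an improvement, lower satisfaction is not), so any dashboard built on a calculation like this needs that direction recorded alongside each metric.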

Using Feedback to Refine AI Workflows

Feedback loops are the engine that turns static AI tools into dynamic, evolving solutions. Create clear communication channels where end users can share feedback directly with developers, so that users receive the support they need during the initial rollout [27]. One operational leader shared their approach:

"We pilot in select divisions, gather stakeholder feedback, and adjust before full rollout" [27].

Assemble a small, cross-functional team with clinical, technical, and administrative representatives to meet regularly. This group can prioritize feedback from frontline clinicians, decide on immediate or planned updates, and ensure changes are effectively communicated [27]. Document every adjustment to the AI system, including its intended use, clinical workflows, and training materials, and promptly update processes based on user input [28]. By continuously refining workflows through feedback, organizations can cultivate a culture that embraces adaptability and progress.

Building a Culture of Innovation

The long-term success of AI in healthcare goes beyond the technology itself - it requires a shift in mindset toward continuous learning and adaptability. Encourage staff to experiment with AI tools and provide hands-on learning opportunities that allow them to explore new capabilities without fear of failure [30]. Support workforce adaptation by offering mentoring, targeted training, and ongoing learning opportunities such as workshops and peer-led sessions [29][31]. Use AI’s predictive insights to anticipate challenges rather than reacting to them after the fact [29].

Balancing short-term efficiency gains with long-term cultural changes is key. Assess how AI usage aligns with your organization’s values and make adjustments as needed [29]. When employees view AI as a tool that enhances their abilities rather than a threat to their roles, innovation becomes part of the organization’s daily rhythm instead of a one-off initiative.

Conclusion

To successfully integrate AI into healthcare, it’s essential to align technology with the human element. This involves applying the same practices discussed earlier: structured change management, thorough training, strong collaboration, and a steadfast focus on compliance and cybersecurity.

When healthcare organizations adopt a people-first approach to AI, the impact can be transformative. For example, Kaiser Permanente used AI-powered ambient-listening tools to save nearly 16,000 hours of documentation time in just 15 months [3]. Similarly, other healthcare providers have reported cutting manual processing costs by 30% to 50%, reducing documentation time by seven minutes per patient encounter, and achieving a 70% drop in burnout rates [2].

Microsoft CEO Satya Nadella captured this perfectly:

"AI is perhaps the most transformational technology of our time, and healthcare is perhaps AI's most pressing application" [4].

This highlights a key takeaway: successful AI adoption depends on leadership's dedication to a forward-thinking, human-centered strategy. With the U.S. healthcare system facing a workforce shortage [32], organizations that act now to integrate intelligent automation will be better equipped to provide outstanding patient care in the future.

The path forward requires balancing immediate efficiency improvements with long-term investments in people. This means prioritizing AI literacy programs, offering hands-on training, and creating professional development opportunities that help staff see AI as a valuable partner rather than a threat. Establishing governance, rolling out AI tools in phases to secure early wins, and maintaining continuous feedback loops will ensure these tools are refined and effective over time.

The age of AI in healthcare has arrived. By treating change management as a strategic priority, not an afterthought, organizations can harness the full potential of intelligent automation. More importantly, they can cultivate a culture of innovation that ensures success for years to come.

FAQs

How can healthcare organizations reduce staff resistance to AI adoption?

Healthcare organizations can ease staff concerns about AI by fostering trust through empathy and open dialogue. It's crucial for leadership to be upfront about how AI will be integrated, emphasizing that its purpose is to assist employees, not replace them.

Engaging staff in the decision-making process is another key step. When employees feel included, they’re more likely to embrace changes. Offering personalized training can also help staff feel equipped and confident to navigate AI-driven workflows. By showcasing how AI can handle repetitive tasks and enhance patient care, organizations can demonstrate its value as a supportive partner in the workplace.

What are the essential elements of an effective AI literacy program in healthcare?

An effective AI literacy program in healthcare begins with defining clear objectives that align with both clinical and operational priorities. The first step is to pinpoint how AI can enhance workflows and address specific challenges. Open and transparent communication is key - sharing evidence-based insights can help ease concerns, build trust, and reduce resistance among staff.

Training should be customized to suit the needs of various roles within the organization. This ensures that every team member gains the knowledge and confidence required to effectively use AI tools in their daily tasks. Promoting a workplace culture that values teamwork, continuous learning, and open dialogue is equally important. These efforts not only make the transition to AI-driven processes more seamless but also ensure compliance with healthcare regulations and maintain high security standards.

What steps can healthcare providers take to ensure AI complies with regulations like HIPAA?

Healthcare providers can align AI systems with regulations like HIPAA by establishing a solid governance framework. Key steps include conducting regular risk assessments, employing strong data encryption, and enforcing strict access controls to safeguard sensitive information.

To strengthen compliance efforts, organizations should keep detailed records of how AI systems manage data, carry out routine audits to uncover potential weaknesses, and promote transparency around AI decision-making processes. Adding human oversight to AI workflows is another crucial step - it not only ensures accountability but also fosters trust among patients and stakeholders.

By addressing these critical areas, healthcare providers can integrate AI responsibly while protecting patient privacy and ensuring data security.
