The Deepfake Dilemma: AI Cyber Risks That Keep CISOs Awake at Night

Post Summary

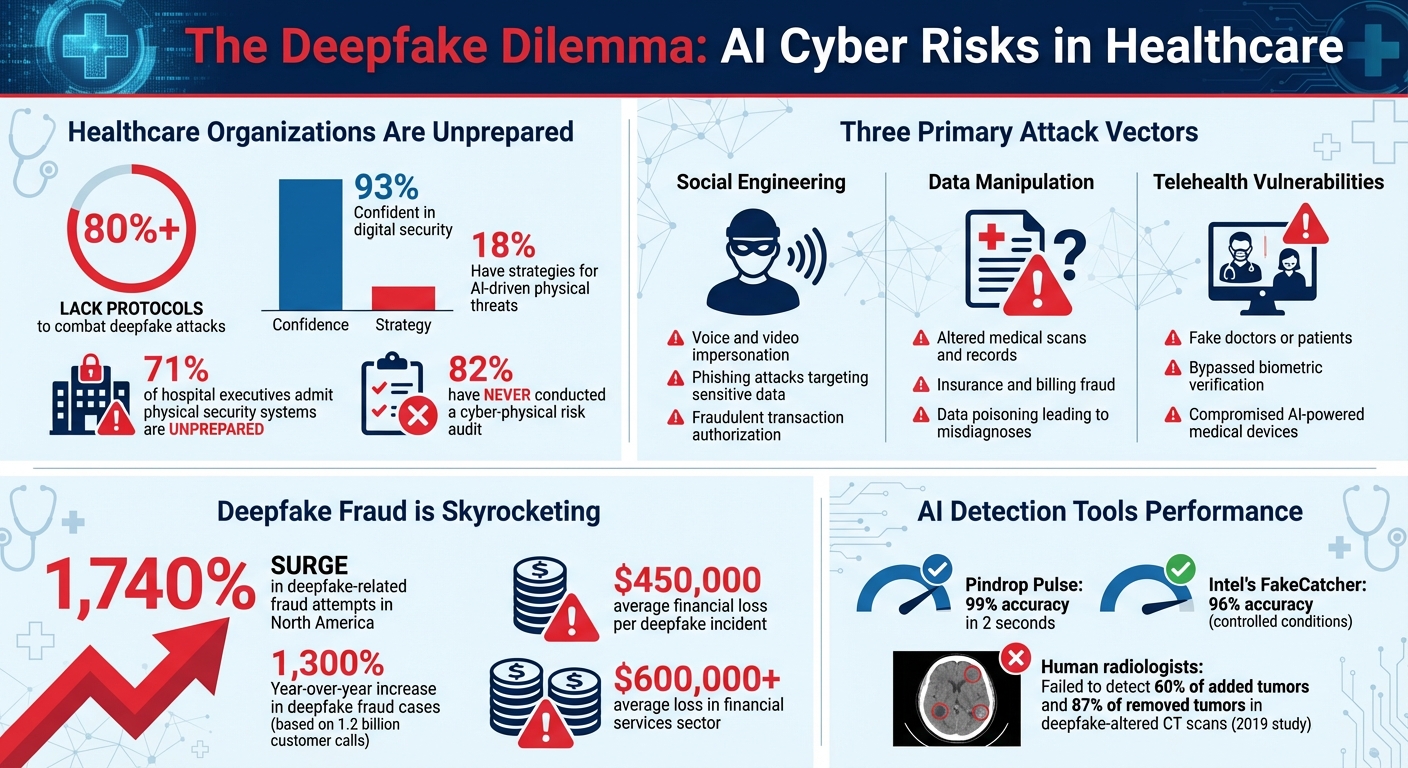

Deepfake technology has emerged as a major cybersecurity threat, especially in healthcare. With AI tools capable of cloning voices in seconds or altering medical images, attackers exploit trust to manipulate systems and people. Healthcare organizations face risks like fraudulent billing, tampered medical records, and impersonated telehealth sessions, directly threatening patient safety and financial stability. Alarmingly, over 80% of companies lack protocols to combat these attacks, leaving them vulnerable.

Key concerns include:

- Voice and video impersonation: Used in phishing to steal sensitive data or authorize fraudulent transactions.

- Manipulated medical data: Altered scans or records can lead to misdiagnoses or disrupted care.

- Telehealth vulnerabilities: Fake doctors or patients bypass security, risking patient safety.

To counter these threats, organizations must combine AI detection tools with human oversight, implement multi-factor verification, and train staff to recognize deepfakes. Platforms like Censinet RiskOps™ can help manage risks by integrating detection tools and streamlining response strategies. A proactive approach is critical to safeguarding healthcare operations and maintaining trust in the face of evolving AI threats.

Deepfake Threats in Healthcare: Key Statistics and Attack Vectors

How Deepfakes Threaten Healthcare Organizations

Healthcare organizations are increasingly vulnerable to deepfake attacks in three key areas: social engineering, fraud through manipulated clinical and financial data, and telehealth impersonation that disrupts patient care. Unlike traditional malware, which targets software vulnerabilities, deepfakes exploit human trust [3]. In the healthcare sector, these attacks can result in misdiagnoses, improper treatments, and a loss of public confidence [3]. Let’s dive into how these threats unfold, with examples and strategies to combat them.

Deepfake Phishing and Social Engineering

Cybercriminals are using AI-generated voice and video to convincingly impersonate healthcare professionals - such as doctors, administrators, or executives - in advanced social engineering schemes [7][8]. These synthetic imitations trick employees into revealing sensitive information, transferring funds, or granting access to essential systems [1][7][8]. The alarming part? Creating these convincing deepfakes requires only a small sample of someone’s voice or image, making it easier for attackers to execute these schemes. Beyond impersonation, these attacks can compromise the integrity of critical healthcare data.

Fraud and Manipulated Information

Deepfakes are fueling insurance and billing fraud by enabling the creation of fake patient identities and tampering with medical records [9]. Attackers can alter lab results, imaging data, or patient profiles - a form of data tampering sometimes described as data poisoning - which can lead to misdiagnoses and disrupt the delivery of care [9][10][3]. Additionally, fabricated videos of public health officials spreading false information undermine trust in healthcare providers and can influence public health decisions [3][11]. The scale of this threat is staggering: deepfake-related fraud attempts in North America have surged by 1,740% [12]. With the growing reliance on telehealth services, the risks are even greater.

Threats to Telehealth and Remote Care

Telehealth systems, heavily dependent on audiovisual communication and electronic health records (EHRs), are particularly at risk. Deepfakes allow attackers to impersonate patients or healthcare providers on telemedicine platforms, jeopardizing patient safety and disrupting care [9][3]. These impersonations can bypass biometric verifications, granting unauthorized access to sensitive systems and patient data [12]. Worse yet, attackers could target AI-powered medical devices - like insulin pumps, pacemakers, and ventilators - causing dangerous malfunctions and threatening the continuity of care [10].

The rise of telehealth has undoubtedly expanded access to healthcare, but it has also created new vulnerabilities that cybercriminals are eager to exploit. Addressing these risks is critical to safeguarding both patient trust and the integrity of healthcare systems.

Deepfake Attacks in Healthcare: What Has Happened

Deepfake threats in healthcare are becoming more prevalent, but the number of publicly disclosed cases remains relatively low compared to other types of cyberattacks. This scarcity of reporting reflects both the difficulty of detecting these incidents and the reluctance of organizations to make them public. However, the few cases that have come to light reveal a troubling trend: cybercriminals are using AI-generated content to outsmart traditional security systems and exploit human vulnerabilities within healthcare operations. These examples provide a glimpse into the real-world consequences of deepfake attacks on the healthcare industry.

Examples of Deepfake Attacks

One common tactic involves using voice-cloning technology to imitate executives. Cybercriminals extract voice samples from public sources like conference recordings, webinars, or social media posts. They then use generative AI to create fake internal communications. These synthetic messages, delivered in the cloned voice, mimic not just the tone but also the speech patterns and technical language of trusted leaders. The goal? To deceive employees - particularly those handling finances - into authorizing fraudulent transactions.

Financial Impact of Deepfake Breaches

The financial toll of deepfake attacks can be staggering. Organizations face expenses related to investigating breaches, repairing compromised systems, and rebuilding their reputation. But the risks don’t stop there. When deepfakes are used to manipulate AI-powered medical devices - such as pacemakers, insulin pumps, or imaging systems - the consequences extend to patient safety. These scenarios introduce significant legal and financial liabilities, emphasizing the urgent need for stronger security protocols.

Unlike ransomware, which often triggers immediate alarms, deepfake fraud is insidious. It can go unnoticed for long periods, quietly siphoning resources and undermining data integrity. By the time the damage is discovered, the financial and operational impact is often severe, underscoring the stealthy and dangerous nature of these attacks.

How to Detect and Stop Deepfake Threats

To tackle the growing threat of deepfakes in healthcare, a combination of advanced tools, strategic platforms, and human oversight is essential. Many organizations still lack formal procedures to identify or respond to deepfake attacks, leaving them vulnerable [17][2].

Tools for Detecting Deepfakes

Detection tools focus on identifying facial inconsistencies, biometric irregularities, and metadata anomalies to uncover manipulated content. For example, Pindrop Pulse can reportedly detect synthetic voices in just two seconds with 99% accuracy [13]. Similarly, Intel's FakeCatcher is reported to achieve 96% accuracy under controlled conditions [13]. These tools analyze subtle indicators like unnatural eye movements, mismatched lip-syncing, variations in voice tone, and irregularities in skin texture.

In healthcare settings, especially during live telemedicine sessions or urgent communications, such detection tools are critical. Deepfake-related fraud cases have skyrocketed - rising by 1,300% year-over-year, according to a Pindrop study of 1.2 billion customer calls [16]. Financial losses from deepfake incidents average $450,000 per case, with the financial services sector seeing even higher losses, exceeding $600,000 per incident [16].

To strengthen detection, combining audio, video, and text analysis offers a more thorough evaluation of media authenticity [15]. Some organizations are also leveraging blockchain technology to create secure, tamper-evident digital records for medical data and imaging [14][15]. Because each record is cryptographically linked to the ones before it, later manipulation can be detected whenever the record is verified. Additionally, liveness detection software is being used in biometric authentication systems to confirm the physical presence of individuals [1][18], adding an extra layer of security.
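To make the idea of blockchain-style medical records more concrete, here is a minimal Python sketch of a hash chain, the basic mechanism behind such ledgers. It is a simplified, single-machine illustration, not a description of any specific product or of a full blockchain. The record fields (`study_id`, `finding`) and the function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + body).hexdigest()


def append_record(chain: list, payload: dict) -> None:
    """Append a payload, linking it to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({
        "payload": payload,
        "prev": prev_hash,
        "hash": _entry_hash(prev_hash, payload),
    })


def verify_chain(chain: list) -> bool:
    """Return True only if every entry still matches its stored hash and link."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical imaging records, for illustration only.
chain = []
append_record(chain, {"study_id": "CT-001", "finding": "no nodule"})
append_record(chain, {"study_id": "CT-002", "finding": "4 mm nodule"})
intact_before = verify_chain(chain)

chain[0]["payload"]["finding"] = "8 mm nodule"  # simulated tampering
intact_after = verify_chain(chain)

print(intact_before, intact_after)
```

Note that the chain only reveals tampering when someone runs the verification step, so in practice the check would need to be part of the workflow that reads the record.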

Using Censinet RiskOps™ to Manage Deepfake Risks

Censinet RiskOps™ provides healthcare organizations with tools to mitigate deepfake risks through automated evidence validation and AI-driven risk dashboards. Acting as a centralized hub for AI governance, the platform routes critical findings to relevant stakeholders, such as AI governance committees, for review and action.

The platform’s Censinet AI™ feature simplifies third-party risk assessments by summarizing vendor evidence, capturing integration details, and identifying risks from fourth-party sources. This system combines automation with human oversight, ensuring that key decisions remain under human control while scaling risk management efforts effectively.

Censinet RiskOps™ also offers a command center for real-time monitoring of deepfake-related vulnerabilities across an organization’s healthcare ecosystem. By embedding deepfake detection tools into media workflows, businesses can identify threats before they escalate [1]. This proactive approach is crucial in combating increasingly sophisticated deepfake technology.

Combining Human Review with AI Systems

While AI detection tools are powerful, they aren’t perfect - human judgment remains indispensable. Siwei Lyu, a professor of computer science and engineering at the University at Buffalo, points to one way the two can work together, namely detection output that human reviewers can actually understand:

"What sets LLMs apart from existing detection methods is the ability to explain their findings in a way that's comprehensible to humans, like identifying an incorrect shadow or a mismatched pair of earrings" [20].

This human-in-the-loop approach ensures that risk management processes maintain both accuracy and accountability. While AI systems can flag potential deepfakes by identifying subtle statistical patterns, human reviewers provide the necessary context to validate these findings - especially for high-stakes decisions involving patient data or financial transactions.
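One way to picture this division of labor is a simple triage rule that decides when a person must step in. The Python sketch below is illustrative only. It assumes a detector returns a `score` between 0 and 1 estimating how likely the media is synthetic. The thresholds (0.3 and 0.9) and action names are placeholder assumptions, not vendor recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    action: str  # "allow", "human_review", or "hold_and_review"
    reason: str


def triage(score: float, high_stakes: bool,
           review_at: float = 0.3, hold_at: float = 0.9) -> Decision:
    """Route a detector score; the thresholds are illustrative assumptions."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= hold_at:
        # Strong signal: pause the request, but a person still makes the call.
        return Decision("hold_and_review", "detector score above hold threshold")
    if high_stakes or score >= review_at:
        # High-stakes requests always get a human look, even with a low score.
        return Decision("human_review", "high-stakes request or ambiguous score")
    return Decision("allow", "low score on a routine request")


examples = [
    triage(0.05, high_stakes=False),
    triage(0.05, high_stakes=True),
    triage(0.50, high_stakes=False),
    triage(0.95, high_stakes=True),
]
actions = [d.action for d in examples]
print(actions)
```

The design choice worth noting is that no branch lets the detector reject or approve a high-stakes request on its own. The score only changes how urgently a reviewer is involved.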

Healthcare organizations should also adopt out-of-band verification protocols. This involves confirming communications through multiple trusted channels, such as making follow-up calls to verified numbers or conducting in-person validations [19]. By combining AI detection capabilities with human skepticism and thorough verification methods, organizations can create a defense system that is far more effective than relying on either approach alone. This hybrid strategy forms the foundation for the robust defenses discussed in the next section.

Creating a Defense Plan Against Deepfakes

Protecting against deepfakes requires more than just awareness - it demands integrating detection tools into existing systems like EHRs, imaging platforms, and telemedicine services [3]. Beyond the digital domain, physical vulnerabilities need attention too. Deepfake technology has been used to bypass security measures through forged badge credentials, voice cloning, and sensor manipulation [6]. A truly effective defense plan must address both digital and physical threats in tandem.

While 93% of healthcare cybersecurity leaders express confidence in their digital security, only 18% have strategies to counter AI-driven physical threats. Additionally, 71% of hospital executives admit their physical security systems are unprepared, and 82% have never conducted a cyber-physical risk audit [6]. This gap leaves organizations exposed to risks on multiple fronts. A layered defense strategy that combines technical measures with vigilant human oversight is essential to close these vulnerabilities.

Setting Up Multiple Verification Steps

Multi-factor authentication (MFA) is a critical first step [3][4]. However, it must be reinforced with structured out-of-band verification protocols to handle unexpected or high-stakes requests. This could involve confirming requests through multiple trusted channels, such as making follow-up calls to verified phone numbers, sending emails to pre-approved addresses, or even conducting in-person validations for critical decisions.
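As a rough sketch of how such a protocol could be encoded as a policy check, the Python example below approves a high-stakes request only after it has been confirmed on a trusted channel other than the one it arrived on. The channel names, the `SensitiveRequest` type, and the one-confirmation default are all hypothetical. A real policy would be set by the organization's security team.

```python
from dataclasses import dataclass, field

# Hypothetical list of channels the organization has pre-verified.
TRUSTED_CHANNELS = frozenset({
    "callback_to_verified_number",
    "email_to_preapproved_address",
    "in_person",
})


@dataclass
class SensitiveRequest:
    description: str
    inbound_channel: str          # where the request arrived
    high_stakes: bool
    confirmed_on: set = field(default_factory=set)  # channels that confirmed it


def may_proceed(request: SensitiveRequest, required: int = 1) -> bool:
    """Allow high-stakes requests only after independent confirmation."""
    if not request.high_stakes:
        return True  # routine requests follow the normal controls
    independent = {
        channel for channel in request.confirmed_on
        if channel in TRUSTED_CHANNELS and channel != request.inbound_channel
    }
    return len(independent) >= required


wire = SensitiveRequest("urgent vendor payment", "video_call", high_stakes=True)
blocked = may_proceed(wire)

wire.confirmed_on.add("video_call")  # confirming on the same channel does not count
still_blocked = may_proceed(wire)

wire.confirmed_on.add("callback_to_verified_number")
approved = may_proceed(wire)

print(blocked, still_blocked, approved)
```

The key point is that a convincing voice or face on the original call never counts as its own confirmation.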

To secure sensitive data like medical records and imaging, cryptographic provenance and digital watermarking can be employed, with blockchain-based signing providing an additional layer of security [3][11]. The importance of these measures became evident in a 2019 study in which radiologists misdiagnosed 60% of deepfake-altered CT scans with added tumors and 87% of those with removed tumors - even when they knew manipulation was possible [4].
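For readers who want a feel for what cryptographic provenance means in practice, here is a small Python sketch that attaches an integrity tag to a scan's bytes and checks it later. It uses an HMAC from the standard library as a stand-in. A production system would more likely use public-key signatures and managed keys, and the key and scan bytes shown here are placeholders.

```python
import hashlib
import hmac


def sign_scan(scan_bytes: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the raw scan bytes."""
    return hmac.new(key, scan_bytes, hashlib.sha256).hexdigest()


def verify_scan(scan_bytes: bytes, key: bytes, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_scan(scan_bytes, key), tag)


# Placeholder key and data, for illustration only.
key = b"demo-key-not-for-production"
original_scan = b"pixel data exported by the scanner"
tag = sign_scan(original_scan, key)

altered_scan = original_scan + b" plus an injected nodule"

original_ok = verify_scan(original_scan, key, tag)
altered_ok = verify_scan(altered_scan, key, tag)

print(original_ok, altered_ok)
```

A check like this cannot say what was changed in an image, only that the bytes no longer match what was signed at the source, which is the cue for a closer look.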

Training Staff to Recognize Deepfakes

Technical defenses are only part of the solution. A well-trained workforce is equally important. Continuous education programs are vital, as demonstrated by the experience at UC San Diego Health, where employees failed phishing simulations despite prior training [3]. This highlights the need for ongoing training that evolves alongside the tactics used by deepfake creators.

Staff training should address both digital and physical threats. Employees need to learn how to spot unusual delays or unnatural shifts in voice interactions, irregular visual details during telemedicine calls, or discrepancies in communication content [4][6]. Awareness gaps are common: 67% of healthcare payers admitted they were unaware of vulnerabilities tied to voice cloning in interactive voice response (IVR) systems or face-to-face verification processes [6]. Training should extend to all staff, including receptionists, security teams, nurses, and remote employees - anyone who could potentially face deepfake-based attacks.

"Remember to train your staff. While humans are not great at detecting synthetic voices or images, when people are aware of the risks deepfakes pose, they can better spot potential red flags." - Laura Fitzgerald [4]

Regular simulations can provide hands-on experience. Organizations should conduct phishing and vishing exercises using AI-generated content, such as adaptive phishing emails or voice cloning attempts [4][9]. Red-team drills that incorporate deepfake badges or voice-cloned calls can test how well employees detect and respond to these threats in real-world scenarios [6]. These exercises should promote a no-blame culture, turning mistakes into learning opportunities and encouraging open reporting to improve systems continuously [10].

Using Censinet Connect™ for Vendor Risk Management

Deepfake risk management doesn’t stop within an organization - it must also extend to third-party vendors. Censinet Connect™ offers a platform for collaborative vendor risk assessments and provides ongoing oversight of third-party security practices.

When paired with Censinet RiskOps™, the platform offers comprehensive insight into vendor-related deepfake risks. It helps organizations evaluate whether vendors have implemented effective detection tools, established clear verification protocols for unexpected requests, and developed robust incident response plans. This is especially important since deepfake threats can arise from compromised vendor communications or falsified credentials.

With Censinet Connect™, healthcare organizations can enforce standardized security requirements for vendors, such as mandatory MFA, staff training on recognizing deepfakes, and regular security audits. The platform’s collaborative network also allows hospitals, insurers, and vendors to share threat intelligence and best practices, creating a unified defense against the rapidly evolving landscape of deepfake technology [3]. This collective approach ensures that organizations stay ahead of these sophisticated threats.

Conclusion

Deepfake technology presents a serious challenge for healthcare organizations. These synthetic media tools can replicate human voices and appearances with such precision that spotting them by eye or ear alone becomes nearly impossible [5]. Given its reliance on sensitive data and trusted communication, the healthcare sector is particularly vulnerable to this emerging threat.

Traditional cybersecurity measures alone are no longer enough to counter deepfakes. These attacks exploit human trust and can bypass conventional defenses [17]. To address this, organizations must shift from simply reacting to incidents to proactively managing risks. This includes adopting a layered security strategy where deepfake detection plays a key role within the broader cybersecurity framework.

Ongoing staff training is essential. Realistic simulations and scenario-based exercises help employees recognize suspicious content, critically assess media, and report potential threats [18]. Since deepfakes often exploit trust to bypass technical controls, employees become the last line of defense [17]. When combined with advanced technical tools, this human element strengthens organizational resilience against AI-driven social engineering.

Solutions like Censinet RiskOps™ and Censinet Connect™ allow CISOs to move away from disjointed responses and implement coordinated defense strategies across their networks.

As AI continues to advance, the threat posed by deepfakes will only grow. Healthcare organizations that prioritize awareness, invest in proactive risk management, and remain vigilant will be better equipped to safeguard patient safety, protect sensitive data, and maintain the integrity of their systems.

FAQs

What steps can healthcare organizations take to detect and respond to deepfake threats?

Healthcare organizations can tackle the challenge of deepfake threats by leveraging real-time media validation tools, digital watermarking, and AI-powered detection systems to spot manipulated content. Equipping staff with the skills to identify synthetic media and keeping an eye out for unusual activity are equally important steps in this effort.

To respond effectively, organizations should create detailed incident response plans and set up clear protocols for managing deepfake-related incidents. Partnering with regulatory bodies to meet cybersecurity standards is another crucial move. Staying up-to-date on new technologies and preparing communication strategies to address potential damage to reputation can help organizations mitigate the impact of these threats.

What deepfake-related vulnerabilities should healthcare organizations watch for in telehealth systems?

Deepfake technology brings a wave of challenges to telehealth systems, creating potential risks for both healthcare providers and patients. Among the most pressing concerns are fraudulent telehealth sessions, where deepfake videos can be used to mimic patients or providers, and voice cloning, which can distort conversations to manipulate outcomes. There's also the danger of fake identification documents, which may allow attackers to bypass security protocols. On top of that, AI-generated images or audio can be employed to mislead healthcare professionals or tamper with patient records.

To combat these threats, it's essential to adopt strong verification measures, keep an eye out for unusual activity, and train staff to spot the telltale signs of deepfake misuse within telehealth platforms. Proactive steps like these can help safeguard the integrity of virtual healthcare.

Why is human oversight essential for addressing deepfake risks in healthcare?

Human judgment is crucial in addressing the risks posed by deepfake technology because these tools are designed to exploit trust and can even mislead experienced professionals. When healthcare organizations pair human oversight with strong verification methods and advanced detection tools, they can more effectively spot manipulated content and mitigate potential dangers.

The stakes are high - deepfakes in healthcare can result in fraudulent schemes, spread misinformation, or even lead to incorrect diagnoses. Human involvement adds an essential layer of scrutiny, safeguarding sensitive patient information and preserving trust in healthcare systems.