Digital Hippocratic Oath: Balancing Medical AI Innovation with Cyber Safety

Post Summary

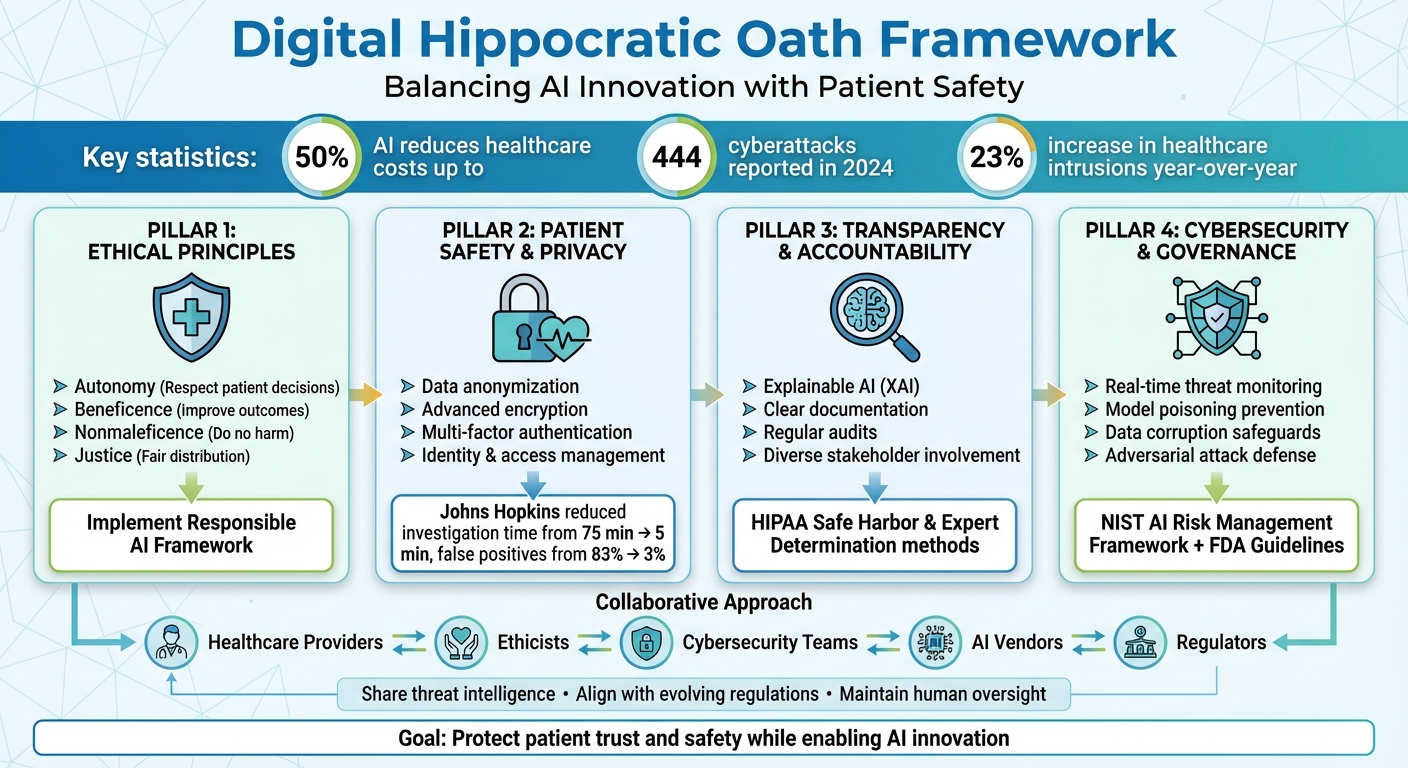

AI in healthcare is transforming patient care, but it comes with serious risks. It enables faster diagnostics, better treatment predictions, and cost reductions of up to 50%, yet it also raises concerns about data security and patient trust. Cyberattacks on healthcare systems are increasing, with 444 incidents reported in 2024 alone. These breaches threaten sensitive patient data, disrupt care, and can even endanger lives.

To address this, the concept of a "Digital Hippocratic Oath" has emerged. It calls on healthcare organizations to prioritize safety, transparency, and ethical AI use. This involves:

- Core principles: Respect patient autonomy, avoid harm, and ensure fairness in AI deployment.

- Cybersecurity measures: Use encryption, anonymize data, and regularly test for vulnerabilities.

- Accountability: Implement Explainable AI (XAI) and strong governance frameworks to build trust.

- Collaboration: Share threat intelligence and align with evolving regulations like FDA guidelines.

The goal is clear: balance innovation with responsibility to protect patient trust and safety in a rapidly evolving landscape.

Digital Hippocratic Oath Framework for Healthcare AI Implementation

Core Principles of the Digital Hippocratic Oath

The Digital Hippocratic Oath draws from the same ethical foundation as traditional medical principles: autonomy, beneficence, nonmaleficence, and justice [3]. These core principles act as essential guidelines for using AI in healthcare. Autonomy emphasizes the importance of respecting patients' rights to make informed decisions about their care. Beneficence focuses on ensuring that AI systems actively contribute to better patient outcomes. Nonmaleficence centers on the obligation to avoid causing harm. Justice ensures that the benefits of AI are distributed fairly, regardless of a patient's background or circumstances.

However, turning these principles into actionable practice takes more than good intentions. Healthcare organizations must adopt a Responsible AI framework that prioritizes fairness, minimizes bias, and ensures the technology works effectively for all users [6]. This means establishing clear accountability for every aspect of AI operations, so that when issues arise there is a defined point of responsibility [6]. Formal governance processes are also essential - they define roles, responsibilities, and clinical oversight throughout the AI lifecycle and align with established frameworks such as the voluntary NIST AI Risk Management Framework [5][1]. Together, these practices make the oath's ethical principles the backbone of effective AI deployment in healthcare.

Prioritizing Patient Safety in AI Deployment

Patient safety is the cornerstone of the Digital Hippocratic Oath, and AI systems must reflect this priority. Ensuring safety means implementing robust security measures during deployment, such as data anonymization, advanced encryption methods, multi-factor authentication, and stringent identity and access management policies [6][7].
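One common building block for the anonymization step is keyed pseudonymization: replacing a direct identifier such as a medical record number (MRN) with an HMAC token, so records can still be linked across datasets without exposing the original value. The sketch below is illustrative only; the hard-coded key is a placeholder assumption (a real key would live in a secrets manager), and pseudonymized data is generally still treated as identifiable, so this does not replace full de-identification.

```python
import hashlib
import hmac

# Placeholder key for illustration only (an assumption): in practice, load it from a
# secrets manager and never store it alongside the pseudonymized data.
PSEUDONYM_KEY = b"replace-with-a-secret-from-a-key-vault"


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Return a stable keyed pseudonym for a direct identifier such as an MRN.

    HMAC-SHA256 is deterministic for a given key, so the same patient maps to the same
    token across datasets, but the token cannot be recomputed without the key.
    """
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # shortened token for readability; keep more bits in production


def pseudonymize_record(record: dict, id_fields: set) -> dict:
    """Replace the listed identifier fields with pseudonyms; pass other fields through."""
    return {
        name: pseudonymize(str(value)) if name in id_fields else value
        for name, value in record.items()
    }


if __name__ == "__main__":
    raw = {"mrn": "MRN-001234", "name": "Jane Doe", "age": 57, "diagnosis": "I10"}
    print(pseudonymize_record(raw, id_fields={"mrn", "name"}))
```

Because the token is keyed, an attacker who obtains the dataset but not the key cannot rebuild the mapping simply by hashing a list of known MRNs.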

Healthcare organizations also need to involve a diverse group of stakeholders - healthcare providers, ethicists, and community representatives - to proactively identify potential safety risks before they escalate into real-world problems [7]. Regular audits are equally critical to address biases. AI systems trained on limited or unrepresentative datasets can lead to skewed outcomes, potentially harming specific patient groups. To prevent this, diverse and inclusive training data must be a priority [7].
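A bias audit can start with something as simple as comparing model performance across patient groups. In the minimal sketch below, the choice of accuracy as the metric and the 5-percentage-point gap threshold are assumptions. Real audits typically examine several metrics (for example sensitivity and false-negative rates) and need clinically justified thresholds and adequately sized subgroups.

```python
from collections import defaultdict


def subgroup_accuracy(y_true, y_pred, groups):
    """Accuracy computed separately for each patient subgroup."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def flag_disparities(per_group, max_gap=0.05):
    """Groups whose accuracy trails the best-performing group by more than max_gap (assumed)."""
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > max_gap)


if __name__ == "__main__":
    # Hypothetical labels and predictions for two patient groups, A and B.
    y_true = [1, 0, 1, 1, 0, 1, 0, 1]
    y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    per_group = subgroup_accuracy(y_true, y_pred, groups)
    print(per_group, flag_disparities(per_group))
```

Here group A is classified perfectly while group B is mostly misclassified, which is the kind of skew an unrepresentative training set can produce.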

Ensuring Transparency and Accountability

Building trust in healthcare AI systems requires Explainable AI (XAI) [4]. When an AI tool suggests a diagnosis or treatment, clinicians need to understand the reasoning behind the recommendation. Without this transparency, they cannot properly evaluate its validity or explain it to patients.
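Feature attribution is one common XAI technique. For a linear (logistic) model it can be computed exactly: each feature's contribution is its weight times how far the patient's value sits from a reference patient. In the sketch below, the model, its weights, and the baseline are hypothetical and not a validated clinical model. For non-linear models, methods such as SHAP or LIME estimate comparable per-feature contributions.

```python
import math

# Hypothetical coefficients for an illustrative risk model (not a validated clinical model).
WEIGHTS = {"heart_rate": 0.03, "lactate": 0.8, "age": 0.02}
INTERCEPT = -7.0
# Assumed reference patient (e.g., cohort averages) that explanations are measured against.
BASELINE = {"heart_rate": 80.0, "lactate": 1.0, "age": 50.0}


def log_odds(x):
    """Linear score of a logistic model: intercept plus weighted sum of the features."""
    return INTERCEPT + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)


def predict_proba(x):
    """Convert the log-odds into a probability with the logistic (sigmoid) function."""
    return 1.0 / (1.0 + math.exp(-log_odds(x)))


def explain(x):
    """Per-feature contributions to the log-odds relative to the baseline patient.

    For a linear model these sum (up to rounding) to log_odds(x) - log_odds(BASELINE),
    so a clinician can see which inputs pushed the score up or down, and by how much.
    """
    contributions = {f: WEIGHTS[f] * (x[f] - BASELINE[f]) for f in WEIGHTS}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


if __name__ == "__main__":
    patient = {"heart_rate": 110.0, "lactate": 4.0, "age": 70.0}
    print(f"risk = {predict_proba(patient):.2f}")
    for feature, contribution in explain(patient):
        print(f"{feature:>10}: {contribution:+.2f} log-odds")
```

For this hypothetical patient, the explanation ranks the elevated lactate as the largest driver of the score, which gives the clinician something concrete to verify against the chart.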

Clear documentation of AI development processes is another key aspect of transparency. Comprehensive records not only support scientific and regulatory reviews but also foster trust among both healthcare teams and patients. Transparency isn’t just about meeting compliance standards - it’s about creating systems that professionals feel confident using and that patients can rely on.

In addition to transparency, safeguarding data is essential for maintaining trust.

Protecting Patient Privacy and Data Integrity

To uphold patient trust and comply with regulations like HIPAA and GDPR, healthcare organizations must enforce transparent data practices, strong governance policies, and regular audits [8]. These measures are critical for adhering to the "minimum necessary standard."

This standard requires that AI tools access and use only the minimum Protected Health Information (PHI) necessary for their intended purpose, even if larger datasets might improve model performance [9][12]. De-identification must meet rigorous standards, such as HIPAA's Safe Harbor or Expert Determination methods, and must guard against re-identification risks, particularly when datasets are combined [9][10].
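The two ideas can be sketched together: first drop every field a given AI use case has not been approved to receive, then generalize what remains. In the illustrative sketch below, the purpose-to-fields policy, the field names, and the restricted ZIP prefixes are placeholder assumptions. It applies only three of the Safe Harbor rules: 3-digit ZIP prefixes, year-only dates, and ages over 89 grouped together. Full Safe Harbor requires removing all 18 identifier types and having no actual knowledge that the remaining data could identify someone.

```python
from datetime import date

# Hypothetical policy: the fields each approved AI use case may receive ("minimum necessary").
ALLOWED_FIELDS = {
    "readmission_model": {"age", "zip", "admit_date", "diagnosis_codes", "length_of_stay"},
}

# Placeholder set of 3-digit ZIP prefixes whose combined population is 20,000 or fewer.
# The real list must be derived from current Census Bureau data.
RESTRICTED_ZIP3 = {"036", "059"}


def minimum_necessary(record, purpose):
    """Keep only the fields approved for this purpose; an unknown purpose receives nothing."""
    allowed = ALLOWED_FIELDS.get(purpose, set())
    return {k: v for k, v in record.items() if k in allowed}


def safe_harbor_generalize(record):
    """Apply three Safe Harbor-style generalizations (NOT the full 18-identifier method)."""
    out = dict(record)
    if "zip" in out:
        zip3 = str(out["zip"])[:3]
        out["zip"] = "000" if zip3 in RESTRICTED_ZIP3 else zip3
    if "admit_date" in out:
        out["admit_date"] = out["admit_date"].year  # dates reduced to the year only
    if "age" in out and out["age"] > 89:
        out["age"] = "90+"  # ages over 89 aggregated into a single category
    return out


if __name__ == "__main__":
    ehr_row = {
        "name": "Jane Doe",
        "mrn": "MRN-001234",
        "age": 93,
        "zip": "02139",
        "admit_date": date(2024, 3, 14),
        "diagnosis_codes": ["I50.9"],
        "length_of_stay": 4,
    }
    print(safe_harbor_generalize(minimum_necessary(ehr_row, "readmission_model")))
```

Filtering by purpose first means a new use case gets no data until someone explicitly approves its field list.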

A compelling example of this approach in action comes from Johns Hopkins. By implementing an AI-powered privacy analytics platform, they cut investigation times from 75 minutes to just 5 minutes per case. Even more impressive, false-positive rates plummeted from 83% to 3% [11]. This demonstrates that safeguarding patient privacy and improving operational efficiency can go hand in hand. By rigorously enforcing privacy protocols, healthcare organizations uphold the oath's commitment to avoid harm while driving meaningful improvements in patient care.

Cybersecurity Risk Management for Healthcare AI

AI systems in healthcare are under increasing threat from cyberattacks. The ECRI Institute has ranked AI as the top health technology hazard for 2025, emphasizing the severe risks posed when medical AI systems are compromised or malfunction. These systems play a vital role in diagnostics and treatment recommendations, making them prime targets for cybercriminals. Alarmingly, healthcare organizations have already seen a 23% rise in intrusions year-over-year, with AI inadvertently aiding attackers in breaching networks [13]. To protect patient safety, robust cybersecurity measures must be integrated into AI development and deployment.

Identifying AI-Specific Cyber Risks

AI in healthcare comes with unique vulnerabilities. Three major risks stand out: model poisoning (tampering with training data or algorithms), data corruption (compromising the integrity of patient information), and adversarial attacks (introducing malicious inputs to manipulate AI outputs). Each of these threats can lead to disastrous outcomes.
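One simple control against data corruption and tampering with training data or model files is to record a cryptographic hash of each artifact at a known-good point and re-check it before training or deployment. The sketch below assumes artifacts are local files. It cannot detect poisoning that happened before the manifest was built, it does nothing against adversarial inputs at inference time, and the manifest itself must be stored or signed somewhere an attacker cannot modify.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large datasets and model weights need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(paths):
    """Record a trusted hash for each artifact at a known-good point in time."""
    return {str(p): sha256_file(p) for p in paths}


def find_tampered(manifest):
    """Return artifacts that are missing or whose contents changed since the manifest was built."""
    return [
        p for p, expected in manifest.items()
        if not Path(p).exists() or sha256_file(p) != expected
    ]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp, "train.csv")
        data.write_text("age,label\n57,0\n71,1\n")
        manifest = build_manifest([data])
        print(find_tampered(manifest))  # nothing has changed yet
        data.write_text("age,label\n57,1\n71,1\n")  # simulate a flipped label (poisoning)
        print(find_tampered(manifest))
```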

For instance, a ransomware attack at Düsseldorf University Hospital forced the transfer of a critical patient, resulting in a fatal delay in treatment [2]. This tragic event underscores how cybersecurity lapses in healthcare IT systems can have life-or-death consequences.

Using Censinet RiskOps™ for Risk Assessments

To tackle these challenges, healthcare organizations can use tools like Censinet RiskOps™, a platform designed to manage AI-specific cybersecurity risks. With its integrated solution, Censinet AI™, the platform streamlines third-party risk assessments by automating security questionnaires, summarizing vendor documentation, and tracking key details on integrations and fourth-party risks.

Censinet RiskOps™ centralizes AI governance and risk management. It routes key findings and tasks to appropriate stakeholders, including members of the AI governance committee, for timely review and approval. Its intuitive AI risk dashboard serves as a central hub for managing policies, risks, and tasks. This "human-in-the-loop" approach ensures that while automation enhances efficiency, critical decisions remain in the hands of healthcare leaders. This balance allows organizations to scale their risk management efforts without compromising patient safety.
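To illustrate the human-in-the-loop pattern in general terms, the hypothetical sketch below routes each finding to an owning reviewer, escalates high-severity items to a governance committee, and treats a finding as cleared only after every required person has signed off. It is not Censinet's API or data model. The role names and routing rules are assumptions.

```python
from dataclasses import dataclass, field

# Hypothetical routing table (not Censinet's data model): finding category -> owning reviewer.
ROUTES = {
    "privacy": "privacy_officer",
    "security": "ciso",
    "clinical_safety": "clinical_lead",
}
COMMITTEE = "ai_governance_committee"


@dataclass
class Finding:
    vendor: str
    category: str
    severity: str  # "low", "medium", or "high"
    approvals: set = field(default_factory=set)

    def required_reviewers(self):
        """Unknown categories and all high-severity findings also go to the committee."""
        reviewers = {ROUTES.get(self.category, COMMITTEE)}
        if self.severity == "high":
            reviewers.add(COMMITTEE)
        return reviewers

    def approve(self, reviewer):
        """Record a human sign-off; only designated reviewers count."""
        if reviewer in self.required_reviewers():
            self.approvals.add(reviewer)

    def is_cleared(self):
        """Automation can triage, but a finding clears only after every human reviewer approves."""
        return self.required_reviewers() <= self.approvals
```

The design choice is that automation decides *who* must look at a finding, never *whether* it is acceptable.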

Implementing Real-Time Threat Monitoring

Real-time monitoring is essential for identifying AI-specific threats and safeguarding patient data [5]. Healthcare organizations should develop detailed playbooks to handle AI-related cyber incidents. These playbooks should include strategies for addressing threats like model poisoning, data corruption, and adversarial attacks [5].
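A playbook can be encoded so that parts of it run automatically. The minimal sketch below maps incident types to ordered response steps and applies the first containment step when a login looks suspicious. The playbook contents, the detection rules (a login from a new country, or five failed attempts within ten minutes), and all names are assumptions for illustration. The remaining steps are left for humans.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical playbooks: ordered response steps per incident type (illustrative, not a standard).
PLAYBOOKS = {
    "model_poisoning": [
        "Take the affected model offline and revert to the last validated version",
        "Quarantine recently added training data for review",
        "Revalidate the model with clinical stakeholders before redeploying",
    ],
    "adversarial_input": [
        "Log and preserve the suspicious inputs",
        "Route affected predictions to manual clinician review",
    ],
    "suspicious_login": [
        "Restrict the identity's access to PHI and AI systems",
        "Force re-authentication with MFA",
        "Notify the security team for human review",
    ],
}


@dataclass
class Identity:
    user: str
    known_countries: set
    failed_attempts: list = field(default_factory=list)  # datetimes of recent failed logins
    restricted: bool = False


def is_suspicious(identity, country, now, max_failures=5, window=timedelta(minutes=10)):
    """Assumed rules: a login from a new country, or after a burst of failures, is suspicious."""
    recent = [t for t in identity.failed_attempts if now - t <= window]
    return country not in identity.known_countries or len(recent) >= max_failures


def on_login(identity, country, now):
    """Return the playbook steps to run; the first (containment) step is applied automatically."""
    if not is_suspicious(identity, country, now):
        return []
    identity.restricted = True  # automated containment; later steps await human action
    return PLAYBOOKS["suspicious_login"]


if __name__ == "__main__":
    now = datetime(2025, 1, 1, 9, 0)
    clinician = Identity("dr_smith", known_countries={"US"})
    for step in on_login(clinician, "XX", now):
        print(step)
```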

"Instead of having a human notice an event and react to it, AI-powered incident response solutions can recognize that an incident is occurring and automatically begin the process laid out in the organization's security policies. It can recognize a suspicious login and put constraints around that identity to protect the health system's data. That's becoming more commonplace." - HealthTech Magazine [13]

Organizations must also focus on swiftly restoring compromised AI models and maintaining secure, verifiable backups. Regular resilience testing of AI systems is vital, as is fostering collaboration between cybersecurity and data science teams. Additionally, sharing threat intelligence across the healthcare sector strengthens collective defenses and improves response times [13]. As AI continues to drive innovation in healthcare, protecting patient data must remain a top priority, alongside delivering ethical care.
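One simple form of resilience testing is to check whether small, random perturbations to inputs change a model's predictions. The sketch below assumes a model exposed as a Python function that returns a class label. The 5% noise level, the trial count, and the toy classifier are arbitrary assumptions. Random noise is much weaker than a deliberate adversarial attack, so a low flip rate is necessary but not sufficient evidence of robustness.

```python
import random


def flip_rate(predict, samples, epsilon=0.05, trials=20, seed=0):
    """Share of randomly perturbed inputs whose predicted label differs from the clean one.

    Each feature is multiplied by a random factor in [1 - epsilon, 1 + epsilon], a crude
    stand-in for measurement noise. A fixed seed keeps the test repeatable.
    """
    rng = random.Random(seed)
    flips = total = 0
    for x in samples:
        clean = predict(x)
        for _ in range(trials):
            noisy = [v * (1 + rng.uniform(-epsilon, epsilon)) for v in x]
            flips += int(predict(noisy) != clean)
            total += 1
    return flips / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical toy classifier: flags a case when the summed score exceeds 10.
    def toy_model(x):
        return int(sum(x) > 10)

    stable_cases = [[1.0, 1.0, 1.0], [20.0, 20.0, 20.0]]
    borderline_cases = [[5.0, 5.01]]
    print(flip_rate(toy_model, stable_cases))      # far from the decision threshold
    print(flip_rate(toy_model, borderline_cases))  # sits right at the threshold
```

Tracking this rate across model versions gives a simple regression signal: a retrained model that suddenly flips far more often deserves a closer look before deployment.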


Building Resilient AI Systems: Governance and Compliance

Turning broad AI governance principles into actionable steps can be tricky. For healthcare organizations, it's essential to ensure AI applications remain innovative, safe, and trustworthy [15]. Adding to the complexity, the regulatory environment is constantly evolving - especially with technologies like generative AI - demanding frameworks that are flexible and responsive to both technological advancements and regulatory updates [14]. Without clear guidance, healthcare leaders often find it challenging to identify, evaluate, and manage AI-specific risks while staying compliant. This shifting landscape calls for structured frameworks that make these principles practical.

Establishing AI Governance Frameworks

The Health Sector Coordinating Council (HSCC) is actively working on its 2026 guidance to address AI cybersecurity risks. This includes initiatives focused on AI governance, secure-by-design principles, and managing third-party risks [5]. The HSCC's Governance subgroup is crafting a framework that spans the entire AI lifecycle, aligns with HIPAA and FDA regulations, and incorporates AI-specific security and data management practices, with references to the NIST AI Risk Management Framework [5].

Healthcare organizations should implement formal governance structures with clearly defined roles and responsibilities, ensuring clinical oversight throughout the AI lifecycle [5]. Maintaining an up-to-date inventory of AI systems and their security considerations is crucial [5]. Collaboration between engineering, cybersecurity, and clinical teams is equally important, allowing AI-specific risks to be seamlessly integrated into medical device risk management and development processes [5].
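An AI inventory can start as a simple structured record per system. The sketch below uses hypothetical fields and assumed review intervals (180 days for systems that handle PHI, 365 days otherwise) and flags entries whose security review has lapsed. A real inventory would also capture items such as data flows, model versions, and regulatory status.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class AISystem:
    name: str
    owner: str        # accountable clinical or business owner
    vendor: str       # "internal" for in-house models
    handles_phi: bool
    last_security_review: date


def overdue_reviews(inventory, today, max_age_days=365, phi_max_age_days=180):
    """Names of systems whose last security review is older than policy allows.

    Systems that touch PHI get a shorter interval; both intervals are assumptions, not a standard.
    """
    overdue = []
    for system in inventory:
        limit = phi_max_age_days if system.handles_phi else max_age_days
        if today - system.last_security_review > timedelta(days=limit):
            overdue.append(system.name)
    return overdue


if __name__ == "__main__":
    inventory = [
        AISystem("sepsis-alert", "CMIO", "internal", True, date(2024, 6, 1)),
        AISystem("bed-forecast", "COO", "VendorX", False, date(2024, 6, 1)),
    ]
    print(overdue_reviews(inventory, today=date(2025, 1, 15)))
```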

"It's also important to break down siloes where they exist. The security and data teams should be working together rather than duplicating efforts or creating gaps due to lack of communication. This collaboration is helpful when it comes to data inventory as well. Both teams will have a unique perspective on data, and that information should be shared." - HealthTech Magazine [13]

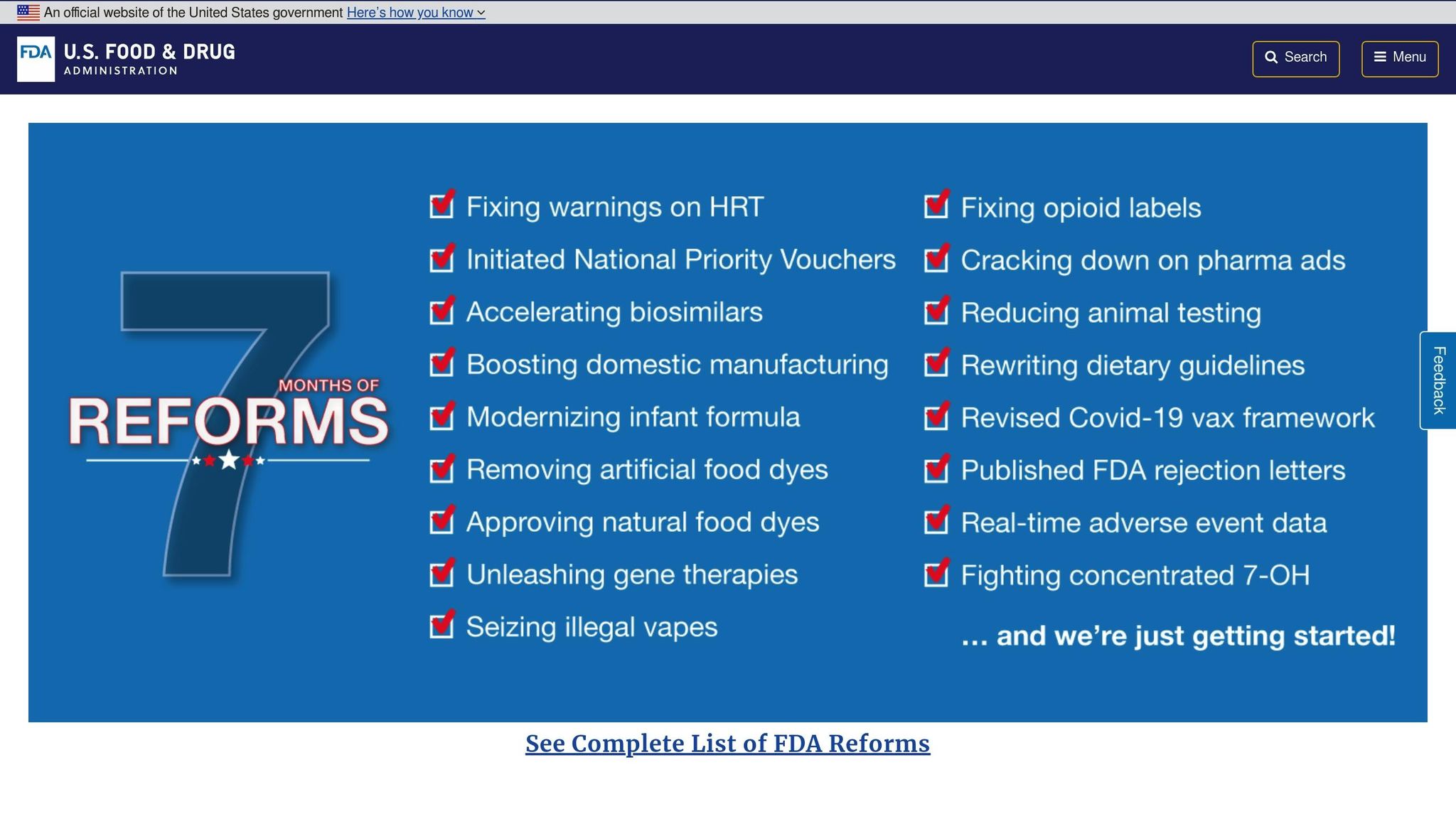

FDA Cybersecurity Guidance for Medical Devices

In 2025, the FDA updated its cybersecurity guidance for medical devices and, in January 2025, issued draft guidance specifically addressing AI-enabled device software functions. These updates emphasize transparency, secure-by-design principles, and ongoing lifecycle management for AI systems in medical devices [16][17][18][19]. Healthcare Delivery Organizations (HDOs) and Medical Device Manufacturers (MDMs) now share responsibility for mitigating cybersecurity risks in connected devices, including those driven by AI [16].

The HSCC's Secure by Design Medical subgroup is prioritizing strong cybersecurity measures for AI-enabled devices, encouraging collaboration across engineering, cybersecurity, regulatory, and clinical teams [5]. Healthcare organizations need to align their AI governance strategies with these evolving FDA requirements while keeping operations efficient. This includes implementing strict security protocols such as anonymizing data, managing identities and access, encrypting sensitive information, and ensuring secure authentication [22].
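Many multi-factor authentication deployments rely on time-based one-time passwords (TOTP) generated by authenticator apps. To show what "secure authentication" involves under the hood, here is a compact sketch of the RFC 6238 algorithm (built on RFC 4226 HOTP) using only the Python standard library. It is illustrative only: production systems should use a vetted library, protect the shared secrets, and add rate limiting and replay protection.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1, the RFC's default."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits of the last byte pick an offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, for_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    t = time.time() if for_time is None else for_time
    return hotp(secret, int(t // step), digits)


def verify_totp(secret: bytes, submitted: str, for_time=None, step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current step or +/- `window` steps to tolerate clock drift."""
    t = time.time() if for_time is None else for_time
    candidates = (t + i * step for i in range(-window, window + 1))
    return any(
        hmac.compare_digest(totp(secret, c, step), submitted)  # constant-time comparison
        for c in candidates
        if c >= 0
    )


if __name__ == "__main__":
    demo_secret = b"12345678901234567890"  # the RFC test secret; never reuse it in practice
    print(totp(demo_secret))
```

The demo secret is the one used in the RFC 4226 and RFC 6238 test vectors, which makes the implementation easy to check against the published values.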

As regulatory bodies push for secure design and continuous management, centralized tools can simplify compliance and enhance risk oversight.

Collaborative Risk Management with Censinet

In keeping with the Digital Hippocratic Oath's principle of doing no harm, platforms like Censinet RiskOps™ are helping organizations operationalize ethical AI governance. Acting as a centralized hub, Censinet RiskOps™ provides an AI risk dashboard that consolidates real-time data on AI policies, risks, and tasks. Think of it as "air traffic control" for AI governance, where critical findings and tasks are automatically routed to the appropriate stakeholders, including members of the AI governance committee.

The HSCC's Third-Party AI Risk and Supply Chain Transparency subgroup stresses the importance of collaboration between healthcare organizations and AI vendors to strengthen security and resilience within healthcare supply chains [5]. Organizations are encouraged to improve visibility into third-party AI systems, enforce oversight policies, and standardize procurement and lifecycle management processes [5]. Tools like Censinet AI™ streamline these efforts by automating questionnaires, summarizing vendor documentation, and tracking integration and fourth-party risks.
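Tracking fourth-party risk amounts to following dependencies beyond a direct vendor. As a hypothetical sketch (the vendor names and supplier map are invented for illustration), a breadth-first walk over a supplier graph lists every downstream party and how far it sits from the hospital's direct vendor.

```python
from collections import deque

# Hypothetical supply chain: each party maps to the subcontractors it depends on.
SUPPLY_CHAIN = {
    "VendorX": ["CloudHostA", "LabelingCo"],
    "LabelingCo": ["AnnotationSubcontractor"],
    "CloudHostA": [],
    "AnnotationSubcontractor": [],
}


def downstream_parties(vendor, graph):
    """Breadth-first walk from a direct (third-party) vendor.

    Returns {party: depth}, where depth 1 means a fourth party (the vendor's own supplier)
    and larger depths are further down the chain. Already-seen parties are skipped, so
    circular dependencies do not cause an infinite loop.
    """
    depth = {}
    seen = {vendor}
    queue = deque([(vendor, 0)])
    while queue:
        party, d = queue.popleft()
        for supplier in graph.get(party, []):
            if supplier not in seen:
                seen.add(supplier)
                depth[supplier] = d + 1
                queue.append((supplier, d + 1))
    return depth


if __name__ == "__main__":
    print(downstream_parties("VendorX", SUPPLY_CHAIN))
```

Breadth-first order assigns each party its shortest distance from the direct vendor, which is the natural way to tier nth-party exposure.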

Healthcare organizations are also urged to voluntarily share information about cyber-related events that could threaten critical infrastructure. Platforms like the Health Sector Cybersecurity Coordination Center (HC3) and Information Sharing and Analysis Centers (ISACs) offer valuable spaces for sharing threat intelligence, best practices, and security insights [20][21]. This collaborative approach allows the healthcare sector to respond more effectively to cybersecurity threats, leveraging shared knowledge to enhance overall security [21].

Conclusion

Key Takeaways for Healthcare Professionals

Healthcare leaders have a responsibility to integrate AI into medical practices with a focus on ethics and security, guided by the principles of the Digital Hippocratic Oath. This involves adopting a risk-based approach throughout the AI lifecycle. To ensure patient safety, privacy, and informed consent, collaboration among clinical, cybersecurity, and engineering teams is critical. Professionals should establish governance structures with clearly defined roles, maintain up-to-date inventories of AI systems, and ensure that human oversight complements AI capabilities. These steps are especially important as regulatory frameworks for AI continue to develop.

The National Academy of Medicine underscores this need for trust:

"As AI becomes more embedded in health care, it is essential to ensure that its use fosters trust and collaboration across the health care ecosystem" [23].

Trust in AI is built not on technology alone but on transparent practices, strong accountability, and a commitment to safeguarding patient data. Moving forward, healthcare professionals must strike a balance between embracing innovation and adhering to rigorous ethical and security standards.

The Path Forward: Aligning Innovation with Responsibility

The future of AI in healthcare lies in balancing innovation with the responsibility to protect sensitive patient data. This requires a multidisciplinary approach and a commitment to continuous learning, given the rapid evolution of both AI technologies and cyber threats. Healthcare organizations should utilize centralized platforms for real-time risk management, adhere to FDA guidelines for AI-enabled medical devices, and actively participate in information-sharing initiatives to enhance situational awareness.

AI's integration into healthcare comes with ethical, safety, and accountability challenges. By adhering to the principles of a Digital Hippocratic Oath, healthcare professionals can ensure that AI developments align with patient trust and safety. Building a shared understanding among all stakeholders - patients, providers, developers, and vendors - will help the healthcare sector harness AI's potential to improve outcomes while preserving the trust that is foundational to patient care.

FAQs

What does the Digital Hippocratic Oath mean for AI in healthcare?

The Digital Hippocratic Oath in healthcare embodies a pledge to apply AI and other digital tools with care, integrity, and security. Its core focus is on safeguarding patient well-being, privacy, and trust by upholding principles such as doing good, avoiding harm, protecting data, and being accountable.

By following this framework, organizations can pursue advances in medical AI responsibly, keeping sensitive patient information secure and maintaining trust while meeting rigorous ethical and cybersecurity guidelines.

How can healthcare providers protect patient data while using AI technologies?

Healthcare providers can safeguard patient data while using AI by adopting a thoughtful and organized approach to cybersecurity. It starts with keeping a detailed inventory of all AI systems in use. This helps ensure a clear understanding of their functions and any possible vulnerabilities.

Next, focus on implementing strong security protocols. These can include measures like multifactor authentication, network segmentation, and designing systems with security built in from the ground up. These steps are critical to protecting sensitive patient information.

Conducting regular risk assessments is another key element. These evaluations help pinpoint potential threats and allow organizations to address them proactively. Additionally, establishing clear governance frameworks that comply with regulations like HIPAA and FDA standards ensures that security practices are aligned with legal requirements.
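A common way to structure these assessments is a likelihood-by-impact risk register. In the minimal sketch below, the 1-to-5 scales, the example risks, and the treatment threshold of 12 are assumptions rather than a prescribed standard. It scores each risk and ranks the list so the highest-priority items surface first.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe patient harm)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks, treat_threshold=12):
    """Rank risks by score, marking those at or above the threshold as needing a mitigation plan."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.description, r.score, r.score >= treat_threshold) for r in ranked]


if __name__ == "__main__":
    register = [
        Risk("Vendor lacks SOC 2 report", likelihood=3, impact=3),
        Risk("Unpatched server hosting imaging AI", likelihood=4, impact=5),
        Risk("Model drift in triage tool", likelihood=3, impact=4),
    ]
    for description, score, needs_plan in prioritize(register):
        print(f"{score:>2}  {'TREAT' if needs_plan else 'monitor'}  {description}")
```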

By emphasizing transparency, adhering to compliance standards, and maintaining robust security measures, healthcare providers can strike a balance between embracing innovation and preserving patient trust.

How does Explainable AI (XAI) help build trust in healthcare AI systems?

Explainable AI (XAI) plays a pivotal role in fostering trust within healthcare by shedding light on how AI systems make decisions. Instead of leaving clinicians and patients guessing, XAI provides a clear view of the reasoning behind specific outcomes, tackling the often-criticized "black-box" nature of many AI models.

This clarity strengthens both accountability and confidence in AI-powered tools. In healthcare, where decisions can directly affect patients' lives, transparency isn't just helpful - it's essential. By making AI reasoning understandable, XAI helps clinicians judge whether a tool's output is reliable and trustworthy in critical, life-impacting scenarios.