Dynamic Risk Modeling: How AI Adapts Risk Programs in Real-Time

Post Summary

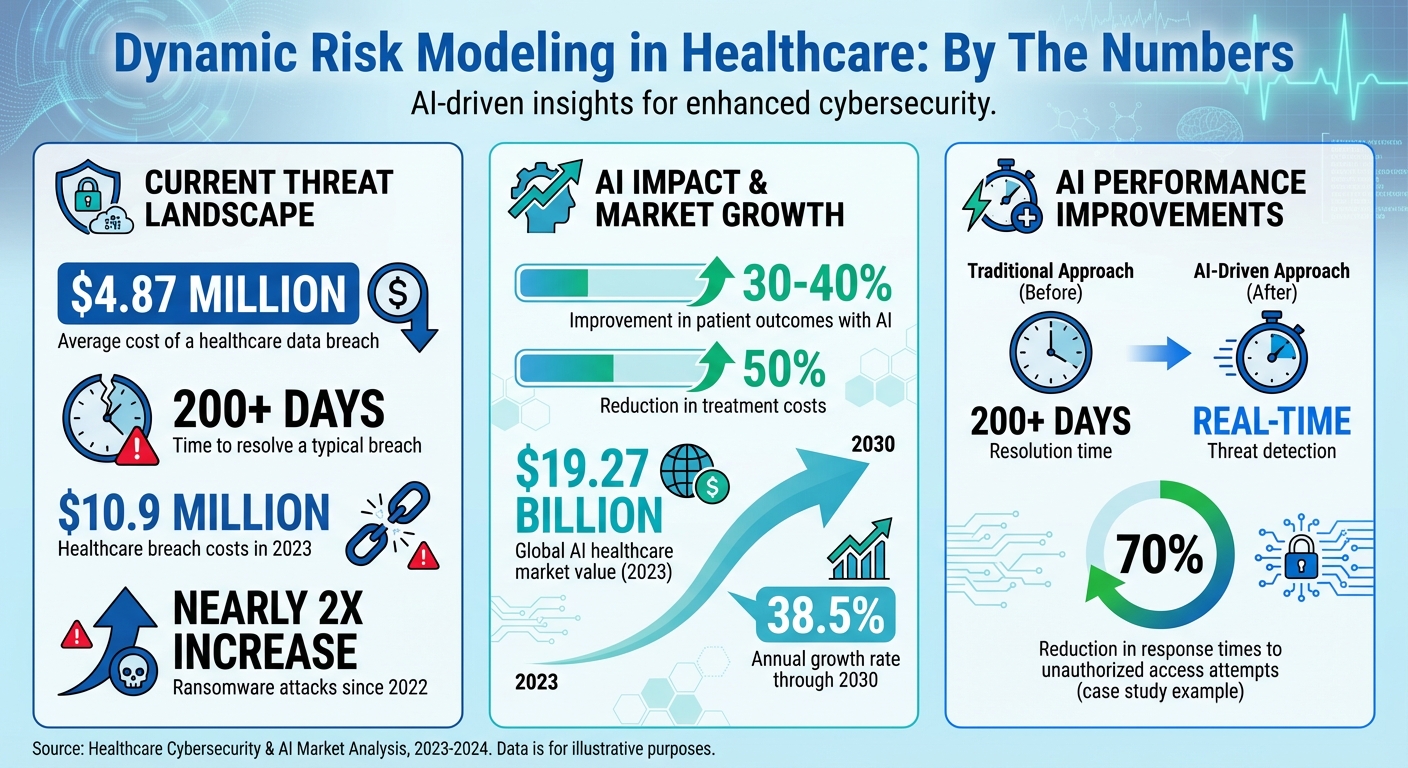

Dynamic risk modeling powered by AI is transforming how healthcare organizations manage threats, especially in cybersecurity. Traditional methods often fail to keep up with the fast-changing risk landscape, where breaches cost an average of $4.87 million and take over 200 days to resolve. AI-driven systems, however, continuously monitor and respond to risks in real time, offering faster detection and mitigation.

Here’s what you need to know:

- Why It Matters: Healthcare faces unique challenges like protecting patient data and maintaining regulatory compliance. AI tools can monitor network activity, detect anomalies, and isolate threats before they escalate.

- How It Works: AI models use centralized data from electronic health records (EHRs), Internet of Medical Things (IoMT) devices, and other systems to identify risks. They automate tasks like risk scoring, compliance checks, and vendor assessments.

- Tools in Action: Platforms like Censinet RiskOps™ streamline risk management by aggregating data, automating responses, and ensuring secure workflows.

- Human Oversight: While AI automates many processes, human review remains critical for decisions impacting patient safety or critical systems.

- Future Outlook: With healthcare cybersecurity costs rising, AI will play a key role in real-time threat detection, compliance, and operational safety.

AI-powered risk models are reshaping healthcare cybersecurity, ensuring faster responses and prioritizing patient safety.

AI-Driven Healthcare Cybersecurity: Key Statistics and Impact Metrics

Preparing Data and Technology for AI Risk Models

Connecting Key Data Sources

To build effective AI risk models in healthcare, the first step is to centralize data from various systems across your organization. This includes integrating information from Electronic Health Records (EHRs), Internet of Medical Things (IoMT) devices, medical equipment, medication management systems, ICU monitors, laboratory systems, and more. Beyond clinical data, operational tools, financial systems, HR platforms, incident reporting tools, vulnerability databases, and third-party vendor assessments also play a critical role in creating a comprehensive data network [1][6].

Using interoperability standards like HL7 FHIR, APIs, and connectors, you can unify and normalize these diverse data sets. This approach reduces blind spots, shortens response times, and mitigates vulnerabilities tied to regulatory and cybersecurity risks [6]. With this integrated data foundation, AI systems can proactively monitor threats and adapt to new risks in real time.

In November 2025, the Health Sector Coordinating Council (HSCC) Cybersecurity Working Group shared early previews of its 2026 guidance on managing AI cybersecurity risks. The guidance highlights the importance of maintaining an up-to-date inventory of all AI systems. This inventory helps organizations understand each system's functionality, data dependencies, and security requirements while emphasizing continuous monitoring and secure model backups [5].
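As a rough illustration of what such an inventory might look like in practice, the sketch below models one entry per AI system and flags entries that have not been reviewed recently. The field names, the `stale_records` helper, and the 90-day review window are assumptions made for this example; they are not taken from the HSCC guidance.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AISystemRecord:
    """One inventory entry; the field names are illustrative assumptions."""
    name: str
    function: str                     # what the system does
    data_dependencies: list[str]      # data sources it reads from
    security_requirements: list[str]  # controls it must satisfy
    last_reviewed: date               # when the entry was last confirmed


def stale_records(inventory: list[AISystemRecord], today: date,
                  max_age_days: int = 90) -> list[str]:
    """Return names of entries not reviewed within max_age_days (assumed policy)."""
    return [r.name for r in inventory
            if (today - r.last_reviewed).days > max_age_days]


# Hypothetical systems, used only to exercise the helper.
inventory = [
    AISystemRecord("vendor-risk-summarizer", "summarizes vendor evidence",
                   ["security questionnaires"], ["role-based access"],
                   date(2025, 9, 1)),
    AISystemRecord("network-anomaly-detector", "flags unusual traffic",
                   ["SIEM logs", "IoMT telemetry"], ["audit trail", "model backup"],
                   date(2025, 3, 1)),
]
overdue = stale_records(inventory, today=date(2025, 11, 15))
```

Here only the second entry is overdue for review, which is the kind of signal a governance team could use to keep the inventory current.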

Once your data is centralized, the next step is to assess risks using a structured scoring framework.

Creating a Risk Scoring Framework

A solid risk scoring framework is essential for measuring AI-detected threats. It provides a way to assess the likelihood and impact of risks, especially those that could affect patient safety. This framework should seamlessly integrate into your broader Clinical Risk Management or Enterprise Risk Management strategies and help evaluate new technologies and vulnerabilities [1]. The most effective frameworks take a systems-based approach, analyzing how people, processes, and technologies interact.

For example, a ransomware attack on a hospital in 2020 demonstrated how IT vulnerabilities can directly threaten patient safety. This incident underscores the importance of a well-structured risk scoring framework [1].
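One common way to express likelihood and impact as a single number is a simple product, optionally weighted when patient safety is involved. The sketch below assumes 1 to 5 scales, a hypothetical `safety_weight` multiplier, and made-up band thresholds; a real framework would calibrate all of these against its own Clinical or Enterprise Risk Management policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int       # 1 (rare) to 5 (almost certain); assumed scale
    impact: int           # 1 (negligible) to 5 (severe); assumed scale
    patient_safety: bool  # True if the affected asset directly supports care


def score(risk: Risk, safety_weight: float = 1.5) -> float:
    """Likelihood x impact, boosted by an assumed weight for patient-safety risks."""
    base = float(risk.likelihood * risk.impact)
    return base * safety_weight if risk.patient_safety else base


def band(value: float) -> str:
    """Map a score to a label; the cut-offs are illustrative, not a standard."""
    if value >= 20:
        return "critical"
    if value >= 12:
        return "high"
    if value >= 6:
        return "medium"
    return "low"


pump = Risk("infusion pump firmware flaw", likelihood=3, impact=4, patient_safety=True)
portal = Risk("billing portal misconfiguration", likelihood=3, impact=4, patient_safety=False)
```

With these assumptions, `score(pump)` is 18.0 and `score(portal)` is 12.0, so two risks with identical likelihood and impact are ranked differently once patient safety is taken into account.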

"The introduction of AI in healthcare implies unconventional risk management, and risk managers must develop new mitigation strategies to address emerging risks" [1].

To put these strategies into action, tools like Censinet RiskOps™ can simplify data aggregation and reporting, making risk management more efficient.

Using Censinet RiskOps™ for Data Aggregation

Censinet RiskOps™ acts as a centralized platform for managing healthcare cybersecurity data. By breaking down data silos, it creates a single source of truth, offering real-time visibility through user-friendly dashboards. This helps executives make faster, more informed decisions. Built on a HIPAA-compliant cloud infrastructure, the platform incorporates encryption, audit trails, and role-based access controls to ensure secure and streamlined compliance processes. Automated monitoring and reporting further reduce errors and improve data accuracy during audits.

For even greater efficiency, Censinet AI™ accelerates risk assessments by enabling vendors to complete security questionnaires in seconds. It automatically summarizes evidence, documentation, and assessment data into risk summary reports. Risk teams stay in the loop through configurable rules and review processes, so AI enhances decision-making without replacing critical human oversight. As a result, organizations can scale their risk management operations while prioritizing patient safety.

Building AI Risk Models for Healthcare

Setting Model Goals and Requirements

To create effective AI risk models, start by translating your risk management goals into specific technical requirements. Whether the aim is to automate third-party vendor assessments, detect unusual network activity, or rank vulnerabilities based on their potential impact on patients, each objective demands unique data inputs, algorithms, and performance benchmarks.

Make sure your AI model requirements align with regulatory frameworks like HIPAA and FDA guidelines. For instance, clearly outline how Protected Health Information (PHI) will be handled, ensure audit trails are established, and confirm seamless integration with existing systems. Assign clear roles for clinical oversight throughout the AI lifecycle, embedding security and data governance considerations from the outset.

Once these goals and requirements are well defined, AI applications can be tailored to meet them.

AI Applications in Healthcare Cybersecurity

AI plays a pivotal role in dynamic risk modeling by enhancing continuous threat monitoring. Anomaly detection systems, for example, monitor networks and devices to spot unusual activity. These systems learn typical patterns - whether from Electronic Health Record (EHR) logs or Internet of Medical Things (IoMT) communications - and flag deviations that could signal a threat.

Automated risk scoring further streamlines processes by analyzing vendor documentation, security questionnaires, and compliance data. Additionally, integrating threat intelligence allows AI models to draw from external feeds, enabling real-time adaptation to newly identified vulnerabilities and shifting attack strategies.
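To make the "learn typical patterns, flag deviations" idea concrete, here is a minimal sketch of a statistical baseline. It uses a z-score over a single metric (for example, record accesses per hour for one account), which is a deliberately simple stand-in for whatever model a production system would use. The function names and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev


def fit_baseline(history: list[float]) -> tuple[float, float]:
    """Learn the typical level and spread of a metric from past observations."""
    return mean(history), stdev(history)


def is_anomalous(value: float, baseline: tuple[float, float],
                 z_threshold: float = 3.0) -> bool:
    """Flag values more than z_threshold standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation was observed, so any change counts as a deviation.
        return value != mu
    return abs(value - mu) / sigma > z_threshold


# Hypothetical hourly EHR access counts for one account.
history = [40, 42, 38, 41, 39, 40, 43, 37]
baseline = fit_baseline(history)
```

With this history the baseline is a mean of 40 and a standard deviation of 2, so a reading of 41 passes while a reading of 400 is flagged. Real deployments typically track many signals at once and retrain the baseline as behavior changes.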

Prioritizing Patient Safety in Risk Models

While technical and operational capabilities are essential, patient safety must always take precedence. Configure your models to evaluate threats based on their potential impact on clinical operations and patient care. For example, prioritize risks affecting critical assets like infusion pumps, ventilators, ICU monitors, or medication dispensers, ensuring they trigger immediate responses.

Time sensitivity is especially crucial in healthcare settings. A vulnerability in a laboratory system might allow for a 48-hour remediation window, but a threat to an operating room network requires immediate attention. AI models should incorporate these clinical priorities into their scoring algorithms, ensuring that risks to life-saving equipment or emergency services demand faster action. This approach not only strengthens AI-driven risk management but also ensures that patient safety remains at the heart of every automated decision, aligning seamlessly with broader Clinical Risk Management strategies.
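One way to encode these clinical priorities is a lookup from asset class to remediation window. In the sketch below, the 48-hour laboratory window and the immediate operating-room response come from the example above; the other asset classes, the seven-day value, and the function name are assumptions for illustration.

```python
from datetime import datetime, timedelta

# Remediation windows per asset class. Only "laboratory" and "operating_room"
# reflect the text above; the remaining entries are illustrative assumptions.
REMEDIATION_WINDOWS = {
    "life_support": timedelta(0),         # immediate
    "operating_room": timedelta(0),       # immediate
    "laboratory": timedelta(hours=48),
    "administrative": timedelta(days=7),  # assumed value
}


def remediation_deadline(asset_class: str, detected_at: datetime) -> datetime:
    """Return the time by which a finding on this asset class must be addressed.

    Unknown asset classes are treated as immediate, which fails safe.
    """
    window = REMEDIATION_WINDOWS.get(asset_class, timedelta(0))
    return detected_at + window


detected = datetime(2025, 1, 1, 8, 0)
lab_due = remediation_deadline("laboratory", detected)
or_due = remediation_deadline("operating_room", detected)
```

Sorting open findings by deadline then gives a work queue in which threats to life-saving equipment always surface first.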

Implementing Dynamic Risk Modeling in Daily Operations

Connecting AI Models to Operational Tools

To bring AI risk models into the daily flow of operations, it's crucial to link them with tools like SIEM systems, electronic health records (EHRs), and access control platforms. This connection enables continuous monitoring and automates responses to potential threats. For instance, when suspicious access patterns to patient records or medical device networks are detected, workflows can trigger immediate actions - such as isolating compromised systems, disabling accounts showing unusual activity, or stopping ransomware from spreading. These real-time responses transform AI from a passive detection tool into an active component of your defense strategy.

This seamless integration lays the groundwork for centralized risk management using platforms like Censinet RiskOps™.

Managing Risk Through Censinet RiskOps™

Censinet RiskOps™ takes AI-driven insights and translates them into actionable, real-time responses. Acting as a central hub, it organizes and routes critical risk data to the right teams. The platform pulls in live data on third-party vendor risks, enterprise vulnerabilities, medical device security, and compliance gaps, then ensures swift, coordinated action by automatically alerting the appropriate teams - think of it as the "air traffic control" of risk management.

For example, if AI identifies a high-risk issue with a key medical device, RiskOps™ ensures the alert reaches clinical engineering, biomedical teams, and security operations at the same time, enabling a unified response. Additionally, Censinet AI™ enhances this process by speeding up vendor assessments and generating comprehensive risk reports. This combination delivers faster results without sacrificing accuracy or depth.

Maintaining Human Oversight of AI Decisions

Even with automated responses in place, human oversight remains essential, especially for critical decisions. AI should support human judgment, not replace it. Establish review processes where team members validate AI-generated risk assessments, particularly when decisions affect patient care or critical systems. For high-severity threats, set up approval workflows that require human sign-off before executing automated actions or making changes to vital equipment.

A balanced approach is key. For example, AI might automatically patch vulnerabilities in non-critical systems, but updates to life-supporting medical devices should always require clinical approval. This "human-in-the-loop" strategy combines AI's speed with the expertise of professionals, ensuring that context and nuance are considered. Regular reporting on automated actions helps maintain transparency and accountability across all AI-driven risk management activities.
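The approval rule described above can be sketched as a small routing function: actions on life-supporting devices or high-severity threats wait for human sign-off, and everything else may run automatically. The class and function names, the severity labels, and the in-memory audit log are assumptions for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_EXECUTE = "auto_execute"
    NEEDS_APPROVAL = "needs_approval"


@dataclass(frozen=True)
class ProposedAction:
    description: str
    severity: str          # "low", "medium", or "high" (assumed labels)
    life_supporting: bool  # True if the target device sustains a patient


audit_log: list[tuple[str, str]] = []  # (description, decision) for later reporting


def route(action: ProposedAction) -> Decision:
    """Send risky actions to a human reviewer and record every decision."""
    if action.life_supporting or action.severity == "high":
        decision = Decision.NEEDS_APPROVAL
    else:
        decision = Decision.AUTO_EXECUTE
    audit_log.append((action.description, decision.value))
    return decision


patch_kiosk = ProposedAction("patch lobby kiosk", "low", life_supporting=False)
update_vent = ProposedAction("update ventilator firmware", "medium", life_supporting=True)
```

Routing `patch_kiosk` yields `Decision.AUTO_EXECUTE`, while `update_vent` yields `Decision.NEEDS_APPROVAL` even though its severity is only medium. The audit log gives the regular reporting on automated actions something concrete to draw from.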

Governing and Improving AI Risk Models

Setting Up AI Governance Structures

Building a strong AI governance framework starts with assigning clear roles and responsibilities throughout the entire AI lifecycle - from initial development to deployment and ongoing operation. Establish an AI governance committee that includes representatives from clinical, IT security, compliance, and operational teams. This group should oversee all AI systems, maintaining a detailed inventory that documents their functions, data dependencies, and security considerations. Such a framework ensures that AI tools consistently align with organizational priorities and support daily risk management efforts [5].

To manage risk effectively, implement a five-level autonomy scale to classify AI tools. Low-risk tools may require minimal oversight, but those that influence clinical decisions demand thorough reviews. Governance policies should clearly define acceptable uses of AI, prohibit consumer-grade tools for handling Protected Health Information (PHI), and require Business Associate Agreements with all AI vendors [8]. These measures should comply with HIPAA regulations and align with frameworks like the NIST AI Risk Management Framework to establish robust security protocols for AI systems [5].

Testing and Monitoring AI Model Performance

AI models need constant validation to ensure they perform as expected. Regularly test their predictions against observed outcomes and adjust alert thresholds when patterns shift. Incorporate routine vulnerability assessments and penetration testing (VAPT) across production, user acceptance testing, and deployment environments [1][4]. For example, HIPAA Vault collaborated with a surgical robotics firm to deploy machine learning models that continuously monitored system activity. Their AI-enhanced threat detection reduced response times to unauthorized access attempts by 70% [10].
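Testing against outcomes usually means comparing what the model flagged with what analysts later confirmed. A minimal sketch, assuming each alert has a model score and a confirmed true/false label, is to compute precision and recall at a candidate threshold. The function name and the sample data are hypothetical.

```python
def precision_recall(scores: list[float], labels: list[bool],
                     threshold: float) -> tuple[float, float]:
    """Precision and recall when every score >= threshold is treated as an alert."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y)      # real threats flagged
    fp = sum(1 for s, y in pairs if s >= threshold and not y)  # false alarms
    fn = sum(1 for s, y in pairs if s < threshold and y)       # missed threats
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical model scores and analyst-confirmed outcomes.
scores = [0.9, 0.8, 0.4, 0.3, 0.2]
labels = [True, False, True, False, False]
strict = precision_recall(scores, labels, threshold=0.5)
loose = precision_recall(scores, labels, threshold=0.35)
```

At a threshold of 0.5 this data gives precision 0.5 and recall 0.5; lowering the threshold to 0.35 catches the missed threat and raises recall to 1.0. Tracking both numbers over time shows when a threshold needs adjusting.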

Bias testing is equally critical to avoid unfair treatment of providers or staff. As Riddle Compliance emphasized:

"AI must be trained, validated, and monitored for bias or blind spots" [9].

Prepare for AI-specific threats by creating incident response playbooks for scenarios like model poisoning, data corruption, or adversarial attacks. Keep verifiable backups to enable quick recovery when needed. These monitoring practices provide valuable insights that support timely updates to your AI models [5].

Updating Models Based on New Information

AI risk models must evolve in step with new threats and regulatory changes. When incidents or near misses occur, integrate those lessons into your models immediately. Because conditions keep changing, models should continuously learn from fresh data and recalibrate risk scores accordingly [3].
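Recalibration can take many forms. One simple option, shown here purely as an illustration, is an exponentially weighted update that blends the existing score with a score derived from new evidence. The function name and the `learning_rate` parameter are assumptions, and a production model would more likely be retrained than adjusted with a single formula.

```python
def recalibrate(current_score: float, new_evidence_score: float,
                learning_rate: float = 0.3) -> float:
    """Blend the current risk score with a score implied by fresh evidence.

    learning_rate = 0 ignores new evidence; learning_rate = 1 replaces the
    old score entirely. Values in between move the score gradually.
    """
    if not 0.0 <= learning_rate <= 1.0:
        raise ValueError("learning_rate must be between 0 and 1")
    return (1 - learning_rate) * current_score + learning_rate * new_evidence_score


# A device scored 10.0 until a near miss suggested a score of 20.0.
updated = recalibrate(10.0, 20.0, learning_rate=0.5)
```

With a learning rate of 0.5 the score moves halfway, from 10.0 to 15.0. A higher rate makes the model react faster to incidents at the cost of more volatile scores.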

Stay informed about industry advancements, such as the Health Sector Coordinating Council's upcoming 2026 guidance on AI cybersecurity risks. This guidance will include playbooks for cyber operations and secure-by-design principles tailored to medical devices [5]. With ransomware attacks nearly doubling since 2022 and the average cost of a healthcare breach reaching $10.9 million in 2023, frequent updates are crucial to counter increasingly sophisticated threats [7].

Conclusion: Real-Time Risk Management in Healthcare

Key Takeaways for Healthcare Organizations

AI-powered dynamic risk modeling is changing the game for clinical risk management. Instead of reacting to issues after they arise, healthcare organizations can now proactively address risks, offering a more effective way to safeguard patient safety and ensure compliance [1]. This approach not only speeds up how risks are monitored and assessed but also sharpens the ability to respond. On top of that, AI excels at spotting cybersecurity threats by analyzing patterns and detecting irregularities [2].

The numbers speak for themselves: AI could boost patient outcomes by 30–40% while slashing treatment costs by as much as 50% [4]. With the global AI healthcare market valued at $19.27 billion in 2023 and projected to grow at an annual rate of 38.5% through 2030, organizations slow to adopt these technologies risk being left behind [3]. However, innovation in healthcare must always prioritize patient safety, data privacy, and operational stability [5].

Platforms like Censinet RiskOps™ are leading the way, offering tools that consolidate risk management while keeping human oversight intact. By using AI to streamline third-party risk assessments, these tools ensure scalability without compromising safety. The inclusion of human judgment - through customizable rules and review processes - ensures that automation enhances, rather than replaces, decision-making. This balance allows risk teams to expand their capabilities without losing sight of critical safety measures.

Looking ahead, the potential for AI to further enhance cybersecurity and patient care is immense.

The Future of AI in Healthcare Cybersecurity

AI and predictive analytics are paving the way for a proactive approach to healthcare delivery [3]. New developments are set to transform cybersecurity through predictive tools, tailored risk assessments, and automated compliance processes [2]. The Health Sector Coordinating Council is preparing 2026 guidelines to address AI-related cybersecurity risks, focusing on clear policies and best practices in a phased rollout [5].

Dynamic AI algorithms are becoming smarter and faster, improving the accuracy of risk predictions [3]. These advancements will continue to push healthcare toward a more patient-focused model, featuring personalized treatments, remote monitoring, and ethical data management that aligns with Industry 4.0 principles [1]. Organizations that establish strong AI governance today - complete with clear policies, vendor controls, and ongoing monitoring - will be better equipped to harness these advancements while staying compliant and protecting patient safety.

FAQs

How does AI enhance real-time risk detection in healthcare cybersecurity?

AI plays a crucial role in improving real-time risk detection for healthcare cybersecurity. By constantly monitoring network activity and system behavior, it identifies anomalies - like unauthorized access attempts or irregular data transfers - as they occur. This enables swift threat detection and response, helping to limit potential harm.

Through the use of predictive analytics, AI can also anticipate potential vulnerabilities and adjust defenses accordingly. This proactive strategy not only shortens response times but also helps prevent breaches while ensuring compliance with strict healthcare regulations.

What types of data are essential for AI-driven risk modeling in healthcare cybersecurity?

AI-powered risk modeling in healthcare cybersecurity relies on several key data sources to operate efficiently. These include network traffic data, vulnerability assessments, third-party vendor evaluations, medical device activity logs, user behavior analytics, and real-time threat intelligence.

By processing and analyzing this information, AI systems can spot potential vulnerabilities, detect unusual patterns, and deliver practical insights. This helps healthcare organizations address emerging cyber threats proactively while ensuring they meet regulatory compliance requirements.

How can healthcare organizations maintain human oversight in AI-driven risk management systems?

Healthcare organizations can keep human oversight front and center in AI-driven risk management by establishing clear governance frameworks and involving a mix of experts - like IT specialists, clinical staff, and compliance officers. This collaborative approach ensures decisions are shaped by varied, well-rounded perspectives.

Another key element is leveraging explainable AI (XAI), which brings much-needed clarity to how AI systems reach their conclusions. To maintain control and accountability, organizations should also prioritize regular audits, continuous monitoring, and adversarial testing. These practices help ensure AI processes align with regulatory requirements and uphold high standards of compliance.