Emerging AI Privacy Regulations in Healthcare

Post Summary

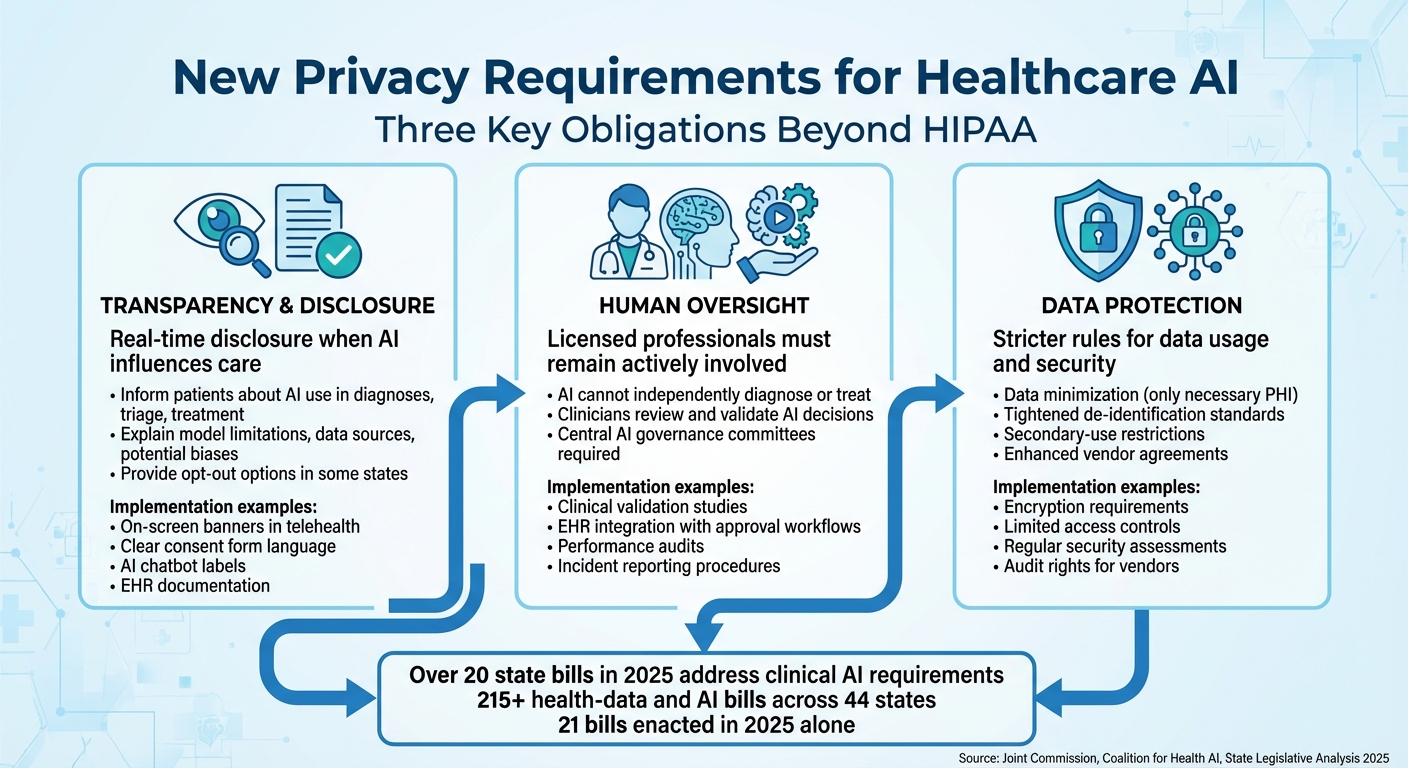

AI is transforming healthcare by improving diagnostics, predicting risks, and streamlining operations. However, its reliance on vast datasets raises privacy concerns, especially with sensitive patient information. New regulations are emerging to address these challenges, extending beyond HIPAA to include transparency, oversight, and stricter data protections. Here's what you need to know:

- Transparency: States like Texas and California are adopting rules that require disclosure when AI influences care, giving patients more awareness and control.

- Human Oversight: New state laws and pending bills require licensed professionals to remain involved in AI-supported clinical decisions.

- Data Protections: Stricter rules limit data use, enforce encryption, and refine "de-identification" standards to prevent re-identification risks.

Navigating these regulations is complex, especially for multi-state or international healthcare systems. Compliance requires strong governance, updated consent practices, and tools like cyber risk platforms to track and manage AI risks effectively.

These changes mark a shift toward stricter controls and accountability for AI in healthcare.

Current AI Privacy Regulations in Healthcare

Healthcare AI operates within a maze of federal and state regulations. At the federal level, HIPAA and the HITECH Act serve as the primary frameworks for protecting privacy when AI systems handle protected health information (PHI) for treatment, payment, or operational purposes [2][6]. These laws require covered entities and their business associates (including AI vendors) to sign Business Associate Agreements (BAAs), use encryption, control access, and report breaches [2][6]. Meanwhile, the FDA oversees certain AI-enabled Software as a Medical Device (SaMD) to ensure safety and effectiveness, while the FTC Act addresses deceptive or unfair practices by health apps and AI tools that fall outside HIPAA’s jurisdiction [4].

Federal Regulations and Coverage Gaps

While HIPAA and HITECH provide a foundation, they leave notable gaps when it comes to healthcare AI. These laws apply only to covered entities and their business associates, meaning consumer-facing health apps, wearables, and AI platforms that collect data directly from users often operate outside HIPAA’s scope [1][6]. On top of that, HIPAA doesn’t address challenges specific to AI, such as algorithmic bias, explainability, or the ongoing updates to AI models that could change how PHI is processed over time [6][7].

Another concern lies in how HIPAA defines "de-identified" data. AI techniques can potentially re-identify individuals from large datasets, raising questions about whether training data or model weights might unintentionally expose patient information [1][7]. Additionally, HIPAA’s partial preemption allows stricter state laws to fill these gaps, creating a complex compliance landscape for multi-state health systems and vendors [1][6].
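To make the re-identification concern concrete, one widely used screening idea from the privacy literature is k-anonymity. It groups records by their quasi-identifiers (fields such as a truncated ZIP code, birth year, and sex that are not direct identifiers but can be linked to outside data) and checks the size of the smallest group. The sketch below is illustrative only. k-anonymity is not HIPAA's legal standard (HIPAA recognizes the Safe Harbor and Expert Determination methods), and the field names and sample rows are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence

Record = Dict[str, str]


def smallest_group_size(records: List[Record], quasi_identifiers: Sequence[str]) -> int:
    """Return k, the size of the smallest group of records sharing identical
    values for every quasi-identifier (0 for an empty dataset)."""
    groups = Counter(tuple(r[field] for field in quasi_identifiers) for r in records)
    return min(groups.values(), default=0)


def is_k_anonymous(records: List[Record], quasi_identifiers: Sequence[str], k: int) -> bool:
    """True if every combination of quasi-identifier values appears at least k times."""
    return smallest_group_size(records, quasi_identifiers) >= k


# Hypothetical rows that have already had names and record numbers removed.
sample = [
    {"zip3": "021", "birth_year": "1980", "sex": "F", "dx": "E11"},
    {"zip3": "021", "birth_year": "1980", "sex": "F", "dx": "I10"},
    {"zip3": "946", "birth_year": "1955", "sex": "M", "dx": "C50"},
]
qi = ["zip3", "birth_year", "sex"]
print(smallest_group_size(sample, qi))  # 1: the third row is unique on its quasi-identifiers
```

A smallest group of 1 means at least one patient is unique on those fields and could potentially be matched against an outside dataset, even though no names or record numbers remain.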

State-Level AI Privacy Laws

To bridge these gaps, states are stepping in with targeted AI privacy laws. Many are enacting sector-specific rules that emphasize transparency, human oversight, and protections against bias or discrimination [1][3][5]. In 2025 alone, more than 20 state bills focused on regulating AI in clinical care, often requiring healthcare providers to disclose when AI is used in diagnoses, treatment recommendations, or patient communications [3][5].

Some states are taking it a step further. At least eight have passed or proposed measures requiring individuals to be informed when interacting with or affected by AI systems. This directly impacts tools like chatbot-based triage, mental health bots, and AI-driven intake processes [5]. For example, Texas House Bill 149, effective January 1, 2026, mandates explicit patient disclosure when AI is involved in healthcare services, prompting updates to consent forms, signage, and digital communications [2].

Other states, like Colorado, have introduced comprehensive frameworks. The Colorado Artificial Intelligence Act (2024) requires risk management, impact assessments, and transparency for high-risk AI systems in healthcare and insurance underwriting. Meanwhile, California’s CCPA/CPRA regulations and proposed automated decision-making rules demand disclosures and offer opt-out rights for profiling in health-related services [5].

New Privacy Requirements for Healthcare AI

Three Key AI Privacy Requirements for Healthcare Organizations Beyond HIPAA

The latest privacy rules for healthcare AI now include three key obligations that go beyond the protections offered by HIPAA: clear disclosure of AI usage, mandatory human oversight, and more stringent data protection protocols. These changes address concerns that existing federal regulations don’t fully tackle issues like algorithmic bias, explainability, or the unique risks that come with using AI to process patient data.

Transparency and Patient Disclosure Requirements

New state laws are making real-time disclosure a must when AI is involved in patient interactions, clinical decisions, or care coordination [1][5]. Several states now require healthcare providers to inform patients when AI significantly influences diagnoses, triage, or treatment plans. In some cases, patients are even given the option to opt out of AI-assisted care [1][5].

But transparency isn’t just about saying, "We used AI." Guidance such as the Joint Commission and Coalition for Health AI’s "Responsible Use of AI in Healthcare" calls for more detailed explanations. For instance, providers should share information about model limitations, data sources, potential biases, and known failure points - all in language that patients can easily understand [2]. This shifts the focus from HIPAA’s data-sharing rules to algorithmic transparency, which is increasingly treated as both an ethical and a legal expectation for AI use [10][2][7].

To meet these standards, organizations are implementing consistent disclosure practices across both digital and physical settings. Examples include on-screen banners in telehealth platforms, clear language in consent forms, labels identifying AI chatbots (e.g., "AI assistant"), and documentation of clinical review processes in electronic health records (EHRs) [1][2][5][6].

This emphasis on transparency naturally leads to the next critical requirement: human oversight.

Human Oversight and Accountability Requirements

More than 20 state bills addressing clinical AI in 2025 emphasize that AI cannot independently diagnose, make treatment decisions, or replace licensed professionals, especially in areas like mental health [3][5]. These measures keep licensed providers actively involved, reviewing and validating any AI-supported decisions [3][5][6]. The Joint Commission and Coalition for Health AI also recommend that organizations clearly define roles for evaluating, approving, monitoring, and retiring AI tools [2].

To put this into practice, many healthcare providers are forming central AI governance committees. These groups, which include clinicians, data scientists, compliance officers, and patient safety experts, oversee the entire lifecycle of AI tools - from procurement and validation to deployment and eventual retirement [2][6]. Specific workflows often include clinical validation studies, clear documentation of intended use, integration of AI outputs into EHRs (where clinicians must approve or override recommendations), and records of how AI influences key decisions [2][3].
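The approve-or-override step, and the record of how AI influenced a decision, can be modeled as an audit record written whenever a clinician acts on an AI output. The sketch below is a hypothetical design, not an EHR vendor's API: the class and field names are assumptions, and requiring a written rationale for every modification or override is an illustrative policy choice rather than a statutory rule.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class ReviewAction(Enum):
    ACCEPTED = "accepted"
    MODIFIED = "modified"
    OVERRIDDEN = "overridden"


@dataclass(frozen=True)
class AIRecommendationReview:
    """One clinician decision about one AI output, kept for audit."""
    encounter_id: str
    model_id: str
    model_version: str
    recommendation: str
    reviewer_id: str
    action: ReviewAction
    rationale: str
    reviewed_at: datetime


def record_review(encounter_id: str, model_id: str, model_version: str,
                  recommendation: str, reviewer_id: str,
                  action: ReviewAction, rationale: str = "") -> AIRecommendationReview:
    """Build the audit record, enforcing that a named clinician reviewed the
    output and explained any departure from it (hypothetical policy)."""
    if not reviewer_id:
        raise ValueError("every AI recommendation needs a named clinician reviewer")
    if action is not ReviewAction.ACCEPTED and not rationale.strip():
        raise ValueError("modifications and overrides need a documented rationale")
    return AIRecommendationReview(encounter_id, model_id, model_version,
                                  recommendation, reviewer_id, action,
                                  rationale, datetime.now(timezone.utc))


review = record_review("enc-001", "sepsis-risk", "2.3.0",
                       "Escalate to rapid response team", "clin-042",
                       ReviewAction.OVERRIDDEN,
                       rationale="Clinician assessment: patient stable on reassessment")
print(review.action.value, review.model_version)
```

Because each record carries the model version, auditors can later tie every clinical decision to the exact model that produced the recommendation.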

Post-deployment, oversight involves regular performance audits, monitoring for bias or drift, incident reporting for adverse events, and clear procedures for deactivating AI tools when necessary [2][3][6]. For large, multi-entity health systems, platforms like Censinet RiskOps™ help manage governance by centralizing risk assessments, tracking vendor compliance, and benchmarking controls across various applications and locations. This approach not only ensures regulatory compliance but also demonstrates due diligence to accrediting bodies [2][6].
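Monitoring for drift can start by comparing the distribution of a model's risk scores at validation time with the distribution seen in production. The sketch below uses the Population Stability Index (PSI), one common drift statistic. The choice of PSI, the five-bin histograms, the sample counts, and the 0.2 alert threshold are assumptions (practitioners commonly cite cutoffs between roughly 0.1 and 0.25). The escalation label stands in for whatever deactivation procedure a governance committee defines.

```python
import math
from typing import Sequence


def population_stability_index(baseline_counts: Sequence[int],
                               current_counts: Sequence[int],
                               eps: float = 1e-6) -> float:
    """PSI = sum over bins of (cur - base) * ln(cur / base), where base and cur
    are each bin's share of its own histogram total."""
    if len(baseline_counts) != len(current_counts) or not baseline_counts:
        raise ValueError("histograms need the same, non-zero number of bins")
    base_total, cur_total = sum(baseline_counts), sum(current_counts)
    if base_total == 0 or cur_total == 0:
        raise ValueError("histograms must contain at least one observation")
    psi = 0.0
    for b, c in zip(baseline_counts, current_counts):
        base_share = max(b / base_total, eps)  # floor avoids log(0) for empty bins
        cur_share = max(c / cur_total, eps)
        psi += (cur_share - base_share) * math.log(cur_share / base_share)
    return psi


def drift_action(psi: float, alert_at: float = 0.2) -> str:
    """Map the statistic to a governance step (hypothetical policy and threshold)."""
    return "escalate_to_governance_committee" if psi >= alert_at else "continue_monitoring"


# Hypothetical counts of risk scores in five bins: validation set vs. last month.
baseline = [120, 240, 300, 220, 120]
current = [60, 150, 280, 300, 210]
score = population_stability_index(baseline, current)
print(round(score, 3), drift_action(score))
```

A PSI near zero means production scores resemble the validation population. Larger values signal that the patient mix or the data feeding the model has shifted, and that the tool may need revalidation before continued use.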

While human oversight is crucial, protecting patient data remains just as important.

Data Usage and Protection Standards

New regulations are introducing stricter rules around how patient data is used and protected in AI applications. These rules emphasize data minimization: only the minimum necessary protected health information (PHI) should be used for AI training, tuning, and operations. Even where HIPAA would permit broader use, these rules prioritize limiting data access to what’s strictly required [10][2][7].
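In a data pipeline, "minimum necessary" often becomes an explicit allow-list for each approved AI use case, so every training or inference job receives only the fields that use case was cleared for. The use-case names and field lists below are hypothetical placeholders for whatever a governance committee approves.

```python
from typing import Dict, FrozenSet, Iterable, List, Mapping

# Hypothetical field allow-lists approved by a governance committee, per AI use case.
APPROVED_FIELDS: Dict[str, FrozenSet[str]] = {
    "readmission_model_training": frozenset(
        {"age_band", "dx_codes", "length_of_stay", "prior_admits"}),
    "no_show_prediction": frozenset({"age_band", "visit_type", "lead_time_days"}),
}


def minimize(records: Iterable[Mapping[str, object]], use_case: str) -> List[Dict[str, object]]:
    """Return copies of the records keeping only the fields approved for this
    use case. Unregistered use cases are refused rather than given everything."""
    allowed = APPROVED_FIELDS.get(use_case)
    if allowed is None:
        raise PermissionError(f"no approved data scope for use case {use_case!r}")
    return [{k: v for k, v in r.items() if k in allowed} for r in records]


row = {"name": "Jane Doe", "mrn": "000123", "age_band": "60-69",
       "dx_codes": ["I50.9"], "length_of_stay": 4, "prior_admits": 2}
print(minimize([row], "readmission_model_training"))
```

The function fails closed: an unregistered use case raises an error instead of receiving the full record, which keeps new AI projects from quietly inheriting broad PHI access.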

States are also tightening definitions of "de-identified data" and imposing secondary-use restrictions. For example, care-collected data can’t be repurposed for unrelated AI development or commercial profiling without explicit consent. Additionally, some laws restrict data sharing across entities and jurisdictions [1][5][9]. The Joint Commission and Coalition for Health AI recommend ensuring that AI use doesn’t weaken existing security measures. This means PHI used for AI must remain encrypted, access should be limited to authorized personnel, and organizations must conduct regular security assessments and have AI-specific incident response plans in place [2].

Healthcare providers are also strengthening business associate and data use agreements with AI vendors. These contracts now often include clauses that limit data sharing, prohibit re-identification, ban unapproved secondary uses, and require vendors to align with the organization’s privacy standards. Providers also retain the right to audit vendors for compliance [2].

The rapid growth of state-level regulations - over 215 health-data and AI-related bills across 44 states, with 21 enacted in 2025 alone - has created a complex patchwork of requirements [1][8][9]. To navigate this, multi-state health systems are implementing "highest common denominator" controls. This means adopting the strictest consent and data-use rules systemwide, maintaining an up-to-date inventory of state laws, and using centralized risk platforms to ensure that AI deployments, vendor contracts, and data flows comply with the most stringent standards [1][2][6].
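The "highest common denominator" approach amounts to taking, for each control, the strictest value any applicable jurisdiction requires and applying it systemwide. The sketch below shows only that merge logic; the control names and the two state profiles are invented placeholders, not summaries of any actual statute.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class AIControls:
    disclose_ai_use: bool            # must patients be told when AI is involved?
    offer_opt_out: bool              # must patients be able to decline AI-assisted care?
    clinician_review_required: bool  # must a licensed clinician review AI outputs?
    max_retention_days: int          # shorter retention is stricter


def strictest(requirements: Iterable[AIControls]) -> AIControls:
    """Merge per-jurisdiction requirements into one systemwide baseline: an
    obligation required anywhere applies everywhere, and the shortest
    retention limit wins."""
    reqs = list(requirements)
    if not reqs:
        raise ValueError("need at least one jurisdiction's requirements")
    return AIControls(
        disclose_ai_use=any(r.disclose_ai_use for r in reqs),
        offer_opt_out=any(r.offer_opt_out for r in reqs),
        clinician_review_required=any(r.clinician_review_required for r in reqs),
        max_retention_days=min(r.max_retention_days for r in reqs),
    )


# Placeholder profiles for two hypothetical states; not real statutory terms.
state_a = AIControls(disclose_ai_use=True, offer_opt_out=False,
                     clinician_review_required=True, max_retention_days=3650)
state_b = AIControls(disclose_ai_use=True, offer_opt_out=True,
                     clinician_review_required=False, max_retention_days=2555)
print(strictest([state_a, state_b]))
```

Keeping each jurisdiction's profile as data, rather than hard-coding a single policy, makes it easier to recompute the baseline as the inventory of state laws changes.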

State and International AI Privacy Rules in Practice

Specific state and international laws are already reshaping how healthcare organizations use AI. From California's disclosure requirements to Texas' oversight rules and Europe's data-protection frameworks, these regulations impose distinct obligations. U.S. providers face added complexity when serving patients across state lines or internationally. Here's how these rules are being applied in real-world settings.

California's AI Disclosure Requirements

California has taken a proactive stance on AI transparency through its privacy framework, including the California Privacy Rights Act (CPRA) and proposed bills like AB 3027 and AB 331. Together, these measures call for clear disclosures whenever patients interact with AI systems, such as chatbots, triage tools, or automated clinical decision support. The California Privacy Protection Agency (CPPA) is also drafting Automated Decision-Making Technology (ADMT) regulations, which will require pre-use notices, access rights, and opt-out options for tools that influence healthcare access or pricing.

For example, symptom checkers and mental health chatbots must display clear AI disclaimers during the first interaction. Clinical decision-support tools need to label AI-generated risk scores or recommendations within electronic health record (EHR) systems, ensuring clinicians can identify the source and limitations of the data. To comply, healthcare providers should:

- Create an AI disclosure inventory to map all AI interactions with California residents.

- Standardize disclosure language across digital and physical platforms.

- Update business associate agreements with vendors to align with California's AI rules.

These steps are crucial for integrating California's mandates into broader compliance and risk management strategies.
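An AI disclosure inventory can begin as a structured list of AI touchpoints that can be queried for gaps. The sketch below is hypothetical: the field names, channels, and sample entries are illustrative, and whether a particular touchpoint legally requires a disclosure is a question for counsel rather than code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class AITouchpoint:
    name: str
    channel: str                        # e.g. "chatbot", "ehr", "telehealth", "signage"
    reaches_california_residents: bool
    disclosure_text: Optional[str]      # None means no disclosure is shown yet


def disclosure_gaps(inventory: List[AITouchpoint]) -> List[str]:
    """Names of California-facing AI touchpoints with no disclosure configured."""
    return [t.name for t in inventory
            if t.reaches_california_residents and not (t.disclosure_text or "").strip()]


inventory = [
    AITouchpoint("symptom checker", "chatbot", True,
                 "You are chatting with an AI assistant, not a clinician."),
    AITouchpoint("sepsis risk score", "ehr", True, None),
    AITouchpoint("billing code suggestions", "back_office", False, None),
]
print(disclosure_gaps(inventory))  # ['sepsis risk score']
```

Because the same records can hold the approved wording for each channel, the inventory also supports the goal of standardizing disclosure language across digital and physical platforms.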

Texas' Oversight and Record-Keeping Mandates

Texas has introduced the Responsible Artificial Intelligence Governance Act, effective January 1, 2026. This law requires patients to be informed when AI supports healthcare services and prohibits AI tools from independently diagnosing or making treatment decisions without clinician involvement.

To comply, Texas healthcare organizations must focus on oversight and documentation. National guidance from the Joint Commission and the Coalition for Health AI is shaping how these requirements are implemented. In practice, Texas providers should:

- Log versions of AI models in use.

- Maintain documentation of clinical validation studies and bias assessments.

- Record evidence of clinician review processes, such as how AI recommendations are used or overridden in EHRs.

- Keep an inventory of AI systems across clinical and administrative workflows.
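The record-keeping items above could be captured in a simple model inventory that logs each deployed version alongside its validation and bias documentation. This is a minimal sketch under stated assumptions: the class names, the document-reference format, and the rule that a version cannot go live without both documents are hypothetical design choices, not requirements quoted from the Texas statute.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass(frozen=True)
class ModelVersion:
    version: str
    deployed_on: date
    validation_study: str  # reference to the clinical validation report
    bias_assessment: str   # reference to the bias assessment report


@dataclass
class AISystem:
    name: str
    workflow: str  # "clinical" or "administrative"
    versions: List[ModelVersion] = field(default_factory=list)

    def deploy(self, v: ModelVersion) -> None:
        """Log a new live version; refuse it if documentation is missing."""
        if not v.validation_study or not v.bias_assessment:
            raise ValueError(f"{self.name} {v.version}: missing validation or bias documentation")
        if self.versions and v.deployed_on < self.versions[-1].deployed_on:
            raise ValueError("versions must be logged in deployment order")
        self.versions.append(v)

    def version_in_use(self, on: date) -> str:
        """Return the version that was live on a given date."""
        live = [v for v in self.versions if v.deployed_on <= on]
        if not live:
            raise LookupError(f"{self.name} was not deployed on {on}")
        return live[-1].version


sepsis = AISystem("sepsis early warning", "clinical")
sepsis.deploy(ModelVersion("1.0", date(2025, 3, 1), "VAL-2025-01", "BIAS-2025-01"))
sepsis.deploy(ModelVersion("1.1", date(2025, 9, 15), "VAL-2025-07", "BIAS-2025-06"))
print(sepsis.version_in_use(date(2025, 6, 30)))  # 1.0
```

Answering "which model version was live when this patient was seen?" is the kind of question that can arise when regulators or accrediting bodies ask about AI-related outcomes.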

These records not only meet state-law expectations but also prepare organizations for inquiries from regulators or accrediting bodies about AI-related outcomes. As state regulations continue to evolve, international mandates add another layer of complexity.

GDPR and EU AI Act Impact on U.S. Healthcare

U.S. organizations managing EU patient data - whether for telehealth, second opinions, or research - must comply with the General Data Protection Regulation (GDPR) and the EU AI Act. GDPR treats health data as highly sensitive, requiring strict processing conditions. AI-driven applications often qualify as high-risk profiling or automated decision-making, which demands additional safeguards. Key requirements include:

- Establishing a lawful basis for processing, often through explicit consent.

- Applying data minimization and purpose limitation principles.

- Implementing robust security measures.

- Conducting Data Protection Impact Assessments (DPIAs) for high-risk AI use cases.

The EU AI Act classifies many healthcare AI systems, such as diagnostic tools and clinical decision-support systems, as high-risk. This triggers stringent requirements around risk management, data governance, transparency, human oversight, and ongoing monitoring. U.S. health-tech vendors and providers working in the EU must:

- Ensure high-quality training data.

- Document model design and intended use.

- Implement human oversight mechanisms.

- Maintain logs to monitor performance and detect drift.

For hospitals involved in EU research networks or using EU-regulated AI tools, procurement processes must verify vendor compliance with the AI Act. This dual-compliance challenge requires aligning with both U.S. and EU regulations.

Navigating Dual Compliance

The combination of GDPR, the EU AI Act, and U.S. state laws drives healthcare organizations toward privacy-by-design and governance-by-design approaches. Many providers serving both EU and U.S. patients adopt GDPR- and AI Act-aligned controls as a global baseline, layering on state-specific requirements like those in California and Texas. Platforms such as Censinet RiskOps™ can streamline this process by centralizing AI vendor assessments, tracking jurisdictional requirements, and documenting safeguards for audits and regulators. These tools help organizations stay ahead in an increasingly complex regulatory landscape.


Impact on Healthcare Data Governance and Risk Management

The surge in AI privacy regulations has pushed healthcare organizations to rethink how they manage data and assess risks. What used to be occasional reviews now demands a formal, organization-wide approach to governing AI throughout the entire data lifecycle. Many organizations are forming cross-functional committees to oversee the validation of AI tools. These committees focus on setting clear standards for data access, de-identification, secondary use, and vendor oversight. This reflects the growing emphasis on transparency and accountability required by both state and federal regulations.

Compliance Challenges from Fragmented Regulations

For healthcare providers operating across multiple states - or even internationally - the patchwork of regulations creates significant challenges. Definitions of terms like "health information", "de-identified data", and "automated decision making" vary widely between jurisdictions. This means that an AI tool compliant in one state might require additional consent, disclosures, or opt-outs in another. Some states place restrictions on AI's role in diagnoses or treatments, while others focus on broader privacy rules that still impact health-related data.

At the federal level, frameworks like HIPAA, HITECH, and the ONC's HTI-1 rule establish basic expectations for certified health IT and clinical decision support. However, they leave gaps when it comes to non-HIPAA data and consumer health apps used in AI workflows. Internationally, regulations like GDPR and the EU AI Act impose even stricter rules on data minimization, purpose limitation, and algorithmic transparency for U.S.-based providers handling EU patient data.

This regulatory fragmentation forces compliance teams to develop unified policies that meet the strictest standards without duplicating efforts. It also requires more frequent, detailed risk assessments tailored to AI. Privacy and security teams must classify each AI use case based on the applicable regulations - whether state laws, GDPR, or the EU AI Act - and evaluate risks tied to data flows, training datasets, and model behavior. This often involves revising agreements with business associates and data vendors to address issues like secondary data use, re-identification, and audit rights. Additionally, organizations must maintain detailed records showing human oversight, reasons for deploying AI, and how patient communications and consent were handled in specific jurisdictions. All of this adds to the workload for compliance, legal, and health information management teams, while emphasizing the need for centralized risk tracking systems for AI tools.
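Classifying each AI use case against the regulations that may apply can start as a triage function that routes the use case to the right reviewers. The sketch below encodes only the coarse distinctions described in this article: HIPAA versus non-HIPAA data, EU patient data, EU AI Act high-risk status, and the states served. The flags and labels are hypothetical, and the output is a list of regimes to assess, not a legal determination.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class AIUseCase:
    name: str
    handles_phi_for_covered_entity: bool  # covered entity or business associate context
    processes_eu_patient_data: bool
    eu_ai_act_high_risk: bool             # e.g. diagnostic or decision-support tools
    us_states: FrozenSet[str]             # states whose residents the tool serves


def frameworks_to_review(uc: AIUseCase) -> List[str]:
    """First-pass triage of which regimes counsel should assess.
    A routing aid built on simplified flags, not a legal determination."""
    found: List[str] = []
    # HIPAA covers PHI handled by covered entities and business associates;
    # the FTC Act reaches health tools that fall outside HIPAA.
    found.append("HIPAA/HITECH" if uc.handles_phi_for_covered_entity
                 else "FTC Act (non-HIPAA health data)")
    if uc.processes_eu_patient_data:
        found.append("GDPR")
    if uc.eu_ai_act_high_risk:
        found.append("EU AI Act (high-risk system)")
    found.extend(f"State law review: {s}" for s in sorted(uc.us_states))
    return found


triage_bot = AIUseCase("telehealth triage chatbot", True, True, True, frozenset({"TX", "CA"}))
for item in frameworks_to_review(triage_bot):
    print(item)
```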

Best Practices for AI Governance

To navigate these challenges, industry leaders recommend several practical strategies for managing AI governance effectively. These include:

- Centralized oversight: Establish a dedicated committee to review AI tools throughout their lifecycle, from sourcing to retirement.

- Comprehensive risk assessments: Standardize evaluations of AI tools, focusing on privacy, security, clinical safety, bias, and explainability. This includes assessing data provenance and de-identification methods used during training and inference.

- Human oversight: Embed requirements for human involvement in workflows to ensure AI doesn't independently diagnose, treat, or deny services. This aligns with many state laws and professional guidelines advocating for "clinician-in-the-loop" models.

- Enhanced data controls: Strengthen governance around protected health information (PHI) used in AI, employing role-based access, encryption, and clear data retention and deletion policies.

- Ongoing monitoring: Continuously track AI performance for issues like model drift, security incidents, or performance degradation, with predefined triggers for rollback or suspension.

- Transparent communication: Provide clear, plain-language disclosures to patients and staff about AI usage, along with updated training on AI-related privacy and security responsibilities.

A practical way to integrate these practices is by treating AI risk assessments as an extension of existing HIPAA security risk analyses and enterprise risk management (ERM) processes. For example, organizations can update risk registers to include AI as a specific asset category and use standardized templates to capture details like data types processed (PHI, de-identified, or consumer data), jurisdictions involved, the model's purpose (clinical or administrative), vendor roles, and potential impacts on patient safety and rights. These templates can then be folded into annual HIPAA risk analyses and vendor reviews, ensuring that AI-specific concerns - such as algorithmic decision logs, bias testing results, and validation studies - are evaluated alongside traditional safeguards like encryption and access controls. High-risk AI applications, such as those influencing diagnoses or resource allocation, can undergo additional scrutiny, much like high-risk medical devices or third-party vendors in current ERM frameworks.
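A risk-register entry that treats AI as its own asset category might capture the template fields described above and assign a review tier. In this sketch the field names are hypothetical. The tiering rule is an illustrative assumption modeled on the escalation the paragraph describes: anything influencing diagnosis or resource allocation gets enhanced review, and other PHI-handling tools get standard review.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class DataType(Enum):
    PHI = "phi"
    DEIDENTIFIED = "de-identified"
    CONSUMER = "consumer"


@dataclass(frozen=True)
class AIRiskRegisterEntry:
    asset_name: str
    data_types: FrozenSet[DataType]
    jurisdictions: FrozenSet[str]
    purpose: str          # "clinical" or "administrative"
    vendor_role: str      # e.g. "business associate" or "in-house"
    influences_diagnosis: bool
    influences_resource_allocation: bool
    asset_category: str = "AI system"  # new category alongside existing asset types

    def review_tier(self) -> str:
        """Illustrative escalation rule: decision-influencing tools get enhanced
        review, other PHI-handling tools get standard review."""
        if self.influences_diagnosis or self.influences_resource_allocation:
            return "enhanced"
        if DataType.PHI in self.data_types:
            return "standard"
        return "basic"


imaging = AIRiskRegisterEntry("imaging triage model", frozenset({DataType.PHI}),
                              frozenset({"CA", "TX"}), "clinical",
                              "business associate", True, False)
print(imaging.asset_category, imaging.review_tier())
```

Entries like this can be exported into the annual HIPAA risk analysis alongside traditional assets, so AI tools are reviewed on the same cycle as encryption and access controls.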

Accountability should also be clearly defined. Organizations need a governing body to approve AI tools and set policies. At the executive level, responsibilities are often shared among the CIO/CTO (technology integration), CISO (security), chief privacy officer (compliance), and clinical leadership (safety and clinical appropriateness), with board-level oversight for high-risk initiatives. Operationally, department or service-line leaders should serve as "AI owners", responsible for local implementation, staff training, and compliance with documentation and disclosure requirements. Compliance and legal teams should stay on top of regulatory developments, update policies and contracts, and coordinate responses to inquiries. Additionally, monitoring AI performance and risks - such as security incidents or model drift - should be tied to existing quality improvement and incident response processes to ensure systematic issue resolution.

How Cyber Risk Management Platforms Support AI Compliance

Managing AI-related privacy and security risks manually - using spreadsheets or basic questionnaires - is no longer sufficient in today’s complex healthcare environment. Specialized cyber risk platforms can make this process far more efficient by centralizing AI vendor assessments, tracking jurisdictional requirements, and documenting safeguards for audits and regulators.

For example, Censinet RiskOps™ provides a cloud-based platform designed specifically for healthcare risk management. Its AI-powered tools speed up third-party risk assessments by automating security questionnaires, summarizing vendor documentation, and capturing integration details and fourth-party risks. The platform generates risk summary reports based on all relevant assessment data, enabling healthcare organizations to address risks more efficiently while maintaining human oversight through configurable review processes.

Censinet AI also enhances collaboration by streamlining workflows within Governance, Risk, and Compliance (GRC) teams. Acting like "air traffic control" for AI governance, the platform routes key findings and tasks to the appropriate stakeholders, including members of the AI governance committee. With real-time data displayed in an intuitive risk dashboard, Censinet RiskOps creates a centralized hub for managing AI-related policies, risks, and tasks. This unified approach ensures continuous oversight and accountability across the organization.

Early adopters report measurable efficiency gains. At Tower Health, for instance, implementing Censinet RiskOps freed three full-time employees to return to their primary roles while allowing the organization to complete more risk assessments with just two employees. Matt Christensen, Senior Director of GRC at Intermountain Health, explains why healthcare-specific tooling matters:

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare."

Platforms like Censinet RiskOps help healthcare organizations stay ahead of diverse regulatory demands by integrating AI risk assessments into existing HIPAA and ERM workflows, enabling continuous monitoring, and providing the documentation needed to demonstrate compliance with state, federal, and international AI privacy laws.

Conclusion

Key Takeaways

AI-specific privacy and transparency requirements are now expanding well beyond HIPAA, as state, federal, and international regulations evolve. States are introducing rules that require disclosure, consent, and data-use limitations for AI tools used in both clinical and administrative settings. However, these rules vary widely in terms of definitions, disclosure triggers, and enforcement, creating a complex compliance landscape for healthcare providers and vendors operating across multiple states.

Transparency and patient notification have become non-negotiable. Healthcare organizations must inform patients when AI is involved in clinical decisions, mental health chatbots, or patient communications. In some areas, patients even have the option to opt out. Laws and guidelines are also formalizing human oversight, requiring clinicians to stay actively involved and organizations to establish clear processes for approving, monitoring, and auditing AI tools throughout their lifecycle.

Strong data governance and cybersecurity measures are now critical. Many healthcare organizations are turning to structured AI governance frameworks and specialized platforms like Censinet RiskOps™ to handle risk assessments, documentation, and monitoring across various applications, from clinical tools to medical devices and vendor supply chains.

These developments signal that even stricter AI privacy standards are on the horizon.

What's Next for AI Privacy Regulation in Healthcare

Looking ahead, regulations are expected to tighten further. Within the next few years, broader AI disclosure requirements are likely, mandating that patients be informed whenever AI influences diagnoses, treatment recommendations, intake processes, or communications - even in cases where its use might seem obvious. Stricter rules around automated decision-making will also emerge, granting patients more control over high-impact decisions related to coverage, benefits, or triage. These rules will likely include clear notices, options for appeals, and mechanisms for human review.

States are also expected to impose stricter limits on the use of AI in clinical care, with some potentially banning or heavily restricting AI as the sole basis for diagnoses or treatment decisions. Standards for data minimization and de-identification will become more rigorous, with greater scrutiny on what qualifies as de-identified health data for AI training purposes and increased attention to re-identification risks. Mandatory risk assessments and thorough documentation will soon become the norm.

Routine components of healthcare AI governance will likely include algorithmic audits, continuous performance monitoring, and defined roles for human oversight. This will necessitate integrated AI inventories, detailed data mapping, enhanced vendor due diligence, stricter access controls, and formal processes for responding to AI-related incidents.

As the regulatory landscape continues to evolve, healthcare organizations will need to adopt dynamic compliance strategies. Risk management platforms will play a crucial role, helping organizations centralize vendor assessments, stay on top of jurisdiction-specific requirements, and maintain the documentation needed to comply with state, federal, and international AI privacy laws.

FAQs

What new privacy regulations are shaping the use of AI in healthcare beyond HIPAA?

As privacy regulations evolve, healthcare is seeing tighter controls on how AI and machine learning handle sensitive data. In addition to HIPAA, new rules are setting higher standards for data security, requiring clearer transparency in AI-related data use, and emphasizing stronger patient consent protocols. These steps are designed to safeguard patient information in an era where AI technologies are becoming central to healthcare operations.

At the same time, federal oversight is ramping up, ensuring these regulations are followed. This highlights the critical need to balance technological advancements with the protection of patient privacy. To keep up, healthcare providers and technology vendors must stay vigilant and adjust their practices to align with these changing requirements.

What are the key differences between state and federal AI privacy regulations in healthcare?

State-level AI privacy laws tend to focus on addressing local issues, often resulting in stricter or more customized requirements than federal regulations. These laws can differ widely in terms of scope, enforcement mechanisms, and compliance deadlines, leaving healthcare organizations to deal with a complex web of rules.

On the other hand, federal regulations offer a single, nationwide framework, promoting consistent standards for data privacy and security. Still, healthcare organizations need to stay alert and ensure compliance with both state and federal laws, as state regulations may introduce extra obligations that go beyond the federal baseline.

What challenges do healthcare organizations face in meeting AI privacy regulations?

Healthcare organizations face a tough road when it comes to navigating AI privacy regulations, largely because laws and standards differ so much across jurisdictions. Handling sensitive patient information, like Protected Health Information (PHI), means adhering to strict data governance rules that can shift depending on federal, state, or even local requirements.

On top of that, maintaining ongoing risk assessments and addressing potential issues can be a heavy lift. It often demands specialized tools to make the process more manageable. Tools like Censinet RiskOps™ offer a way to simplify this challenge, helping healthcare organizations tackle risks tied to patient data, medical devices, clinical applications, and supply chains. These solutions also make it easier to stay aligned with ever-changing privacy regulations.