Ethical AI by Design: Governance Frameworks That Actually Drive Behavior

Post Summary

AI in healthcare is evolving faster than the systems designed to regulate it. This creates risks like inaccurate recommendations, biased outcomes, and patient harm. A study of the Epic MyChart system revealed serious issues: 6% of AI-generated messages included errors, and 7% posed potential harm. Worse, most were sent without review by a healthcare provider, and patients were unaware that the messages were AI-generated.

To address these challenges, healthcare organizations must move beyond vague ethical principles and establish actionable frameworks. This includes creating governance committees, establishing clear policies, and integrating automated controls to ensure accountability, transparency, and safety throughout the AI lifecycle. Tools like Censinet RiskOps can centralize oversight, flag risks, and enforce compliance, ensuring AI systems meet ethical and regulatory standards.

The key takeaway? Ethical AI requires clear roles, enforceable rules, and continuous monitoring - not just promises.

Core Components of an Ethical AI Governance Framework

Creating an effective governance framework for AI in healthcare involves three key elements: well-defined organizational structures with clear roles, policies that align with U.S. regulations, and actionable controls that bring ethical principles to life. Without these components, governance risks becoming an abstract concept rather than a practical tool.

Governance Structures and Roles

A solid governance framework starts with a dedicated committee - often a subset of senior leadership, such as a Digital Health Committee - with defined responsibilities and regular reviews to ensure the necessary expertise is maintained [5].

This committee should include senior leaders, clinical and operational experts, IT professionals, data scientists, and external collaborators [5]. All participants must have a basic understanding of technical concepts, like Electronic Health Records (EHRs), while technical experts need to grasp clinical workflows to ensure alignment between technology and healthcare practices [5].

Many healthcare organizations are also forming cross-functional AI councils. These groups bring together clinical leaders, IT specialists, compliance officers, ethicists, and patient advocates to ensure diverse viewpoints in AI oversight [6]. Some organizations are even introducing roles like a "Fractional AI Ethicist" to guide boards or senior management on ethical AI practices, helping translate complex risks into practical governance actions [3]. Additionally, each AI use case should have an executive sponsor who ensures alignment with the organization’s strategy, oversees resource allocation, and maintains accountability throughout the AI model’s lifecycle [7].

The governance committee’s main role is oversight - ensuring AI initiatives are ethical and responsible - while the actual implementation is managed by individual departments. These departments address specific concerns such as clinical needs, feasibility, budgeting, interoperability, workflow impacts, and clinician satisfaction. Meanwhile, the governance committee focuses on broader organizational priorities like safety, efficacy, equity, security, privacy, regulatory compliance, and IT integration [5].

This structured approach sets the foundation for formal policies that codify ethical AI standards.

Policies and Standards for AI in U.S. Healthcare

Ethical AI governance in healthcare relies on policies that ensure AI systems are secure, compliant, and aligned with ethical standards [6]. These policies must cover several critical areas, including HIPAA-compliant data governance, transparency in AI decision-making, and rigorous validation standards to confirm model performance before deployment.

Organizations should also establish clear guidelines for retraining or retiring AI tools if their performance declines, integrating these processes into their quality assurance systems [4]. Another best practice is requiring a "Purpose and Request Form" for every AI project. This form documents the model’s purpose, intended use, and expected outcomes before development begins, ensuring accountability and that each project is justified in terms of patient care or operational improvements.
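One way to make the "Purpose and Request Form" enforceable is to treat it as a structured record that cannot move forward while required fields are blank. The sketch below is illustrative only: the class name, field names, and the inclusion of an executive sponsor field are assumptions, not a prescribed template.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class PurposeAndRequestForm:
    """Hypothetical intake record for a proposed AI project."""
    project_name: str
    purpose: str
    intended_use: str
    expected_outcomes: tuple  # one short string per expected outcome
    executive_sponsor: str    # assumed field; not mandated by the form itself
    submitted_on: date = field(default_factory=date.today)

    def missing_fields(self):
        """Return the names of required fields that were left blank."""
        required = ("project_name", "purpose", "intended_use",
                    "expected_outcomes", "executive_sponsor")
        missing = []
        for name in required:
            value = getattr(self, name)
            # Strings are blank when empty or whitespace; collections when empty.
            blank = not value.strip() if isinstance(value, str) else not value
            if blank:
                missing.append(name)
        return missing

    def is_complete(self):
        """A form is ready for committee review only when nothing is missing."""
        return not self.missing_fields()


# Example: a request submitted without a named sponsor is held back.
form = PurposeAndRequestForm(
    project_name="Sepsis early warning",
    purpose="Flag patients at risk of sepsis",
    intended_use="Decision support for emergency department nurses",
    expected_outcomes=("Earlier antibiotic administration",),
    executive_sponsor="",
)
print(form.is_complete(), form.missing_fields())
```

Keeping the check in code means an intake workflow can reject incomplete requests automatically instead of relying on a reviewer to notice the gap.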

Boards of directors are increasingly responsible for overseeing mission-critical risks, including those related to AI. Their fiduciary duties now extend to managing the operational and ethical risks tied to AI’s role in patient care, organizational operations, and workforce management [3]. As a result, governance policies must address not only technical specifications but also the ethical consequences of AI on patient outcomes and healthcare equity.

Converting Ethical Principles into Controls

With roles and policies in place, the next step is turning ethical principles into actionable controls. This involves bridging the gap between abstract values and practical behavior by embedding these principles into the AI lifecycle. Core pillars like Accountability, Transparency, Fairness, and Safety [6] serve as the foundation for these controls.

For instance, fairness and non-discrimination can be enforced through mandatory bias testing before an AI model is deployed. Similarly, transparency might require detailed documentation explaining how models make decisions, especially in clinical settings. Data governance and privacy principles can be operationalized via access controls, encryption protocols, and audit trails for managing protected health information. Accountability often takes the form of assigning clear ownership and tying performance metrics to specific individuals [6].

Each principle should align with checkpoints, validations, and automated enforcement measures to prevent non-compliant AI systems from being deployed. Organizations can also incorporate ethics consultations, encouraging collaboration between AI ethics experts and technical teams to identify risks and assess potential downstream impacts of AI adoption [5].
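As a rough illustration of an automated pre-deployment checkpoint, the sketch below compares how often a model produces a positive prediction for each patient group and blocks release when the gap is too wide. The metric (difference in selection rates), the function names, and the 0.10 threshold are assumptions chosen for the example; a real program would select metrics and limits with its ethics and clinical reviewers.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_gate(predictions, groups, max_gap=0.10):
    """Return (approved, gap), where gap is the largest difference in
    selection rate between any two groups. max_gap is an assumed limit."""
    rates = selection_rates(predictions, groups)
    if not rates:
        raise ValueError("no predictions supplied")
    gap = max(rates.values()) - min(rates.values())
    return gap <= max_gap, gap


# Example: group A is flagged 75% of the time, group B only 25%.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
approved, gap = fairness_gate(preds, groups)
print(approved, round(gap, 2))
```

A gate like this would normally sit in the release pipeline so that a failing result stops deployment and routes the model back for review rather than only producing a report.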

These measures ensure that ethical principles are not just ideals but actively shape how AI is developed, deployed, and managed in healthcare.

Governance Across the AI Lifecycle

Ethical AI governance plays a role in every stage of an AI system's lifecycle, ensuring principles like fairness, transparency, accountability, privacy, and human oversight are upheld [8]. This approach builds on established guidelines, applying them consistently across all phases of development and deployment.

Governance Activities by Lifecycle Phase

In the problem definition stage, it's crucial to document the system's purpose, intended outcomes, and potential risks in detail. When it comes to data sourcing, rigorous checks should be conducted to identify issues like bias, ensure data quality, and maintain privacy - particularly in sensitive sectors like healthcare, where strict regulatory standards apply.

During model development, governance efforts focus on testing for bias, validating models against clinical or industry standards, and thoroughly documenting design decisions. The validation phase requires independent reviews to assess performance and fairness before deployment. Post-deployment, monitoring becomes essential to track the system's performance in real-world conditions, identify issues like drift or degradation, and maintain audit trails for accountability. Finally, retirement governance ensures that outdated models are decommissioned appropriately, with clear documentation of the process to maintain transparency.
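Post-deployment monitoring can start with something as simple as comparing recent real-world accuracy against the accuracy recorded at validation. The sketch below assumes a hypothetical feed of confirmed outcomes and uses an illustrative tolerance and minimum sample size; it does not cover input drift, which needs separate checks.

```python
from statistics import mean


def check_degradation(baseline_accuracy, recent_outcomes,
                      tolerance=0.05, min_samples=30):
    """Compare recent accuracy with the validation baseline.

    recent_outcomes: booleans, True when the model's prediction matched
    the confirmed outcome. tolerance and min_samples are assumed values.
    """
    outcomes = list(recent_outcomes)
    if len(outcomes) < min_samples:
        # Too few confirmed cases to draw a conclusion.
        return {"status": "insufficient_data", "recent_accuracy": None}
    recent = mean(1.0 if hit else 0.0 for hit in outcomes)
    degraded = (baseline_accuracy - recent) > tolerance
    return {"status": "degraded" if degraded else "ok",
            "recent_accuracy": recent}


# Example: validated at 90% accuracy, but only 80 of the last 100 cases match.
result = check_degradation(0.90, [True] * 80 + [False] * 20)
print(result["status"])
```

Writing each result to an audit trail, along with the date and model version, gives the governance committee the evidence it needs when deciding whether to retrain or retire a model.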

Adjusting Governance Based on AI Risk and Autonomy

Not all AI systems demand the same level of oversight. The degree of governance should align with the potential impact of the system [8]. Organizations should map all AI use cases and evaluate their risks by considering factors like bias, security vulnerabilities, privacy implications, and the likelihood of operational errors. High-impact systems, such as those used in clinical diagnostics or treatment recommendations, often require a more rigorous governance framework, which may include cross-functional review boards.
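The mapping exercise described above can be captured in a small scoring routine. In this sketch, each use case is rated from 1 (low) to 3 (high) on the four factors named in the text, and anything that influences clinical decisions is automatically treated as high risk. The rating scale, tier names, and the review paths for medium and low tiers are assumptions for illustration.

```python
from dataclasses import dataclass

# Assumed review paths; only the high-tier review board comes from the text.
REVIEW_PATH = {
    "high": "cross-functional review board",
    "medium": "governance committee review",
    "low": "departmental sign-off",
}


@dataclass
class UseCaseRisk:
    name: str
    bias: int                # 1 = low, 2 = moderate, 3 = high
    security: int
    privacy: int
    operational_error: int
    influences_clinical_decisions: bool


def governance_tier(risk):
    """Tier a use case by its worst-rated factor."""
    scores = [risk.bias, risk.security, risk.privacy, risk.operational_error]
    if any(not 1 <= s <= 3 for s in scores):
        raise ValueError("each factor must be rated 1, 2, or 3")
    if risk.influences_clinical_decisions or max(scores) == 3:
        return "high"
    return "medium" if max(scores) == 2 else "low"


# Example: a scheduling assistant versus an imaging triage model.
scheduler = UseCaseRisk("Clinic scheduling assistant", 1, 1, 2, 1, False)
triage = UseCaseRisk("Imaging triage", 2, 2, 2, 2, True)
for use_case in (scheduler, triage):
    tier = governance_tier(use_case)
    print(use_case.name, "->", tier, "->", REVIEW_PATH[tier])
```

Tiering by the worst factor, rather than an average, keeps one serious concern from being diluted by several minor ones.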

The complexity of the AI system and the regulatory environment also influence how governance is applied. As IBM notes, "the levels of governance can vary depending on the organization's size, the complexity of the AI systems in use and the regulatory environment in which the organization operates" [9]. Regardless of automation in governance processes, human oversight remains critical, ensuring meaningful checks and balances are always in place.

Using Censinet RiskOps for Lifecycle Governance

To put these governance principles into practice, specialized tools can be invaluable. Managing AI governance effectively requires centralized coordination and clear visibility across all stages. Censinet RiskOps serves as a central platform that aggregates real-time risk data and routes assessment results to the appropriate stakeholders, such as AI governance committee members.

This automated system ensures that issues are addressed promptly and consistently. By centralizing risk registers, automating the collection of evidence, and fostering collaboration between teams, Censinet RiskOps helps healthcare organizations uphold governance standards throughout the AI lifecycle. At the same time, it ensures that human oversight remains integral to critical decision-making processes.

Tools That Drive Ethical Behavior in Daily Operations

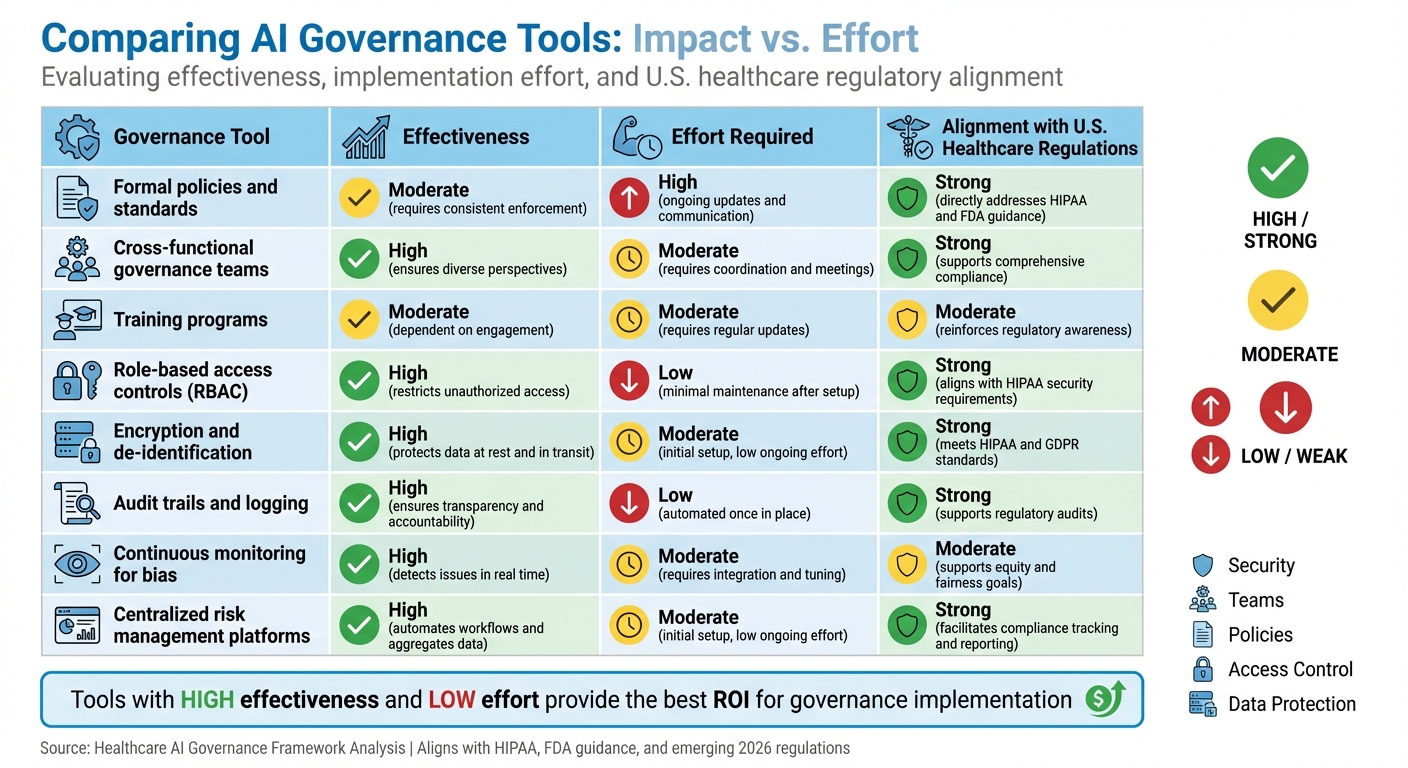

AI Governance Tools Comparison: Effectiveness, Effort, and Regulatory Alignment

To ensure ethical AI practices become part of everyday operations, organizations need more than just governance frameworks - they need tools that seamlessly integrate these frameworks into their daily workflows. This means creating systems that make ethical decision-making second nature by embedding it into processes through clear accountability, automated controls, and compatibility with existing healthcare IT systems.

Methods for Enforcing Ethical AI Governance

Effective governance starts with diverse, cross-functional teams. These teams should include voices from executive leadership, regulatory affairs, IT, patient safety, clinical operations, cybersecurity, data privacy, and patient advocacy [10]. Regular training keeps everyone current on HIPAA regulations and maintains a strong ethical foundation. Training builds the awareness that automated controls then reinforce, which supports consistent compliance across all AI-related activities.

Automating Compliance with Built-In Controls

Automation embeds privacy and security into every phase of AI implementation. It reduces reliance on manual oversight by using tools like role-based access controls (RBAC), encryption, consent management, de-identification, and automated audit trails [6][10][2][11]. For example, encryption safeguards data both in transit and at rest, while automated audit trails provide a clear record of AI interactions for regulatory reviews [6]. Continuous monitoring adds another layer of protection by identifying biased outcomes in real time, allowing teams to adjust algorithms before problems escalate [2].
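To show how RBAC and an audit trail can work together, the sketch below checks each request against a role-to-permission map and records every decision, whether allowed or denied. The role names, action names, and class are hypothetical; in practice, roles would come from the organization's identity management system and the log would go to tamper-resistant storage.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission map for an AI tool.
ROLE_PERMISSIONS = {
    "clinician": {"view_ai_recommendation"},
    "data_scientist": {"view_ai_recommendation", "retrain_model"},
    "compliance_officer": {"view_audit_trail"},
}


class AccessController:
    """Checks permissions by role and logs every decision."""

    def __init__(self, role_permissions):
        self._role_permissions = role_permissions
        self.audit_trail = []  # in-memory list, for illustration only

    def is_allowed(self, user_id, role, action):
        # Unknown roles get an empty permission set, so they are denied.
        allowed = action in self._role_permissions.get(role, set())
        self.audit_trail.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "role": role,
            "action": action,
            "allowed": allowed,
        })
        return allowed


# Example: a clinician cannot retrain a model, and the attempt is recorded.
controller = AccessController(ROLE_PERMISSIONS)
controller.is_allowed("u-102", "data_scientist", "retrain_model")
controller.is_allowed("u-417", "clinician", "retrain_model")
for entry in controller.audit_trail:
    print(entry["user_id"], entry["action"], entry["allowed"])
```

Logging denied attempts as well as approved ones matters for regulatory reviews, since it shows that the control was active and not just configured.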

Comparing Governance Tools by Impact and Effort

Governance tools differ in how much effort they take to implement and how much impact they deliver. For instance, policies and standards set a critical foundation but demand ongoing updates and enforcement. Training programs, while essential for team awareness, require frequent refreshes to stay relevant with evolving regulations. On the other hand, automation tools like RBAC and encryption deliver high impact with relatively low maintenance, ensuring compliance by default.

Specialized platforms, such as Censinet RiskOps, take things a step further by centralizing risk data, automating evidence collection, and routing findings to the right stakeholders. These platforms strike a balance between efficiency and human oversight, ensuring that critical decisions remain in the hands of skilled professionals.

Here’s a closer look at how these tools compare in terms of effectiveness, effort, and alignment with U.S. healthcare regulations:

| Governance Tool | Effectiveness | Effort Required | Alignment with U.S. Healthcare Regulations |
|---|---|---|---|
| Formal policies and standards | Moderate – requires consistent enforcement | High – ongoing updates and communication | Strong – directly addresses HIPAA and FDA guidance |
| Cross-functional governance teams | High – ensures diverse perspectives | Moderate – requires coordination and meetings | Strong – supports comprehensive compliance |
| Training programs | Moderate – dependent on engagement | Moderate – requires regular updates | Moderate – reinforces regulatory awareness |
| Role-based access controls (RBAC) | High – restricts unauthorized access | Low – minimal maintenance after setup | Strong – aligns with HIPAA security requirements |
| Encryption and de-identification | High – protects data at rest and in transit | Moderate – initial setup, low ongoing effort | Strong – meets HIPAA and GDPR standards |
| Audit trails and logging | High – ensures transparency and accountability | Low – automated once in place | Strong – supports regulatory audits |
| Continuous monitoring for bias | High – detects issues in real time | Moderate – requires integration and tuning | Moderate – supports equity and fairness goals |
| Centralized risk management platforms | High – automates workflows and aggregates data | Moderate – initial setup, low ongoing effort | Strong – facilitates compliance tracking and reporting |

This comparison highlights how different tools can complement one another, creating a comprehensive approach to ethical AI governance in healthcare.

Measuring, Auditing, and Improving AI Governance

For AI governance frameworks to remain effective, they must continually measure their success and adapt to changing technologies and regulations. Without clear metrics and regular audits, these frameworks risk becoming outdated and ineffective.

Metrics for Tracking Ethical AI Governance

Metrics play a key role in ensuring AI systems operate ethically, transparently, and efficiently [12]. Organizations should define specific metrics that evaluate areas like data quality, model security, bias, and accountability. These metrics can be tracked using centralized dashboards [9][10]. For example, the quality and reliability of AI data can be assessed by comparing outputs to predefined standards, while dashboards provide a real-time view of system performance and compliance [10]. By establishing these metrics, organizations set the foundation for thorough audit processes that validate AI performance on an ongoing basis.

Audit and Assurance Methods

Regular audits and continuous monitoring are essential for verifying that AI systems comply with ethical standards and regulatory requirements. In healthcare, for instance, organizations should create clear policies and assign responsibilities for evaluating AI tools [10]. This includes periodic validation and testing to ensure tools perform reliably and meet safety standards. Identifying and addressing risks and biases helps safeguard patient safety, promote fairness in care, and reduce the chances of misdiagnoses [10][1]. Voluntary, blinded reporting of AI-related safety events can encourage learning and drive improvements. Additionally, having clear criteria for retraining, updating, or retiring AI tools ensures they remain effective and aligned with ethical guidelines, especially when performance declines or standards shift [4][10]. Centralized audit processes further strengthen accountability and oversight.

Centralizing Oversight with Censinet RiskOps

Managing multiple data sources and audit processes becomes easier with centralized oversight platforms. Censinet RiskOps offers a unified solution for handling AI-related policies, risks, and tasks. This platform aggregates real-time data into an AI risk dashboard, enabling seamless collaboration and ensuring that critical findings and tasks are directed to the appropriate stakeholders for review and action. Acting like "air traffic control" for AI governance, it ensures that the right teams address key issues promptly. This centralized approach completes the cycle of measurement, auditing, and continuous improvement, reinforcing accountability and effective governance at every step.

Conclusion

Ethical AI governance in healthcare isn’t just about drafting policies - it’s about actively guiding how AI is developed, used, and monitored. Dr. Sneha S. Jain from Stanford Health Care puts it plainly: "AI is transforming health care faster than traditional evaluation frameworks can keep up" [4]. This rapid evolution makes strong governance frameworks absolutely necessary.

In the U.S., the stakes are high. While hundreds of AI tools have gained FDA clearance, only a small percentage undergo thorough evaluation for clinical effectiveness, fairness, or bias [4]. Surveys highlight glaring gaps in testing AI systems for bias [14][4]. These findings make it clear: governance frameworks must go beyond theory. They need to include specific controls, defined responsibilities, and ongoing oversight throughout the entire AI lifecycle.

To make this happen, centralized platforms play a critical role. Tools like Censinet RiskOps help by consolidating real-time data into dashboards, directing key insights to the right stakeholders, and ensuring policies, risks, and tasks tied to AI remain visible and actionable. This approach bridges the gap between ethical principles and practical accountability - an increasingly urgent need as regulations become stricter.

With new laws set to take effect in 2026 [13], organizations that have already built strong governance systems will be better equipped to adapt. Acting now ensures readiness for future challenges. As Dr. Lee H. Schwamm of Yale New Haven Health System states, "Responsible AI use is not optional, it's essential" [4]. The strategies and tools outlined in this guide offer the foundation to turn that essential responsibility into reality.

FAQs

What are the key elements of an effective ethical AI governance framework in healthcare?

An ethical AI governance framework in healthcare relies on several essential elements to ensure systems adhere to ethical principles and foster trust. These elements include:

- Governance and oversight: Setting up a dedicated AI governance committee to manage policies and guide decision-making processes.

- Clear guidelines: Crafting detailed policies and procedures for designing, implementing, and monitoring AI systems effectively.

- Staff training: Offering continuous education to employees about AI-related risks, ethical considerations, and best practices.

- Performance monitoring: Regularly auditing and evaluating AI systems to confirm they operate as intended and comply with regulations.

- Incident response: Establishing clear protocols to address and resolve any issues that arise from AI use.

Incorporating these practices into healthcare operations helps ensure accountability, minimize risks, and strengthen trust in AI technologies among stakeholders.

What steps can organizations take to ensure their AI systems comply with ethical and regulatory standards?

Organizations can meet compliance standards by implementing structured AI governance frameworks that align with federal, state, and industry-specific regulations. These frameworks should focus on critical areas such as risk management, transparency, human oversight, and accountability.

Here’s how organizations can get started:

- Establish clear policies to identify and address potential risks.

- Perform regular audits and thorough impact assessments.

- Keep comprehensive records of AI development and deployment processes.

Equally vital is fostering a workplace culture that prioritizes ethical AI practices. This involves training employees on compliance requirements and routinely monitoring AI systems to ensure they adhere to ethical and legal guidelines. By weaving these practices into everyday operations, organizations can build trust while minimizing potential risks tied to AI technologies.

Why is continuous monitoring important for maintaining ethical AI in healthcare?

Continuous monitoring plays a key role in keeping AI systems in healthcare safe, reliable, and fair over time. As clinical practices shift, patient needs change, and data evolves, this process helps catch issues like performance decline or unexpected biases.

Regular assessments of AI systems help organizations stay compliant with regulations, safeguard patient well-being, and ensure fairness in outcomes. This ongoing effort not only reinforces trust among stakeholders but also ensures AI decisions align with ethical standards and the practical needs of healthcare.