Machine Learning Models for Healthcare Risk Scoring

Post Summary

Machine learning (ML) is transforming healthcare risk scoring. Compared with older methods, it often delivers better predictions for patient outcomes, population health, and cybersecurity risk. Here's why it matters:

- Better Predictions: ML models, such as neural networks and tree-based algorithms, often outperform traditional tools (e.g., GRACE, TIMI) in predicting outcomes like mortality, hospital readmissions, and disease progression.

- Regulatory Alignment: U.S. regulations such as HIPAA push for precise, auditable risk management. ML can help meet these needs because it handles large, complex datasets, provided the models are documented and their outputs can be audited.

- Efficiency Gains: Platforms like Censinet RiskOps™ simplify cybersecurity and vendor risk assessments, saving time and resources.

While ML often offers better accuracy, the right model depends on data complexity, interpretability needs, and organizational readiness. Balancing performance with transparency is key to building trust and ensuring compliance.

Core Machine Learning Models for Healthcare Risk Scoring

Comparison of Machine Learning Models for Healthcare Risk Scoring

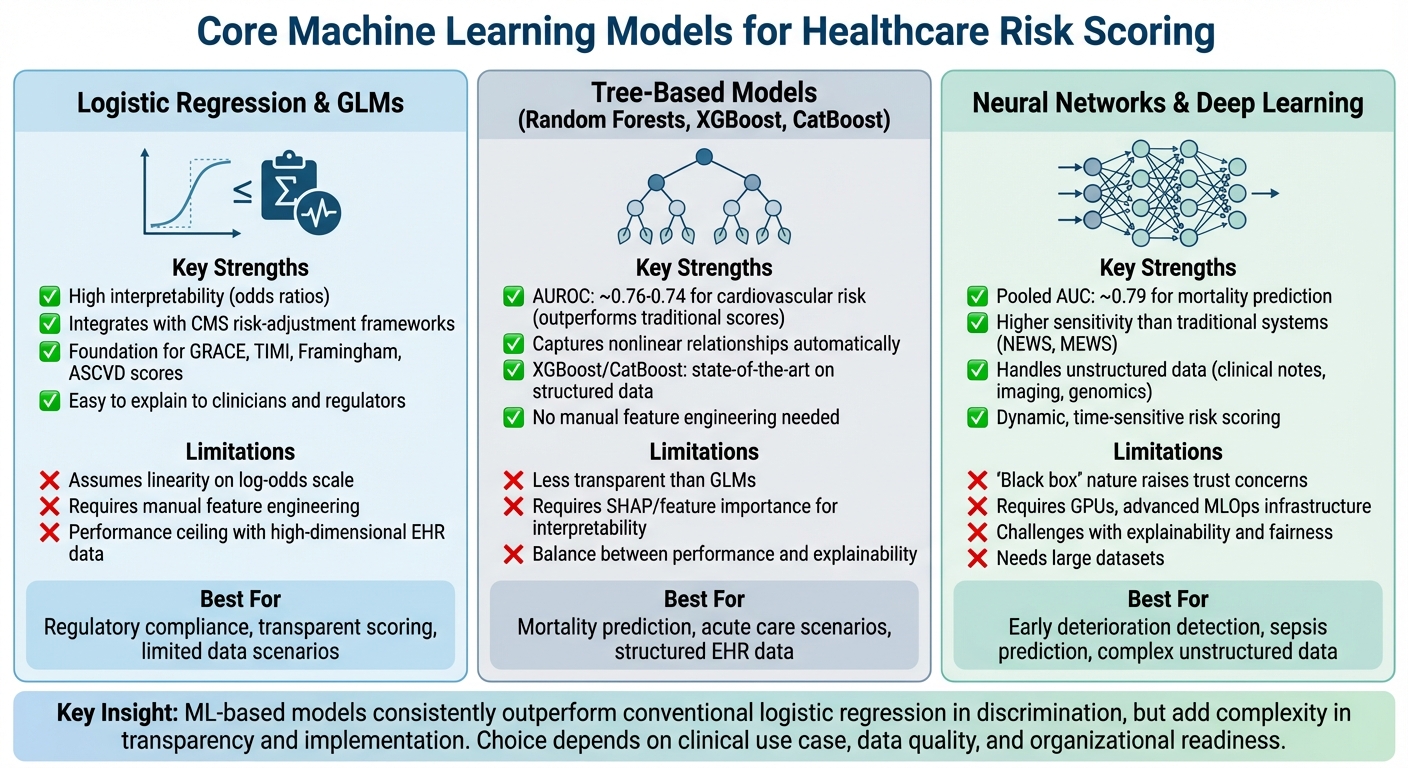

Healthcare organizations rely on three main types of machine learning models for risk scoring: logistic regression/GLMs, tree-based models, and neural networks/deep learning. Each type has distinct strengths and trade-offs for risk prediction, and the choice can directly affect whether clinicians adopt and trust the resulting scores.

Logistic Regression and Generalized Linear Models

Logistic regression and generalized linear models (GLMs) have long been the foundation of healthcare risk scoring. They underpin traditional scoring systems such as GRACE, TIMI, Framingham, and ASCVD, which predict outcomes including cardiovascular risk, mortality, and hospital readmissions [2]. Their coefficients can be condensed into bedside-friendly point-based scores that assign integer values to key risk factors [4].
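To make the point-score idea concrete, here is a minimal Python sketch, assuming a logistic model has already been fitted. One common approach is to divide each coefficient by a reference "one point" unit and round to the nearest integer. The factor names, coefficient values, intercept, and `base_unit` below are hypothetical, not taken from GRACE, TIMI, or any published score.

```python
import math

# Hypothetical log-odds coefficients from an already-fitted logistic model.
# Names and values are illustrative only, not from any published score.
COEFFICIENTS = {
    "age_over_75": 0.90,
    "systolic_bp_under_100": 1.20,
    "elevated_lactate": 0.60,
    "prior_mi": 0.30,
}
INTERCEPT = -3.0  # hypothetical


def points_from_coefficients(coefs, base_unit):
    """Integer points per factor: coefficient divided by the 'one point' unit, rounded."""
    return {name: round(beta / base_unit) for name, beta in coefs.items()}


def total_points(points, patient):
    """Bedside score: sum the points of every factor present in the patient."""
    return sum(pts for name, pts in points.items() if patient.get(name, False))


def model_probability(coefs, intercept, patient):
    """Probability from the unrounded logistic model, for comparison with the score."""
    logit = intercept + sum(b for name, b in coefs.items() if patient.get(name, False))
    return 1.0 / (1.0 + math.exp(-logit))


points = points_from_coefficients(COEFFICIENTS, base_unit=0.30)
patient = {"age_over_75": True, "systolic_bp_under_100": True}
print(points)  # {'age_over_75': 3, 'systolic_bp_under_100': 4, 'elevated_lactate': 2, 'prior_mi': 1}
print(total_points(points, patient))  # 7
print(round(model_probability(COEFFICIENTS, INTERCEPT, patient), 3))  # 0.289
```

The rounding step is where a point score trades a little accuracy for bedside usability; comparing `total_points` with `model_probability` on validation data shows how much is lost.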

The biggest advantage of GLMs is interpretability. Each coefficient translates into an odds ratio that shows how a variable shifts the odds of the outcome, which makes results easy to explain to clinicians, payers, and regulators. GLMs also fit naturally into U.S. risk-adjustment frameworks, such as CMS models, which assign weights to disease categories and organize diagnosis codes into hierarchies to balance strong predictive power with reduced complexity [3].
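The odds-ratio reading follows from the model's form. For a binary outcome with probability p and predictors x1 through xk, logistic regression models the log-odds as a linear function, so exponentiating a coefficient gives the multiplicative change in the odds for a one-unit increase in that predictor, holding the others fixed:

```latex
\log\frac{p}{1-p} = \beta_0 + \beta_1 x_1 + \cdots + \beta_k x_k,
\qquad
\mathrm{OR}_j = \frac{\mathrm{odds}(x_j + 1)}{\mathrm{odds}(x_j)} = e^{\beta_j}
```

For example, a coefficient of 0.69 gives e^0.69, which is approximately 2.0: the odds of the outcome roughly double. The same linear form is the "linearity on the log-odds scale" assumption that limits GLMs.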

However, GLMs have limitations. They assume linearity on the log-odds scale, which can oversimplify relationships, and capturing complex interactions often requires manual feature engineering. On high-dimensional electronic health record (EHR) data, GLMs may hit a performance ceiling and fall short of more advanced machine learning techniques [5]. They remain a solid starting point and a useful benchmark for more sophisticated models that can handle nonlinear relationships.
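A small, hypothetical example shows why interactions need manual feature engineering. Below, risk is high when exactly one of two binary factors is present (an XOR pattern). A logistic regression with main effects only cannot represent this, while adding the product term x1 * x2 by hand fixes it. The data are toy values, and the fitting routine is plain batch gradient descent written for illustration, not a production solver.

```python
import math

# Toy XOR-style data: high risk when exactly one of two binary factors is present.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 1, 1, 0]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(rows, labels, lr=1.0, epochs=10000):
    """Batch gradient descent on the average log-loss; w[0] is the intercept."""
    w = [0.0] * (len(rows[0]) + 1)
    n = len(rows)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for r, t in zip(rows, labels):
            err = sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], r))) - t
            grad[0] += err
            for j, xj in enumerate(r, start=1):
                grad[j] += err * xj
        w = [wi - lr * g / n for wi, g in zip(w, grad)]
    return w


def accuracy(w, rows, labels):
    """Share of rows classified correctly at a 0.5 probability threshold."""
    preds = [sigmoid(w[0] + sum(wi * xi for wi, xi in zip(w[1:], r))) >= 0.5 for r in rows]
    return sum(int(p) == t for p, t in zip(preds, labels)) / len(labels)


# Main effects only: linear on the log-odds scale, so it cannot separate XOR.
w_main = fit_logistic(X, y)

# Manually engineered interaction feature x1 * x2 makes the pattern learnable.
X_inter = [(a, b, a * b) for a, b in X]
w_inter = fit_logistic(X_inter, y)

print(accuracy(w_main, X, y))              # 0.5
print(accuracy(w_inter, X_inter, y))       # 1.0
```

In the main-effects model the gradient is exactly zero at the starting point, so every patient gets a probability of 0.5; the model has no way to express "one factor but not both."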

Tree-Based Models (Decision Trees, Random Forests, Gradient Boosting)

Tree-based models have become a popular choice for clinical risk prediction, often outperforming traditional scores and logistic regression in key metrics like AUROC and sensitivity [7].

A single decision tree provides straightforward, rule-based logic - such as “age > 75 AND systolic BP < 100 AND elevated lactate” - that clinicians can follow easily. However, individual trees tend to overfit and can be unstable. Random forests address these issues by combining the predictions of many trees, each trained on a bootstrapped sample of the data with a random subset of features considered at each split. Gradient boosting methods, such as XGBoost and CatBoost, instead build trees sequentially, with each new tree fitted to the errors of the ensemble so far, and often deliver state-of-the-art performance on structured healthcare data.
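The random-forest recipe (bootstrap samples plus random feature subsets, combined by voting) can be sketched in a few lines of Python. To stay short, each "tree" here is a depth-1 stump, so choosing a random feature subset per tree is the same as choosing one per split. The patient values are made up, and this illustrates the idea only; it is not scikit-learn's `RandomForestClassifier` or a gradient-boosting library.

```python
import random
from collections import Counter


def fit_stump(rows, labels, feature_ids):
    """Depth-1 tree: pick the (feature, threshold) with the fewest training errors."""
    best = None
    for f in feature_ids:
        for thr in sorted({r[f] for r in rows}):
            left = [t for r, t in zip(rows, labels) if r[f] <= thr]
            right = [t for r, t in zip(rows, labels) if r[f] > thr]
            left_label = Counter(left).most_common(1)[0][0] if left else 0
            right_label = Counter(right).most_common(1)[0][0] if right else 0
            errors = sum(t != left_label for t in left) + sum(t != right_label for t in right)
            if best is None or errors < best[0]:
                best = (errors, f, thr, left_label, right_label)
    return best[1:]  # (feature, threshold, left_label, right_label)


def predict_stump(stump, row):
    f, thr, left_label, right_label = stump
    return left_label if row[f] <= thr else right_label


def fit_forest(rows, labels, n_trees=25, max_features=1, seed=0):
    """Bagging: each stump sees a bootstrap sample and a random subset of features."""
    rng = random.Random(seed)
    n, d = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        feats = rng.sample(range(d), max_features)  # random feature subset
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feats))
    return forest


def predict_forest(forest, row):
    """Majority vote across stumps."""
    return Counter(predict_stump(s, row) for s in forest).most_common(1)[0][0]


# Toy rows: (age, systolic_bp, lactate_mmol_l); label 1 = high risk. Illustrative only.
ROWS = [
    (82, 88, 4.1), (79, 92, 3.4), (85, 85, 5.0), (78, 95, 3.0), (88, 90, 3.8),
    (52, 128, 1.2), (61, 135, 1.5), (45, 118, 0.9), (65, 122, 2.0), (58, 140, 1.1),
]
LABELS = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

forest = fit_forest(ROWS, LABELS)
print(predict_forest(forest, (90, 80, 6.0)))   # 1
print(predict_forest(forest, (40, 150, 0.5)))  # 0
```

Each stump on its own is as readable as the rule quoted above; the voting step is what trades some of that readability for stability.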

For instance, a study on acute gastrointestinal bleeding found that XGBoost and CatBoost models outperformed conventional tools for mortality risk prediction [5]. Similarly, machine learning models such as random forests achieved AUROC values of roughly 0.74 to 0.76 for cardiovascular risk prediction, surpassing traditional scores [8]. A 2025 JMIR systematic review also reported superior performance of ML-based models compared with conventional scores like GRACE and TIMI [2].

Tree-based models excel at capturing nonlinear relationships and interactions without manual feature engineering. However, their complexity makes them less transparent. Post-hoc tools such as SHAP values and feature-importance measures can help, but balancing performance and interpretability remains a challenge. The push for even greater accuracy has driven the adoption of more advanced neural network models.
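SHAP itself is usually applied through the `shap` library, but the simpler, model-agnostic idea of permutation importance fits in a short standard-library sketch: shuffle one feature at a time and measure how much accuracy drops. This is a different technique from SHAP, shown only to illustrate how a feature-importance score is produced. The toy data and the stand-in "model" (a single lactate cutoff) are hypothetical.

```python
import random


def permutation_importance(predict, rows, labels, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled, one at a time."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == t for r, t in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the outcome
            permuted = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(permuted))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy rows: (age, systolic_bp, lactate_mmol_l). Illustrative values only.
rows = [(50 + i, 120 - i, 1.0 + 0.2 * i) for i in range(20)]
labels = [1 if i >= 10 else 0 for i in range(20)]


def model(row):
    """Stand-in 'model': flags high risk from lactate alone."""
    return int(row[2] > 2.9)


importances = permutation_importance(model, rows, labels)
for name, score in zip(["age", "systolic_bp", "lactate"], importances):
    print(f"{name}: {score:.3f}")
```

Age and blood pressure also track the outcome in this toy data, yet their importance is exactly zero because the model ignores them: permutation importance describes the model's behavior, not the underlying clinical relationships.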

Neural Networks and Deep Learning

Neural networks and deep learning approaches, including feed-forward networks, convolutional neural networks (CNNs), and transformers, are increasingly used in healthcare risk scoring [4]. These models are particularly suited for analyzing complex, unstructured data like clinical notes, imaging, waveform data, genomics, and sequential EHR records. They also enable dynamic, time-sensitive risk scoring.

Deep learning has shown strong results in tasks like early deterioration detection, sepsis risk prediction, and cardiovascular event forecasting, especially when trained on large datasets [8]. A review of clinical risk prediction found that deep learning models incorporating clinical notes or pager messages consistently achieved higher AUROC and sensitivity than traditional early warning scores such as the National Early Warning Score (NEWS) and the Modified Early Warning Score (MEWS) across various hospital systems [7].

Despite their strengths, neural networks face challenges in explainability, fairness, and validation. Their "black box" nature raises concerns about bias, regulatory approval, and clinician trust. In response, researchers are developing more interpretable architectures and applying post-hoc explanation tools such as SHAP and LIME, along with attention-based explanations. Implementing these models also requires robust infrastructure, such as GPUs, efficient data pipelines, and mature MLOps capabilities - resources many healthcare organizations are still building.

Across these approaches, the more flexible machine learning models (tree-based ensembles and neural networks) often outperform conventional logistic regression in discrimination, but they add complexity in transparency and implementation. The choice of model should align with the clinical use case, the quality and type of available data, and the organization's readiness to adopt advanced analytics.

Applications of Machine Learning in Clinical and Cybersecurity Risk Scoring

Clinical Risk Scoring and Outcome Prediction

Machine learning (ML) models have proven effective in predicting cardiovascular events, especially following acute myocardial infarction (AMI) and percutaneous coronary intervention. A meta-analysis of 10 retrospective studies, which included 89,702 AMI patients from 2017 to 2024, revealed that ML models used to predict major adverse cardiovascular and cerebrovascular events (MACCEs) and mortality achieved a pooled AUC of about 0.79 for mortality. This performance was higher than traditional scoring systems like GRACE and TIMI [2][6]. These models identified key predictors such as age, systolic blood pressure, and Killip class, synthesizing these factors more effectively than conventional methods.

In acute care, gradient-boosted models such as XGBoost and CatBoost have achieved higher AUCs than established clinical tools for predicting mortality in acute gastrointestinal bleeding, supporting earlier intervention and better patient stratification across U.S. hospital systems [5].

Population Health and Readmission Risk Prediction

ML models also support population health work by predicting readmission risk and healthcare costs. Tree-based models and neural networks are particularly good at pinpointing high-cost patients and predicting readmissions from claims and electronic health record (EHR) data. For example, an ML-based risk adjustment system streamlined hierarchical diagnosis groups, cutting model parameters by approximately 80% without sacrificing cost-prediction accuracy [3]. The same approach priced rare diseases more precisely, reducing the risk of underpayment for nearly 3% of individuals with ultra-rare conditions - a critical improvement for U.S. health plans managing risk-adjusted payments.

ML-driven diagnostic cost groups (DCGs) can also reduce sensitivity to upcoding. Because the resulting models are more compact and robust, healthcare organizations can better forecast chronic disease progression and utilization patterns while reducing the risks associated with traditional risk-adjustment formulas [3].
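The hierarchy-plus-weights structure that these risk-adjustment models share can be sketched as follows. This does not reproduce the ML method described in [3]; it only shows how a hierarchy suppresses less severe categories before additive weights are summed. Every category name, weight, and the base weight are hypothetical, not CMS-HCC or DCG values.

```python
# Hypothetical condition categories and weights; NOT CMS-HCC or DCG values.
WEIGHTS = {
    "DIABETES_WITH_COMPLICATIONS": 0.30,
    "DIABETES_NO_COMPLICATIONS": 0.10,
    "HEART_FAILURE": 0.35,
    "CKD_STAGE_5": 0.80,
    "CKD_STAGE_3": 0.07,
}

# If the key category is present, the listed less severe categories are dropped.
HIERARCHY = {
    "DIABETES_WITH_COMPLICATIONS": ["DIABETES_NO_COMPLICATIONS"],
    "CKD_STAGE_5": ["CKD_STAGE_3"],
}

BASE_WEIGHT = 0.40  # hypothetical demographic base


def apply_hierarchy(categories):
    """Keep only the most severe category within each hierarchy."""
    kept = set(categories)
    for severe, lesser in HIERARCHY.items():
        if severe in kept:
            kept.difference_update(lesser)
    return kept


def risk_score(categories, base=BASE_WEIGHT):
    """Additive relative risk score: base weight plus weights of surviving categories."""
    return base + sum(WEIGHTS[c] for c in apply_hierarchy(categories))


member = {"DIABETES_WITH_COMPLICATIONS", "DIABETES_NO_COMPLICATIONS", "HEART_FAILURE"}
print(sorted(apply_hierarchy(member)))  # ['DIABETES_WITH_COMPLICATIONS', 'HEART_FAILURE']
print(round(risk_score(member), 2))     # 1.05
```

Every category and hierarchy link in a structure like this adds parameters; the system described in [3] used ML to streamline such groups so that fewer parameters carry the same predictive signal.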

Cybersecurity and Third-Party Risk Scoring

ML's influence extends beyond patient care to securing healthcare systems. Tools like Censinet RiskOps™ use ML to streamline cybersecurity and third-party risk assessments. These platforms prioritize threats and manage risks across patient data, medical devices, and supply chains. For instance, Censinet’s AI capabilities allow vendors to complete security questionnaires in seconds, automatically summarize documentation, and generate risk summary reports from assessment data.

James Case, VP & CISO at Baptist Health, emphasized the transformation: "Not only did we get rid of spreadsheets, but we have that larger community [of hospitals] to partner and work with."

This semi-automated strategy enables healthcare organizations to scale their risk management processes while retaining human oversight for critical decisions. By doing so, they maintain patient safety and regulatory compliance in an increasingly complex threat environment. Many organizations report improved efficiency, freeing up staff to focus on strategic initiatives while managing more assessments with fewer resources.


Key Considerations for Selecting and Implementing Machine Learning Models

Criteria for Model Selection and Evaluation

When selecting machine learning models, organizations often face a choice between simplicity and complexity. Traditional models like logistic regression and generalized linear models are reliable starting points, especially in cases with limited data or when transparency is required for regulatory compliance. These models are easier to explain to clinicians, executives, and auditors, which is a significant advantage in highly regulated industries.

However, advanced techniques should be considered if they demonstrate clear performance advantages. For instance, a 2025 systematic review highlighted that machine learning models outperformed traditional scores such as GRACE and TIMI in terms of discrimination. In predicting cardiovascular events, deep neural networks showed higher sensitivity, identifying more high-risk patients who might otherwise have been missed [2][8].

Model evaluation should go beyond AUROC. Check calibration (with Brier scores and calibration plots) and assess clinical utility with decision-curve analysis. External validation on data from different facilities or time periods is essential, as is subgroup analysis by factors such as race, sex, age, payer type, and comorbidities. These steps expose performance gaps that could undermine fairness and equity [4]. Improving interpretability and ensuring compliance can further boost trust in, and acceptance of, the models.
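The metrics named above are straightforward to compute. The sketch below implements AUROC (as the probability that a randomly chosen positive outranks a randomly chosen negative), the Brier score, decision-curve net benefit at a chosen threshold probability, and a per-subgroup breakdown. The labels, probabilities, and group codes are toy values; in practice, libraries such as scikit-learn provide tested versions of the first two.

```python
def auroc(labels, scores):
    """Probability that a random positive scores above a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def brier_score(labels, probs):
    """Mean squared error of predicted probabilities (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(labels)


def net_benefit(labels, probs, threshold):
    """Decision-curve net benefit: TP/n - FP/n * threshold / (1 - threshold)."""
    n = len(labels)
    tp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 1)
    fp = sum(1 for p, y in zip(probs, labels) if p >= threshold and y == 0)
    return tp / n - (fp / n) * threshold / (1 - threshold)


def by_subgroup(metric, groups, labels, probs):
    """Apply a metric within each subgroup (for AUROC, each group needs both classes)."""
    result = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        result[g] = metric([labels[i] for i in idx], [probs[i] for i in idx])
    return result


labels = [0, 0, 1, 1, 0, 1]
probs = [0.10, 0.40, 0.35, 0.80, 0.20, 0.90]
groups = ["A", "A", "A", "B", "B", "B"]  # e.g., a hypothetical payer-type code

print(round(auroc(labels, probs), 3))              # 0.889
print(round(brier_score(labels, probs), 5))        # 0.11375
print(round(net_benefit(labels, probs, 0.30), 3))  # 0.429
print(by_subgroup(auroc, groups, labels, probs))   # {'A': 0.5, 'B': 1.0}
```

Even in this toy data, the pooled AUROC hides a large gap between subgroups A and B, which is exactly the kind of disparity subgroup analysis is meant to surface. Calibration plots are usually built by binning predictions and comparing the mean predicted probability with the observed event rate in each bin; that step is omitted here.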

Ensuring Interpretability, Equity, and Compliance

Performance isn’t the only factor that matters - models must also be understandable and fair. Complex models often operate as "black boxes", which can erode trust among clinicians. One way to address this is through hybrid approaches, such as combining transparent rule-based systems for key features with machine learning models to capture more nuanced patterns. This approach balances performance with interpretability [4].
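A hybrid scorer of this kind can be sketched in Python: a transparent rule (here, the example decision-tree rule quoted earlier in this article) flags high risk on its own, and an ML probability covers everything else. The `Patient` fields, the 2.0 mmol/L lactate cutoff, the 0.30 probability threshold, and the upstream `model_probability` are all assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

LACTATE_ELEVATED_MMOL_L = 2.0  # assumed cutoff for "elevated lactate", illustration only


@dataclass
class Patient:
    age: int
    systolic_bp: float
    lactate_mmol_l: float
    model_probability: float  # assumed output of some upstream ML model


def hybrid_risk(p: Patient, ml_threshold: float = 0.30) -> tuple[str, str]:
    """Return (risk tier, reason): a transparent rule first, then the ML probability."""
    if p.age > 75 and p.systolic_bp < 100 and p.lactate_mmol_l > LACTATE_ELEVATED_MMOL_L:
        return "high", "rule: age > 75 AND systolic BP < 100 AND elevated lactate"
    if p.model_probability >= ml_threshold:
        return "high", f"model probability {p.model_probability:.2f} >= {ml_threshold:.2f}"
    return "standard", f"model probability {p.model_probability:.2f} < {ml_threshold:.2f}"


print(hybrid_risk(Patient(age=80, systolic_bp=92, lactate_mmol_l=3.1, model_probability=0.05)))
print(hybrid_risk(Patient(age=60, systolic_bp=130, lactate_mmol_l=1.0, model_probability=0.45)))
```

Because every result carries its reason, a clinician can see whether the rule or the model drove a "high" flag, which also makes any human override easier to document.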

Clear documentation is another critical component. Organizations should outline the intended use, limitations, and restrictions of each model. Keeping thorough audit trails and version control for data preprocessing, feature engineering, and model iterations supports internal audits and helps meet regulatory requirements for machine learning used as Software as a Medical Device (SaMD) [4]. Establishing an AI governance committee - including clinical experts, data scientists, compliance officers, security professionals, and patient advocates - can further ensure proper oversight. This team can approve models, monitor their performance, and review any adverse events or near-misses.
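A minimal sketch of an audit-trail entry, assuming a simple in-house record format rather than any specific model-registry product: the configuration is serialized canonically and hashed with SHA-256, so any change to parameters or preprocessing produces a new, traceable fingerprint. Field names such as `training_data_ref` and the example values are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(model_name, version, params, preprocessing, training_data_ref):
    """Build an audit-trail entry whose hash changes whenever the configuration changes."""
    config = {
        "model_name": model_name,
        "version": version,
        "params": params,
        "preprocessing": preprocessing,
        "training_data_ref": training_data_ref,  # hypothetical pointer to a data snapshot
    }
    # Canonical JSON (sorted keys, fixed separators) makes the hash reproducible.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return {
        **config,
        "config_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        # The timestamp is recorded but deliberately kept out of the hash.
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


v1 = audit_record(
    "readmission_risk", "1.0.0",
    {"max_depth": 4, "n_estimators": 200},
    ["impute_median", "one_hot_payer_type"],
    "snapshot-A",
)
v2 = audit_record(
    "readmission_risk", "1.0.1",
    {"max_depth": 5, "n_estimators": 200},
    ["impute_median", "one_hot_payer_type"],
    "snapshot-A",
)
print(v1["config_sha256"] != v2["config_sha256"])  # True
```

Storing such records alongside each deployed version gives auditors a way to confirm that the model in production matches the configuration that was reviewed and approved.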

For tools providing patient-specific recommendations, it’s crucial to determine whether they fall under the FDA’s evolving clinical decision support (CDS) or SaMD frameworks. Proper planning for documentation, risk classification, and post-market surveillance is essential. Importantly, clinical decision support tools should always allow for human override, leaving final decisions in the hands of clinicians [4].

Operationalizing Machine Learning Models for Risk Management

Integrating validated models into daily workflows ensures alignment with both regulatory requirements and operational goals. For example, linking risk scores to U.S. quality and payment programs - such as readmission reduction, Hierarchical Condition Category (HCC) risk adjustment, and Accountable Care Organization (ACO) contracts - helps tie model outputs to measurable financial and operational outcomes.

In areas like cybersecurity and third-party risk management, purpose-built platforms make operationalization more efficient. One example is Censinet RiskOps™, an AI-driven, cloud-based risk exchange tailored for healthcare. This platform facilitates secure and seamless sharing of cybersecurity and risk data among healthcare organizations and over 50,000 vendors. It automates risk scoring and task management across various domains, including vendor assessments, patient data management, clinical applications, medical devices, and supply chain operations [1].

Case studies demonstrate the platform’s effectiveness. For instance, Faith Regional Health utilized Censinet’s cybersecurity benchmarks to secure necessary resources. CIO Brian Sterud noted:

"Benchmarking against industry standards helps us advocate for the right resources and ensures we are leading where it matters" [1].

Similarly, Matt Christensen, Sr. Director GRC at Intermountain Health, highlighted the importance of industry-specific tools:

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare" [1].

These examples underline the need for risk scoring solutions that address the unique challenges of U.S. healthcare, including regulatory demands, operational complexities, and data intricacies.

Conclusion and Key Takeaways

Machine Learning's Role in Healthcare Risk Scoring

Machine learning (ML) models are proving to be game-changers in healthcare, frequently outperforming traditional risk scores in areas like clinical care, population health, and cybersecurity. Advanced techniques such as tree-based ensembles (e.g., XGBoost) and deep neural networks have shown measurable improvements over conventional tools like the Framingham, GRACE, and TIMI scores. For instance, ML models have achieved higher AUROC values and better sensitivity in predicting cardiovascular events, often identifying high-risk patients that traditional methods overlook [8]. Similarly, XGBoost and CatBoost have demonstrated superior accuracy and calibration in forecasting mortality for acute gastrointestinal bleeding [5].

Beyond clinical applications, ML is reshaping risk management by enabling scalable, real-time scoring. Platforms like Censinet RiskOps™ allow healthcare organizations to perform more risk assessments with fewer resources, freeing up staff for strategic priorities. Moreover, ML's ability to process complex, high-dimensional datasets - ranging from unstructured text and imaging to telemetry and social determinants of health - gives it a significant edge. These capabilities help uncover patterns that traditional linear models might miss, reinforcing the shift toward ML-driven risk management across healthcare.

Navigating Adoption and Implementation

The path to adopting ML in healthcare requires a careful balance between performance, interpretability, and fairness. While advanced models often deliver better accuracy, they must remain understandable to clinicians, auditors, and compliance teams. Hybrid approaches that combine transparent rule-based systems with sophisticated ML techniques can help bridge this gap, ensuring trust while capturing complex patterns. To ensure long-term success, organizations should focus on external validation, conduct subgroup analyses (e.g., by demographics or insurance status), and continuously monitor models for issues like performance drift and bias.
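One common way to monitor drift is the population stability index (PSI), which compares the distribution of current risk scores with a baseline distribution. A minimal standard-library sketch follows; the bin edges, the small epsilon that avoids taking the log of zero, and the toy scores are assumptions. A frequently cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a major shift, but these cutoffs are conventions rather than standards.

```python
import bisect
import math


def bin_proportions(values, edges, eps=1e-6):
    """Share of values per bin [edges[i], edges[i+1]); out-of-range values are clamped."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        i = max(0, min(i, len(counts) - 1))  # clamp into the first or last bin
        counts[i] += 1
    return [max(c / len(values), eps) for c in counts]  # eps avoids log(0) for empty bins


def psi(baseline, current, edges, eps=1e-6):
    """Population stability index: sum over bins of (a - e) * ln(a / e)."""
    e = bin_proportions(baseline, edges, eps)
    a = bin_proportions(current, edges, eps)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
current_scores = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.7, 0.95]

print(psi(baseline_scores, baseline_scores, edges))        # 0.0
print(psi(baseline_scores, current_scores, edges) > 0.25)  # True
```

PSI only flags that the score distribution has moved; whether performance has actually degraded still has to be confirmed against observed outcomes, ideally broken down by the same subgroups used at validation.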

For healthcare leaders in the U.S., the message is clear: ML-based risk scoring isn't just about making better predictions - it’s about integrating those insights into clinical workflows, quality initiatives, and cybersecurity strategies. Whether it's linking scores to programs like readmission reduction, HCC risk adjustment, or vendor risk assessments, the ultimate goal is to translate model outputs into measurable financial and operational improvements. As Matt Christensen from Intermountain Health aptly noted:

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare" [1].

To truly harness ML's potential, healthcare organizations must adopt solutions designed specifically for their unique challenges, ensuring seamless integration of insights into both clinical and cybersecurity operations.

FAQs

How do machine learning models make healthcare risk scoring more effective?

Machine learning models are reshaping healthcare risk scoring by offering insights that are more precise, tailored, and adaptable than traditional methods. Unlike older approaches that depend on fixed rules and limited datasets, these models can analyze vast and complex data to detect patterns, predict risks, and adjust as new information becomes available.

This capability allows healthcare organizations to act proactively, use resources more effectively, and improve patient care. By applying advanced analytics, machine learning makes it possible to identify risks sooner and support better-informed decisions across the entire healthcare system.

What challenges arise when using neural networks for healthcare risk scoring?

Using neural networks for healthcare risk scoring isn't without its hurdles. For starters, these models demand large, high-quality datasets, which can be tough to gather while complying with strict patient privacy laws. On top of that, the messiness of healthcare data - think unstructured medical records or widely varying patient demographics - makes training and validating these models difficult.

Another major challenge lies in the lack of transparency in neural networks. Often referred to as "black boxes", these models make it hard to explain how they arrive at their predictions - something that's absolutely critical in healthcare. Add to this the need for significant computational power and a strong infrastructure, and the road to implementation becomes even steeper. Overcoming these obstacles takes thoughtful planning and close collaboration between technical experts and healthcare professionals.

How can healthcare organizations ensure machine learning models are fair and easy to understand?

Healthcare organizations can take meaningful steps to improve fairness and clarity in machine learning models by adopting explainable AI methods. These techniques make decision-making processes easier to understand, ensuring that both patients and providers can grasp how outcomes are determined. Regular evaluations of these models are also essential to uncover and address any biases that might affect different patient groups.

Building trust is another key factor. Organizations should validate their models using datasets that represent a wide range of populations. Additionally, involving stakeholders - such as clinicians, patients, and data scientists - in reviewing the results can strengthen confidence in the models, particularly when they’re used for critical tasks like healthcare risk scoring.