Safety-Critical AI: Lessons from Aviation for Machine Learning Systems

Post Summary

AI in healthcare must prioritize safety. Just as aviation transformed itself from a high-risk industry into a highly reliable one, healthcare AI can adopt similar safety frameworks to prevent failures. Key principles from aviation include:

- Layered Protection: Use multiple AI models, human oversight, and safety rules to avoid single points of failure.

- Fail-Safe Design: Systems should default to the safest option when uncertain, alerting humans for intervention.

- Real-Time Monitoring: Continuously track performance to detect and address issues like model or data drift.

- Governance and Accountability: Establish safety boards, clear oversight, and non-punitive reporting processes so that incidents are analyzed and systems improve.

The stakes are high: biased algorithms or flawed predictions can harm patients. By leveraging aviation’s rigorous practices, healthcare AI can ensure reliability, protect patients, and build trust.

Safety-Critical Systems in AI and Healthcare

What Defines a Safety-Critical System?

A safety-critical system is one where failure could lead to serious consequences, including loss of life, environmental harm, or significant financial damage [2]. These systems are built on three key pillars of dependability: safety (avoiding catastrophic outcomes), reliability (delivering consistent and correct service), and availability (being ready to perform as needed) [2]. Think of Air Traffic Management systems in aviation - they’re designed to prevent collisions, making them inherently safety-critical. Similarly, in healthcare, AI systems used for diagnosing diseases, predicting sepsis, or recommending treatments carry equally high stakes. With stakes this high in both fields, it matters that machine learning (ML) introduces safety challenges that traditional software does not.

Machine Learning vs. Traditional Software Safety

Machine learning systems operate very differently from traditional software, and this difference creates new safety challenges. Traditional software is predictable and explainable - it follows explicit rules written by developers. ML systems, on the other hand, learn patterns from data, which makes their behavior less transparent and harder to explain [5][6]. As the FAA explains:

"Conventional aviation safety assurance techniques assume that a designer can explain every aspect of the system design, but such explanations are not readily extendable to AI."

– FAA [5][6]

This unpredictability can lead to serious problems. For example, Epic's 2017 sepsis prediction model was later found to issue late alerts and too many false alarms [1][3]. This failure was partly due to concept drift - where changes in patient demographics or conditions make the model less effective - and data drift, caused by updates to medical equipment or procedures [1][3]. Unlike traditional software, which remains static unless updated, ML systems can degrade silently over time.

Another key issue is explainability. In one study on antidepressant recommendation models, providing clinicians with explanations for incorrect predictions actually misled them, resulting in harmful outcomes for patients [1][3]. This lack of transparency makes validating ML systems incredibly difficult. Will Hunt from the AI Security Initiative highlights this challenge:

"Many modern AI systems have a number of features, such as data-intensivity, opacity, and unpredictability, that pose serious challenges for traditional safety certification approaches."

– Will Hunt [4]

These complexities emphasize the importance of adopting proven safety strategies, like those developed in aviation, to ensure ML systems can be trusted in critical applications.

Aviation's Path to Safety Standards

Aviation’s safety standards didn’t emerge overnight - they are the result of decades of learning from crashes, near-misses, and system failures [7][1]. Every incident was meticulously analyzed, lessons were documented, and protocols were refined. This relentless focus on improvement has made aviation one of the safest industries today.

Healthcare AI finds itself at a similar turning point. Like aviation, it must embrace a rigorous approach to prevent AI-related failures before they occur. The good news? Healthcare doesn’t have to start from scratch. By adopting aviation’s model - combining strict engineering practices, continuous monitoring, and a culture that prioritizes safety above all else - healthcare can chart a safer path for integrating AI into clinical environments.

Aviation Safety Principles Applied to AI

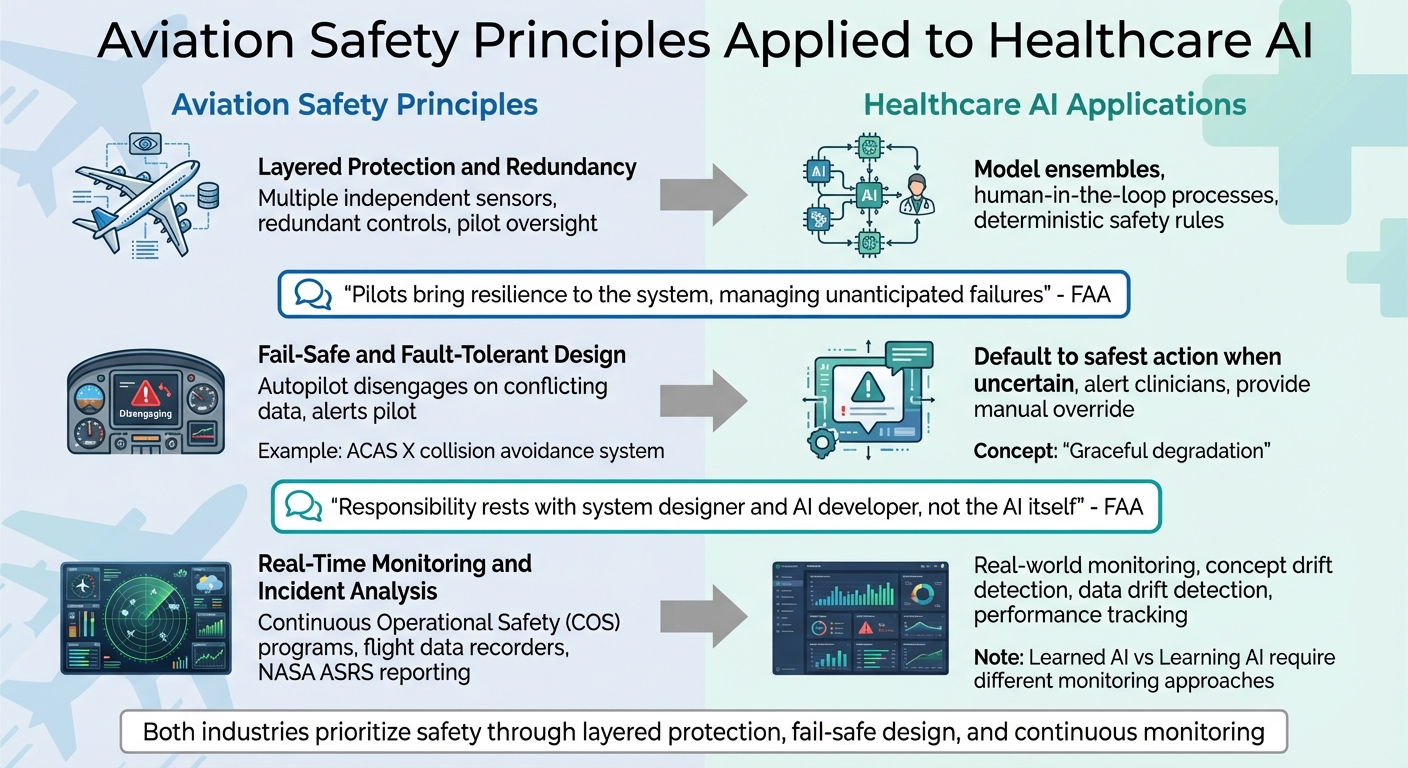

Aviation Safety Principles Applied to Healthcare AI Systems

Aviation has achieved an impressive safety record by using strategies like layered protection, fail-safe design, and continuous monitoring. These same principles can significantly improve the reliability of machine learning systems in healthcare. Let’s break down how these approaches translate to AI.

Layered Protection and Redundancy

In aviation, relying on a single system is never an option. Aircraft are equipped with multiple independent sensors, redundant controls, and pilots who can step in if automation fails. Similarly, healthcare AI systems should avoid depending on just one model. Instead, organizations can:

- Use model ensembles - multiple AI models working together to cross-verify results.

- Implement human-in-the-loop processes, where clinicians review AI-generated recommendations.

- Apply deterministic safety rules to validate AI outputs against established medical guidelines [10].
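As a rough illustration of the ensemble idea in the list above, the sketch below combines risk scores from several models and sends the case to a clinician whenever the models disagree. The function name `ensemble_decision`, the 0.5 alert threshold, and the 0.15 spread limit are assumptions made for this example, not values from any cited source.

```python
from statistics import mean, pstdev


def ensemble_decision(probabilities, threshold=0.5, max_spread=0.15):
    """Combine per-model risk probabilities and escalate on disagreement.

    `threshold` and `max_spread` are illustrative values only; real limits
    would come from clinical validation.
    """
    if not probabilities:
        raise ValueError("at least one model output is required")

    avg = mean(probabilities)
    spread = pstdev(probabilities)  # population standard deviation of the scores
    votes = [p >= threshold for p in probabilities]
    unanimous = all(votes) or not any(votes)

    # Any disagreement between models is treated as a reason for human review.
    if spread > max_spread or not unanimous:
        return {"action": "clinician_review", "mean": avg, "spread": spread}

    action = "alert" if avg >= threshold else "no_alert"
    return {"action": action, "mean": avg, "spread": spread}


if __name__ == "__main__":
    print(ensemble_decision([0.90, 0.85, 0.88]))
    print(ensemble_decision([0.90, 0.20, 0.60]))
```

The design choice here is that disagreement never gets averaged away: a split vote is surfaced to a person rather than resolved silently by the software.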

The Federal Aviation Administration (FAA) underscores the importance of human oversight in its guidance:

"Pilots traditionally bring resilience to the system, managing situations that occur outside what the aircraft designer and operator may have anticipated, including unanticipated failures, environments, and operations" [8].

In healthcare, clinicians play a similar role. They monitor AI systems, identify errors, and intervene when needed. Beyond preventing single points of failure, systems must also be designed to handle unexpected breakdowns safely.

Fail-Safe and Fault-Tolerant Design

Aviation systems are designed to fail in a controlled way. For example, if an autopilot system encounters conflicting data, it disengages and alerts the pilot instead of making a risky decision. Healthcare AI should follow a similar approach by defaulting to the safest action when faced with uncertainty. If an AI system detects anomalies in its input data, it should flag the issue and defer to human intervention rather than producing unreliable results [9][10].

This concept, known as graceful degradation, ensures that a system continues to function at a reduced capacity instead of failing outright. For instance, an AI diagnostic tool encountering data inconsistencies should immediately alert clinicians and provide backup manual controls to override its decisions in critical situations [10].
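One way to picture this fail-safe behavior is a thin wrapper around the model that refuses to return a prediction when inputs are missing, malformed, or the model is not confident. This is a minimal sketch: `fail_safe_predict`, the `(label, confidence)` return shape of `model`, and the 0.8 confidence floor are all hypothetical choices for illustration.

```python
import math


def fail_safe_predict(model, features, expected_keys, min_confidence=0.8):
    """Return a prediction only when inputs and confidence look sound.

    `model` is assumed to be a callable returning (label, confidence).
    In every other case the function defers to a human with a stated reason.
    """
    missing = [k for k in expected_keys if k not in features]
    if missing:
        return {"status": "deferred", "reason": f"missing inputs: {missing}"}

    def is_valid_number(value):
        # Booleans are ints in Python, so exclude them explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return False
        return math.isfinite(value)

    invalid = [k for k in expected_keys if not is_valid_number(features[k])]
    if invalid:
        return {"status": "deferred", "reason": f"invalid inputs: {invalid}"}

    try:
        label, confidence = model(features)
    except Exception as exc:  # a model crash must not become a silent failure
        return {"status": "deferred", "reason": f"model error: {exc}"}

    if confidence < min_confidence:
        return {"status": "deferred", "reason": "confidence below threshold"}

    return {"status": "ok", "label": label, "confidence": confidence}
```

Every deferred result carries a reason string, so the clinician who receives the alert can see why the system stepped back.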

The FAA emphasizes this responsibility:

"The responsibility for systems to meet their requirements rests with the system designer and AI developer, not the AI itself" [5][6].

Aviation has also integrated advanced AI-enabled safety mechanisms, such as the Airborne Collision Avoidance System X (ACAS X). This system uses machine learning to weigh risks and make decisions, replacing older, scenario-based algorithms. It demonstrates how careful design can allow AI to enhance safety [5][6]. Alongside thoughtful design, real-time monitoring is essential to catch and address issues as they arise.

Real-Time Monitoring and Incident Analysis

Aviation doesn’t wait for accidents to happen. Through continuous operational safety (COS) programs, it monitors flights in real time, analyzes near-misses, and implements preventative measures. Tools like flight data recorders capture critical details, while systems like NASA's Aviation Safety Reporting System (ASRS) encourage voluntary reporting to identify potential risks early [1].

Healthcare AI needs a comparable level of vigilance. Organizations should establish real-world monitoring to track model performance and detect issues like concept drift (shifts in patient demographics) or data drift (changes in medical equipment or procedures) before they affect patient safety [1][5]. By continuously collecting and analyzing operational data, teams can spot performance trends and anomalies that might otherwise go unnoticed [5][6].
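To make data drift detection concrete, here is a minimal sketch using the Population Stability Index (PSI), one common statistic for comparing a feature's live distribution with its training-time distribution. PSI is a technique swapped in for illustration; the source does not say which drift measure any particular team uses. The bin count and the rule-of-thumb reading (above roughly 0.25 suggests a significant shift) are conventions, not validated clinical thresholds.

```python
import math


def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """Compute PSI between a reference sample and a current sample.

    Bins are equal-width over the reference range; values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / n_bins

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    ref = bin_fractions(reference)
    cur = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


if __name__ == "__main__":
    training_values = [i / 100 for i in range(100)]
    live_values = [0.5 + i / 200 for i in range(100)]  # shifted upward
    print(population_stability_index(training_values, live_values))
```

A monitoring job could compute this per feature on a schedule and raise a review ticket when the value crosses the chosen limit.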

The FAA also differentiates between "learned AI" (static models trained offline) and "learning AI" (dynamic models that adapt during operation). While learned AI can undergo rigorous safety checks during design, learning AI requires built-in safeguards for active use, as well as ongoing monitoring and potential regulatory oversight [5][6]. Healthcare organizations must understand which type of AI they are deploying and implement tailored monitoring protocols. In safety-critical environments, silent failures are simply unacceptable.

Building Safety-Critical AI Systems Using Aviation Methods

The aviation industry has earned its reputation for safety through meticulous engineering and operational practices. Now, healthcare AI can borrow from this playbook to minimize risks in clinical environments. By adopting methods like hazard analysis, redundancy, and continuous monitoring, healthcare organizations can build AI systems that are safer and more reliable. Let’s dive into how these strategies can be applied.

Hazard Analysis and Requirements for AI

In aviation, rigorous hazard analysis is a cornerstone of safety, and healthcare AI needs to meet the same standards. Techniques such as System-Theoretic Process Analysis (STPA) help identify potential failure points by examining how different components interact within a system. For healthcare AI, this means defining an Operational Design Domain (ODD) - a clear set of rules outlining where and how the AI should operate. This includes specifying the types of data it can process and identifying conditions that could lead to failure [13].

A well-defined ODD ensures that the AI system is prepared for the real-world scenarios it will encounter. This involves careful data selection, collection, and preparation to create datasets that are representative of actual clinical conditions. Such preparation helps establish generalization bounds, which are the limits within which the AI can be trusted to perform reliably [13]. To further ensure safety, organizations should conduct stability and robustness tests. These tests evaluate how the system handles unexpected inputs, such as out-of-distribution data or adversarial attacks, reducing the risk of dangerous outputs [13].
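An ODD can be written down as data and checked before every prediction. The sketch below assumes a hypothetical sepsis-risk model; the field names, numeric ranges, and care settings are invented for illustration and are not clinical guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NumericRange:
    low: float
    high: float

    def contains(self, value):
        return self.low <= value <= self.high


# HYPOTHETICAL operational design domain: every value here is an assumption.
HYPOTHETICAL_ODD = {
    "age_years": NumericRange(18, 90),
    "heart_rate_bpm": NumericRange(30, 220),
    "lactate_mmol_l": NumericRange(0.1, 20.0),
}
ALLOWED_CARE_SETTINGS = {"emergency", "inpatient_ward"}


def odd_violations(record):
    """Return a list of reasons why `record` falls outside the ODD."""
    violations = []
    for name, allowed in HYPOTHETICAL_ODD.items():
        value = record.get(name)
        if value is None:
            violations.append(f"{name}: missing")
        elif not allowed.contains(value):
            violations.append(
                f"{name}: {value} outside [{allowed.low}, {allowed.high}]"
            )
    if record.get("care_setting") not in ALLOWED_CARE_SETTINGS:
        violations.append("care_setting: not covered by the ODD")
    return violations
```

An empty list means the input is inside the declared domain; anything else is a signal to withhold the prediction and tell the clinician why.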

Redundancy and Human Oversight in AI

After identifying hazards, the next step is to build in redundancy and human oversight. Healthcare AI should never depend on a single model or decision pathway. Instead, model ensembles - a setup where multiple algorithms cross-check each other’s outputs - can provide an added layer of safety. This approach mirrors aviation’s use of distributed systems to ensure redundancy across critical functions.

Equally important is incorporating human-in-the-loop processes, where clinicians review AI-generated recommendations before taking action. For example, platforms like Censinet RiskOps integrate human oversight directly into AI workflows. This allows clinical teams to validate AI outputs against established medical standards, ensuring that probabilistic AI decisions are tempered by deterministic safety rules. Such measures matter because generative AI is probabilistic: its outputs can be unpredictable, and that unpredictability can introduce cybersecurity vulnerabilities [11][12].
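A deterministic rule layer of the kind described above might look like the following sketch. It is not how any named platform works; the drug names, dose limit, and rule table are placeholders invented for the example, and a real rule set would come from established medical guidelines.

```python
# HYPOTHETICAL rule table: "drug_x", "drug_y", and the 40 mg limit are
# placeholders, not real clinical values.
HYPOTHETICAL_RULES = {
    "drug_x": {"max_daily_mg": 40.0, "contraindicated_with": {"drug_y"}},
}


def check_recommendation(recommendation, patient_medications):
    """Apply fixed safety rules to an AI-generated recommendation.

    Returns (decision, reason). Even a passing recommendation goes to a
    clinician; the rules can only block or escalate, never auto-approve.
    """
    rule = HYPOTHETICAL_RULES.get(recommendation["drug"])
    if rule is None:
        return ("escalate", "no safety rule on file for this drug")

    if recommendation["daily_mg"] > rule["max_daily_mg"]:
        return ("block", "dose exceeds configured maximum")

    conflicts = rule["contraindicated_with"] & set(patient_medications)
    if conflicts:
        return ("block", f"contraindicated with: {sorted(conflicts)}")

    return ("pass_to_clinician", "within rule limits; review still required")
```

Because the rules are plain lookups and comparisons, their behavior is fully explainable, which is exactly the property the probabilistic model lacks.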

Performance Monitoring and Drift Detection

In aviation, continuous operational safety (COS) programs monitor flights in real time to identify and address issues before they escalate. Healthcare AI requires a similar level of vigilance. For learned AI systems - static models trained offline - ongoing monitoring is essential to detect performance gaps and apply corrections. For learning AI systems - models that adapt during use - active monitoring and periodic re-certification are critical to prevent performance degradation or unintended learning behaviors [5][6].
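Ongoing monitoring of a deployed model can start with something as simple as tracking sensitivity over a sliding window of confirmed cases. The class below is a minimal sketch under assumed settings: the name `RollingSensitivityMonitor`, the window size, the 0.85 floor, and the minimum case count are illustrative, not recommended values.

```python
from collections import deque


class RollingSensitivityMonitor:
    """Track sensitivity (true-positive rate) over the most recent cases."""

    def __init__(self, window=200, min_sensitivity=0.85, min_positives=20):
        # Each entry is (predicted_positive, actually_positive).
        self.outcomes = deque(maxlen=window)
        self.min_sensitivity = min_sensitivity
        self.min_positives = min_positives

    def record(self, predicted_positive, actually_positive):
        self.outcomes.append((bool(predicted_positive), bool(actually_positive)))

    def sensitivity(self):
        """Return sensitivity, or None when too few positives were observed."""
        hits = [pred for pred, actual in self.outcomes if actual]
        if len(hits) < self.min_positives:
            return None
        return sum(hits) / len(hits)

    def needs_review(self):
        value = self.sensitivity()
        return value is not None and value < self.min_sensitivity
```

Returning `None` instead of a number when data is sparse avoids raising alarms on a handful of cases, while the fixed window lets recent degradation show up instead of being diluted by older results.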

Tools like Censinet RiskOps play a key role in real-time performance monitoring. These systems can identify subtle trends in safety metrics and key performance indicators that might escape human notice. Additionally, they enable predictive maintenance by analyzing historical and real-time data to anticipate potential system failures before they occur [14][15]. When AI models are updated or repurposed through techniques like transfer learning, new certification procedures and configuration management systems are needed to document changes and maintain safety standards [13]. By implementing these measures, healthcare organizations can not only reduce risks but also promote a culture of continuous safety - an essential principle shared by both aviation and healthcare.


AI Governance, Regulation, and Safety Culture in Healthcare

Aviation didn’t become one of the safest industries overnight - it took decades of evolving regulations, learning from failures, and fostering a culture that prioritizes safety. Healthcare AI now stands at a similar turning point: the strength of its governance will largely determine whether AI elevates patient safety or unintentionally creates new risks. The encouraging news? Healthcare organizations can draw on aviation’s proven frameworks to guide the development of safer AI systems.

Regulatory Feedback and AI Safety Boards

Aviation’s safety record owes much to its feedback-driven regulatory practices. These practices include learning from failures, promoting a culture of openness where issues can be reported without fear, and ensuring personnel managing automated systems are rigorously trained and accredited [1].

The Federal Aviation Administration (FAA) is already paving the way for AI safety. In July 2024, it introduced the "Roadmap for Artificial Intelligence Safety Assurance", followed by the "Safety Framework for Aircraft Automation" in September 2025. These documents lay out principles that healthcare organizations can adapt to create their own AI governance strategies [16]. One approach could involve forming dedicated AI Safety Boards - committees tasked with tracking incidents, investigating root causes, and implementing corrective actions. This mirrors the role of the National Transportation Safety Board (NTSB) in aviation and provides a blueprint for strengthening oversight in healthcare AI [1].

Organizational Governance and Oversight Structures

Aviation’s multi-layered governance model offers another valuable lesson for healthcare. To ensure safe and effective AI implementation, healthcare organizations should establish clear oversight structures. AI steering committees, for example, can bring together clinical leaders, IT experts, risk managers, and compliance officers to oversee AI deployment, monitor performance metrics, and ensure that systems align with patient safety goals.

Tools like Censinet RiskOps can centralize AI risk management by streamlining the review and approval of key findings. By involving Governance, Risk, and Compliance (GRC) teams, healthcare organizations can maintain continuous oversight and accountability throughout the AI lifecycle.

Creating a Non-Punitive Culture for AI Incidents

One of aviation’s most impactful lessons is its "just culture" - an approach that prioritizes system improvement over blame, except in cases of deliberate misconduct [17]. A prime example of this is NASA’s Aviation Safety Reporting System (ASRS). This confidential, voluntary program encourages pilots, air traffic controllers, and others to report incidents or near misses without fear of punishment, fostering transparency and learning [17].

Healthcare can adopt a similar mindset by creating a non-punitive reporting process for AI-related incidents. This process should document issues, identify recurring patterns, and drive improvements across the system. Tools like Censinet RiskOps support this by offering structured documentation and root-cause analysis, recording the context, timing, and impact of incidents. By encouraging open communication and focusing on learning rather than assigning blame, healthcare organizations can address potential safety concerns before they affect patients.
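The reporting process described above needs a consistent record format before patterns can be found. The following sketch shows one possible shape; the field names and category labels are assumptions for illustration and do not reflect the schema of ASRS or of any commercial tool.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AIIncidentReport:
    """One AI-related incident or near miss (hypothetical schema)."""

    occurred_at: datetime
    system: str    # which AI system was involved
    category: str  # e.g. "late_alert", "false_alarm"
    context: str   # free-text description of the situation
    impact: str    # e.g. "near_miss", "no_harm", "harm"
    # Deliberately no reporter field: the record describes the event,
    # not the person, in keeping with non-punitive reporting.


def recurring_patterns(reports, min_count=2):
    """Return (system, category) pairs reported at least `min_count` times."""
    counts = Counter((r.system, r.category) for r in reports)
    return {key: n for key, n in counts.items() if n >= min_count}


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    reports = [
        AIIncidentReport(now, "sepsis_model", "late_alert", "night shift", "near_miss"),
        AIIncidentReport(now, "sepsis_model", "late_alert", "ICU transfer", "no_harm"),
        AIIncidentReport(now, "triage_model", "false_alarm", "ED intake", "no_harm"),
    ]
    print(recurring_patterns(reports))
```

Grouping by system and category is the simplest possible pattern search; a safety board would likely add time windows and severity weighting on top.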

Conclusion

Drawing inspiration from aviation, healthcare AI stands at a critical juncture. Just as aviation became one of the safest industries through disciplined governance, rigorous oversight, and a commitment to learning, healthcare AI can follow a similar path to ensure safety and reliability in high-stakes environments.

One key lesson from aviation is the importance of accountability throughout the entire system lifecycle. As the FAA aptly puts it:

"The responsibility for systems to meet their requirements rests with the system designer and AI developer, not the AI itself" [5].

This principle highlights the need for clear accountability, paired with robust technical safeguards, to ensure that healthcare AI meets safety standards.

With 90% of organizations already using AI and 65% leveraging generative AI [18], the urgency for strong governance has never been greater. Healthcare leaders must act now to establish the frameworks, monitoring systems, and safety practices needed to protect patients as AI becomes a central part of clinical workflows.

Aviation’s safety record demonstrates that even the most complex automated systems can perform reliably in critical settings - but only with an unwavering focus on safety, transparency, and continuous improvement. For healthcare AI to succeed, it must embrace these same principles.

FAQs

How can healthcare AI systems adopt aviation-style fail-safe designs?

Healthcare AI systems can improve safety by borrowing key principles from aviation, such as redundancy, fail-safe mechanisms, and a proactive safety culture. For instance, backup systems can be implemented to manage unexpected failures, AI can be designed for gradual and controlled deployment, and real-time error detection and correction mechanisms can be integrated to minimize risks.

Equally important is fostering a culture of accountability. This involves encouraging open discussions about issues, learning from past mistakes through regulatory feedback, and offering thorough training for staff who work with AI systems. Together, these strategies build trust, enhance reliability, and help reduce risks in critical areas like healthcare.

What challenges arise when applying aviation safety principles to machine learning systems?

Adapting aviation safety principles to machine learning systems isn’t straightforward. One major hurdle is the lack of explainability in many machine learning models. This makes it difficult to ensure proper human oversight and accountability, as the reasoning behind AI decisions can be opaque. Unlike aviation systems, which follow predictable rules, machine learning models can exhibit unpredictable behavior due to their learning-based nature. This unpredictability complicates the creation of reliable fail-safe mechanisms.

Another challenge lies in developing rigorous safety assurance processes. Traditional standards don’t always align with the dynamic, ever-evolving characteristics of machine learning systems, requiring significant adjustments. On top of that, cybersecurity risks are a pressing issue. AI systems can be susceptible to malicious attacks, which raises concerns about their reliability and security. Lastly, the fast-changing landscape of regulatory standards adds yet another layer of complexity, especially in critical fields like healthcare, where precision and safety are paramount.

Why is continuous monitoring essential for ensuring the safety of AI systems in healthcare?

Continuous monitoring plays a key role in ensuring AI systems in healthcare remain safe and reliable. It allows for the detection and resolution of unexpected issues, helps systems adjust to changing conditions, and reduces risks - particularly in critical areas like patient care.

Drawing inspiration from aviation safety practices, such as incorporating redundancy and fail-safe mechanisms, healthcare organizations can build AI systems that are dependable and secure over time. This forward-thinking strategy reduces errors and strengthens accountability, safeguarding both patients and healthcare providers.
