AI Accountability: Building Governance Systems for Autonomous Decision-Making

Post Summary

AI in healthcare is transforming patient care but raises accountability challenges. When AI systems make errors, who is responsible? This article explains how healthcare organizations can build governance frameworks to ensure AI systems are safe, ethical, and compliant with regulations. Here's what you'll learn:

- AI Accountability Basics: Policies and standards to ensure AI safety, transparency, and compliance.

- Risks of Unchecked AI: Algorithmic bias, lack of transparency, and potential harm to patients.

- Governance Framework: Key elements like committees, policies, training, and audits.

- US Regulations: Evolving state laws and the need for tailored governance strategies.

- Cybersecurity and Risk Management: Addressing data breaches, model drift, and adversarial attacks.

- Human Oversight: Balancing AI autonomy with human intervention for high-risk decisions.

The goal? To create systems that protect patients, meet legal requirements, and build trust in AI-driven healthcare.

Building an AI Governance Framework

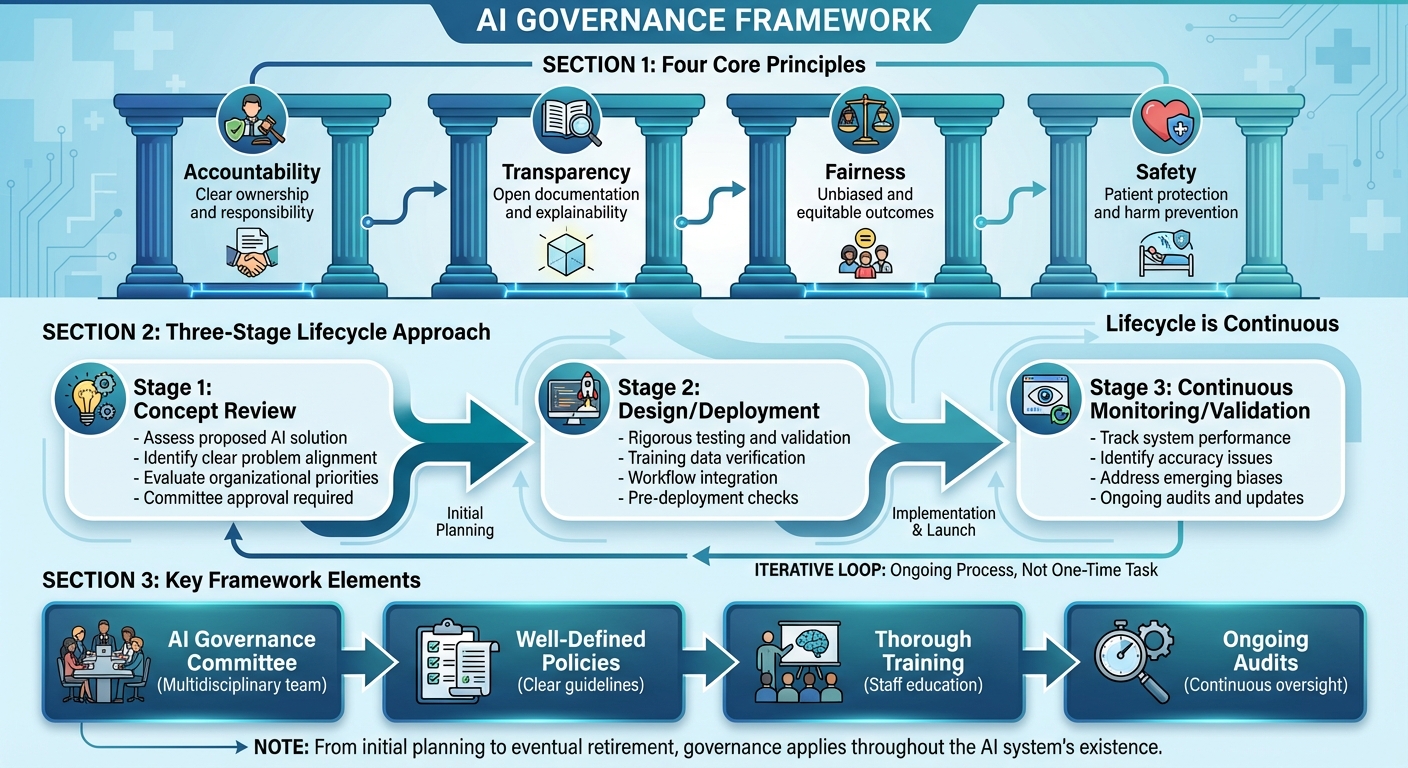

AI Governance Framework: Three-Stage Lifecycle and Four Core Principles

An effective AI governance framework in healthcare relies on several key elements: an AI governance committee, well-defined policies, thorough training, and ongoing audits [6]. Together, these components ensure AI systems operate safely, transparently, and in alignment with both organizational goals and regulatory expectations. The framework rests on four core principles: accountability, transparency, fairness, and safety [3].

A three-stage lifecycle - Concept Review, Design/Deployment, and Continuous Monitoring/Validation - gives this framework its structure [5]. Governance is therefore not a one-time task but an ongoing process that follows each AI system from initial planning to eventual retirement.

Defining Roles and Responsibilities

Strong leadership is critical for AI governance. Without clear executive accountability, AI initiatives can lack direction and resources [7]. A three-tiered governance model works well, with clinical executives offering strategic oversight, advisory councils providing specialized expertise, and front-line staff addressing operational needs.

For example, the AI governance committee can function as a subcommittee under an existing digital health committee, as seen with Trillium Health Partners in Canada in August 2025 [1]. This committee should include members from clinical leadership, IT, compliance, ethics, legal, and patient advocacy, so that accountability is shared across the organization rather than held by a single department. Individual departments handle operational concerns like clinical requirements, budgets, and workflow impacts, while the governance committee focuses on broader issues like safety, equity, efficacy, privacy, and regulatory compliance. Reviewing and updating the committee’s membership each year ensures the group maintains the necessary expertise [1].

Once roles are clearly defined, formal policies are necessary to turn these responsibilities into actionable steps.

Creating AI Governance Policies

AI governance policies must provide clear guidance on how systems are used, how protected health information (PHI) is managed, and what actions to take if something goes wrong [8]. These policies should outline who oversees AI systems, how harm is reported, and how issues are escalated when performance problems arise [6]. For example, they should establish thresholds that trigger human intervention, require documentation of AI-generated recommendations, and detail protocols for pausing or shutting down systems that exhibit problematic behavior.

Given the ever-changing regulatory landscape in the U.S., these policies need to be adaptable to comply with varying state and federal requirements while maintaining consistent internal standards. They serve as a guide throughout the AI lifecycle, ensuring accountability at every stage.

Managing AI Systems Throughout Their Lifecycle

AI governance must cover the entire lifecycle of a system - from its initial concept to deployment, ongoing monitoring, and eventual decommissioning. This approach ensures AI systems remain effective and safe, even as clinical practices and patient needs evolve.

Each stage of the lifecycle requires specific governance activities. During the Concept Review phase, the committee assesses whether a proposed AI solution addresses a clear problem and aligns with organizational priorities. The Design/Deployment phase involves rigorous testing, validation of training data, and smooth integration into existing workflows. Once deployed, Continuous Monitoring/Validation ensures the system’s performance is tracked, accuracy issues are identified, and any emerging biases are addressed. Tools like Censinet RiskOps™ help centralize governance efforts, enabling quick reviews of critical findings while reinforcing the framework’s core principles of accountability and transparency.
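The stages above, plus eventual decommissioning, can be treated as a small state machine in which every stage change needs a named approver. The sketch below is illustrative only: the `Stage` names mirror this article, but the allowed transitions and the `advance` function are assumptions, not part of any cited framework or product.

```python
from enum import Enum


class Stage(Enum):
    CONCEPT_REVIEW = "Concept Review"
    DESIGN_DEPLOYMENT = "Design/Deployment"
    MONITORING_VALIDATION = "Continuous Monitoring/Validation"
    DECOMMISSIONED = "Decommissioned"


# Hypothetical transition rules: a system may move forward, be sent back
# for rework, or be retired. Adjust these to match local policy.
ALLOWED_TRANSITIONS = {
    Stage.CONCEPT_REVIEW: {Stage.DESIGN_DEPLOYMENT, Stage.DECOMMISSIONED},
    Stage.DESIGN_DEPLOYMENT: {
        Stage.CONCEPT_REVIEW,
        Stage.MONITORING_VALIDATION,
        Stage.DECOMMISSIONED,
    },
    Stage.MONITORING_VALIDATION: {Stage.DESIGN_DEPLOYMENT, Stage.DECOMMISSIONED},
    Stage.DECOMMISSIONED: set(),
}


def advance(current: Stage, target: Stage, approved_by: str) -> Stage:
    """Return the new stage, or raise if the move is not permitted."""
    if not approved_by.strip():
        raise ValueError("every stage change needs a named approver")
    if target not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


stage = advance(Stage.CONCEPT_REVIEW, Stage.DESIGN_DEPLOYMENT, "AI governance committee")
print(stage.value)
```

Encoding the transitions explicitly makes it harder for a system to reach production without passing through Concept Review, and it leaves room to record who approved each step.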

Connecting Cybersecurity and Risk Management

The rise of healthcare AI brings a host of security and reliability risks, including data breaches, adversarial attacks, data poisoning, and model drift. These threats can jeopardize patient safety and the integrity of healthcare organizations. Unlike traditional software, an AI system's behavior depends on its training data and can shift as models are retrained or as real-world inputs change. Healthcare providers therefore need to extend their cybersecurity programs with AI-specific safeguards that tie back to the governance framework described above.

Identifying AI-Related Risks

AI in healthcare introduces various risks that demand attention. For instance, training and operating AI models often require access to vast amounts of protected health information (PHI), which raises concerns about data exposure. Adversarial attacks - where malicious inputs are designed to trick AI systems - could lead to harmful outcomes like misdiagnoses or improper treatment recommendations. Then there’s model drift, which happens when real-world data begins to differ from the data used to train the AI, potentially degrading its performance over time. Other concerns include poor data quality, vendor lock-in, and the misuse of AI, all of which introduce operational and ethical challenges. Addressing these risks effectively requires collaboration across various teams, including medical informatics, clinical leadership, legal, compliance, safety and quality, data science, bioethics, and patient advocacy.
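One common way to quantify drift in a single input feature is the Population Stability Index (PSI), which compares the binned distribution of training-era values with recent production values. The following is a minimal sketch assuming equal-width bins and non-empty inputs; the function name `population_stability_index`, the bin count, and the 0.25 alert level (a widely used rule of thumb, not a regulatory threshold) are illustrative choices.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a recent sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so out-of-range production values land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        eps = 1e-6  # keeps empty bins from producing log(0)
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic example: the recent sample is shifted toward higher values.
baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]

DRIFT_ALERT = 0.25  # assumed rule-of-thumb threshold
if population_stability_index(baseline, recent) > DRIFT_ALERT:
    print("drift alert: route to the governance committee for review")
```

A check like this only flags a change in inputs; deciding whether the change matters clinically still belongs to the multidisciplinary team described above.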

Once these risks are identified, structured frameworks can help mitigate them effectively.

Using Risk Assessment Frameworks

Risk assessment frameworks and guidance from the National Institute of Standards and Technology (NIST), such as its AI Risk Management Framework, and from the Health Sector Coordinating Council (HSCC) give healthcare organizations tools to identify, evaluate, and manage AI-related risks. These resources can be folded into broader Governance, Risk, and Compliance (GRC) strategies. The HSCC is working on 2026 guidance aimed at managing AI cybersecurity risks, which will include actionable playbooks for cyber operations, governance structures, and secure-by-design principles for medical devices [9].

In 2025, the U.S. Department of Health and Human Services (HHS) introduced an AI strategy that prioritizes governance, risk management, infrastructure, and workforce development. Acting Chief AI Officer Clark Minor highlighted the strategy’s goal: "harnessing AI to empower our workforce and drive innovation across the Department" [10]. Known as "OneHHS", this initiative promotes sharing AI solutions across divisions and mandates that HHS divisions implement minimum risk management practices for high-impact AI systems - such as bias mitigation, outcome monitoring, security, and human oversight - by April 3, 2026 [10].

A critical step in managing these risks is maintaining a comprehensive inventory of all AI systems. This inventory helps organizations understand how their systems function, what data they rely on, and their security implications [9]. It also allows teams to focus on high-risk systems and allocate resources effectively. To ensure compliance, oversight of AI systems should be integrated into broader GRC strategies, addressing data privacy, bias, and other potential vulnerabilities [11].
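As a rough illustration of what such an inventory might hold, the sketch below models each system as a record and orders the review queue so that high-risk systems touching PHI come first. The field names, the three risk tiers, and the `review_queue` helper are all assumptions made for this example, not a prescribed schema.

```python
from dataclasses import dataclass
from typing import List

# Assumed tier labels; lower number means reviewed earlier.
TIER_ORDER = {"high": 0, "moderate": 1, "low": 2}


@dataclass
class AISystemRecord:
    name: str
    purpose: str             # what the system is used for
    data_sources: List[str]  # what data it relies on
    uses_phi: bool           # whether it touches protected health information
    risk_tier: str           # "high", "moderate", or "low"
    owner: str               # accountable team or person


def review_queue(inventory: List[AISystemRecord]) -> List[AISystemRecord]:
    """Order systems by risk tier, then PHI use, then name."""
    return sorted(
        inventory,
        key=lambda r: (TIER_ORDER[r.risk_tier], not r.uses_phi, r.name),
    )


inventory = [
    AISystemRecord("scheduler", "books routine appointments",
                   ["calendar"], False, "low", "operations"),
    AISystemRecord("sepsis-alert", "flags possible sepsis",
                   ["vitals", "labs"], True, "high", "clinical informatics"),
    AISystemRecord("coding-assist", "suggests billing codes",
                   ["clinical notes"], True, "moderate", "revenue cycle"),
]

for record in review_queue(inventory):
    print(record.risk_tier, record.name, record.owner)
```

Even a simple structure like this answers the three questions raised above: what each system does, what data it depends on, and who is accountable for it.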

Technology plays a key role in making these processes more efficient.

Using Technology for Cybersecurity Governance

Technology platforms are essential for streamlining risk management and centralizing AI governance. For example, Censinet RiskOps™ acts as a central hub where organizations can manage AI-related policies, risks, and tasks, all while offering real-time insights into the security status of their AI systems. Its routing and orchestration features ensure that critical findings are automatically sent to the right stakeholders, enabling timely responses to pressing issues.

Another tool, Censinet AI™, speeds up risk assessments by allowing vendors to complete security questionnaires in seconds. It automatically summarizes vendor-provided evidence, captures critical product details, identifies fourth-party risks, and generates comprehensive risk reports. This system balances automation with human oversight, helping healthcare organizations manage risks efficiently without losing control.

The FDA is also embracing technology to enhance oversight. In December 2025, the agency launched a secure, agency-wide "agentic AI" platform. Available to all FDA employees, this platform supports complex tasks like managing regulatory schedules, assisting with pre-market product reviews, and automating parts of post-market surveillance. Importantly, it incorporates built-in human oversight and remains optional for employees [10].

These advancements showcase how technology can support robust cybersecurity governance while maintaining the human element that’s critical for responsible AI use.

Balancing AI Autonomy with Human Oversight

When it comes to cybersecurity governance, balancing AI autonomy with human oversight is about creating a partnership where technical capabilities and human judgment work seamlessly together.

For healthcare organizations, finding this balance is no small task. If AI systems operate with too much independence, there’s a risk of patient harm. On the flip side, too much oversight can bog down operations. The solution lies in tailoring the level of AI autonomy to match the risk associated with each decision.

Setting Autonomy Levels and Oversight Requirements

Not every decision made by AI carries the same weight. For instance, scheduling routine appointments is quite different from generating treatment recommendations. To address this, healthcare organizations should classify AI systems based on their risk levels. High-risk systems - like those involved in clinical decision-making - should undergo independent evaluations, require clear transparency measures, and have strong human oversight in place [12].

This classification helps determine the level of human involvement. For high-risk systems, you might need a human-in-the-loop setup, where every AI decision gets reviewed by a person. For moderate-risk scenarios, a human-on-the-loop approach might suffice, where AI operates independently, but humans monitor its performance continuously. For low-risk, administrative tasks, minimal oversight may be enough. The ultimate goal is to move away from rigid compliance checklists and instead adopt a more dynamic, integrated approach to oversight that blends seamlessly into everyday operations [12].
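The mapping from risk tier to oversight mode can be written down explicitly so it is applied the same way to every system. The sketch below is one hedged interpretation: the two classification questions in `classify` are simplifying assumptions for illustration, and a real policy would likely weigh more factors.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    MODERATE = "moderate"
    LOW = "low"


class Oversight(Enum):
    HUMAN_IN_THE_LOOP = "a person reviews every AI decision"
    HUMAN_ON_THE_LOOP = "AI runs independently under continuous monitoring"
    MINIMAL = "minimal oversight"


OVERSIGHT_BY_TIER = {
    RiskTier.HIGH: Oversight.HUMAN_IN_THE_LOOP,
    RiskTier.MODERATE: Oversight.HUMAN_ON_THE_LOOP,
    RiskTier.LOW: Oversight.MINIMAL,
}


def classify(informs_clinical_decision: bool, handles_phi: bool) -> RiskTier:
    """Assumed rule: clinical use is high risk, PHI use alone is moderate."""
    if informs_clinical_decision:
        return RiskTier.HIGH
    if handles_phi:
        return RiskTier.MODERATE
    return RiskTier.LOW


def required_oversight(informs_clinical_decision: bool, handles_phi: bool) -> Oversight:
    return OVERSIGHT_BY_TIER[classify(informs_clinical_decision, handles_phi)]


# A treatment-recommendation tool versus a routine scheduling tool.
print(required_oversight(True, True).name)
print(required_oversight(False, False).name)
```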

Setting Escalation and Decision Thresholds

Another critical piece of the puzzle is knowing when AI should defer to a human expert. Escalation protocols and decision thresholds act as safety nets to prevent errors like misdiagnoses or unsuitable treatments [13][4]. These thresholds can be based on factors such as the AI’s confidence level, the complexity of the clinical situation, or the potential impact on the patient.

For example, if an AI system encounters a scenario where its confidence is low or the case is too ambiguous, it should automatically escalate the decision to a human for review. This approach not only reduces the risks of the so-called "black box" problem but also ensures that crucial decisions always get the human validation they need [13][4]. These protocols provide a clear framework for effective oversight.
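An escalation rule of this kind might look like the following sketch. It checks the three factors named above (confidence, case complexity, and patient impact) and returns the reasons a case should go to a human. The `EscalationPolicy` defaults of 0.90 and 3 are placeholder values chosen for illustration, and the complexity score is a hypothetical input that an organization would need to define for itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EscalationPolicy:
    min_confidence: float = 0.90  # placeholder threshold
    max_complexity: int = 3       # placeholder score on a locally defined scale


def escalation_reasons(
    confidence: float,
    complexity: int,
    high_impact: bool,
    policy: EscalationPolicy = EscalationPolicy(),
) -> List[str]:
    """Return why a case needs human review; an empty list means it does not."""
    reasons = []
    if confidence < policy.min_confidence:
        reasons.append("low confidence")
    if complexity > policy.max_complexity:
        reasons.append("complex case")
    if high_impact:
        reasons.append("high patient impact")
    return reasons


reasons = escalation_reasons(confidence=0.72, complexity=2, high_impact=False)
if reasons:
    print("escalate to clinician:", ", ".join(reasons))
```

Returning the reasons rather than a bare yes/no gives the reviewing clinician context and leaves an audit trail of why the system deferred.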

Tools for Human Oversight

Technology plays a big role in scaling oversight efforts. Platforms like Censinet AI make it easier for healthcare organizations to manage cyber risks without compromising safety. By combining human oversight with automated processes, Censinet AI streamlines risk assessments while keeping human expertise at the heart of decision-making.

Censinet AI also supports collaboration by acting as an "air traffic control" system for AI governance. It routes critical findings and tasks to the appropriate Governance, Risk, and Compliance (GRC) teams for review and action. With real-time data displayed in an intuitive AI risk dashboard, Censinet RiskOps becomes the central hub for managing AI-related policies, risks, and tasks. This coordinated approach ensures that the right teams address the right issues at the right time, enabling continuous oversight, accountability, and effective governance across the organization.

Implementing AI Governance in Healthcare Organizations

Putting AI governance into practice in healthcare requires a structured approach that fits the way care is actually delivered. As of 2024, only 16% of healthcare organizations had established a system-wide AI governance framework [14]. The figure points to both the difficulty of the task and the opportunity for organizations willing to take the lead. Closing this gap starts with a clear, strategic roadmap.

Creating an AI Governance Roadmap

Building effective AI governance takes time and careful planning. The American Medical Association (AMA) has outlined an 8-step guide specifically designed to help healthcare systems create and implement governance frameworks [15]. This step-by-step approach ensures organizations can move from drafting policies to embedding AI oversight across departments.

A successful roadmap must address three core areas: People, Process, and Technology. Start by assembling a multidisciplinary team to lead governance efforts. This team should include clinical leaders, data scientists, and administrative decision-makers [16]. Then, define processes for validating AI systems, setting approval thresholds, and establishing protocols for continuous monitoring once systems are operational. It’s critical to remember that AI governance frameworks are not static - they require regular updates to stay effective [14].

Resource allocation is another key component. Governance efforts must balance the potential impact on patient care with the need to mitigate risks [5]. Prioritize AI projects based on their ability to improve care while aligning investments with overall organizational goals. Set realistic timelines that reflect the complexities of healthcare environments and the importance of stakeholder engagement at every level. This roadmap underscores the importance of accountability, transparency, and ethical oversight in managing AI-driven decision-making.

Connecting AI Governance with Existing Processes

AI oversight works best when integrated into existing risk and compliance workflows. The U.S. Department of Health and Human Services (HHS) emphasizes embedding AI requirements into established processes, such as the Authority to Operate (ATO) review, to ensure continuous compliance monitoring after deployment [10].

For example, AI validation can be incorporated into the existing clinical technology approval process, with decision thresholds aligned to current workflows [2]. This approach minimizes disruptions and makes adoption smoother by avoiding the need for entirely new procedures.

Platforms like Censinet RiskOps can further streamline this integration by centralizing cybersecurity and AI governance efforts. Consolidating governance within existing risk management systems prevents the creation of siloed processes, which can complicate operations and reduce efficiency.

Measuring and Improving Governance Performance

For governance to be effective, it must be regularly measured and refined. Ongoing monitoring and feedback from clinicians are essential to identify risks and adjust governance practices as needed [16]. Focus on metrics that reflect patient outcomes and clinical relevance rather than technical performance alone [2].

Audits play a vital role in this process, validating both AI performance and the effectiveness of governance practices. Real-world insights gathered from audits often reveal issues that technical metrics alone might overlook. As AI technology and clinical needs evolve, governance practices must adapt accordingly.

AI governance platforms offer centralized tools for managing the entire AI lifecycle, including monitoring, validation, and compliance [17][18]. With real-time data displayed in intuitive dashboards, organizations can track performance across multiple AI systems, identify trends, and benchmark progress. These tools also make it easier to demonstrate accountability to regulators and stakeholders, ensuring that governance frameworks remain robust and effective over time.

Conclusion: Safe and Accountable AI in Healthcare

AI governance is the backbone of using AI responsibly in healthcare, ensuring patient safety and preserving trust. The principles outlined earlier should guide every stage - development, deployment, and ongoing oversight - to make AI a reliable tool in healthcare settings [3].

When left unchecked, AI systems can lead to serious consequences, such as biased diagnoses, inaccurate outputs from generative AI, and even HIPAA violations. These risks not only jeopardize patient safety but also undermine trust among clinicians and patients [3]. With 950 FDA-authorized AI-enabled medical devices as of August 2024 and a surge in AI research publications, the rapid adoption of AI calls for immediate and decisive action [1].

Healthcare organizations face a distinctive challenge: the lack of consistent federal regulations means the responsibility for determining AI's safety and effectiveness falls on clinicians, IT professionals, and leadership teams [2]. To address this, organizations must establish robust internal governance systems. This includes forming multidisciplinary teams, integrating AI oversight into risk management processes, and keeping an up-to-date inventory of AI systems [2].

Shifting the focus from technical performance metrics to real-world patient outcomes is critical. Organizations should set clear standards for acceptable benefits and risks, implement continuous monitoring after deployment, and ensure accountability at all levels of leadership [2][7]. Transparency is key - documenting each AI model’s purpose, input data, decisions, and outcomes helps ensure clinical safety and regulatory compliance.
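The documentation described above could be captured as one structured record per AI recommendation. The sketch below is a minimal example using only the Python standard library; the `DecisionRecord` fields are assumptions chosen to match the four items named in the text (purpose, input data, decision, outcome), and the input is stored as a de-identified summary rather than raw PHI.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


def _utc_now() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass
class DecisionRecord:
    model_name: str
    model_purpose: str
    input_summary: str                 # de-identified description of the input
    recommendation: str                # what the model suggested
    reviewed_by: Optional[str] = None  # clinician who accepted or overrode it
    outcome: Optional[str] = None      # filled in once the result is known
    recorded_at: str = field(default_factory=_utc_now)


def to_log_line(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line for an append-only audit log."""
    return json.dumps(asdict(record), sort_keys=True)


record = DecisionRecord(
    model_name="example-triage-model",  # hypothetical model name
    model_purpose="prioritize imaging studies for radiologist review",
    input_summary="chest X-ray, adult, no prior studies",
    recommendation="flag for urgent review",
    reviewed_by="radiologist on call",
)
print(to_log_line(record))
```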

The future of AI in healthcare depends on a steadfast commitment to strong governance. As highlighted throughout this article, scalable and well-structured governance frameworks are essential for managing AI effectively. Organizations that take proactive steps now will not only mitigate risks but also position themselves as pioneers of responsible AI use in healthcare.

FAQs

What steps can healthcare organizations take to keep AI systems compliant with changing regulations?

Healthcare organizations can stay on top of compliance by creating strong governance frameworks designed to keep pace with regulations like HIPAA and FDA guidelines. These frameworks should focus on regular risk assessments, clear accountability, and continuous monitoring to ensure AI systems operate within ethical and legal boundaries.

Some practical strategies include:

- Bringing stakeholders into the conversation to address concerns and build trust.

- Establishing internal policies for data privacy, reducing bias, and ensuring transparency.

- Performing regular audits and keeping up with regulatory updates.

By focusing on patient outcomes and developing oversight processes that can grow with the organization, healthcare providers can not only manage risks but also encourage innovation in AI-powered healthcare solutions.

How can we reduce algorithmic bias in AI systems used in healthcare?

To address algorithmic bias in healthcare AI systems, taking deliberate and thoughtful actions is key. Start by performing regular bias audits to spot and correct any imbalances in how decisions are made. Ensure the AI models are trained on diverse and representative datasets so they accurately reflect the communities they are designed to serve. Additionally, employing explainable AI methods can make the decision-making process more transparent and easier for everyone to grasp.

Involving a wide range of stakeholders, such as clinicians, data scientists, and patients, can help identify overlooked issues and ensure ethical concerns are thoroughly considered. Lastly, maintain human oversight at every stage of the AI's lifecycle to validate its results and step in when adjustments are needed. These practices are essential for fostering trust and promoting fairness in healthcare AI systems.
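A bias audit can start with something as simple as comparing a model's true positive rate across patient groups. The sketch below shows that one check on synthetic data; the function names and the choice of true positive rate as the metric are assumptions for illustration, and a real audit would look at several metrics and far larger samples.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record: (patient group, condition actually present, model flagged it)
Record = Tuple[str, bool, bool]


def true_positive_rates(records: Iterable[Record]) -> Dict[str, float]:
    """Share of actual positive cases the model caught, per group."""
    positives: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                hits[group] += 1
    return {group: hits[group] / positives[group] for group in positives}


def tpr_gap(records: Iterable[Record]) -> float:
    """Difference between the best- and worst-served groups."""
    rates = true_positive_rates(records)
    return max(rates.values()) - min(rates.values())


# Synthetic audit sample: the model misses more true cases in group B.
sample = [
    ("A", True, True), ("A", True, True), ("A", False, False),
    ("B", True, True), ("B", True, False), ("B", False, False),
]
print(true_positive_rates(sample))
print(tpr_gap(sample))
```

A large gap does not by itself prove unfair treatment, but it is a concrete signal that the clinicians, data scientists, and patient representatives mentioned above should investigate.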

How do AI governance frameworks help protect healthcare systems from cybersecurity threats?

AI governance frameworks play a critical role in protecting healthcare systems from cybersecurity threats. They set expectations for real-time threat detection, predictive analytics, and continuous monitoring of networks, devices, and user activity. Under such a framework, security teams work to uncover vulnerabilities, enforce strict access controls, and replace outdated technologies, reducing risks like data breaches and ransomware attacks.

Regular AI audits are essential for spotting anomalies early, ensuring quick responses to potential threats. Following established standards, such as guidelines from NIST and HHS, helps maintain compliance and transparency. By addressing both technical risks and ethical concerns, these frameworks not only strengthen cybersecurity but also help maintain trust in the integrity of healthcare systems.