AI in Cloud Anomaly Detection for Healthcare

Post Summary

AI is transforming how healthcare organizations protect sensitive data in cloud environments. With the rise of electronic health records (EHRs), telehealth, and connected medical devices, healthcare faces growing cybersecurity risks. Traditional security tools often miss advanced threats or generate too many false positives. AI-driven anomaly detection addresses both problems by identifying unusual behavior in real time, such as unexpected data access, login anomalies, or abnormal device activity. Here's why it matters:

- Improved Threat Detection: AI models, like Isolation Forests and LSTMs, analyze patterns in user behavior, network traffic, and device telemetry to flag potential breaches.

- Compliance Support: Helps meet HIPAA requirements by monitoring access to protected health information (PHI) and detecting unauthorized activities.

- Operational Protection: Detects threats that could disrupt clinical systems, such as ransomware or compromised IoMT devices.

- Reduced False Positives: Hybrid AI models combine supervised and unsupervised learning to minimize unnecessary alerts, allowing teams to focus on real risks.

AI anomaly detection isn't just about technology - it requires high-quality data, tailored models, and strong governance to succeed. Healthcare organizations must integrate these tools into broader risk management frameworks to safeguard patient data and ensure uninterrupted care.

AI Techniques for Cloud Anomaly Detection

Healthcare organizations rely on a mix of supervised, unsupervised, and hybrid machine learning techniques to spot anomalies in cloud environments. Each approach has its own strengths. Supervised learning excels when labeled data is available, while unsupervised methods shine against unknown or emerging threats.

Supervised and Unsupervised Learning

Supervised learning involves training models like Support Vector Machines (SVMs), Random Forests, and Gradient Boosting on labeled datasets that include examples of both normal and malicious behavior. For instance, SVMs have shown impressive accuracy in identifying unusual patterns in Electronic Health Record (EHR) access, such as unauthorized attempts to view sensitive patient data[1].

Unsupervised learning, on the other hand, is indispensable when labeled data is limited or when dealing with new, unforeseen threats. Techniques like Isolation Forest, Local Outlier Factor (LOF), K-Means clustering, and autoencoders analyze patterns in cloud logs, API calls, and network traffic to identify deviations from the norm. These methods can differentiate routine activities - like a nurse accessing a patient portal - from suspicious behaviors, such as a lab technician downloading large volumes of imaging data in the middle of the night. Research suggests that combining Isolation Forest with SVM can enhance detection accuracy while minimizing false positives[1].
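The core idea behind Isolation Forest fits in a few dozen lines of standard-library Python. Each tree picks a random feature and a random split value. Points that become isolated after fewer splits receive higher anomaly scores. This is a minimal, illustrative implementation of that usual procedure, not a production detector. The session features (gigabytes downloaded, hour of day) and the sample data are hypothetical. In practice, teams would more likely use a maintained implementation such as scikit-learn's `IsolationForest`.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def avg_path_length(n):
    """Expected path length of an unsuccessful BST search over n points, c(n)."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Recursively split on a random feature at a random value until points are isolated."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:  # cannot split on a constant feature
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    return {
        "dim": dim,
        "split": split,
        "left": build_tree([p for p in points if p[dim] < split], depth + 1, max_depth, rng),
        "right": build_tree([p for p in points if p[dim] >= split], depth + 1, max_depth, rng),
    }


def path_length(point, node, depth=0):
    """Depth at which `point` reaches a leaf, plus c(size) for leaves left unresolved."""
    if "split" not in node:
        return depth + avg_path_length(node["size"])
    child = node["left"] if point[node["dim"]] < node["split"] else node["right"]
    return path_length(point, child, depth + 1)


def isolation_forest_scores(points, n_trees=100, sample_size=256, seed=0):
    """Return s(x) = 2 ** (-E[h(x)] / c(psi)); values near 1 are anomalous."""
    rng = random.Random(seed)
    psi = min(sample_size, len(points))  # needs at least 2 points
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(points, psi), 0, max_depth, rng) for _ in range(n_trees)]
    norm = avg_path_length(psi)
    return [2 ** (-sum(path_length(p, t) for t in trees) / n_trees / norm) for p in points]


# Hypothetical imaging-archive sessions: (GB downloaded, hour of day).
sessions = [(0.2, 9), (0.3, 10), (0.1, 14), (0.25, 11), (0.15, 16),
            (0.3, 13), (0.2, 15), (0.1, 10), (48.0, 3)]
scores = isolation_forest_scores(sessions)
print(max(range(len(sessions)), key=scores.__getitem__))  # index of the most anomalous session
```

The simulated night-time bulk download sits far from every other session. Random splits therefore tend to isolate it within one or two cuts, so it receives the highest score.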

Because cloud data is constantly changing, time-series and sequence models are also critical for capturing these evolving patterns.

Time-Series and Sequence Models

Cloud environments generate a continuous stream of data, from login attempts and API calls to system metrics and device telemetry. Time-series and sequence models, such as Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and temporal Convolutional Neural Networks (CNNs), are designed to track these sequential data points over time. For example, LSTMs can learn the typical sequence of user actions - like logging in, viewing EHRs, and downloading files - and flag unusual patterns, such as a sudden burst of bulk exports paired with configuration changes.
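A full LSTM does not fit in a short example, so this sketch swaps in a much simpler technique: a first-order Markov chain over user actions. It illustrates the same principle. The model learns which action normally follows which, then scores new sessions by how likely their transitions are. The action names and training sessions are hypothetical. Unlike an LSTM, this model only looks one step back.

```python
import math
from collections import Counter, defaultdict

START, END = "<start>", "<end>"


def train_transitions(sessions):
    """Count action-to-action transitions across known-good user sessions."""
    counts = defaultdict(Counter)
    for actions in sessions:
        path = [START] + actions + [END]
        for prev, nxt in zip(path, path[1:]):
            counts[prev][nxt] += 1
    return counts


def mean_log_likelihood(actions, counts, vocab_size, alpha=1.0):
    """Average log P(next | prev) with add-alpha smoothing; lower means more unusual."""
    path = [START] + actions + [END]
    total = 0.0
    for prev, nxt in zip(path, path[1:]):
        row = counts.get(prev, Counter())  # .get avoids inserting unseen states
        total += math.log((row[nxt] + alpha) / (sum(row.values()) + alpha * vocab_size))
    return total / (len(path) - 1)


normal = [
    ["login", "view_ehr", "view_ehr", "download_file", "logout"],
    ["login", "view_ehr", "logout"],
    ["login", "view_ehr", "download_file", "logout"],
]
model = train_transitions(normal)
vocab = {a for s in normal for a in s} | {"bulk_export", "config_change", END}
typical = mean_log_likelihood(["login", "view_ehr", "download_file", "logout"], model, len(vocab))
burst = mean_log_likelihood(["login", "bulk_export", "config_change", "bulk_export"], model, len(vocab))
print(f"typical={typical:.2f} burst={burst:.2f}")
```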

In Internet of Medical Things (IoMT) settings, LSTM-based models are well suited to spotting compromised devices. They can detect anomalies such as a device initiating unexpected outbound connections or transmitting data at odd hours. The same models can also monitor system performance metrics - CPU usage, memory consumption, and network throughput - to give early warning of disruptions that could affect patient care.
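For continuous device telemetry, a lightweight statistical baseline is often a useful first layer alongside a sequence model. This sketch uses an exponentially weighted moving average (EWMA) of outbound traffic in place of an LSTM. The telemetry values, smoothing factor, threshold, and warm-up length are all illustrative assumptions.

```python
import math


def ewma_anomalies(values, alpha=0.2, threshold=4.0, warmup=5):
    """Return indexes whose deviation from the EWMA baseline exceeds `threshold` std devs."""
    mean, var = values[0], 0.0
    flagged = []
    for i, x in enumerate(values[1:], start=1):
        std = math.sqrt(var)
        if i >= warmup and std > 0 and abs(x - mean) / std > threshold:
            flagged.append(i)
            continue  # keep anomalous points out of the baseline
        diff = x - mean
        mean += alpha * diff
        var = (1 - alpha) * (var + alpha * diff * diff)  # incremental EWMA variance
    return flagged


# Hypothetical outbound KB per 5-minute interval from one infusion pump.
outbound_kb = [12, 11, 13, 12, 12, 11, 13, 12, 950, 12, 11]
print(ewma_anomalies(outbound_kb))  # [8]
```

Skipping flagged points keeps a sustained exfiltration from quickly becoming the new normal. The trade-off is that a legitimate, permanent change in device behavior keeps alerting until the baseline is reset.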

Reducing False Positives with Hybrid Models

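Hybrid models combine complementary techniques, such as static and sequential analysis or unsupervised and supervised learning, to improve detection accuracy and reduce unnecessary alerts. The most common pattern pairs an unsupervised detector, which casts a wide net for unusual activity, with a supervised classifier that filters those candidates using knowledge of past incidents. This helps healthcare IT and security teams focus on genuine threats without being overwhelmed by false positives.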

For instance, an unsupervised method like Isolation Forest might assign anomaly scores to EHR access sessions. A supervised classifier, such as an SVM trained on past incidents, then analyzes these flagged sessions to distinguish between benign outliers - like a clinician working across multiple units during an emergency - and actual malicious activity. This approach not only sharpens detection precision but also improves metrics like specificity and F1 scores, ensuring that critical threats are identified without overloading teams with irrelevant alerts.
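The two-stage triage described above can be sketched as follows. To keep it short and dependency-free, this version makes two assumptions. First, stage-one anomaly scores in [0, 1] arrive from an upstream unsupervised model such as Isolation Forest. Second, a k-nearest-neighbors vote stands in for the SVM in stage two. The feature encoding, threshold, and labeled history are hypothetical.

```python
import math
from collections import Counter


def knn_label(history, features, k=3):
    """Majority label among the k labeled past sessions closest to `features`."""
    nearest = sorted(history, key=lambda item: math.dist(item[0], features))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def triage(sessions, history, score_threshold=0.6, k=3):
    """Stage 1: pass only high anomaly scores. Stage 2: classify those with past incidents."""
    decisions = {}
    for session_id, anomaly_score, features in sessions:
        if anomaly_score < score_threshold:
            decisions[session_id] = "normal"
        else:
            decisions[session_id] = knn_label(history, features, k)
    return decisions


# Hypothetical features: (after_hours, emergency_declared, vip_record_access, units_visited / 10)
history = [
    ((1, 1, 0, 0.5), "benign_outlier"),
    ((1, 1, 0, 0.4), "benign_outlier"),
    ((0, 1, 0, 0.6), "benign_outlier"),
    ((1, 0, 1, 0.1), "malicious"),
    ((1, 0, 1, 0.2), "malicious"),
    ((1, 0, 0, 0.1), "malicious"),
]
sessions = [
    ("s1", 0.30, (0, 0, 0, 0.1)),
    ("s2", 0.80, (1, 1, 0, 0.5)),  # clinician covering several units in an emergency
    ("s3", 0.90, (1, 0, 1, 0.1)),  # after-hours VIP chart access, no emergency
]
print(triage(sessions, history))
```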

AI Use Cases in Healthcare Cloud Anomaly Detection

Securing Cloud-Hosted EHR and Clinical Applications

Cloud-hosted Electronic Health Records (EHR) and clinical applications are prime targets for cyberattacks, whether from external hackers or internal threats. AI-powered anomaly detection plays a critical role in spotting unauthorized access, data leaks, and risky misconfigurations before they spiral into larger problems.

Unsupervised machine learning models, such as Isolation Forest and Local Outlier Factor, are particularly effective when there's limited labeled attack data. These models establish a baseline of typical user behavior - like usual login times, devices used, number of records accessed, and standard clinical workflows. Any significant deviation from this baseline raises a red flag. For instance, if a nurse who typically accesses 20–30 patient records per shift suddenly queries hundreds of unrelated records across multiple facilities, the system assigns a high anomaly score [1].
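Local Outlier Factor, the other technique named here, compares each point's local density with that of its neighbors. This is a minimal standard-library implementation applied to hypothetical per-shift features for a single nurse: records accessed and distinct facilities touched. Real deployments would scale features and use many more of them, or rely on a library such as scikit-learn's `LocalOutlierFactor`.

```python
import math


def lof_scores(points, k=3):
    """Local Outlier Factor: about 1 for points as dense as their neighbors, much larger for outliers."""
    n = len(points)
    neighbors, k_dist = [], []
    for i in range(n):
        dists = sorted((math.dist(points[i], points[j]), j) for j in range(n) if j != i)
        kd = dists[k - 1][0]  # distance to the k-th nearest neighbor
        k_dist.append(kd)
        neighbors.append([j for d, j in dists if d <= kd])  # ties included
    lrd = []  # local reachability density
    for i in range(n):
        reach = [max(k_dist[j], math.dist(points[i], points[j])) for j in neighbors[i]]
        lrd.append(1.0 / max(sum(reach) / len(reach), 1e-12))
    return [sum(lrd[j] for j in neighbors[i]) / (len(neighbors[i]) * lrd[i]) for i in range(n)]


# Hypothetical shifts for one nurse: (records accessed, distinct facilities touched).
shifts = [(22, 1), (25, 1), (28, 1), (30, 1), (24, 1), (27, 2), (21, 1), (26, 1), (350, 6)]
scores = lof_scores(shifts)
print([round(s, 1) for s in scores])
```

Scores near 1 mean a shift looks like its neighbors. The simulated 350-record, six-facility shift lands far above that.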

Research shows that combining unsupervised methods with supervised classifiers can reduce false positives and identify anomalies that clustering methods alone might miss [1]. These anomalies could include unexpected login locations, sudden spikes in record exports, access to sensitive data outside of normal clinical workflows, or unapproved changes to cloud storage or access management policies.

Once anomalies are detected, healthcare organizations can respond quickly with automated actions such as requiring step-up multi-factor authentication (MFA), temporarily suspending sessions, or initiating manual reviews. These measures align with HIPAA security standards and help avoid costly breaches, regulatory fines, and reputational damage. The same detect-and-respond pattern extends to the patient-facing platforms and connected devices covered next.
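A graduated response policy like this might look like the following sketch. The score scale and thresholds are illustrative assumptions, not recommended values. So is the rule that suspension applies only to PHI-touching sessions. Each organization would tune these with clinical and compliance input.

```python
from enum import Enum


class Response(Enum):
    ALLOW = "allow"
    STEP_UP_MFA = "step_up_mfa"
    SUSPEND_AND_REVIEW = "suspend_session_and_open_manual_review"


def choose_response(anomaly_score, touches_phi, mfa_threshold=0.6, suspend_threshold=0.85):
    """Map an anomaly score in [0, 1] to a graduated response (thresholds are illustrative)."""
    if anomaly_score >= suspend_threshold and touches_phi:
        return Response.SUSPEND_AND_REVIEW
    if anomaly_score >= mfa_threshold:
        return Response.STEP_UP_MFA
    return Response.ALLOW


print(choose_response(0.9, touches_phi=True))  # Response.SUSPEND_AND_REVIEW
print(choose_response(0.7, touches_phi=True))  # Response.STEP_UP_MFA
```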

Monitoring Telehealth and Patient Portals

AI isn't just protecting clinical applications - it’s also safeguarding platforms used directly by patients. Telehealth services and patient portals are frequent targets for attacks like credential stuffing, account takeovers, and bot-driven exploits. By analyzing session behavior - such as login speed, device fingerprints, and the reputation of IP addresses - AI can detect unusual patterns that may signal malicious activity [2].

Time-series models, which track activities across multiple accounts, are particularly useful in spotting coordinated attacks. For example, a sudden surge of login attempts from a single IP range or repetitive, automated behaviors could indicate a credential-stuffing campaign. Similarly, AI can differentiate between normal patient activity and suspicious actions, such as mass downloads of visit summaries or abrupt changes to account details, which might point to an account takeover. When such anomalies are flagged, adaptive measures like multi-factor authentication, CAPTCHA challenges, IP rate-limiting, or temporary restrictions on sensitive functions can be deployed to mitigate risks.
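Detecting a credential-stuffing campaign means looking across accounts rather than at one user. This sketch groups failed logins by /24 source range. It flags a range when that range targets many distinct accounts inside a sliding time window. The window, account threshold, prefix length, and IPv4-only grouping are assumptions for illustration. A real system would also weigh device fingerprints and IP reputation.

```python
import ipaddress
from collections import defaultdict
from datetime import datetime, timedelta


def stuffing_suspects(events, window=timedelta(minutes=10), min_accounts=20, prefix=24):
    """Flag IPv4 source ranges whose failed logins hit many distinct accounts within `window`.

    events: iterable of (timestamp, source_ip, username, succeeded)
    """
    failures = defaultdict(list)  # network -> [(timestamp, username), ...]
    for ts, ip, user, succeeded in events:
        if not succeeded:
            net = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
            failures[net].append((ts, user))
    suspects = set()
    for net, attempts in failures.items():
        attempts.sort()
        start = 0
        for end in range(len(attempts)):
            # Advance the window's left edge until it spans at most `window`.
            while attempts[end][0] - attempts[start][0] > window:
                start += 1
            if len({user for _, user in attempts[start:end + 1]}) >= min_accounts:
                suspects.add(net)
                break
    return suspects


base = datetime(2024, 1, 1, 2, 0)
events = [(base + timedelta(seconds=10 * i), f"203.0.113.{i}", f"patient{i}", False) for i in range(25)]
events += [(base + timedelta(minutes=i), "198.51.100.5", "alice", False) for i in range(3)]
print(stuffing_suspects(events))  # {IPv4Network('203.0.113.0/24')}
```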

Protecting IoMT and Cloud-Connected Clinical Systems

AI’s capabilities extend to safeguarding the Internet of Medical Things (IoMT) and cloud-connected clinical systems, which are vital for patient care and operational efficiency. IoMT devices - such as vital-sign monitors, infusion pumps, imaging equipment, and ventilators - along with systems like PACS and laboratory information systems, continuously produce telemetry data. AI models analyze this data to detect both security threats and operational issues [3].

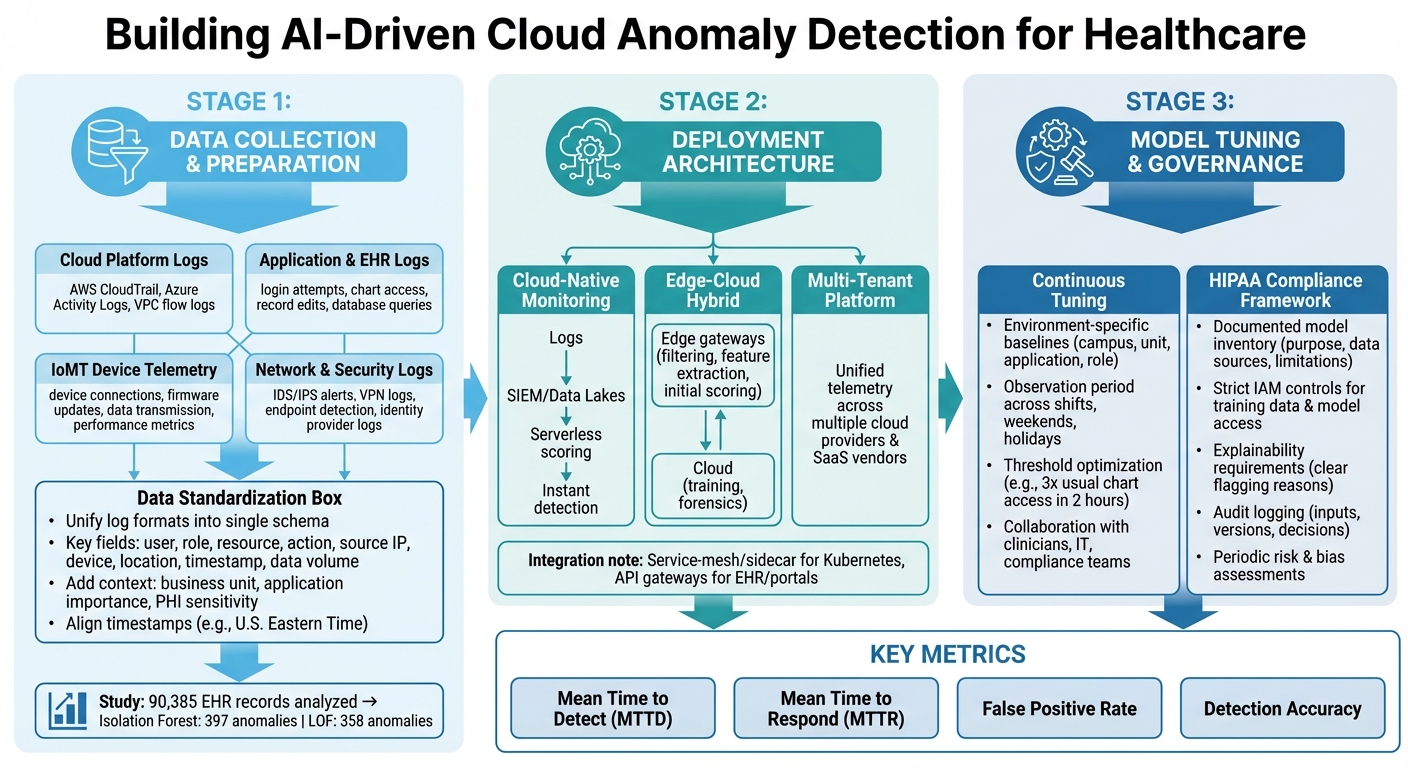

Building AI-Driven Cloud Anomaly Detection for Healthcare

AI Anomaly Detection Implementation Framework for Healthcare Cloud Security

Data Sources Required for AI Models

To detect anomalies effectively, healthcare organizations need to pull data from a variety of sources and standardize it. Cloud platform logs such as AWS CloudTrail and Azure Activity Logs record API and management activity, while VPC flow logs capture network traffic. Together they show who accessed resources holding protected health information (PHI), from where, and how often [1]. Application and EHR logs document login attempts, chart access, record edits, and database queries, and can expose patterns that signal insider threats or compromised accounts [1].

IoMT (Internet of Medical Things) devices add another layer of data. Logs from device connections, firmware updates, data transmission, and performance metrics can help spot unusual communication with cloud endpoints or deviations from typical device behavior [3]. Network and security logs - such as IDS/IPS alerts, VPN logs, endpoint detection telemetry, and identity provider logs - further support anomaly detection by correlating user, device, and network activity into higher-confidence incidents [1].

AI models need this data to be both standardized and enriched. Healthcare teams should unify diverse log formats into a single schema that captures key details like user, role, resource, action, source IP, device, location, timestamp, and data volume [1]. Adding context - such as the business unit, application importance, and PHI sensitivity - improves anomaly scoring accuracy. Timestamps should be normalized to one reference clock and then converted to each facility's local time (for example, U.S. Eastern Time for an East Coast hospital). This lets models accurately reflect workflows, like a clinician’s typical weekday morning routine [1].
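Here is a minimal sketch of that normalization step for a single log source. The raw EHR audit row format, the lookup tables, and the facility names are hypothetical. The sketch stores every timestamp in UTC and derives a facility-local hour for shift-aware features. Fixed UTC offsets keep the example dependency-free but ignore daylight saving time. A production pipeline would more likely use `zoneinfo` with named time zones.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AccessEvent:
    """Unified schema shared by every log source feeding the models."""
    user: str
    role: str
    resource: str
    action: str
    source_ip: str
    device: str
    facility: str
    timestamp_utc: datetime
    bytes_out: int
    phi_sensitivity: str  # enrichment from a (hypothetical) data catalog
    local_hour: int       # facility-local hour, for shift-aware features


def normalize_ehr_row(raw, user_roles, facility_utc_offsets, phi_catalog):
    """Map one hypothetical EHR audit row onto AccessEvent.

    Timestamps must carry an explicit offset or a trailing 'Z'.
    """
    ts = datetime.fromisoformat(raw["time"].replace("Z", "+00:00")).astimezone(timezone.utc)
    local = ts.astimezone(timezone(timedelta(hours=facility_utc_offsets[raw["facility"]])))
    return AccessEvent(
        user=raw["user_id"],
        role=user_roles.get(raw["user_id"], "unknown"),
        resource=raw["record_type"],
        action=raw["op"],
        source_ip=raw["ip"],
        device=raw.get("workstation", "unknown"),
        facility=raw["facility"],
        timestamp_utc=ts,
        bytes_out=int(raw.get("bytes", 0)),
        phi_sensitivity=phi_catalog.get(raw["record_type"], "unclassified"),
        local_hour=local.hour,
    )


roles = {"u1": "rn", "u2": "rn"}
offsets = {"BOS": -5, "DEN": -7}  # fixed winter offsets; ignores daylight saving time
catalog = {"chart": "high"}
a = normalize_ehr_row({"time": "2024-01-15T14:00:00Z", "facility": "BOS", "user_id": "u1",
                       "record_type": "chart", "op": "view", "ip": "10.0.0.5"}, roles, offsets, catalog)
b = normalize_ehr_row({"time": "2024-01-15T16:00:00Z", "facility": "DEN", "user_id": "u2",
                       "record_type": "chart", "op": "view", "ip": "10.1.0.9"}, roles, offsets, catalog)
print(a.local_hour, b.local_hour)  # 9 9
```

Both chart views map to 9 a.m. local time even though they occurred two hours apart in UTC. That is what lets one model learn a shared notion of the morning routine across facilities.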

As an illustration of scale, a study at a UK hospital analyzing 90,385 EHR access records found that Isolation Forest flagged 397 anomalies and LOF flagged 358 [1]. That is roughly 0.44% and 0.40% of all records. Flagged anomalies are rare compared to routine operations, so careful data preparation matters. Even a small false-positive rate across tens of thousands of records can bury the few real signals and overwhelm security teams.

Once the data is prepared, the next step is deploying architectures that enable fast and effective threat detection.

Deployment Strategies and Architectures

In a cloud-native monitoring approach, logs are streamed into centralized systems such as SIEMs or data lakes, where serverless functions can score events in near real time [1]. This setup provides consistent visibility across healthcare systems, including EHRs, imaging archives, and third-party clinical applications.

For IoMT devices and critical systems, edge-cloud hybrid architectures are often the best fit. Lightweight agents or gateways at the hospital network edge handle tasks like filtering, feature extraction, and even initial anomaly scoring on device traffic [3]. This reduces bandwidth usage and allows for near-instant detection, which is crucial for ICU monitoring or connected medical devices. Meanwhile, more resource-intensive processes like training and forensics occur in the cloud. The edge focuses on immediate threats, while the cloud provides a broader, more comprehensive view.
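The edge-cloud split can be sketched as an edge gateway that does two things. It condenses raw device flows into compact per-device features for batching to the cloud. It also forwards anything unexpected immediately. The device names, destinations, and allowlist approach are hypothetical simplifications. A real gateway would also perform initial model scoring.

```python
def edge_process(flows, allowlist):
    """Aggregate raw flows into per-device features and flag off-allowlist destinations.

    flows: iterable of (device_id, destination, bytes_sent)
    allowlist: {device_id: set of expected destinations}
    Returns (summaries to batch to the cloud, urgent alerts to send immediately).
    """
    features, urgent = {}, []
    for device, dest, nbytes in flows:
        f = features.setdefault(device, {"flows": 0, "bytes": 0, "dests": set()})
        f["flows"] += 1
        f["bytes"] += nbytes
        f["dests"].add(dest)
        if dest not in allowlist.get(device, set()):
            urgent.append((device, dest))
    summaries = {
        device: {"flows": f["flows"], "bytes": f["bytes"], "distinct_dests": len(f["dests"])}
        for device, f in features.items()
    }
    return summaries, urgent


allow = {"pump-07": {"telemetry.vendor.example:443"}, "monitor-12": {"pacs.hospital.example:104"}}
flows = [
    ("pump-07", "telemetry.vendor.example:443", 1200),
    ("pump-07", "198.51.100.23:443", 50000),
    ("monitor-12", "pacs.hospital.example:104", 800),
]
summaries, urgent = edge_process(flows, allow)
print(urgent)  # [('pump-07', '198.51.100.23:443')]
```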

Some healthcare organizations also use sidecar or service-mesh techniques within Kubernetes environments or API gateways. This method mirrors traffic from EHR front-ends, patient portals, and telehealth services into an AI detection pipeline without altering application code. For those managing multiple cloud providers and SaaS vendors, multi-tenant observability platforms can unify telemetry into a single detection layer, applying consistent policies across diverse systems. Tools like Censinet RiskOps™ help streamline this process by centralizing risk posture and vendor data, enabling teams to prioritize anomalies linked to high-risk third parties.

Once the architecture is in place, maintaining detection accuracy requires continuous model tuning and strict governance.

Model Tuning and Governance in Healthcare

Continuous tuning of AI models is essential in healthcare, not just for accuracy but also for meeting HIPAA requirements. Models need environment-specific baselines - tailored to factors like hospital campus, clinical unit, application, and job role. This ensures that a night-shift ICU nurse and a day-shift radiologist have distinct "normal" behavior profiles, reducing unnecessary alerts [1]. Before activating high-priority alerts, an observation period should be used to establish baselines across different work shifts, weekends, and holidays.
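One way to keep separate "normal" profiles, and to enforce an observation period before alerting, is to key baselines by context. In this sketch, the context tuple (campus, unit, application, role, shift) is an illustrative assumption. So are the simple mean and standard deviation statistics and the minimum-observation count.

```python
import statistics
from collections import defaultdict


class ContextBaseline:
    """Per-context 'normal' profiles, e.g. keyed by (campus, unit, app, role, shift)."""

    def __init__(self, min_observations=30):
        self.history = defaultdict(list)
        self.min_observations = min_observations

    def observe(self, context, value):
        self.history[context].append(value)

    def zscore(self, context, value):
        """Return None during the observation period, else a z-score against this context."""
        values = self.history.get(context, [])
        if len(values) < self.min_observations:
            return None  # still learning: hold off on high-priority alerts
        mean = statistics.fmean(values)
        std = statistics.pstdev(values)
        if std == 0:
            return 0.0 if value == mean else float("inf")
        return (value - mean) / std


baseline = ContextBaseline(min_observations=5)
icu_night = ("main", "ICU", "ehr", "rn", "night")
rad_day = ("main", "radiology", "ehr", "radiologist", "day")
for v in [40, 45, 50, 42, 48]:
    baseline.observe(icu_night, v)
for v in [5, 6, 4, 5, 6]:
    baseline.observe(rad_day, v)
print(baseline.zscore(icu_night, 45), baseline.zscore(rad_day, 45))
```

The same 45 chart accesses are ordinary for the night-shift ICU nurse profile. For the day-shift radiologist profile, they are dozens of standard deviations above normal.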

Thresholds must be carefully optimized to balance precision and recall. For example, teams might flag suspicious activity when chart access exceeds three times the usual amount within a two-hour window. These thresholds should be fine-tuned based on historical incident data and red-team simulations [1]. Collaborating with clinicians, IT staff, and compliance teams to review key contributing features ensures the models align with real-world workflows and don’t penalize legitimate urgent actions, such as mass chart access during emergencies.
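The example rule (more than three times the usual chart access within two hours) can be implemented with a sliding window. This sketch assumes one monitor per user and chronologically ordered events. It also assumes a `usual_per_window` value supplied by a baseline, such as the user's historical two-hour average. All names are hypothetical.

```python
from collections import deque
from datetime import datetime, timedelta


class ChartAccessMonitor:
    """Flags a user whose chart accesses in a trailing window exceed `multiplier` x usual.

    Assumes one monitor per user and timestamps recorded in chronological order.
    """

    def __init__(self, usual_per_window, multiplier=3.0, window=timedelta(hours=2)):
        self.limit = multiplier * usual_per_window
        self.window = window
        self.events = deque()

    def record(self, ts):
        """Add one access at `ts`; return True if the trailing window is over the limit."""
        self.events.append(ts)
        while ts - self.events[0] > self.window:
            self.events.popleft()
        return len(self.events) > self.limit


start = datetime(2024, 1, 15, 8, 0)
monitor = ChartAccessMonitor(usual_per_window=10)  # limit: 30 charts per 2 hours
burst = [monitor.record(start + timedelta(minutes=i)) for i in range(31)]
print(burst.index(True))  # 30, i.e. the 31st access inside the window trips the alert
```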

HIPAA compliance needs to be embedded in the governance framework. This includes maintaining a documented inventory of models that outlines their purpose, data sources, and limitations, allowing compliance and risk teams to verify their appropriateness [1]. Strict IAM controls should restrict access to training data, models, and outputs, ensuring only authorized personnel can view behavioral analytics. Explainability is also critical - security teams need clear reasons for flagged sessions, such as unusual login locations combined with after-hours EHR access to VIP patient records. All model inputs, versions, and decisions should be logged for forensic and regulatory purposes. Periodic risk and bias assessments ensure that models remain fair and effective, protecting patient data while supporting clinical operations.
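This sketch shows explainability and audit logging together. It scores a session with simple per-feature z-scores, as a stand-in for whatever model is actually deployed. It turns the largest deviations into human-readable reasons and writes a JSON audit record containing the inputs, model version, and decision. Feature names, baselines, the version string, and the threshold are hypothetical.

```python
import json
from datetime import datetime, timezone


def explain_and_log(session_id, features, means, stds, model_version, threshold=3.0, top_n=2):
    """Score a session by per-feature z-scores, keep the top reasons, and emit a JSON audit record."""
    contributions = {name: (value - means[name]) / stds[name] for name, value in features.items()}
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    score = abs(ranked[0][1])
    record = {
        "session_id": session_id,
        "model_version": model_version,
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "inputs": features,
        "score": round(score, 3),
        "flagged": score >= threshold,
        "reasons": [f"{name}: {z:+.1f} std devs from baseline" for name, z in ranked[:top_n]],
    }
    return json.dumps(record, sort_keys=True)


line = explain_and_log(
    "sess-42",
    {"after_hours_ehr_views": 40, "login_distance_km": 800, "records_exported": 2},
    means={"after_hours_ehr_views": 2, "login_distance_km": 10, "records_exported": 1},
    stds={"after_hours_ehr_views": 2, "login_distance_km": 20, "records_exported": 1},
    model_version="ehr-access-zscore-0.3",
)
print(json.loads(line)["reasons"])
```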


How Censinet Supports AI-Enabled Healthcare Risk Management

Censinet leverages advanced AI to seamlessly integrate risk management into healthcare cloud environments, enhancing the AI-driven anomaly detection capabilities highlighted in this guide.

Centralizing Cloud Risk Assessments

Censinet RiskOps™ simplifies the process of managing third-party and cloud vendor risks. For security teams evaluating AI-powered anomaly detection solutions, the platform provides a centralized way to inventory all cloud and AI vendors, link them to data types (like PHI, ePHI, or IoMT telemetry), and assess risks ranging from data breaches to service disruptions.

The platform gathers standardized responses from healthcare security questionnaires, along with critical evidence such as SOC 2 or HITRUST certifications. It also collects details on AI/ML model training and updates, data flow diagrams for PHI, and information about cloud hosting regions, redundancy, and backup strategies. Risk teams can assess AI-specific controls, including model access restrictions, training data origins, anonymization methods, and safeguards against data leakage or model inversion. This ensures vendors meet security standards before their solutions are integrated with cloud-hosted EHRs or clinical systems. Censinet RiskOps™ also aligns these assessments with U.S. regulatory requirements like HIPAA, HITECH, and the 21st Century Cures Act, as well as frameworks such as NIST CSF, NIST 800-53, and HITRUST. This gives CISOs and compliance leaders a clear view of how each solution could impact patient safety, privacy, and operations.

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare." - Matt Christensen, Sr. Director GRC, Intermountain Health

Automating Risk Workflows with Censinet AI

Censinet AI takes on repetitive tasks like reviewing questionnaires, classifying evidence, and mapping responses to control requirements. It suggests initial risk ratings, flags incomplete or inconsistent responses, and highlights critical risks - such as missing PHI encryption, weak monitoring of privileged access, or unclear AI model update processes - for further human review. Risk leaders can set thresholds, finalize ratings, and determine required remediations. The platform also tracks vendor updates and automatically triggers reassessments when vendors introduce new AI capabilities or expand data collection practices.

For instance, if a U.S. hospital is evaluating a cloud-based anomaly detection solution for its IoMT devices, Censinet AI can automatically identify whether PHI is stored, how logs are managed, and whether multi-factor authentication is enforced. It then routes the assessment to relevant teams - such as security, privacy, and clinical engineering - using workflow rules. This blend of AI-driven efficiency and human oversight shortens assessment times while ensuring decisions remain well-documented.

"Censinet RiskOps allowed 3 FTEs to go back to their real jobs! Now we do a lot more risk assessments with only 2 FTEs required." - Terry Grogan, CISO, Tower Health

This approach creates a strong foundation for collaborative governance across departments.

Collaborative Governance and Benchmarking

Censinet RiskOps™ promotes teamwork by offering shared vendor risk views, role-based access, and structured workflows. These features bring together security, compliance, privacy, legal, IT, clinical operations, and biomedical engineering teams. For example, when evaluating a cloud AI anomaly detection solution that monitors EHR activity or IoMT traffic, security teams can review network and identity controls, privacy teams can ensure HIPAA compliance, and clinical leaders can assess the potential impact on patient care and workflows. The platform supports comments, task assignments, and approvals, creating an auditable review process.

Additionally, because many healthcare organizations and vendors use Censinet RiskOps™, the platform offers anonymous benchmarking. This feature compares a vendor's control maturity to industry peers in areas like access management, data protection, incident response, AI governance, and business continuity. When healthcare delivery organizations evaluate multiple cloud-based anomaly detection providers, they can see which vendors meet or exceed industry averages and which fall short. This helps in making informed decisions about vendor selection, contract negotiations, and security requirements.

"Benchmarking against industry standards helps us advocate for the right resources and ensures we are leading where it matters." - Brian Sterud, CIO, Faith Regional Health

Conclusion

AI-powered anomaly detection is reshaping how healthcare cloud environments handle patient safety and protect sensitive health data. By keeping a close eye on EHR access patterns, IoMT device behavior, telehealth sessions, and clinical application performance, these systems can catch potential threats and operational issues before they escalate into serious problems or compromise protected health information (PHI). As highlighted in this guide, machine learning makes it possible to detect anomalies on a large scale while reducing false positives that could overwhelm security teams [1].

However, success hinges on more than just sophisticated algorithms. Healthcare organizations need to gather high-quality log data from cloud providers, clinical systems, and connected devices and feed it into secure analytics platforms. Piloting AI detection for specific use cases and fine-tuning these systems over time ensures they adapt to changing clinical workflows and emerging threats. Metrics like mean time to detect (MTTD) and mean time to respond (MTTR) are essential for tracking whether detection and response are actually improving.
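Computing these metrics is simple once incidents carry consistent timestamps. This sketch assumes each incident record has hypothetical `started`, `detected`, and `responded` fields. It measures MTTR from detection to response. Teams define both metrics differently, so agree on the exact start and end points before tracking trends.

```python
from datetime import datetime
from statistics import fmean


def mttd_mttr(incidents):
    """Return (MTTD, MTTR) in minutes.

    MTTD: start of malicious activity to detection.
    MTTR: detection to response (e.g. session suspended); definitions vary by team.
    """
    mttd = fmean((i["detected"] - i["started"]).total_seconds() / 60 for i in incidents)
    mttr = fmean((i["responded"] - i["detected"]).total_seconds() / 60 for i in incidents)
    return mttd, mttr


incidents = [
    {"started": datetime(2024, 3, 1, 0, 0), "detected": datetime(2024, 3, 1, 0, 30),
     "responded": datetime(2024, 3, 1, 1, 30)},
    {"started": datetime(2024, 3, 2, 0, 0), "detected": datetime(2024, 3, 2, 1, 30),
     "responded": datetime(2024, 3, 2, 2, 0)},
]
print(mttd_mttr(incidents))  # (60.0, 45.0)
```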

For these detection systems to truly excel, they must be part of a larger risk management framework. AI anomaly detection becomes far more effective when integrated into comprehensive governance processes. Tools like Censinet RiskOps™ enhance real-time monitoring by centralizing vendor risk assessments, automating compliance tasks, and offering benchmarking insights. This approach transforms isolated alerts into actionable decisions, helping healthcare organizations allocate security resources more effectively.

As digital healthcare continues to grow, collaboration across roles is key. CISOs should create clear roadmaps for AI monitoring, IT teams must address visibility gaps and test detection tools in controlled settings, and clinical leaders should pinpoint workflows most at risk of IT failures. By working together, these stakeholders can build a governance structure that not only refines AI models but also strengthens the surrounding processes - ensuring better protection for patients in an increasingly digital healthcare landscape.

FAQs

How does AI help reduce false positives in detecting anomalies in healthcare cloud systems?

AI plays a crucial role in minimizing false positives in healthcare cloud anomaly detection by examining patterns in real time and differentiating between regular system activity and actual security threats. Thanks to advanced algorithms, AI can spot subtle variations that traditional methods might overlook, leading to more accurate and relevant alerts.

This means fewer unnecessary interruptions caused by incorrect warnings, enabling healthcare organizations to concentrate on real risks. The improved accuracy also safeguards sensitive patient information and supports compliance with the strict regulations governing the healthcare sector.

How do time-series models help detect anomalies in IoMT devices?

Time-series models analyze sequential data from Internet of Medical Things (IoMT) devices to spot unusual patterns or deviations from normal behavior. This capability is key to identifying potential problems, such as device malfunctions or cybersecurity risks, early on. By doing so, these models help safeguard patient safety and ensure smooth device operations.

Through constant monitoring of data trends, these models deliver real-time insights. This enables healthcare providers to take timely action, address potential risks, and secure sensitive patient information effectively.

Why is high-quality data crucial for AI-powered anomaly detection in healthcare?

High-quality data is the backbone of AI-driven anomaly detection in healthcare. It ensures that results are both accurate and dependable. When data is clean and well-organized, AI systems can spot unusual patterns with greater precision, minimizing the risk of false alarms or overlooked anomalies.

Reliable data allows AI to deliver timely insights that improve patient safety, bolster cybersecurity, and help meet healthcare regulations. This becomes especially critical when protecting sensitive information, such as patient records and data from medical devices.