The AI Governance Revolution: Moving Beyond Compliance to True Risk Control

Post Summary

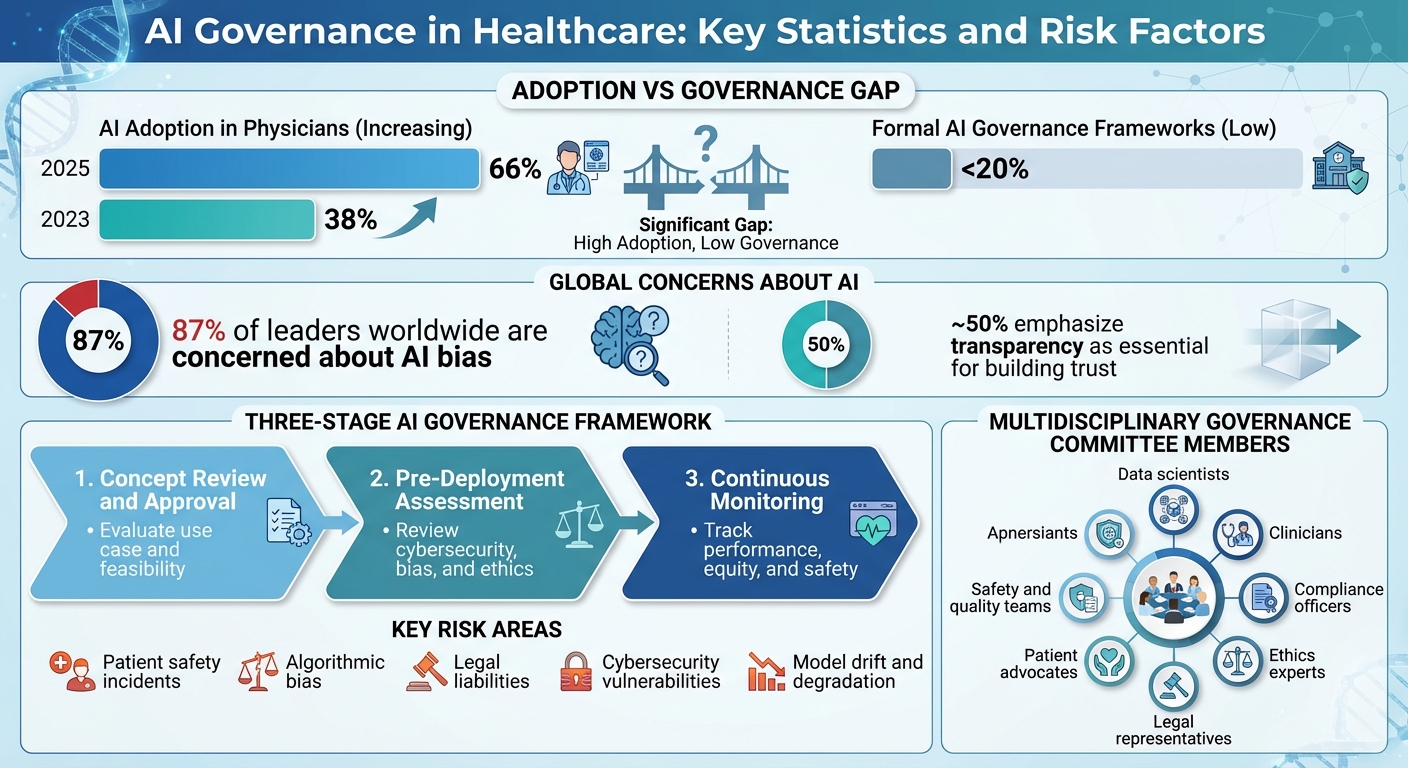

AI in healthcare is growing fast, with 66% of physicians now using it, up from 38% in 2023. But here's the issue: fewer than 20% of organizations have proper AI governance frameworks. This gap leaves them exposed to risks like patient safety issues, algorithmic bias, and legal challenges. Traditional compliance rules like HIPAA aren't enough to manage AI's dynamic risks.

Key takeaways:

- Compliance isn't sufficient: Static policies can't address AI's evolving risks, such as model drift or vendor updates.

- Major risks include: Patient safety incidents (e.g., sepsis predictor failures), bias in algorithms, and legal liabilities from unreliable tools.

- Integrated governance is critical: AI oversight must involve clinical, IT, legal, and executive teams, with continuous monitoring and real-time risk assessments.

- Examples of failure: Ransomware attacks causing fatalities and AI tools with high error rates leading to lawsuits.

- Solutions: Tools like Censinet RiskOps™ help organizations centralize AI risk management, ensuring systems remain safe and effective.

Healthcare organizations must move beyond check-the-box compliance to proactive governance, prioritizing patient safety and ethical AI use.

AI Governance in Healthcare: Key Statistics and Risk Factors 2025

Core Principles of AI Governance in Healthcare

Static compliance alone isn’t enough to tackle the evolving risks that come with using AI in healthcare. That’s where robust governance frameworks step in, providing the necessary principles and structures to guide AI's ethical use. AI governance refers to the frameworks, policies, and processes that ensure AI systems are designed, developed, deployed, and used responsibly in healthcare settings [1]. Without these safeguards, AI systems could lead to harmful outcomes [1]. By December 13, 2025, 66% of physicians reported incorporating AI into their practices, a significant jump from 38% in 2023. However, fewer than one-fifth of healthcare organizations have formal AI governance frameworks in place [6], leaving them exposed to serious risks. Below, we break down the principles, integration strategies, and organizational structures that can help healthcare leaders effectively manage AI.

Key Principles for AI Governance

The values-based principles established by the OECD - spanning inclusive growth, human rights, transparency, robustness, security, and accountability - serve as a global benchmark. They map directly onto what leaders worldwide say worries them: 87% report concern about AI bias, and nearly 50% cite transparency as essential for building trust [4][5][7]. These ideas have shaped regulations and frameworks like the EU AI Act and the NIST AI Risk Management Framework.

For healthcare organizations, patient outcomes must take precedence over model performance. This involves setting clear thresholds for acceptable benefits and risks, and closely monitoring AI’s real-world impact after deployment [3]. A stark example of what can go wrong came in August 2025, when the U.S. Department of Justice resolved a criminal case with a healthcare insurance company. The company’s AI platform had improperly facilitated payments to pharmacies for patient referrals, leading to legal and regulatory consequences [5]. This incident underscores the liabilities that arise from deploying AI without proper governance [2].

How AI Governance Fits with Existing Healthcare Systems

AI governance isn’t a standalone effort. It needs to integrate seamlessly with the clinical governance, enterprise risk management (ERM), and IT change management systems already in place. For example, in 2024, the Office of the National Coordinator for Health Information Technology (ONC) updated its Health Information Technology Certification Program. This update introduced requirements for AI disclosures and risk management within electronic health record systems [1][5]. The goal? To ensure AI systems aren’t "black boxes," giving clinicians the transparency they need to understand how recommendations are made [1].

Effective governance spans every stage of AI’s lifecycle, from its development to ongoing monitoring [2]. Centralizing compliance reporting can streamline oversight, improve accuracy, and enable real-time monitoring of AI systems [1]. This approach helps prevent gaps that often emerge when AI governance is treated as separate from existing risk management processes.

Organizational Structures for AI Oversight

To manage AI risks effectively, healthcare organizations should establish a multidisciplinary governance committee. This group should bring together data scientists, clinicians, compliance officers, ethics experts, medical informatics specialists, legal representatives, safety and quality teams, bioethics professionals, and patient advocates [1][3]. The committee must operate with centralized coordination but also allow for local accountability. Clearly defined roles are crucial - who oversees what, how harm is reported, and how escalation is handled when AI performance issues arise [3].

As AI becomes a board-level concern, organizations are creating specialized roles to address both the risks and opportunities that come with its adoption [8]. Regulators are demanding oversight, and leaders are looking for assurance that AI systems are safe and effective [8]. Human oversight remains critical in every workflow to avoid over-reliance on AI recommendations [1]. Forward-thinking leaders view regulation not as a burden but as a chance to strengthen data integrity and fuel innovation [8].

Building an AI Governance Framework for Risk Control

Governance Throughout the AI Lifecycle

Effective oversight of AI systems requires a structured approach that addresses risks at every stage of their lifecycle. From the moment an AI concept is conceived to its eventual retirement, governance must remain a priority. This lifecycle approach transforms core principles into actionable steps for identifying, assessing, and mitigating risks at each phase [7]. A comprehensive three-stage framework can guide this process:

- Concept Review and Approval: Evaluate the potential use case, determine its feasibility, and set conditions for moving forward.

- Pre-Deployment Assessment: Conduct thorough reviews of cybersecurity, bias, and ethical concerns before the system is launched.

- Continuous Monitoring: Track the system’s performance, equity, and safety after deployment to ensure ongoing compliance.

Each stage benefits from involving the right experts to identify risks early and address issues promptly. This structured approach not only minimizes risks but also ensures that every step of the AI implementation process is safeguarded.
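To make the gating idea concrete, here is a minimal Python sketch of the three stages as a small state machine. The stage names follow the list above; the `RETIRED` stage, the transition table, and the `advance` function are illustrative assumptions, not part of any cited framework.

```python
from enum import Enum


class Stage(Enum):
    CONCEPT_REVIEW = "concept_review"
    PRE_DEPLOYMENT = "pre_deployment"
    MONITORING = "continuous_monitoring"
    RETIRED = "retired"


# Hypothetical transition rules. A system can move forward, be sent back
# for another review, or be retired. Nothing leaves RETIRED.
ALLOWED = {
    Stage.CONCEPT_REVIEW: {Stage.PRE_DEPLOYMENT, Stage.RETIRED},
    Stage.PRE_DEPLOYMENT: {Stage.MONITORING, Stage.CONCEPT_REVIEW, Stage.RETIRED},
    Stage.MONITORING: {Stage.PRE_DEPLOYMENT, Stage.RETIRED},
    Stage.RETIRED: set(),
}


def advance(current: Stage, target: Stage, approved: bool) -> Stage:
    """Return the new stage, or raise if the move is not permitted."""
    if target not in ALLOWED[current]:
        raise ValueError(f"{current.value} -> {target.value} is not an allowed transition")
    if not approved:
        # Assumption: every move needs an explicit committee decision.
        raise PermissionError(f"gate for {current.value} has not been approved")
    return target
```

With these rules a system cannot jump from concept review straight to monitoring, which is the kind of skipped gate the lifecycle approach is meant to prevent.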

Pre-Deployment Risk Assessment and Approval

Before an AI system interacts with patient data or influences clinical decisions, it must undergo a detailed evaluation. Start by determining if AI is the best solution compared to non-AI alternatives, ensuring its advantages are clear and measurable [1]. The assessment should cover critical areas like cybersecurity, bias, and ethical considerations to identify and mitigate potential harms. Tools such as Censinet RiskOps™ simplify this process by routing findings to the appropriate stakeholders - AI governance committees, compliance teams, and clinical leaders - for review and approval. This centralized process ensures accountability and that all concerns are addressed before the system becomes operational.
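As a rough illustration of what "routing findings to the appropriate stakeholders" can mean in practice, the Python sketch below maps finding categories to reviewer groups and blocks deployment until each group has signed off. The routing table, group names, and function names are hypothetical. This is not the API of Censinet RiskOps™ or any other product.

```python
# Hypothetical routing table: which reviewer groups must see each finding type.
ROUTES = {
    "cybersecurity": ["compliance_team", "ai_governance_committee"],
    "bias": ["ai_governance_committee", "clinical_leaders"],
    "ethics": ["ai_governance_committee"],
}


def route_finding(category: str) -> list[str]:
    """Return the reviewer groups for a finding; unknown types go to the committee."""
    return ROUTES.get(category, ["ai_governance_committee"])


def ready_to_deploy(findings: list[str], signoffs: dict[str, set[str]]) -> bool:
    """True only if every finding has sign-off from every group it routes to."""
    return all(set(route_finding(f)) <= signoffs.get(f, set()) for f in findings)
```

A single missing sign-off keeps `ready_to_deploy` at `False`, which mirrors the accountability goal described above.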

"Effective risk management and oversight of AI hinge on a critical, yet underappreciated tool: comprehensive documentation." - Amy Winecoff & Miranda Bogen, Center for Democracy & Technology [5]

Documentation is non-negotiable. Keeping detailed records - such as purpose statements, risk assessments, and approval decisions - throughout the AI lifecycle is essential for maintaining oversight and confirming the system operates as intended [5]. Once the pre-deployment process is complete, ongoing monitoring ensures the AI system remains reliable and safe.

Continuous Monitoring and Updates

Ongoing monitoring is crucial for evaluating an AI system’s technical performance, fairness, and safety over time [3]. Your governance committee should maintain an up-to-date inventory of all AI tools in use and focus evaluations on real-world patient outcomes, not just technical metrics [3]. Establish internal harm reporting mechanisms and clear escalation pathways to address emerging issues [3]. Monitoring Key Performance Indicators (KPIs) can help detect model degradation, while regular reassessments and audits ensure the system continues to perform effectively [1].
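A simple way to picture KPI-based degradation detection is to compare recent values against the baseline logged at initial evaluation. The Python sketch below assumes a KPI where higher is better and uses an illustrative tolerance of 0.05. Both the function name and the threshold are assumptions that a governance committee would set for itself.

```python
from statistics import mean


def degraded(baseline: float, recent: list[float], tolerance: float = 0.05) -> bool:
    """True when the mean of recent KPI values falls more than `tolerance` below baseline."""
    if not recent:
        raise ValueError("need at least one recent KPI value")
    return (baseline - mean(recent)) > tolerance


# Example: a KPI logged at 0.85 during evaluation, then tracked after go-live.
print(degraded(0.85, [0.85, 0.84, 0.86]))  # stable readings
print(degraded(0.85, [0.80, 0.78, 0.76]))  # sustained decline
```

A real program would likely add statistical tests and per-site breakdowns, but even a check this small gives the escalation pathway a clear trigger.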

In December 2025, the U.S. Department of Health and Human Services announced plans to monitor AI projects for compliance post-deployment and release annual public reports on AI use cases and risk assessments [9][10]. Advanced governance tools can further streamline this process by automating performance reassessments and consolidating real-time data into an intuitive AI risk dashboard. This centralized hub serves as a single source of truth for managing policies, risks, and tasks, ensuring governance measures adapt as AI systems evolve and new risks emerge.

Implementing AI Governance in Healthcare Operations

Connecting AI Governance with Cybersecurity Programs

To effectively manage AI in healthcare, it's crucial to weave AI governance into existing cybersecurity and risk management frameworks. This includes aligning AI risk assessments with processes like third-party risk management, incident response plans, and regulatory compliance efforts. By embedding AI governance into daily operations, healthcare organizations can avoid becoming the subject of negative headlines.

"The healthcare organizations that avoid the big headlines aren't lucky – they're intentional. They've made AI governance part of their everyday risk and compliance program" [11].

Frameworks such as OWASP's AI security guidance and ISO/IEC 42001 offer structured methods for evaluating AI-specific risks alongside traditional cybersecurity threats [7]. Tools like Censinet RiskOps™ simplify this process by centralizing risk assessments across AI and cybersecurity teams. They ensure that AI-related findings are directed to the right stakeholders, from governance committees to compliance teams, creating a seamless connection between cybersecurity and AI oversight.

Assigning Roles and Responsibilities

Clear role definition is the backbone of effective AI oversight. An AI governance committee should bring together a diverse group of experts, such as data scientists, clinicians, compliance officers, ethics specialists, and community advocates. This team collectively evaluates AI systems throughout their lifecycle, focusing on safety, effectiveness, and ethical considerations to ensure alignment with organizational standards before deployment [1].

Key leadership roles, like Chief Information Security Officers (CISOs) and Chief Information Officers (CIOs), should focus on securing technical infrastructure, while clinical leaders ensure patient safety and quality of care. Executive sponsorship is also critical - without support from the C-suite, governance initiatives often lack the momentum needed to succeed. Each team member must have a clear understanding of their responsibilities and the authority to halt or adjust AI systems if risks arise. These clearly defined roles enable smooth tracking, reporting, and integration of governance into daily operations.

Metrics and Reporting for AI Risk Management

Turning governance into actionable practice requires measurable outcomes. Start by defining and logging key performance indicators (KPIs) during the initial evaluation of an AI system. These metrics are essential for identifying potential model degradation over time [1].

"Ongoing oversight over AI implementations must focus on the monitoring of predefined Key Performance Indicators (KPIs). Such performance metrics can be defined during the initial evaluation of the AI product and historically logged to track potential model degradation" [1].

Instead of focusing solely on technical metrics, prioritize reporting structures that emphasize actual patient outcomes [3]. Regularly assess AI model fairness, maintain an updated registry of all AI tools in use, and document data lineage to ensure transparency during audits [12]. Automated reporting tools can further streamline oversight, reducing human error and freeing up staff for more in-depth analysis. Additionally, having clear internal mechanisms for reporting harm ensures that any issues are addressed promptly and effectively.
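One hedged example of a fairness check: compute sensitivity (the share of true cases the model catches) for each patient subgroup and look at the gap between the best and worst group. The record layout, function names, and the choice of sensitivity as the metric are assumptions for illustration. Other metrics may fit a given tool better.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, actual, predicted) tuples with boolean labels."""
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                true_pos[group] += 1
    # Groups with no actual positive cases are left out rather than divided by zero.
    return {g: true_pos[g] / positives[g] for g in positives}


def max_gap(rates):
    """Largest difference in sensitivity between any two groups."""
    return max(rates.values()) - min(rates.values())


sample = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", True, True),
    ("B", True, True), ("B", True, False), ("B", False, False),
]
rates = sensitivity_by_group(sample)
print(rates)           # {'A': 0.75, 'B': 0.5}
print(max_gap(rates))  # 0.25
```

Reporting the gap alongside overall accuracy keeps subgroup performance visible in the same dashboard as the headline metric.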

Conclusion: Advancing Healthcare with Mature AI Governance

First Steps for AI Governance Implementation

The first step toward effective AI governance in healthcare is assembling a diverse, cross-functional committee. This group should include professionals like data scientists, clinicians, compliance officers, ethics experts, and community advocates. By bringing together varied perspectives, this team can evaluate AI systems comprehensively throughout their lifecycle.

Next, create a detailed inventory of all AI tools in use. This inventory should outline each system's purpose, the data it relies on, and any potential risks. Tools like Censinet RiskOps™ can streamline this process by centralizing risk assessments and directing findings to the appropriate stakeholders. This ensures that both cybersecurity and AI governance are managed in a coordinated way.
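For teams starting from a spreadsheet, an inventory entry can be sketched as a small record type. The Python example below captures the purpose, data, and risk fields described above. The extra fields (`owner`, `last_reviewed`) and the one-year review window are assumptions, not requirements from any cited source.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class AIToolRecord:
    name: str
    purpose: str
    data_sources: list[str]
    known_risks: list[str] = field(default_factory=list)
    owner: str = "unassigned"              # hypothetical accountability field
    last_reviewed: Optional[date] = None   # None means never reviewed


def overdue_for_review(record: AIToolRecord, today: date, max_age_days: int = 365) -> bool:
    """True if the tool has never been reviewed or the last review is too old."""
    if record.last_reviewed is None:
        return True
    return (today - record.last_reviewed).days > max_age_days
```

Filtering the inventory with `overdue_for_review` gives the committee a standing agenda item: every tool that has slipped past its review window.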

These foundational steps serve as a launchpad for integrating AI governance into healthcare operations, promoting both innovation and patient safety in the long run.

The Future of AI Governance in Healthcare

As healthcare providers build on these initial measures, they can refine their governance practices to support ongoing improvements and advancements. Organizations that embrace structured AI governance are better positioned to unlock AI's potential while minimizing risks. Without such oversight, AI systems could inadvertently cause harm rather than deliver benefits [1].

The path forward belongs to healthcare providers who treat AI governance as a continuous effort - one that prioritizes risk management, patient safety, and responsible innovation. By fostering trust through transparent processes, assigning clear accountability, and utilizing scalable governance tools, these organizations can confidently push their AI initiatives forward while safeguarding patient data and ensuring privacy [13].

FAQs

What risks can arise from using AI in healthcare without proper governance?

Using AI in healthcare without proper oversight can bring about serious risks. These include clinical errors stemming from biased algorithms or misinterpretations, data breaches that jeopardize patient privacy, and cybersecurity weaknesses that expose sensitive medical information. Beyond these technical issues, the absence of clear accountability for AI-driven decisions and reliance on untested or overly complicated AI systems can raise ethical concerns and potentially endanger patient safety.

To tackle these challenges, it's crucial to establish strong AI governance frameworks. Such frameworks help ensure that AI systems are secure, transparent, and ethically sound, while also adhering to regulatory standards.

How can healthcare organizations effectively incorporate AI governance into their existing systems?

Healthcare organizations can integrate AI governance into their operations by establishing a structured framework that fits seamlessly with their goals and workflows. This process should involve collaboration across departments, clear accountability measures, and embedding governance practices into everyday activities. Key actions include keeping an up-to-date inventory of AI tools, performing rigorous pre-deployment evaluations, and maintaining continuous monitoring to mitigate risks like bias, data security breaches, and ethical issues.

Centralized platforms can help simplify risk assessments and compliance tasks, making oversight more efficient while staying in step with changing regulations. By aligning AI governance with existing protocols for cybersecurity, data privacy, and ethics, organizations can promote responsible AI usage, protect patient data, and advance their broader objectives.

Why is continuous monitoring important for AI governance in healthcare?

Continuous monitoring plays a key role in managing AI effectively, especially in healthcare, where real-time risk detection is critical. It helps spot issues like model drift, bias, or errors early on, minimizing their potential to affect patient safety or violate regulatory standards.

By regularly evaluating AI performance, healthcare organizations can tackle vulnerabilities head-on, protect sensitive patient information, and uphold trust in their systems. This ongoing vigilance strengthens risk management efforts while promoting ethical and secure AI applications in the healthcare field.