AI Model Drift Monitoring: Ensuring Ongoing Performance of Healthcare AI Vendors

Post Summary

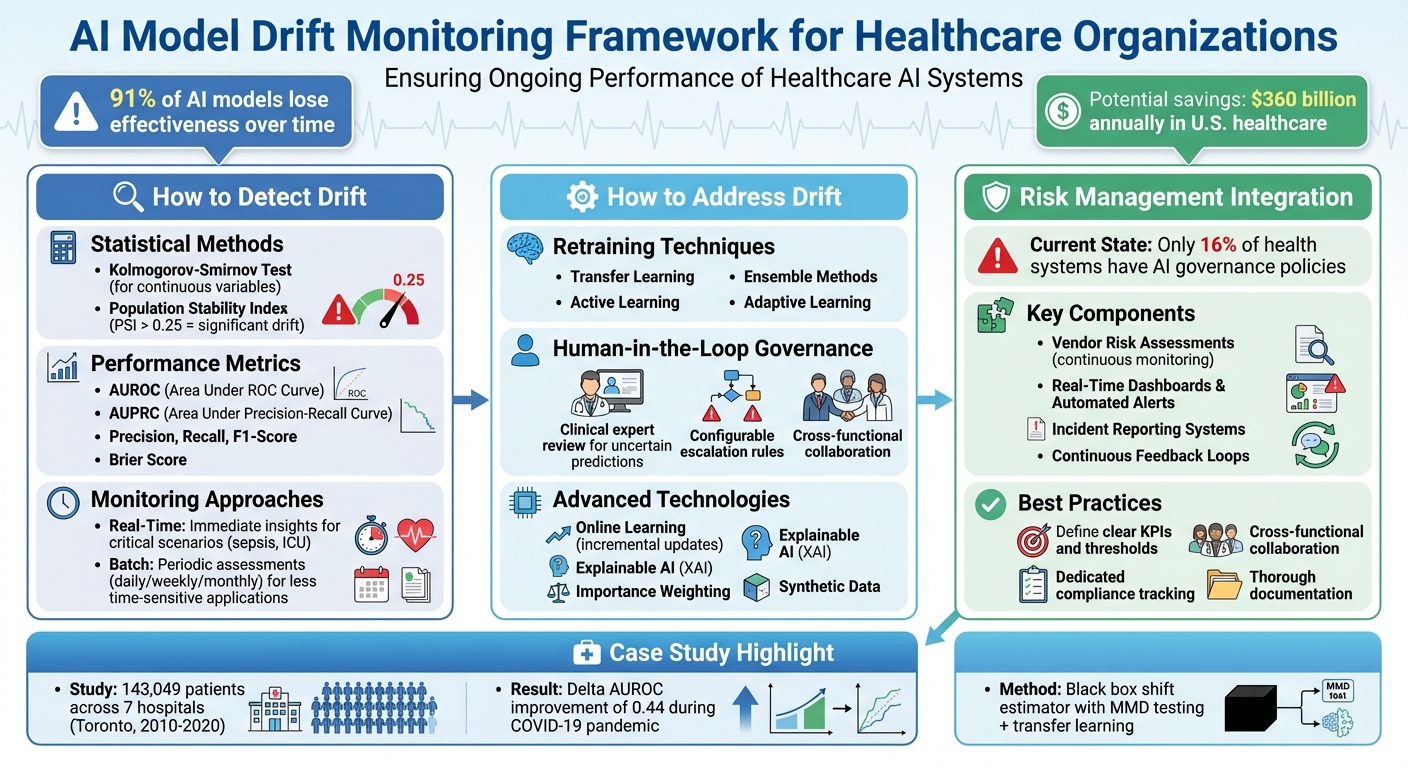

AI model drift is a significant challenge in healthcare, where AI systems must remain reliable over time. Drift occurs when an AI model’s performance declines due to changes in input data or evolving relationships between inputs and outputs. This can lead to errors like misdiagnoses, biased decisions, and regulatory issues, ultimately impacting patient safety and trust.

To combat this, healthcare organizations and AI vendors need robust monitoring systems. Key strategies include:

- Drift Detection: Use statistical methods (e.g., Kolmogorov-Smirnov tests, Population Stability Index) and performance metrics (e.g., AUROC, precision, recall) to identify changes.

- Real-Time and Batch Monitoring: Real-time systems handle critical scenarios, while batch monitoring tracks trends over longer periods.

- Retraining Models: Techniques like transfer learning, active learning, and ensemble methods help maintain accuracy as data evolves.

- Human Oversight: Incorporating human-in-the-loop governance ensures that errors are caught and addressed promptly.

- Advanced Tools: Platforms like Censinet RiskOps™ integrate monitoring into workflows, supporting compliance and risk management.

Figure: AI Model Drift Monitoring Framework for Healthcare Organizations

Methods for Detecting AI Model Drift

Detecting model drift requires a mix of statistical techniques and performance metrics. Relying solely on performance metrics can overlook subtle data shifts, especially when obtaining ground-truth labels is delayed or expensive [4]. For healthcare organizations, it's essential to use approaches that align with the constraints of clinical settings.

Statistical Methods for Drift Detection

Statistical methods focus on comparing data distributions - either from inputs or model outputs - over time to spot changes [7,2]. The choice of method depends on the type of data and the type of drift being monitored, such as covariate, label, or concept drift [4]. For continuous variables, the Kolmogorov-Smirnov test is a common tool that measures the largest gap between two cumulative distributions, making it useful for identifying shifts such as a change in the age distribution of a patient population. Another widely used tool, the Population Stability Index (PSI), quantifies how much a binned distribution has changed between two time periods. As a common rule of thumb, a PSI below 0.1 is treated as stable, 0.1 to 0.25 as a moderate shift worth watching, and above 0.25 as significant drift that warrants further investigation.
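To make the two statistics concrete, here is a minimal sketch in plain Python. The function names, the equal-width binning (quantile bins are also common), the `eps` floor for empty bins, and the age data are all illustrative assumptions, not part of any particular monitoring product.

```python
import math
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    points = sorted(set(ref) | set(cur))
    return max(
        abs(bisect_right(ref, x) / len(ref) - bisect_right(cur, x) / len(cur))
        for x in points
    )


def psi(reference, current, n_bins=10, eps=1e-6):
    """Population Stability Index over equal-width bins of the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # avoid zero width for constant data

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1  # clamp out-of-range values
        # Floor empty bins at eps so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = bin_shares(reference), bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Hypothetical example: patient ages at training time vs. a later, older cohort.
reference_ages = [30 + (i % 40) for i in range(400)]
current_ages = [45 + (i % 40) for i in range(400)]
print(f"KS statistic: {ks_statistic(reference_ages, current_ages):.3f}")
print(f"PSI: {psi(reference_ages, current_ages):.3f}")
```

In this made-up cohort the PSI lands well above 0.25, so under the rule of thumb it would be flagged for investigation. A production setup would more likely rely on a vetted statistics library, which also provides p-values for the KS test.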

Performance Metrics for Healthcare AI

Healthcare organizations often track key metrics like AUROC (Area Under the Receiver Operating Characteristic Curve), AUPRC (Area Under the Precision-Recall Curve), accuracy, precision, recall, F1-score, and the Brier score to monitor for potential drift [6]. In supervised monitoring, these metrics are compared against a predefined benchmark - often based on a validation dataset - when ground-truth labels are available [5]. However, in healthcare, labels often come with delays, making real-time monitoring tricky. This creates a trade-off between waiting for accurate results and taking timely action.
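As an illustration of supervised monitoring, the sketch below computes AUROC and the Brier score for a labeled batch and compares AUROC against a validation-time baseline. The baseline value, the tolerance, and the function names are hypothetical.

```python
def auroc(labels, scores):
    """AUROC as the chance a random positive outranks a random negative.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative label")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def brier_score(labels, probs):
    """Mean squared error between predicted probabilities and 0/1 outcomes."""
    return sum((p - y) ** 2 for y, p in zip(labels, probs)) / len(labels)


def degraded(baseline, current, tolerance):
    """True when the current value sits more than `tolerance` below the baseline."""
    return baseline - current > tolerance


# Hypothetical labeled batch that arrived after the usual labeling delay.
labels = [1, 1, 0, 0]
probs = [0.9, 0.4, 0.6, 0.1]
current_auroc = auroc(labels, probs)
print(f"AUROC: {current_auroc:.2f}, Brier: {brier_score(labels, probs):.3f}")
print("Degraded vs. baseline:", degraded(baseline=0.85, current=current_auroc, tolerance=0.05))
```

Because labels arrive late, a check like this typically runs on the most recent batch that has complete outcomes, alongside label-free distribution checks on newer data.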

Real-Time vs. Batch Monitoring

Real-time monitoring evaluates AI predictions as they happen, offering immediate insights into potential issues. This approach is ideal for high-stakes clinical scenarios, like sepsis prediction or ICU monitoring, where quick action can save lives. However, real-time systems require robust infrastructure and careful calibration to avoid overwhelming clinicians with unnecessary alerts (a phenomenon known as alert fatigue).

On the other hand, batch monitoring involves periodic assessments - daily, weekly, or monthly - by analyzing aggregated data. This method is often more practical in healthcare environments, especially for applications like diagnostic imaging or risk stratification tools where decisions aren’t as time-sensitive. Batch monitoring allows teams to identify trends and address issues without needing constant oversight. Together, these approaches support the development of tools designed to simplify and enhance drift detection.
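One way to picture the real-time side is a sliding-window check with a cooldown, so a sustained shift raises one alert rather than hundreds. This is a sketch under stated assumptions: the class name, the window and cooldown sizes, and the choice to monitor the positive-prediction rate are all hypothetical.

```python
from collections import deque


class RollingAlertMonitor:
    """Watches the positive-prediction rate over a sliding window of predictions."""

    def __init__(self, expected_rate, tolerance, window=200, cooldown=500):
        self.expected_rate = expected_rate
        self.tolerance = tolerance
        self.window = deque(maxlen=window)
        self.cooldown = cooldown
        self._since_alert = cooldown  # permit an alert as soon as the window fills

    def observe(self, is_positive):
        """Record one prediction; return True only when a new alert should fire."""
        self.window.append(1 if is_positive else 0)
        self._since_alert += 1
        if len(self.window) < self.window.maxlen:
            return False  # not enough data yet
        rate = sum(self.window) / len(self.window)
        drifted = abs(rate - self.expected_rate) > self.tolerance
        if drifted and self._since_alert >= self.cooldown:
            self._since_alert = 0  # suppress repeats until the cooldown elapses
            return True
        return False


# Hypothetical stream: the model suddenly flags every patient as high risk.
monitor = RollingAlertMonitor(expected_rate=0.1, tolerance=0.1, window=10, cooldown=20)
alerts = sum(monitor.observe(True) for _ in range(29))
print("Alerts raised:", alerts)
```

A batch job would apply the same comparison to a day's or week's aggregated predictions instead of a moving window, trading speed of detection for simpler infrastructure.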

Tools for AI Model Drift Monitoring

Healthcare organizations face unique challenges when it comes to AI model drift monitoring, and having the right tools is crucial. The ideal platform should seamlessly integrate with existing clinical workflows, help maintain regulatory compliance, and provide actionable insights - all without overwhelming IT teams. When considering solutions, it's important to focus on tools that offer both pre-deployment validation and ongoing post-deployment monitoring. Establishing a strong baseline before launch is just as important as keeping track of changes over time. Platforms like Censinet RiskOps™ are setting the standard for effective AI drift monitoring in healthcare.

Censinet RiskOps™ for AI Drift Monitoring

Censinet RiskOps™ takes AI risk management to the next level by embedding it into the vendor oversight process. It provides healthcare organizations with a centralized system for tracking AI-related risks. The platform's Censinet AI™ feature automates the summarization of evidence and generates risk reports based on assessment data, streamlining vendor evaluations. Its user-friendly dashboard allows organizations to monitor performance metrics, ensure compliance, and identify issues in real time - all while maintaining human oversight through customizable review processes.

What makes RiskOps™ stand out is its "human-in-the-loop" approach to AI governance. Instead of automating critical decisions, the platform directs key findings and tasks to designated stakeholders, such as members of the AI governance committee, ensuring timely and informed resolution of issues. This model functions like an "air traffic control" system, scaling risk management efforts without compromising safety or accountability.

Strategies to Address and Reduce AI Model Drift

When drift is identified, healthcare organizations need to act quickly to restore the AI system's performance. With research showing that 91% of AI models lose effectiveness over time, taking proactive steps like retraining is not just recommended - it’s necessary [3].

Retraining and Updating AI Models

Effective retraining starts with periodic updates based on current clinical data. One approach, adaptive learning, allows models to adjust to new data patterns over time without needing a full rebuild. For instance, a hospital network improved its sepsis prediction system by blending supervised learning with unsupervised anomaly detection. The team then fine-tuned its main model periodically to address discrepancies, ensuring it stayed aligned with evolving patient data [7]. This hybrid strategy helped maintain both accuracy and reliability.

Other methods like transfer learning and domain adaptation reuse knowledge from pre-trained models or related domains, making it easier and faster to adapt to new tasks. Active learning focuses on labeling the most valuable data samples, ensuring experts spend their time where it has the greatest impact. Ensemble methods, which combine multiple models, create systems that are more resilient to individual model weaknesses. Together, these approaches ensure models stay accurate while working within governance frameworks to maintain consistency.
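Active learning is the easiest of these to show in a few lines. The sketch below uses uncertainty sampling, one common selection rule, to pick the cases an expert should label first. The case IDs, probabilities, and function name are made up for illustration.

```python
def select_for_labeling(predictions, budget):
    """Return the IDs of the `budget` cases whose probability is closest to 0.5.

    `predictions` maps a case ID to the model's predicted probability.
    """
    ranked = sorted(predictions.items(), key=lambda item: abs(item[1] - 0.5))
    return [case_id for case_id, _ in ranked[:budget]]


# Hypothetical unlabeled cases with model probabilities.
unlabeled = {"case-a": 0.95, "case-b": 0.52, "case-c": 0.10, "case-d": 0.45}
print(select_for_labeling(unlabeled, budget=2))
```

Confident predictions such as `case-a` are skipped, so reviewer time goes to the cases where a label is most likely to change the model.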

Human-in-the-Loop Governance

AI automation alone isn’t enough in healthcare, where patient safety is critical. Human-in-the-loop governance steps in by routing uncertain or unusual AI predictions to clinical experts for review. This approach acts as a safety net, ensuring that AI errors don’t negatively affect patient care [1]. If a model begins to drift or shows uncertainty, decisions can be escalated to human reviewers or shifted to simpler, more reliable systems.
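The escalation logic described above can be sketched as a small routing rule. The uncertainty band, the route names, and the idea of a separate `drift_detected` flag supplied by a monitoring job are assumptions for illustration, not a prescribed clinical policy.

```python
def route_prediction(probability, drift_detected, low=0.3, high=0.7):
    """Decide who handles a prediction.

    - "fallback": the model is flagged as drifting, so use a simpler system.
    - "human_review": the probability falls in the uncertain band [low, high].
    - "auto": the prediction proceeds through the normal workflow.
    """
    if drift_detected:
        return "fallback"
    if low <= probability <= high:
        return "human_review"
    return "auto"


print(route_prediction(0.55, drift_detected=False))
print(route_prediction(0.92, drift_detected=False))
print(route_prediction(0.92, drift_detected=True))
```

The band limits would normally be set with clinicians, since a wider band sends more cases to reviewers and a narrower one lets more uncertain predictions through.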

To make this process efficient, configurable rules guide human review, ensuring flagged issues are addressed promptly. Collaboration between clinicians, IT teams, biostatisticians, and model developers is crucial. These groups work together to investigate the root causes of drift and determine the necessary fixes [8]. Regular bias audits during updates and involving stakeholders in validation processes also help keep ethical considerations front and center in healthcare AI [7].

Advanced Technologies for Drift Reduction

Beyond retraining and human oversight, advanced tools play a key role in managing drift. Techniques like online learning, explainable AI (XAI), importance weighting, and synthetic data integrate seamlessly into MLOps workflows to enhance drift management.

- Online learning algorithms allow models to update incrementally as new data comes in, eliminating the need to wait for periodic retraining.

- Explainable AI (XAI) helps make predictions more transparent, enabling stakeholders to spot drift patterns and respond effectively [7].

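- Importance weighting reweights training examples so they better resemble current data, while synthetic data can fill gaps for underrepresented cases. Both prepare models to handle changes in data distribution.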
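To show what an incremental update looks like, here is a minimal online logistic regression that takes one gradient step per newly labeled case. The class name, learning rate, and toy data are assumptions, and the sketch omits safeguards a clinical deployment would need, such as validation before each updated model is released.

```python
import math


class OnlineLogisticRegression:
    """Logistic regression updated one labeled example at a time."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x, y):
        """One stochastic gradient step on the log loss for a single case."""
        error = self.predict_proba(x) - y
        self.weights = [
            w - self.learning_rate * error * xi for w, xi in zip(self.weights, x)
        ]
        self.bias -= self.learning_rate * error


# Toy stream: a single feature where positive values indicate the outcome.
model = OnlineLogisticRegression(n_features=1)
for _ in range(100):
    model.update([1.0], 1)
    model.update([-1.0], 0)
print(f"P(outcome | x=1.0) = {model.predict_proba([1.0]):.2f}")
```

The model never sees the full dataset at once, which is what removes the wait for a scheduled retraining run.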

MLOps workflows automate the critical processes of monitoring, retraining, and deploying AI systems, ensuring drift is managed across multiple models. However, while these technologies provide the tools to scale, human oversight remains essential. In healthcare, where the stakes are high, the balance of advanced technology and strong governance ensures both safety and accountability.

Integrating Drift Monitoring into Healthcare Risk Management

Incorporating AI drift monitoring into your healthcare organization's risk management framework is no longer optional - it's essential. Today, only 16% of health systems have a systemwide governance policy specifically addressing AI usage and data access. This lack of safeguards leaves a significant gap, especially when AI has the potential to save the U.S. healthcare system up to $360 billion annually - but only if these systems remain dependable and secure [9].

To address this, healthcare organizations need to expand their existing Enterprise Risk Management (ERM) frameworks to account for AI-specific risks. This means moving beyond basic compliance checklists to establish clear roles and responsibilities for AI oversight. Collaboration between technical and clinical teams is key, as is leadership's commitment to embedding AI oversight into the organization's culture. By prioritizing ethical use, transparency, and accountability, organizations can maintain the reliability of their AI systems over time.

Adding Monitoring to Vendor Risk Assessments

Third-party AI vendors also require continuous scrutiny. When assessing vendors, healthcare organizations should insist on transparency through contractual agreements. These contracts should mandate access to audit trails, performance testing results, and detailed documentation of how algorithms are updated and maintained.

For example, Censinet Connect™ integrates drift monitoring into vendor risk assessments. It centralizes vendor documentation, performance metrics, and compliance evidence, enabling risk teams to quickly detect when an AI model starts to drift. This allows organizations to take immediate corrective action, ensuring vendor partnerships remain aligned with ethical and regulatory standards throughout the relationship.

Continuous Feedback and Closed-Loop Systems

Real-time dashboards and automated alerts are invaluable tools for monitoring key performance metrics like precision, recall, and F1 scores. These systems can flag when metrics fall below acceptable thresholds, triggering immediate investigations through incident management systems.
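A threshold check of this kind can be sketched as follows. The confusion-matrix counts and the minimum values are hypothetical, and handing the result to an incident management system is left out.

```python
def classification_metrics(tp, fp, fn):
    """Precision, recall, and F1 from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def breached_metrics(metrics, minimums):
    """Names of metrics that have fallen below their minimum acceptable value."""
    return sorted(name for name, floor in minimums.items() if metrics[name] < floor)


# Hypothetical weekly counts and alert floors.
weekly = classification_metrics(tp=80, fp=20, fn=40)
floors = {"precision": 0.75, "recall": 0.70, "f1": 0.70}
print({name: round(value, 3) for name, value in weekly.items()})
print("Breached:", breached_metrics(weekly, floors))
```

Here precision holds up while recall slips, which is the kind of pattern a single accuracy figure would hide.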

A compelling example of this approach comes from a June 2025 study by Vallijah Subasri and colleagues. They analyzed electronic health record data from 143,049 adult inpatients across seven hospitals in Toronto, Canada, covering a period from January 1, 2010, to August 31, 2020. Using a black box shift estimator with Maximum Mean Discrepancy (MMD) testing, they developed a proactive monitoring pipeline. This system applied transfer learning and drift-triggered continual learning strategies, significantly improving model performance during the COVID-19 pandemic (Delta AUROC [SD], 0.44 [0.02]; P = .007, Mann-Whitney U test) [6].
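For readers unfamiliar with MMD testing, the sketch below shows a generic two-sample MMD permutation test in plain Python. It is not the study's pipeline: the RBF kernel, the `gamma` value, the biased estimator, the permutation count, and the synthetic data are all assumptions chosen to keep the example small.

```python
import math
import random


def rbf_kernel(x, y, gamma):
    """Gaussian (RBF) kernel between two feature vectors."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def mmd_squared(xs, ys, gamma=1.0):
    """Biased estimate of the squared MMD between two samples of feature vectors."""
    def mean_kernel(a, b):
        return sum(rbf_kernel(p, q, gamma) for p in a for q in b) / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2 * mean_kernel(xs, ys)


def mmd_permutation_test(xs, ys, gamma=1.0, n_permutations=100, seed=0):
    """Return (observed MMD^2, p-value) by reshuffling the pooled samples."""
    rng = random.Random(seed)
    observed = mmd_squared(xs, ys, gamma)
    pooled = list(xs) + list(ys)
    exceed = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        if mmd_squared(pooled[:len(xs)], pooled[len(xs):], gamma) >= observed:
            exceed += 1
    # Add-one correction keeps the p-value strictly above zero.
    return observed, (exceed + 1) / (n_permutations + 1)


# Synthetic example: a reference sample and a clearly shifted later sample.
data_rng = random.Random(1)
reference = [(data_rng.gauss(0.0, 1.0),) for _ in range(30)]
shifted = [(data_rng.gauss(3.0, 1.0),) for _ in range(30)]
stat, p_value = mmd_permutation_test(reference, shifted)
print(f"MMD^2 = {stat:.3f}, p = {p_value:.3f}")
```

In a black box shift setup, the inputs to a test like this would typically be model outputs rather than raw features, which keeps the dimensionality low. A small p-value then becomes the trigger for a retraining or transfer learning step.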

"Early detection enables proactive intervention, allowing data teams to recalibrate models before they cause major disruptions." – Lumenova AI [10]

Incident reporting software also plays a vital role in these feedback loops. It allows staff to document and categorize issues such as algorithm malfunctions, unexpected outcomes, or patient complaints related to AI tools. These reports help identify recurring problems, guiding technical updates and process improvements. Such continuous feedback mechanisms are critical for maintaining best practices in AI risk management.

Best Practices for AI Risk Management

Healthcare organizations that embrace continuous updates to their AI governance frameworks are better equipped to handle regulatory changes and evolving technologies [11]. Start by defining clear key performance indicators (KPIs) to establish acceptable thresholds for model performance. Use historical data to set benchmarks for automated alerts. Encourage cross-functional collaboration by involving clinicians and data scientists in monitoring and auditing AI models. Clinicians need accessible ways to flag anomalies, while leadership must have actionable insights at their fingertips.
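One simple way to turn historical data into an alert benchmark is to place the floor a few standard deviations below the historical mean. The multiplier `k`, the function name, and the weekly AUROC values below are hypothetical. Other choices, such as a fixed percentile, are equally reasonable.

```python
from statistics import mean, stdev


def alert_floor(historical_values, k=2.0):
    """Lower alert bound: historical mean minus k sample standard deviations."""
    return mean(historical_values) - k * stdev(historical_values)


# Hypothetical weekly AUROC values from a stable period after deployment.
history = [0.84, 0.85, 0.86, 0.85, 0.85]
floor = alert_floor(history)
latest = 0.82
print(f"Alert floor: {floor:.4f}")
print("Raise alert:", latest < floor)
```

Deriving the floor from the model's own stable period avoids alerts that fire on normal week-to-week variation.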

Assign a dedicated compliance team to track changes in laws and regulations - such as HIPAA, GDPR, and emerging AI legislation - and ensure compliance checkpoints are embedded throughout the AI system lifecycle.

"Communication is the linchpin of effective risk management." – Performance Health Partners [9]

Thorough documentation is another cornerstone of AI risk management. Configurable workflows should capture model performance, retraining events, and compliance evidence. This documentation not only meets regulatory requirements - such as those outlined in the EU AI Act - but also provides the necessary audit trail to demonstrate due diligence. Tools like Censinet RiskOps™ can serve as a centralized hub for managing AI-related policies, risks, and tasks, ensuring the right teams address the right issues at the right time. By taking these steps, healthcare organizations can create a unified and proactive approach to AI risk management.

Conclusion

AI model drift is an unavoidable reality. Over time, most AI models lose their effectiveness, and in healthcare, failing to address this issue can lead to serious consequences - misdiagnoses, incorrect treatments, and a loss of patient trust.

The risks tied to model drift are backed by industry data, which reveal a troubling gap between the availability of AI-enabled devices and the evidence validating their performance. As Hao Guan and colleagues from Harvard Medical School have pointed out, “As these AI systems continue to be adopted, ensuring their reliability and sustained performance becomes critically important” [2].

To address these challenges, healthcare organizations must adopt tools like real-time dashboards, automated alerts, and closed-loop feedback systems. These can help detect performance issues early, well before they impact patient care. Combining statistical monitoring methods with regular retraining schedules and human-in-the-loop governance creates a solid framework for maintaining effective AI systems. This approach ties directly into the strategies discussed earlier, ensuring long-term reliability.

Solutions like Censinet RiskOps™ take this a step further by centralizing AI risk tracking and embedding drift monitoring into vendor assessments. By defining clear KPIs for model performance and leveraging human oversight, healthcare organizations can turn AI management into a strategic advantage rather than a compliance hurdle.

In the face of rapidly expanding AI-enabled workflows - expected to grow nearly eightfold by 2026 - healthcare organizations that invest in robust monitoring frameworks today will be better equipped to maintain compliance and ensure patient safety. Proactive preparation now ensures that AI can fulfill its promise without compromising care quality or trust [12].

FAQs

What challenges does AI model drift pose for healthcare organizations?

AI model drift in healthcare presents some tough challenges. One major issue is data drift - this happens when the input data feeding into the AI system starts to differ from the data it was originally trained on. Changes in clinical practices, shifts in patient demographics, or updates to treatment protocols can all contribute to this. When this happens, the model's predictions can become less accurate and less reliable over time.

There’s also label drift, where the mix of outcomes itself shifts, and concept drift, where the relationships between inputs and outputs evolve. Both make the AI’s predictions less useful. What makes these problems tricky is that performance often declines gradually, which makes the decline harder to spot. On top of that, AI models often struggle to adapt across different healthcare settings, which means their performance can vary depending on the environment they're used in.

To tackle these issues, it’s crucial to actively monitor and regularly update AI models. This ensures they stay effective and continue to align with healthcare standards.

How does involving humans in AI decision-making improve safety in healthcare?

Incorporating humans into AI decision-making - known as human-in-the-loop governance - adds a critical layer of oversight in healthcare, enhancing safety and accountability. Human experts play a key role by monitoring AI outputs, validating its decisions, and stepping in when needed. This proactive involvement helps catch and address problems early, such as model drift, which can compromise system accuracy over time.

This collaborative approach strengthens compliance with healthcare safety regulations, ensures the protection of sensitive patient information, and reinforces the dependability of AI systems. By blending human expertise with AI's capabilities, healthcare organizations can more effectively manage risks and uphold the integrity of their operations.

What are the best ways to detect and manage AI model drift in healthcare systems?

Detecting and managing AI model drift in healthcare systems demands a mix of smart tools and forward-thinking approaches. Continuous monitoring platforms play a key role by keeping an eye on model performance, spotting anomalies, and identifying data drift as it happens. Automated systems for data validation and alerts ensure that any issues are flagged quickly, minimizing disruptions.

To tackle drift effectively, it’s crucial to establish feedback loops for refining models, set up retraining protocols to address performance changes, and rely on advanced metrics to gauge accuracy and reliability. Regular monitoring and updates not only help meet healthcare standards but also protect patient data and maintain smooth operations.
