The AI Physician's Assistant: Transforming Healthcare While Managing Risk

Post Summary

AI physician's assistants enhance diagnostics, support clinical decisions, automate administrative tasks, and improve remote monitoring.

Their main benefits are better diagnostic accuracy, faster decision-making, improved chronic disease management, and reduced clinician burnout.

Their main risks are data breaches, algorithm manipulation, PHI exposure, and vulnerabilities in connected devices and third-party vendors.

Third-party risk matters because most healthcare breaches originate from vendors with outdated systems or weaker security controls.

Censinet RiskOps™ speeds vendor assessments, automates evidence review, centralizes oversight, and provides real-time AI risk dashboards.

To adopt AI safely, organizations should strengthen governance, enforce human oversight, ensure HIPAA compliance, and continuously monitor AI systems.

AI physician's assistants are changing healthcare by improving diagnostics, reducing administrative burdens, and enabling remote patient monitoring. These tools analyze large volumes of data, assist clinical decisions, and streamline workflows. However, they also introduce cybersecurity and safety risks, such as data breaches, algorithm bias, and vulnerabilities in third-party systems. Solutions like Censinet RiskOps™ help healthcare providers manage these risks while maintaining patient safety and regulatory compliance.

Key Takeaways:

Balancing AI's benefits with robust security measures is critical to ensuring safe and effective healthcare advancements.

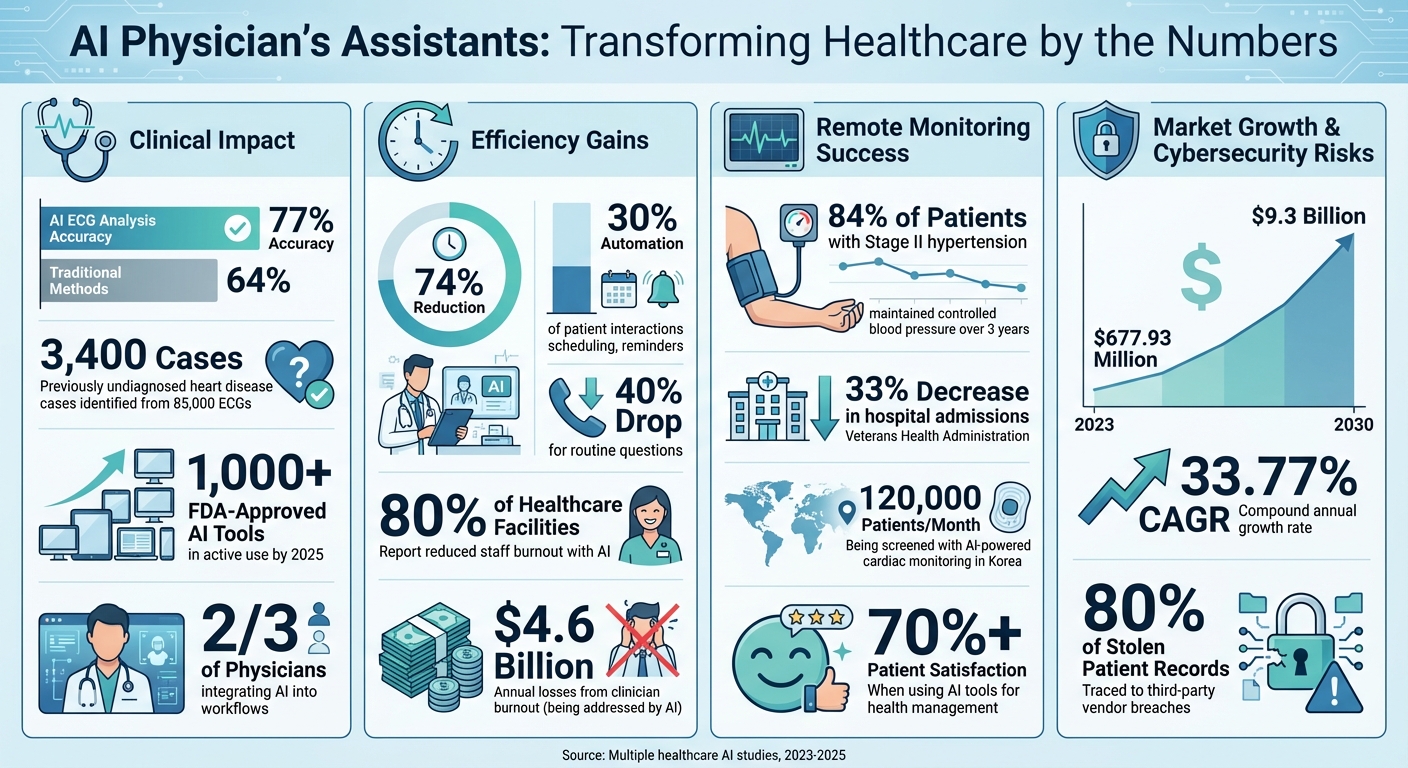

Key Statistics on Efficiency, Accuracy, and Market Growth

How AI Physician's Assistants Are Used in Healthcare

AI physician's assistants are making waves in healthcare by improving clinical decision-making, streamlining administrative tasks, and advancing remote patient monitoring. These advancements, however, come with a need for strong cybersecurity measures and compliance protocols, which are explored further in the following sections.

Clinical Decision Support and Diagnostics

AI systems have transformed how healthcare providers analyze patient data, uncovering insights that humans might miss. By processing medical histories, lab results, imaging studies, and genetic profiles, these tools offer tailored treatment recommendations based on individual patient needs [4].

For example, in 2025, Columbia University's EchoNext AI tool analyzed 85,000 ECGs, identifying 3,400 previously undiagnosed heart disease cases with an accuracy of 77%, compared to 64% using traditional methods [9]. Similarly, Cleveland Clinic implemented an AI tool in neuro-ICUs to monitor EEG data in real time, quickly flagging critical patterns like seizures [9].

AI also powers predictive analytics, helping healthcare providers anticipate potential health issues and intervene early. By 2025, over 1,000 FDA-approved AI tools were in active use, with more than two-thirds of physicians integrating AI into their workflows [9]. However, these advancements come with challenges, particularly around data privacy and regulatory compliance, which require careful management.

Administrative Tasks and Provider Support

Administrative work can be a significant burden for healthcare providers, but AI is helping to lighten the load. From scheduling appointments to transcribing conversations and processing insurance claims, AI tools are reducing time spent on paperwork [5]. In 2025, for instance, Abridge's ambient clinical intelligence system cut charting time by 74%, while HCA Healthcare's AI pilot program streamlined electronic health record (EHR) entries, alleviating clinician burnout in a system losing $4.6 billion annually due to staff fatigue [6].

A study from MIT revealed that 80% of healthcare facilities using AI experienced reduced staff burnout [11][12]. AI virtual assistants are also automating up to 30% of patient interactions - like appointment scheduling and reminders - leading to a 40% drop in call center volume for routine questions [8].

Remote Monitoring and Telehealth

AI-powered remote monitoring systems are redefining patient care by continuously analyzing data from wearables and sensors. These systems help identify early health risks, manage chronic conditions, and reduce hospital admissions [6][9].
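To make the idea of continuous analysis concrete, here is a minimal sketch of how a monitoring service might flag a sustained rise in blood pressure readings from a connected cuff. It is not taken from any product mentioned in this article: the `BPReading` and `BPMonitor` names, the window size, and the 140/90 mmHg limits are all illustrative assumptions, and a real system would route flags to a clinician rather than act on them.

```python
from collections import deque
from dataclasses import dataclass
from statistics import mean


@dataclass
class BPReading:
    systolic: int   # mmHg
    diastolic: int  # mmHg


class BPMonitor:
    """Keeps a sliding window of recent readings and flags sustained elevation."""

    def __init__(self, window: int = 5, sys_limit: int = 140, dia_limit: int = 90):
        # Limits are placeholder assumptions, not clinical guidance.
        self.readings = deque(maxlen=window)
        self.sys_limit = sys_limit
        self.dia_limit = dia_limit

    def add(self, reading: BPReading) -> bool:
        """Store a reading; return True if the window average needs clinician review."""
        self.readings.append(reading)
        if len(self.readings) < self.readings.maxlen:
            return False  # not enough data yet to judge a trend
        avg_sys = mean(r.systolic for r in self.readings)
        avg_dia = mean(r.diastolic for r in self.readings)
        return avg_sys >= self.sys_limit or avg_dia >= self.dia_limit


# Example: four uploads from a hypothetical patient, evaluated over a 3-reading window.
monitor = BPMonitor(window=3)
samples = [(128, 82), (150, 95), (155, 96), (158, 99)]
flags = [monitor.add(BPReading(s, d)) for s, d in samples]
```

Averaging over a window instead of reacting to a single reading is one simple way to reduce false alarms from one-off measurements; production systems would likely use richer models.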

One example: a JAMA study showed that 84% of patients with Stage II hypertension maintained controlled blood pressure over three years using a connected blood pressure cuff paired with a smartphone app [9][10]. During the COVID-19 pandemic, the Veterans Health Administration's remote monitoring team reported a 33% drop in hospital admissions [7]. Similarly, Livongo's connected glucometer, which automatically uploads readings to its AI platform, improved blood sugar control and medication adherence - key factors in its $18.5 billion acquisition by Teladoc [8][9].

In 2025, Samsung announced an upcoming Galaxy Watch feature that uses AI from Medical AI to screen for Left Ventricular Systolic Dysfunction (LVSD). This technology is already screening 120,000 patients monthly across 100 hospitals in Korea [9]. The global market for AI virtual assistants in healthcare reached $677.93 million in 2023 and is projected to grow to $9.3 billion by 2030, with a compound annual growth rate of 33.77% [8].

"AI is truly revolutionizing remote patient monitoring: it enhances what can be measured and analyzed remotely, yields demonstrable improvements in care, and is reshaping healthcare delivery models." – IntuitionLabs

AI assistants also provide 24/7 support for symptom checks, medication advice, and educational content, improving patient engagement and care continuity [6][8][11]. More than 70% of patients report satisfaction when using AI tools for managing health-related inquiries and appointments [8]. Platforms like HealthSnap, which integrates with over 80 EHR systems, combine cellular-enabled remote patient monitoring devices with AI insights to improve chronic disease outcomes [6].

While these advancements improve care delivery, they also emphasize the importance of addressing the cybersecurity challenges discussed in the next section.

Cybersecurity Risks in AI-Powered Healthcare

AI-driven physician's assistants are transforming healthcare by improving efficiency and decision-making. However, they also create new cybersecurity challenges, particularly by exposing sensitive patient data to a variety of potential threats. Let’s explore some of these vulnerabilities.

Threats to Protected Health Information (PHI)

AI systems handle vast amounts of Protected Health Information (PHI), which increases the likelihood of data breaches, unauthorized access, and even identity theft [1]. The complexity and ever-changing nature of AI algorithms also make it harder for patients to fully understand how their data is being used. This lack of transparency complicates the process of obtaining informed consent, adding another layer of risk [1].

AI Model Bias and Patient Safety Issues

AI algorithms, while powerful, come with their own set of vulnerabilities that can directly impact patient care and safety. The "black box" design of many AI models makes it difficult to trace how decisions are made, limiting accountability. Even worse, these systems can be targeted by adversarial attacks, where intentionally manipulated inputs lead to errors like misdiagnoses or harmful treatment recommendations.

Generative AI systems add another concern. They can sometimes produce false or unverified information - commonly referred to as "hallucinations" - which could jeopardize patient safety. Additionally, such inaccuracies may exacerbate existing disparities in healthcare, leading to unequal treatment outcomes across different patient groups [1][13][15].

External factors further intensify these risks, particularly when it comes to third-party partnerships and supply chains.

Third-Party Vendor and Supply Chain Risks

Third-party vendors are a significant weak point in healthcare cybersecurity. Alarmingly, 80% of stolen patient records can be traced back to breaches involving these external entities [13]. Many vendors operate on outdated IT systems or have weaker security measures, making them attractive targets for cybercriminals.

Supply chain attacks are another growing concern. Hackers may exploit trusted relationships by embedding malicious code into software or compromising hardware components, potentially impacting multiple healthcare facilities. Additionally, AI-enabled medical devices - like pacemakers, insulin pumps, and imaging tools - are particularly vulnerable to threats such as ransomware, denial-of-service attacks, and unauthorized changes to device functionality [1][14].

These interconnected risks highlight the need for robust cybersecurity measures to safeguard both patient data and the integrity of AI-powered healthcare systems.

Managing AI Risks in Healthcare with Censinet

Healthcare organizations need practical solutions to tackle the cybersecurity risks associated with AI. Censinet RiskOps™ offers a way to streamline risk management without compromising the efficiency of AI systems. With tools like Censinet, healthcare providers can now address these challenges head-on, ensuring robust and effective risk mitigation.

Faster Third-Party Risk Assessments with Censinet AI™

Third-party vulnerabilities in healthcare are a serious concern, making rapid assessments a necessity. Traditional assessments can take weeks to complete, but Censinet AI™ speeds up the process dramatically. Vendors can finish security questionnaires in seconds, thanks to automated features. The platform summarizes vendor evidence, documents key product integrations, identifies associated fourth-party risks, and generates detailed risk summaries. This efficiency allows healthcare organizations to assess AI-powered tools and their vendors quickly, avoiding delays that could hinder operations.

Real-Time Risk Monitoring and Benchmarking

Censinet RiskOps™ provides continuous, real-time insights into AI risks through live dashboards and automated workflows. It operates within a collaborative network of healthcare delivery organizations and over 50,000 vendors and products across the industry. This network offers a broad view of potential risks [16]. Additionally, the platform includes Cybersecurity Benchmarks, the only peer benchmarking tool in the industry, allowing organizations to compare their AI risk posture with similar institutions [16].

"Benchmarking against industry standards helps us advocate for the right resources and ensures we are leading where it matters"

This real-time monitoring helps security teams detect emerging threats and respond to vulnerabilities swiftly.

Human-in-the-Loop AI Governance

Censinet combines AI automation with critical human oversight to ensure a balanced approach. The platform supports customizable rules and workflows, allowing automation to complement - not replace - key decision-making processes. Risk teams retain control while scaling their efforts in managing cyber risks. Acting like "air traffic control" for AI governance, the system routes key findings to the appropriate stakeholders for timely review. A centralized AI risk dashboard aggregates real-time data, providing a single hub for managing policies, risks, and tasks. This setup ensures that the right teams handle the right issues at the right time, maintaining continuous oversight and accountability. By tailoring risk management strategies, Censinet helps ensure that advancements in AI do not compromise patient safety.
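The "air traffic control" pattern described above can be sketched in a few lines. This is a generic illustration of rule-based routing with a human review gate, not Censinet's implementation: the `Finding` type, the team names in `ROUTES`, and the rule that only low-severity findings may be logged automatically are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Finding:
    vendor: str
    category: str      # e.g. "phi", "device", "model"
    severity: Severity


# Hypothetical routing table: finding category -> reviewing team.
ROUTES = {
    "phi": "privacy-office",
    "device": "clinical-engineering",
    "model": "ai-governance-committee",
}
DEFAULT_TEAM = "security-risk-team"


def route(finding: Finding) -> dict:
    """Assign a finding to a team; anything above LOW waits for a human decision."""
    team = ROUTES.get(finding.category, DEFAULT_TEAM)
    needs_human = finding.severity is not Severity.LOW
    return {
        "vendor": finding.vendor,
        "assigned_to": team,
        "status": "pending_human_review" if needs_human else "auto_logged",
    }
```

Calling `route(Finding("ExampleVendor", "phi", Severity.HIGH))` assigns the finding to the privacy office and leaves it pending until a person reviews it. Keeping the rules in a plain table makes them easy for a risk team to audit and customize.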

Best Practices for AI Adoption in Healthcare

Meeting HIPAA and Regulatory Requirements

When adopting AI in healthcare, ensuring compliance with HIPAA is non-negotiable. This involves implementing strong data encryption and setting up secure storage systems for all patient information handled by AI tools [17][2][18]. HIPAA serves as a legal safeguard to protect both patients and healthcare providers.

It's also crucial to obtain explicit patient consent when AI systems record clinical conversations or use patient data for training purposes. This consent should include clear documentation that patients fully understand how their data will be used [17][3][2][18]. Additionally, AI vendors need to be transparent about how their systems work. This includes openly discussing limitations, acknowledging potential biases, and regularly reporting error rates [17][3][2].
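One way to make consent enforceable rather than just documented is to check it in code before data is used. The sketch below assumes a hypothetical `ConsentRecord` structure and purpose labels such as `"model_training"`; it is an illustration of the idea, not a HIPAA compliance mechanism or any vendor's API.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, Set


@dataclass
class ConsentRecord:
    patient_id: str
    purposes: Set[str]   # e.g. {"ambient_recording", "model_training"}
    signed_on: date      # when the patient signed the documented consent


def may_use(consents: Dict[str, ConsentRecord], patient_id: str, purpose: str) -> bool:
    """Allow a use only if the patient has a record that names this exact purpose."""
    record = consents.get(patient_id)
    return record is not None and purpose in record.purposes


# Example registry with one hypothetical patient who agreed to recording only.
consents = {
    "p-001": ConsentRecord("p-001", {"ambient_recording"}, date(2025, 3, 1)),
}
```

The check defaults to refusal: a patient with no record, or with consent for a different purpose, is excluded.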

By adhering to these regulatory requirements, healthcare organizations can establish a solid foundation for managing risks collaboratively and effectively.

Collaborative Risk Management with Censinet Connect™

Censinet Connect™ offers a platform that fosters collaboration among healthcare providers, vendors, and various teams by creating a shared network for identifying risks and vulnerabilities. This approach allows organizations to tap into collective knowledge about vendor risks, new threats, and industry-wide challenges. The platform simplifies communication between security teams, clinical staff, and external vendors, ensuring everyone stays on the same page regarding risk priorities and mitigation plans. This level of coordination plays a key role in building a robust and scalable risk management framework that prioritizes patient safety.

Scalable Risk Mitigation for Patient Safety

AI in healthcare should always support clinicians, not replace them [19][20]. To minimize errors and biases, healthcare organizations need to establish strong governance structures and maintain continuous human oversight [3][2]. Regular monitoring systems should be in place to validate AI-generated outputs, ensuring accuracy and reliability. These practices should align seamlessly with the organization’s broader cybersecurity measures to protect both clinical workflows and sensitive patient data.
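As a rough illustration of what validating AI-generated outputs might involve, the sketch below compares AI labels with clinician-confirmed labels and reports the agreement rate per patient group, so that a group where the tool performs noticeably worse stands out. The function names, the record format, and the 0.9 floor are assumptions for this example; real validation would use established metrics and statistically meaningful sample sizes.

```python
from collections import defaultdict


def agreement_by_group(records):
    """records: iterable of (group, ai_label, clinician_label) tuples."""
    totals = defaultdict(int)
    matches = defaultdict(int)
    for group, ai_label, clinician_label in records:
        totals[group] += 1
        matches[group] += int(ai_label == clinician_label)
    # Share of cases in each group where the AI agreed with the clinician.
    return {group: matches[group] / totals[group] for group in totals}


def groups_below(rates, floor=0.9):
    """Return the groups whose agreement rate falls under the chosen floor."""
    return sorted(group for group, rate in rates.items() if rate < floor)


# Made-up sample data purely to show the mechanics.
sample = [
    ("A", "positive", "positive"),
    ("A", "negative", "negative"),
    ("B", "positive", "negative"),
    ("B", "negative", "negative"),
]
rates = agreement_by_group(sample)
flagged = groups_below(rates)
```

A flagged group is a prompt for human investigation, not an automatic verdict about the model.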

Transparency is another critical element. Patients should be informed whenever AI tools are involved in their care, and any AI-generated content should be properly documented in medical records. This not only builds trust but also ensures accountability in the use of AI technologies.

Conclusion

AI-powered physician assistants are transforming healthcare by enhancing clinical decision-making and simplifying workflows, allowing providers to dedicate more time to patient care. This shift has the potential to lead to better health outcomes and more efficient care delivery [3][20].

However, the rapid integration of AI into healthcare comes with its own set of challenges. One pressing concern is that the speed of AI adoption often surpasses the necessary validation and oversight processes [17]. Additionally, healthcare organizations are increasingly vulnerable to sophisticated cyberattacks, including those leveraging AI, making the sector a prime target for malicious activities [13][21].

The path forward requires a delicate balance between embracing innovation and ensuring security. Strong governance structures, continuous human oversight, and adherence to regulations like HIPAA are essential. Solutions like Censinet RiskOps™ offer a way for healthcare organizations to tackle these challenges effectively, enabling them to leverage AI's capabilities while maintaining robust cybersecurity. This balance is critical for delivering patient care that is both innovative and secure.

FAQs

How do AI-powered physician assistants enhance diagnostic accuracy in healthcare?

AI-driven physician assistants are transforming diagnostics by analyzing extensive medical data, spotting patterns, and providing insights grounded in research. They excel at catching subtle indicators of diseases that human eyes might miss, reducing errors and boosting patient care.

By blending sophisticated algorithms with medical knowledge, these tools empower healthcare professionals to make faster, more accurate diagnoses, enhancing both the quality and efficiency of care.

What are the biggest cybersecurity risks of using AI in healthcare?

AI's role in healthcare brings along some serious cybersecurity challenges that demand attention. Among the most pressing concerns are data breaches and privacy violations, which can expose highly sensitive patient information. There's also the danger of unauthorized access to medical devices or systems, which could directly jeopardize patient safety.

Other risks include adversarial attacks, such as data poisoning or tampering with AI models. Cybercriminals might also deploy ransomware or malware to target healthcare networks. On top of that, phishing scams and attacks that exploit weaknesses in interconnected medical devices can disrupt operations and threaten patient care. Tackling these challenges calls for strong security protocols and constant vigilance to safeguard both patients and healthcare systems.

How does Censinet support healthcare organizations in managing AI-related risks?

Censinet supports healthcare organizations in tackling AI-related challenges by providing customized solutions in risk management, cybersecurity, and compliance. These services help ensure that AI tools are deployed securely, safeguarding sensitive information and aligning with regulatory standards.

By prioritizing data protection, security measures, and compliance with healthcare regulations, Censinet empowers organizations to adopt AI technologies with confidence, maintaining both patient trust and safety throughout the process.

Related Blog Posts

- The AI-Augmented Risk Assessor: How Technology is Redefining Professional Roles in 2025

- The Healthcare AI Paradox: Better Outcomes, New Risks

- Clinical Intelligence: Using AI to Improve Patient Care While Managing Risk

- The Future of Medical Practice: AI-Augmented Clinicians and Risk Management

Key Points:

How do AI physician assistants improve diagnostics and clinical decision-making?

- Analyze large datasets such as ECGs, labs, imaging, and genetics.

- Identify conditions earlier, including heart disease risk and neurological events.

- Support personalized treatment by comparing patients to similar cases.

- Reduce manual workload, allowing clinicians to focus on care.

What administrative burdens does AI reduce for healthcare providers?

- Automates charting, clinical note drafting, and EHR updates.

- Handles scheduling and reminders, lowering call center volume.

- Streamlines prior authorizations and claims processing.

- Cuts burnout, with reports showing up to 74% reduction in documentation time.

How is AI transforming remote patient monitoring and telehealth?

- Continuous vitals tracking through wearables and sensors.

- Early detection of hypertension, arrhythmias, and chronic disease deterioration.

- Improved adherence with connected glucometers and blood pressure cuffs.

- Population‑level screening, including LVSD screening via smartwatches.

What cybersecurity risks arise from AI-powered physician assistants?

- PHI exposure through data leakage or unauthorized access.

- Adversarial manipulation of AI models leading to misdiagnosis.

- Third‑party vendor weaknesses, linked to most healthcare breaches.

- Device vulnerabilities, including ransomware risks in IoT medical equipment.

How does Censinet RiskOps™ strengthen AI risk governance?

- Automates third‑party assessments that traditionally take weeks, with vendors completing security questionnaires in seconds.

- Summarizes vendor evidence and identifies fourth‑party dependencies.

- Provides real‑time monitoring across 50,000+ vendors and products.

- Maintains human oversight, routing findings to the correct reviewers.

What best practices support safe adoption of AI assistants in healthcare?

- Meet HIPAA requirements through encryption, consent, and secure storage.

- Validate AI outputs with diverse datasets to detect bias.

- Inform patients when AI tools participate in care.

- Establish governance committees and ongoing monitoring workflows.