AI-Powered Drug Discovery Vendor Risk: Research Data Security and IP Protection

Post Summary

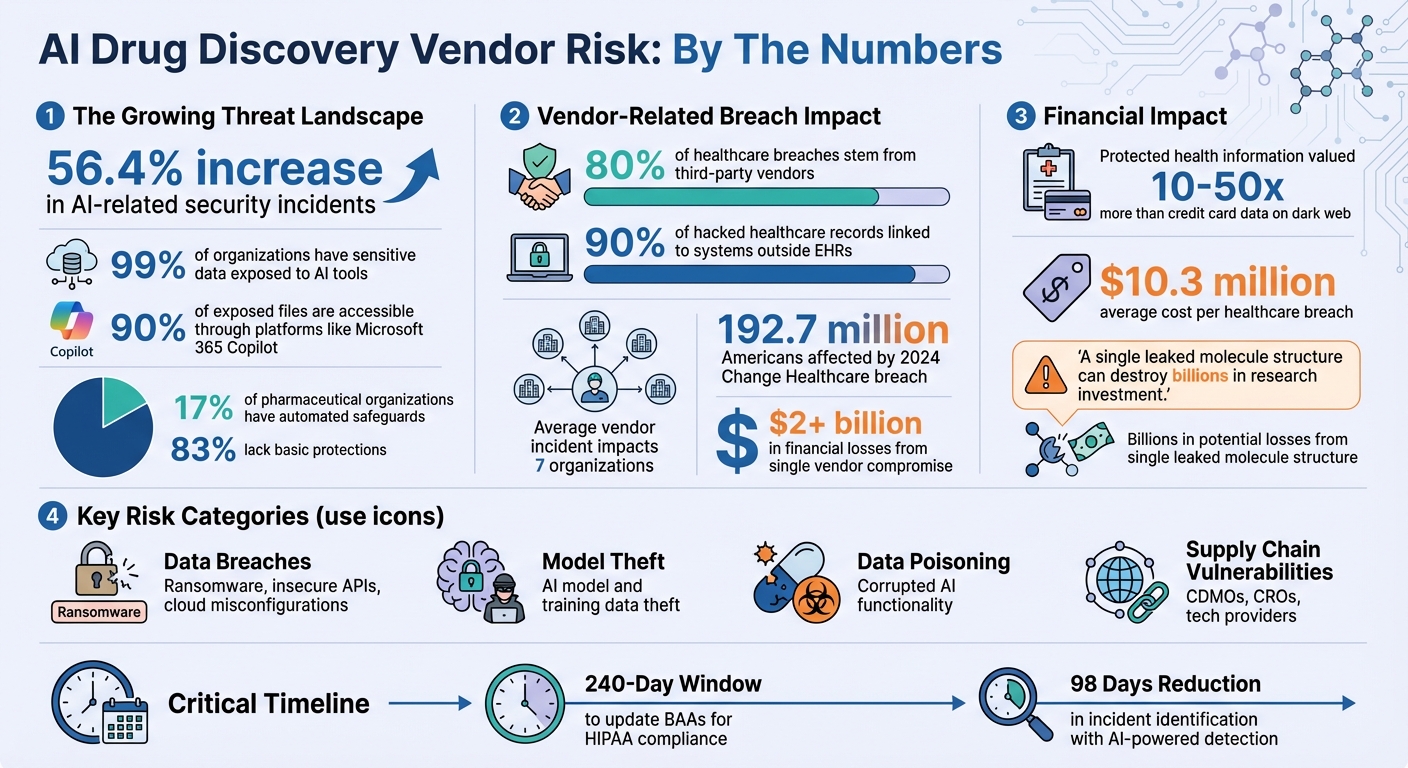

AI is reshaping pharmaceutical research, but it also introduces new risks. Sensitive data such as molecular structures and clinical trial results is both highly valuable and a prime target for cyberattacks. With AI-related security incidents up 56.4% in a single year, safeguarding research data and intellectual property (IP) is essential. The challenge grows as organizations rely on third-party vendors, each of which adds exposure to potential breaches.

Key Takeaways:

- Data Risks: Leaked molecular structures or stolen training data can lead to financial and competitive losses.

- Cyber Threats: Common risks include ransomware, data poisoning, and model theft.

- Vendor Challenges: Third- and fourth-party vendors expand the attack surface, making security harder to manage.

- Solutions: Strong governance, technical safeguards like encryption, and continuous risk monitoring are critical.

Failing to address these risks could result in billions of dollars in losses, regulatory penalties, and reputational damage. A proactive approach to vendor risk management helps keep pharmaceutical innovations secure.

[Figure: AI Drug Discovery Vendor Risk Statistics and Key Threats]

Cybersecurity and IP Risks from AI Drug Discovery Vendors

AI drug discovery vendors open the door to a range of cybersecurity threats, putting valuable pharmaceutical research at risk. Protecting this research is critical, especially in a field where "a single leaked molecule structure can destroy billions in research investment" [3]. Addressing these risks requires targeted strategies to secure both data and intellectual property (IP) throughout the drug discovery process.

Research Data Breaches and Weaknesses in Security

AI platforms that handle sensitive pharmaceutical data are prime targets for cyberattacks. Ransomware, insecure APIs, misconfigured cloud storage, and lax access controls can all leave sensitive data exposed [1][2]. The scale of the problem is alarming: 99% of organizations have sensitive data exposed to AI tools, and 90% of sensitive files are accessible through AI assistants such as Microsoft 365 Copilot. Yet only 17% of pharmaceutical organizations have implemented automated safeguards, leaving 83% without this basic protection [3].

The data at risk includes proprietary molecular structures, clinical trial results, manufacturing processes, and patient health information. Each of these represents not just massive financial investments but also critical competitive advantages. Once compromised, this information can be exploited, leading to significant financial and reputational damage.

Theft of AI Models and Training Data

Cyberattacks don’t stop at stealing data - they also target the AI models themselves. These proprietary models and their training data are valuable intellectual property, representing years of development and substantial investment. When stolen, they can be reverse-engineered, allowing attackers to replicate drug discovery capabilities without incurring the original costs [1][6].

One particularly concerning tactic is the model inversion attack. In this attack, adversaries query or inspect a trained model to reconstruct sensitive details of the data it was trained on. This can expose proprietary molecular structures and clinical insights, jeopardizing both current and future research. Beyond the immediate financial loss, such theft undermines licensing strategies and damages partnerships, with long-term repercussions for the affected organizations.

Data Poisoning and Model Integrity Threats

Data poisoning and other model integrity attacks strike at the heart of AI systems, corrupting their functionality. These attacks can lead to flawed data analysis and compromised decision-making, with potentially disastrous consequences for drug discovery and production [1][5][6].

For example, adversarial data poisoning can degrade the performance of quality-control models used in manufacturing. That raises the risk of releasing defective products or submitting incorrect regulatory filings. Related attacks target a model's inputs rather than its training data. A study published in Nature Communications in February 2025, "Prompt Injection Attacks on Vision Language Models in Oncology," showed how prompt injection can compromise medical AI applications [5]. Such attacks can ripple through the research and production phases, endangering patient safety and scientific integrity.

Supply Chain and Vendor-Related Risks

The pharmaceutical industry relies heavily on partnerships with contract development and manufacturing organizations (CDMOs), contract research organizations (CROs), academic institutions, and technology providers. While these collaborations are essential, they also introduce new vulnerabilities [3]. Weaknesses in external vendors’ systems or software can serve as entry points for cyberattacks, putting sensitive pharmaceutical data at risk [9].

For instance, a security flaw in a vendor’s AI software might expose proprietary data or create opportunities for attackers to infiltrate interconnected systems [9]. The challenge lies in monitoring these complex relationships and securing every link in the supply chain. Without robust protections, these vulnerabilities can jeopardize both research investments and competitive positioning [7].

Addressing these risks demands a comprehensive approach that prioritizes security across the entire network of partners and vendors, ensuring that research assets remain protected at every stage.

Governance and Risk Management for AI Vendors

Effective governance begins with identifying what needs protection and determining ownership. Here's a stark reality: 80% of healthcare breaches stem from third-party vendors, and 90% of hacked healthcare records are linked to systems outside electronic health records, often through vendor-controlled infrastructure [10]. These numbers highlight the necessity for organizations working with AI drug discovery vendors to implement structured governance frameworks. These frameworks guide efforts to assess and mitigate vendor-related vulnerabilities effectively.

Defining Data Classification and IP Ownership

Consistent data classification is the foundation, and automation makes that consistency achievable at scale. Yet many pharmaceutical companies still lack automated controls to prevent AI-related data leaks [3]. Organizations should sort their data into distinct categories. Examples include electronic protected health information (ePHI), proprietary molecular structures, clinical trial results, manufacturing processes, and patient records. Each category should carry its own protection measures and handling protocols.
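Automated classification can start as simply as rule-based tagging. The Python sketch below uses hypothetical regex rules for a few of the categories above. The category names, patterns, handling strings, and the `classify` helper are illustrative assumptions, not a production classifier or any vendor's product. The only pattern based on a real format is the ClinicalTrials.gov identifier ("NCT" followed by eight digits).

```python
import re

# Hypothetical detection rules (illustrative only). Production classifiers
# typically combine dictionaries, document metadata, and ML models.
RULES: list[tuple[str, re.Pattern[str]]] = [
    # Patient identifiers such as "MRN 123456" or "Patient ID: 48213".
    ("ePHI", re.compile(r"\b(MRN|patient id)\s*[:#]?\s*\d+", re.IGNORECASE)),
    # Structures explicitly labeled as SMILES strings.
    ("molecular_structure", re.compile(r"\bSMILES\s*:", re.IGNORECASE)),
    # ClinicalTrials.gov identifiers: "NCT" followed by eight digits.
    ("clinical_trial", re.compile(r"\bNCT\d{8}\b")),
    # Manufacturing documentation keywords.
    ("manufacturing_process", re.compile(r"\bbatch record\b", re.IGNORECASE)),
]

# Hypothetical handling protocol attached to each category.
HANDLING = {
    "ePHI": "encrypt, log all access, BAA-covered vendors only",
    "molecular_structure": "encrypt, block external AI tools",
    "clinical_trial": "encrypt, restrict to study team",
    "manufacturing_process": "encrypt, restrict to validated systems",
}


def classify(text: str) -> list[str]:
    """Return every category whose rule matches; an empty list means unclassified."""
    return [label for label, pattern in RULES if pattern.search(text)]


if __name__ == "__main__":
    doc = "Patient ID: 48213 enrolled in NCT01234567"
    for label in classify(doc):
        print(label, "->", HANDLING[label])
```

A single document can match several categories. Applying the strictest handling rule among its matches is one reasonable design choice.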

Defining intellectual property (IP) ownership for AI-generated compounds adds another layer of complexity. Patent law requires a "significant human contribution" for a new drug to qualify for patent protection [12]. Contracts must therefore spell out who owns AI-generated outputs and what level of human involvement is required. For example, antibodies and polypeptides, whose synthesis methods are commonly known, carry higher IP risk because courts might view the AI as having completed the "conception" of the invention. Small molecule drugs, by contrast, generally carry lower IP risk because their complexity usually requires substantial input from synthetic organic chemists [12]. Clear data categorization lays the groundwork for strong contractual and policy frameworks.

Contract and Policy Requirements

Enforceable contracts put the principles of data classification and IP ownership into practice. Business Associate Agreements (BAAs) should go beyond standard compliance language. They should require technical controls such as encryption, multi-factor authentication (MFA), and network segmentation. Under the proposed update to the HIPAA Security Rule, organizations would have a 240-day window to bring existing BAAs into line with the new requirements [10]. These agreements must also include breach notification protocols, especially since protected health information is valued at 10 to 50 times more than credit card data on dark web marketplaces [10].

Fourth-party risk management - the vendors of your vendors - requires explicit focus in contracts. Primary vendors must ensure their subcontractors uphold equivalent security standards, notify you of any changes, and maintain contingency plans for supply chain disruptions [10]. The 2024 Change Healthcare breach, which impacted 192.7 million Americans and resulted in over $2 billion in financial losses, underscores how a single vendor compromise can ripple through the healthcare system [10]. Contracts should also outline roles and responsibilities for incident response, establish escalation pathways, and mandate joint exercises to test coordination across organizations.
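Contract clauses about fourth parties assume you know who those fourth parties are. The Python sketch below assumes each vendor discloses its subcontractors as a simple mapping. The vendor names and the `downstream_parties` helper are hypothetical. The function walks that map breadth-first and labels each party by tier: 3 for a direct vendor (third party), 4 for a fourth party, and so on.

```python
from collections import deque

# Hypothetical subcontractor disclosures: vendor -> vendors it depends on.
VENDOR_MAP: dict[str, list[str]] = {
    "ai_discovery_vendor": ["cloud_host", "annotation_service"],
    "cro_partner": ["cloud_host"],
    "cloud_host": ["backup_provider"],
    "annotation_service": [],
    "backup_provider": [],
}


def downstream_parties(direct_vendors: list[str],
                       vendor_map: dict[str, list[str]]) -> dict[str, int]:
    """Breadth-first walk returning {party: tier}.

    Tier 3 is a direct (third-party) vendor, tier 4 a fourth party, and so on.
    Because BFS visits parties in order of distance, each party keeps its
    lowest tier, and the visited check also prevents infinite loops on cycles.
    """
    tiers: dict[str, int] = {}
    queue = deque((vendor, 3) for vendor in direct_vendors)
    while queue:
        party, tier = queue.popleft()
        if party in tiers:  # already reached by an equal or shorter path
            continue
        tiers[party] = tier
        for sub in vendor_map.get(party, []):
            queue.append((sub, tier + 1))
    return tiers


if __name__ == "__main__":
    result = downstream_parties(["ai_discovery_vendor", "cro_partner"], VENDOR_MAP)
    for party, tier in result.items():
        print(f"tier {tier}: {party}")
```

Some parties are reachable through several direct vendors, like `cloud_host` here. These are concentration points worth prioritizing when reviewing subcontractor clauses.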

Vendor Risk Assessment and Monitoring Processes

To address vendor vulnerabilities, organizations need thorough risk assessments and continuous monitoring. These assessments should evaluate both a vendor's current security posture and ongoing risk indicators. Tailor security questionnaires to focus on healthcare-specific risks, including technical controls, incident response capabilities, and the management of fourth-party vendors [10]. Instead of relying solely on traditional risk matrices, adopt multidimensional approaches that consider factors like data sensitivity, system criticality, access levels, and geographic dispersion.
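To illustrate the multidimensional approach, here is a Python sketch that combines the four factors above into one score from 0 to 100. The 1-to-5 rating scale, the weights, and the `risk_score` and `critical_factors` helpers are all hypothetical; each organization would calibrate its own. One design caveat: a weighted average can mask a single extreme factor, so the sketch also reports any factor rated at the maximum.

```python
from dataclasses import dataclass

# Hypothetical weights (they sum to 1.0); each organization would calibrate its own.
WEIGHTS = {
    "data_sensitivity": 0.40,
    "system_criticality": 0.25,
    "access_level": 0.20,
    "geographic_dispersion": 0.15,
}


@dataclass
class VendorProfile:
    name: str
    # Each factor is rated by the assessor on a 1 (low) to 5 (high) scale.
    data_sensitivity: int
    system_criticality: int
    access_level: int
    geographic_dispersion: int


def risk_score(v: VendorProfile) -> float:
    """Weighted average of the factor ratings, rescaled from 1..5 to 0..100."""
    for factor in WEIGHTS:
        rating = getattr(v, factor)
        if not 1 <= rating <= 5:
            raise ValueError(f"{factor} must be between 1 and 5, got {rating}")
    weighted = sum(w * getattr(v, f) for f, w in WEIGHTS.items())
    return round((weighted - 1) / 4 * 100, 1)


def critical_factors(v: VendorProfile) -> list[str]:
    """Factors at the top rating, which an average alone could hide."""
    return [f for f in WEIGHTS if getattr(v, f) == 5]


if __name__ == "__main__":
    vendor = VendorProfile("ExampleAI", data_sensitivity=5, system_criticality=4,
                           access_level=3, geographic_dispersion=2)
    print(vendor.name, risk_score(vendor), critical_factors(vendor))
```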

Continuous monitoring involves more than periodic checks - it requires real-time threat detection. Use tools for real-time network, API, and anomaly monitoring, and consolidate findings in a centralized risk register to track AI-specific risks [10][11]. Testing AI models for robustness is crucial, particularly under attack conditions. Techniques like adversarial example generation and boundary testing can help identify vulnerabilities, while routine checks for bias or data drift ensure models maintain their integrity [10][11].
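Routine drift checks can be automated with a standard statistic such as the population stability index (PSI). PSI compares how a feature or model score is distributed in current data against a baseline. The Python sketch below is one way to compute it. The bin edges, the small floor for empty bins, and the alert threshold of roughly 0.2 are common conventions assumed here, not values from this article.

```python
import math
from bisect import bisect_right


def bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Share of values in each bin; `edges` are sorted interior boundaries."""
    counts = [0] * (len(edges) + 1)
    for x in values:
        counts[bisect_right(edges, x)] += 1
    total = len(values)
    # Floor empty bins at a tiny share so the log term below stays finite.
    return [max(c / total, 1e-6) for c in counts]


def psi(baseline: list[float], current: list[float], edges: list[float]) -> float:
    """Population stability index: sum over bins of (a - e) * ln(a / e)."""
    e = bin_shares(baseline, edges)
    a = bin_shares(current, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb alert level

if __name__ == "__main__":
    baseline = [float(x) for x in range(10)]  # e.g. last quarter's model scores
    current = [8.0, 9.0, 9.0, 8.5, 9.5, 9.0, 8.0, 9.0, 9.5, 8.5]
    score = psi(baseline, current, edges=[2.5, 5.0, 7.5])
    print(f"PSI={score:.3f}", "drift" if score > DRIFT_THRESHOLD else "stable")
```

A PSI check catches distribution shifts. It does not replace the adversarial and boundary testing described above, which probe how the model behaves on deliberately crafted inputs.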

Technical Controls for Data and Model Protection

Technical controls are how governance rules get enforced in practice. Yet only 17% of pharmaceutical organizations have automated protections against AI-driven data leaks, even as AI-related security incidents have risen 56.4%. That gap makes layered defenses urgent: controls that secure data at rest, in transit, and during processing [1][3].

Access Controls and System Architecture

Layered defenses begin with robust access controls and secure system architecture. AI-powered Identity and Access Management (IAM) systems serve as a strong first line of defense. These systems actively monitor access, block suspicious requests, require additional verification for anomalies, and revoke outdated permissions in real time [14]. Multi-factor authentication (MFA) should be mandatory for anyone accessing AI drug discovery platforms, especially when dealing with sensitive data like proprietary molecular structures or clinical trial information.
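Revoking outdated permissions can be expressed as a periodic access review. This Python sketch assumes a hypothetical `Grant` record with a last-used timestamp and an MFA flag. The 90-day idle limit is an illustrative policy choice, not a standard. A real IAM system would carry out the revocation through its own APIs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Grant:
    user: str
    resource: str          # e.g. "molecular-structure-repo" (hypothetical)
    last_used: datetime    # timezone-aware timestamp of last access
    mfa_enrolled: bool


def review_grants(grants: list[Grant], now: datetime,
                  max_idle: timedelta = timedelta(days=90)
                  ) -> tuple[list[Grant], list[Grant]]:
    """Split grants into (keep, revoke).

    A grant is flagged for revocation if it has sat unused longer than
    `max_idle`, or if its holder is not enrolled in MFA.
    """
    keep: list[Grant] = []
    revoke: list[Grant] = []
    for g in grants:
        stale = now - g.last_used > max_idle
        if stale or not g.mfa_enrolled:
            revoke.append(g)
        else:
            keep.append(g)
    return keep, revoke


if __name__ == "__main__":
    now = datetime(2025, 6, 1, tzinfo=timezone.utc)
    grants = [
        Grant("alice", "molecular-structure-repo", now - timedelta(days=10), True),
        Grant("bob", "molecular-structure-repo", now - timedelta(days=200), True),
    ]
    keep, revoke = review_grants(grants, now)
    print("revoke:", [g.user for g in revoke])
```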

Encryption is equally essential. It should protect data at rest, data in transit between vendors and internal platforms, and data in use during AI model training. Real-time scanning of data flows helps keep sensitive information, such as molecular structures, patient details, and clinical results, from reaching unauthorized AI platforms [3]. Audit trails that log all access to electronic protected health information (ePHI) not only meet HIPAA requirements but also provide critical visibility into potential security breaches [3].

Data Integrity and Tracking

While access controls are vital, maintaining data integrity is equally important. Blockchain technology can help here, particularly in clinical data management. Its decentralized, immutable, and transparent design lets organizations track sensitive research data and detect unauthorized changes [2]. Permissioned blockchain networks allow authorized users to monitor data modifications, improving traceability and supporting compliance with regulatory standards [2].
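The tamper-evidence that makes blockchain attractive here comes from hash chaining: each entry's hash also covers the previous entry's hash. The Python sketch below shows only that core idea, as a hash-chained audit log. It is a deliberate simplification, not a blockchain. It has no distributed consensus and no permissioning, and the record fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    # sort_keys makes the serialization deterministic for identical records.
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], record: dict) -> None:
    """Append a record whose hash covers its content and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash,
                "hash": _entry_hash(prev_hash, record)})


def verify(log: list[dict]) -> bool:
    """Recompute the chain and report whether it is intact.

    Editing, reordering, or removing an entry from the middle breaks the
    chain. Truncating entries from the end does not, so the latest hash
    should also be stored somewhere the log's administrators cannot alter.
    """
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log: list[dict] = []
    append_entry(log, {"user": "alice", "action": "read", "dataset": "trial-042"})
    append_entry(log, {"user": "bob", "action": "update", "dataset": "trial-042"})
    print(verify(log))
    log[0]["record"]["action"] = "delete"  # simulate tampering
    print(verify(log))
```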

Frank Balonis, Chief Information Security Officer at Kiteworks, emphasized: "HIPAA demands comprehensive audit trails for all electronic protected health information (ePHI) access, yet companies cannot track what flows into shadow AI tools" [3].

To close this gap, organizations should use validated systems with electronic signatures that meet FDA 21 CFR Part 11 requirements [3]. Continuous monitoring solutions, such as AI data gateways and unified governance platforms, track every interaction with data across cloud services, on-premises systems, and shadow IT environments. These tools detect unusual behavior and provide the transparency needed to maintain data integrity [3][15]. Automated data classification systems further reduce the risk of unauthorized exposure by identifying and categorizing sensitive information in real time [3].

Model and Algorithm Protection Methods

Securing the AI models themselves requires specialized strategies. Adversarial resilience techniques, such as simulation-based protocols and AI-driven data validation, can detect and counteract data poisoning by flagging data that deviates from established norms [5]. For large language models used in drug discovery, several safeguards help mitigate prompt injection attacks and other unintended behaviors [5]. These include instruction-following filters, output monitoring, and reinforcement learning from human feedback (RLHF).
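As one simple way to flag data that deviates from established norms, the Python sketch below screens a batch of incoming values, such as assay readings headed for a training set. It uses the modified z-score of Iglewicz and Hoaglin. That score is built on the median and the median absolute deviation (MAD), so the outliers themselves do not distort the baseline. This is an assumed, deliberately basic technique. It catches crude injected values but not subtle, clean-label poisoning, which needs provenance checks and model-level testing.

```python
from statistics import median


def flag_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Indices whose modified z-score, 0.6745 * (x - median) / MAD, exceeds threshold."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # At least half the values equal the median, so any deviation is unusual.
        return [i for i, v in enumerate(values) if v != med]
    return [i for i, v in enumerate(values)
            if abs(0.6745 * (v - med) / mad) > threshold]


if __name__ == "__main__":
    batch = [5.1, 5.3, 4.9, 5.0, 5.2, 5.1, 12.0]  # hypothetical assay readings
    print("suspicious rows:", flag_outliers(batch))
```

Flagged rows would go to a human reviewer rather than being dropped automatically, since a genuine but unusual result can look identical to an injected one.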

The opaque nature of AI models, often called the "black box" problem, adds another layer of complexity. Yulu Fan and colleagues from the College of Pharmacy, Shanghai University of Medicine & Health Sciences, noted:

"The decision process of AI models is often opaque, making it complex to verify the reliability of AI-proposed drug designs or research results. This 'black-box' characteristic may cause researchers difficulty in understanding the R&D logic and make regulators struggle to assess compliance and scientific validity" [4].

To address these challenges, organizations should prioritize algorithmic transparency. When model logic is open to inspection, researchers and regulators can better understand how a model reaches its outputs. They can also verify its reliability and evaluate compliance with scientific and regulatory standards [4][16].


Implementing Continuous AI Vendor Risk Management

Continuous risk management requires real-time vigilance. A significant share of security breaches originate with third-party vendors, and vendor-related incidents typically affect multiple organizations, often as many as seven [10]. Fragmented approaches simply don't work. Healthcare organizations need a structured way to identify vulnerabilities before they snowball, especially since most lack automated defenses to stop sensitive data from leaking through AI tools [3]. This ongoing monitoring builds on the technical controls already discussed and keeps vendor risk management dynamic and proactive.

Embedding Risk Checks in Research and Procurement

Risk assessments should be an integral part of every vendor relationship. For example, when research teams evaluate AI platforms for drug discovery, security questionnaires should address AI-specific risks like data poisoning, model integrity threats, and exposures from fourth-party vendors. Requests for proposals (RFPs) should demand robust safeguards, including encryption, access controls, and incident response protocols. Contract renewals are another key moment to reassess vendor security, especially given the 56.4% rise in AI-related security incidents highlighted in Stanford's 2025 AI Index Report [3]. At a minimum, risk assessments should be updated annually or whenever significant changes occur in business operations or technology infrastructure [17].
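The reassessment triggers above are an annual minimum, a significant change, and an upcoming contract renewal. Together they reduce to a small rule. The Python sketch below assumes a hypothetical `reassessment_due` helper and a 60-day pre-renewal window; the article does not specify that window.

```python
from datetime import date, timedelta
from typing import Optional


def reassessment_due(last_assessed: date, today: date,
                     significant_change: bool = False,
                     renewal_date: Optional[date] = None,
                     max_age: timedelta = timedelta(days=365),
                     renewal_window: timedelta = timedelta(days=60)) -> bool:
    """Return True if a vendor's risk assessment should be refreshed now."""
    # Any significant change in operations or infrastructure triggers a review.
    if significant_change:
        return True
    # Annual minimum: the last assessment is a year old or older.
    if today - last_assessed >= max_age:
        return True
    # Upcoming renewal: reassess so findings can shape the new contract terms.
    if renewal_date is not None and today <= renewal_date <= today + renewal_window:
        return True
    return False


if __name__ == "__main__":
    print(reassessment_due(date(2025, 1, 1), date(2025, 6, 1),
                           renewal_date=date(2025, 7, 15)))
```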

Using Platforms to Scale Risk Management

As manual processes become unmanageable, technology platforms are critical for scaling vendor oversight. The reality is stark: 99% of organizations have sensitive data at risk due to AI tools [3]. Platforms like Censinet RiskOps™ simplify vendor risk management by automating security questionnaires, monitoring technical controls, and tracking fourth-party relationships. Censinet AI™ speeds up assessments by enabling vendors to complete questionnaires in seconds, with automated evidence summaries and risk reports. This blend of automation and human oversight lets risk teams handle growing threats without hiring additional staff, maintaining efficiency while keeping evaluations thorough.

Tracking and Reporting Vendor Risk Metrics

Effective vendor governance depends on tracking the right metrics so that executives get actionable insight into vendor risk trends. Key metrics include:

- time to remediation for vulnerabilities;
- incident counts by vendor and risk type;
- compliance gaps against HIPAA, FDA 21 CFR Part 11, and emerging AI-specific regulations.

AI-powered threat detection systems have been reported to cut incident identification times by 98 days [10], so organizations should also measure the impact of their own automated monitoring tools. Dashboards should highlight vendors with access to the most sensitive data, those with high risk scores, and any vendors experiencing security incidents. Regular reports to boards and executives keep vendor risk management a priority, especially when healthcare breach costs average $10.3 million per incident [10].
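Two of the metrics named above, time to remediation and incident counts by vendor and risk type, are simple to compute once findings are recorded consistently. The Python sketch below assumes a hypothetical `Finding` record. A real risk register or platform would supply this data through its own export or API.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Optional


@dataclass
class Finding:
    vendor: str
    risk_type: str                 # e.g. "access_control", "encryption"
    opened: date
    closed: Optional[date] = None  # None while remediation is pending


def mean_days_to_remediate(findings: list[Finding]) -> Optional[float]:
    """Average days from open to close across remediated findings (None if none closed)."""
    durations = [(f.closed - f.opened).days for f in findings if f.closed is not None]
    return mean(durations) if durations else None


def counts_by_vendor_and_type(findings: list[Finding]) -> Counter:
    """Number of findings per (vendor, risk_type) pair."""
    return Counter((f.vendor, f.risk_type) for f in findings)


def open_findings_by_vendor(findings: list[Finding]) -> Counter:
    """Unremediated findings per vendor, useful for a dashboard watch list."""
    return Counter(f.vendor for f in findings if f.closed is None)


if __name__ == "__main__":
    findings = [
        Finding("VendorA", "access_control", date(2025, 1, 1), date(2025, 1, 11)),
        Finding("VendorA", "encryption", date(2025, 2, 1), date(2025, 2, 21)),
        Finding("VendorB", "access_control", date(2025, 3, 1)),
    ]
    print("mean days to remediate:", mean_days_to_remediate(findings))
    print("open by vendor:", dict(open_findings_by_vendor(findings)))
```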

Conclusion

AI-powered drug discovery has transformed the pace of research and the delivery of new treatments to the market. But these advancements come with significant cybersecurity challenges. Vendor-related breaches are a major concern, as even a single leaked molecule structure could wipe out billions of dollars in research investments [10][3].

To address these risks, organizations need a focused and effective strategy to protect their research data and intellectual property. This can be achieved through a three-part approach:

- Governance frameworks: Establish clear data classifications, define intellectual property ownership, and set strict contract requirements to hold vendors accountable.

- Technical controls: Implement tools like automated data classification, AI-driven data gateways, and advanced anomaly detection to shield against unauthorized access and data leaks.

- Continuous risk management: Adapt protections to evolving threats by using platforms like Censinet RiskOps™ to automate risk assessments and monitor vendor relationships at scale.

Failing to manage vendor risks properly can have far-reaching consequences. Beyond the immediate costs of a breach, organizations face potential fines for violating HIPAA, FDA regulations, and the GDPR. Executives could even face criminal liability, and the resulting reputational damage could erode patient trust [8][3][13].

By adopting these measures, healthcare organizations can avoid devastating losses and build a secure foundation for innovation. Tackling risks from third- and fourth-party vendors head-on allows pharmaceutical companies to fully leverage AI's capabilities while minimizing liabilities [11][6]. This not only strengthens supply chain resilience but also protects operational integrity and safeguards the competitive edge needed to drive global biopharmaceutical advancements.

The choice is clear: invest in robust vendor risk management or face the potentially unaffordable consequences. With the right mix of governance, technical safeguards, and ongoing monitoring, healthcare organizations can protect their most critical assets and continue to push the boundaries of medicine.

FAQs

How can pharmaceutical companies safeguard their AI models from theft or reverse engineering?

Pharmaceutical companies can protect their AI models with encryption and strict access controls, which make models and their underlying data harder to exfiltrate. Model watermarking adds a further layer: it helps prove ownership if a stolen copy surfaces. Regular security audits can help identify and fix vulnerabilities before they become serious issues. So can monitoring for unusual activity, such as bulk or systematic querying of a model.

To go further, organizations can adopt more advanced approaches:

- federated learning, which trains models without pooling raw data in one place;
- homomorphic encryption, which allows computation on encrypted data;
- secure execution environments for running models.

These approaches protect intellectual property and make the models harder to reverse-engineer, keeping proprietary advancements secure.

How can organizations reduce cybersecurity risks when working with AI-powered drug discovery vendors?

When collaborating with AI-driven drug discovery vendors, minimizing cybersecurity risks requires a proactive strategy. Start by setting up automated security controls, performing frequent vendor risk evaluations, and enforcing strict access protocols for sensitive information. It's equally important to encrypt data during transmission and storage, while maintaining comprehensive audit logs to track all activities.

Another key step is promoting a strong security mindset among employees through focused training programs to reduce the chances of human error. Carefully review vendor security measures to ensure they align with healthcare regulations and safeguard your organization's intellectual property and research data effectively.

Why is it important to define intellectual property (IP) ownership in AI-driven drug discovery?

Defining intellectual property (IP) ownership in AI-driven drug discovery is crucial for ensuring legal clarity, protecting proprietary research, and avoiding disputes. It determines who holds the rights to discoveries made by AI systems - an important factor for staying competitive and securing advancements down the line.

Establishing clear IP ownership also helps organizations align with U.S. regulations and honor contractual obligations, which minimizes the risk of legal complications. By addressing ownership early, pharmaceutical and healthcare companies can protect sensitive data, retain control over their innovations, and build stronger partnerships with collaborators and vendors.