AI Risk Management with NIST in Healthcare IT

Post Summary

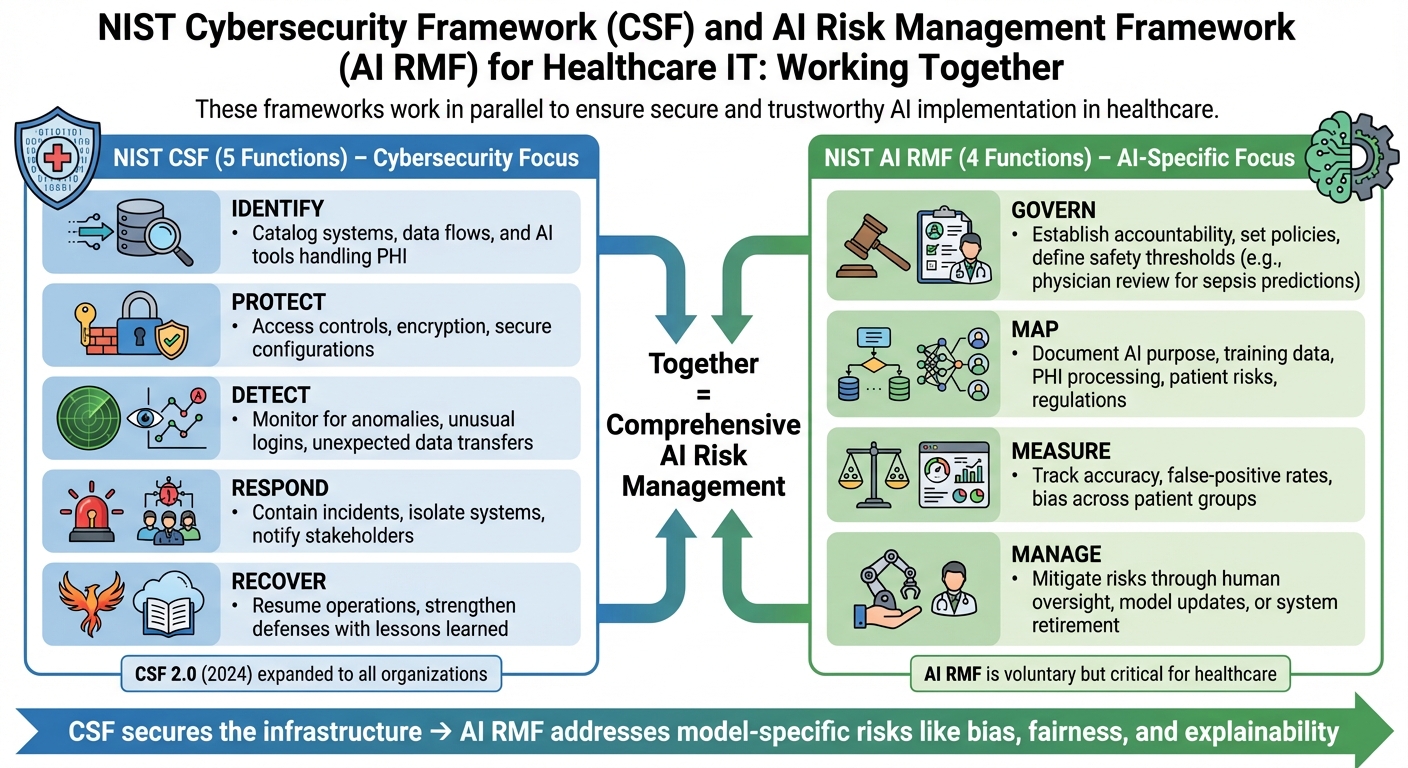

AI is transforming healthcare IT, but it comes with risks like biased diagnoses, unsafe treatments, and patient data breaches. Mismanaged AI can harm patients, erode trust, and lead to violations of HIPAA or FDA requirements. To tackle these challenges, healthcare leaders can use two NIST frameworks:

- NIST Cybersecurity Framework (CSF): Focuses on securing networks, systems, and data. CSF 2.0 organizes this work into six functions: Govern, Identify, Protect, Detect, Respond, and Recover.

- NIST AI Risk Management Framework (AI RMF): Targets AI-specific risks like bias, safety, and accountability through four functions: Govern, Map, Measure, and Manage.

Together, these frameworks help healthcare organizations secure IT systems and address AI risks, ensuring better patient safety and regulatory compliance. Tools like Censinet RiskOps™ simplify vendor risk assessments and monitoring, helping manage third-party AI tools effectively. Continuous monitoring and regular reviews are essential to keep up with evolving AI risks and maintain secure, fair, and reliable healthcare systems.

Understanding NIST CSF and AI RMF for Healthcare IT

[Figure: NIST CSF vs. AI RMF framework functions for healthcare IT]

The Core Functions of NIST CSF

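The NIST Cybersecurity Framework (CSF) organizes cybersecurity risk management into core functions that healthcare organizations can apply to assets such as electronic health records (EHRs), medical devices, and SaaS platforms. CSF 2.0 added a sixth function, Govern, which covers strategy, policy, roles, and oversight and informs how the other five are carried out. The five operational functions work as follows.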

The first function, Identify, focuses on cataloging systems, data flows, and assets. For example, this might involve tracking AI tools that handle protected health information (PHI). Next, Protect emphasizes safeguards like access controls, encryption, and secure configurations to prevent unauthorized access to clinical systems and sensitive patient data.

The Detect function is all about continuous monitoring to spot anomalies or intrusions. Think of it as identifying unusual login patterns in an EHR or unexpected data transfers from a medical device. Respond outlines how to contain and mitigate incidents, such as isolating compromised systems and notifying affected stakeholders. Finally, Recover restores normal operations after an incident so clinical workflows can resume safely, and it feeds lessons learned back into future defenses.

With the release of NIST CSF 2.0 in 2024, the framework expanded its focus beyond critical infrastructure to include all organizations [5]. This broader scope makes it especially applicable to healthcare providers of all sizes. While the CSF secures the networks, systems, and data that AI depends on, it doesn’t fully address the unique risks posed by AI technologies.

The 4 Functions of NIST AI RMF

The NIST AI Risk Management Framework (AI RMF) tackles risks that traditional cybersecurity frameworks might miss, offering four key functions:

- Govern: This function establishes accountability for AI decisions, sets policies on acceptable uses, and defines safety thresholds. Governance might dictate, for instance, whether an AI can finalize medication orders or only offer suggestions, or require physician review of a sepsis prediction before treatment is triggered [4].

- Map: This step documents the context of each AI system: its purpose, training data, where PHI is processed, potential risks to patients, and applicable regulations. Mapping might reveal, for example, that a diagnostic tool must be tested across diverse populations, or that a chatbot's training data does not represent the patients it will serve [4].

- Measure: Performance metrics like accuracy, false-positive rates, and bias are tracked here. This function might evaluate whether an imaging AI performs consistently across all patient groups, or test whether a chatbot is vulnerable to attacks such as prompt injection.

- Manage: This continuous process prioritizes and acts on the risks identified through Map and Measure, for example by adding human oversight, updating models, or retiring systems when risks outweigh benefits.

Although the AI RMF is voluntary and applies across sectors, NIST emphasizes its importance in health and life sciences, where safety, fairness, explainability, and accountability are critical [3].

Using CSF and AI RMF Together in Healthcare IT

For healthcare CIOs and CISOs, the NIST CSF provides the foundational layer of cybersecurity, securing networks, systems, and data. Meanwhile, the AI RMF adds an extra layer, specifically addressing the risks associated with AI system design, deployment, and management [5]. Since the AI RMF is designed to complement existing NIST risk management practices, organizations can adapt their CSF-aligned processes - like asset inventories, risk assessments, and incident response plans - to include AI-specific controls.

There’s some overlap between the two frameworks in areas like governance, risk evaluation, and incident response. But the AI RMF goes further by addressing factors like model robustness, data provenance, explainability, fairness, and broader socio-technical impacts. For instance, while the CSF ensures a sepsis prediction model and its infrastructure are secure, the AI RMF ensures the model is built with representative data, performs equitably across patient groups, and acknowledges its limitations.

How to Apply NIST AI RMF in Healthcare IT

Govern: Set Up AI Risk Governance

Start by forming an AI governance committee that reports directly to your clinical or enterprise risk committee. This group should include key stakeholders like clinical leaders (e.g., CMO, CNO, pharmacy heads), your CIO/CTO, CISO, data science and AI engineering leads, and patient safety and quality leaders. The committee’s main objective is to ensure AI-related decisions are closely tied to patient safety, cybersecurity, and operational risks, rather than treating AI in isolation.

Establish clear decision-making protocols. For instance, high-risk AI systems, those that influence diagnoses, medication dosing, or triage, should require full committee approval, while lower-risk administrative AI can be approved by a smaller group. Develop organization-wide policies that define acceptable AI use, documentation standards, human oversight requirements, bias thresholds, and incident escalation procedures.

Assign accountability by designating specific roles for each AI use case, such as a system owner, model owner, and clinical sponsor, and give these individuals the authority to approve updates or disable systems if issues arise. For high-risk systems, especially those involved in patient care decisions, require "human in the loop" designs (a clinician reviews each output before it is acted on) or "human on the loop" designs (clinicians supervise the system and can intervene or override it).
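To make the approval protocol and role assignments above concrete, here is a minimal Python sketch of how a governance team might encode them. The tier names follow the low/medium/high/critical scale used in this article, but the `APPROVER` table, the `AIUseCase` fields, and the rule that anything touching diagnosis, dosing, or triage always goes to the full committee are illustrative assumptions, not a NIST-prescribed structure.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Tier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# Hypothetical policy table: which body must approve a use case at each tier.
APPROVER = {
    Tier.LOW: "administrative subgroup",
    Tier.MEDIUM: "administrative subgroup",
    Tier.HIGH: "full AI governance committee",
    Tier.CRITICAL: "full AI governance committee",
}


@dataclass
class AIUseCase:
    name: str
    tier: Tier
    influences_care: bool  # True if it affects diagnosis, dosing, or triage
    system_owner: Optional[str] = None
    model_owner: Optional[str] = None
    clinical_sponsor: Optional[str] = None


def required_approver(use_case: AIUseCase) -> str:
    # Anything that influences diagnosis, dosing, or triage goes to the full
    # committee, even if its assigned tier would allow a smaller group.
    if use_case.influences_care:
        return APPROVER[Tier.HIGH]
    return APPROVER[use_case.tier]


def missing_roles(use_case: AIUseCase) -> list:
    # Every use case needs a named system owner, model owner, and clinical sponsor.
    roles = {
        "system_owner": use_case.system_owner,
        "model_owner": use_case.model_owner,
        "clinical_sponsor": use_case.clinical_sponsor,
    }
    return [role for role, person in roles.items() if not person]
```

For example, a sepsis alert registered as `Tier.LOW` but flagged `influences_care=True` would still be routed to the full committee, and `missing_roles` would surface any accountable role that has not yet been filled.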

Incorporate AI risks into your existing NIST CSF governance frameworks, including risk registers, steering committees, and incident response plans. By mapping and quantifying these risks, you can create a foundation for effective monitoring and tailored mitigation strategies.

Map: Identify and Define AI Risks

Conduct risk workshops with clinical, IT, and data science teams to identify where AI is being used across your organization. Common use cases might include clinical decision support, triage algorithms, imaging diagnostics, and patient engagement tools. For each use case, document its purpose, data sources (including PHI categories), and the stakeholders it impacts, such as patients, clinicians, IT staff, and external vendors.

Next, catalog the specific risks associated with each AI system. These may include:

- Patient safety risks: Misdiagnoses, incorrect medication dosing, unsafe triage, or delayed alerts.

- Bias risks: Variations in performance across different demographics, such as race, gender, age, or insurance type.

- Privacy risks: Potential re-identification of data or unauthorized secondary use of PHI.

- Cybersecurity threats: Vulnerabilities like data poisoning, model manipulation, adversarial inputs, or prompt injection.

Assign risk levels - low, medium, high, or critical - based on factors like the system’s reliance on AI outputs, the reversibility of harm, the potential for human review, the volume and sensitivity of PHI processed, and the broader systemic impact. This detailed mapping is essential for aligning NIST AI RMF with enterprise risk management frameworks, ensuring AI risks are managed alongside other organizational risks. Focus mitigation efforts on high-risk, high-impact use cases, such as diagnostic imaging or critical clinical decision-making tools.
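The tiering factors above can be combined into a simple scoring rule. The sketch below is a hypothetical example: the 0 to 3 scale, equal weighting, cut-off values, and the override that treats irreversible, unreviewed harm as critical are all assumptions a committee would need to calibrate, not values from NIST.

```python
from dataclasses import dataclass


@dataclass
class RiskFactors:
    # Each factor is scored 0 (minimal) to 3 (severe); the scale is illustrative.
    reliance_on_output: int    # 3 = output is acted on directly
    irreversibility: int       # 3 = resulting harm cannot be undone
    lack_of_human_review: int  # 3 = no clinician reviews the output
    phi_exposure: int          # volume and sensitivity of PHI processed
    systemic_impact: int       # 3 = a failure affects many patients or systems


def risk_tier(f: RiskFactors) -> str:
    scores = [f.reliance_on_output, f.irreversibility, f.lack_of_human_review,
              f.phi_exposure, f.systemic_impact]
    if any(not 0 <= s <= 3 for s in scores):
        raise ValueError("each factor must be scored 0-3")
    # Irreversible harm with no human review is treated as critical outright.
    if f.irreversibility == 3 and f.lack_of_human_review == 3:
        return "critical"
    total = sum(scores)  # ranges from 0 to 15
    if total >= 11:
        return "critical"
    if total >= 8:
        return "high"
    if total >= 4:
        return "medium"
    return "low"
```

Keeping the override separate from the summed score means a single severe combination cannot be averaged away by low scores on the other factors.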

Measure: Track and Evaluate AI Risks

Define both quantitative and qualitative metrics to evaluate clinical and operational performance. Clinical examples include accuracy and sensitivity, broken down by subgroups such as race, age, comorbidities, and care settings. To monitor bias, compare error rates, treatment recommendations, disparate impact ratios, and equal opportunity differences across demographic groups. Review these metrics quarterly and whenever a model is updated.
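The two bias metrics named above have standard definitions: the disparate impact ratio divides a group's positive-prediction rate by a reference group's, and the equal opportunity difference subtracts the reference group's true-positive rate (sensitivity) from the group's. The following minimal sketch computes both from binary labels and predictions; the function names and the choice of reference group are illustrative assumptions.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate and true-positive rate for binary labels (0/1)."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["pred_pos"] += pred
        c["pos"] += truth
        c["tp"] += int(truth == 1 and pred == 1)
    rates = {}
    for group, c in counts.items():
        selection_rate = c["pred_pos"] / c["n"]
        # TPR is undefined for a group with no positive cases in the sample.
        tpr = c["tp"] / c["pos"] if c["pos"] else float("nan")
        rates[group] = (selection_rate, tpr)
    return rates


def fairness_report(y_true, y_pred, groups, reference):
    """Compare every group against a chosen reference group."""
    rates = group_rates(y_true, y_pred, groups)
    ref_selection, ref_tpr = rates[reference]
    report = {}
    for group, (selection, tpr) in rates.items():
        if group == reference:
            continue
        report[group] = {
            # Ratio of positive-prediction rates; 1.0 means parity.
            "disparate_impact": selection / ref_selection if ref_selection else float("nan"),
            # Difference in true-positive rates; 0.0 means parity.
            "equal_opportunity_diff": tpr - ref_tpr,
        }
    return report
```

A disparate impact ratio below 0.8 is a commonly used screening flag (the "four-fifths" heuristic from US employment practice), but clinically appropriate thresholds should be set by the governance committee. Small subgroups produce noisy rates, so sample sizes should be reported alongside these metrics.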

Operational metrics are equally important. Keep an eye on alert fatigue (e.g., alerts per patient per day), override rates, and clinician acceptance. For security and robustness, monitor for anomalous input patterns, adversarial examples, data poisoning attempts, and model drift, which can manifest as systematic changes in predictions or sudden performance drops. Align this monitoring with NIST CSF’s "Detect" and "Respond" functions, ensuring any AI-related anomalies trigger established incident response workflows.
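The operational metrics above are simple ratios, and drift in a model's output distribution can be tracked with a statistic such as the population stability index (PSI), which compares binned score distributions from a baseline period and a current period. PSI is one common drift measure among several, not one the article prescribes; the sketch assumes scores in [0, 1] and ten equal-width bins.

```python
import math


def alerts_per_patient_day(alert_count: int, patient_days: float) -> float:
    return alert_count / patient_days


def override_rate(overridden: int, total_alerts: int) -> float:
    return overridden / total_alerts if total_alerts else 0.0


def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """PSI between two samples of model scores in [0, 1]; higher means more drift."""
    def proportions(scores):
        counts = [0] * bins
        for s in scores:
            if not 0.0 <= s <= 1.0:
                raise ValueError(f"score out of range: {s}")
            counts[min(int(s * bins), bins - 1)] += 1  # a score of 1.0 goes in the top bin
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(scores), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))
```

A frequently cited rule of thumb, originating in credit-risk modeling rather than clinical practice, treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift worth investigating.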

Use model monitoring platforms that apply statistical process control to performance metrics, alongside security tools that detect adversarial traffic, prompt injection attempts, or abnormal access behavior. Document all findings in model cards or fact sheets that detail the system’s intended use, limitations, datasets, performance metrics, and known risks. With these metrics in place, you can focus on implementing operational controls to address identified risks.
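Statistical process control can be as simple as a Shewhart-style control chart: establish limits from a stable baseline period and flag any later observation that falls outside them. The sketch below is a simplified version that uses the sample standard deviation and a 3-sigma band; production individuals charts usually estimate sigma from the average moving range and add run rules, and the metric being charted (for example, weekly sensitivity) is an assumption.

```python
import statistics


def control_limits(baseline, k=3.0):
    """Lower and upper control limits: baseline mean plus or minus k standard deviations."""
    mean = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)  # sample standard deviation; needs at least 2 points
    return mean - k * sigma, mean + k * sigma


def out_of_control_points(baseline, observations, k=3.0):
    """Indices of observations that fall outside the control limits."""
    lower, upper = control_limits(baseline, k)
    return [i for i, x in enumerate(observations) if x < lower or x > upper]
```

With a baseline of weekly sensitivity values hovering around 0.90, a week that drops to 0.80 falls outside the limits and could open an incident through the CSF "Respond" workflow.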

Manage: Reduce and Monitor AI Risks

Apply controls tailored to the lifecycle stage of each AI system. During the design phase, enforce strict data governance, quality checks, and provenance tracking to minimize risks like data poisoning or hidden biases. Conduct threat modeling to identify potential adversarial attacks, misuse scenarios, and failure points. Incorporate human oversight mechanisms, such as requiring clinician review for high-severity alerts, and include explainability features to highlight key factors influencing predictions.
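Provenance tracking often starts with content fingerprints: hash each training file when the dataset is approved, record where it came from, and re-verify the hashes before every retraining run so that silent modification is detectable. This minimal sketch uses SHA-256 from Python's standard library; the manifest layout and function names are illustrative assumptions, and hashing detects later changes but cannot tell you whether the original data was already poisoned.

```python
import hashlib
from pathlib import Path


def sha256_file(path, chunk_size=1 << 20):
    """Hex SHA-256 digest of a file, read in chunks so large datasets fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(paths, source):
    """Record where a training dataset came from and a fingerprint of each file."""
    return {"source": source, "files": {str(p): sha256_file(p) for p in paths}}


def changed_files(manifest):
    """Files that are missing or whose contents no longer match the manifest."""
    return [
        path
        for path, expected in manifest["files"].items()
        if not Path(path).exists() or sha256_file(path) != expected
    ]
```

Storing the manifest separately from the data, with restricted write access, keeps an attacker who can alter the dataset from simply regenerating the hashes.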

Before deployment, validate the system using local clinical data. This should include subgroup analyses and simulations of adverse scenarios. Perform security assessments, including penetration testing and red-teaming exercises, to identify vulnerabilities like adversarial examples or prompt injection attempts. Ensure the system integrates safely with EHRs and medical devices, with fail-safe mechanisms in place for unexpected behavior or downtime.
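A fail-safe integration can be sketched as a wrapper that returns no AI result, so the EHR falls back to its standard workflow, whenever the model raises an error or produces an output outside its valid range. The sketch below is hypothetical: it assumes a model callable that returns a risk score in [0, 1], and it does not handle timeouts, which would need separate enforcement in the calling service.

```python
import logging
from typing import Callable, Optional

logger = logging.getLogger("ai_failsafe")


def safe_score(model: Callable[[dict], float], features: dict) -> Optional[float]:
    """Return a risk score in [0, 1], or None so the caller uses the non-AI workflow."""
    try:
        score = model(features)
    except Exception:
        # Any model failure is logged and treated as "no AI result".
        logger.exception("model call failed; falling back to standard workflow")
        return None
    # Reject non-numeric, out-of-range, and NaN outputs (NaN fails both comparisons).
    if not isinstance(score, (int, float)) or not 0.0 <= score <= 1.0:
        logger.warning("invalid score %r; falling back to standard workflow", score)
        return None
    return float(score)
```

Returning `None` rather than a default score makes the fallback explicit, so downstream code cannot mistake a failure for a low-risk prediction.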

After deployment, maintain continuous monitoring using the defined metrics and tools. Set clear thresholds for performance, fairness, and security, and establish escalation paths for when these thresholds are breached. Schedule regular reviews and re-certifications - at least annually for high-risk systems - to reassess performance, fairness, and security. If risks begin to outweigh benefits, take corrective actions such as updating models, increasing human oversight, or retiring the system altogether. These steps are critical for maintaining patient safety and cybersecurity in healthcare IT.
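Thresholds and escalation paths can be written down as data so that a monitoring job can check them automatically. In this minimal sketch, the `Threshold` fields, the escalation targets, and every recertification interval except the annual one for high-risk systems are assumptions; a missing measurement is deliberately treated as a breach.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Threshold:
    metric: str
    minimum: Optional[float] = None
    maximum: Optional[float] = None
    escalate_to: str = "system owner"


def breaches(metrics: dict, thresholds: list) -> list:
    """(metric, escalation target) for every threshold that is breached or unmeasured."""
    found = []
    for t in thresholds:
        value = metrics.get(t.metric)
        too_low = value is not None and t.minimum is not None and value < t.minimum
        too_high = value is not None and t.maximum is not None and value > t.maximum
        # A missing measurement is escalated too: silent monitoring gaps are a risk.
        if value is None or too_low or too_high:
            found.append((t.metric, t.escalate_to))
    return found


# Hypothetical recertification intervals in days; only "high" (annual) comes from the text.
RECERT_DAYS = {"critical": 182, "high": 365, "medium": 365, "low": 730}


def recertification_due(last_review: date, tier: str) -> date:
    return last_review + timedelta(days=RECERT_DAYS[tier])
```

For instance, a sensitivity of 0.82 against a floor of 0.85 would be routed to the clinical sponsor, and a high-risk system last reviewed on 1 January 2025 would be due again on 1 January 2026.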

To streamline this process, platforms like Censinet RiskOps™ can help integrate AI risk management into broader enterprise and cybersecurity strategies, ensuring a cohesive approach to managing risks across the organization.


Managing Third-Party AI Risks in Healthcare IT

Common Third-Party AI Risks in Healthcare

Third-party AI vendors bring a distinct set of challenges to healthcare. Recent studies and safety alerts highlight the growing risks tied to these vendors, and the ECRI Institute even named AI the top health technology hazard for 2025 [2].

One major issue is the lack of transparency. When vendors treat their AI models as "black boxes" - hiding details about training data, model architecture, or update schedules - healthcare IT teams are left in the dark. This makes it nearly impossible to assess potential biases or verify how robust the systems are. Without this insight, it's harder to map risks or monitor AI systems effectively over time.

Security vulnerabilities add another layer of complexity. For example, data poisoning attacks can corrupt training datasets at the vendor level, leading to models that fail under specific conditions. Research suggests that tampering with as little as 0.001% of a model's training data can introduce harmful errors, a serious concern for systems used in diagnostics or medication dosing [2]. In addition, prompt injection attacks against large language model (LLM)-based tools, such as medical chatbots or documentation assistants, can expose protected health information or produce inaccurate clinical notes.

Ambiguities in vendor-provider responsibilities further complicate matters. When AI systems cause adverse events or breaches, unclear contract terms can delay incident responses. Questions about who monitors for issues like model drift, adversarial attacks, or data leaks often remain unanswered. Many contracts lack specifics on when vendors must inform providers of significant changes to their systems. To address this, NIST-aligned governance emphasizes the need for clearly defined roles, accountability measures, and escalation protocols between internal teams and third-party vendors. A structured approach is essential to tackle these risks effectively.

Using Platforms for Third-Party Risk Assessment

To manage the growing risks associated with AI vendors, healthcare organizations need structured, NIST-aligned processes. The first step is creating a detailed inventory of all third-party AI tools, including their functions, levels of autonomy, and how they handle data. During pre-procurement, standardized questionnaires based on NIST guidelines can help evaluate vendor security practices, handling of protected health information (PHI), sources of training data, and bias testing procedures. This inventory process works alongside existing NIST Cybersecurity Framework (CSF) practices, ensuring AI vendor risks are tracked alongside internal ones.
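An AI vendor inventory entry and a pre-procurement questionnaire check might look like the sketch below. The questions in `REQUIRED_ANSWERS` are hypothetical paraphrases of the topics listed above (PHI handling, training data sources, bias testing, update and incident notification), not an official NIST questionnaire, and any unanswered question is treated as an open finding.

```python
from dataclasses import dataclass, field

# Hypothetical pre-procurement questions; not an official NIST questionnaire.
REQUIRED_ANSWERS = (
    "encrypts_phi_in_transit_and_at_rest",
    "discloses_training_data_sources",
    "performs_subgroup_bias_testing",
    "notifies_customer_of_model_updates",
    "defines_incident_notification_timeline",
)
PHI_ONLY = {"encrypts_phi_in_transit_and_at_rest"}


@dataclass
class AIVendorTool:
    vendor: str
    tool: str
    function: str          # e.g. "ambient documentation"
    autonomy: str          # e.g. "suggests" or "acts without review"
    handles_phi: bool
    answers: dict = field(default_factory=dict)  # question -> True/False


def open_findings(tool: AIVendorTool) -> list:
    """Questions answered 'no' or left unanswered, to track until mitigated."""
    findings = []
    for question in REQUIRED_ANSWERS:
        if question in PHI_ONLY and not tool.handles_phi:
            continue  # PHI controls do not apply if the tool never touches PHI
        if not tool.answers.get(question, False):
            findings.append(question)
    return findings
```

Treating silence as a "no" keeps an incomplete questionnaire from passing review by default.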

Platforms like Censinet RiskOps™ streamline third-party risk assessments for AI-enabled tools, clinical applications, and connected medical devices. These platforms allow healthcare organizations to systematically review vendor practices related to security, privacy, and AI governance. They also enable collaboration by letting providers and vendors document risks, assign responsibilities, track mitigation efforts, and maintain audit trails that demonstrate alignment with NIST frameworks.

"Censinet RiskOps allowed 3 FTEs to go back to their real jobs! Now we do a lot more risk assessments with only 2 FTEs required." - Terry Grogan, CISO, Tower Health [1]

Automated platforms take these assessments a step further by providing continuous oversight. Automation shortens assessment cycles and expands the number of vendors that can be monitored. Dashboards offer real-time updates on vendor risk postures, covering areas like PHI protection, clinical workflows, and supply chain vulnerabilities. These tools can also help confirm that contracts and business associate agreements include clear, AI-specific requirements. Once vendors are operational, periodic reassessments and updates to security documentation help detect issues like performance drift or unexpected behavior. This ongoing monitoring supports the "Detect" function of the NIST CSF as well as the "Measure" and "Manage" functions of the AI RMF.

Conclusion

Main Points for AI Risk Management in Healthcare

Managing AI risks in healthcare requires a dual approach: securing core IT assets with the NIST Cybersecurity Framework (CSF) and addressing AI-specific risks - like bias, explainability, robustness, and safety - through the NIST AI Risk Management Framework (AI RMF). Together, these frameworks create a robust program that aligns with critical regulations, including HIPAA, HITECH, and FDA cybersecurity mandates. Extending these efforts to include third-party AI vendors is equally important. Tools like Censinet RiskOps™ enable healthcare organizations to systematically evaluate and monitor third-party AI tools, clinical applications, and medical devices, while also maintaining detailed audit trails for compliance purposes.

All of these measures rely on ongoing monitoring to address new risks as they emerge.

Why Continuous Monitoring Matters

AI risk management isn’t a one-time effort - it requires constant vigilance. As models evolve, new threats emerge, and regulations shift, continuous monitoring becomes the backbone of a secure and effective AI ecosystem. Real-time dashboards should track critical metrics such as diagnostic accuracy, override rates, and error patterns across diverse demographic groups. On the security side, monitoring must detect unusual access behaviors, configuration changes, and potential adversarial attacks targeting AI systems. Regular bias audits ensure that AI tools perform equitably across all populations.

Feedback loops are key to driving improvements. For example, clinicians can report questionable AI outputs or instances of alert fatigue, which can then be addressed. Post-implementation reviews - conducted at 30, 90, and 180 days - help compare expected outcomes with actual results. Cross-functional teams should routinely analyze monitoring data, evaluate new threats, and adapt governance policies as necessary. Reassessing third-party vendors ensures that external AI solutions maintain strong controls for both protected health information (PHI) and AI-specific risks.
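The 30/90/180-day post-implementation reviews above can be scheduled and scored with a few lines of code. This sketch assumes metrics where higher is better and an absolute tolerance of 0.05; both the tolerance and the function names are illustrative.

```python
from datetime import date, timedelta


def review_schedule(go_live: date, offsets=(30, 90, 180)) -> list:
    """Dates of the post-implementation reviews, counted from go-live."""
    return [go_live + timedelta(days=d) for d in offsets]


def outcome_gaps(expected: dict, actual: dict, tolerance: float = 0.05) -> dict:
    """Metrics that are unmeasured or fall short of target by more than `tolerance`.

    Assumes higher values are better for every metric.
    """
    gaps = {}
    for metric, target in expected.items():
        observed = actual.get(metric)
        if observed is None or target - observed > tolerance:
            gaps[metric] = observed
    return gaps
```

A go-live on 1 March 2025 would put the reviews on 31 March, 30 May, and 28 August 2025; any metric returned by `outcome_gaps` becomes an agenda item for the cross-functional team.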

Because NIST treats both CSF 2.0 and the AI RMF as evolving frameworks, healthcare organizations should review their AI systems, policies, and controls at least once a year, or sooner if significant regulatory changes occur. By aligning continuous monitoring efforts with NIST guidance, organizations can better protect patient safety and strengthen resilience as AI continues to advance in healthcare IT.

FAQs

How can the NIST Cybersecurity Framework and AI Risk Management Framework be used together to address AI risks in healthcare IT?

The NIST Cybersecurity Framework (CSF) serves as a solid guide for handling cybersecurity risks, while the AI Risk Management Framework (AI RMF) focuses on pinpointing and addressing risks unique to artificial intelligence. When healthcare organizations bring these two frameworks together, they can effectively weave AI risk management into their overall cybersecurity plans.

This combined strategy helps shield sensitive patient information, maintain the reliability of clinical systems, and strengthen operational resilience. By working in tandem, the NIST CSF and AI RMF provide tools for identifying and managing risks proactively, ensuring compliance and protecting vital healthcare IT systems.

What are the key AI-related risks that the NIST AI RMF helps address in healthcare IT?

The NIST AI RMF provides healthcare organizations with tools to tackle key AI-related challenges, such as algorithmic bias, privacy and security of patient data, and ensuring models are dependable and robust. It also emphasizes enhancing transparency and explainability, making AI systems easier to understand and more trustworthy for users.

By addressing these concerns, the framework promotes the safer and more dependable use of AI in healthcare, safeguarding patient well-being, preserving trust, and ensuring adherence to data protection regulations.

Why is continuous monitoring important for managing AI risks in healthcare IT?

Continuous monitoring plays a key role in managing AI risks within healthcare IT. It enables organizations to promptly spot and address vulnerabilities as they emerge. This kind of vigilance helps keep AI systems secure, compliant, and effective at safeguarding sensitive patient information while ensuring the overall integrity of the system.

By catching potential problems early, continuous monitoring reduces the likelihood of threats impacting patient safety or disrupting healthcare operations. It also strengthens ongoing risk management efforts by aligning with established frameworks like the NIST Cybersecurity Framework, ensuring defenses stay strong against ever-changing risks.