From Guardian to Gatecrasher: When AI Risk Management Tools Turn Against You

Post Summary

AI risk management tools can turn against you through black‑box decisions, data leaks, adversarial attacks, and poorly governed AI integrations.

Shadow AI means unapproved or unmonitored AI tools used inside healthcare systems, which create major privacy and security gaps.

Common attack methods include data poisoning, prompt injection, model manipulation, deepfakes, and attacks on AI‑controlled medical devices.

AI vulnerability scanners, if misconfigured, can expose detailed system weaknesses to attackers.

These tools fail mainly because of a lack of transparency in AI decisions and over‑reliance on automation without human review.

The risks can be reduced by blending automated assessments with human oversight, centralized governance, and continuous monitoring.

AI tools in healthcare promise enhanced cybersecurity but often create risks when poorly managed.

AI can be a double-edged sword in healthcare. Without proper safeguards, the very tools meant to protect patient data can become liabilities.

How AI Tools Create New Security Vulnerabilities

AI-powered risk management tools promise to strengthen healthcare cybersecurity, but they also introduce new vulnerabilities that cybercriminals can exploit. These weaknesses stem from the way AI systems are designed, from operational blind spots, and from the ever-evolving tactics of attackers.

Black Box AI and Overlooked Security Breaches

AI models, particularly those based on large language models (LLMs) and deep learning, often function as "black boxes." This means their inner workings are so complex that even experts struggle to understand how they make decisions, process data, or generate outputs [1]. While these models are designed to detect threats, their opacity can actually conceal malicious activity.

For instance, security teams may find it nearly impossible to trace the reasoning behind an AI system's decisions. This lack of transparency becomes a major problem when attackers manipulate inputs. Even a tiny alteration - like a 0.001% change to a medical image - can lead to catastrophic errors, such as misdiagnoses or incorrect treatments. Without clear insight into the AI’s logic, these breaches can go unnoticed.
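To make the mechanism concrete, here is a minimal sketch of an adversarial perturbation against a toy linear classifier. Everything in it is an assumption made for illustration: the four "pixel" values, the weights, the bias, and the function names are hypothetical, and the nudge used here is far larger than the 0.001% figure above. Real attacks target deep models with many more inputs, where the per-pixel change can be much smaller.

```python
# Toy illustration only: a hypothetical linear "classifier" over four pixel values.
WEIGHTS = [0.9, -0.4, 0.7, -0.2]
BIAS = -0.5


def score(pixels):
    """Weighted sum of the pixels plus a bias term."""
    return sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS


def classify(pixels):
    """Label the input 'abnormal' when the score is positive."""
    return "abnormal" if score(pixels) > 0 else "normal"


def perturb(pixels, epsilon):
    """Shift every pixel by epsilon in the direction that raises the score."""
    return [p + epsilon * (1 if w > 0 else -1) for w, p in zip(WEIGHTS, pixels)]


original = [0.50, 0.40, 0.30, 0.45]
tampered = perturb(original, epsilon=0.05)

print(classify(original), "->", classify(tampered))
```

Each pixel moves by at most 0.05, yet the label flips. Because the model exposes only its final label, a reviewer looking at the two outputs has no direct way to see that the input was nudged.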

Shadow AI and Risks to Patient Data

Another significant issue comes from "shadow AI" - unmonitored tools that find their way into healthcare systems without proper oversight. These tools, particularly those developed by smaller or less-experienced companies, often lack the robust security features needed to fend off cyber threats [4].

The problem worsens when medical staff use AI applications without involving their IT departments. This creates hidden vulnerabilities that cybercriminals can exploit. The risks grow even larger as AI tools gain autonomy and start integrating with external resources like web browsers. These new capabilities open up attack surfaces that traditional security systems weren’t designed to handle [4].

Adding to the complexity, privacy laws like HIPAA and GDPR, while critical for safeguarding patient data, can inadvertently make it harder to detect certain threats. For example, these regulations may limit the ability to analyze data patterns, which is crucial for spotting data poisoning attacks.

How Cybercriminals Exploit AI Systems in Healthcare

Cybercriminals have become adept at turning AI tools into liabilities. They employ techniques like adversarial attacks, data poisoning, and prompt injection to manipulate AI systems. The consequences can be severe: diagnostic errors, altered treatment plans, and stolen patient information.

Medical devices controlled by AI are particularly at risk. Attackers can tamper with their operations, modify dosages, or even launch ransomware and denial-of-service attacks. Beyond this, cybercriminals use AI to automate reconnaissance and craft highly personalized social engineering attacks. These tactics often mimic legitimate communications so effectively that even AI-based monitoring systems struggle to flag them.

Case Studies: AI Security Failures in Healthcare

These examples highlight how poorly managed AI tools, initially designed to protect healthcare systems, can become liabilities, exposing vulnerabilities and creating systemic risks.

Generative AI Tools Leaking Patient Data

In 2025, a contractor working for a New South Wales government department uploaded a spreadsheet containing sensitive flood victim information into ChatGPT. This single action led to a major privacy breach, spotlighting how easily confidential data can be mishandled when organizations fail to enforce proper oversight for external AI systems [5].

Similar breaches occurred between 2019 and 2020 during Google's partnerships to train AI algorithms. Inadequate data sanitization - such as failing to properly redact X-ray images or remove patient identifiers - resulted in unauthorized access to protected health information. In 2020, another alarming incident revealed that over 1 billion medical images stored across hospitals, medical offices, and imaging centers were accessible to unauthorized users due to insecure storage systems [6].

"The primary risk from artificial intelligence stems not from the technology itself, but from inadequate governance and quality assurance processes around AI-assisted work."

These breaches underline how lapses in governance and oversight can turn AI tools into vulnerabilities ripe for exploitation.

Adversarial Attacks on Medical AI Systems

Data poisoning poses a serious threat to healthcare AI. In these attacks, malicious actors manipulate datasets, potentially corrupting future diagnoses and causing algorithms to produce harmful outputs across hospital networks.

A study by Cybernews examined 44 major healthcare and pharmaceutical organizations using AI and uncovered 149 potential security flaws. Among these, 19 posed direct threats to patient safety, while 28 involved insecure AI outputs and 24 highlighted data leak vulnerabilities. These findings paint a concerning picture of the risks embedded within healthcare systems [3].

"The biggest AI threat in healthcare isn't a dramatic cyberattack, but rather the quiet, hidden failures that can grow rapidly."

These adversarial tactics show how AI tools intended to secure systems can inadvertently aid attackers when safeguards are insufficient.

When AI Vulnerability Scans Help Attackers

AI-powered vulnerability scanners, designed to identify weak points in security, can also backfire. If these tools lack strict access controls or produce reports accessible to unauthorized users, they can unintentionally provide attackers with detailed maps of system weaknesses. This information can accelerate cyberattacks, enabling adversaries to exploit unpatched vulnerabilities and navigate interconnected medical devices and IT infrastructures. Without strong oversight, these tools risk becoming assets for attackers rather than defenders.
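One way to limit that exposure is to release host-level detail only to roles that need it. The sketch below is a simplified assumption of how such a filter could look. The role names, the `Finding` fields, and the sample findings are all hypothetical and do not describe any particular scanner.

```python
from dataclasses import dataclass, asdict
from typing import Dict, List

# Hypothetical roles allowed to see host-level detail.
FULL_ACCESS_ROLES = {"security_engineer", "ciso"}


@dataclass(frozen=True)
class Finding:
    host: str
    issue: str
    severity: str


def report_view(role: str, findings: List[Finding]) -> List[Dict[str, object]]:
    """Return full findings for privileged roles, severity counts for everyone else."""
    if role in FULL_ACCESS_ROLES:
        return [asdict(f) for f in findings]
    counts: Dict[str, int] = {}
    for f in findings:
        counts[f.severity] = counts.get(f.severity, 0) + 1
    return [{"severity": s, "count": n} for s, n in sorted(counts.items())]


# Made-up findings for illustration.
FINDINGS = [
    Finding("infusion-pump-07", "unpatched firmware", "critical"),
    Finding("pacs-server-02", "default credentials", "critical"),
    Finding("nurse-station-14", "outdated browser", "medium"),
]

print(report_view("help_desk", FINDINGS))
```

A non-privileged viewer learns that two critical issues exist but not which hosts carry them, so a leaked summary is far less useful as an attack map.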

Why AI Risk Management Tools Fail

AI risk management tools often stumble due to systemic flaws that can turn protective measures into potential vulnerabilities. Two major challenges are the lack of transparency in how AI systems make decisions and an over-dependence on automation without adequate human involvement. Let’s break down how these issues undermine the effectiveness of AI in managing risks.

Lack of Transparency in AI Decision-Making

Many AI systems, especially those built on large language models or deep learning algorithms, function as "black boxes." Their decision-making processes are hidden, making it difficult to trace how they arrive at specific conclusions. That makes the systems hard to hold accountable and weakens their ability to mitigate risks. In healthcare, this opacity undermines cybersecurity because security teams cannot verify why a tool flagged, or failed to flag, a threat. What’s more, the performance of these AI systems can change over time, rendering earlier risk assessments obsolete [1].

Over-Reliance on Automation Without Human Review

In healthcare, organizations often lean heavily on AI automation to handle security tasks at scale. While this approach can improve efficiency, it also creates vulnerabilities. AI systems optimized for speed and scale are not immune to threats like adversarial attacks, data manipulation, or prompt injection [1]. These automated tools can also produce errors, such as "hallucinations," where incorrect information is presented as fact, or fail to detect critical threats because they lack the nuanced understanding that humans bring to the table. Without human oversight to validate AI outputs, these gaps remain open to exploitation.

How Censinet Addresses AI Security Challenges

Censinet tackles AI security vulnerabilities by embedding vital safeguards into its platform. Instead of fully relying on automation, the company prioritizes a mix of automation and human oversight to address challenges like the black box problem and the dangers of over-dependence on automated systems.

Censinet RiskOps™: Managing Cyber Risks Effectively

The Censinet RiskOps™ platform serves as a centralized hub for managing cybersecurity risks tied to AI. It streamlines risk assessments with automated workflows while ensuring transparency in decision-making. Real-time dashboards provide a clear view of aggregated data from AI systems, allowing security teams to pinpoint vulnerabilities before they can be exploited.

Censinet AI™: Blending Automation with Human Judgment

Censinet AI™ speeds up third-party risk evaluations by automating vendor assessments and summarizing critical evidence. However, human judgment remains integral at crucial decision-making stages. Configurable rules and mandatory review processes ensure that risk teams validate AI-generated outputs - like evidence summaries, policy drafts, or risk mitigation strategies - before they are acted upon. This approach balances efficiency with the need for accuracy and patient safety.
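The general pattern of a mandatory review gate can be sketched in a few lines. This is a generic illustration under stated assumptions, not a description of how the Censinet platform is implemented. The rule set, the `AIOutput` type, and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical configurable rule: output types that always need human sign-off.
REVIEW_REQUIRED = {"evidence_summary", "policy_draft", "risk_mitigation"}


@dataclass
class AIOutput:
    kind: str
    text: str
    approved_by: Optional[str] = None


def approve(output: AIOutput, reviewer: str) -> AIOutput:
    """Record which human reviewer validated the output."""
    output.approved_by = reviewer
    return output


def release(output: AIOutput) -> str:
    """Hand the text downstream only if any required review has happened."""
    if output.kind in REVIEW_REQUIRED and output.approved_by is None:
        raise PermissionError(f"{output.kind} needs human review before release")
    return output.text


draft = AIOutput(kind="policy_draft", text="Vendors must rotate API keys quarterly.")
try:
    release(draft)
    blocked = False
except PermissionError:
    blocked = True  # the unreviewed draft is stopped at the gate

released_text = release(approve(draft, reviewer="risk.analyst"))
```

Failing closed, by raising an error rather than logging a warning, keeps an unreviewed output from slipping through when nobody is watching the logs.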

Streamlined AI Governance Across Teams

Uncoordinated AI oversight is a common challenge for healthcare organizations, especially when managing interconnected systems [1]. Censinet addresses this by directing key findings and AI-related tasks to the appropriate stakeholders, such as members of an AI governance committee. This ensures that critical issues are handled by the right teams at the right time. Coordinated governance is particularly vital for organizations dealing with legacy systems running outdated software that cannot be updated without regulatory recertification [7]. By fostering a unified approach, Censinet helps Governance, Risk, and Compliance teams maintain consistent oversight and accountability across the board [8].


Practical Strategies for Safer AI Risk Management

For healthcare IT professionals, managing AI risks requires a proactive and ongoing approach. Addressing AI-specific vulnerabilities isn't a one-time effort - it’s an evolving process that ensures AI systems remain a reliable asset.

Conducting Thorough AI Security Assessments

To tackle vulnerabilities like adversarial attacks, data poisoning, and model drift, healthcare organizations need to prioritize robust security assessments. These assessments should include specialized validation techniques such as robustness tests, adversarial testing, and boundary checks [7][8]. Before deploying any AI tools, healthcare teams must use comprehensive validation frameworks to test models for both unintentional errors and potential manipulation. This helps identify weaknesses before bad actors can exploit them [7].

Regular retraining of AI models with verified, clean data is another essential step to counteract data poisoning threats [8]. Collaboration is key - cybersecurity teams and data scientists should work together to address technical vulnerabilities while also considering clinical safety concerns [8].
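Retraining on "verified, clean data" implies some way to confirm that the data has not changed since it was verified. A minimal sketch, assuming the approved files are recorded in a SHA-256 manifest at sign-off, is shown below. The file names and contents are made up, and the files are held in memory so the example runs as written. Note the limit of this technique: a hash check detects changes made after approval, not poisoned records that were already present when the data was approved.

```python
import hashlib
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_manifest(files: Dict[str, bytes]) -> Dict[str, str]:
    """Map each file name to the SHA-256 digest of its content."""
    return {name: sha256_hex(content) for name, content in files.items()}


def changed_files(files: Dict[str, bytes], manifest: Dict[str, str]) -> List[str]:
    """Names that were added, removed, or modified relative to the manifest."""
    current = build_manifest(files)
    names = set(current) | set(manifest)
    return sorted(n for n in names if current.get(n) != manifest.get(n))


# Hypothetical training files recorded when the data was reviewed.
approved = {
    "scans.csv": b"id,label\n1,benign\n2,malignant\n",
    "notes.csv": b"id,note\n1,ok\n",
}
manifest = build_manifest(approved)

# Later, one label is silently flipped before retraining.
tampered = dict(approved)
tampered["scans.csv"] = b"id,label\n1,benign\n2,benign\n"

print(changed_files(tampered, manifest))
```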

Continuous AI Monitoring with Advanced Tools

Security assessments are just the beginning. Continuous monitoring plays a crucial role in detecting threats early. AI-powered threat detection systems can significantly reduce the time it takes to identify incidents - by as much as 98 days [7]. However, for this to work effectively, monitoring must happen in real time.

Healthcare organizations should deploy Network Intrusion Detection Systems (NIDS) at vendor integration points to track data flows and vendor-related incidents [7]. Monitoring for model drift - where AI models degrade over time - requires advanced tools, as traditional security measures often miss this type of issue [7]. Platforms like Censinet RiskOps™ offer centralized, real-time monitoring, helping security teams spot unusual behavior in AI-enabled systems and devices before patient data is at risk [9].
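Drift monitoring can start with a simple statistical comparison between the data a model was validated on and the data it sees in production. The sketch below computes the Population Stability Index (PSI), one common drift metric; it is offered as an illustration, not as the method any product named here uses. The bin count, the smoothing constant, and the sample data are assumptions, and the often-quoted reading of PSI above 0.25 as significant drift is a rule of thumb, not a standard.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]        # scores seen at validation time
recent = [0.5 + i / 200 for i in range(100)]    # scores now cluster in the upper half

print(round(psi(baseline, baseline), 3), psi(baseline, recent) > 0.25)
```

In practice the same check would run on a schedule against model inputs or output scores, with an alert raised when the index crosses whatever threshold the organization has chosen.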

Additionally, establishing security information-sharing agreements with vendors ensures faster threat intelligence exchange. This collaborative approach enables healthcare organizations to respond swiftly when potential compromises arise [7].

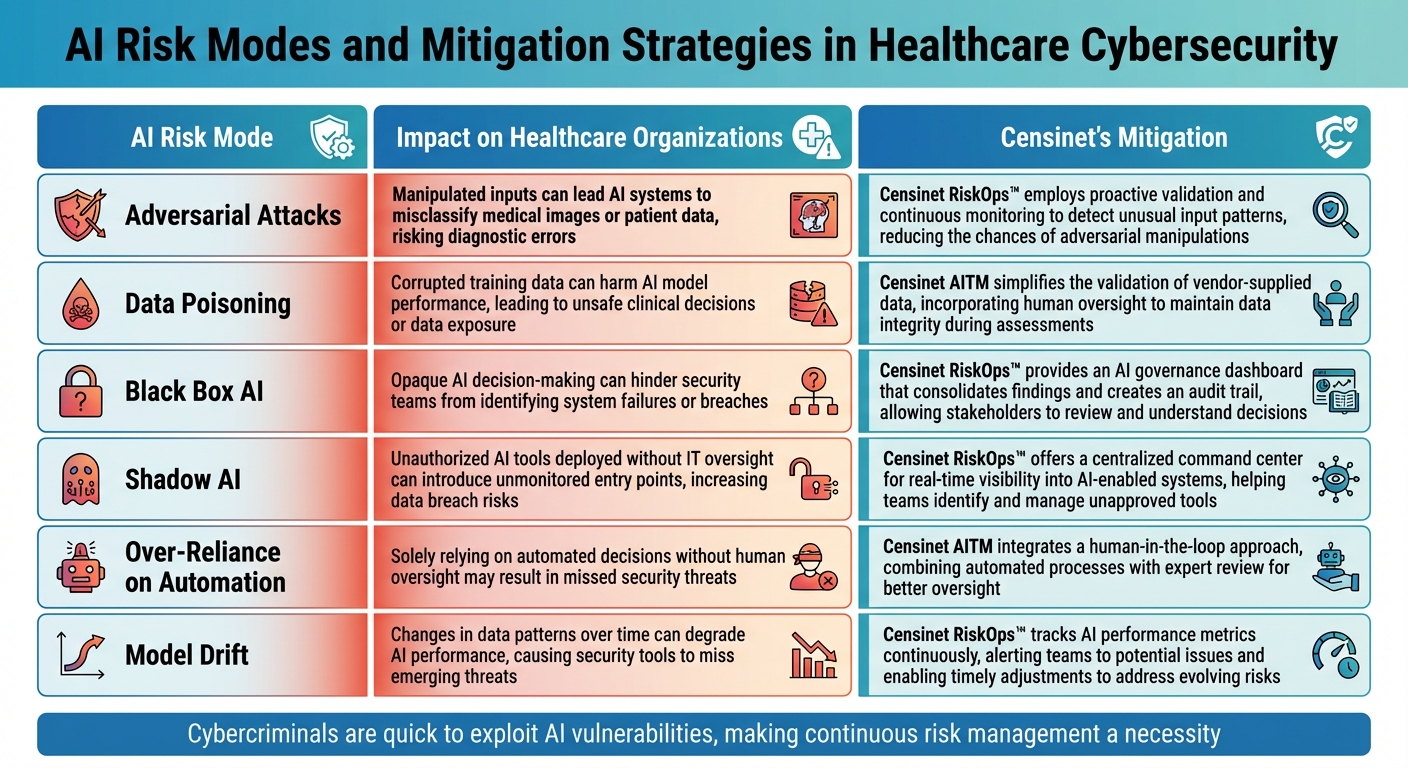

AI Risk Modes and Mitigation Strategies: Comparison Table

AI Risk Modes and Mitigation Strategies in Healthcare Cybersecurity

AI tools can introduce unique vulnerabilities into healthcare cybersecurity, making it essential to understand and address these risks. Unlike traditional models, AI-specific risks require a broader approach and tailored strategies to safeguard healthcare systems effectively [1].

Below is a comparison of key AI risk modes and the strategies designed to mitigate them:

| Risk mode | How it causes harm | Mitigation strategy |
| --- | --- | --- |
| Adversarial attacks | Manipulated inputs can lead AI systems to misclassify medical images or patient data, risking diagnostic errors. | Censinet RiskOps™ employs proactive validation and continuous monitoring to detect unusual input patterns, reducing the chances of adversarial manipulations. |
| Data poisoning | Corrupted training data can harm AI model performance, leading to unsafe clinical decisions or data exposure. | Censinet AI™ simplifies the validation of vendor-supplied data, incorporating human oversight to maintain data integrity during assessments. |
| Black-box opacity | Opaque AI decision-making can hinder security teams from identifying system failures or breaches. | Censinet RiskOps™ provides an AI governance dashboard that consolidates findings and creates an audit trail, allowing stakeholders to review and understand decisions. |
| Shadow AI | Unauthorized AI tools deployed without IT oversight can introduce unmonitored entry points, increasing data breach risks. | Censinet RiskOps™ offers a centralized command center for real-time visibility into AI-enabled systems, helping teams identify and manage unapproved tools. |
| Over-reliance on automation | Solely relying on automated decisions without human oversight may result in missed security threats. | Censinet AI™ integrates a human-in-the-loop approach, combining automated processes with expert review for better oversight. |
| Model drift | Changes in data patterns over time can degrade AI performance, causing security tools to miss emerging threats. | Censinet RiskOps™ tracks AI performance metrics continuously, alerting teams to potential issues and enabling timely adjustments to address evolving risks. |

Cybercriminals are quick to exploit AI vulnerabilities, making continuous risk management a necessity [10]. Tools like Censinet RiskOps™ streamline this process, ensuring that the right teams are equipped to address threats promptly and maintain consistent oversight across healthcare organizations.

Conclusion: Keeping AI Tools as Assets, Not Liabilities

AI tools in healthcare cybersecurity come with immense potential, but they can also become significant risks if not properly managed. Without sufficient oversight, these tools can fall prey to adversarial attacks or suffer from opaque decision-making processes, jeopardizing patient safety and leaving sensitive health data vulnerable. The challenge for healthcare organizations lies in leveraging AI's efficiency while minimizing the risks that cybercriminals might exploit.

Striking the right balance between automation and human oversight is non-negotiable. When AI systems are tasked with critical medical decisions, they introduce new vulnerabilities that must be carefully managed. A compromised AI system could lead to serious consequences, such as incorrect diagnoses, inappropriate treatments, or medication errors - none of which healthcare organizations can afford to overlook [7]. AI should never be treated as a fully automated solution without human involvement.

A study by KLAS Research and the American Hospital Association highlights that many healthcare organizations are still in the early stages of managing AI-related risks. These organizations often struggle with risk mitigation due to the unpredictable and complex nature of AI systems [13]. The study emphasizes the importance of establishing cross-departmental collaboration, involving diverse stakeholders to ensure that AI tools are used safely, securely, and ethically [13]. This approach is especially critical as vulnerabilities often extend beyond internal systems.

Third-party vendors, including providers of EHR systems, AI tools, and cloud-based health IT solutions, now represent a major source of cybersecurity risks in healthcare [11][12]. This makes ongoing monitoring and validation of vendor-provided AI tools a top priority. Healthcare IT teams must ensure they have centralized visibility across all AI systems, implement automated workflows to detect unusual activity, and maintain clear audit trails for every AI-driven decision. These steps are crucial to safeguarding both patient safety and organizational integrity.

FAQs

How can healthcare organizations ensure AI tools enhance security instead of creating risks?

Healthcare organizations can strengthen security with AI tools by taking a well-planned and cautious approach to risk management. One key step is establishing a solid AI governance framework to monitor the implementation and use of AI systems. This framework ensures that AI tools are used responsibly and in alignment with organizational goals. It’s also wise to maintain a carefully curated list of approved AI tools, reducing the risk of exposure to untested or unreliable technologies.

Another critical measure is automating data protection processes. For instance, sensitive or personally identifiable information should be removed before AI systems process any data. This step helps protect patient privacy while ensuring compliance with data security standards. To further enhance accountability, organizations should maintain detailed audit logs for all AI interactions. These logs provide a clear trail of activity, aiding in regulatory compliance and making it easier to trace any irregularities.
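A minimal sketch of both ideas, stripping identifiers before a prompt leaves the organization and logging the interaction, might look like this. The patterns, field names, user, and tool name are assumptions chosen for illustration. Pattern matching of this kind catches only well-formed identifiers (it misses names, for example) and is not by itself sufficient for HIPAA de-identification.

```python
import hashlib
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only; real deployments need far broader coverage.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact(text):
    """Replace each matched identifier with a [LABEL] tag and count the hits."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, hits = pattern.subn(f"[{label}]", text)
        counts[label] = hits
    return text, counts


def audit_entry(user, tool, redacted_text, counts):
    """One JSON log line; stores a hash of the prompt instead of the prompt itself."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "tool": tool,
        "prompt_sha256": hashlib.sha256(redacted_text.encode()).hexdigest(),
        "redactions": counts,
    })


raw = "Patient Jane, SSN 123-45-6789, call 555-867-5309 or jane@example.org"
clean, counts = redact(raw)
entry = audit_entry("dr.smith", "hypothetical-llm", clean, counts)
print(clean)
```

Logging a hash and the redaction counts, not the prompt text, keeps the audit trail itself from becoming a second copy of sensitive data.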

By following these practices, healthcare organizations can minimize risks and ensure AI tools remain secure and effective in supporting cybersecurity efforts.

What are the biggest risks of using unregulated AI tools in healthcare?

Unregulated or so-called 'shadow AI' tools in healthcare come with serious risks. These include data breaches, unauthorized access to private information, and even tampering with algorithms. Beyond privacy concerns, such tools could result in clinical mistakes or misdiagnoses, putting patients' well-being in jeopardy.

Without proper oversight and rigorous evaluation, the dangers only increase. This makes it essential for healthcare organizations to enforce strict policies and closely monitor the use of AI. By ensuring that all AI tools comply with regulatory standards and undergo thorough testing, providers can minimize risks and prioritize patient safety.

Why is human oversight essential for managing AI risks in healthcare?

Human involvement is crucial when it comes to managing the risks associated with AI in healthcare. Even the most advanced AI systems aren't immune to mistakes - they might misread data, make flawed decisions, or even fall victim to cyberattacks. These vulnerabilities can have serious repercussions, such as jeopardizing patient safety, exposing private data, or disrupting essential healthcare operations.

By integrating human oversight, healthcare organizations can double-check AI outputs, detect unusual patterns, and act swiftly to address any issues. This teamwork between humans and AI helps ensure that these tools stay dependable, secure, and focused on their core mission: safeguarding patients and protecting sensitive information.

Related Blog Posts

- AI Cyber Risk: When Your Smart Defense Becomes the Attack Vector

- The AI Security Paradox: Better Protection or Bigger Vulnerabilities?

- The Healthcare Cyber Storm: How AI Creates New Attack Vectors in Medicine

- The Governance Gap: Why Traditional Risk Management Fails with AI


Key Points:

How do AI risk management tools introduce new vulnerabilities?

- Opaque, black‑box models hide malicious activity

- Shadow AI deployments bypass IT oversight

- Model manipulation through adversarial inputs

- Data leakage caused by inadequate controls or unsafe integrations

- Expanded attack surfaces when AI connects to browsers or external systems

How do cybercriminals exploit AI in healthcare environments?

- Adversarial attacks that alter outputs or bypass safeguards

- Data poisoning during model training

- Prompt and jailbreak injections that override AI restrictions

- Manipulation of AI‑controlled medical devices

- Automated reconnaissance enabling targeted social engineering

Why is AI opacity dangerous for clinical operations and cybersecurity?

- Unexplainable decisions make it hard to detect errors

- Hidden attack paths inside model logic

- Inconsistent performance that drifts over time

- Limited forensic visibility after a breach

- Reduced trust among clinicians and patients

What causes AI risk management failures?

- No visibility into model decisions

- Excessive reliance on automation without human validation

- Lack of governance across IT, privacy, and compliance

- No monitoring for model drift

- Insufficient adversarial testing for safety

How does Censinet address AI security challenges?

- Human‑in‑the‑loop review for all AI‑generated outputs

- Configurable workflows ensuring oversight of high‑risk decisions

- Centralized AI governance dashboards

- Automated evidence gathering for vendor and AI‑tool assessments

- Cross‑team coordination for risk mitigation and policy enforcement

What practical steps improve AI security in healthcare?

- Robust AI validation including adversarial testing

- Continuous monitoring for model drift and anomalies

- Strict access controls and secured integration points

- Security information sharing with vendors and partners

- Clear governance frameworks defining who approves AI use