The Great AI Risk Miscalculation: Why 90% of Companies Are Unprepared

Post Summary

Rapid AI adoption has outpaced governance, inventory controls, oversight, and department coordination.

Threats like model poisoning, adversarial attacks, and opaque algorithms can lead to misdiagnoses, device manipulation, and patient safety failures.

Employees use unapproved AI tools, creating major data exposure and compliance risks without IT visibility.

Weak vendor controls, supply chain gaps, and unmonitored fourth parties expose sensitive data and clinical systems to attacks.

HIPAA and existing healthcare rules weren’t designed for AI, creating uncertainty around consent, data use, and accountability.

Platforms such as Censinet RiskOps centralize AI governance, automate assessments, track evidence, highlight fourth-party exposures, and support NIST AI RMF alignment.

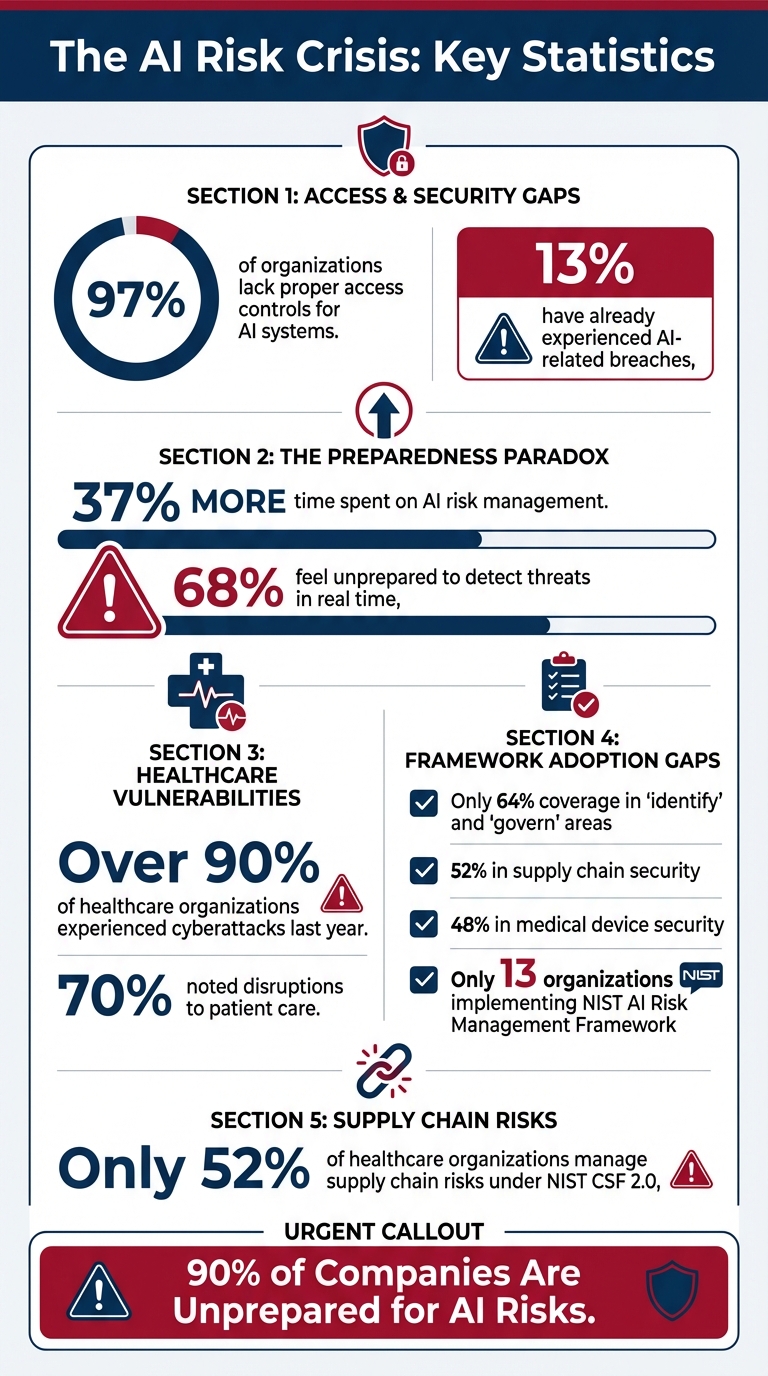

AI is transforming industries like healthcare and cybersecurity, but most companies are failing to manage the risks it brings.

This disconnect stems from inadequate governance, poor visibility into AI systems, and outdated risk management frameworks. Many organizations don't even know what AI tools they use, leaving them vulnerable to data breaches, cyberattacks, and compliance failures.

To address these challenges, businesses need better oversight, stronger frameworks like the NIST AI Risk Management Framework, and tools like Censinet RiskOps for centralized risk management. Without these measures, they risk exposing sensitive data, harming patient safety, and incurring financial losses.

AI Risk Management Statistics: Why 90% of Companies Are Unprepared

AI Risks in Healthcare and Cybersecurity

Healthcare AI systems face unique challenges that go beyond the typical security concerns. One major issue is model poisoning, where attackers tamper with training data to make AI systems produce harmful outcomes, such as misdiagnosing illnesses or suggesting inappropriate treatments. Another threat comes from adversarial attacks, where subtle manipulations to AI inputs can lead to catastrophic errors. Adding to these challenges is the opacity of AI algorithms, which makes it harder to identify vulnerabilities and predict failures, creating significant risks to both patient safety and data security [3][5][6]. These problems not only hinder system performance but also introduce new technical and regulatory hurdles.

These risks aren't just hypothetical. For example, AI-powered medical devices can be remotely altered, hospital IT systems are exposed to new forms of cyberattacks, and criminals are leveraging AI to create malware that’s harder to detect [3]. The lack of transparency in AI models compounds the issue, leaving healthcare providers in a constant cycle of reacting to threats instead of preventing them.

AI-Driven Threats: Model Poisoning and Adversarial Attacks

The technical risks posed by AI in healthcare and cybersecurity are serious. Corrupted training data and model manipulation can cause AI systems to learn incorrect patterns, leading to unpredictable behavior. A related issue is model drift, which occurs when real-world data starts to differ from the data the AI was originally trained on, degrading its performance over time. In drift exploitation, attackers take advantage of that degradation [3][5][6].
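
One way to watch for the kind of drift described above is to compare the distribution of a model input at training time with the same input in production. The sketch below uses the Population Stability Index (PSI), a common drift statistic; it is an illustration under stated assumptions, not a method taken from the cited sources. The function name, the bin count, and the 0.25 alert level (a widely used rule of thumb) are all assumptions.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the baseline range; fall back to 1.0 if the range is zero.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical data: a feature seen during training, and the same feature shifted upward.
baseline = [i / 100 for i in range(1000)]
shifted = [x + 3 for x in baseline]

ALERT_LEVEL = 0.25  # assumed rule-of-thumb threshold, tune per model
if psi(baseline, shifted) > ALERT_LEVEL:
    print("Input distribution has drifted; review the model before trusting its output.")
```

A check like this only signals that inputs have changed. Whether the change is benign or the result of manipulation still needs human review.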

In cybersecurity, these same threats can compromise AI-based defense tools. Attackers may poison models used for threat detection, causing them to ignore specific malware, or they can create adversarial inputs that bypass AI-powered security entirely. This enables attackers to execute sophisticated, stealthy attacks that traditional security measures simply can't stop [3].
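
To make the idea of an adversarial input concrete, here is a toy sketch, assuming a simple linear detector that flags a sample when its score is above zero. The weights, bias, and sample values are hypothetical and chosen only for illustration; real detectors are far more complex. For a linear model, nudging each feature a small step against the sign of its weight is the most efficient way to lower the score, which is the same idea behind sign-gradient attacks.

```python
from typing import Sequence


def score(weights: Sequence[float], bias: float, features: Sequence[float]) -> float:
    """Linear detector score; a value above 0 means the sample is flagged."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def sign(value: float) -> int:
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights: Sequence[float], features: Sequence[float], eps: float) -> list[float]:
    """Shift every feature by eps in the direction that lowers the score."""
    return [x - eps * sign(w) for w, x in zip(weights, features)]


# Hypothetical detector and sample.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -1.0
sample = [1.0, 0.5, 1.0]

adversarial = perturb(WEIGHTS, sample, eps=0.3)

print(score(WEIGHTS, BIAS, sample) > 0)       # True: the original sample is flagged
print(score(WEIGHTS, BIAS, adversarial) > 0)  # False: the perturbed sample slips through
```

Each feature moved by only 0.3, yet the verdict flipped. This is why small, deliberate input changes are treated as a distinct threat rather than ordinary noise.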

Regulatory and Compliance Requirements

Healthcare AI operates in a tightly regulated environment, but the rapid evolution of technology is outpacing the rules. For instance, HIPAA wasn’t designed with AI in mind, leaving questions around data use, patient consent, and accountability [5]. The FDA is stepping in to address this by promoting "Secure by Design" principles, which integrate cybersecurity protections throughout the lifecycle of AI-enabled medical devices [5].

Adapting regulations to keep up with AI's growth is critical. The Health Sector Coordinating Council is working on new guidelines for AI cybersecurity risks, set to be released by 2026, while also aligning AI practices with existing HIPAA and FDA standards [5]. On the state level, around 200 bills are being introduced to address various aspects of healthcare AI, showing that states are taking action even as federal regulations lag behind [8]. These regulatory gaps contribute to increased financial and operational risks for healthcare organizations.

The Cost of Poor AI Governance

Weak governance in AI systems can lead to skyrocketing costs, legal troubles, and reactive cybersecurity measures. Failing to comply with HIPAA, CMS, and OSHA standards can result in hefty fines and long-lasting reputational damage [7].

The consequences extend beyond finances. Poorly governed AI systems jeopardize patient safety, whether through errors in decision-making, tampering with medical devices, or treatment delays caused by cyberattacks. Data breaches expose sensitive patient information, and advanced threats like model inversion attacks, prompt injection vulnerabilities, and unauthorized system access further highlight the dangers [3]. Without robust governance measures - such as HIPAA-compliant cloud environments, encryption, audit trails, and role-based access controls - healthcare organizations remain vulnerable to these risks [7]. Addressing these gaps isn't just important; it's essential for protecting both patients and the future of healthcare operations.

Why Most Companies Are Unprepared for AI Risks

The rapid adoption of AI is outpacing the ability of many companies to manage the risks it brings. Traditional oversight methods simply aren't equipped to handle the complexity of AI, leaving businesses vulnerable [4][10]. This vulnerability stems from critical gaps in areas like system oversight, third-party risks, and the lack of AI-specific risk management frameworks.

Lack of AI Inventory and Oversight

Many organizations don’t have a clear view of the AI systems they use, making it hard to assess risks or enforce policies [4][9]. The rise of "shadow AI" - AI tools adopted by employees without IT's knowledge - only adds to the problem [11]. This lack of visibility puts sensitive data at risk and can even threaten compliance and patient safety.

Current approaches relying on employee training and warnings fall short without automated monitoring tools. These tools are essential for tracking AI usage in real time and identifying potential violations before they escalate [9]. Unfortunately, companies often discover issues too late, during governance reviews, when vulnerabilities have already taken root.
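
As a rough illustration of what automated monitoring could look like, the sketch below compares observed usage events against an approved AI inventory and reports tools that are in use but not approved. Everything here is hypothetical: the `UsageEvent` record, the domain names, and the idea that events come from something like web proxy logs are assumptions, not a description of any specific product.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class UsageEvent:
    """One observed request, e.g. parsed from a proxy log (assumed source)."""
    user: str
    domain: str


# Hypothetical inventory: tools approved by IT, and a wider list of known AI services.
APPROVED_AI_DOMAINS = {"approved-ai.example.com"}
KNOWN_AI_DOMAINS = APPROVED_AI_DOMAINS | {
    "chat.unvetted-ai.example",
    "summarizer.example.net",
}


def find_shadow_ai(events: Iterable[UsageEvent]) -> dict[tuple[str, str], int]:
    """Count requests per (user, domain) to known AI tools that are not approved."""
    hits: Counter = Counter()
    for event in events:
        if event.domain in KNOWN_AI_DOMAINS and event.domain not in APPROVED_AI_DOMAINS:
            hits[(event.user, event.domain)] += 1
    return dict(hits)


events = [
    UsageEvent("alice", "approved-ai.example.com"),
    UsageEvent("bob", "chat.unvetted-ai.example"),
    UsageEvent("bob", "chat.unvetted-ai.example"),
    UsageEvent("carol", "intranet.example.org"),
]

for (user, domain), count in find_shadow_ai(events).items():
    print(f"{user} used unapproved AI tool {domain} {count} time(s)")
```

The value of a check like this depends on keeping both lists current, which is one reason an AI inventory has to be maintained rather than compiled once.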

Third-Party and Supply Chain Vulnerabilities

The risks don’t stop at internal operations - external partnerships bring additional challenges. Vendor ecosystems, particularly in industries like healthcare, amplify these risks. For example, only 52% of healthcare organizations manage supply chain risks under the NIST Cybersecurity Framework 2.0, leaving nearly half exposed [1]. Alarmingly, over 90% of healthcare organizations reported experiencing a cyberattack last year, with 70% noting disruptions to patient care [2].

Attackers often target less secure vendors, such as billing companies, outsourced IT providers, or medical device manufacturers, to infiltrate core systems [11]. While healthcare providers may have limited control over their vendors' security practices, they still face the fallout - both clinical and financial - when breaches occur [11]. The interconnected nature of healthcare systems means that even a small vulnerability in an obscure vendor can have widespread consequences, and these third-party breaches are increasing each year [1][2].

Absence of Tailored AI Risk Frameworks

AI risk management is often treated as a narrow technical challenge rather than a broader business priority [12][10][13]. Risk and compliance teams are frequently involved too late, after AI systems have already been deployed. While frameworks like the NIST AI Risk Management Framework and the Health Sector Coordinating Council models exist, they are rarely woven into everyday operations.

Without coordination across departments, organizations struggle to enforce consistent policies [4]. This leads to a reactive approach, where risks are addressed only after incidents occur, rather than being mitigated proactively. Ultimately, this lack of preparation leaves companies scrambling to keep up with the challenges AI introduces.

How to Manage AI Risks Effectively

Effectively managing AI risks calls for a well-rounded approach that combines technology, structured processes, and human expertise. Organizations need scalable systems that can grow alongside AI adoption while maintaining clear oversight and control. The goal is to shift from reactive problem-solving to proactive governance - spotting and addressing potential issues before they escalate. With risks on the rise, unified platforms like Censinet RiskOps have become crucial for modern AI governance.

Using Censinet RiskOps for AI Governance at Scale

Censinet RiskOps offers a centralized solution for managing AI risks, particularly in healthcare settings. The platform simplifies third-party risk assessments by automating key tasks: streamlining security questionnaires, summarizing evidence, and identifying fourth-party exposures [15]. This automation speeds up the assessment process and supports accuracy.

Functioning like an "air traffic control" system, Censinet RiskOps directs critical findings and tasks to the appropriate stakeholders, including members of AI governance committees, for review and action. All relevant data is displayed in a real-time, user-friendly dashboard, providing a single, reliable source for tracking AI-related policies, risks, and tasks.

Balancing Automation with Human Oversight

AI works best when it complements human decision-making, especially in healthcare. Censinet AI uses a human-in-the-loop model, ensuring that risk teams stay in control through customizable rules and review workflows. While automation handles time-consuming tasks like evidence validation, policy drafting, and risk mitigation, human expertise remains essential for making critical decisions. With continuous monitoring, active oversight, and regular audits, the system ensures reliability and minimizes risks [14].

Applying Industry Frameworks with Censinet Tools

Censinet RiskOps aligns with established standards such as the NIST AI Risk Management Framework (RMF), enabling organizations to enforce strong AI policies [15]. This integration helps teams assess and manage risks across their entire ecosystem, from internal AI systems to third-party vendors and supply chain partners.

"With Censinet RiskOps, we're enabling healthcare leaders to manage

at scale to ensure safe, uninterrupted care."

Conclusion

The rapid adoption of AI in healthcare is moving faster than the development of effective risk management strategies, leaving organizations exposed to risks that could compromise patient safety, regulatory compliance, and trust. As AI systems evolve and introduce new vulnerabilities, relying on reactive measures is no longer enough.

To address this, a unified approach to risk management is essential. Tackling these risks requires collaboration across IT, clinical, compliance, and executive teams to ensure AI is used securely and ethically [1]. By aligning with frameworks like the NIST AI Risk Management Framework and utilizing unified oversight platforms, organizations can elevate AI governance from a mere compliance task to a strategic advantage.

Adopting frameworks such as the NIST Cybersecurity Framework 2.0 has shown measurable progress [1]. However, significant gaps remain: only 64% coverage in areas like "identify" and "govern", 52% in supply chain security, and 48% in medical device security. These gaps highlight vulnerabilities that traditional safeguards cannot fully address.

Moreover, AI adoption is outpacing the trust of patients and clinicians [16]. Proactive governance is crucial to prevent harm, mitigate liabilities, and reduce financial losses. With only 13 organizations currently implementing the NIST AI Risk Management Framework [1], early adopters are setting the standard for risk maturity.

Healthcare leaders must act decisively. Proven frameworks and scalable platforms are already available, making it possible to move from reactive to proactive governance. This shift will not only secure operations but also pave the way for safe and trustworthy AI advancements in healthcare.

FAQs

Why are so many companies unprepared to manage AI risks effectively?

Many companies face challenges in managing AI risks because they don’t have strong governance structures or specific policies designed to address the complexities of AI. This lack of preparation often leaves them vulnerable to issues like biases, data security weaknesses, and ethical dilemmas.

On top of that, the rapid growth of AI adoption has outpaced the creation of oversight systems and regulatory safeguards. As a result, many businesses are unprepared to tackle new threats. To close these gaps and strengthen their defenses, organizations need to take a proactive stance by conducting thorough risk assessments and maintaining continuous monitoring.

What are the key risks AI introduces in healthcare and cybersecurity?

AI systems in healthcare come with their share of risks, including data breaches, algorithm manipulation, and security gaps in medical devices. These issues could jeopardize both patient safety and privacy. In the realm of cybersecurity, dangers like adversarial attacks, model poisoning, data theft, and supply-chain vulnerabilities pose serious threats to the integrity and reliability of essential systems.

To tackle these challenges, organizations need to stay ahead of the curve. This means adopting strong risk management practices, establishing clear AI governance frameworks, and remaining alert to emerging threats to protect their operations and the people they serve.

How can organizations use frameworks like NIST to manage AI risks effectively?

Organizations can tackle AI risks head-on by integrating frameworks like the NIST AI Risk Management Framework into their processes in a structured way that aligns with their unique needs. At its core, the framework focuses on four key functions: Govern, Map, Measure, and Manage. The key is to adapt these functions to fit the specific challenges and objectives of your organization.

To put this into action, start by forming cross-functional governance teams that bring together diverse expertise. Regular risk assessments are essential - evaluate potential risks and rank them based on their impact. From there, establish clear policies and controls to continuously monitor your AI systems, ensuring they stay secure and dependable over time. Tools like risk registers or dashboards can be incredibly useful for tracking risks in real time. These tools not only simplify oversight but also help maintain compliance and build trust in your AI operations.
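
A risk register of the kind mentioned above can start out very simple. The sketch below tags each risk with one of the four functions and ranks entries by impact. It is a minimal illustration, not a NIST-defined format: the `Risk` fields, the 1 to 5 scales, the sample entries, and the use of likelihood as a tie-breaker are all assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class RmfFunction(Enum):
    """The four functions named in the NIST AI Risk Management Framework."""
    GOVERN = "Govern"
    MAP = "Map"
    MEASURE = "Measure"
    MANAGE = "Manage"


@dataclass
class Risk:
    name: str
    function: RmfFunction
    impact: int      # assumed scale: 1 (low) to 5 (severe)
    likelihood: int  # assumed scale: 1 (rare) to 5 (frequent)


def ranked(register: list[Risk]) -> list[Risk]:
    """Order risks by impact, highest first; likelihood breaks ties (an assumption)."""
    return sorted(register, key=lambda r: (r.impact, r.likelihood), reverse=True)


# Hypothetical entries for illustration only.
register = [
    Risk("Unreviewed vendor model", RmfFunction.MAP, impact=3, likelihood=4),
    Risk("Poisoned training data", RmfFunction.MEASURE, impact=5, likelihood=2),
    Risk("No AI inventory", RmfFunction.GOVERN, impact=5, likelihood=4),
]

for risk in ranked(register):
    print(f"[{risk.function.value}] {risk.name}: impact {risk.impact}, likelihood {risk.likelihood}")
```

Keeping the function tag on every entry makes it easy to see which of the four areas has no tracked risks at all, which is often a sign of a blind spot rather than an absence of risk.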

Related Blog Posts

- The AI Cyber Risk Time Bomb: Why Your Security Team Isn't Ready

- Beyond the Hype: 7 Hidden AI Risks Every Executive Must Address in 2025

- AI Cyber Risk: When Your Smart Defense Becomes the Attack Vector

- The Governance Gap: Why Traditional Risk Management Fails with AI

Key Points:

Why are most companies struggling to manage AI risks effectively?

- AI adoption is moving faster than governance, leaving major oversight gaps

- Organizations lack AI inventories, making it impossible to track systems and tools

- Shadow AI bypasses IT controls, increasing data leakage and compliance violations

- Traditional risk frameworks don’t apply, creating blind spots in model behavior

- Cross‑department collaboration is missing, delaying policy enforcement

What are the biggest AI risks facing healthcare organizations?

- Model poisoning attacks that corrupt data and produce dangerous clinical outputs

- Adversarial inputs that manipulate diagnostics or recommendations

- Opaque algorithms that hide failure points and bias

- Remote device manipulation, threatening patient safety

- AI‑driven malware designed to evade detection systems

How does poor AI governance lead to financial and operational harm?

- Regulatory fines from HIPAA, CMS, or OSHA violations

- Patient safety failures from incorrect AI‑generated decisions

- Data breaches exposing PHI through AI model vulnerabilities

- Legal liabilities linked to model inversion and prompt injection attacks

- Reputational damage when AI systems behave unpredictably

Why is lack of AI inventory and oversight so dangerous?

- Organizations don’t know what AI tools are in use, preventing proper controls

- Shadow AI expands attack surfaces without monitoring

- High‑risk workflows operate without review, leading to compliance gaps

- Manual oversight fails, discovering issues only during audits

- Automated monitoring is needed to detect real‑time misuse or unsafe behavior

How do third‑party and supply chain vendors increase AI risk?

- Vendors may introduce insecure AI models, exposing sensitive systems

- Fourth‑party subcontractors go unseen, creating hidden vulnerabilities

- Supply chain risk is poorly managed, especially in healthcare

- Attacks often target the weakest vendor, not the primary organization

- Clinical disruptions occur when vendor failures affect patient care

How does Censinet RiskOps support scalable AI governance?

- Automates AI risk assessments and evidence review

- Identifies fourth‑party exposures across AI ecosystems

- Centralizes dashboards for real‑time AI policy and risk tracking

- Routes tasks to governance committees, ensuring accountability

- Supports NIST AI RMF alignment, embedding structured controls