The AI Risk Professional: New Skills for a New Era of Risk Management

Post Summary

Why does healthcare need dedicated AI risk professionals?

They manage threats like data breaches, system failures, algorithmic bias, and compliance gaps as AI adoption expands.

What technical competencies are required for AI risk roles?

Threat detection, anomaly analysis, data‑poisoning awareness, predictive analytics, NLP‑based social‑engineering detection, and cyber defense.

What governance and compliance skills are essential?

HIPAA, GDPR, NIST AI RMF, and policies for AI procurement, monitoring, audits, ethics, and lifecycle management.

How do Censinet RiskOps™ and Censinet AI™ improve AI risk management?

They automate assessments, summarize evidence, identify vendor and fourth‑party risks, and maintain human oversight.

What team structures support effective AI risk management?

Multidisciplinary groups spanning cybersecurity, clinical, compliance, legal, operations, device safety, and supply‑chain risk.

How can organizations close AI skill gaps?

Continuous training, explainable AI literacy, ethics education, structured governance committees, and standardized risk playbooks.

Artificial intelligence (AI) is transforming healthcare, but it also introduces serious risks, such as system failures, privacy breaches, and biased algorithms, that can directly impact patient safety. As AI adoption grows, so does the need for professionals who can manage these risks effectively. AI risk professionals play a key role in identifying vulnerabilities, ensuring compliance with regulations like HIPAA, and safeguarding against threats such as cyberattacks and data manipulation.

Key Takeaways:

Organizations must act now by investing in skilled professionals, adopting advanced tools, and creating governance frameworks to manage the complexities of AI in healthcare.

Required Skills for AI Risk Professionals

Managing AI risks in healthcare demands a mix of technical know-how and strategic foresight. Professionals in this field need to grasp the technology itself while also understanding its broader effects on patient safety, data security, and compliance with regulations. These challenges go far beyond what traditional IT risk management typically covers.

Technical Skills: Spotting AI-Driven Threats

AI risk professionals need to excel in identifying and addressing potential threats. This includes using anomaly detection to flag irregular network activity or unusual user behavior in clinical environments. Predictive analytics plays a key role in anticipating potential risks, while natural language processing (NLP) can help identify social engineering attempts. Additionally, keeping an eye out for data poisoning in healthcare records is crucial to maintaining system integrity [3][6][1]. These technical skills form the backbone of effective risk management, complementing broader governance and compliance efforts.
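
As a rough illustration of the anomaly detection idea, the sketch below scores new observations against a historical baseline using z-scores. It is a minimal example under stated assumptions, not a production detector: the function name `flag_anomalies`, the login counts, and the threshold of 3 standard deviations are all hypothetical, and real clinical environments would typically need richer features and tuned models.

```python
from statistics import mean, stdev

def flag_anomalies(baseline, observed, threshold=3.0):
    """Return indices of observed values that deviate from the baseline mean
    by more than `threshold` baseline standard deviations.

    `baseline` must contain at least two values (required by statistics.stdev).
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value counts as anomalous.
        return [i for i, v in enumerate(observed) if v != mu]
    return [i for i, v in enumerate(observed) if abs(v - mu) / sigma > threshold]

# Hypothetical hourly login counts for one clinical workstation.
baseline_logins = [12, 15, 11, 14, 13, 12, 16, 14]
todays_logins = [13, 15, 48, 12]

flagged = flag_anomalies(baseline_logins, todays_logins)
print(flagged)  # index of the hour with 48 logins
```

Keeping the baseline separate from the values being scored prevents a large outlier from inflating the very statistics used to judge it.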

AI Governance and Compliance Knowledge

A solid understanding of regulations like HIPAA, GDPR, and the NIST AI Risk Management Framework is essential. This expertise helps in crafting policies for the procurement, deployment, and monitoring of AI systems in healthcare. Establishing multidisciplinary governance committees ensures the integrity of training data and creates clear lines of accountability throughout the AI lifecycle [7][2]. Incorporating these regulatory insights into routine risk evaluations strengthens overall mitigation strategies.

Risk Assessment and Mitigation Methods

Continuous threat evaluation is key, and AI-driven analytics can help prioritize vulnerabilities that pose risks to patient safety and data security. However, automation alone isn’t enough: human oversight is critical for identifying issues like model poisoning, data corruption, and adversarial attacks [5][3][8][2]. A stark reminder of the stakes is the September 2020 ransomware attack on University Hospital Düsseldorf in Germany. The attack blocked system access and forced the hospital to turn away emergency patients, and it was linked to the death of a patient who had to be diverted to another hospital, underscoring the importance of robust risk assessment and swift response capabilities [4].
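
One simple way to picture prioritization is a likelihood-times-impact score, sketched below. This is an illustrative assumption rather than a method described in the sources cited above: the `Finding` class, the 1 to 5 scales, and the sample findings are all hypothetical, and the scoring rule is a common risk-matrix convention, not a healthcare-specific standard.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    name: str
    likelihood: int      # 1 (rare) to 5 (almost certain); hypothetical scale
    patient_impact: int  # 1 (negligible) to 5 (life-threatening); hypothetical scale
    data_impact: int     # 1 (negligible) to 5 (severe breach); hypothetical scale

def risk_score(finding):
    """Likelihood multiplied by the worse of the two impact ratings."""
    return finding.likelihood * max(finding.patient_impact, finding.data_impact)

def prioritize(findings):
    """Sort findings from highest to lowest score; ties go to higher patient impact."""
    return sorted(
        findings,
        key=lambda f: (risk_score(f), f.patient_impact),
        reverse=True,
    )

# Hypothetical findings for illustration only.
findings = [
    Finding("Unpatched imaging workstation", 4, 5, 3),
    Finding("Stale vendor questionnaire", 3, 1, 2),
    Finding("Unvalidated training data feed", 2, 4, 4),
]

ranked = [f.name for f in prioritize(findings)]
print(ranked)
```

A ranked list like this is a starting point for human reviewers, who can override the order when context the score cannot capture matters.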

Tools for AI Risk Management

The right tools can transform AI risk management from a reactive scramble into a proactive strategy. In healthcare, this means relying on platforms that automate tedious tasks, verify evidence instantly, and provide a clear view of all AI-related risks. Without these capabilities, risk teams often find themselves overwhelmed by the growing complexity of AI systems and the threats they bring.

Using Censinet RiskOps™ and Censinet AI™

To address these challenges, platforms like Censinet RiskOps™ and Censinet AI™ offer focused solutions tailored to managing AI risks. Censinet RiskOps™ acts as a central hub for overseeing AI risks across healthcare organizations. It automates both third-party and internal risk assessments while maintaining strict oversight. Meanwhile, Censinet AI™ takes things a step further, enabling vendors to complete security questionnaires in seconds. It also streamlines the process by summarizing vendor evidence, documenting critical details, and identifying risks tied to product integrations and fourth-party exposures.

A key feature of these platforms is their human-in-the-loop design, which blends automation with expert oversight. While automation handles tasks like evidence validation, policy creation, and risk mitigation, human experts retain control through customizable rules and review processes. This approach ensures that automation supports decision-making rather than replacing it. With this balance, healthcare organizations can scale their risk management efforts without compromising the nuanced judgment needed to tackle complex AI threats to patient safety and data security.
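
The general human-in-the-loop pattern can be sketched as a rule that decides when an automated result must wait for a person. The example below is a generic illustration and does not represent how Censinet's products are implemented: `AutomatedResult`, `needs_human_review`, `process`, and the 0.9 confidence cutoff are hypothetical names and values chosen for this sketch.

```python
from dataclasses import dataclass

@dataclass
class AutomatedResult:
    task: str
    confidence: float           # automation's self-reported confidence, 0.0 to 1.0
    affects_patient_care: bool  # whether the outcome touches clinical systems

def needs_human_review(result, min_confidence=0.9):
    """Customizable rule: review anything patient-facing or low-confidence."""
    return result.affects_patient_care or result.confidence < min_confidence

def process(result, reviewer_approves):
    """Accept low-risk results automatically; defer the rest to a reviewer callback."""
    if needs_human_review(result):
        if reviewer_approves(result):
            return "approved by reviewer"
        return "rejected by reviewer"
    return "auto-accepted"

# Hypothetical results: one routine, one that touches patient care.
routine = AutomatedResult("evidence validation", 0.97, False)
clinical = AutomatedResult("risk mitigation plan", 0.97, True)

print(process(routine, lambda r: True))    # auto-accepted
print(process(clinical, lambda r: False))  # rejected by reviewer
```

Passing the reviewer in as a callback keeps the final decision with a person while letting the rule itself be adjusted per organization.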

AI Risk Dashboards and Team Collaboration

AI-powered dashboards serve as a centralized hub for risk information, offering real-time insights and a single source of truth. These dashboards leverage machine learning to predict risks and potential threats, enabling teams to act before problems escalate [3][9].

In Censinet RiskOps™, the AI risk dashboard operates like "air traffic control" for AI governance. It automatically routes key findings and tasks to the appropriate stakeholders, including members of the AI governance committee, ensuring that critical risks are addressed by the right people at the right time. This system promotes continuous oversight, accountability, and governance throughout the organization. By automating tasks like monitoring, reporting, and compliance audits, these dashboards not only reduce human error but also free up staff to focus on more strategic initiatives [3]. With centralized insights and streamlined collaboration, healthcare organizations can build stronger, more effective teams for managing AI risks.
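
To make the routing idea concrete, the sketch below maps finding categories to stakeholder groups and always includes the governance committee for critical items. It is a hypothetical illustration only and is not based on the Censinet RiskOps™ interface: the category names, team names, and the `route_finding` function are invented for this example.

```python
# Hypothetical mapping from finding category to the teams that should see it.
ROUTING_RULES = {
    "privacy": ["compliance", "legal"],
    "model_bias": ["ai_governance_committee", "clinical"],
    "vendor": ["third_party_risk"],
}

# Fallback for categories without an explicit rule.
DEFAULT_RECIPIENTS = ["ai_governance_committee"]

def route_finding(category, severity):
    """Return the stakeholder groups that should receive a finding."""
    recipients = list(ROUTING_RULES.get(category, DEFAULT_RECIPIENTS))
    # Critical findings always reach the governance committee.
    if severity == "critical" and "ai_governance_committee" not in recipients:
        recipients.append("ai_governance_committee")
    return recipients

print(route_finding("vendor", "critical"))
print(route_finding("privacy", "low"))
```

Keeping the rules in a plain table makes it easy for a governance committee to review and change who is notified without touching the routing logic.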


Building AI Risk Management Teams

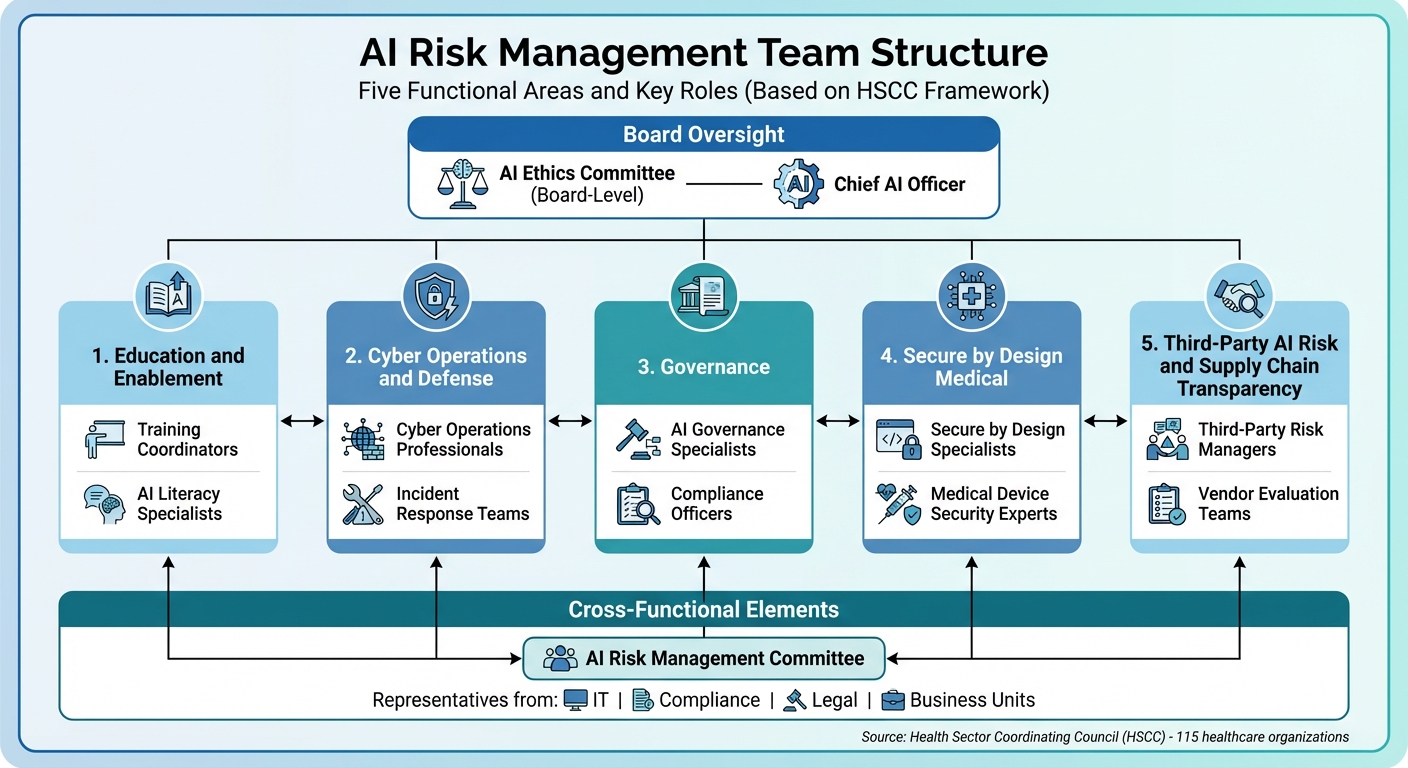

AI Risk Management Team Structure and Key Roles in Healthcare

With the right skills and tools in place, assembling effective teams is a crucial step in creating a comprehensive AI risk management strategy.

Healthcare organizations must form structured, multidisciplinary teams to address both immediate skill shortages and long-term governance needs. This involves bringing together technical experts who can tackle risks like data poisoning and compliance officers who stay ahead of shifting regulatory landscapes.

Closing Skills Gaps in AI Risk Management

Managing AI risks requires a combination of technical expertise and broader, adaptive skills. Abilities like ethical reasoning, emotional intelligence, and navigating uncertainty are essential for building trust and addressing complex challenges. As Annalisa Camarillo highlights:

"The rapid pace of AI advancements and regulatory updates calls for a commitment to lifelong learning."

On the technical side, organizations often face gaps in areas like identifying algorithmic bias, validating models, monitoring AI systems continuously, and applying explainable AI techniques. To address these gaps, healthcare organizations should prioritize ongoing training programs that align with the latest AI advancements. Workshops, webinars, and certifications are practical ways to ensure teams stay informed about emerging threats. Charlie Wright reinforces this idea:

"Equip your team with the knowledge to use AI responsibly. Regular training sessions on AI benefits, best practices, and risk management help employees make informed decisions, reducing potential risks and promoting a culture of accountability."

Beyond training, organizations should establish strong internal governance structures and cybersecurity measures to further enhance team capabilities.

Defining Team Roles and Responsibilities

Guidance from the Health Sector Coordinating Council (HSCC) offers a clear framework for structuring AI risk management teams [5]. The HSCC's AI Cybersecurity Task Group, which includes 115 healthcare organizations, identified five key functional areas for AI risk management: Education and Enablement, Cyber Operations and Defense, Governance, Secure by Design Medical, and Third-Party AI Risk and Supply Chain Transparency.

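Each of these areas requires specific roles with well-defined responsibilities. Key positions include AI governance specialists, cyber analysts, secure-by-design engineers, and third-party risk managers.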

Additionally, a board-level AI ethics committee can provide high-level oversight, ensuring ethical considerations remain central to AI initiatives.

The HSCC emphasized the importance of this structured approach:

"The AI Task Group recognized the complexity and associated risk of AI technology used in clinical, administrative, and financial health sector applications, and accordingly divided the mix of AI issues into manageable work streams of discrete functional areas of concentration while staying mindful of the interrelationships and interdependencies among those functions."

Cross-functional collaboration is critical. Establishing an AI risk management committee with representatives from IT, compliance, legal, and business units ensures balanced oversight. This committee can define clear escalation pathways for addressing risks, create feedback loops to learn from past experiences, and adapt to new challenges. Such a coordinated approach ensures that strategic oversight and day-to-day responsibilities are seamlessly integrated, supporting the organization's broader AI risk management goals.

Conclusion: Preparing for AI Risk Management Challenges

Healthcare organizations are encountering a wide range of AI-related risks, from data bias and governance gaps to adaptive phishing tactics and data manipulation. Tackling these challenges requires a fresh approach to risk management, one that aligns with the dynamic nature of AI technologies.

To address these risks effectively, organizations need to focus on three key areas: specialized skills, advanced tools, and collaborative efforts across departments. Technical expertise, such as detecting algorithmic bias and validating models, must be complemented by broader abilities like ethical reasoning and systems thinking. These skills are essential for navigating uncertainty and fostering trust in AI systems.

Collaboration plays a pivotal role in this process. By bringing together IT, legal, compliance, and clinical teams, healthcare organizations can ensure that AI risks are assessed from multiple viewpoints. The creation of interdisciplinary tech trust teams can provide a well-rounded approach to managing the technical, ethical, and legal aspects of AI risk management [12].

Platforms like Censinet RiskOps™ offer practical solutions for managing these challenges. Acting as a central hub, RiskOps simplifies risk assessments, provides real-time insights through user-friendly dashboards, and directs critical findings to the right stakeholders. Meanwhile, Censinet AI™ enhances operational efficiency by automating workflows while retaining human oversight, helping healthcare organizations scale without compromising patient safety.

The organizations that will succeed in this rapidly evolving landscape are those that act now by investing in their teams, adopting purpose-built tools, and establishing governance frameworks that can adapt as AI technology continues to advance.

FAQs

What skills are essential for managing AI risks in healthcare?

AI risk professionals working in healthcare need a well-rounded skill set that blends technical expertise, regulatory knowledge, and organizational capabilities. Their role involves creating AI systems that are both ethical and explainable, ensuring compliance with healthcare regulations, and tackling challenges such as data bias and model drift.

In addition to these technical responsibilities, they play a key role in setting clear vendor criteria, implementing effective incident reporting systems, and upholding rigorous standards for data quality and governance. Success in this field also relies on strong collaboration between clinical and technical teams, promoting transparency, and equipping staff with the knowledge to identify and address AI-related risks effectively.

How does Censinet RiskOps™ improve AI risk management and ensure compliance?

Censinet RiskOps™ takes the complexity out of AI risk management by automating key processes like continuous monitoring and anomaly detection across all data. It delivers real-time risk assessments, helping organizations spot and tackle potential problems before they grow into bigger challenges.

The platform also simplifies documentation, ensures adherence to regulatory standards, and minimizes manual work. This allows teams to concentrate on more strategic priorities. With its actionable insights and ability to speed up decision-making, Censinet RiskOps™ boosts oversight and reinforces overall risk management efforts.

Why is it important to have a multidisciplinary team for managing AI risks in healthcare?

A well-rounded team is essential for tackling the challenges AI brings to healthcare. By combining expertise from fields like technology, medicine, law, and ethics, organizations can better address issues such as cybersecurity risks, regulatory requirements, and patient safety.

This kind of collaboration allows potential risks to be viewed from different angles, ensuring AI systems remain secure, meet legal standards, and uphold patient care values. Bringing together varied perspectives is key to keeping pace with the fast-changing world of AI in healthcare.



Key Points:

Why does healthcare need dedicated AI risk professionals?

- AI introduces new failure modes including model drift, data poisoning, and adversarial attacks.

- Cyber threats are escalating, especially AI‑powered ransomware and manipulation of diagnostic systems.

- Regulatory expectations are rising, with HIPAA, NIST, and DOJ scrutiny increasing.

- Bias and inequity risks demand specialized oversight.

- High‑stakes clinical environments require safe, transparent, human‑supervised AI.

What technical competencies are required for AI risk roles?

- Threat detection and anomaly analysis across clinical networks.

- Monitoring for data poisoning in EHR and training datasets.

- Predictive analytics to anticipate failures.

- NLP-based detection of social engineering attempts.

- Understanding adversarial machine‑learning tactics and defensive strategies.

What governance and compliance skills are essential?

- Knowledge of HIPAA, GDPR, and NIST AI RMF for safe data handling.

- Policy development for procurement, deployment, and monitoring.

- Lifecycle oversight from training‑data validation to ongoing auditing.

- Committee-based governance to ensure multidisciplinary accountability.

- Ethical frameworks for fairness, bias mitigation, and transparency.

How do Censinet RiskOps™ and Censinet AI™ improve AI risk management?

- Automate evidence collection and third‑party vendor questionnaires.

- Summarize documentation for clearer human review.

- Identify integration risks including fourth‑party exposure.

- Route findings to AI governance committees.

- Provide real-time AI risk dashboards to centralize oversight and compliance.

What team structures support effective AI risk management?

- Multidisciplinary governance groups spanning IT, clinical leadership, risk, compliance, legal, and cybersecurity.

- Specialized roles including AI governance specialists, cyber analysts, secure‑by‑design engineers, and third‑party risk managers.

- Board-level oversight via AI ethics committees.

- Cross-functional coordination for incident response, audits, and bias reviews.

How can organizations close AI skill gaps?

- Continuous education on bias, transparency, cybersecurity, and safe AI use.

- Explainable AI training for teams validating model outputs.

- Pilot programs with structured feedback loops.

- Ethical reasoning and systems thinking development.

- Governance frameworks defining responsibilities, escalation paths, and decision protocols.