AI Risks in Medical Device Development: Security Solutions

Post Summary

Artificial intelligence is transforming medical devices, but it introduces serious cybersecurity challenges that traditional security measures can't handle. AI-enabled devices, like diagnostic tools and wearable monitors, rely on cloud connectivity and continuous learning, which makes them vulnerable to attacks such as data breaches, adversarial inputs, and model tampering. These risks directly threaten patient safety, with examples including altered insulin dosages or manipulated diagnostic outcomes.

To address these challenges, manufacturers and healthcare organizations need tailored security strategies. Key solutions include:

- Data Integrity Protocols: Use cryptographic hashing, encryption, and immutable audit logs to safeguard training data.

- Adversarial Testing: Simulate attacks and implement anomaly detection to protect AI models.

- Secure Development Practices: Employ threat modeling, secure coding, and supply chain transparency (e.g., SBOMs).

- Regulatory Compliance: Follow FDA guidelines, NIST frameworks, and HSCC best practices to ensure safety and functionality.

- Centralized Risk Management: Platforms like Censinet RiskOps™ streamline monitoring and mitigation efforts.

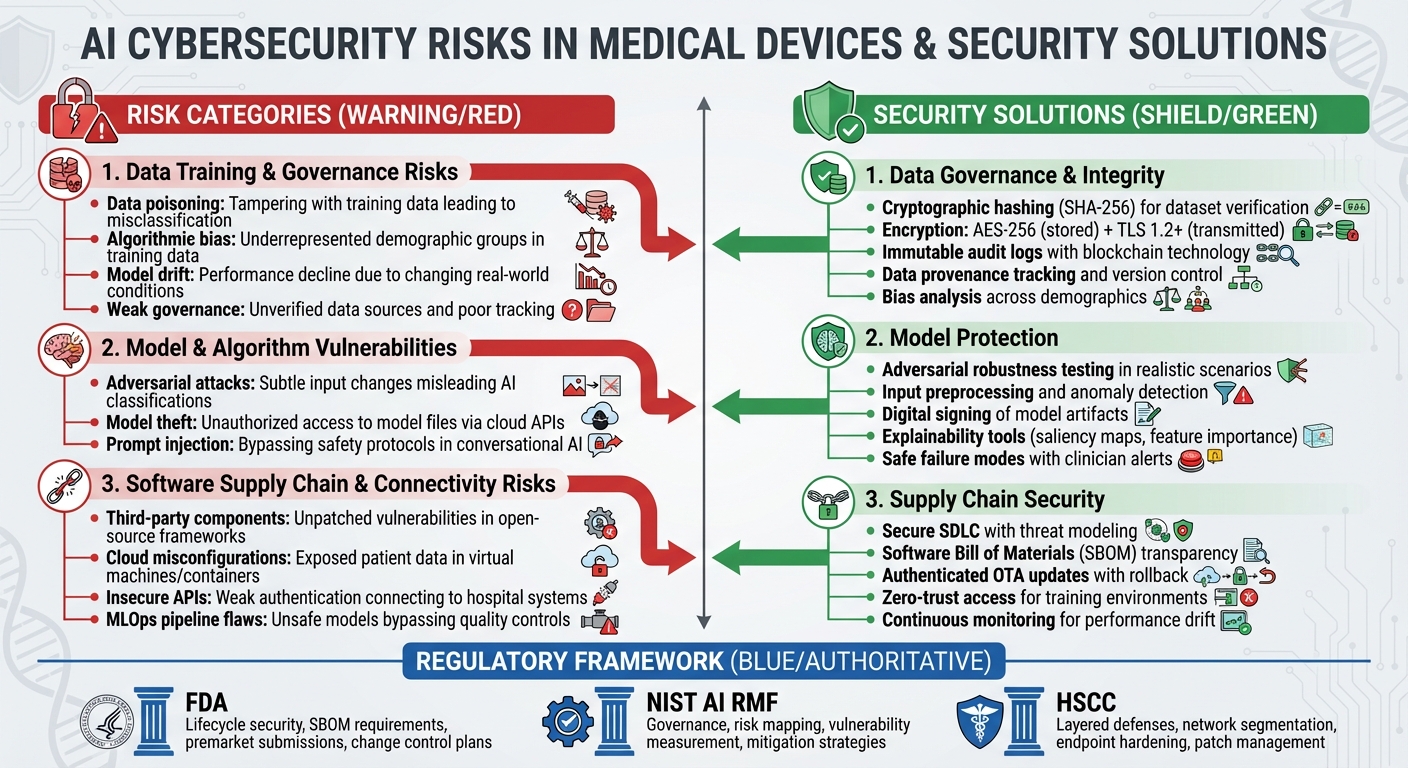

AI Medical Device Security: Risk Categories and Protection Solutions Framework

AI Cybersecurity Risks in Medical Device Development

AI-powered medical devices face security challenges throughout their lifecycle - from data collection to deployment and updates. These challenges fall into specific categories, each requiring tailored defenses. Recognizing where vulnerabilities arise is key for manufacturers and healthcare providers to strengthen their security measures. Let’s take a closer look at the risks tied to data and governance practices.

Data Training and Governance Risks

One major concern is data poisoning, where attackers tamper with training data. This could involve altering imaging labels, inserting misleading samples, or corrupting datasets. The result? AI systems may misclassify data, leading to missed diagnoses or incorrect therapy recommendations that could directly harm patients.[5]

Another issue is algorithmic bias, which occurs when training data doesn’t adequately represent certain demographic or clinical groups. Research has shown that some AI tools fail to identify care needs for specific racial groups, raising both fairness and safety concerns.[9][3] For instance, if a device is trained predominantly on data from one population, it may not perform reliably for others, potentially causing misdiagnoses or delays in treatment.

Model drift is yet another challenge. Over time, real-world conditions - like changes in imaging protocols, evolving disease trends, or shifts in patient demographics - can cause AI performance to decline. Without regular retraining or recalibration, the device's accuracy may falter.[3][4] Weak data governance adds to the problem. Unverified data sources, poor tracking of data origins, and insufficient documentation make it difficult to reproduce results, ensure safety for regulators, or investigate adverse events.[9][6] These factors highlight the importance of continuous monitoring and robust AI-specific safeguards.

Model and Algorithm Vulnerabilities

AI models are also vulnerable to adversarial attacks. These attacks involve subtle, often invisible changes to inputs - like medical images or physiological signals - that can mislead the AI into making incorrect classifications. For example, a diagnostic AI might fail to detect a tumor or misinterpret a heart rhythm due to such manipulations.[9][6] Research has shown that even minor noise can drastically alter AI outputs, raising alarms about potential tampering by malicious insiders or compromised devices in clinical settings.[9]

Another threat is model theft, where attackers access model files or query interfaces exposed via cloud APIs. This allows them to replicate the model, leading to intellectual property theft, the creation of unregulated knockoff devices, or even crafting more effective adversarial attacks.[5] For AI systems with generative or conversational capabilities, prompt injection attacks can be particularly dangerous. Such attacks might cause models to bypass safety protocols, leak sensitive data, or produce unsafe clinical recommendations.[7]

These vulnerabilities not only compromise device reliability but also pose risks to patient safety. The reliance on third-party components further increases these challenges.

Software Supply Chain and Connectivity Risks

AI medical devices often incorporate third-party software components, such as open-source machine learning frameworks, pre-trained models, and commercial libraries. If these components contain unpatched vulnerabilities or malicious code, they can directly compromise clinical systems.[5]

Cloud-based workflows and insecure APIs add another layer of risk. Cloud-based training or inference workflows rely on virtual machines, containers, and storage systems. If misconfigured, these can expose sensitive patient data or model assets to external threats.[5] Similarly, APIs that connect devices to hospital systems - like electronic health records, imaging archives, or remote monitoring platforms - can become attack points if they lack secure authentication, encryption, or proper permissions.[9][5]

In automated MLOps pipelines, improper validation during model updates or data refreshes can introduce unsafe or untested models into production, bypassing critical quality controls. These supply chain and connectivity risks underscore the need for thorough vendor assessments and secure development practices to safeguard AI-enabled medical devices.
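The missing quality control described above can be pictured as a promotion gate that a candidate model must pass before release. The following is a minimal sketch, not a prescribed process: the `Candidate` fields, the 0.95 accuracy threshold, and the approver roles are all hypothetical placeholders that a real quality system would define.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical summary of a model awaiting promotion to production."""
    version: str
    validation_accuracy: float
    dataset_hash_verified: bool
    approved_by: tuple[str, ...]


def promotion_blockers(candidate, min_accuracy=0.95,
                       required_approvers=("clinical", "security")):
    """Return the reasons a candidate must not be promoted (empty list = pass)."""
    blockers = []
    if not candidate.dataset_hash_verified:
        blockers.append("training data integrity not verified")
    if candidate.validation_accuracy < min_accuracy:
        blockers.append("validation accuracy below threshold")
    missing = [r for r in required_approvers if r not in candidate.approved_by]
    if missing:
        blockers.append("missing approvals: " + ", ".join(missing))
    return blockers
```

A pipeline would refuse to deploy whenever the returned list is non-empty and would record the reasons for later audit.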

Security Solutions for AI-Enabled Medical Devices

Tackling the risks associated with AI-enabled medical devices calls for a multi-layered defense strategy. This approach should safeguard data, strengthen AI models, and secure the entire development and deployment process. Manufacturers must prioritize patient safety, data accuracy, system reliability, and compliance with regulations - aligning protective measures directly with identified threats. Below, we'll explore practical solutions that address vulnerabilities at every stage of the AI device lifecycle.

Data Governance and Integrity Solutions

To combat risks like data poisoning and weak governance, manufacturers need robust data integrity protocols, starting with dataset verification. A cryptographic hash function such as SHA-256 produces a digital fingerprint for each training and validation dataset, and rechecking those fingerprints regularly reveals tampering. Sensitive health data should be encrypted both at rest (using AES-256) and in transit (via TLS 1.2 or higher), with keys held in hardware-backed key management systems to limit unauthorized access. Immutable audit logs, potentially built on blockchain technology, maintain a transparent record of data access, transformations, and labeling activities, which helps meet the growing demands of regulatory authorities.
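As an illustration of the fingerprinting step, the sketch below builds a SHA-256 manifest for a dataset directory and compares the current files against a trusted copy. It uses only Python's standard library; the function names and the manifest layout are assumptions made for this example, and a real deployment would also need to protect the trusted manifest itself.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(dataset_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its SHA-256 fingerprint."""
    return {
        p.relative_to(dataset_dir).as_posix(): sha256_of_file(p)
        for p in sorted(dataset_dir.rglob("*"))
        if p.is_file()
    }


def find_tampering(dataset_dir: Path, trusted: dict[str, str]) -> dict[str, list[str]]:
    """Compare the dataset on disk against a previously trusted manifest."""
    current = build_manifest(dataset_dir)
    return {
        "modified": sorted(k for k in trusted if k in current and current[k] != trusted[k]),
        "missing": sorted(k for k in trusted if k not in current),
        "unexpected": sorted(k for k in current if k not in trusted),
    }
```

Any non-empty list in the report is a reason to halt training until the discrepancy is explained.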

On the governance front, formalized policies should outline data provenance, version control, lineage tracking, and retention guidelines that align with quality system regulations and Good Machine Learning Practice (GMLP). A comprehensive data catalog should include details like source systems, collection dates, demographics, and intended use - supporting both FDA submissions and post-market investigations. To address potential biases, manufacturers should analyze training data demographics such as race, age, and geography, comparing these to the intended-use population. Performance metrics should be reviewed across subgroups to identify any disparities. Corrective measures might include re-sampling underrepresented groups, applying fairness-aware algorithms, or calibrating models for specific populations. Oversight by a clinical or ethics board can help define acceptable performance thresholds.
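A subgroup review of the kind described above might look like the following sketch. It computes sensitivity (true positive rate) per subgroup and flags any group that trails the best-performing one by more than a chosen gap. The record format and the 0.05 gap are illustrative assumptions; acceptable thresholds would come from the clinical or ethics oversight process.

```python
from collections import defaultdict


def subgroup_sensitivity(records):
    """Compute sensitivity per subgroup.

    records: iterable of (subgroup, y_true, y_pred) with binary labels.
    Subgroups with no positive cases are omitted from the result.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def flag_disparities(per_group, max_gap=0.05):
    """Return subgroups whose metric trails the best subgroup by more than max_gap."""
    best = max(per_group.values())
    return sorted(g for g, value in per_group.items() if best - value > max_gap)
```

The same pattern applies to specificity, calibration error, or any other metric the review board chooses to track.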

Protecting AI Models from Adversarial Threats

AI models are vulnerable to adversarial attacks - subtle input changes that can disrupt diagnostic accuracy. To counter this, manufacturers should incorporate adversarial robustness testing into their verification and validation processes. Simulating attacks within medically realistic scenarios and documenting the outcomes as part of risk assessments is essential. Techniques like input preprocessing (e.g., denoising), anomaly detection for unusual patterns, and ensemble modeling can improve system resilience. For real-time or embedded devices, defining safe failure modes - such as alerting clinicians when inputs seem unreliable or reverting to traditional algorithms - provides an added layer of safety.
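To make the safe-failure idea concrete, here is a deliberately simple sketch for a single numeric input. It treats a reading as unreliable when it lies far from a reference distribution (a z-score check standing in for a real anomaly detector), then falls back to a traditional algorithm and raises an alert. All names and the limit of 4 standard deviations are assumptions for illustration.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Decision:
    source: str   # "model" or "fallback"
    value: float
    alert: bool   # True when a clinician should be notified


def make_guard(reference_inputs, z_limit=4.0):
    """Build a check that flags inputs far outside the reference distribution."""
    mu = mean(reference_inputs)
    sigma = pstdev(reference_inputs)

    def is_anomalous(x):
        if sigma == 0:
            return x != mu
        return abs(x - mu) / sigma > z_limit

    return is_anomalous


def guarded_predict(x, model, fallback, is_anomalous):
    """Use the AI model for plausible inputs; otherwise fall back and alert."""
    if is_anomalous(x):
        return Decision(source="fallback", value=fallback(x), alert=True)
    return Decision(source="model", value=model(x), alert=False)
```

Real devices work with images or multichannel signals, so the anomaly check would be far richer, but the control flow (detect, fall back, alert) stays the same.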

Every model artifact, including weights, configurations, and preprocessing code, should be securely versioned and digitally signed using trusted certificates or hardware-backed keys. Device firmware must verify these signatures before loading models to prevent unauthorized or altered versions from being used. Maintaining detailed audit logs that link deployed model versions to their source code, datasets, training runs, and validation results ensures traceability, aligning with FDA expectations. Tools that enhance explainability, like saliency maps or feature importance scores, can further support clinical trust by helping practitioners evaluate the reliability of AI outputs and reduce overreliance on automation.
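The verify-before-load rule can be sketched as follows. Note the substitution: to stay within Python's standard library, this example uses an HMAC-SHA256 tag with a shared key as a stand-in for the certificate-based digital signatures described above. A production device would typically verify an asymmetric signature with a public key anchored in hardware. The function names are hypothetical.

```python
import hashlib
import hmac


def sign_artifact(artifact: bytes, key: bytes) -> str:
    """Produce an integrity tag for a model artifact (HMAC stand-in for a signature)."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def load_model_if_trusted(artifact: bytes, tag: str, key: bytes) -> bytes:
    """Return the artifact only if its tag verifies; otherwise refuse to load."""
    expected = sign_artifact(artifact, key)
    # compare_digest avoids leaking information through comparison timing
    if not hmac.compare_digest(expected, tag):
        raise ValueError("model artifact failed integrity check; refusing to load")
    return artifact
```

The important design choice is that the check fails closed: an unverifiable model is never loaded, even if that means the device falls back to a previous version.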

Securing the AI Software Supply Chain

A secure software development lifecycle (SSDLC) is critical for addressing threats across the AI software supply chain. This includes threat modeling, secure coding practices, code reviews, and automated security testing within continuous integration/continuous delivery (CI/CD) pipelines. Given the unique risks of AI systems, the SSDLC should also address data pipeline security, such as input validation and anomaly detection, and include steps to verify fairness and robustness.

For training and inference environments, enforcing secure configurations - like hardened images, network segmentation, and zero-trust access - on U.S. cloud providers and container platforms is crucial. Any compromise in these environments could directly impact device behavior. Manufacturers should also provide Software Bills of Materials (SBOMs) that detail all software components, including AI libraries, frameworks, and third-party dependencies. This transparency allows for quick responses when vulnerabilities are discovered. Implementing authenticated over-the-air (OTA) updates with rollback options ensures timely patching while maintaining system stability.
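The value of an SBOM shows up when a new advisory lands. The sketch below assumes a CycloneDX-style JSON document with a top-level `components` array of `name` and `version` entries, and matches it against a hypothetical advisory table keyed by component name. The component names in the usage example are invented, and real matching would use package identifiers and version ranges rather than exact strings.

```python
import json


def vulnerable_components(sbom_json: str, advisories: dict[str, set[str]]) -> list[tuple[str, str]]:
    """List (name, version) pairs from the SBOM that appear in the advisory table."""
    sbom = json.loads(sbom_json)
    hits = []
    for component in sbom.get("components", []):
        name = component.get("name")
        version = component.get("version")
        if version in advisories.get(name, set()):
            hits.append((name, version))
    return sorted(hits)


# Usage with invented component names:
example_sbom = json.dumps({
    "bomFormat": "CycloneDX",
    "components": [
        {"name": "example-ml-runtime", "version": "1.2.0"},
        {"name": "example-dicom-parser", "version": "3.4.1"},
    ],
})
example_advisories = {"example-dicom-parser": {"3.4.0", "3.4.1"}}
affected = vulnerable_components(example_sbom, example_advisories)
```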

To secure MLOps pipelines, manufacturers must enforce strict access controls on training code, data, and hyperparameters. Segregated environments and authenticated access to model registries are necessary to prevent data poisoning or unauthorized modifications. Post-market surveillance should include continuous monitoring for performance shifts or data drift, with clear protocols for retraining or updating models when performance declines - especially among specific patient subgroups. These measures directly address the connectivity and third-party risks linked to AI-enabled medical devices.
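One common way to watch for data drift is the Population Stability Index (PSI), which compares the distribution of a feature in recent data against a baseline. The sketch below is an illustrative implementation with equal-width bins; PSI is offered here as one example technique, not something the guidance above prescribes, and the alert thresholds often quoted for it (around 0.1 and 0.25) are rules of thumb rather than regulatory limits.

```python
import math


def psi(baseline, recent, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant baselines

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0)
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))
```

Running the same check separately for each patient subgroup helps surface drift that an aggregate figure would hide.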

U.S. Regulatory and Industry Standards for AI Medical Devices

To ensure the safety and effectiveness of AI-enabled medical devices, manufacturers and healthcare organizations in the U.S. must comply with regulatory requirements and follow established industry standards. Key players like the FDA, NIST, and the Health Sector Coordinating Council (HSCC) provide frameworks that, when combined, help organizations achieve compliance and maintain a strong security posture for these devices. These frameworks build on technical safeguards to deliver a comprehensive approach to protection.

FDA Cybersecurity Requirements for AI Medical Devices

The FDA considers cybersecurity a critical element of device safety and functionality, especially given the growing threats targeting AI-enabled medical devices. In September 2023, the FDA issued finalized guidance titled "Cybersecurity in Medical Devices: Quality System Considerations and Content of Premarket Submissions." This document outlines the expectations for manufacturers seeking 510(k) clearance, De Novo authorization, or premarket approval (PMA) [11].

Manufacturers are required to demonstrate lifecycle security through a Secure Product Development Framework (SPDF), which includes practices like threat modeling, secure coding, and coordinated vulnerability disclosure [11]. For AI-enabled devices, premarket submissions must address AI-specific risks such as data poisoning, adversarial inputs, and model tampering. Submissions should also include a Software Bill of Materials (SBOM) to facilitate quick identification and resolution of vulnerabilities [11]. Additionally, manufacturers must provide detailed documentation covering model intent, datasets, validation methods, and subgroup performance to address potential biases. For post-deployment model updates, the FDA provides for a predetermined change control plan (PCCP), which specifies what changes are permissible, the data and methods used for updates, and how safety and cybersecurity risks will be managed [6][4].

Postmarket, the FDA underscores the importance of risk-based monitoring for cyber vulnerabilities. This includes timely patching, real-world performance tracking, and continuous monitoring for model drift, performance degradation, and new safety concerns [2][6][4]. Non-compliance with these requirements has, in some cases, delayed FDA approvals or even resulted in product recalls [10].

Applying NIST AI RMF and HSCC Guidance

While the FDA sets regulatory expectations, NIST and HSCC provide additional frameworks to manage risks effectively. The NIST AI Risk Management Framework (AI RMF 1.0) is organized around four core functions: Govern, Map, Measure, and Manage, which cover governance, risk identification, risk measurement, and mitigation [5][9][2]. Meanwhile, HSCC offers actionable guidance tailored for the healthcare sector.

The HSCC Cybersecurity Working Group has released resources like the "Medical Device and Health IT Joint Security Plan" and "Health Industry Cybersecurity – Managing Legacy Technology Security (HIC-MaLTS)" [5]. These resources emphasize layered defenses and coordinated governance across clinical engineering, IT, and security teams. For AI-enabled devices, HSCC-aligned practices include secure network segmentation, endpoint hardening, identity and access management, and robust patch management. Specific to AI, these practices extend to secure machine learning pipelines, data integrity checks, and controls to prevent tampering with models or parameters [2][5].

By combining the FDA's regulatory framework, NIST's risk management structure, and HSCC's operational best practices, healthcare organizations can design and implement AI-enabled devices that meet compliance requirements while maintaining operational security [2][5][8].

Managing Third-Party and Enterprise Risks

Managing third-party risks is just as critical as internal controls when addressing the security of AI-enabled medical devices. These devices often depend on external vendors for tasks like model development, data labeling, and cloud hosting. A robust third-party risk management program should categorize vendors by risk level, with high-risk vendors - such as those handling training data or cloud-based endpoints - receiving more thorough scrutiny [5][8].
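A tiering rule like the one described above can be written down explicitly so that it is applied consistently. In this sketch, the "high" tier follows the article's examples (vendors handling training data or cloud-based endpoints). The "medium" tier for vendors with access to protected health information, and all field names, are assumptions added for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vendor:
    """Hypothetical vendor profile used for risk tiering."""
    name: str
    handles_training_data: bool = False
    hosts_cloud_endpoint: bool = False
    has_phi_access: bool = False


def risk_tier(vendor: Vendor) -> str:
    """Assign a review tier; higher tiers receive more thorough scrutiny."""
    if vendor.handles_training_data or vendor.hosts_cloud_endpoint:
        return "high"
    if vendor.has_phi_access:
        return "medium"
    return "low"
```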

Security and privacy assessments for vendors should cover secure software development, HIPAA-compliant data protection, SBOM transparency, AI model governance, incident response capabilities, and business continuity planning. Contracts should include clear terms for vulnerability disclosure, patching timelines, data residency, and audit rights, along with assurances like SOC 2 or ISO 27001 certifications.

Ongoing oversight is essential. This includes periodic reassessments, continuous monitoring of the vendor's security posture, and keeping an eye on attack surfaces or breach notifications. Platforms like Censinet RiskOps™ can help centralize and automate these assessments, tracking AI-specific factors such as training data sources, model update mechanisms, and data-sharing relationships [5].

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare." - Matt Christensen, Sr. Director GRC, Intermountain Health [1]

For example, a hospital deploying an AI-enabled imaging device might require the manufacturer to provide details on training data diversity, robustness testing, and SBOM documentation. These details would then be integrated into the hospital's risk management process, aligning with FDA and NIST AI RMF guidelines [9][5][6]. Following HSCC guidance, the hospital could place the device on segmented VLANs, enable multi-factor authentication for admin access, and establish strict procedures for validating and applying security patches and model updates. Using a platform like Censinet, the hospital could also monitor the vendor's security posture, track vulnerabilities, and enforce contractual obligations for breach notifications and patching [5][8]. This multi-layered approach ensures both compliance and security for AI-enabled medical devices.

AI Risk Management with Censinet

When it comes to addressing the risks associated with AI in healthcare, having a centralized platform is no longer optional - it's a necessity. Managing these risks throughout the lifecycle of medical devices demands a system that can handle the complexities of modern healthcare. Enter Censinet RiskOps™, a platform designed to help healthcare organizations assess, monitor, and mitigate AI risks. From initial evaluation to post-market surveillance, RiskOps™ consolidates data from medical devices, clinical applications, cloud services, and supporting infrastructure into one unified system. This allows security, clinical engineering, and compliance teams to make informed decisions that align with FDA and NIST guidelines. This centralized approach sets the stage for the detailed risk assessments discussed below.

Centralized Risk Visibility and Assessment

One of the biggest challenges in healthcare is managing fragmented risk data. Censinet RiskOps™ tackles this issue by bringing together inventories of AI-enabled medical devices, Software as a Medical Device (SaMD), third-party AI tools, networked infrastructure, and cloud-hosted models or training data. For each asset, it consolidates key information such as security controls, vulnerability findings, regulatory attestations, incident histories, and remediation statuses. This comprehensive view is crucial because AI-related risks - like algorithmic bias, model degradation, or unpatched software - can originate from multiple layers of the technology stack and often go unnoticed in traditional device management systems.

The platform's interactive dashboards make it easy for clinical, security, and supply chain teams to filter devices by risk level, clinical application, or department. These dashboards also provide actionable recommendations, such as adjusting configurations, implementing compensating controls, or revising contractual terms. Leadership teams can drill down by service areas like radiology or cardiology to prioritize investments and mitigation efforts. This evidence-based approach offers healthcare leaders a clear picture of residual risks compared to the clinical benefits of AI tools, paving the way for more informed decisions around vendor and supply chain risks.

Vendor and AI Supply Chain Risk Assessments

Censinet also simplifies the process of assessing vendors and managing AI supply chain risks. Pre-built healthcare workflows streamline vendor evaluations, offering detailed scoring and risk ratings. These assessments cover critical areas such as data governance, model training and validation, access controls, secure development practices, vulnerability management, and business continuity planning.

By correlating vendor responses with external threat intelligence and known vulnerabilities, RiskOps™ identifies weak links in cybersecurity or AI governance. For example, it flags vendors or sub-vendors with poor practices, helping organizations spot systemic risks - like multiple devices relying on the same external AI inference API. This insight enables practical decisions: approving, conditionally approving with remediation, or rejecting AI solutions based on a transparent and repeatable evaluation process.

AI Governance and Oversight

Governance is at the heart of effective AI risk management, and Censinet AI™ takes it to the next level. Shared dashboards provide risk, compliance, and clinical leaders with a centralized view of AI-enabled devices, associated risks, remediation tasks, and policy compliance across the organization. The platform ensures that human oversight remains a priority, requiring approvals from security, clinical, privacy, and ethics teams before deployment. Organizations can set clear policies for AI use, such as validating models with local patient data, limiting autonomous functions, or requiring human review for specific outputs. These policies are directly linked to risk assessments and procurement decisions, ensuring consistency and accountability.

Collaboration tools within the platform enhance communication between GRC, IT, clinical, and supply chain teams. Tasks are assigned, tracked, and documented, creating a transparent and auditable process as AI models and use cases evolve. Acting like "air traffic control" for AI risk management, the platform routes critical findings and tasks to the right stakeholders, ensuring timely reviews and approvals. With real-time data displayed in an intuitive dashboard, Censinet RiskOps™ becomes the central hub for all AI-related risks, policies, and actions. This unified approach ensures that the right teams address the right issues at the right time, creating a seamless framework for managing AI risks in healthcare.

Conclusion: Secure AI Medical Device Development

Creating secure AI medical devices demands a proactive approach to identifying and addressing risks. Here’s how organizations can ensure patient safety and regulatory compliance while advancing innovation.

Strengthen AI-Specific Security Protocols

AI-powered medical devices face unique challenges like data poisoning, adversarial attacks, and supply chain vulnerabilities. To tackle these threats, organizations should adopt measures such as data provenance tracking, strict access controls for training datasets, and anomaly detection systems to block compromised inputs. Techniques like adversarial training, input sanitization, and runtime monitoring can help harden models against manipulation. For supply chain risks, maintaining Software Bills of Materials (SBOMs), secure code signing, ongoing third-party monitoring, and thorough vendor assessments are vital. Addressing these risks throughout the device lifecycle ensures better protection for patients and clinical outcomes.

Align with U.S. AI Security Standards

The FDA expects cybersecurity to be embedded into medical devices at every stage, from design to maintenance [11]. Following FDA guidelines on authentication, patching, and secure updates is critical. Additionally, the NIST AI Risk Management Framework (AI RMF) provides a roadmap for addressing AI-specific risks like bias, robustness, and explainability. Complementary guidance from the Health Sector Coordinating Council (HSCC) offers best practices for threat modeling and incident response. Incorporating these standards into development processes supports safety, can smooth the path to FDA clearance, and builds trust with healthcare providers and insurers. By integrating these frameworks early, organizations can minimize delays and reduce unexpected challenges after market release.

Centralize Risk Management with Censinet

As discussed earlier, managing AI risks effectively requires a unified system. Censinet RiskOps™ offers a centralized platform to handle the complexities of AI risk management. It integrates risk data from devices, vendors, and clinical applications into one system, enabling teams to make informed decisions that align with FDA and NIST guidelines. The platform simplifies vendor risk assessments by focusing on critical areas like data governance, model validation, access controls, and continuity planning. By maintaining an auditable record of policies, risks, and mitigation actions, Censinet RiskOps™ ensures continuous AI oversight. For healthcare organizations and medtech manufacturers, this approach transforms fragmented risk management into a cohesive program that supports regulatory compliance while prioritizing patient safety and clinical effectiveness.

FAQs

What are the main cybersecurity risks of using AI in medical devices?

AI-powered medical devices bring with them a host of cybersecurity risks that could jeopardize both patient safety and the functionality of the devices themselves. Here are some key concerns:

- Data breaches: Sensitive patient data, including protected health information (PHI), can be exposed, potentially leading to privacy violations.

- Algorithm manipulation: If attackers tamper with AI algorithms, it could result in inaccurate diagnoses or unsafe device behavior.

- Unauthorized access: Hackers could gain control of devices, disrupting their operation or even endangering patients.

- Software vulnerabilities: Weaknesses in the software could be exploited, undermining the system's integrity.

To combat these threats, healthcare providers and manufacturers must implement strong security protocols. This includes regular risk assessments, timely software updates, and constant system monitoring to ensure the safety of both patients and healthcare infrastructure.

How can medical device manufacturers protect AI systems from data poisoning and ensure data accuracy?

To keep AI systems safe from data poisoning and ensure the accuracy of their data, manufacturers should rely on a multi-layered defense strategy. This means sourcing data from reliable and secure platforms, applying thorough validation and verification methods, and maintaining constant vigilance for any unusual patterns or anomalies in the data. Additionally, taking a proactive stance by utilizing advanced risk management tools - especially those tailored for industries like healthcare - can play a crucial role in protecting patient safety and preserving the integrity of these systems.

What regulations ensure the security of AI-powered medical devices?

In the United States, several regulations and guidance documents address the security of AI-powered medical devices. These include the FDA's guidance on AI and machine learning in medical devices, the FDA's cybersecurity guidance for medical devices, and HIPAA regulations aimed at protecting patient data. Together, these frameworks are crafted to ensure the safety of medical devices, safeguard sensitive health information, and reduce cybersecurity risks.

By following these regulations, healthcare organizations and developers can stay compliant while focusing on protecting patient safety and maintaining data privacy.