The AI Safety Imperative: Why Getting It Right Matters More Than Getting There First

Post Summary

Artificial intelligence (AI) is transforming healthcare but comes with serious risks. Rushing AI adoption without strong safeguards can jeopardize patient safety and data security. Cyberattacks like model poisoning and adversarial manipulation threaten diagnostic accuracy, medical devices, and patient trust. Despite these dangers, many healthcare organizations lack proper AI governance frameworks, leaving critical vulnerabilities exposed.

Key takeaways from this article:

- AI Risks in Healthcare: Model poisoning, adversarial attacks, and third-party vulnerabilities can harm patients and compromise systems.

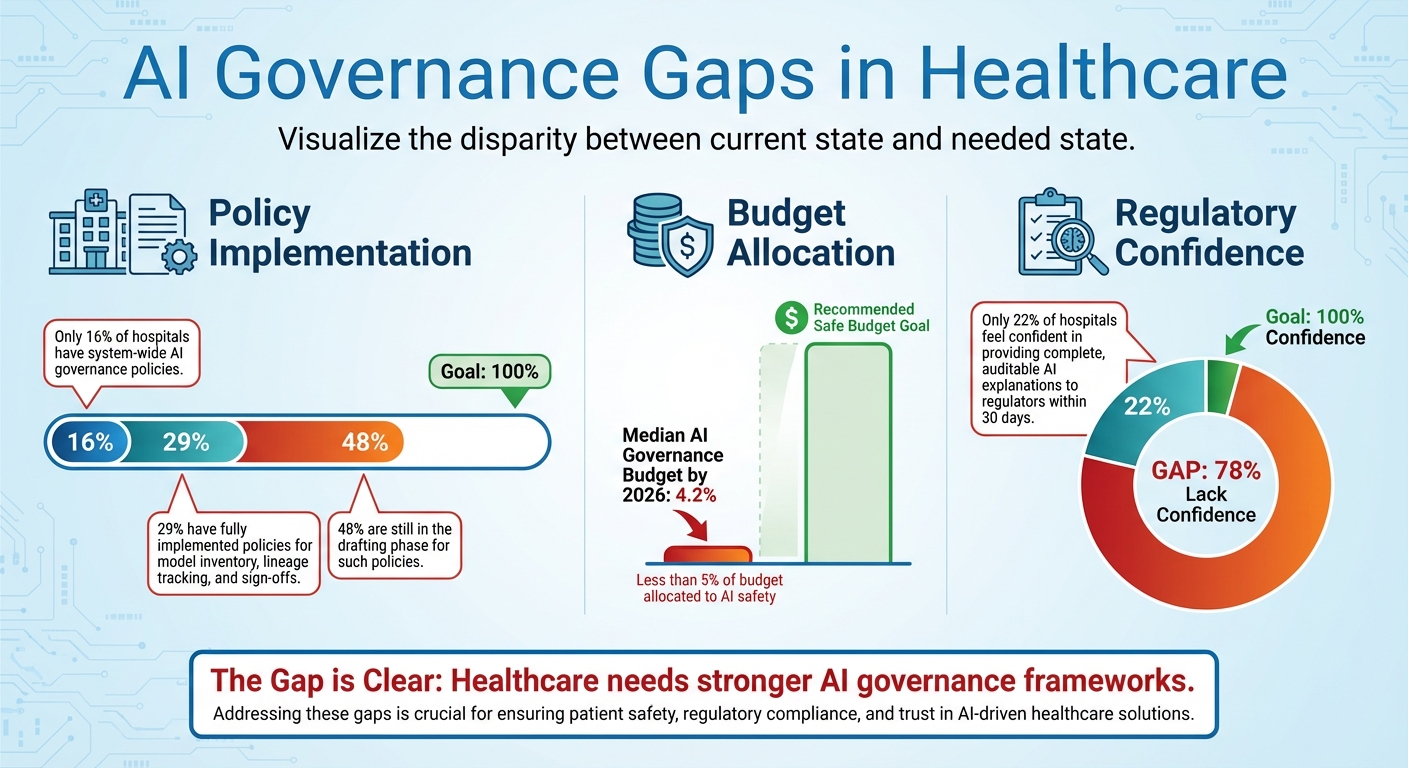

- Governance Gaps: Only 16% of hospitals have AI governance policies, and most allocate less than 5% of their budget to AI safety.

- Proven Frameworks: Tools like the NIST AI Risk Management Framework and healthcare-specific models offer strategies for managing risks.

- Actionable Steps: Maintain detailed AI inventories, embed security during development, and create AI-specific incident response plans.

- Tools for Risk Management: Platforms like Censinet RiskOps™ help streamline AI governance while ensuring human oversight.

The message is clear: prioritizing AI safety over speed is critical. By implementing structured governance and proactive strategies, healthcare organizations can harness AI securely while protecting patients and data.

Key Risks of AI in Healthcare Cybersecurity

Model Poisoning and Data Integrity Threats

Model poisoning is a serious danger for AI systems in healthcare. In this type of attack, hackers tamper with training data or directly compromise AI models, leading to flawed decisions that can negatively impact patient care. For instance, an altered system might misread imaging scans, recommend inappropriate treatments, or fail to detect critical changes in a patient’s condition. What makes this threat particularly alarming is how easily these manipulations can go unnoticed until they cause significant harm.

Adversarial Attacks on AI Models

Adversarial attacks are another growing concern. These attacks involve feeding manipulated inputs into AI systems to trick them into making critical errors. By exploiting the way AI models interpret data, attackers can introduce subtle, almost undetectable changes that lead to incorrect conclusions. In a healthcare setting, this could mean altering patient records, lab results, or imaging data - potentially leading to false diagnoses or unsuitable treatment plans. Even more troubling, vulnerabilities in external partners’ systems can open additional pathways for such attacks, making the problem even harder to contain.
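To make the idea of "subtle, almost undetectable changes" concrete, here is a minimal sketch using a toy linear classifier. The weights, input values, and the `epsilon` budget are all made up for illustration and do not come from any clinical model. For a linear model, nudging each feature against the sign of its weight is the same step used by the fast gradient sign method.

```python
# Toy illustration only: a hand-written linear classifier, not a clinical model.
# Weights, inputs, and the epsilon budget are made-up values for demonstration.

def score(weights, bias, features):
    """Linear decision score: a positive value means 'flag as abnormal'."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def sign(value):
    """Return 1, 0, or -1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights, features, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is the weight vector, so stepping against sign(w) is a sign-of-gradient step.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


weights = [0.8, -0.5, 0.3]
bias = -0.2
original = [0.5, 0.4, 0.3]

adversarial = perturb(weights, original, epsilon=0.1)

original_score = score(weights, bias, original)        # slightly above zero
adversarial_score = score(weights, bias, adversarial)  # pushed below zero

print("original flagged:   ", original_score > 0)
print("adversarial flagged:", adversarial_score > 0)
```

No feature moves by more than 0.1, yet the decision flips. Real attacks on imaging or lab data are far more involved, but the mechanism is the same: small input changes chosen to exploit how the model weighs its inputs.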

Third-Party AI Supply Chain Vulnerabilities

The interconnected nature of healthcare technology presents significant risks through third-party vendors. Alarmingly, 80% of stolen patient records now originate from third-party vendors[2]. Modern healthcare systems rely on thousands of external services, such as electronic health record (EHR) providers and medical device manufacturers, each of which could serve as an entry point for attackers. Business associates, who often have extensive access to patient data but weaker security measures compared to hospitals, are particularly attractive to cybercriminals. The risk doesn’t stop there - fourth-party connections, including subcontractors and their partners, add another layer of vulnerability that healthcare organizations might not even realize exists.

Frameworks for Governing AI Safety

AI Governance Gaps in Healthcare: Key Statistics on Policy Implementation and Budget Allocation

With vulnerabilities like model poisoning and adversarial attacks posing serious risks, establishing structured governance in healthcare is no longer optional - it's essential. Yet oversight remains limited. A 2023 survey found that only 16% of hospitals have system-wide AI governance policies in place[4]. On top of that, the median share of budget allocated to AI governance and safety is projected to be just 4.2% by 2026[5]. These numbers highlight the pressing need for effective governance frameworks in this critical sector.

NIST AI Risk Management Framework

The NIST AI Risk Management Framework offers a practical foundation for tackling AI-specific security challenges in healthcare. A key element of this framework is maintaining a comprehensive inventory of all AI systems - detailing their functions, data dependencies, and potential security risks[3]. This approach helps identify and address threats like data poisoning and model manipulation that could compromise patient care. By adopting the NIST framework, healthcare organizations can establish controls to mitigate these risks effectively.

Healthcare-Specific AI Governance Models

In addition to general frameworks, healthcare-specific models provide tailored solutions. For example, in November 2025, the Health Sector Coordinating Council (HSCC) Cybersecurity Working Group released a preview of its 2026 guidance on managing AI cybersecurity risks. One of the key components is the AI Governance Maturity Model, designed specifically for healthcare settings[3]. This model spans governance processes across the AI lifecycle, aligns with regulations like HIPAA and FDA requirements, and incorporates security practices suited to healthcare's unique needs. It also draws from established standards like the NIST framework.

Despite these advancements, implementation remains uneven. Currently, only 29% of hospitals have fully implemented policies addressing critical areas like model inventory, lineage tracking, and sign-offs. Meanwhile, 48% are still in the drafting phase for such policies[5]. This inconsistency underscores the need for a more unified approach.

Balancing Automation and Oversight with the 5-Level AI Autonomy Scale

To navigate the balance between automation and human oversight, the HSCC introduced a five-level autonomy scale. This scale categorizes AI risks and clarifies when human intervention is necessary[3]. For example, low-risk tools like appointment scheduling chatbots require minimal oversight, while high-stakes systems managing cancer treatment protocols demand rigorous human involvement.

The importance of this scale becomes even clearer when considering that only 22% of hospitals feel confident in their ability to provide complete, auditable AI explanations to regulators within 30 days[5]. By following this framework, healthcare organizations can enhance compliance and safeguard patient outcomes more effectively.
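One way to picture how a five-level scale can drive oversight decisions is a simple lookup from level to required review step. The sketch below is an assumption-heavy illustration: the level names, the oversight labels, and the `requires_human_approval` helper are hypothetical placeholders, not the definitions used in the HSCC guidance.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Placeholder labels; the HSCC guidance defines the actual five levels."""
    LEVEL_1 = 1  # lowest risk in this sketch, e.g. an appointment scheduling chatbot
    LEVEL_2 = 2
    LEVEL_3 = 3
    LEVEL_4 = 4
    LEVEL_5 = 5  # highest risk in this sketch, e.g. cancer treatment protocols


# Hypothetical policy table: which oversight step each level triggers.
OVERSIGHT_POLICY = {
    AutonomyLevel.LEVEL_1: "periodic audit",
    AutonomyLevel.LEVEL_2: "periodic audit",
    AutonomyLevel.LEVEL_3: "human review of flagged outputs",
    AutonomyLevel.LEVEL_4: "human approval before action",
    AutonomyLevel.LEVEL_5: "human approval before action",
}


def requires_human_approval(level: AutonomyLevel) -> bool:
    """True when the policy table demands sign-off before the system acts."""
    return OVERSIGHT_POLICY[level] == "human approval before action"


for level in AutonomyLevel:
    print(level.name, "->", OVERSIGHT_POLICY[level])
```

Keeping the policy in a single table makes it easier to show an auditor exactly which oversight rule applied to a given system at a given time.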

Strategies for Reducing AI Risks in Healthcare IT

Effectively addressing AI vulnerabilities in healthcare requires actionable strategies that integrate seamlessly into current workflows. These approaches are essential for closing the gap between theoretical frameworks and the practical safeguards needed in today’s healthcare IT landscape.

Complete AI Inventory and Monitoring

Keeping a detailed inventory of AI systems is a critical first step. This inventory should outline each system’s role, data dependencies, and potential security risks. Monitoring these systems for anomalies - like unexpected outputs, reduced accuracy, or unusual API activity - can help detect early signs of compromise[3][7]. Without a clear understanding of the AI tools in use, protecting them - and the sensitive patient data they handle - becomes nearly impossible.

Such anomalies may indicate threats like data breaches or adversarial interference before they escalate into full-scale attacks. To stay ahead of these risks, healthcare organizations are leveraging AI-driven analytics to shift from a reactive to a proactive approach. This allows for real-time threat detection across their IT infrastructure, setting the stage for more secure AI operations[6].
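As a rough sketch of what an inventory entry and a basic anomaly check might look like, the example below records each system's role, data dependencies, and known risks, then flags systems whose recent accuracy has dropped noticeably below baseline. The field names, system names, and the 0.05 threshold are assumptions chosen for illustration, not a standard schema or a recommended tolerance.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class AISystemRecord:
    """One inventory entry; field names are illustrative, not a standard schema."""
    name: str
    role: str
    data_dependencies: list[str]
    security_risks: list[str]
    baseline_accuracy: float
    recent_accuracy: list[float] = field(default_factory=list)


def accuracy_alert(record: AISystemRecord, max_drop: float = 0.05) -> bool:
    """Return True when mean recent accuracy is more than max_drop below baseline."""
    if not record.recent_accuracy:
        return False  # nothing measured yet, so there is nothing to compare
    return record.baseline_accuracy - mean(record.recent_accuracy) > max_drop


inventory = [
    AISystemRecord(
        name="imaging-triage",
        role="prioritizes imaging studies for radiologist review",
        data_dependencies=["PACS images", "EHR orders"],
        security_risks=["model poisoning", "adversarial inputs"],
        baseline_accuracy=0.94,
        recent_accuracy=[0.93, 0.86, 0.84],
    ),
    AISystemRecord(
        name="scheduling-chatbot",
        role="books and reschedules appointments",
        data_dependencies=["scheduling system"],
        security_risks=["unusual API activity"],
        baseline_accuracy=0.90,
        recent_accuracy=[0.91, 0.89, 0.90],
    ),
]

flagged = [record.name for record in inventory if accuracy_alert(record)]
print(flagged)
```

A drop in accuracy does not prove an attack, since data drift or upstream changes can cause it too, but it is a cheap signal that a system deserves a closer look.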

Secure-by-Design Principles

Building security into AI systems from the ground up is another vital strategy. For example, in October 2024, the Health Sector Coordinating Council (HSCC) Cybersecurity Working Group launched an AI Cybersecurity Task Group with representatives from 115 healthcare organizations. This group is developing guidance for 2026, including "Secure by Design Medical" principles, which aim to embed cybersecurity into AI-enabled medical devices. Their efforts include creating an AI Security Risk Taxonomy and Secure by Design frameworks to mitigate vulnerabilities in healthcare AI tools[3].

Incorporating tools like the AI Bill of Materials (AIBOM) and Trusted AI BOM (TAIBOM) can further enhance system transparency. These tools provide detailed insights into the components of AI systems, including third-party libraries and data sources. With this level of visibility, organizations can identify and address weaknesses before they are exploited[3].
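To illustrate the kind of visibility a bill of materials provides, the sketch below models a simplified component list and flags entries that cannot be verified. It is not the AIBOM or TAIBOM schema: the `Component` fields, the component names, and the all-zero placeholder digests are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

PLACEHOLDER_DIGEST = "0" * 64  # stands in for a real SHA-256 hex digest


@dataclass(frozen=True)
class Component:
    """One entry in a simplified bill of materials (hypothetical fields)."""
    name: str
    kind: str                # e.g. "library", "dataset", "pretrained-model"
    supplier: str
    version: Optional[str]   # None means no pinned version was recorded
    sha256: Optional[str]    # None means no integrity hash was recorded


def unverifiable(components: list[Component]) -> list[str]:
    """Names of components missing a pinned version or an integrity hash."""
    return [c.name for c in components if c.version is None or c.sha256 is None]


bill_of_materials = [
    Component("inference-runtime", "library", "example-vendor", "2.1.0", PLACEHOLDER_DIGEST),
    Component("chest-xray-dataset", "dataset", "example-partner", None, None),
    Component("base-vision-model", "pretrained-model", "example-lab", "1.4", PLACEHOLDER_DIGEST),
]

print(unverifiable(bill_of_materials))
```

Entries without a pinned version or hash are the ones a security team cannot later prove were unchanged, which makes them natural starting points for review.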

Incident Response Plans for AI Compromises

Traditional incident response plans need to evolve to address the unique threats posed by AI, such as model poisoning, data corruption, and adversarial attacks. The HSCC is currently creating practical playbooks to help healthcare organizations prepare for, detect, respond to, and recover from AI-specific cyber incidents. These playbooks are designed to tackle challenges that standard cybersecurity protocols often overlook[3].

To ensure a coordinated response, cybersecurity and data science teams must work together when AI systems show signs of compromise. This involves assessing the damage, containing the threat, and restoring operations. Key measures include maintaining secure, verifiable backups of AI models, conducting resilience testing, and ensuring quick recovery of compromised systems. Organizations should also focus on learning from incidents to improve future preparedness. By integrating best practices for business continuity and regulatory compliance, healthcare providers can strengthen their overall resilience to AI-related threats[3].
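One simple way to make a model backup verifiable is to record a cryptographic digest when the backup is taken and recompute it before restoring. The sketch below uses SHA-256 from Python's standard library. The file name and contents are placeholders, and a real deployment would store the recorded digest separately from the backup itself, which this sketch does not do.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_is_intact(path: Path, expected_digest: str) -> bool:
    """Compare the current digest with the one recorded at backup time."""
    return hmac.compare_digest(sha256_of_file(path), expected_digest)


# Demonstration with a throwaway file standing in for a model artifact.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"
    model_path.write_bytes(b"placeholder model weights")
    recorded = sha256_of_file(model_path)

    intact_before = backup_is_intact(model_path, recorded)

    # Simulate tampering with the stored artifact.
    model_path.write_bytes(b"placeholder model weights, tampered")
    intact_after = backup_is_intact(model_path, recorded)

print("intact before tampering:", intact_before)
print("intact after tampering: ", intact_after)
```

A digest check only detects changes made after the digest was recorded. It cannot tell whether the model was already poisoned at backup time, which is why resilience testing remains a separate step.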


Censinet RiskOps™: A Scalable Solution for AI Risk Management

Navigating the complexities of AI risks in healthcare IT requires a centralized system to handle third-party assessments, enterprise risks, and AI vulnerabilities. Censinet RiskOps™ rises to this challenge by offering healthcare organizations a robust platform tailored to manage cyber risks on a large scale. Now enhanced with AI-specific features, the platform is designed to meet the unique demands of healthcare cybersecurity. Its tools streamline assessments, promote collaboration, and ensure human expertise remains integral at every step.

Censinet AI™ for Accelerated Risk Assessments

Censinet AI™ speeds up third-party risk assessments by enabling vendors to complete security questionnaires in seconds, a task that once took weeks. The platform consolidates vendor evidence, integration details, and even fourth-party risks into concise risk reports. This level of automation lets healthcare organizations address risks much faster, a critical advantage given the rapid influx of AI-powered tools in healthcare IT. By cutting assessment work that used to take days or weeks down to minutes, Censinet AI™ helps risk teams keep up with the adoption of new AI technologies without compromising on thoroughness.

Collaborative Governance and AI Risk Dashboards

Rapid assessments are just the beginning. Censinet integrates collaborative oversight into its platform, acting as a central hub for AI governance. Think of it as an "air traffic control" system for managing AI risks - it routes key findings and critical tasks to the appropriate stakeholders for review and approval. AI governance committees, in particular, benefit from real-time visibility into risks across the organization. The AI risk dashboard serves as a centralized space for tracking policies, risks, and tasks, ensuring that the right teams are addressing the right issues at the right time. This unified approach fosters accountability, continuous oversight, and smooth collaboration across Governance, Risk, and Compliance (GRC) teams.

Human-in-the-Loop for AI Safety and Efficiency

Censinet AI combines automation with human expertise to validate evidence, draft policies, and mitigate risks effectively. With configurable rules and review processes, the platform ensures that automation enhances, rather than replaces, critical decision-making. This "human-in-the-loop" strategy allows healthcare organizations to scale their efforts in managing cyber risks while maintaining high safety standards. By blending automation with human oversight, healthcare leaders can tackle complex risks with greater speed and accuracy, aligning with industry best practices while safeguarding patient care. Censinet RiskOps™ strikes a crucial balance between innovation and safety, ensuring that AI advancements in healthcare remain secure and reliable.

Best Practices for Long-Term AI Safety and Resilience

Creating a strong foundation for AI safety in healthcare requires sustained effort and intentional strategies. Leading organizations treat AI governance as a continuous process, not a one-time task. This mindset ensures that safety protocols evolve alongside advances in AI technology, align with industry standards, and support sustainable governance practices.

Cross-Functional Collaboration for AI Governance

Successful AI governance thrives on teamwork across multiple disciplines. IT teams manage infrastructure vulnerabilities, security experts identify potential threats, compliance officers navigate complex regulations, and clinical staff focus on patient safety concerns. Each group plays a vital role in addressing the unique challenges posed by AI systems.

For example, the HSCC's AI Cybersecurity Task Group, established in October 2024, brings together 115 healthcare organizations to incorporate AI-specific risks into medical device development and foster cross-functional governance [3].

Collaboration doesn’t stop at internal teams. Partnering with AI vendors and technology providers can bolster supply chain security and ensure your organization stays informed about emerging threats. Coordinated efforts between security and data teams are essential for robust risk assessments [12][1]. Clear roles, responsibilities, and clinical oversight throughout the AI lifecycle not only enhance accountability but also encourage a system-wide approach to risk management [1].

In addition to teamwork, staying aligned with changing regulations is vital for long-term safety and compliance.

Alignment with Regulatory Standards

As regulations evolve, healthcare organizations must embed AI governance into the design and operation of their systems. The EU AI Act, which entered into force in August 2024 and phases in its obligations from 2025 onward, introduces mandatory requirements for high-risk AI systems, such as medical devices. Since February 2025, the Act has required organizations to ensure a sufficient level of AI literacy among personnel who work with AI systems, making education a non-negotiable part of compliance [10].

Organizations must also apply existing federal laws to AI data, ensuring proper handling of medical information. However, gaps remain - such as HIPAA’s limited scope regarding genetic testing data [9]. This highlights the need for continuous monitoring of AI tools in clinical environments, with thorough evaluations of their impact on quality, safety, reliability, and equity before implementation [8]. AI governance is no longer optional; it is a core component of enterprise compliance programs, demanding the same level of attention as cybersecurity and privacy efforts [10][11].

Conclusion: Building a Safer AI-Driven Future in Healthcare

The rapid integration of AI into healthcare brings undeniable benefits, but it also opens the door to significant risks. From data breaches to potential vulnerabilities in medical devices, these risks directly impact patient safety and trust [1]. Healthcare organizations now face a pivotal decision: adopt AI hastily and risk preventable harm, or take the time to implement strong governance frameworks that balance innovation with safety. The stakes are high, and cutting corners in the name of speed is a gamble no one can afford.

Regulatory bodies emphasize the importance of responsible AI use. As highlighted earlier, clear and robust frameworks are critical for managing AI-related risks effectively. Key organizations are actively shaping these guidelines to ensure AI can be integrated securely without compromising trust or safety [3]. These frameworks act as guardrails, allowing innovation to thrive while minimizing potential harm.

Traditional security measures are no longer enough to address the complexity of AI systems [1]. To stay ahead, healthcare organizations must adopt comprehensive strategies, including maintaining detailed AI inventories, embedding secure-by-design principles, and developing incident response plans tailored to AI-specific challenges. Aligning these measures with federal frameworks ensures a more resilient approach to AI risk management [3].

Sustaining AI governance requires an ongoing commitment. Successful risk management depends on collaboration across IT, security, compliance, and clinical teams. By integrating safety protocols into daily operations and leveraging tools like Censinet RiskOps™, which combines automation with expert oversight, healthcare organizations can scale their efforts to protect patient care and data integrity.

The healthcare industry is at a turning point. Organizations that prioritize AI safety today will not only build systems that patients can trust but also strengthen their overall cybersecurity posture for the long run. Ignoring these safeguards risks exposing vulnerabilities, incurring penalties, and losing the trust that is so vital in healthcare.

FAQs

What are the biggest risks of using AI in healthcare?

AI's role in healthcare brings a host of risks that need careful consideration. One major concern is bias in data or algorithms, which can lead to unfair or uneven outcomes for patients. Another pressing issue is privacy and security, as sensitive patient information could be exposed to breaches or unauthorized access. Then there’s the risk of model inaccuracies, which might result in misdiagnoses or incorrect treatments. Adding to this complexity, some AI systems operate as "black box" models, making their decision-making processes difficult to interpret or trust.

Healthcare professionals also face alert fatigue - a state of being overwhelmed by excessive false positives, which can hinder their ability to respond effectively. There's also the danger of overreliance on AI, which can erode essential human judgment in critical situations. On top of all that, failing to meet stringent healthcare regulations could lead to legal trouble and financial penalties.

To tackle these risks, it’s crucial to establish strong frameworks, conduct regular audits, and prioritize ethical AI practices. Without these measures, the potential benefits of AI in healthcare could be overshadowed by its challenges.

What steps can healthcare organizations take to strengthen their AI governance frameworks?

Healthcare organizations can strengthen their AI governance by setting up clear processes that outline roles, responsibilities, and oversight at every stage of the AI lifecycle. This means aligning internal policies with regulations like HIPAA and FDA requirements while drawing on established frameworks such as those provided by NIST.

Keeping a detailed inventory of AI systems and categorizing them based on their level of autonomy is a key step. This approach ensures that oversight matches the level of risk each system presents. Additionally, implementing strong AI-specific security measures and data controls is crucial to protect sensitive healthcare information and reduce potential risks. By focusing on these strategies, healthcare providers can maintain compliance, enhance security, and build trust in their AI-based solutions.

Why is it essential to focus on AI safety instead of rushing deployment in healthcare?

Focusing on AI safety rather than rushing its deployment in healthcare is crucial to protecting sensitive patient information, preserving system integrity, and maintaining trust within the industry. The integration of AI brings specific cybersecurity challenges, including risks like data breaches and vulnerabilities in algorithms, which could lead to serious repercussions if not carefully managed.

By placing safety at the forefront, healthcare organizations can reduce these risks, adhere to regulatory standards, and prioritize patient well-being. Taking a measured and cautious approach to AI adoption helps prevent avoidable mistakes or security failures, paving the way for sustainable and secure advancements in healthcare.