The AI Security Analyst: Augmenting Human Expertise with Machine Intelligence

Post Summary

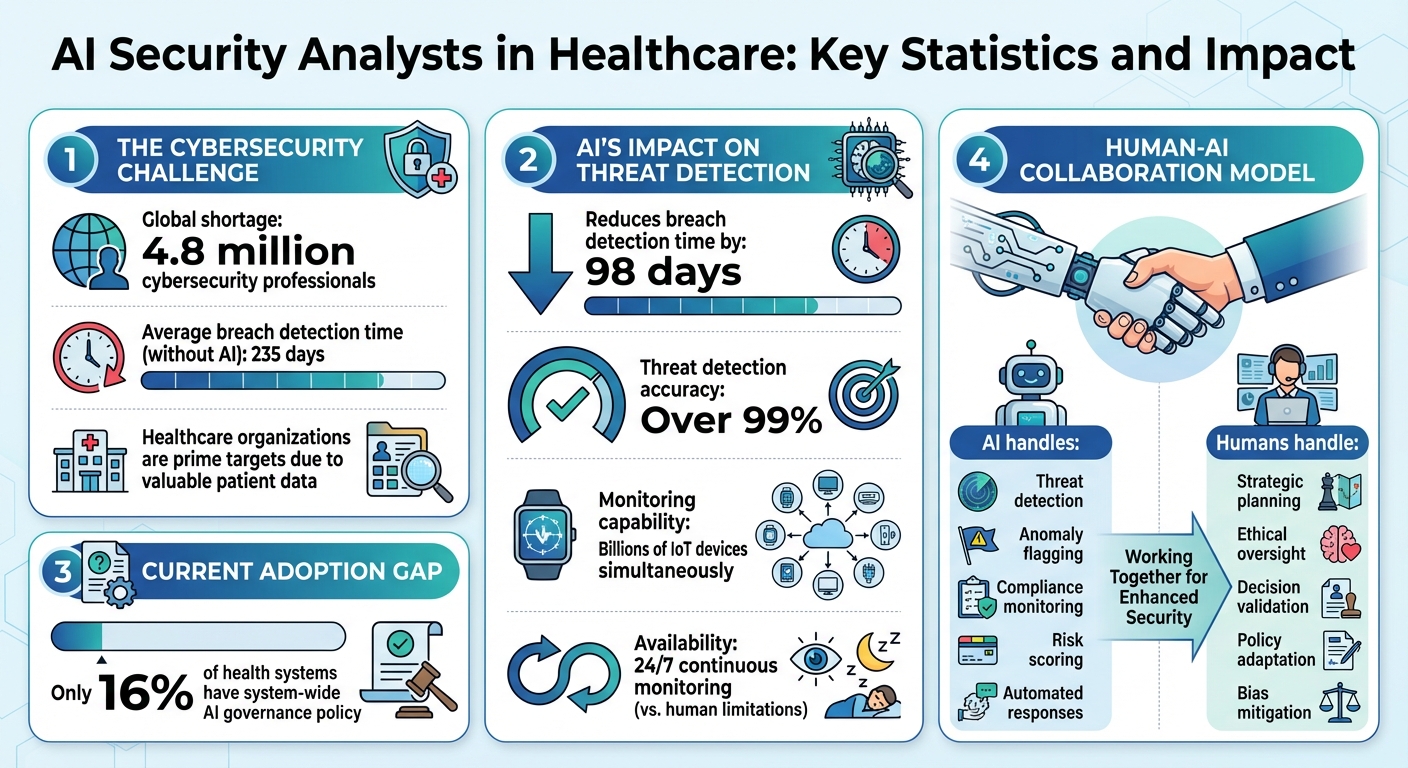

AI security analysts are transforming healthcare cybersecurity by addressing the growing threat of cyberattacks on sensitive patient data. Here's what you need to know:

- Why It Matters: Healthcare organizations are prime targets for cyberattacks due to the value of patient data. With a global shortage of 4.8 million cybersecurity professionals, AI helps fill the gap.

- What AI Can Do: AI systems detect unusual activity, automate threat responses, and analyze risks faster and more accurately than humans, reducing breach detection time by 98 days on average.

- Human-AI Collaboration: AI handles repetitive tasks like monitoring and compliance checks, while humans focus on decision-making, ethical oversight, and strategic planning.

- Implementation Tips: Success requires clean data, proper integration with current systems, and continuous monitoring to improve performance.

AI is not replacing human analysts but working alongside them to strengthen cybersecurity defenses in healthcare.

AI Security Analysts in Healthcare: Key Statistics and Impact Metrics

What AI Security Analysts Can Do in Healthcare

Detecting Threats and Analyzing Anomalies

AI systems are always on the lookout, scanning data from IoT devices, clinical systems, electronic health records, and medical devices to identify patterns that might indicate threats [2][3]. These tools establish a "normal" baseline for user and device behavior, flagging unusual activities like medical devices connecting to unexpected external servers or employees accessing patient records at odd hours.
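To make the "normal baseline" idea concrete, here is a minimal sketch. It assumes each user's daily count of patient-record accesses is available. It flags a day that deviates from that user's own history by more than a chosen number of standard deviations. The function name, the 3.0 threshold, and the sample numbers are hypothetical; commercial tools model many more signals than a single count.

```python
from statistics import mean, stdev

def is_anomalous(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Return True if today's count deviates from the user's own baseline.

    history: past daily counts of patient-record accesses for one user
    threshold: how many standard deviations count as "unusual" (assumed value)
    """
    if len(history) < 2:
        return False  # not enough history to establish a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu  # any change from a perfectly flat baseline stands out
    z = (today - mu) / sigma
    return abs(z) > threshold

baseline = [40, 42, 38, 41, 39, 43, 40]  # a typical week for one clinician
print(is_anomalous(baseline, 41))   # False
print(is_anomalous(baseline, 180))  # True
```

The same comparison against a per-entity baseline applies to devices, for example the number of distinct external servers a medical device contacts per day.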

The sheer scale of this monitoring is impressive. Tapan Mehta, a Healthcare and Pharma Life Sciences Executive, points out that AI can detect subtle irregularities across billions of IoT devices - something human teams simply can't do manually [2]. This is critical when you consider that it takes an average of 235 days for organizations to detect a breach [6]. AI-powered systems, however, can cut that time down by 98 days [4].

AI doesn't stop at pattern recognition. It uses behavioral analysis to uncover insider threats and compromised accounts by spotting deviations from normal behavior. For example, it can monitor electronic health records for suspicious access patterns or flag unusual data transfers, especially when information is sent to external devices or networks [3]. Some AI-driven cybersecurity models are reported to exceed 99% accuracy in detecting threats [6].
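The access and transfer checks described above can be illustrated with a short rule-based sketch. It assumes each access event carries a timestamp, a destination host, and a byte count. The allowlist, the working-hours window, the size limit, and the `AccessEvent` type are hypothetical placeholders. Real behavioral analytics would learn these boundaries per user and device rather than hard-coding them.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical policy values, for illustration only
APPROVED_DESTINATIONS = {"ehr.internal.example", "backup.internal.example"}
WORK_HOURS = range(7, 19)   # 07:00-18:59 treated as normal working hours
MAX_BYTES = 50_000_000      # transfers above ~50 MB get a second look

@dataclass
class AccessEvent:
    user: str
    timestamp: datetime
    destination: str
    bytes_sent: int

def reasons_to_flag(event: AccessEvent) -> list[str]:
    """Return human-readable reasons an event looks suspicious (empty if none)."""
    reasons = []
    if event.timestamp.hour not in WORK_HOURS:
        reasons.append("access outside normal hours")
    if event.destination not in APPROVED_DESTINATIONS:
        reasons.append(f"unapproved destination: {event.destination}")
    if event.bytes_sent > MAX_BYTES:
        reasons.append("unusually large transfer")
    return reasons

event = AccessEvent("nurse42", datetime(2024, 3, 9, 2, 15),
                    "files.unknown-host.example", 120_000_000)
print(reasons_to_flag(event))
```

Returning reasons rather than a bare yes/no gives the reviewing analyst context for why the event surfaced.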

Fast, accurate detection also sets the stage for efficient incident management and risk assessment.

Triaging Incidents and Supporting Investigations

Once a threat is identified, AI takes the next step by speeding up incident triage. It correlates related data, such as threat intelligence feeds, and delivers concise summaries that help analysts make faster decisions [2]. Threats can then be contained quickly: compromised systems can be quarantined, ransomware spread can be halted, and suspicious accounts can be disabled before significant damage occurs [2][3][5].

"A human is still needed to sift through alerts. It's a collaboration between AI and humans... The difference is that AI is able to work 24/7. It doesn't need to sleep as we do." [6]

By reducing the flood of alerts and pinpointing root causes, AI allows human analysts to focus on more complex, high-priority investigations instead of wading through endless notifications.
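As a rough illustration of how triage cuts alert volume, the sketch below assumes alerts arrive as simple records with a host, a type, and a severity from 1 to 5. It groups alerts by host so that one incident yields one summary instead of many notifications. It then ranks hosts by highest severity and total alert load. The `Alert` type and the ranking rule are hypothetical simplifications, not how any specific triage product works.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Alert:
    host: str
    kind: str
    severity: int  # 1 (low) to 5 (critical), an assumed scale

def triage(alerts: list[Alert]) -> list[dict]:
    """Group alerts per host and return summaries, most urgent first."""
    by_host: dict[str, list[Alert]] = defaultdict(list)
    for alert in alerts:
        by_host[alert.host].append(alert)
    summaries = [
        {
            "host": host,
            "alert_count": len(group),
            "max_severity": max(a.severity for a in group),
            "total_severity": sum(a.severity for a in group),
            "kinds": sorted({a.kind for a in group}),
        }
        for host, group in by_host.items()
    ]
    # Highest single severity first, then the heaviest overall alert load
    summaries.sort(key=lambda s: (s["max_severity"], s["total_severity"]), reverse=True)
    return summaries

alerts = [
    Alert("infusion-pump-07", "unexpected outbound connection", 4),
    Alert("infusion-pump-07", "unexpected outbound connection", 4),
    Alert("ws-billing-12", "failed login", 2),
    Alert("infusion-pump-07", "port scan", 3),
    Alert("ws-billing-12", "failed login", 2),
]
for summary in triage(alerts):
    print(summary["host"], summary["alert_count"], summary["max_severity"], summary["kinds"])
```

Here five raw alerts collapse into two summaries, with the medical device ranked first. An analyst still decides whether to quarantine it.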

Scoring Risks and Assessing Third-Party Vendors

AI doesn't just detect threats - it also evaluates vulnerabilities within a healthcare organization’s systems and ranks them based on actual risk, not just generic severity scores [2][3]. When it comes to third-party vendors, AI-driven platforms calculate risk scores tailored to healthcare-specific concerns, like the sensitivity of the data involved, the criticality of systems, the scope of access, and even geographic factors [4].
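A weighted score over the factors named above is one simple way to picture vendor risk scoring. In this sketch, the 1-to-5 ratings, the percentage weights, and the tier cutoffs are all hypothetical. The source does not say how any particular platform combines these factors.

```python
# Hypothetical percentage weights for the healthcare-specific factors (sum to 100)
WEIGHTS = {
    "data_sensitivity": 35,    # e.g. PHI versus de-identified data
    "system_criticality": 30,  # clinical impact if the vendor's service fails
    "access_scope": 25,        # breadth of network or system access granted
    "geography": 10,           # jurisdictional and data-residency exposure
}

def vendor_risk_score(ratings: dict[str, int]) -> float:
    """Combine 1-5 factor ratings into a 0-100 score (higher means riskier)."""
    if ratings.keys() != WEIGHTS.keys():
        raise ValueError(f"expected ratings for exactly: {sorted(WEIGHTS)}")
    if any(not 1 <= value <= 5 for value in ratings.values()):
        raise ValueError("each rating must be between 1 and 5")
    weighted = sum(WEIGHTS[f] * ratings[f] for f in WEIGHTS)  # ranges 100..500
    return (weighted - 100) / 4  # rescale 100..500 onto 0..100

def risk_tier(score: float) -> str:
    """Map a score to a tier using assumed cutoffs."""
    return "high" if score >= 70 else "medium" if score >= 40 else "low"

ehr_host = {"data_sensitivity": 5, "system_criticality": 5, "access_scope": 4, "geography": 2}
score = vendor_risk_score(ehr_host)
print(score, risk_tier(score))  # 86.25 high
```

Keeping the weights explicit makes it easy for a risk manager to review and adjust them, which supports the human validation step discussed later.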

This targeted analysis helps organizations prioritize fixes and manage vendor risks more effectively. AI also plays a key role in ensuring compliance with regulations like HIPAA by automating checks and using predictive analytics to anticipate potential risks, reducing the chance of violations [3][5][8]. Automated tools simplify audits, reporting, and documentation, cutting down on human error and freeing up staff to focus on more strategic tasks [8].
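Automated compliance checks often boil down to comparing system configurations against a list of required controls. The sketch below assumes each system's settings are available as a dictionary. The control names are hypothetical examples loosely inspired by HIPAA Security Rule themes such as audit controls and encryption. They are not a complete or authoritative HIPAA checklist.

```python
# Hypothetical control checks: each maps a control name to a test on a config dict
CONTROLS = {
    "audit_logging_enabled": lambda cfg: cfg.get("audit_logging") is True,
    "encryption_at_rest": lambda cfg: cfg.get("disk_encryption") in {"aes-256", "aes-128"},
    # Passes if remote access is off, or if it is on and MFA is enforced
    "mfa_for_remote_access": lambda cfg: cfg.get("remote_access") is False or cfg.get("mfa") is True,
    "session_timeout_15min_or_less": lambda cfg: 0 < cfg.get("session_timeout_min", 0) <= 15,
}

def compliance_report(systems: dict[str, dict]) -> dict[str, list[str]]:
    """Return, for each system, the list of controls it fails."""
    return {
        name: [control for control, check in CONTROLS.items() if not check(cfg)]
        for name, cfg in systems.items()
    }

systems = {
    "pacs-server": {"audit_logging": True, "disk_encryption": "aes-256",
                    "remote_access": True, "mfa": True, "session_timeout_min": 10},
    "legacy-lab-db": {"audit_logging": False, "disk_encryption": None,
                      "remote_access": True, "mfa": False, "session_timeout_min": 60},
}
for system, failures in compliance_report(systems).items():
    print(system, "OK" if not failures else failures)
```

A report like this can feed audit documentation. A compliance officer still decides whether a failed check is a violation or a documented exception.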

How Humans and AI Work Together in Healthcare Risk Management

How AI Boosts Security Team Efficiency

AI takes over time-consuming tasks like compliance audits, reporting, and documentation - chores that can eat up hours of an analyst’s week. By automating these repetitive duties, it frees up experts to focus on high-stakes decisions that require strategic thinking and problem-solving skills [8][9][10]. Beyond that, AI helps teams stay ahead of the curve by predicting cybersecurity risks, such as potential patient harm, compliance breaches, or unexpected financial hits [8][9]. This combination of automation and foresight creates an environment where human expertise is directed at the issues that demand critical thinking and nuanced judgment.

Balancing Automation with Human Judgment

While AI excels at automating routine work, effective security management depends on human oversight. The best programs combine AI's decision-support capabilities with human intuition and judgment. For instance, AI can provide real-time guidance on regulatory best practices or assess risks tied to third-party vendors [7][12]. However, humans are essential for validating AI’s findings before any action is taken.

This partnership helps address challenges like automation bias, where there’s a temptation to blindly trust AI outputs, and AI fatigue, where analysts might overlook real threats due to an overload of alerts [13][14]. To navigate these risks, organizations implement structured workflows where AI highlights key insights, but humans make the final call. Analysts review AI-generated risk assessments, tweak security policies to reflect unique circumstances, and craft strategies to mitigate risks that AI might not yet recognize [7][8][11][12]. This human oversight is especially critical for ensuring ethical AI use, particularly when health, safety, or fundamental rights are at stake [13][14].
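The "AI highlights, humans decide" workflow can be enforced as a gate in code: an AI-generated recommendation cannot be executed until a named analyst approves it. This is a minimal sketch. The `Recommendation` type, its states, and the `execute` step are hypothetical stand-ins for whatever ticketing or SOAR integration an organization actually uses.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional

class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"
    EXECUTED = "executed"

@dataclass
class Recommendation:
    action: str           # what the AI suggests doing
    ai_confidence: float  # model-reported confidence (0-1); shown to the reviewer, never used to bypass review
    rationale: str        # evidence the AI surfaced for the analyst
    status: Status = Status.PROPOSED
    reviewer: Optional[str] = None
    notes: list[str] = field(default_factory=list)

    def review(self, reviewer: str, approve: bool, note: str = "") -> None:
        """Record a human decision; only proposed items can be reviewed."""
        if self.status is not Status.PROPOSED:
            raise RuntimeError(f"cannot review an item that is {self.status.value}")
        self.reviewer = reviewer
        self.status = Status.APPROVED if approve else Status.REJECTED
        if note:
            self.notes.append(note)

    def execute(self) -> str:
        """Act only after human approval, no matter how confident the model is."""
        if self.status is not Status.APPROVED:
            raise PermissionError("human approval required before execution")
        self.status = Status.EXECUTED
        return f"executed: {self.action} (approved by {self.reviewer})"

rec = Recommendation("disable account jdoe", 0.97, "logins from two countries within 10 minutes")
try:
    rec.execute()
except PermissionError as err:
    print("blocked:", err)
rec.review("analyst.kim", approve=True, note="confirmed with the user's manager")
print(rec.execute())
```

Placing the check inside `execute` rather than relying on the confidence score is one way to counter automation bias: even a 0.97-confidence recommendation waits for a reviewer.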

Using RACI to Define Roles

To ensure smooth collaboration between AI systems and human teams, healthcare organizations rely on clear role definitions. A responsibility assignment matrix, like RACI, helps outline who is responsible for what:

| Activity | AI System | Security Analyst | Risk Manager | Compliance Officer |
|---|---|---|---|---|
| Threat detection and anomaly flagging | R (Responsible) | C (Consulted) | I (Informed) | I (Informed) |
| Risk scoring and vendor assessment | R (Responsible) | A (Accountable) | C (Consulted) | C (Consulted) |
| Validating AI findings and recommendations | C (Consulted) | R (Responsible) | A (Accountable) | I (Informed) |
| Strategic risk mitigation planning | C (Consulted) | R (Responsible) | A (Accountable) | C (Consulted) |
| Compliance monitoring and reporting | R (Responsible) | C (Consulted) | I (Informed) | A (Accountable) |
| Addressing algorithmic bias and ethical concerns | I (Informed) | C (Consulted) | R (Responsible) | A (Accountable) |

Here, AI focuses on tasks like flagging anomalies, scoring risks, and monitoring compliance, while human analysts and risk managers concentrate on strategic planning, adapting to regulatory changes, and maintaining ethical and legal standards [7][8][11][12]. This division of labor ensures that both AI and human expertise are used where they are most effective.
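A RACI matrix can also be stored as data and checked automatically. A widely used RACI convention is that each activity has exactly one Accountable party and at least one Responsible party. The sketch below encodes the table above as one letter per role and checks that rule. The function name and the compact string layout are illustrative assumptions.

```python
# Role order matches the table columns
ROLES = ["AI System", "Security Analyst", "Risk Manager", "Compliance Officer"]

# One letter per role, in ROLES order, transcribed from the table above
RACI = {
    "Threat detection and anomaly flagging": "RCII",
    "Risk scoring and vendor assessment": "RACC",
    "Validating AI findings and recommendations": "CRAI",
    "Strategic risk mitigation planning": "CRAC",
    "Compliance monitoring and reporting": "RCIA",
    "Addressing algorithmic bias and ethical concerns": "ICRA",
}

def raci_issues(matrix: dict[str, str]) -> dict[str, list[str]]:
    """Return activities that break the one-A, at-least-one-R convention."""
    issues = {}
    for activity, codes in matrix.items():
        problems = []
        if len(codes) != len(ROLES):
            problems.append("wrong number of role entries")
        if codes.count("A") != 1:
            problems.append(f"expected exactly one A, found {codes.count('A')}")
        if "R" not in codes:
            problems.append("no Responsible party")
        if problems:
            issues[activity] = problems
    return issues

print(raci_issues(RACI))
```

Run against the table as written, the check reports that threat detection and anomaly flagging has no Accountable role. Running a check like this whenever roles change helps keep every AI-driven activity tied to a human owner.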


How to Implement AI Security Analysts in Healthcare Organizations

Assessing Readiness and Data Requirements

Before rolling out AI-driven security tools, healthcare organizations need to take a step back and evaluate their current risk management maturity. This includes identifying technology gaps and prioritizing risk areas like patient outcomes, compliance, or financial stability [8]. Surprisingly, only 16% of health systems have a system-wide AI governance policy [15]. This highlights the urgent need for comprehensive Enterprise Risk Management (ERM) frameworks that go beyond just ticking off compliance checkboxes.

Clean, well-structured, and properly labeled data is a must. Organizations also need real-time data pipelines for continuous monitoring [8]. Infrastructure readiness is equally critical - think HIPAA-compliant cloud environments with encryption, audit trails, and role-based access controls [8]. Another key step is standardizing AI-related terminology and training staff to work safely with AI outputs [11][15]. Past failures in deploying AI tools often boil down to insufficient preparation, making this groundwork essential.
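"Clean, well-structured, and properly labeled" can be turned into measurable checks before any model is trained. This sketch assumes security log records arrive as dictionaries. It reports the share of records that are missing required fields or carry an unrecognized label. The field names and label set are hypothetical.

```python
# Hypothetical schema for security log records used as training data
REQUIRED_FIELDS = ("timestamp", "source", "user", "event_type")
VALID_LABELS = {"benign", "malicious"}

def data_quality(records: list[dict]) -> dict:
    """Summarize completeness and label quality of a batch of log records."""
    total = len(records)
    if total == 0:
        return {"records": 0, "missing_fields_pct": 0.0, "bad_label_pct": 0.0}
    missing = sum(
        1 for r in records if any(r.get(f) in (None, "") for f in REQUIRED_FIELDS)
    )
    bad_labels = sum(1 for r in records if r.get("label") not in VALID_LABELS)
    return {
        "records": total,
        "missing_fields_pct": 100 * missing / total,
        "bad_label_pct": 100 * bad_labels / total,
    }

batch = [
    {"timestamp": "2024-05-01T08:00Z", "source": "ehr", "user": "jdoe", "event_type": "view", "label": "benign"},
    {"timestamp": "2024-05-01T08:05Z", "source": "ehr", "user": "", "event_type": "export", "label": "malicious"},
    {"timestamp": "2024-05-01T08:09Z", "source": "vpn", "user": "asmith", "event_type": "login", "label": "unknown"},
    {"timestamp": "2024-05-01T08:12Z", "source": "lab", "user": "blee", "event_type": "view", "label": "benign"},
]
print(data_quality(batch))
```

A team could set an acceptable ceiling for each percentage and block model training or deployment until a data source meets it.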

By laying a solid foundation, healthcare organizations can better leverage AI for improved threat detection and risk management. Once the basics are covered, the focus shifts to integrating these tools with existing security systems.

Connecting AI with Existing Security Systems

Start by assessing your IT ecosystem. This includes clinical systems, data sources, computing power, storage capacity, and network reliability [16]. Modular architectures are a smart choice - they offer the flexibility and scalability needed to integrate AI tools with platforms like security information and event management (SIEM) and security orchestration, automation, and response (SOAR) systems [16]. A unified data architecture with standardized data models ensures consistent formatting across diverse sources like electronic health records and lab systems [16].
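In practice, a unified data model largely means mapping each source's field names and formats onto one shared schema. The sketch below assumes two hypothetical feeds, an EHR audit log and a lab-system log, that use different field names and timestamp formats. The `SecurityEvent` schema and both input layouts are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class SecurityEvent:
    timestamp: datetime  # always timezone-aware UTC
    source: str
    user: str
    action: str

def from_ehr(raw: dict) -> SecurityEvent:
    # Hypothetical EHR audit format: ISO-8601 string with UTC offset, "userId", "op"
    return SecurityEvent(
        timestamp=datetime.fromisoformat(raw["eventTime"]).astimezone(timezone.utc),
        source="ehr",
        user=raw["userId"].lower(),
        action=raw["op"].lower(),
    )

def from_lab(raw: dict) -> SecurityEvent:
    # Hypothetical lab-system format: Unix epoch seconds, "login", "activity"
    return SecurityEvent(
        timestamp=datetime.fromtimestamp(raw["epoch"], tz=timezone.utc),
        source="lab",
        user=raw["login"].lower(),
        action=raw["activity"].lower(),
    )

events = [
    from_ehr({"eventTime": "2024-05-01T14:30:00-04:00", "userId": "JDoe", "op": "VIEW_RECORD"}),
    from_lab({"epoch": 1714588200, "login": "jdoe", "activity": "view_record"}),
]
for e in events:
    print(e.source, e.timestamp.isoformat(), e.user, e.action)
```

After normalization, both records show the same user, action, and UTC time (18:30), so downstream SIEM correlation rules only need to understand one format.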

It's also crucial to maintain a detailed inventory of all AI systems, documenting their functions, data dependencies, and security considerations [11]. This inventory helps define acceptable risk levels and map out dependencies across data systems, personnel, and vendors [15]. Tools like Censinet RiskOps™ can centralize AI policies, risks, and tasks, making it easier to route critical findings to the right stakeholders. This ensures that AI tools and human expertise work hand in hand.
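An AI system inventory can start as one structured record per system. The fields below mirror the paragraph (function, data dependencies, security considerations) and add an owner and an acceptable risk level. The field names, risk levels, and example entries are hypothetical. The sketch does not reflect how Censinet RiskOps™ or any other product stores this data.

```python
from dataclasses import dataclass, field

# Hypothetical ordering of risk levels used for comparisons
RISK_ORDER = {"low": 1, "medium": 2, "high": 3}

@dataclass
class AISystem:
    name: str
    function: str                   # what the system does
    owner: str                      # accountable human owner
    data_dependencies: list[str]    # data sources the system reads
    security_considerations: list[str] = field(default_factory=list)
    assessed_risk: str = "medium"   # current assessed risk level
    acceptable_risk: str = "medium" # risk level the organization will accept

    def exceeds_tolerance(self) -> bool:
        """True when assessed risk is above the agreed acceptable level."""
        return RISK_ORDER[self.assessed_risk] > RISK_ORDER[self.acceptable_risk]

def systems_depending_on(inventory: list[AISystem], data_source: str) -> list[str]:
    """Names of systems affected if a given data source is compromised or unavailable."""
    return [s.name for s in inventory if data_source in s.data_dependencies]

inventory = [
    AISystem("anomaly-detector", "flags unusual EHR access", "SOC lead",
             ["EHR audit logs", "identity directory"],
             ["model drift", "log tampering"], assessed_risk="high", acceptable_risk="medium"),
    AISystem("vendor-scorer", "scores third-party vendor risk", "risk manager",
             ["vendor questionnaires"], assessed_risk="low"),
]
print(systems_depending_on(inventory, "EHR audit logs"))    # ['anomaly-detector']
print([s.name for s in inventory if s.exceeds_tolerance()])  # ['anomaly-detector']
```

Queries like these answer two practical questions: which AI tools are exposed when a data source fails, and which tools should be routed to stakeholders for review.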

Once integration is complete, the next step is to monitor performance continuously to keep pace with evolving threats.

Measuring Performance and Improving Over Time

Continuous monitoring is key to fine-tuning AI-driven cybersecurity operations and quickly containing compromised models [11]. Track performance metrics like threat detection accuracy, false positive rates, response times, and risk assessment effectiveness. Practical playbooks for incident response and recovery should incorporate AI-specific risk assessments into existing cybersecurity frameworks [11].
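Once analysts have labeled each alert from a review period, the metrics listed above can be computed from the outcomes. This sketch assumes simple confusion-matrix counts plus per-incident response times in minutes. All numbers in the example are made up for illustration. They show a known pitfall: accuracy alone can look excellent when genuine threats are rare, which is why precision and false positive rate belong alongside it.

```python
from statistics import mean

def detection_metrics(tp: int, fp: int, tn: int, fn: int,
                      response_minutes: list[float]) -> dict:
    """Compute common detection metrics from labeled alert outcomes."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # share of alerts that were real
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # share of real threats caught
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "mean_response_min": mean(response_minutes) if response_minutes else None,
    }

# Hypothetical month: 10,000 events, 50 of them genuine threats
m = detection_metrics(tp=40, fp=160, tn=9790, fn=10, response_minutes=[12, 30, 45])
for name, value in m.items():
    print(f"{name}: {value:.3f}" if isinstance(value, float) else f"{name}: {value}")
```

In this example, accuracy is 98.3%, but only one alert in five is a real threat. Tracking precision over time shows whether tuning is actually reducing analyst workload.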

Feedback loops based on data are invaluable for refining AI security strategies [8]. This requires close collaboration between cybersecurity and data science teams to ensure AI-specific risks are fully addressed [11]. Regular resilience testing is also essential to validate the robustness of AI systems against new and emerging threats [11]. By treating AI implementation as a continuous process rather than a one-time effort, healthcare organizations can stay ahead of challenges while keeping human oversight front and center to protect patient safety.
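One simple form of data-driven feedback loop re-tunes the alert threshold using analysts' verdicts on past alerts. The sketch assumes each reviewed alert has a model score between 0 and 1 and a human true/false verdict. It picks the lowest threshold that keeps precision at or above a target, which keeps as many true threats flagged as possible under that constraint. The target values and data are hypothetical. Real programs would also monitor recall directly so that tuning never quietly hides true threats.

```python
from typing import Optional

def tune_threshold(feedback: list[tuple[float, bool]],
                   min_precision: float = 0.5) -> Optional[float]:
    """Return the lowest score threshold whose precision meets the target.

    feedback: (model_score, analyst_says_true_threat) pairs from reviewed alerts
    Lower thresholds flag more alerts, so the lowest qualifying threshold
    maximizes recall among thresholds that meet the precision target.
    """
    for threshold in sorted({score for score, _ in feedback}):
        flagged = [is_threat for score, is_threat in feedback if score >= threshold]
        precision = sum(flagged) / len(flagged)  # True counts as 1
        if precision >= min_precision:
            return threshold
    return None  # no threshold meets the target; escalate to humans for review

feedback = [(0.95, True), (0.9, True), (0.8, False), (0.7, True),
            (0.6, False), (0.4, False), (0.3, False)]
print(tune_threshold(feedback, min_precision=0.75))  # 0.7
```

Rerunning this after each review cycle lets the threshold track the organization's real alert mix, while analysts' labels remain the source of truth.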

Conclusion

Key Takeaways

AI security analysts are reshaping healthcare cybersecurity by using machine learning to identify anomalies and protect PHI in compliance with HIPAA. By automating repetitive tasks, they allow human teams to focus on more strategic responsibilities, enhancing overall security efforts.

The collaboration between AI and human expertise strengthens cybersecurity defenses. AI can automate responses to threats and adapt security protocols as new risks emerge [17]. To successfully implement AI in healthcare cybersecurity, organizations must evaluate their readiness, ensure clean data pipelines, integrate AI with existing systems like SIEM and SOAR, and regularly monitor performance. Solutions such as Censinet RiskOps™ streamline AI management by centralizing policies, risks, and tasks, sending critical findings to the appropriate stakeholders for action. Feedback loops are essential for keeping AI systems effective against new and evolving threats.

Looking ahead, the next steps involve creating systems that are both adaptable and seamlessly integrated into healthcare environments.

What's Next for AI in Healthcare Cybersecurity

As the landscape of healthcare cybersecurity continues to shift, AI must keep pace by anticipating and mitigating risks before they escalate. Future systems will need to be secure, dependable, and resilient by design, offering deeper integration, greater automation, and quicker threat detection [1][2].

However, human oversight will remain a cornerstone of cybersecurity as AI evolves. Specialists must familiarize themselves with AI tools, understanding their strengths and limitations [6][2]. The ideal approach is a partnership: AI takes on the heavy workload of data analysis and pattern detection, while humans provide strategic insight, ethical decision-making, and accountability. Organizations that adopt this collaborative approach today will be better equipped to safeguard patient safety and ensure uninterrupted care delivery in the future.

FAQs

How does AI help healthcare organizations detect cyber threats faster?

AI is transforming how healthcare organizations handle cyber threats by keeping a constant watch on their systems, spotting unusual activities, and automating the threat detection process. With these tools, potential risks can be identified and addressed much faster - cutting detection times by as much as 21%. This speed is crucial, as it helps limit potential damage and ensures that essential healthcare operations face minimal interruptions.

How do human analysts and AI work together to protect healthcare cybersecurity?

Human analysts and AI work hand in hand, blending the efficiency of machine intelligence with the depth of human expertise. AI thrives on crunching massive datasets, spotting patterns, and handling repetitive tasks with speed and precision. Meanwhile, human analysts excel in critical thinking, creativity, and interpreting context that machines might miss.

This partnership creates a dynamic duo: AI delivers insights and highlights potential risks, while human analysts step in to verify findings, make strategic calls, and tackle complex situations that demand judgment or a more nuanced perspective. Together, they build a stronger, more adaptable defense against cybersecurity threats in healthcare.

What are the essential steps to implement AI security systems in healthcare organizations?

To implement AI security systems effectively in healthcare, start by building a strong governance and risk management framework. This ensures compliance with key regulations like HIPAA. Your infrastructure should align with these standards, and any AI tools you use must undergo rigorous clinical evaluation to confirm their reliability. It's also critical to train AI models with high-quality, unbiased data to maintain both accuracy and fairness.

On the security front, consider adopting advanced measures such as zero trust architecture and microsegmentation to safeguard sensitive patient information. Real-time monitoring systems can add another layer of protection by detecting threats and initiating automated responses as needed. Lastly, prioritize staff training so your team is well-versed in AI cybersecurity practices and can manage these systems effectively. Following these steps will strengthen your organization’s defenses while keeping patient data secure.