The AI Security Paradox: Better Protection or Bigger Vulnerabilities?

Post Summary

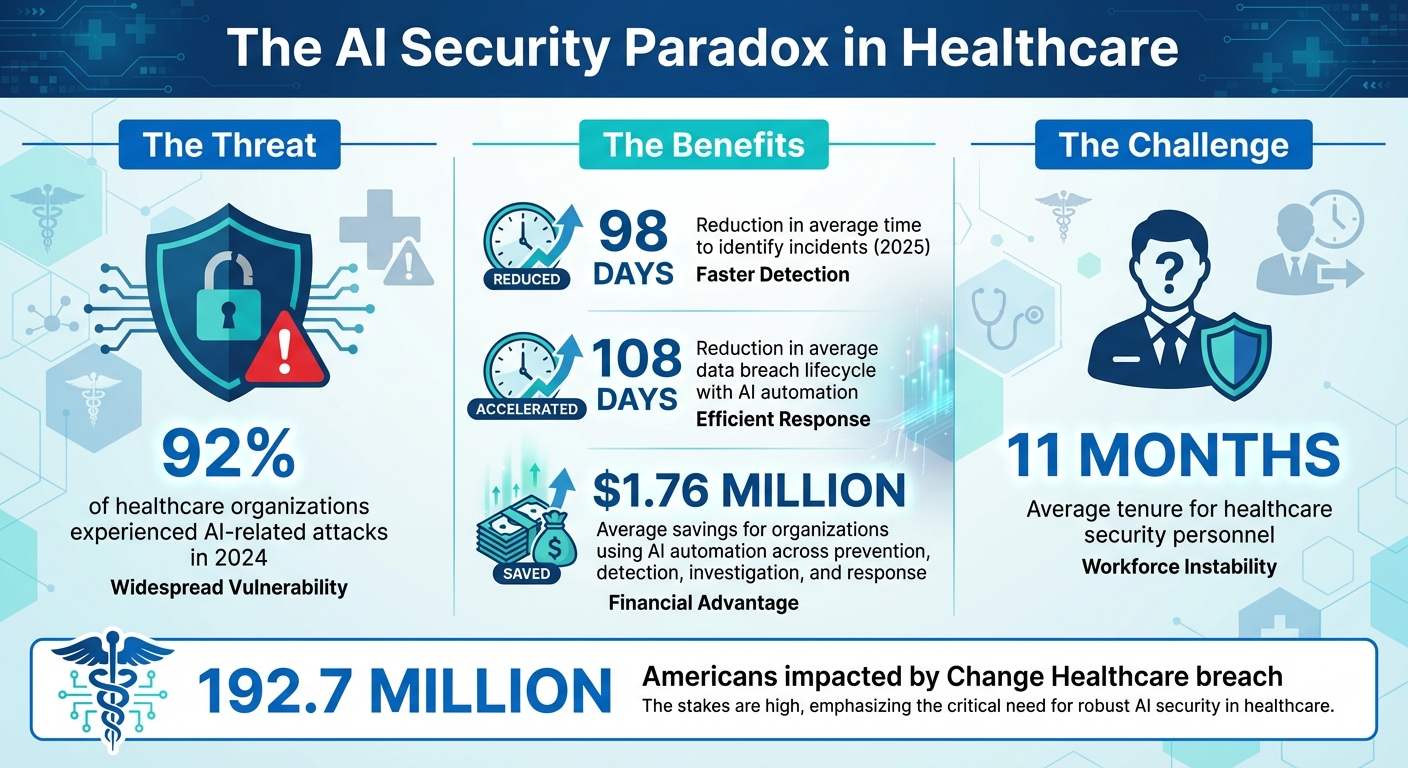

AI in healthcare cybersecurity is both a solution and a challenge. While it detects threats faster and reduces response times, it also introduces risks like adversarial attacks, privacy issues, and governance gaps. By 2024, 92% of healthcare organizations had experienced AI-related attacks, highlighting the urgent need for secure AI integration.

Key Points:

- AI Benefits: Detects ransomware, reduces alert fatigue, and secures cloud systems and medical devices.

- AI Risks: Vulnerable to adversarial attacks, data exposure, and "shadow AI" tools.

- Actionable Steps: Build governance frameworks, implement layered security, and ensure compliance with regulations like HIPAA.

The goal? Balance AI's strengths with its risks by combining automation, human oversight, and clear governance to protect patient data and healthcare systems.

[Figure: AI Security in Healthcare: Key Statistics and Impact 2024-2025]

How AI Strengthens Healthcare Cybersecurity

AI-Powered Threat Detection and Response

AI is reshaping how healthcare organizations identify and counter cyber threats. Unlike traditional security tools that struggle to process the immense flow of data in hospital networks, AI thrives on analyzing large datasets in real-time. Machine learning algorithms can predict ransomware activity within networks, spotting unusual patterns that indicate a potential breach and stopping it before it escalates. These systems also uncover vulnerabilities that conventional tools often overlook, especially in interconnected medical devices and IoT ecosystems [4][5].
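To make "spotting unusual patterns" concrete, here is a minimal sketch of one common building block: flagging a value that sits far above a rolling baseline. It is an illustration only, not how any particular product works. The function name `find_spikes`, the window size, the threshold, and the sample counts are all assumptions made for this example.

```python
from statistics import mean, stdev

def find_spikes(counts, window=5, threshold=3.0):
    """Return indexes whose value is more than `threshold` standard
    deviations above the mean of the preceding `window` values."""
    spikes = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        # Guard against a perfectly flat baseline (sigma == 0).
        if sigma == 0:
            sigma = 1.0
        if (counts[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes

# Hypothetical file-modification counts per minute on one file server.
# A sudden burst of writes is one pattern associated with mass encryption.
file_writes_per_minute = [12, 15, 11, 14, 13, 12, 16, 480, 510, 14]
spike_minutes = find_spikes(file_writes_per_minute)
print(spike_minutes)  # prints [7]
```

Only minute 7 is flagged. Minute 8 is just as abnormal, but by then the burst is already part of the baseline and hides it. That limitation is one reason production systems tend to rely on more robust statistics or learned models rather than a plain rolling z-score.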

Speed is where AI truly shines. In 2025, AI-driven threat detection systems cut the average time to identify incidents by an impressive 98 days for healthcare organizations [2]. This isn’t just about faster detection - it’s about stopping breaches before they cause damage. By shifting from reactive defense to proactive prevention, AI empowers healthcare organizations to take control of their cybersecurity strategies rather than constantly scrambling to address emergencies [5]. This proactive approach naturally leads to more efficient alert management.

Reducing Alert Fatigue and Response Times

Healthcare security teams face an overwhelming volume of alerts every day, many of which are false positives. AI steps in by filtering out the noise and highlighting genuine threats. Instead of wasting time manually sifting through countless alerts, security staff can focus on addressing the critical incidents that require their attention. This streamlined process makes a real difference: in 2025, AI automation helped cut the average data breach lifecycle by 108 days, enabling faster detection and resolution compared to teams relying solely on manual efforts [6].
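The filtering step described above can be sketched as a scoring function that ranks alerts and drops the ones unlikely to matter. This is a deliberately simple, rule-based stand-in for what a trained model would do. The `Alert` fields, the 1-to-5 scales, and the `min_score` cutoff are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    source: str
    severity: int           # 1 (low) to 5 (critical); assumed scale
    asset_criticality: int  # 1 to 5; assumed scale
    confidence: float       # detector confidence, 0.0 to 1.0

def triage(alerts, min_score=5.0):
    """Score each alert, drop those below min_score, return highest first."""
    scored = [(a.severity * a.asset_criticality * a.confidence, a) for a in alerts]
    kept = [(s, a) for s, a in scored if s >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [a for _, a in kept]

# Hypothetical alerts from three sources.
alerts = [
    Alert("guest-wifi", severity=2, asset_criticality=1, confidence=0.4),
    Alert("infusion-pump", severity=4, asset_criticality=5, confidence=0.6),
    Alert("ehr-db", severity=5, asset_criticality=5, confidence=0.9),
]
queue = triage(alerts)
print([a.source for a in queue])  # prints ['ehr-db', 'infusion-pump']
```

The low-scoring guest Wi-Fi alert never reaches an analyst, while the two alerts touching clinical systems arrive in priority order. In practice, suppressed alerts would normally still be logged so the threshold itself can be audited.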

The financial impact is equally striking. Organizations that adopted AI automation across prevention, detection, investigation, and response saved an average of $1.76 million compared to those without AI [6]. By handling routine monitoring tasks, AI allows human experts to dedicate their time to more strategic analysis, enhancing the overall effectiveness of cybersecurity efforts [5].

Securing Cloud Systems, APIs, and Medical Devices

AI’s protective capabilities extend far beyond traditional network endpoints, safeguarding cloud systems, APIs, and the growing array of connected medical devices. With healthcare relying more on digital infrastructure, these areas represent critical entry points for cyberattacks. AI tools can quickly scan hospital networks to pinpoint vulnerabilities, such as exposed APIs or outdated medical devices [3]. This speed is crucial, as healthcare often operates a mix of legacy systems and modern cloud environments, creating gaps that manual audits struggle to address.

AI-powered patch management systems prioritize updates for medical devices, focusing on the most urgent vulnerabilities instead of applying a blanket approach [4]. For cloud systems that store sensitive electronic protected health information (ePHI), AI ensures ongoing compliance monitoring and can anonymize data through tokenization before processing [4]. This multi-layered strategy protects patient information while maintaining the flexibility healthcare providers need to operate efficiently.
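As a rough illustration of tokenization before processing, the sketch below swaps identifying fields for random tokens and keeps the mapping in a separate vault. The `TokenVault` class, the field names, and the in-memory storage are assumptions for this example. A real vault would be a hardened, access-controlled service, and which fields count as identifiers is a compliance decision, not a coding one.

```python
import secrets

class TokenVault:
    """In-memory token vault, for illustration only."""

    def __init__(self):
        self._token_by_value = {}
        self._value_by_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same patient maps consistently.
        if value not in self._token_by_value:
            token = "tok_" + secrets.token_hex(8)
            self._token_by_value[value] = token
            self._value_by_token[token] = value
        return self._token_by_value[value]

    def detokenize(self, token: str) -> str:
        return self._value_by_token[token]

def tokenize_record(record, vault, sensitive_fields=("name", "mrn")):
    """Return a copy of the record with sensitive fields replaced by tokens."""
    return {
        key: vault.tokenize(value) if key in sensitive_fields else value
        for key, value in record.items()
    }

vault = TokenVault()
record = {"name": "Jane Doe", "mrn": "000123", "diagnosis_code": "I21.9"}
safe_record = tokenize_record(record, vault)
```

The downstream AI system sees only `safe_record`. Only a service with access to the vault can map a token back to the original value.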

New Vulnerabilities AI Introduces to Healthcare

Adversarial Attacks and Model Manipulation

AI has undoubtedly strengthened cybersecurity, but it also opens up new vulnerabilities. One of the most concerning is the potential for adversarial attacks - where input data is intentionally manipulated to confuse AI systems. For example, attackers could alter inputs in a way that causes AI security systems to misclassify genuine threats as harmless or, conversely, flag benign activities as malicious. This kind of manipulation can lead to overlooked attacks or unnecessary disruptions.
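A toy example helps show why small input changes can flip a verdict. The sketch below uses a made-up linear detector and nudges each feature slightly against the sign of its weight, which for a linear model is a gradient-sign style perturbation. The weights, bias, and feature values are invented for illustration and do not describe any real security product.

```python
def score(weights, bias, features):
    """Linear detector: positive score means 'malicious'."""
    return sum(w * x for w, x in zip(weights, features)) + bias

def classify(weights, bias, features):
    return "malicious" if score(weights, bias, features) > 0 else "benign"

def evade(weights, features, epsilon):
    """Shift every feature by epsilon in the direction that lowers the score."""
    def sign(v):
        return (v > 0) - (v < 0)
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]

# Hypothetical model parameters and one suspicious sample.
WEIGHTS = [0.8, 0.5, -0.3]
BIAS = -1.0
sample = [1.0, 1.0, 0.5]

before = classify(WEIGHTS, BIAS, sample)
after = classify(WEIGHTS, BIAS, evade(WEIGHTS, sample, epsilon=0.2))
print(before, "->", after)  # prints malicious -> benign
```

Each feature moved by only 0.2, yet the classification flipped. Attackers do not always know a model's weights, so real attacks are harder than this, but the same principle applies to far more complex models.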

The problem is further aggravated by the opaque nature of "black box" AI models, like deep learning algorithms and large language models. In healthcare, where these systems might control access to sensitive patient records or oversee network activity, this lack of transparency becomes a real liability: when a model's reasoning cannot be inspected, defenders have little way to tell whether an output reflects a genuine signal or a manipulated input. That hampers threat detection and increases the risk of exposing sensitive data.

Data Exposure and Privacy Risks

AI systems that handle protected health information (PHI) bring a unique set of privacy challenges. Their inherent complexity makes it difficult to explain how patient data flows through these systems or how specific decisions are made. This lack of clarity can make it harder for patients to fully understand how their personal information is being used, raising questions about informed consent.

Another issue is how AI systems evolve over time. Many AI tools continuously learn from new data, making it tricky to track how patient information is being processed or where it ends up. A report from September 2025 highlighted a troubling example: healthcare workers unintentionally exposed sensitive data by uploading proprietary information into AI chatbots. Many of these users were unaware that their inputs could be stored or later exposed due to system vulnerabilities[3]. Beyond accidental exposure, unauthorized use of AI tools adds another layer of complexity to maintaining security.

Shadow AI and Governance Gaps

One of the most pervasive threats in healthcare today is "shadow AI" - unauthorized AI tools that clinicians and staff adopt to streamline their workflows. While often well-intentioned, the use of these unsanctioned tools can create serious security risks. In 2025, such tools were found to be a significant source of data leaks and system vulnerabilities within healthcare organizations[2].

The scale of the issue is staggering. By 2024, 92% of healthcare organizations had experienced attacks linked to AI tools[2]. Many of these problems arise from gaps in governance, such as the absence of clear policies, inadequate access controls, and insufficient safeguards for handling confidential information through AI systems[3].

To make matters worse, healthcare security teams are often understaffed and overworked. The average tenure for security personnel in this sector dropped to just 11 months, amplifying the challenge of monitoring and managing shadow AI[2]. Without proper oversight, these unauthorized tools can quickly become major security vulnerabilities, leaving healthcare organizations at risk.
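One modest way to get visibility into shadow AI is to compare outbound traffic against a list of sanctioned services. The sketch below assumes a simplified proxy log with three space-separated fields (timestamp, user, domain). The domain names are placeholders, and the two sets are hypothetical lists an organization would have to maintain itself.

```python
from collections import Counter

# Hypothetical lists; a real deployment would maintain these centrally.
SANCTIONED_AI_DOMAINS = {"approved-ai.example.org"}
KNOWN_AI_DOMAINS = {
    "approved-ai.example.org",
    "chatbot.example.com",
    "summarizer.example.net",
}

def find_shadow_ai(log_lines):
    """Count requests per (user, domain) to AI services that are not sanctioned."""
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed lines
        _timestamp, user, domain = parts
        if domain in KNOWN_AI_DOMAINS and domain not in SANCTIONED_AI_DOMAINS:
            hits[(user, domain)] += 1
    return hits

log = [
    "2025-09-01T10:00:00 nurse01 chatbot.example.com",
    "2025-09-01T10:02:00 nurse01 chatbot.example.com",
    "2025-09-01T10:05:00 drsmith approved-ai.example.org",
    "2025-09-01T10:07:00 clerk02 intranet.example.org",
]
shadow_usage = find_shadow_ai(log)
```

Here `shadow_usage` records two requests from `nurse01` to an unsanctioned chatbot and nothing else. A report like this is a starting point for a conversation about approved alternatives, not proof of a data leak.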

Best Practices for Safe AI Adoption in Healthcare

Building AI Governance Frameworks

Healthcare organizations need clear governance frameworks to manage AI adoption effectively. These frameworks should outline specific roles throughout the AI lifecycle. Start by maintaining a comprehensive inventory of all AI systems in use. Governance boards - comprising IT, clinical, legal, and compliance leaders - should be established to evaluate and oversee AI implementation.

The Health Sector Coordinating Council (HSCC) has taken a proactive approach by forming an AI Cybersecurity Task Group. This group, which includes 115 healthcare organizations, provides operational guidance for adopting AI responsibly. Their recommendations stress the need for clinical oversight at every stage, from initial evaluation to deployment and ongoing monitoring[7].

One practical strategy is to classify AI tools by their autonomy levels. Systems requiring minimal human oversight demand stricter controls and more thorough vetting compared to decision-support tools. This classification allows organizations to adjust governance measures based on the associated risks. For third-party vendors, rigorous vetting and standardized procurement processes are essential[2]. Additionally, referencing established standards like the NIST AI Risk Management Framework ensures that governance aligns with industry expectations[7][2].
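The autonomy-based classification could be recorded in something as simple as the structure below, where each tier inherits the controls of the tiers beneath it. The tier names and the specific controls are illustrative assumptions, not drawn from HSCC or NIST guidance.

```python
from enum import IntEnum

class Autonomy(IntEnum):
    DECISION_SUPPORT = 1  # a human makes every decision
    SUPERVISED = 2        # the system acts, a human reviews
    AUTONOMOUS = 3        # the system acts with minimal oversight

# Controls introduced at each tier (hypothetical examples).
CONTROLS_ADDED_AT = {
    Autonomy.DECISION_SUPPORT: ["inventory entry", "vendor security review"],
    Autonomy.SUPERVISED: ["audit logging of every action"],
    Autonomy.AUTONOMOUS: ["governance board sign-off", "continuous output monitoring"],
}

def required_controls(level):
    """Return all controls for a tier, including those of lower tiers."""
    controls = []
    for tier in Autonomy:
        if tier <= level:
            controls.extend(CONTROLS_ADDED_AT[tier])
    return controls

print(required_controls(Autonomy.SUPERVISED))
```

Making the controls cumulative means a tool that is reclassified to a higher tier automatically picks up stricter requirements without losing the basics.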

Implementing Layered Security Measures

Securing AI systems against emerging threats requires a multi-layered approach. Strategies like zero trust architecture and microsegmentation are particularly effective. By assuming no user or system is inherently trustworthy, these methods limit the impact of breaches by isolating network segments and restricting lateral movement[2].
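The core idea of microsegmentation, default deny with an explicit allowlist of flows, fits in a few lines. The segment names, ports, and the `ALLOWED_FLOWS` set below are hypothetical. A full zero trust design would also verify user and device identity on every request, which this sketch leaves out.

```python
# Hypothetical allowlist of (source segment, destination segment, port).
ALLOWED_FLOWS = {
    ("clinical-workstations", "ehr-app", 443),
    ("ehr-app", "ehr-db", 5432),
    ("ai-inference", "ehr-app", 443),
}

def is_allowed(src_segment, dst_segment, port):
    """Default deny: a flow passes only if it is explicitly listed."""
    return (src_segment, dst_segment, port) in ALLOWED_FLOWS

# A workstation may reach the application tier...
print(is_allowed("clinical-workstations", "ehr-app", 443))
# ...but not the database directly, and neither may the AI service.
print(is_allowed("clinical-workstations", "ehr-db", 5432))
print(is_allowed("ai-inference", "ehr-db", 5432))
```

If the AI inference service is compromised, the attacker can only talk to the application tier on one port. That containment is what limiting lateral movement means in practice.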

For AI training pipelines, it's critical to safeguard the integrity of training data. Techniques such as cryptographic verification, detailed audit trails, and isolated training environments can help prevent tampering[2]. Data poisoning can succeed with very small manipulations (reportedly as little as 0.001% of training tokens), which highlights the importance of robust testing frameworks that evaluate how models respond to corrupted inputs.
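One simple form of cryptographic verification is a hash manifest: record a SHA-256 digest for every training file when the dataset is approved, then re-check before each training run. The sketch below uses only Python's standard library. The function names and the sample file are assumptions for illustration.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_of(path):
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(directory):
    """Map each file's relative path to its SHA-256 digest."""
    root = Path(directory)
    return {
        str(p.relative_to(root)): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }

def verify_manifest(directory, manifest):
    """Return relative paths that are missing, changed, or unexpected."""
    current = build_manifest(directory)
    names = set(manifest) | set(current)
    return sorted(n for n in names if manifest.get(n) != current.get(n))

# Demonstration with a throwaway directory and a hypothetical data file.
with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "train.csv"
    data_file.write_text("age,label\n54,0\n")
    manifest = build_manifest(tmp)
    clean = verify_manifest(tmp, manifest)      # nothing has changed yet
    data_file.write_text("age,label\n54,1\n")   # simulated tampering
    tampered = verify_manifest(tmp, manifest)
```

After the run, `clean` is empty and `tampered` lists `train.csv`. The manifest itself has to be protected, for example by signing it or storing it outside the training environment, otherwise an attacker can simply regenerate it.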

Continuous monitoring is another key component. AI-driven patch management systems can prioritize vulnerabilities more efficiently, reducing detection times for potential threats. Regular retraining with verified datasets helps detect issues like data poisoning or model drift before they lead to significant problems[2]. Behavioral analysis tools add another layer of protection by establishing baselines for normal operations, making it easier to identify anomalies that could indicate insider threats or compromised accounts[8]. Alongside these technical measures, maintaining regulatory compliance is just as important.
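As a sketch of how a verified dataset can surface drift or poisoning, the check below compares a model's current accuracy on a trusted holdout set against the accuracy recorded at approval time. The function names, the 5-point tolerance, and the toy models are assumptions for illustration. Real monitoring would usually track several metrics, not accuracy alone.

```python
def accuracy(predict, examples):
    """Fraction of (features, label) pairs the model gets right."""
    correct = sum(1 for features, label in examples if predict(features) == label)
    return correct / len(examples)

def drift_detected(predict, verified_holdout, baseline_accuracy, tolerance=0.05):
    """True when accuracy falls more than `tolerance` below the baseline."""
    return baseline_accuracy - accuracy(predict, verified_holdout) > tolerance

# Hypothetical verified holdout set: (risk score, true label).
holdout = [(0.9, 1), (0.8, 1), (0.2, 0), (0.1, 0)]

def healthy_model(x):
    return int(x > 0.5)

def degraded_model(x):
    # Stands in for a model whose behavior shifted after retraining.
    return int(x > 0.85)

healthy_flag = drift_detected(healthy_model, holdout, baseline_accuracy=1.0)
degraded_flag = drift_detected(degraded_model, holdout, baseline_accuracy=1.0)
print(healthy_flag, degraded_flag)  # prints False True
```

The degraded model misses one of four cases, dropping to 75% accuracy and tripping the check. The approach only works if the holdout set stays out of reach of whatever process might have poisoned the training data.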

Meeting Healthcare Regulatory Requirements

While technical defenses are essential, aligning with healthcare regulations is equally critical. AI systems must comply with existing mandates, especially HIPAA and FDA requirements. The HSCC’s 2026 guidance provides a roadmap for implementing AI in ways that meet these obligations[7]. For example, the updated HIPAA Security Rule mandates controls like multi-factor authentication and encryption for systems handling protected health information.
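Controls such as multi-factor authentication and encryption lend themselves to automated checks against the system inventory. The sketch below is a hypothetical gap report, not a HIPAA compliance checklist. The control names and the shape of the inventory are assumptions for this example.

```python
# Hypothetical control names; map these to your own configuration data.
REQUIRED_CONTROLS = ("mfa_enabled", "encryption_at_rest", "encryption_in_transit")

def compliance_gaps(systems):
    """Map each system name to the required controls it is missing."""
    gaps = {}
    for name, settings in systems.items():
        # A control that is absent from the settings counts as missing.
        missing = [c for c in REQUIRED_CONTROLS if not settings.get(c, False)]
        if missing:
            gaps[name] = missing
    return gaps

# Hypothetical inventory of systems that handle PHI.
inventory = {
    "ehr-app": {
        "mfa_enabled": True,
        "encryption_at_rest": True,
        "encryption_in_transit": True,
    },
    "ai-triage-pilot": {
        "mfa_enabled": False,
        "encryption_at_rest": True,
    },
}
gaps = compliance_gaps(inventory)
print(gaps)
```

The pilot AI system is flagged for missing MFA and for having no recorded setting for encryption in transit. Treating an unknown setting as a gap is a deliberately conservative design choice.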

Embedding secure-by-design principles into AI-enabled medical devices is another vital step[7][4]. The ECRI Institute has flagged AI as the top health technology hazard for 2025[2], underscoring the need for proactive security measures. Governance frameworks should also address informed consent, ensuring patients clearly understand how their data is used by AI systems. Consent models should be transparent, adaptable, and regularly updated to reflect new developments[1].

Adhering to standards such as HICP, ISO, and IMDRF provides additional guidance for managing AI risks in compliance with regulatory expectations[7]. Incidents like the Change Healthcare breach, which impacted 192.7 million Americans, demonstrate how vulnerabilities in vendor systems can have widespread repercussions[2]. This makes robust third-party risk management not just advisable but a regulatory necessity.


Managing AI Risk with Censinet RiskOps

Centralized Risk Visibility

In healthcare, managing risks - especially those tied to AI and cybersecurity - requires a clear, unified perspective. Censinet RiskOps™ simplifies this by consolidating risk assessments into a single platform. By integrating data from both third-party and internal evaluations, the platform ensures that risk teams can address traditional cybersecurity issues while also tackling emerging AI-related vulnerabilities. This centralized approach provides the foundation for a comprehensive risk management strategy that’s both efficient and effective.

Automated and Expert-Guided Risk Assessment

Censinet AI™ speeds up the risk assessment process by enabling vendors to complete security questionnaires quickly while summarizing critical evidence. What sets it apart is its human-in-the-loop design, which combines automation with expert oversight. This ensures that healthcare organizations can scale their risk management efforts without sacrificing the careful review needed to protect sensitive patient data. With faster assessments in place, teams can focus on taking timely, coordinated actions to address risks.

Coordinated AI Governance Across Teams

With automated and expert-guided assessments in hand, healthcare organizations can align their efforts across departments. Effective risk management depends on collaboration among IT, clinical, legal, and compliance teams. Censinet RiskOps enhances this by routing key findings and tasks to the appropriate teams, ensuring everyone is on the same page. This streamlined workflow fosters consistent governance and accountability, helping organizations meet cybersecurity and regulatory standards while staying ahead of potential threats.

Conclusion: Managing AI Security Trade-offs in Healthcare

The analysis highlights a fundamental truth: AI in healthcare is both a powerful tool and a potential risk. This duality means healthcare organizations must constantly balance the benefits and vulnerabilities that come with AI adoption. With 92% of healthcare organizations reporting AI-related attacks in 2024 [2], the focus isn't on whether to use AI but on how to integrate it securely. The key is to approach AI security as an ongoing challenge, not as a one-time fix.

Success in this area requires robust AI governance, multi-layered security protocols, and continuous monitoring. Each of these depends on consistent human oversight.

"AI, in this context, is not an isolated tool but a core component of a systemic transformation that affects clinical workflows, patient engagement, and data governance." - Di Palma et al., NCBI, 2025 [1]

The risks of neglecting security are far from hypothetical. Take the ransomware attack on a university hospital in Düsseldorf, Germany, in September 2020. The attack forced the diversion of a critically ill heart patient to a hospital 30 kilometers away. Tragically, the patient died before receiving treatment, leading to a manslaughter investigation [1]. Although the attack was not AI-specific, it underscores the real-world consequences of insufficient security in clinical systems.

To address these challenges, healthcare organizations must implement solutions that provide clear visibility into AI-related risks. Combining automation with expert oversight and aligning governance efforts across IT, clinical, legal, and compliance teams is essential. Those who treat AI security as a delicate balancing act - leveraging its strengths while minimizing its vulnerabilities - will be better equipped to use AI as both a shield and a tool, without succumbing to its inherent risks.

FAQs

What are adversarial attacks, and how do they affect AI in healthcare?

Adversarial attacks happen when individuals with harmful intentions trick AI systems by presenting misleading inputs. This manipulation can cause the AI to misinterpret data and make mistakes. In the healthcare sector, such attacks can have severe outcomes - ranging from inaccurate diagnoses to exposure of confidential patient information or even interruptions in vital healthcare services.

These weaknesses jeopardize patient safety, undermine the accuracy of data, and disrupt the smooth operation of healthcare systems. Addressing and reducing these risks is critical for ensuring trust and safeguarding the security of AI-powered healthcare solutions.

How can healthcare organizations safely harness the power of AI in cybersecurity?

Healthcare organizations can use AI to strengthen cybersecurity by combining cutting-edge tools with proactive risk management. This involves strategies like adopting a zero trust architecture, using microsegmentation to limit access within networks, and relying on continuous monitoring to quickly identify and address potential threats. Regular risk assessments and following frameworks such as NIST AI RMF are also key to uncovering and addressing vulnerabilities.

In addition, enforcing strict data validation protocols is crucial to maintaining data integrity. Providing ongoing staff training on AI-related risks ensures everyone understands potential threats, while striking a balance between AI automation and human oversight keeps systems secure. When IT, security teams, and clinical staff work together, healthcare providers can harness AI's potential without compromising sensitive patient information or system reliability.

How can healthcare organizations manage shadow AI and stay compliant with regulations?

To tackle the challenges of shadow AI and maintain compliance with regulations, healthcare organizations need to put solid governance structures in place and implement strict access controls. Conducting regular audits and performing AI-specific risk assessments can help pinpoint and resolve potential weak spots.

Keeping pace with changing regulations, like HIPAA and FDA guidelines, is a must. Organizations should also focus on promoting transparency, educating staff to identify AI-related risks, and working closely with industry regulators. Taking these proactive steps allows healthcare providers to strike a balance between advancing technology, ensuring security, and building trust.