AI's Role in Incident Response Teams

Post Summary

- How does AI help? It accelerates detection, automates containment, reduces manual work, and supports faster recovery.

- Does AI replace incident responders? No. AI augments human expertise by automating tasks and surfacing insights for better decisions.

- What makes healthcare harder? Complex clinical systems, IoT/medical devices, strict regulations, and large vendor ecosystems.

- How do team roles change? Analysts verify AI insights, leaders get clearer decision paths, and threat intel teams gain faster context.

- How does AI fit the incident response lifecycle? It enhances preparation, speeds detection, automates containment, and strengthens post-incident learning.

- How are AI risks governed? Through AI oversight committees, risk registers, bias testing, adversarial monitoring, and clear policies.

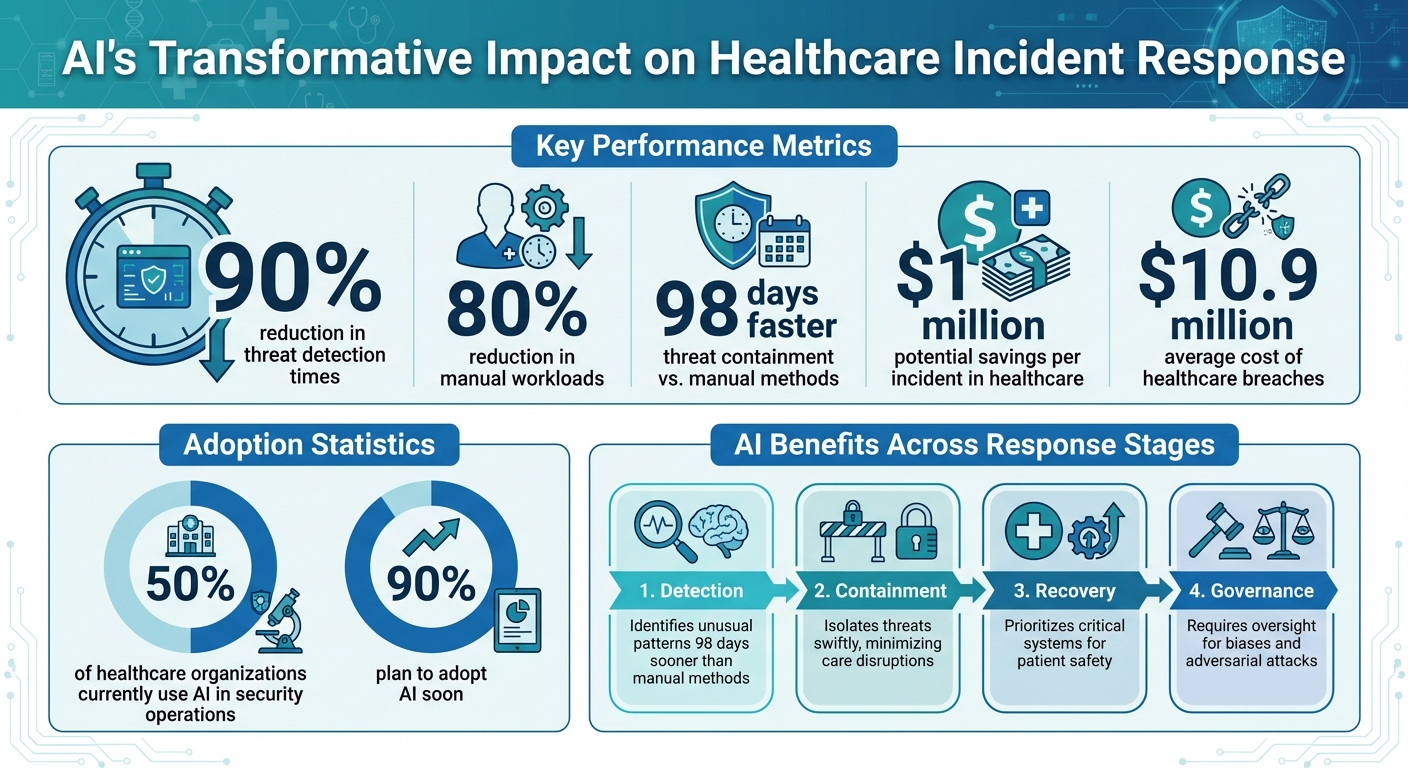

AI is transforming how healthcare organizations manage cybersecurity incidents, cutting threat detection times by up to 90% and reducing manual workloads by 80%. This shift is critical in an industry where cyberattacks can disrupt patient care, compromise sensitive data, and halt operations. By automating tasks like threat detection, containment, and recovery, AI enables faster and more precise responses, saving both time and resources. However, healthcare-specific challenges - like protecting IoT devices, complying with strict regulations, and managing complex supply chains - require tailored AI solutions.

Key takeaways:

AI is not a replacement for human expertise but a tool to enhance decision-making and efficiency. With proper governance, healthcare organizations can better safeguard patient care while navigating the growing complexity of cyber threats.

AI Impact: Key Statistics and Benefits

Healthcare Incident Response Basics and AI Integration

Key Functions of Healthcare Incident Response Teams

Healthcare incident response teams commonly structure their work around a simplified version of the incident response lifecycle described in NIST Special Publication 800-61. The lifecycle has five stages:

- Preparation: creating response plans.
- Detection: monitoring for unusual activity.
- Containment: isolating impacted systems.
- Recovery: restoring normal operations.
- Post-incident review: investigating root causes.

AI is changing each of these stages. It speeds up threat detection by analyzing massive datasets in real time. It accelerates containment by pinpointing compromised systems. It also supports recovery by helping verify that systems are secure before they come back online.

For example, during the detection phase, AI can identify unusual patterns in EHR access that human analysts might miss, such as a user suddenly opening far more patient records than usual. According to IBM, organizations using AI can detect and contain breaches 98 days faster than those relying on manual methods, potentially saving $1 million per incident in healthcare settings. Nearly half of healthcare organizations already use AI in their security operations, and 90% plan to adopt it soon [6].
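To make the EHR example concrete, here is a minimal sketch of per-user baselining. It is a simple statistical stand-in and does not describe how any particular AI product works.

The sketch rests on several assumptions, all hypothetical:

- Daily record-access counts per user are already available from EHR audit logs.
- The data shapes and the `flag_unusual_access` name are invented for illustration.
- The three-standard-deviation threshold is an arbitrary example value.

Production systems typically use richer features, such as time of day, care relationship, and department, along with learned models.

```python
from statistics import mean, stdev

# Hypothetical sketch: per-user baselining of daily EHR record-access counts.
def flag_unusual_access(history, today, threshold=3.0, min_days=7):
    """Flag users whose access count today is far above their own baseline.

    history: dict of user ID -> list of past daily record-access counts
    today:   dict of user ID -> today's record-access count
    Returns (user, count, z_score) tuples, highest z-score first.
    """
    flagged = []
    for user, count in today.items():
        past = history.get(user, [])
        if len(past) < min_days:
            continue  # too little history to establish a baseline
        mu = mean(past)
        sigma = max(stdev(past), 1.0)  # floor avoids huge z-scores for very steady users
        z = (count - mu) / sigma
        if z >= threshold:
            flagged.append((user, count, round(z, 1)))
    return sorted(flagged, key=lambda item: item[2], reverse=True)


history = {
    "nurse_a": [40, 38, 45, 42, 39, 41, 44],
    "clerk_b": [12, 10, 11, 13, 9, 12, 10],
}
today = {"nurse_a": 47, "clerk_b": 250, "new_hire_c": 30}
for user, count, z in flag_unusual_access(history, today):
    print(f"{user}: {count} records today (z = {z})")
```

Here the clerk who normally opens about a dozen records a day is flagged after opening 250. The nurse's modest increase stays within normal variation, and the new hire is skipped for lack of history.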

Healthcare-Specific Incident Response Challenges

The healthcare industry faces unique challenges that make incident response particularly complex. Clinical workflows are deeply tied to EHR systems, so a ransomware attack can bring care delivery to a standstill. The February 2024 ransomware attack on Change Healthcare is a stark example: it disrupted claims processing and pharmacy services nationwide and affected millions of patients. Unlike teams in many other industries, healthcare responders cannot simply shut down entire networks when critical medical devices and patient care depend on them.

Regulatory requirements also play a major role. HIPAA and the HITECH Act mandate strict protections for protected health information (PHI), including breach notification obligations. Incident response must therefore address regulatory compliance as well as technical fixes. Adding to the complexity, the healthcare supply chain creates additional vulnerabilities that demand careful management during an incident. That chain spans third-party vendors and medical device manufacturers.
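Compliance timing enters the response early. The sketch below computes outer-limit notification dates under a simplified reading of the HIPAA Breach Notification Rule (45 CFR 164.404-164.408):

- Individuals must be notified without unreasonable delay, and no later than 60 days after discovery.
- Breaches affecting 500 or more people are reported to HHS on the same timeline.
- Smaller breaches are reported to HHS within 60 days after the end of the calendar year.
- Media notice is required when more than 500 residents of a single state are affected.

The function name and inputs are hypothetical. The sketch ignores law-enforcement delays, business associate obligations, and state breach laws.

```python
from datetime import date, timedelta

# Hypothetical sketch: outer-limit deadlines under a simplified reading of the
# HIPAA Breach Notification Rule (45 CFR 164.404-164.408). Notices are still
# required "without unreasonable delay"; these dates are the latest allowed.
def notification_deadlines(discovered, affected_by_state):
    """Return ({recipient: latest date}, [states needing media notice])."""
    sixty_days = discovered + timedelta(days=60)
    total = sum(affected_by_state.values())
    deadlines = {"individuals": sixty_days}
    if total >= 500:
        # 500 or more individuals: notify HHS at the same time as individuals.
        deadlines["hhs"] = sixty_days
    else:
        # Fewer than 500: report to HHS within 60 days after the calendar year ends.
        deadlines["hhs"] = date(discovered.year, 12, 31) + timedelta(days=60)
    # Media notice: more than 500 residents of a single state or jurisdiction.
    media_states = sorted(s for s, n in affected_by_state.items() if n > 500)
    if media_states:
        deadlines["media"] = sixty_days
    return deadlines, media_states


deadlines, media_states = notification_deadlines(date(2024, 3, 1), {"CA": 800, "NV": 50})
print(deadlines, media_states)
```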

Preparing Your Organization for AI in Incident Response

Before implementing AI, healthcare organizations need to lay a solid groundwork. Start by forming cross-functional teams that include cybersecurity experts, data scientists, clinical operations staff, and compliance officers. These teams should use established guidelines, such as the NIST AI Risk Management Framework or HSCC playbooks, to create clear rules for how AI will be used in threat detection and response.

"Benchmarking against industry standards helps us advocate for the right resources and ensures we are leading where it matters."

High-quality data is essential for AI to function effectively. Organizations must ensure their data pipelines are clean and well-structured for training AI models. It's also wise to assess cybersecurity maturity first and start small. Pilot AI in lower-risk use cases before rolling it out widely, for example anomaly detection that flags activity for human review rather than acting on it. Training staff through simulations can boost their confidence in overseeing AI systems. Tools like Censinet RiskOps™ can assist in this preparation in three ways: streamlining third-party risk assessments, identifying vulnerabilities in supply chains and clinical applications, and supporting HIPAA compliance. Up next, we'll explore how AI is redefining specific roles within incident response teams.
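As an example of what a clean pipeline means in practice, this sketch rejects malformed and duplicate log events before they are used for training. The required fields and the `clean_events` function are hypothetical. A real pipeline would validate against the schemas of its own log sources, such as the SIEM, the EHR audit trail, and EDR tools. It would usually also record why each event was rejected.

```python
from datetime import datetime

# Hypothetical log schema; real sources will differ.
REQUIRED_FIELDS = ("timestamp", "user_id", "source", "action")

def clean_events(raw_events):
    """Drop malformed or duplicate events; return (clean_events, rejected_count)."""
    seen = set()
    clean, rejected = [], 0
    for event in raw_events:
        if any(not event.get(name) for name in REQUIRED_FIELDS):
            rejected += 1  # missing or empty required field
            continue
        try:
            ts = datetime.fromisoformat(event["timestamp"])
        except (TypeError, ValueError):
            rejected += 1  # unparseable timestamp
            continue
        key = (ts, event["user_id"], event["source"], event["action"])
        if key in seen:
            rejected += 1  # exact duplicate, e.g. forwarded by two collectors
            continue
        seen.add(key)
        clean.append({**event, "timestamp": ts})
    clean.sort(key=lambda e: e["timestamp"])  # chronological order for training
    return clean, rejected


raw = [
    {"timestamp": "2024-05-01T10:00:00", "user_id": "u1", "source": "ehr", "action": "view"},
    {"timestamp": "2024-05-01T10:00:00", "user_id": "u1", "source": "ehr", "action": "view"},
    {"timestamp": "not-a-date", "user_id": "u2", "source": "ehr", "action": "view"},
    {"timestamp": "2024-05-01T09:00:00", "user_id": "u3", "source": "vpn", "action": ""},
    {"timestamp": "2024-05-01T08:00:00", "user_id": "u4", "source": "vpn", "action": "login"},
]
events, rejected = clean_events(raw)
print(len(events), "clean,", rejected, "rejected")
```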

How AI Changes Incident Response Team Roles

AI is transforming the way healthcare incident response teams work. By automating tedious, repetitive tasks, it allows teams to streamline their workflows and focus on more critical areas like strategy and decision-making.

Let’s take a closer look at how AI is reshaping key roles within these teams.

Incident Response Lead: Smarter Decision-Making with AI

For incident response leads, AI provides a clearer, faster understanding of complex situations during major incidents. Instead of manually piecing together data from various sources, AI consolidates everything into a single, cohesive view. This helps prioritize actions like containment and recovery much more efficiently. While AI doesn’t replace human judgment, it complements it by offering insights based on past incidents, helping leaders make informed decisions quickly.
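Here is a simplified sketch of that consolidation step. Alerts from several tools are grouped by affected asset, then assets are ranked so the lead sees life-critical systems first. It uses plain rule-based grouping as a stand-in for the correlation an AI platform would perform. The criticality tiers, field names, and ranking order are assumptions chosen for illustration.

```python
from collections import defaultdict

# Hypothetical criticality tiers; a real program would pull these from an asset inventory.
CRITICALITY = {"life_critical": 3, "clinical": 2, "business": 1}

def build_incident_view(alerts, asset_tiers):
    """Group alerts from many tools by asset and rank assets for the IR lead.

    alerts:      dicts with "asset", "source", "severity" (1-10), and "time"
    asset_tiers: dict of asset -> key in CRITICALITY (unknown assets = "business")
    """
    by_asset = defaultdict(list)
    for alert in alerts:
        by_asset[alert["asset"]].append(alert)

    view = []
    for asset, items in by_asset.items():
        view.append({
            "asset": asset,
            "tier": asset_tiers.get(asset, "business"),
            "sources": sorted({a["source"] for a in items}),
            "max_severity": max(a["severity"] for a in items),
            "timeline": sorted(items, key=lambda a: a["time"]),
        })
    # Rank by clinical tier, then worst severity, then how many tools corroborate it.
    view.sort(key=lambda v: (CRITICALITY[v["tier"]], v["max_severity"], len(v["sources"])),
              reverse=True)
    return view


alerts = [
    {"asset": "infusion-pump-12", "source": "network", "severity": 6, "time": 3},
    {"asset": "ehr-db-01", "source": "edr", "severity": 9, "time": 1},
    {"asset": "ehr-db-01", "source": "siem", "severity": 7, "time": 2},
    {"asset": "hr-laptop-7", "source": "edr", "severity": 8, "time": 4},
]
tiers = {"infusion-pump-12": "life_critical", "ehr-db-01": "clinical"}
for entry in build_incident_view(alerts, tiers):
    print(entry["asset"], entry["tier"], entry["sources"])
```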

SOC Analysts: From Reactive to Proactive

SOC analysts are seeing a major shift in their day-to-day responsibilities. Instead of spending hours manually reviewing alerts, they now verify AI-generated insights. AI takes on the heavy lifting - handling tasks like threat detection, alert triage, and anomaly spotting - so analysts can focus on investigating the most critical incidents. This evolution also means analysts need to develop new skills, such as fine-tuning AI models and analyzing behavioral patterns, to ensure the system remains accurate and effective. As Tapan Mehta points out:

"AI is really, really good at scaling up a solution to these billions of IoT devices, which is very hard for a human being to do"

.
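One way to picture the analyst's new verification role is a queue. AI-scored alerts above a threshold go to a human, and the analyst's verdicts are tracked to measure how often the model is right. This is a hypothetical sketch, not a description of any specific SOC platform. The `TriageQueue` class, the 0.8 threshold, and the precision metric are all illustrative choices.

```python
from dataclasses import dataclass, field

# Hypothetical sketch of a human-in-the-loop verification queue.
@dataclass
class TriageQueue:
    review_threshold: float = 0.8
    pending: list = field(default_factory=list)   # (alert_id, ai_score) awaiting review
    verdicts: list = field(default_factory=list)  # (ai_score, analyst_confirmed)

    def submit(self, alert_id, ai_score):
        # High-scoring AI findings go to an analyst for verification, not auto-closure.
        if ai_score >= self.review_threshold:
            self.pending.append((alert_id, ai_score))
            return "analyst_review"
        return "logged"  # kept for threat hunting and later model retraining

    def record_verdict(self, alert_id, confirmed):
        for i, (pending_id, score) in enumerate(self.pending):
            if pending_id == alert_id:
                self.pending.pop(i)
                self.verdicts.append((score, confirmed))
                return
        raise KeyError(alert_id)

    def precision(self):
        """Share of escalated alerts that analysts confirmed as real threats."""
        if not self.verdicts:
            return None
        return sum(1 for _, ok in self.verdicts if ok) / len(self.verdicts)


queue = TriageQueue()
queue.submit("a1", 0.95)
queue.submit("a2", 0.40)
queue.record_verdict("a1", True)
print(queue.precision())
```

A falling precision score is one signal that the model needs retuning. That is the kind of accuracy oversight analysts take on as their role shifts.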

Threat Intelligence Teams: Faster, Smarter Insights

Threat intelligence teams are leveraging AI to process massive amounts of data and detect patterns unique to healthcare threats. AI helps turn this flood of information into actionable insights [1][2]. It can identify attacks targeting protected health information (PHI) and analyze unstructured data from sources like security bulletins and dark web discussions. Tools like Censinet RiskOps™ enhance this process by offering continuous risk assessments for third-party vendors and clinical applications. Together, these help turn threat intelligence into proactive measures, such as addressing supply chain vulnerabilities or mitigating risks tied to medical devices and vendors.
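Turning unstructured text into actionable intelligence usually starts with extracting indicators of compromise. The sketch below uses regular expressions as a simple stand-in for the NLP models such tools may apply. The pattern set and the `extract_indicators` name are assumptions; the patterns cover CVE IDs, IPv4 addresses, and SHA-256 hashes. Real extractors also handle domains, URLs, and defanged indicators such as `203.0.113[.]45`.

```python
import re

# Hypothetical minimal pattern set; production extractors cover many more indicator types.
OCTET = r"(?:25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)"
PATTERNS = {
    "cve": re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE),
    "ipv4": re.compile(rf"\b(?:{OCTET}\.){{3}}{OCTET}\b"),
    "sha256": re.compile(r"\b[a-fA-F0-9]{64}\b"),
}

def extract_indicators(text):
    """Return sorted, de-duplicated CVE IDs, IPv4 addresses, and SHA-256 hashes."""
    found = {}
    for kind, pattern in PATTERNS.items():
        # Normalize case so the same indicator is not counted twice.
        matches = {m.upper() if kind == "cve" else m.lower() for m in pattern.findall(text)}
        found[kind] = sorted(matches)
    return found


bulletin = "Actors exploited cve-2024-0001 from 203.0.113.45 and 203.0.113.45 again."
print(extract_indicators(bulletin))
```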

AI Applications Throughout the Incident Response Lifecycle

AI can support every phase of healthcare incident response and make each step faster and more precise. The phases run from preparation and early detection through containment, recovery, and post-incident analysis.

Preparation and Detection

Before an incident even happens, AI helps identify vulnerabilities by analyzing historical data and current trends. This means potential risks across electronic health record (EHR) systems, medical devices, and networks can be flagged early, allowing for proactive risk assessments.

AI also enhances tabletop exercises by creating realistic attack scenarios based on live threat intelligence. Instead of generic drills, it can simulate how specific threats - like ransomware - might disrupt clinical workflows. This level of detail reveals gaps in preparedness without compromising actual patient care [1][9].

When it comes to early detection, AI continuously scans massive datasets across healthcare networks. Tools like Censinet RiskOps™ provide ongoing assessments for third-party vendors, clinical applications, and medical devices - areas often overlooked in complex hospital systems [2]. AI can map out intricate networks, track third-party connections, and pinpoint risks that might otherwise go unnoticed, giving security teams a clearer picture of their vulnerabilities.
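Mapping third-party connections can be modeled as a graph problem. This sketch runs a breadth-first search from a compromised vendor and lists every system reachable within a few hops. That gives one rough estimate of blast radius. The connection map, node names, and hop limit are hypothetical. In practice the graph would be built from asset inventories, network flows, and vendor access records.

```python
from collections import deque

# Hypothetical sketch: blast-radius estimate over a vendor/asset connection map.
def blast_radius(connections, start, max_hops=3):
    """Breadth-first search from `start`.

    connections: dict of node -> nodes it can reach (VPN links, API
                 integrations, shared credentials, and so on)
    Returns {node: hop_count} for every node reachable within max_hops.
    """
    reached = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if reached[node] == max_hops:
            continue  # do not expand past the hop limit
        for neighbor in connections.get(node, []):
            if neighbor not in reached:
                reached[neighbor] = reached[node] + 1
                queue.append(neighbor)
    del reached[start]
    return reached


connections = {
    "billing-vendor": ["vendor-vpn"],
    "vendor-vpn": ["claims-server", "file-share"],
    "claims-server": ["ehr-db"],
    "ehr-db": ["pacs"],
}
print(blast_radius(connections, "billing-vendor"))
```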

With these insights, AI sets the stage for swift containment and recovery when threats arise.

Containment and Recovery

Once a threat is identified, speed becomes critical. During containment, AI can automatically quarantine compromised systems, isolate affected EHRs or devices, disable suspicious accounts, and segment networks to stop threats from spreading. These actions help minimize disruptions to clinical operations [1][9].
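Automated containment in a hospital needs a clinical safety check. The hypothetical planner below disables compromised accounts and isolates ordinary endpoints automatically. Life-critical medical devices are routed to human review instead of being isolated. The action names, device categories, and `plan_containment` function are illustrative assumptions, not the API of any SOAR product.

```python
# Hypothetical containment planner; action names and categories are illustrative only.
LIFE_CRITICAL = {"infusion_pump", "ventilator", "patient_monitor"}

def plan_containment(findings):
    """Split findings into automated actions and human escalations.

    findings: dicts with "kind" ("account" or "device"), "id",
              and, for devices, a "category".
    """
    automated, escalate = [], []
    for f in findings:
        if f["kind"] == "account":
            automated.append(("disable_account", f["id"]))
        elif f.get("category") in LIFE_CRITICAL:
            # Never cut off a device keeping a patient safe without clinical sign-off.
            escalate.append(("clinical_review_before_isolation", f["id"]))
        else:
            automated.append(("isolate_network_segment", f["id"]))
    return automated, escalate


auto, esc = plan_containment([
    {"kind": "account", "id": "svc-backup"},
    {"kind": "device", "id": "pump-07", "category": "infusion_pump"},
    {"kind": "device", "id": "ws-114", "category": "workstation"},
])
print(auto, esc)
```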

For recovery, AI prioritizes which systems and devices to restore first, focusing on those most critical to patient care. It also automates vulnerability management, directing patching efforts to the most pressing issues, such as actively exploited vulnerabilities in critical systems [1]. The Healthcare and Public Health Sector Coordinating Council (HSCC) is also developing AI-specific playbooks for rapid containment and recovery [3]. These include guidance on secure backups of both IT systems and AI models.
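Restoration order has to respect two things: dependencies and clinical priority. An EHR, for instance, cannot come back before the directory service it authenticates against.

Here is a minimal sketch, assuming each system has a hypothetical criticality score and a list of prerequisites. It uses Kahn's topological sort with a priority queue. Among systems whose prerequisites are already restored, the most critical goes next.

```python
import heapq

# Hypothetical sketch: dependency-aware, criticality-first restoration order.
def restoration_order(criticality, depends_on):
    """criticality: system -> score (higher = more important to patient care).
    depends_on:  system -> systems that must be restored first.
    Every system, including prerequisites, must appear in `criticality`.
    """
    remaining = {s: set(depends_on.get(s, [])) for s in criticality}
    dependents = {s: [] for s in criticality}
    for system, deps in remaining.items():
        for dep in deps:
            dependents[dep].append(system)

    # heapq is a min-heap, so scores are negated; names break ties deterministically.
    ready = [(-criticality[s], s) for s, deps in remaining.items() if not deps]
    heapq.heapify(ready)
    order = []
    while ready:
        _, system = heapq.heappop(ready)
        order.append(system)
        for child in dependents[system]:
            remaining[child].discard(system)
            if not remaining[child]:
                heapq.heappush(ready, (-criticality[child], child))
    if len(order) != len(criticality):
        raise ValueError("circular dependency between systems")
    return order


criticality = {"active_directory": 5, "ehr": 10, "pharmacy": 8, "billing": 2, "pacs": 7}
depends_on = {"ehr": ["active_directory"], "pharmacy": ["ehr"],
              "pacs": ["active_directory"], "billing": ["ehr"]}
print(restoration_order(criticality, depends_on))
```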

Once containment and recovery are complete, AI shifts to analyzing the incident to strengthen future defenses.

Post-Incident Review and Continuous Improvement

After an incident, AI processes logs to produce detailed reports. These reports pull together timelines, attack vectors, and impacts - pinpointing critical issues such as protected health information (PHI) exposure. By learning from each event, AI improves detection models, ensuring response strategies evolve alongside emerging threats [1][4][5].
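A post-incident report is most useful for continuous improvement when it includes timing metrics that can be compared across incidents. This sketch computes time to detect, contain, and recover from four timestamps. The field names are hypothetical. Real reports would derive these timestamps from the reconstructed incident timeline.

```python
from datetime import datetime

# Hypothetical sketch: comparable timing metrics for a post-incident report.
def incident_metrics(events):
    """events: ISO-8601 timestamps keyed by "first_malicious_activity",
    "detected", "contained", and "recovered". Returns durations in hours.
    """
    t = {name: datetime.fromisoformat(ts) for name, ts in events.items()}

    def hours(start, end):
        return round((t[end] - t[start]).total_seconds() / 3600, 1)

    return {
        "time_to_detect_h": hours("first_malicious_activity", "detected"),
        "time_to_contain_h": hours("detected", "contained"),
        "time_to_recover_h": hours("contained", "recovered"),
        "total_h": hours("first_malicious_activity", "recovered"),
    }


print(incident_metrics({
    "first_malicious_activity": "2024-06-01T02:00:00",
    "detected": "2024-06-01T08:30:00",
    "contained": "2024-06-01T11:00:00",
    "recovered": "2024-06-02T11:00:00",
}))
```

Tracking these numbers across incidents shows whether updated detection models are actually shortening response times.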

These findings feed directly into updated risk assessments and response plans, creating a continuous improvement cycle. As cybersecurity experts note, the use of AI in detection and response has become essential for security operations centers (SOCs). It’s a fast-paced "arms race" where both attackers and defenders are leveraging AI to outmaneuver each other [4].


Managing AI Risks in Incident Response Teams

AI has undeniably transformed incident response, offering powerful tools to enhance cybersecurity efforts. However, it also introduces new risks - like model biases and adversarial attacks - that require careful management within existing cybersecurity frameworks. Addressing these challenges calls for robust governance structures and the seamless integration of AI risk management into broader cybersecurity programs.

AI Governance Structures and Risk Categories

Healthcare incident response teams face unique challenges when it comes to AI. To address these, organizations should establish AI oversight committees tasked with managing risks such as model biases, adversarial attacks, data leaks, and algorithm manipulation [3][10]. These committees can maintain detailed risk registers to track and mitigate these threats.
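A risk register can be as simple as a structured list with a likelihood-times-impact score. The sketch below assumes a 5x5 scale and a review threshold of 15. Both are hypothetical conventions that a committee would set for itself. The categories mirror the AI risks named in the paragraph above.

```python
from dataclasses import dataclass

# Hypothetical AI risk register entry on an assumed 5x5 likelihood/impact scale.
@dataclass
class AIRisk:
    risk_id: str
    category: str      # e.g. "model_bias", "adversarial_attack", "data_leak"
    description: str
    likelihood: int    # 1 (rare) .. 5 (almost certain)
    impact: int        # 1 (minor) .. 5 (severe patient-safety or PHI impact)
    owner: str

    def __post_init__(self):
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self):
        return self.likelihood * self.impact

def committee_agenda(register, threshold=15):
    """Risks at or above the threshold, highest score first."""
    return sorted((r for r in register if r.score >= threshold),
                  key=lambda r: r.score, reverse=True)


register = [
    AIRisk("AI-001", "adversarial_attack", "Evasion of anomaly model", 3, 5, "SOC lead"),
    AIRisk("AI-002", "model_bias", "Under-alerting on night-shift access", 4, 3, "Data science"),
    AIRisk("AI-003", "data_leak", "PHI pasted into chatbot prompts", 4, 5, "Privacy office"),
]
for risk in committee_agenda(register):
    print(risk.risk_id, risk.score, risk.owner)
```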

The Healthcare and Public Health Sector Coordinating Council (HSCC) is taking proactive steps by developing AI guidance slated for 2026 that includes AI-specific playbooks. These playbooks focus on critical areas like incident recovery, threat intelligence, and cybersecurity operations, and they extend beyond large language models [3]. A key feature is resilience testing, which emphasizes collaboration between cybersecurity and data science teams. This is especially vital as threat actors increasingly use AI, creating what experts describe as an "arms race" in healthcare security operations [4].

Governance policies should prioritize pre-deployment bias testing, continuous monitoring for model drift, and adversarial training. Experts from Palo Alto Networks highlight the importance of adaptive security policies, especially for vulnerable healthcare assets such as Internet of Medical Things (IoMT) devices [1]. Two further practices help maintain effective oversight. The first is quarterly audits by cross-functional review boards. The second is feeding AI risk metrics into SIEM/SOAR platforms for automated alerting.
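Continuous drift monitoring is often implemented with the population stability index (PSI). PSI compares the distribution of a model's recent scores with a baseline:

PSI = Σ (actual% - expected%) × ln(actual% / expected%), summed over score bins.

The sketch below makes three assumptions:

- Scores fall between 0 and 1.
- Both samples are non-empty.
- The widely cited 0.1 and 0.25 cut-offs apply; these are rules of thumb rather than standards.

The function names are hypothetical.

```python
import math

# Hypothetical sketch: PSI-based drift check for model scores in [0, 1].
def population_stability_index(expected, actual, bins=10, eps=1e-4):
    """PSI between a baseline sample and a recent sample (both non-empty)."""
    def proportions(scores):
        counts = [0] * bins
        for s in scores:
            idx = min(int(s * bins), bins - 1)  # a score of exactly 1.0 goes in the last bin
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(scores), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def drift_status(psi):
    # Common rule-of-thumb cut-offs; tune them for your own models.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "investigate"
    return "retrain_or_rollback"


baseline = [i / 100 for i in range(100)]
recent = [0.95] * 100  # scores have collapsed into one bin
psi = population_stability_index(baseline, recent)
print(round(psi, 2), drift_status(psi))
```

A drift status like this is the kind of AI risk metric that could be forwarded to a SIEM/SOAR platform for automated alerting.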

Integrating AI Risk into Cybersecurity Programs

To address AI risks effectively, healthcare organizations must weave them into existing cybersecurity and risk management workflows. This involves expanding vendor risk assessments to include third-party AI tools, adding AI-specific questions to security questionnaires, and tracking AI-related vulnerabilities alongside traditional cyber risks.

Organizations can leverage their current Governance, Risk, and Compliance (GRC) frameworks by incorporating AI risk categories into existing registers, compliance checklists, and audit processes. When evaluating AI vendors, it's critical to assess their handling of model training data, their bias testing protocols, and the security measures protecting their algorithms. These steps are particularly relevant given real-world incidents where generative AI has led to data leaks [10].

IBM research shows that AI can help organizations detect and contain breaches 98 days faster. Even so, experts at Red Canary stress the importance of creating blueprints for responsible AI integration to avoid over-reliance on automation [1][5]. The key lies in balancing AI's speed and efficiency with human oversight, ensuring that automation enhances - but does not replace - governance controls. Platforms like Censinet RiskOps™ provide a centralized solution for managing these complexities.

Using Censinet RiskOps™ for AI Risk Management

For healthcare organizations looking to integrate AI into incident response, platforms like Censinet RiskOps™ offer a comprehensive solution. This platform serves as a centralized hub for managing AI risks, enabling oversight committees to maintain risk registers, track vulnerabilities, and enforce policies through automated workflows. Its collaborative dashboards provide real-time visibility into AI risks across patient data, medical devices, and third-party vendors.

Censinet RiskOps™ also streamlines third-party AI vendor assessments, benchmarking organizations against industry peers. The platform automates key aspects of AI risk management, helping healthcare delivery organizations track compliance, score risks in real time, and maintain secure oversight of AI systems embedded in clinical workflows and incident response processes.

Conclusion

AI has reshaped how healthcare organizations detect, respond to, and recover from cybersecurity incidents. By automating alert correlation and cutting threat detection times by up to 90%, while also reducing manual workloads by 80% [7], AI allows incident response teams to act more swiftly and focus on safeguarding patient care and clinical operations. But speed isn’t the only benefit - faster containment directly translates to fewer canceled procedures, reduced patient diversions, and lower clinical risks [8]. Considering that healthcare breaches cost an average of $10.9 million, AI-powered response tools can significantly minimize both financial losses and reputational harm for U.S. providers [8].

That said, speed alone isn’t enough. Striking the right balance between automation and human oversight is essential. As cyber attackers increasingly use AI to their advantage, healthcare organizations find themselves in a digital arms race requiring advanced defenses and strong governance. Clear policies, coordinated efforts across teams, and ongoing learning are vital to making sure automation supports decision-making without creating new vulnerabilities. AI must be treated as both a valuable tool and a potential risk, with its management integrated into broader cybersecurity strategies. This balanced approach is critical for developing solutions that incorporate AI risk management into daily operations.

Censinet RiskOps™ helps healthcare organizations implement a well-rounded AI risk strategy. By enabling centralized, real-time AI risk management, the platform simplifies aligning AI-driven incident responses with an organization’s risk tolerance and regulatory requirements. Its collaborative features ensure that critical findings reach the right people, offering real-time visibility into patient data, medical devices, and clinical applications - all crucial for effective AI governance.

Ultimately, the evolving cybersecurity landscape demands that AI-driven, well-governed incident response becomes a cornerstone of resilient healthcare, alongside clinical quality and patient safety. By investing in specialized AI tools, strong governance frameworks, and targeted training, healthcare organizations can stay ahead of emerging threats while maintaining the human expertise needed for sound judgment. The future will favor those who can combine AI’s speed and accuracy with the irreplaceable insight of skilled professionals.

FAQs

How does AI enhance threat detection in healthcare incident response?

AI plays a crucial role in improving threat detection within healthcare incident response by processing massive amounts of data in real time. It spots unusual patterns or potential risks, enabling teams to detect issues earlier, act quickly, and address problems before they grow into larger crises.

By automating intricate analyses, AI not only boosts precision but also lightens the workload for human teams. This allows healthcare professionals to concentrate on critical decision-making. Such a proactive strategy strengthens the protection of sensitive patient information, clinical systems, and other essential healthcare resources.

What challenges come with using AI in healthcare cybersecurity?

Integrating AI into healthcare cybersecurity comes with its fair share of hurdles. First and foremost, accuracy and reliability are non-negotiable. These tools handle highly sensitive patient information and must detect threats reliably, keeping both missed threats and false alarms to a minimum. On top of that, they need to comply with strict healthcare regulations like HIPAA, which sets rigorous standards for data privacy and security - no small feat for any system.

Another challenge lies in ensuring that AI tools fit smoothly into existing workflows, which are often complex in healthcare environments. There's also the issue of potential biases in AI algorithms. Left unchecked, these biases could lead to unfair or incorrect results, something that healthcare systems simply cannot afford. And let’s not forget the monumental task of managing the diverse and complex data healthcare generates. From electronic health records to information from medical devices, AI systems must be built to process and analyze massive volumes of data with efficiency and precision.

How can healthcare organizations manage AI risks effectively?

Healthcare organizations can tackle AI-related risks by leveraging specialized platforms tailored to the industry's specific needs. For example, AI-driven risk management tools can simplify critical tasks like monitoring, evaluating, and addressing cybersecurity threats, all while ensuring compliance with healthcare regulations and standards.

Beyond technology, it's crucial for organizations to implement clear governance policies, schedule regular audits, and rely on industry benchmarks to uphold accountability. These proactive measures help create a framework where AI technologies are applied safely and responsibly within healthcare settings.



Key Points:

How does AI enhance healthcare incident response speed and accuracy?

- Cuts detection times by up to 90% through automated pattern analysis

- Identifies anomalies across EHRs, IoMT, and networks at scale

- Automates alert correlation to reduce noise and redundant investigation

- Improves containment decisions with prioritized risk insights

- Reduces manual workloads by 80%, freeing analysts for strategy

What unique challenges does healthcare face in incident response?

- Ransomware can halt clinical operations, delaying care

- Medical devices (IoMT/OT) cannot be easily shut down without risking safety

- HIPAA and HITECH obligations require strict PHI protection

- Large vendor supply chains complicate containment and notification

- Legacy systems increase vulnerability and limit response options

How does AI reshape roles within incident response teams?

- Incident response leads gain AI‑generated situational clarity for quicker prioritization

- SOC analysts shift from manual triage to validating AI findings

- Threat intelligence teams use AI to process unstructured and high‑volume data

- Data scientists and cybersecurity teams co‑govern models to ensure accuracy

- Clinical and compliance leaders receive clearer impact analysis for decision‑making

How does AI support the full NIST incident response lifecycle?

- Preparation: Simulates realistic attack scenarios and identifies vulnerabilities

- Detection: Monitors massive datasets for real‑time anomalies

- Containment: Automatically isolates compromised accounts, devices, or systems

- Recovery: Prioritizes system restoration based on clinical criticality

- Post‑incident review: Generates reports, timelines, and updated detection logic

What governance structures help manage AI risks?

- AI oversight committees to evaluate bias, drift, and model integrity

- Risk registers and playbooks tailored to AI threats and adversarial attacks

- Continuous audits to evaluate model performance against policy

- Pre‑deployment and ongoing bias testing for fair and accurate outputs

- Integration with SIEM/SOAR to trigger alerts for AI‑specific vulnerabilities

How can platforms like Censinet RiskOps™ support AI‑enabled IR programs?

- Centralizes AI risk registers for oversight committees

- Automates third‑party AI vendor assessments using healthcare‑specific frameworks

- Tracks model vulnerabilities and incidents across clinical systems

- Benchmarks AI readiness vs. peers for strategic planning

- Unifies cybersecurity, compliance, and clinical risk data for governance