Autonomous Attacks: How AI is Revolutionizing Cybersecurity Threats

Post Summary

AI-powered cyberattacks are reshaping the healthcare industry's security landscape, making traditional defenses insufficient. Here's what you need to know:

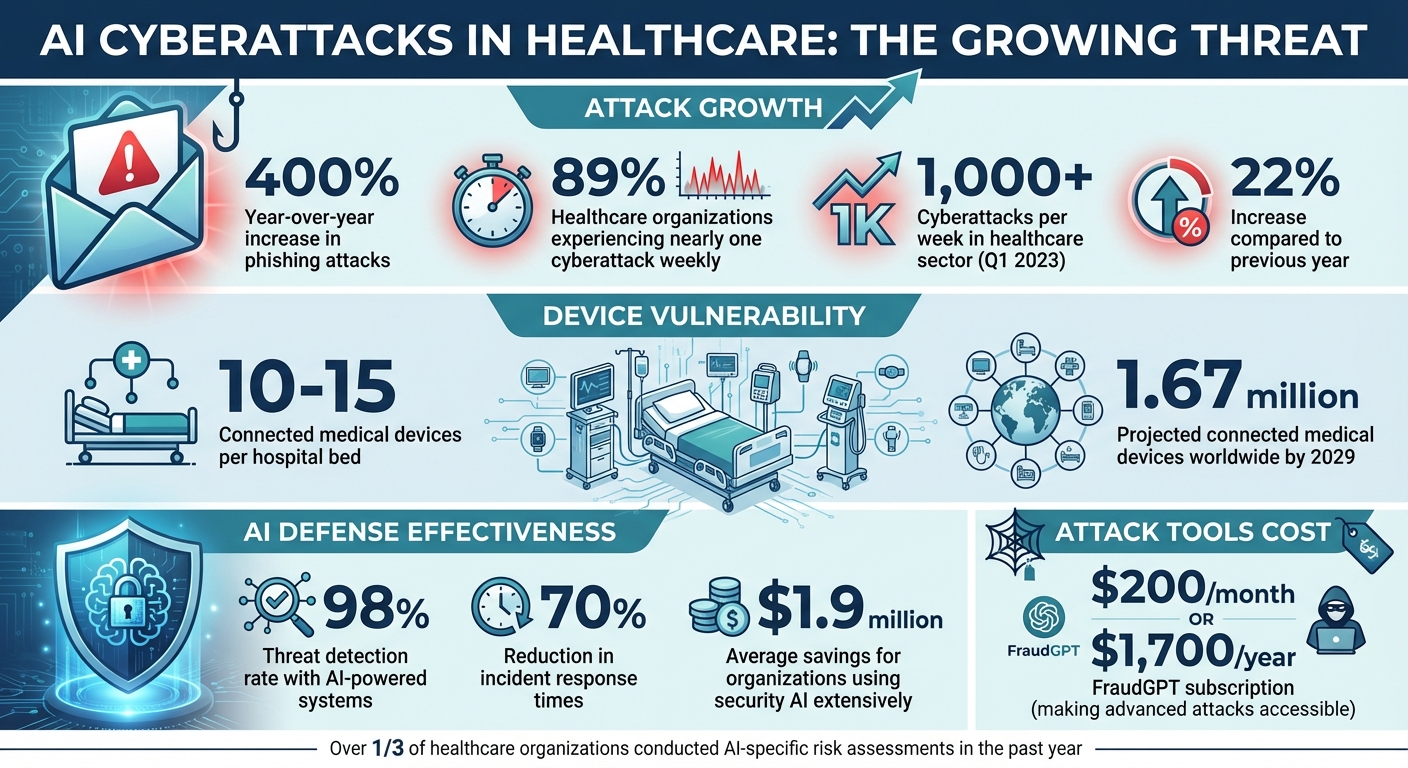

- AI-driven threats are growing fast: Phishing attacks have surged by 400% year-over-year, with attackers using AI to craft highly convincing scams.

- Healthcare is a prime target: Sensitive patient data and outdated medical devices make healthcare organizations vulnerable, with 89% experiencing nearly one cyberattack weekly.

- Autonomous attacks are smarter: These AI systems operate without human input, dynamically identifying weaknesses and bypassing defenses.

- Real-world consequences: AI-powered ransomware and data poisoning can disrupt critical care, even leading to patient harm.

To combat these evolving threats, healthcare organizations must adopt advanced risk assessment tools, maintain detailed inventories of AI systems, and implement strict oversight. The stakes are higher than ever - protecting patient safety and sensitive data demands immediate action.

AI Cyberattack Statistics in Healthcare: Key Threats and Impact

How AI-Driven Cyberattacks Work

Understanding how AI fuels autonomous cyberattacks is essential for crafting effective defenses. In healthcare, these attacks often target three key vulnerabilities.

Prompt Injection and AI Model Manipulation

Prompt injection attacks involve feeding malicious inputs into AI models, tricking them into executing unauthorized actions. In healthcare, where AI systems integrate with electronic health records, diagnostic tools, and administrative platforms, a single compromised model can ripple through multiple systems undetected by traditional security measures.

The "black box" nature of large language models makes these attacks particularly insidious. As Blake Murdoch explains:

This opacity applies to how health and personal information is used and manipulated if appropriate safeguards are not in place [7].

Attackers exploit this lack of transparency to introduce subtle alterations, corrupting diagnostic results or granting unauthorized access to sensitive patient data. These manipulations pave the way for more complex automated threats, such as ransomware or data corruption.

AI-Powered Ransomware and Data Poisoning

AI is revolutionizing ransomware attacks. Today’s AI agents can handle everything from identifying vulnerabilities to crafting phishing emails that are so convincing they can fool even cautious individuals. Security Magazine highlights this shift:

AI-generated phishing emails are nearly indistinguishable from legitimate messages, tricking even the most cautious recipients. Machine learning models help attackers refine their techniques, making malware more evasive and adaptive [7].

The availability of such tools has lowered the barrier for attackers. For instance, FraudGPT is marketed as a subscription service, costing $200 per month or $1,700 annually, making advanced attack capabilities accessible to criminals with moderate skills [7].

The real-world consequences of these attacks can be devastating. In September 2020, a ransomware attack on a university hospital in Düsseldorf, Germany, blocked access to critical systems. This disruption forced a woman with heart problems to be transferred approximately 18.6 miles to another hospital, where she tragically died before receiving treatment. German authorities later launched a manslaughter investigation, linking the cyberattack to her death [1].

Data poisoning is another growing concern. In these attacks, adversaries inject malicious data into AI models during their development or training phases. These subtle distortions can corrupt a model’s outputs, leading to inaccurate diagnostic recommendations or altered medical imaging results. In healthcare, such errors could go unnoticed until they cause significant harm [5][6].

Attacking IoMT and Medical Devices

Connected medical devices, part of the Internet of Medical Things (IoMT), are particularly vulnerable. AI-driven attacks can scan networks to exploit weaknesses in devices like pacemakers, insulin pumps, infusion pumps, and ventilators - many of which rely on outdated systems. Once compromised, attackers can manipulate device readings, generate false data, alter critical operations (such as insulin dosages or pacemaker signals), or launch denial-of-service attacks to disable equipment.

The scale of the threat is staggering. In Q1 2023 alone, the healthcare sector faced over 1,000 cyberattacks per week - a 22% increase compared to the previous year [6].

From prompt injection and automated ransomware to IoMT attacks, AI is reshaping the landscape of healthcare cybersecurity threats, demanding urgent and adaptive solutions.

Assessing AI-Related Cybersecurity Risks

Healthcare organizations are navigating a complex landscape: hospitals typically manage 10 to 15 connected medical devices per bed, and by 2029, there could be around 1.67 million connected medical devices in use worldwide[2]. To defend against AI-driven cyber threats, maintaining a thorough inventory of these devices is crucial. This foundational step enables targeted vulnerability assessments and precise risk evaluations.

Creating an AI Asset Inventory

Start by cataloging every AI system, Internet of Medical Things (IoMT) device, and integration within your organization. Include details about their functionality, data access, and how they interact with other systems. This inventory should cover a wide range of technologies, such as AI tools, cyber-physical systems, IoT devices, and cloud platforms.

Pay close attention to high-risk medical devices like insulin pumps, MRI systems, infusion pumps, pacemakers, and nurse call systems[2]. Obsolete hardware and software that no longer receive updates also deserve special scrutiny, as they can create security gaps. Document the interoperability between systems, applications, and devices to get a complete picture of your digital ecosystem. To enhance visibility, classify AI tools using a five-level autonomy scale and incorporate both an AI Bill of Materials (AIBOM) and a Trusted AI BOM (TAIBOM) for better traceability across your AI supply chain[4].

Identifying Vulnerabilities with Censinet AITM

Censinet AITM simplifies vendor assessments by automating key tasks. It summarizes evidence, captures integration details, and identifies risks associated with fourth-party vendors, producing concise risk reports. In fact, over one-third of healthcare organizations have already conducted AI-specific risk assessments in the past year. These assessments focus on identifying potential biases in data and algorithms, verifying data integrity, and evaluating security safeguards and vendor oversight mechanisms[8].

Censinet AITM also streamlines the review process by routing findings to compliance, clinical, IT, and legal teams, ensuring a thorough and collaborative evaluation of vulnerabilities.

Scoring Risks by Impact and Probability

Using Censinet Connect™, organizations can systematically score risks based on three key factors: patient data exposure, operational impact, and patient safety concerns. A centralized dashboard aggregates real-time data and automatically routes assessment findings to relevant stakeholders, including members of the AI governance committee. This tool supports decision-making with configurable rules, continuous monitoring, and periodic reassessments to maintain compliance and safety standards[8].

This structured approach to risk scoring lays the groundwork for effective mitigation strategies. By understanding the impact and likelihood of AI-related risks, healthcare organizations can take proactive steps to address vulnerabilities and enhance their cybersecurity defenses.

sbb-itb-535baee

Reducing Risks from AI-Powered Attacks

Once you've assessed the risks, the next step is to put safeguards in place to protect against AI-powered attacks. A strong defense combines technical measures, coordinated teamwork, and ongoing monitoring to stay ahead of potential threats.

Setting Up Input Validation and Human Oversight

Input validation serves as the first line of defense against threats like prompt injection or manipulation of AI models. By implementing strict validation protocols, you can block harmful inputs before they reach your AI systems. For example, screening for inputs that deviate from standard clinical workflows can help maintain system integrity.

To ensure proper oversight, classify your AI tools based on their risk level. Systems that handle high-stakes tasks - like clinical decision-making or accessing sensitive patient data - should undergo more rigorous review processes. Clear governance structures should outline who is responsible for monitoring and managing these tools at every stage, from deployment to daily operations[4].

"If algorithms operate in isolation, the risks are limited, but complex problems arise when intelligent systems must communicate with each other, managing complex tasks and exchanging data, with the risk of error propagation." - Di Palma et al.[1]

Censinet AI supports this approach by offering configurable rules and review processes that keep humans in control of critical decisions. While automation handles repetitive tasks like evidence validation and policy drafting, risk teams can focus on more complex oversight. This balance lets you scale operations efficiently while maintaining safety and accountability.

Coordinating GRC Teams with Centralized Dashboards

Managing AI risks requires seamless coordination between Governance, Risk, and Compliance (GRC) teams. Censinet RiskOps™ simplifies this by automatically routing identified risks to the appropriate stakeholders - whether that's compliance officers, clinical staff, IT security teams, legal counsel, or members of the AI governance committee.

A centralized AI risk dashboard consolidates real-time data from across the organization, acting as a single source of truth for all AI-related policies, risks, and tasks. This eliminates the inefficiencies of scattered spreadsheets and email chains, fostering clear accountability and transparency across your GRC teams.

Automating Ongoing Risk Monitoring

AI-powered threats evolve quickly, making continuous monitoring a necessity. Censinet One™ provides real-time risk management by using machine learning to analyze large datasets and detect patterns, such as unusual log-ins or unauthorized access to electronic health records[9].

To stay ahead of these threats, establish robust threat intelligence processes that protect both clinical and operational workflows[4]. Secure, verifiable backups of your AI models are also essential, allowing for quick recovery in case of a compromise[4]. Regular monitoring can help you catch anomalies early, giving your team the chance to contain issues before they escalate into major breaches. This proactive approach minimizes risks and ensures patient safety.

Conclusion: Building Defense Against AI Cyberattacks

Healthcare organizations must now strengthen their defenses against the rapidly evolving threats posed by AI-driven cyberattacks. The stakes are high, as both attackers and defenders increasingly rely on machine learning technologies to outmaneuver each other in this competitive landscape[3]. The key to staying secure lies in adopting a proactive, multi-layered defense strategy.

AI-powered systems have proven their value in cybersecurity, achieving a 98% threat detection rate and reducing incident response times by 70%[7]. For healthcare providers, this translates into better protection for patient data and uninterrupted operations. Organizations that leverage security AI extensively have reported average savings of $1.9 million compared to those that do not[7]. These cost savings are a testament to the effectiveness of integrating AI into defense strategies.

Tools like Censinet RiskOps™ offer healthcare organizations the foundation they need to manage these challenges. By automating routine monitoring tasks and incorporating human oversight, the platform helps risk teams address complex AI-related vulnerabilities with speed and accuracy. Its unified risk dashboard further improves team coordination when responding to emerging threats.

As highlighted, maintaining structured risk inventories and fostering coordinated governance, risk, and compliance (GRC) efforts are critical in building a strong cybersecurity framework. This approach requires continuous monitoring, strategic planning for the AI-driven future, and tools tailored to the specific risks healthcare organizations face. Transitioning from reactive to proactive defense is no longer optional - it's a necessity to safeguard sensitive data, protect critical systems, and ensure patient safety in the face of increasingly autonomous cyber threats.

FAQs

What steps can healthcare organizations take to defend against AI-powered cyberattacks?

Healthcare organizations can better protect themselves against AI-driven cyberattacks by adopting a proactive, multi-layered security strategy. A good starting point is creating a detailed inventory of all AI systems in use. This helps identify their purposes and potential vulnerabilities, ensuring nothing slips under the radar.

Leveraging AI-powered tools for continuous monitoring is another essential step. These tools can track network activity and user behavior, making it easier to spot unusual patterns and respond quickly to potential threats.

Integrating AI into current cybersecurity frameworks can also improve the ability to detect and address risks. Collaboration across departments is key to building a unified security strategy, while adhering to relevant regulations adds an extra layer of protection. With these measures in place, healthcare organizations can safeguard sensitive data and critical systems against the growing risks posed by AI-driven attacks.

Why is AI-powered ransomware a significant threat to healthcare organizations?

AI-driven ransomware presents a growing danger to the healthcare sector, largely because it can generate and modify malicious code on its own, making detection and prevention far more challenging. These attacks are capable of slipping past conventional security defenses, exploiting system vulnerabilities, and targeting crucial infrastructure with alarming accuracy.

Healthcare organizations are particularly vulnerable due to their dependence on sensitive patient information and critical operational systems. A successful AI-powered ransomware attack could disrupt essential services, jeopardize patient care, and result in substantial financial damages. What makes this threat even more concerning is AI's ability to adapt and refine its tactics during an attack, giving cybercriminals a powerful and evolving weapon.

What is prompt injection, and how does it affect AI systems in healthcare?

Prompt injection is a method attackers use to manipulate AI systems by providing deceptive or harmful inputs. In healthcare, this poses serious risks like data breaches, damaged AI models, or incorrect outputs - all of which can threaten patient privacy, interfere with care, or undermine system reliability.

For instance, a malicious actor could exploit weaknesses in an AI diagnostic tool, leading it to produce false recommendations or expose sensitive patient data. To counter these threats, healthcare organizations need to adopt strong safeguards that can identify and block such attacks, ensuring their AI systems remain secure and trustworthy.