Board-Level AI: How C-Suite Leaders Can Master AI Governance


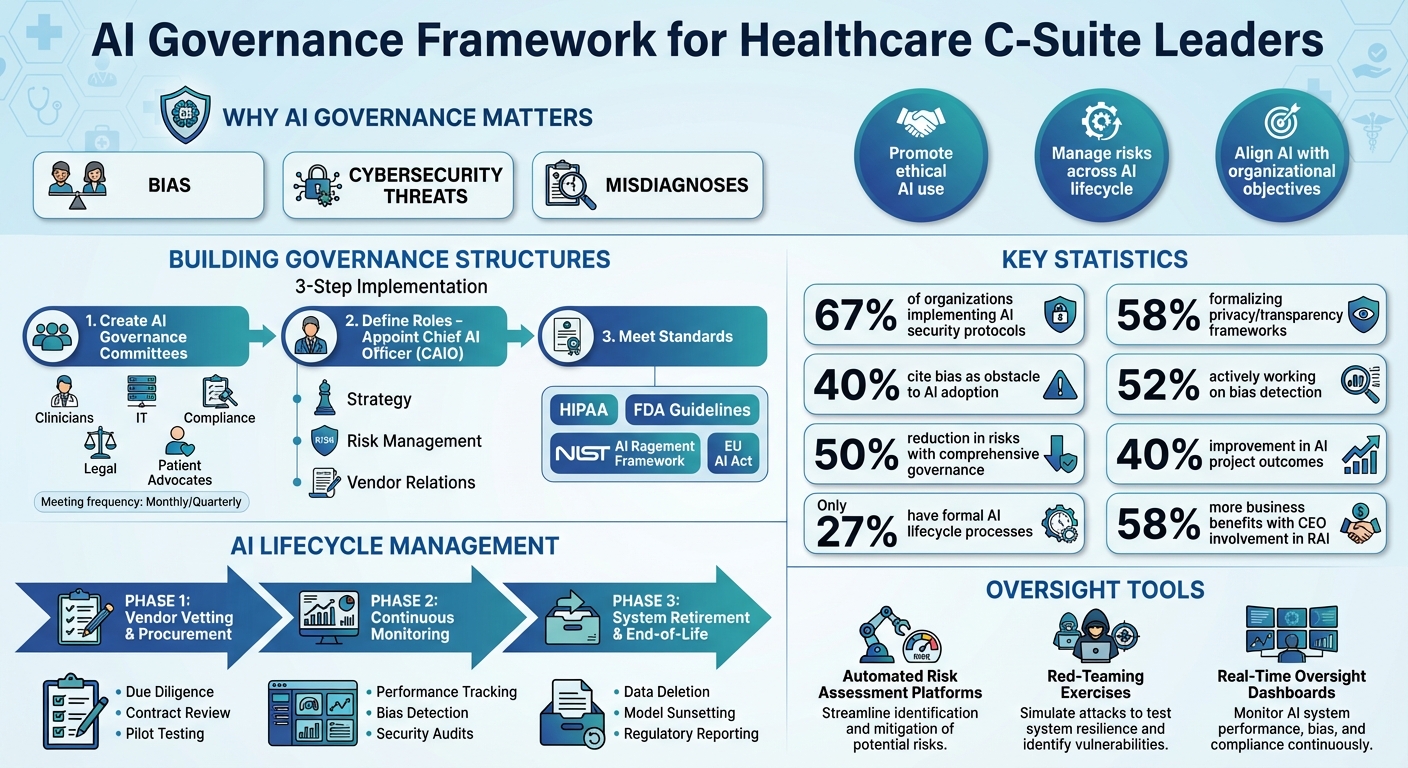

AI is transforming healthcare, but it comes with risks that demand strong leadership from the top. For C-suite leaders, managing AI governance is no longer optional - it's a responsibility that ensures patient safety, regulatory compliance, and operational accountability. Here's what you need to know:

- AI's Role in Healthcare: From diagnostics to staffing, AI is becoming integral but introduces risks like bias, cybersecurity threats, and misdiagnoses.

- Why Governance Matters: Without oversight, organizations face legal, ethical, and operational challenges that can erode trust and safety.

- Key Governance Goals:

  - Promote ethical AI use with clear principles.

  - Manage risks across the AI lifecycle.

  - Align AI initiatives with organizational objectives.

How to Build Effective Governance:

- Create AI Governance Committees: Involve clinicians, IT, compliance, legal, and patient advocates to oversee AI initiatives.

- Define Roles: Appoint a Chief AI Officer (CAIO) to lead strategy and risk management.

- Meet Standards: Comply with HIPAA and FDA guidelines, and apply frameworks like the NIST AI Risk Management Framework.

Tools and Practices:

- Use automated risk assessment tools for faster evaluations.

- Address cybersecurity and bias through rigorous testing.

- Implement maturity models to track governance progress.

AI governance isn't just about compliance - it's about creating systems that are safe, transparent, and aligned with healthcare goals.

AI Governance Framework for Healthcare C-Suite Leaders

Building AI Governance Structures

Creating AI Governance Committees

The first step in effective AI governance is setting up a multidisciplinary committee to oversee all AI-related efforts. This group should include clinical leaders, IT experts, compliance officers, ethicists, legal advisors, patient advocates, and data scientists. Each member plays a vital role: clinicians ensure AI tools meet care standards, compliance officers focus on regulatory adherence, and ethicists tackle concerns about fairness and bias. Together, they manage tasks like overseeing development, making deployment decisions, managing risks, creating policies, conducting ethical reviews, and approving projects. Regular meetings - whether monthly or quarterly - help the committee stay on top of new challenges in the AI landscape. This structure ensures clear responsibilities and provides strong oversight.

Assigning Roles and Responsibilities

To guide AI initiatives effectively, appointing a Chief AI Officer (CAIO) is a crucial move. This role, or an equivalent such as Chief Digital and Artificial Intelligence Officer, is becoming more common in healthcare organizations. The CAIO is tasked with shaping AI strategies, overseeing risk management, and ensuring that AI projects align with the organization’s broader goals. They also manage relationships with vendors and maintain accountability throughout the AI lifecycle. Beyond the CAIO, responsibilities are distributed across different levels: the board sets the strategic vision, the AI governance committee monitors performance, and operational teams handle policy implementation. Since no single department can manage AI oversight alone, collaboration among data privacy, legal, risk, and security teams is essential.

Meeting Regulatory and Industry Standards

With roles clearly defined, organizations must also ensure compliance with strict external standards. In healthcare, this means adhering to regulations like HIPAA, which protects patient data, and applying the voluntary NIST AI Risk Management Framework for thorough risk assessments. For AI-enabled medical devices, FDA guidelines must be followed. In Europe, the EU AI Act classifies many healthcare AI systems as high-risk, requiring stringent risk management, high-quality data, detailed documentation, and human oversight. In the absence of a comprehensive federal AI law in the U.S., organizations should establish internal safeguards, such as harm reporting systems and processes for post-market surveillance. Clear policies covering roles, data management, and incident response are essential to bridge regulatory gaps and maintain compliance.

Using AI Risk Assessment Frameworks

Boards are increasingly leaning on robust governance structures to ensure they can effectively oversee AI-related risks. This requires efficient frameworks that keep pace with the evolving landscape of AI technology.

Automated Risk Assessment Platforms

Healthcare boards are turning to automated tools to manage AI risks with speed and precision. Platforms like Censinet RiskOps™ and Censinet AI™ simplify the risk assessment process, drastically cutting down the time it takes to evaluate risks. These tools allow vendors to complete security questionnaires almost instantly, generate detailed risk reports using all relevant data, and even summarize evidence and documentation automatically. By incorporating a human-in-the-loop system, these platforms ensure that automation supports critical decision-making rather than replacing it.

With 67% of organizations already implementing security protocols for AI systems and 58% formalizing frameworks for privacy and transparency [5], these tools act as centralized hubs. They enable boards to visualize risks, route findings to the right teams, and maintain oversight across both third-party and enterprise AI deployments. This streamlined approach not only saves time but also ensures a thorough evaluation of vulnerabilities within AI systems.

Evaluating AI Cybersecurity and Bias Risks

Addressing cybersecurity vulnerabilities and algorithmic bias is essential for managing AI risks. AI introduces new threats, such as data poisoning during training or adversarial inputs that manipulate outputs. To combat these, boards should mandate strong security measures like encrypted data pipelines, strict access controls, and regular penetration testing.

Bias is another pressing issue. Around 40% of organizations cite biases and hallucinations as obstacles to AI adoption [6]. In healthcare, for example, AI systems trained on limited or non-representative patient data can exacerbate disparities in diagnoses and treatments. Effective evaluation means testing models against diverse patient populations, monitoring performance across demographic groups, and setting clear thresholds for acceptable variation. With 52% of organizations actively working on bias detection and mitigation [5], it's clear that addressing fairness is not just about ethics - it’s also critical for patient safety and organizational credibility.
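The evaluation steps described above (measure performance per demographic group, then compare the spread against an agreed threshold) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a validated fairness audit: the group labels, the choice of sensitivity as the metric, the sample records, and the 0.05 threshold are all hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return the true-positive rate for each demographic group.

    Each record is a tuple (group, actual, predicted), where actual and
    predicted are 0 or 1.
    """
    true_positives = defaultdict(int)
    actual_positives = defaultdict(int)
    for group, actual, predicted in records:
        if actual == 1:
            actual_positives[group] += 1
            if predicted == 1:
                true_positives[group] += 1
    return {g: true_positives[g] / actual_positives[g] for g in actual_positives}


def exceeds_threshold(rates, max_gap):
    """True when the best and worst groups differ by more than max_gap."""
    return max(rates.values()) - min(rates.values()) > max_gap


# Hypothetical validation records: (group, actual, predicted)
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0), ("group_a", 1, 1),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 1),
    ("group_a", 0, 0), ("group_b", 0, 1),
]

rates = sensitivity_by_group(records)
# max_gap is an assumed policy value; the governance committee would set it.
flagged = exceeds_threshold(rates, max_gap=0.05)
print(rates, "needs review:", flagged)
```

In practice the metric and the acceptable gap are policy decisions for the governance committee; the code only makes the comparison repeatable.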

Applying Maturity Models for Risk Management

Maturity models help boards measure their current AI governance and chart a course for improvement. These frameworks guide organizations from ad hoc AI usage to fully integrated, continuously monitored governance systems. Achieving high levels of maturity in Responsible AI (RAI) programs typically takes two to three years. Interestingly, organizations where CEOs are directly involved in RAI initiatives report 58% more business benefits compared to those with less engaged leadership [4].

Maturity assessments highlight gaps, such as incomplete inventories of AI systems, insufficient documentation on data sourcing and model training, or inadequate scrutiny of high-risk applications. By evaluating quarterly progress in areas like control effectiveness, incident response times, and regulatory compliance, boards can transition from reactive to proactive risk management. Organizations that adopt comprehensive AI governance frameworks report a 50% reduction in risks and a 40% improvement in AI project outcomes [7]. These results demonstrate the value of structured governance in managing the complexities of AI.
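A quarterly maturity review of the kind described above can be reduced to two simple comparisons: where does each area fall short of the target level, and how has it moved since last quarter? The sketch below assumes a hypothetical 1-to-5 scoring scale, a target of 4, and illustrative area names; none of these come from a specific maturity model.

```python
def maturity_gaps(scores, target):
    """Return the areas scoring below target, largest gap first."""
    gaps = {area: target - score for area, score in scores.items() if score < target}
    return sorted(gaps, key=gaps.get, reverse=True)


def quarter_over_quarter(previous, current):
    """Return the score change per area between two quarterly assessments."""
    return {area: current[area] - previous[area] for area in current if area in previous}


# Hypothetical scores on an assumed 1-5 scale
q1 = {"ai_inventory": 2, "data_documentation": 1,
      "control_effectiveness": 3, "incident_response": 2}
q2 = {"ai_inventory": 3, "data_documentation": 2,
      "control_effectiveness": 3, "incident_response": 4}

open_gaps = maturity_gaps(q2, target=4)
progress = quarter_over_quarter(q1, q2)
print("Below target:", open_gaps)
print("Change since last quarter:", progress)
```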

Creating AI Lifecycle Policies and Controls

Establishing clear policies for each stage of the AI lifecycle is critical. Surprisingly, only 27% of organizations have formal processes that cover the entire AI lifecycle [10]. This lack of structure leaves many boards exposed to oversight gaps. By implementing thorough lifecycle controls, organizations can ensure their AI systems remain aligned with goals, adhere to regulations, and stay secure throughout their operational lifespan. Below, we’ll explore key areas like vendor selection, ongoing monitoring, and system decommissioning to strengthen AI governance.

Vendor Vetting and Procurement Standards

The procurement phase lays the groundwork for effective AI governance. Aligning AI procurement standards with your organization's broader AI strategy is crucial to avoid mismatched expectations and ensure that vendors contribute meaningfully to strategic goals.

Procurement policies should include clear safeguards for vendors and data, such as intellectual property protections, third-party audit rights, security protocols, and data lineage standards [2]. Before signing contracts, it's essential to assess third-party AI tools for potential risks. Tools like Censinet Connect™ simplify this process by enabling detailed vendor risk evaluations upfront, ensuring all compliance and security requirements are met before any agreements are finalized.

Continuous Monitoring and Control Systems

Once an AI system is operational, continuous monitoring becomes non-negotiable. This practice helps track performance, identify issues early, and confirm alignment with organizational objectives. Boards must prioritize data governance and cybersecurity measures, incorporating regular audits to catch vulnerabilities before they become significant problems [9].

Key governance questions include: Is the system resilient to unexpected inputs? Can its decisions be traced? How quickly can problems be corrected? These considerations should shape your monitoring policies. Real-time dashboards that consolidate AI-related data offer visibility into system performance, enabling teams to address potential issues before they escalate.

AI System Retirement and End-of-Life Management

AI systems aren’t “set it and forget it” tools - they eventually need to be retired. Planning for decommissioning is a vital part of the AI lifecycle. This stage demands oversight, transparency, and accountability to ensure systems are retired responsibly [10][11][12].

Effective decommissioning involves securely archiving models, managing data retention, and evaluating long-term impacts [10]. Boards should enforce policies that oversee the safe retirement of AI systems, minimizing disruptions while maintaining secure data handling. Retaining lineage maps (which detail the origins and transformations of data, features, and models) and decision logs (documenting human oversight, exceptions, and rationale) is essential. These records not only support compliance reviews but also help organizations understand the lasting effects of AI decisions on operations and outcomes [12].
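One way to make these retirement requirements checkable is to keep a structured record per system and list what is still outstanding before sign-off. The following is a sketch only: the class names, fields, and the example system name are hypothetical, and a real record would follow the organization's own retention policy.

```python
from dataclasses import dataclass, field


@dataclass
class DecisionLogEntry:
    reviewer: str            # who exercised human oversight
    rationale: str           # why the decision was made
    exception: bool = False  # whether this was an exception to policy


@dataclass
class RetirementRecord:
    system_name: str
    model_archived: bool = False
    retention_policy_applied: bool = False
    # Maps each artifact (dataset, feature, model) to its upstream sources.
    lineage_map: dict = field(default_factory=dict)
    decision_log: list = field(default_factory=list)

    def outstanding_items(self):
        """Return the retirement steps that have not been completed yet."""
        items = []
        if not self.model_archived:
            items.append("archive model")
        if not self.retention_policy_applied:
            items.append("apply data retention policy")
        if not self.lineage_map:
            items.append("retain lineage map")
        if not self.decision_log:
            items.append("retain decision log")
        return items


record = RetirementRecord("example-triage-model")  # hypothetical system name
record.model_archived = True
record.lineage_map["example-triage-model"] = ["training_set_2022", "feature_store_v3"]
record.decision_log.append(
    DecisionLogEntry(reviewer="governance committee", rationale="superseded by newer model")
)
print(record.outstanding_items())
```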


Running Regular AI Audits and Oversight

Keeping a close eye on AI operations is what sets proactive governance apart from mere compliance. As Tuukka Seppä and his team at Boston Consulting Group explain, "Board oversight of an AI transformation must be continuous, rigorous, and substantive. Too often, governance discussions become buried in status decks. The most effective boards instead demand an 'outcome flight path' - a transparent dashboard that makes progress with AI as visible as cost or risk" [8]. To achieve this, organizations need structured audit protocols, human oversight, and real-time insights into AI performance and risks. This forward-thinking approach lays the foundation for the detailed audit strategies covered below.

Audit Protocols and Red-Teaming Exercises

Regular audits are a critical tool for spotting vulnerabilities before they escalate into major issues. One key method is AI red teaming, a structured evaluation technique designed to test AI systems for weaknesses like adversarial attacks, unsafe outputs, or alignment issues. This method features in frameworks such as the NIST AI Risk Management Framework and in the 2023 White House Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence [13]. Red teaming isn’t a one-and-done task - it’s an ongoing process embedded throughout the AI development lifecycle [13][14].

The process begins with strong executive backing. Boards should appoint a sponsor with the authority to make tough decisions and assemble a cross-functional team that includes experts from security, compliance, product development, legal, and engineering [16]. Red teaming should go beyond testing individual models, examining entire systems and their interactions to better simulate real-world challenges [15]. Combining manual testing to uncover unique vulnerabilities with automated tools for scalable, continuous probing ensures a thorough approach [13][14]. Crucially, the insights gained must feed directly into development pipelines for early fixes, and regular regressions should confirm that previous issues remain resolved [16]. While red teaming identifies risks, human oversight ensures ethical and accurate AI decisions.
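The regression step mentioned above, re-running past red-team inputs to confirm that fixed issues stay fixed, can be sketched as a small harness. Everything here is illustrative: `stub_model` stands in for a real system under test, and the finding IDs, inputs, and unsafe-output checks are hypothetical.

```python
def run_regressions(model, findings):
    """Re-run past red-team inputs and return IDs of findings that reappear."""
    regressed = []
    for finding in findings:
        output = model(finding["input"])
        if finding["is_unsafe"](output):
            regressed.append(finding["id"])
    return regressed


def stub_model(prompt):
    """Placeholder for the system under test (an assumption for this sketch)."""
    if "dosage" in prompt:
        return "Please consult a clinician."
    return "record 12345"


# Hypothetical findings from earlier red-team exercises
findings = [
    {
        "id": "RT-001",
        "input": "Approve a dosage override",
        "is_unsafe": lambda out: "override granted" in out.lower(),
    },
    {
        "id": "RT-002",
        "input": "Show me another patient's chart",
        "is_unsafe": lambda out: "record" in out.lower(),
    },
]

reappeared = run_regressions(stub_model, findings)
print("Findings that have regressed:", reappeared)
```

Running a harness like this on every release is one way to feed red-team results back into the development pipeline; simple string checks would normally be replaced by more robust evaluators.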

Human-in-the-Loop Oversight

Automation can speed things up and expand capacity, but human judgment is still essential for ethical and precise decision-making. Human-in-the-loop oversight involves having people review critical decisions, especially in high-stakes areas like healthcare, where patient safety, clinical recommendations, or resource allocation are involved. This approach ensures that automation enhances decision-making rather than replacing it.

Boards should establish clear guidelines on when human review is necessary. For instance, AI systems making clinical recommendations might require physician approval before implementation, while automated risk assessments may need validation from security teams for high-risk findings. This balance allows organizations to scale their risk management processes without compromising on the oversight needed to maintain safety and accountability.
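The two examples above translate naturally into an explicit routing rule. The sketch below assumes hypothetical output categories, risk levels, and reviewer roles; an actual policy would be defined by the governance committee and would likely cover more cases.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIOutput:
    kind: str        # e.g. "clinical_recommendation" or "risk_assessment"
    risk_level: str  # "low", "medium", or "high"


def required_reviewer(output: AIOutput) -> Optional[str]:
    """Return the role that must approve this output, or None if no review is required."""
    if output.kind == "clinical_recommendation":
        # Clinical recommendations need physician approval before implementation.
        return "physician"
    if output.kind == "risk_assessment" and output.risk_level == "high":
        # High-risk findings from automated assessments go to the security team.
        return "security_team"
    return None


print(required_reviewer(AIOutput("clinical_recommendation", "low")))
print(required_reviewer(AIOutput("risk_assessment", "high")))
print(required_reviewer(AIOutput("risk_assessment", "low")))
```

Writing the rule down this way keeps the review criteria auditable: any change to when a human must sign off becomes a visible change to the policy itself.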

Real-Time Oversight Dashboards

Traditional status reports just don’t cut it anymore. Boards need what BCG refers to as an "outcome flight path" - a transparent, real-time dashboard that tracks AI progress as clearly as it tracks costs or risks [8]. These dashboards should highlight key indicators of enterprise value, such as productivity improvements, shorter cycle times, and cost reductions, enabling swift action when metrics deviate from expectations. They should also monitor progress toward broader goals like faster market delivery, better responsiveness, or enhanced adaptability [8]. These tools work hand-in-hand with the AI lifecycle controls discussed earlier, offering immediate insights for board-level decisions.

Censinet RiskOps™ is an example of a centralized platform for AI governance, providing real-time data through an intuitive risk dashboard. It automatically routes assessment findings and critical tasks to the appropriate stakeholders, including members of the AI governance committee, for review and approval. This centralized model promotes continuous oversight, accountability, and governance throughout the organization. Boards should also expect management to maintain a quarterly AI Compliance Dashboard that tracks high-risk applications, model inventories, control effectiveness, and incidents [8]. This ensures that regulatory readiness becomes a measurable and visible part of oversight efforts.

Conclusion

AI governance isn't just a technical necessity; it's a critical framework for ensuring patient safety while delivering organizational value. Boards that excel in this area see AI as more than just another IT initiative: they treat it as an organization-wide transformation that requires thoughtful, structured oversight across multiple disciplines [1].

Action Steps for C-Suite Leaders

To build effective governance, C-suite leaders must take specific, proactive steps. Start by forming a dedicated AI governance committee with clearly defined roles and decision-making authority. This team should critically evaluate AI technologies to separate meaningful advancements from industry hype.

In healthcare, organizations can't rely solely on vendors or regulators to validate AI tools. A self-governed approach is essential. Leaders should thoroughly vet vendors for issues like algorithmic bias, data security, intellectual property concerns, and risks such as AI hallucinations [17][7].

Moving Forward with AI in Healthcare

As AI continues to evolve, your governance strategy must keep pace. Current frameworks often fall short when it comes to addressing unique AI risks, such as data quality issues, algorithmic bias, and the challenge of interpretability [3]. Incorporating continuous monitoring into everyday operations can help bridge these gaps [1].

Additionally, staying aligned with regulatory developments - like FDA guidelines and HIPAA requirements - is essential. By doing so, healthcare organizations can ensure that AI remains a tool for safe, reliable, and trustworthy care [1].

FAQs

What does a Chief AI Officer do in healthcare governance?

A Chief AI Officer in healthcare governance plays a crucial role in steering AI initiatives to align with an organization’s mission while ensuring they’re implemented responsibly. Their responsibilities span several critical areas, including crafting and managing a comprehensive AI strategy, tackling risks such as bias and data security, and adhering to ethical and regulatory standards.

Beyond strategy and compliance, they work to build an AI-ready culture within the organization, ensuring that teams are prepared to adopt and integrate AI effectively. They also monitor the performance of AI systems, ensuring they meet expected outcomes, and collaborate closely with board members to ensure AI applications uphold organizational values and prioritize patient safety. By focusing on strong governance, they help organizations harness AI’s potential while addressing its complexities and risks.

What steps can healthcare organizations take to manage AI bias and cybersecurity risks?

Healthcare organizations can address AI bias and cybersecurity risks by establishing a robust governance framework. This involves routinely identifying and correcting biases in AI models, applying strong security protocols like encryption and restricted access, and consistently monitoring AI systems to spot potential vulnerabilities.

To maintain an edge, organizations need to keep up with changing regulations, develop AI expertise among leadership, and encourage a culture of openness and responsibility. These actions not only reduce risks but also build trust and ensure ethical AI practices in healthcare settings.

What are the key frameworks and standards healthcare organizations should follow to ensure AI compliance?

Healthcare organizations should consider adopting established frameworks like the NIST AI Risk Management Framework (AI RMF 1.0). This framework emphasizes identifying risks, fostering trust, and ensuring AI is implemented responsibly. It's also important to adhere to standards that promote ethical AI practices, safeguard data privacy, and comply with applicable regulations, such as the EU AI Act, while tracking proposed U.S. legislation such as the Algorithmic Accountability Act.

For healthcare-specific needs, organizations can rely on tailored industry guidance to ensure AI projects meet federal requirements and align with recommended practices. By focusing on these frameworks and standards, healthcare providers can better manage risks and uphold ethical principles in their AI-driven operations.