Checklist for Choosing AI Validation Tools in Healthcare

Post Summary

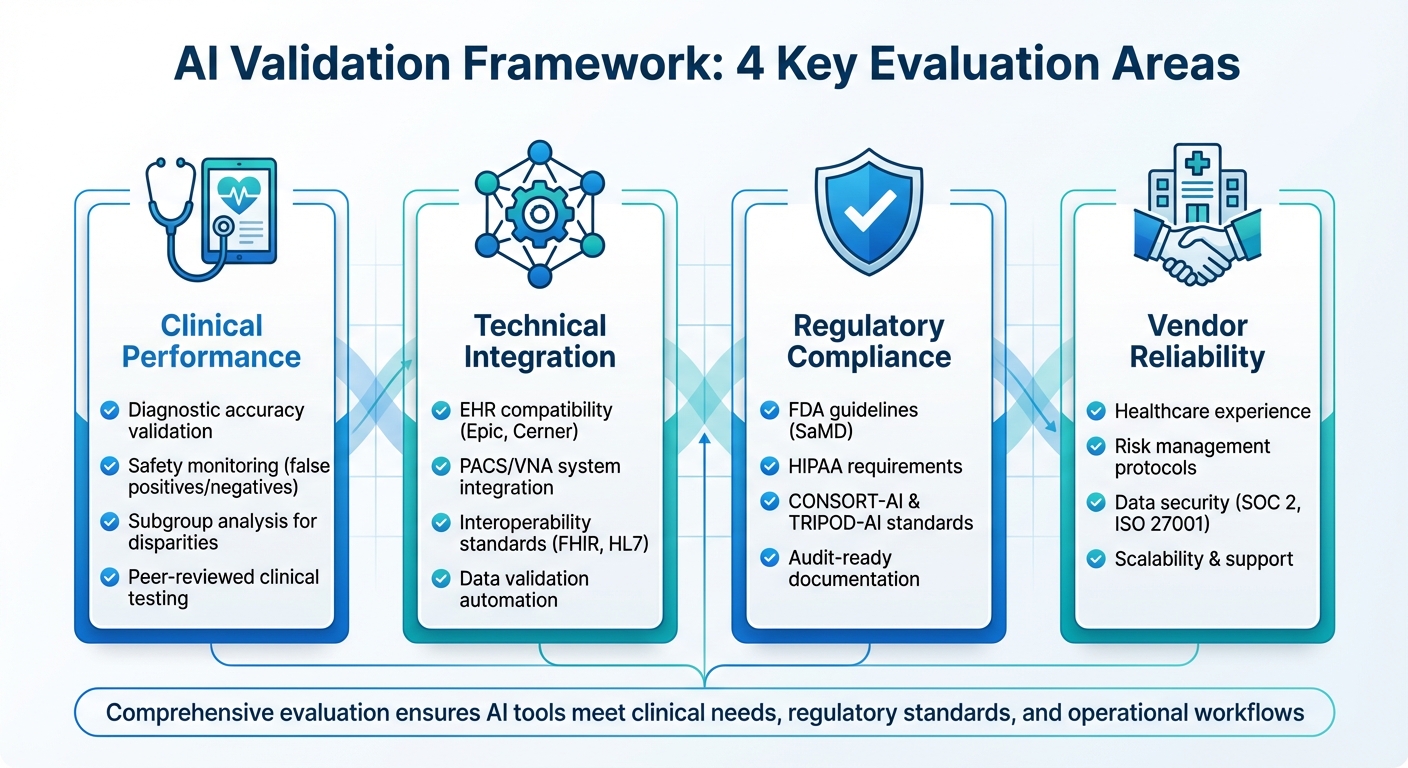

AI in healthcare is transforming diagnostics and patient care. However, without proper validation, these tools can lead to errors, bias, and patient harm. To ensure safety, accuracy, and compliance, healthcare organizations must evaluate AI tools across four key areas:

- Clinical Performance: Validate diagnostic accuracy, safety monitoring, and subgroup analysis to detect disparities.

- Technical Integration: Ensure seamless compatibility with EHRs, PACS/VNA systems, and interoperability standards like FHIR and HL7.

- Regulatory Compliance: Align with FDA guidelines, HIPAA, and standards like CONSORT-AI and TRIPOD-AI.

- Vendor Reliability: Assess healthcare experience, risk management, data security, and scalability.

This guide simplifies AI validation by offering actionable steps to help healthcare leaders choose tools that meet clinical needs, regulatory standards, and operational workflows.

Four-Pillar Framework for AI Validation in Healthcare

Clinical and Technical Validation Checklist

Clinical Performance and Safety

When evaluating an AI tool, it's essential to confirm that it has undergone rigorous peer-reviewed studies or real-world clinical testing. Look for documented proof of its diagnostic accuracy, sensitivity, specificity, and its impact on patient outcomes across diverse U.S. populations [2][5]. The tool should address a genuine clinical need, ensuring its relevance in healthcare settings.

Safety monitoring is another critical component. The tool must track safety signals, including false-positive and false-negative rates, and perform stratified analyses based on factors like age, sex, race/ethnicity, comorbidities, and care settings. This helps identify performance inconsistencies and potential inequities [2][5]. Additionally, confirm clinical appropriateness by reviewing the tool’s intended use, contraindications, built-in safeguards, and oversight protocols, like requiring a "human-in-the-loop" for reviewing AI outputs [4][5]. For instance, a 30-item checklist validated across 50 AI/ML healthcare studies shows how structured criteria can uncover gaps in methodology and assess reporting quality [3].

The tool should also enable subgroup analyses to uncover disparities in performance. Metrics such as calibration, equal opportunity difference, and false-negative rates across demographic groups can help identify areas where data may be sparse or unrepresentative [2][5]. Once clinical performance is verified, the next step is to examine the tool’s technical integration and automation features.
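
As a minimal sketch of the subgroup metrics named above, the Python below computes per-group sensitivity, false-negative rate, and the equal opportunity difference (the gap in true-positive rate between two groups). The record layout, the group labels "A" and "B", and the toy data are assumptions for illustration, not output from any particular validation platform.

```python
from collections import defaultdict


def subgroup_rates(records):
    """Return per-group sensitivity and false-negative rate.

    Each record is (group, y_true, y_pred) with binary labels (1 = positive).
    Only true positives and false negatives are needed for these two rates.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0})
    for group, y_true, y_pred in records:
        if y_true == 1:
            counts[group]["tp" if y_pred == 1 else "fn"] += 1

    rates = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]  # always > 0 for groups seen above
        sensitivity = c["tp"] / positives
        rates[group] = {
            "n_positive": positives,
            "sensitivity": sensitivity,
            "fnr": 1 - sensitivity,
        }
    return rates


def equal_opportunity_difference(rates, group_a, group_b):
    """Difference in true-positive rate (sensitivity) between two groups."""
    return rates[group_a]["sensitivity"] - rates[group_b]["sensitivity"]


# Toy data (hypothetical): group A has 3 of 4 positives detected, group B 1 of 4.
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]
rates = subgroup_rates(records)
eod = equal_opportunity_difference(rates, "A", "B")
```

Reporting `n_positive` alongside each rate makes it easier to spot groups where the data is too sparse for the estimate to be trusted.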

Technical Capabilities and Integration

A reliable AI tool should automatically validate both structured and unstructured data for completeness, proper value ranges, coding consistency (e.g., ICD‑10‑CM, CPT, LOINC, SNOMED CT), and the quality of free-text inputs [2]. It must also ensure de-identified validation datasets retain key clinical features and temporal relationships necessary for accurate assessments [6]. Building representative test and hold-out datasets that reflect your organization's case mix - using techniques like temporal splits and stratified sampling - is equally important [3][5].
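
The checks described above can be sketched roughly as follows. This is an illustrative example only: the field names, the plausible-range limits, and the simplified ICD-10-CM pattern are all assumptions, and the pattern checks only the general shape of a code, not whether the code exists in the official code set.

```python
import re
from datetime import date

# Simplified shape check (assumption): letter, digit, alphanumeric,
# then an optional dot followed by up to four alphanumerics.
ICD10CM_SHAPE = re.compile(r"^[A-Z][0-9][0-9A-Z](\.[0-9A-Z]{1,4})?$")

# Hypothetical plausibility limits; real limits should come from clinicians.
PLAUSIBLE_RANGES = {"heart_rate": (20, 300), "temp_c": (25.0, 45.0)}
REQUIRED_FIELDS = ("patient_id", "encounter_date", "dx_code")


def check_record(rec):
    """Return a list of data-quality issues found in one structured record."""
    issues = []
    for field in REQUIRED_FIELDS:
        if rec.get(field) in (None, ""):
            issues.append(f"missing:{field}")
    for field, (lo, hi) in PLAUSIBLE_RANGES.items():
        value = rec.get(field)
        if value is not None and not (lo <= value <= hi):
            issues.append(f"out_of_range:{field}")
    code = rec.get("dx_code")
    if code and not ICD10CM_SHAPE.match(code):
        issues.append("bad_code_shape:dx_code")
    return issues


def temporal_split(records, cutoff):
    """Split records so the hold-out set contains only later encounters."""
    train = [r for r in records if r["encounter_date"] < cutoff]
    holdout = [r for r in records if r["encounter_date"] >= cutoff]
    return train, holdout


good = {"patient_id": "p1", "encounter_date": date(2023, 5, 1),
        "dx_code": "E11.9", "heart_rate": 72, "temp_c": 36.8}
bad = {"patient_id": "p2", "encounter_date": date(2024, 2, 1),
       "dx_code": "11.E9", "heart_rate": 720, "temp_c": None}

good_issues = check_record(good)
bad_issues = check_record(bad)
train, holdout = temporal_split([good, bad], cutoff=date(2024, 1, 1))
```

A temporal split is used here rather than a random one because it more closely mimics deployment, where the model is always scored on encounters that occur after its training data was collected.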

Seamless integration with existing clinical systems is non-negotiable. The tool should support widely used interoperability standards in U.S. healthcare, such as HL7 v2 messaging, FHIR APIs, DICOM for imaging, and flat-file extracts from clinical data warehouses [2][4]. It must also connect with major EHR platforms like Epic and Oracle Health (formerly Cerner) through native connectors or FHIR, enabling access to historical and near-real-time data. For imaging applications, integration with PACS/VNA systems is essential to retrieve studies, series, and metadata while returning structured outputs linked to patient and study IDs - without re-identifying PHI [4].
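
To make the FHIR piece concrete, here is a small sketch of flattening Observation resources from a FHIR Bundle into rows a validation pipeline could consume. The bundle is a hand-written sample, no network call is made, and the function name and output columns are assumptions; the field paths follow the general structure of the FHIR Observation resource.

```python
import json

# Hand-written sample bundle (hypothetical data, not from a real system).
BUNDLE_JSON = """
{
  "resourceType": "Bundle",
  "type": "searchset",
  "entry": [
    {"resource": {"resourceType": "Patient", "id": "example"}},
    {"resource": {
      "resourceType": "Observation",
      "status": "final",
      "code": {"coding": [{"system": "http://loinc.org",
                           "code": "8867-4", "display": "Heart rate"}]},
      "subject": {"reference": "Patient/example"},
      "effectiveDateTime": "2024-01-15T08:30:00Z",
      "valueQuantity": {"value": 72, "unit": "beats/minute"}
    }}
  ]
}
"""


def extract_observations(bundle):
    """Flatten Observation resources in a Bundle into simple dict rows."""
    rows = []
    for entry in bundle.get("entry", []):
        resource = entry.get("resource", {})
        if resource.get("resourceType") != "Observation":
            continue  # skip Patient and any other resource types
        codings = resource.get("code", {}).get("coding") or [{}]
        quantity = resource.get("valueQuantity", {})
        rows.append({
            "patient_ref": resource.get("subject", {}).get("reference"),
            "system": codings[0].get("system"),
            "code": codings[0].get("code"),
            "value": quantity.get("value"),
            "unit": quantity.get("unit"),
            "effective": resource.get("effectiveDateTime"),
        })
    return rows


rows = extract_observations(json.loads(BUNDLE_JSON))
```

Only the first coding is read here for brevity; a production integration would likely need to handle multiple codings and non-quantity value types.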

As Matt Christensen, Sr. Director GRC at Intermountain Health, points out: "Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare." [1]

The tool should also allow teams to simulate how AI outputs will function in real-world workflows, such as in emergency department triage, radiology reading rooms, or primary care clinics. It should enable testing of alert timing, priority levels, and volume to avoid alarm fatigue [4][6]. Additionally, it must support configuration and documentation of human-in-the-loop policies, specifying which outputs need clinician approval, how disagreements with AI results are resolved, and how overrides are logged.
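
One way to reason about alert volume before go-live is to replay retrospective risk scores at several candidate thresholds. The sketch below assumes you have historical (score, outcome) pairs and a typical daily patient count; the function name, thresholds, and data are hypothetical.

```python
def alert_burden(scored_cases, threshold, patients_per_day):
    """Estimate daily alert volume and positive predictive value (PPV).

    scored_cases: list of (risk_score, outcome) pairs, outcome 1 = true event.
    """
    flagged = [(score, outcome) for score, outcome in scored_cases
               if score >= threshold]
    true_alerts = sum(outcome for _, outcome in flagged)
    return {
        "threshold": threshold,
        # Scale the flagged fraction of the sample to the daily census.
        "alerts_per_day": len(flagged) * patients_per_day / len(scored_cases),
        "ppv": true_alerts / len(flagged) if flagged else None,
    }


# Hypothetical retrospective sample of 10 scored encounters.
history = [(0.95, 1), (0.90, 1), (0.80, 0), (0.70, 1), (0.60, 0),
           (0.50, 0), (0.40, 0), (0.30, 0), (0.20, 0), (0.10, 0)]

strict = alert_burden(history, threshold=0.75, patients_per_day=200)
loose = alert_burden(history, threshold=0.50, patients_per_day=200)
```

In this toy data, lowering the threshold doubles the estimated alerts per day while PPV falls, which is the trade-off clinicians need to see when judging alarm-fatigue risk.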

Explainability and Transparency

A critical feature of any AI tool is its ability to provide clear, clinician-friendly explanations of its behavior. This includes insights like feature importance, example-based rationales, and case-level explanations (e.g., heatmaps for imaging, highlighted clinical notes, or key structured features influencing predictions) [2][4]. By offering side-by-side comparisons of AI outputs, explanations, and clinical ground truth, clinicians can better evaluate the tool’s plausibility and identify patterns of failure [4][5]. The platform should also support tailored explainability techniques - such as SHAP, LIME, or saliency maps - presented in accessible language for non-data scientists [3][4].

Transparency extends to providing detailed model cards that outline the tool’s intended use, input types, outputs, training data (including geography, timeframe, sites, and demographics), and performance metrics [3][7]. It should disclose data sources, such as whether they come from academic medical centers, community hospitals, or outpatient clinics, and include information on labeling methods and known limitations [3][5]. Maintaining a registry of models and configurations, complete with training snapshots, vendor release notes, and site-specific validation results, is also essential [5][7].

Finally, the tool must produce audit-ready reports detailing data sources, cohort selection criteria, performance metrics, and subgroup analyses relative to predefined benchmarks. These reports should include an immutable audit trail documenting who initiated validations, parameter settings, model versions, and manual adjustments, with timestamps in a consistent U.S. date and time format that includes the time zone [4][6]. For a comprehensive view of AI-related risks, the tool can integrate with broader cybersecurity and risk management platforms like Censinet RiskOps™, linking validation evidence to risk assessments and PHI handling attestations [6].
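
One common way to make an audit trail tamper-evident is to chain entries together with hashes, so that altering any earlier entry invalidates everything after it. The sketch below is an assumption about how such a trail could work, not a description of any specific product; the entry fields and function names are hypothetical, and a real system would also need protected storage and access controls.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first entry's predecessor


def _digest(body):
    """Hash an entry body deterministically (keys sorted before encoding)."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_entry(log, actor, action, details, timestamp):
    """Append an entry whose hash covers its content and the previous hash."""
    body = {
        "actor": actor,
        "action": action,
        "details": details,
        "timestamp": timestamp,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    log.append({**body, "hash": _digest(body)})
    return log


def verify_chain(log):
    """Return True only if every entry is unmodified and correctly linked."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, "j.smith", "validation_started",
             {"model_version": "2.1.0", "threshold": 0.75},
             "2024-01-15T08:30:00-07:00")
append_entry(log, "a.lee", "manual_adjustment",
             {"threshold": 0.70}, "2024-01-15T09:05:00-07:00")
chain_ok = verify_chain(log)
```

Editing any field of an earlier entry after the fact causes `verify_chain` to return `False`, which is what makes the trail tamper-evident rather than merely append-only.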

Regulatory, Privacy, and Security Requirements

Regulatory Compliance and Standards

When using AI tools in healthcare, it's crucial to ensure they align with FDA guidelines for Software as a Medical Device (SaMD) and AI/ML-enabled medical devices. Many AI tools designed for diagnosis, treatment, or disease management fall under FDA oversight. These tools may require premarket submissions, ongoing monitoring, and change-control plans. The FDA's "total product lifecycle" approach, for instance, allows for algorithm updates while ensuring safety and effectiveness. Vendors should confirm whether their tools are FDA-regulated and specify the regulatory pathway (e.g., 510(k), De Novo, or PMA). They should also provide product codes, submission summaries, and clinical validation evidence to support their intended use. Beyond FDA requirements, consider compliance with CMS regulations, especially for AI tools used in billing, quality reporting, or remote patient monitoring.

Your validation process should also align with AI-specific reporting standards, such as CONSORT-AI and SPIRIT-AI for clinical trials, TRIPOD-AI for prediction models, and STARD-AI for diagnostic accuracy studies. Templates and checklists that align validation studies with these frameworks can streamline this process. For example, a peer-reviewed 30-item clinician checklist synthesized from major AI reporting standards can help you evaluate study design, data handling, and reporting quality. This approach can also highlight gaps, such as insufficient external validation or a lack of bias analysis.

Privacy and PHI Protection

Protecting patient data is not just a regulatory requirement - it’s a fundamental responsibility. The HIPAA Security Rule requires administrative, physical, and technical safeguards for Protected Health Information (PHI), and AI tools that handle PHI must implement them. In practice, this means encrypting data in transit (using TLS 1.2 or higher) and at rest (with AES-256 or equivalent), enforcing strict role-based access controls, and maintaining detailed audit logs for all data-related activities. Identity and access management features, like SAML/SSO and multi-factor authentication, are vital to ensure access is limited to those with a legitimate need.
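
As a small illustration of the "TLS 1.2 or higher" requirement, a Python client can refuse older protocol versions using the standard library `ssl` module. This is a sketch of one client-side control only; the function name is an assumption, and server configuration and encryption at rest are separate concerns not shown here.

```python
import ssl


def make_client_context():
    """Build a client TLS context that rejects anything below TLS 1.2."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


ctx = make_client_context()
```

The resulting context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`.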

AI tools should also support automated de-identification methods that align with HIPAA's Safe Harbor standards or use Expert Determination to reduce re-identification risks. Pseudonymization can enable longitudinal studies or outcomes analysis without exposing direct identifiers. Ensure HIPAA compliance is well-documented for internal reviews and external audits.
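
The sketch below illustrates two of the ideas above: keyed pseudonymization of a record identifier, and generalizing a few fields in the spirit of Safe Harbor (year-only dates, ages over 89 grouped as "90+", and three-digit ZIP prefixes). It is deliberately incomplete. Safe Harbor covers 18 identifier categories, and this example does not by itself establish compliance. The field names, the key, and the set of low-population ZIP prefixes are placeholders.

```python
import hashlib
import hmac


def pseudonymize(identifier, secret_key):
    """Derive a stable pseudonym with a keyed hash (HMAC-SHA256).

    The key must be kept secret and stored separately from the data.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"),
                    hashlib.sha256).hexdigest()


def generalize(record, secret_key, low_population_zip3=frozenset()):
    """Return a reduced record with a pseudonym and generalized fields."""
    over_89 = record["age"] > 89
    zip3 = record["zip"][:3]
    return {
        "pid": pseudonymize(record["mrn"], secret_key),
        # Keep only the year; drop it entirely when it would reveal age > 89.
        "birth_year": None if over_89 else record["birth_date"][:4],
        "age": "90+" if over_89 else record["age"],
        # Prefixes for sparsely populated areas are replaced with "000".
        "zip3": "000" if zip3 in low_population_zip3 else zip3,
        "dx_code": record["dx_code"],
    }


key = b"demo-key-not-for-production"  # placeholder key for illustration
raw = {"mrn": "000123", "birth_date": "1931-04-12", "age": 93,
       "zip": "03601", "dx_code": "I10"}
# The caller supplies the prefix set; the value here is only an example.
out = generalize(raw, key, low_population_zip3={"036"})
```

Because the pseudonym is stable for a given key, records for the same patient can still be linked over time for longitudinal analysis without exposing the original identifier.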

Additionally, establish Business Associate Agreements (BAAs) with AI vendors that handle PHI on your behalf. These agreements should clearly define data ownership, acceptable uses (including whether de-identified data can be used for model improvement), data retention policies, and breach notification protocols.

Cybersecurity and Risk Management

Healthcare organizations must prioritize strong security practices to protect against threats like model theft, poisoning, or data breaches. Vendors should provide evidence of regular third-party penetration testing, continuous vulnerability scanning, and adherence to secure development practices. This includes threat modeling, secure coding, code reviews, and dependency management. Certifications like SOC 2 Type II, ISO 27001, or HITRUST can offer additional assurance of a platform’s security.

To integrate AI validation into broader risk management efforts, consider tools that can feed validation outputs into enterprise risk platforms like Censinet RiskOps™. This allows for seamless alignment between validation evidence and risk assessments, helping to identify and address control gaps efficiently.

AI tools should also offer configurable risk scoring based on factors like clinical impact, data sensitivity, and cybersecurity measures. This helps teams prioritize risks and track actions such as mitigations, acceptances, and reassessments as models, data sources, or regulations change. Lastly, confirm that vendors have clear incident response plans that comply with HIPAA breach requirements and state laws. These plans should include well-defined notification timelines and be regularly tested through tabletop exercises to ensure readiness.
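
Configurable risk scoring can be as simple as a weighted average of rated factors mapped to tiers. In the sketch below, the factor names come from the paragraph above, but the weights, the 1-5 rating scale, and the tier cut-offs are assumptions an organization would set for itself.

```python
# Hypothetical weights; integers keep the arithmetic easy to audit.
DEFAULT_WEIGHTS = {"clinical_impact": 5, "data_sensitivity": 3, "security_gaps": 2}


def risk_score(ratings, weights=DEFAULT_WEIGHTS):
    """Weighted average of factor ratings, each rated 1 (low) to 5 (high)."""
    total_weight = sum(weights.values())
    return sum(weights[name] * ratings[name] for name in weights) / total_weight


def risk_tier(score, high=4.0, medium=2.5):
    """Map a score to a tier using configurable cut-offs."""
    if score >= high:
        return "high"
    if score >= medium:
        return "medium"
    return "low"


sepsis_model = {"clinical_impact": 5, "data_sensitivity": 4, "security_gaps": 2}
scheduling_tool = {"clinical_impact": 1, "data_sensitivity": 2, "security_gaps": 3}

sepsis_score = risk_score(sepsis_model)        # (25 + 12 + 4) / 10
scheduling_score = risk_score(scheduling_tool)  # (5 + 6 + 6) / 10
tiers = {
    "sepsis_model": risk_tier(sepsis_score),
    "scheduling_tool": risk_tier(scheduling_score),
}
```

Re-running the scoring whenever a model, data source, or regulation changes gives teams a consistent basis for reassessment.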

Governance, Workflow, and Operations

After thorough clinical and technical validation, maintaining strong governance and efficient operations becomes critical for ensuring AI systems in healthcare perform reliably and safely.

AI Governance and Oversight

Effective AI governance begins with clear accountability. Your validation platform should support a formal AI governance committee or digital health oversight group, ensuring every decision and validation step is traceable to this body. The platform should allow you to define role-based structures - such as data science, clinical leadership, compliance, information security, and operations - and use RACI-style assignments to designate accountability for each task or decision. It should also document the entire governance process, from who proposed a model to who validated it clinically and technically, approved it for use, and under what specific conditions (e.g., patient population, care setting). By linking validation outcomes to governance decisions, organizations can consistently uphold clinical safety and technical standards.

Your governance framework should include three key components: a model inventory, an AI risk register, and version-controlled policies and procedures. The model inventory should detail the model name, version, source (vendor-supplied or in-house), intended use, clinical domain, deployment sites, and current status (e.g., pilot, production, or retired). The AI risk register should track identified risks - covering clinical, ethical, security, and operational concerns - along with their likelihood, potential impact on patient safety and protected health information (PHI), mitigation measures, and ownership. Policies should include templates that tie each model to governing standards, like bias evaluation schedules or human-in-the-loop requirements, with documented evidence of compliance during validation.
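
The model inventory and risk register described above map naturally onto simple record types. The following sketch uses Python dataclasses; the field names mirror the paragraph, while the likelihood-times-impact severity score, the 1-5 scales, and the review threshold are assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PILOT = "pilot"
    PRODUCTION = "production"
    RETIRED = "retired"


@dataclass
class ModelRecord:
    name: str
    version: str
    source: str            # "vendor" or "in-house"
    intended_use: str
    clinical_domain: str
    deployment_sites: list
    status: Status


@dataclass
class RiskEntry:
    model_name: str
    category: str          # clinical, ethical, security, or operational
    description: str
    likelihood: int        # 1 (rare) to 5 (frequent), assumed scale
    impact: int            # 1 (minor) to 5 (severe), assumed scale
    mitigation: str
    owner: str

    @property
    def severity(self):
        return self.likelihood * self.impact


def risks_needing_review(inventory, register, min_severity=12):
    """List high-severity risks for models that are not retired."""
    live = {m.name for m in inventory if m.status is not Status.RETIRED}
    flagged = [r for r in register
               if r.model_name in live and r.severity >= min_severity]
    return sorted(flagged, key=lambda r: r.severity, reverse=True)


inventory = [
    ModelRecord("sepsis-alert", "2.1.0", "vendor",
                "Early sepsis warning for adult inpatients",
                "critical care", ["Main Campus"], Status.PRODUCTION),
    ModelRecord("legacy-readmit", "1.0.3", "in-house",
                "30-day readmission risk", "care management",
                [], Status.RETIRED),
]
register = [
    RiskEntry("sepsis-alert", "clinical",
              "Missed cases in under-represented subgroups", 3, 5,
              "Quarterly bias evaluation", "CMIO"),
    RiskEntry("sepsis-alert", "operational",
              "Alert volume exceeds staffing", 2, 3,
              "Threshold review", "Nursing informatics"),
    RiskEntry("legacy-readmit", "security",
              "Unpatched dependency", 4, 4,
              "Decommissioned", "IT security"),
]
review = risks_needing_review(inventory, register)
```

Keeping the inventory and register as structured records makes it straightforward to link each risk to a specific model and to report open items to the governance committee.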

This structured oversight not only ensures accountability but also supports smooth workflow integration and ongoing performance monitoring.

Workflow Integration and Usability

To avoid duplicating efforts or creating inefficiencies, AI validation tools must integrate seamlessly with existing systems such as electronic health records (EHRs), identity management (e.g., single sign-on), and IT service management (ITSM) platforms for change control and incident tracking. For instance, integration with ITSM tools like ServiceNow or Jira Service Management can automate the creation and tracking of change tickets as models move through their lifecycle stages - development, validation, production, and retirement - and link incidents or safety events to specific models.

Role-specific dashboards are another must-have. Clinicians need straightforward updates on validation status, performance metrics, and safety alerts for models in their service areas. Meanwhile, IT and data science teams require access to technical statistics and deployment health, and compliance teams need visibility into regulatory and cybersecurity statuses across the AI portfolio. These dashboards should help each group quickly identify areas requiring immediate attention. Before committing to a platform, conduct usability tests by assigning sample tasks to clinicians, risk managers, and IT staff, collecting feedback on navigation and clarity.

When workflows are well-integrated, continuous monitoring becomes a natural extension, supporting ongoing evaluation and operational efficiency.

Continuous Monitoring and Lifecycle Management

Continuous monitoring should track key performance metrics like AUROC, sensitivity, specificity, and calibration in near real time, as confirmed outcomes become available, comparing current data against baseline validation results. It’s also essential to monitor for data drift and concept drift, identifying shifts in feature distributions or changes in outcome prevalence. Configurable alert thresholds should flag clinically significant performance declines, triggering notifications to model owners and governance committees. These alerts should also activate workflows for revalidation, rollback, or retraining. Stratified monitoring by demographics (e.g., age, race, or sex) and deployment sites is critical for identifying and addressing emerging inequities.
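
As one possible way to quantify data drift, the sketch below computes a population stability index (PSI) between a baseline and a current feature distribution, alongside a simple check for a drop in a performance metric. PSI is swapped in here as an illustrative technique; the source does not prescribe a specific drift statistic. The bin edges, sample data, and alert threshold are assumptions.

```python
import math


def bin_proportions(values, edges, eps=1e-6):
    """Share of values in each bin defined by ascending edges.

    A value falls in bin i when it is >= exactly i of the edges.
    Proportions are floored at eps to avoid log(0) for empty bins.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v >= e for e in edges)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline, current, edges):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def performance_alert(baseline_metric, current_metric, max_drop):
    """True when the metric has fallen by more than the allowed amount."""
    return (baseline_metric - current_metric) > max_drop


# Hypothetical patient ages: the current population skews older.
baseline_ages = [30] * 5 + [50] * 10 + [70] * 5
current_ages = [30] * 2 + [50] * 8 + [70] * 10
age_edges = [40, 65]

psi = population_stability_index(baseline_ages, current_ages, age_edges)
sensitivity_alert = performance_alert(0.85, 0.78, max_drop=0.05)
```

What PSI value should trigger revalidation is a local policy decision; the same calculation can be run per site or per demographic group to support stratified monitoring.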

For post-market surveillance, the platform should capture AI-related incidents, near misses, and clinician overrides, linking each record to a specific model, version, and clinical context. Integration with patient safety and quality reporting systems ensures AI-related issues feed into broader institutional safety reviews. For lifecycle management, the platform should support model versioning, linking training data, code, and deployed models, along with change control workflows requiring documented review and approval before updates impact patient care. When retiring models, the system should facilitate safe decommissioning and maintain a permanent record of the model's lifecycle, including validations, incidents, and approvals, to meet regulatory and audit requirements.
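
Change control of this kind can be modeled as a small state machine over lifecycle stages. In the sketch below, the stage names follow the lifecycle described in this guide; the allowed transitions (including moving from production back to validation for revalidation) and the rule that promotion to production needs a named approver are assumptions.

```python
# Hypothetical transition rules between lifecycle stages.
ALLOWED_TRANSITIONS = {
    "development": {"validation"},
    "validation": {"development", "production"},
    "production": {"validation", "retired"},
    "retired": set(),
}


def check_transition(model, new_stage, approved_by=None):
    """Return a list of problems; an empty list means the move is allowed."""
    problems = []
    if new_stage not in ALLOWED_TRANSITIONS.get(model["stage"], set()):
        problems.append(f"{model['stage']} -> {new_stage} is not permitted")
    if new_stage == "production" and not approved_by:
        problems.append("promotion to production requires a named approver")
    return problems


def transition(model, new_stage, approved_by=None):
    """Return an updated copy of the model record, or raise if blocked."""
    problems = check_transition(model, new_stage, approved_by)
    if problems:
        raise ValueError("; ".join(problems))
    history = model["history"] + [(model["stage"], new_stage, approved_by)]
    return {**model, "stage": new_stage, "history": history}


model = {"name": "sepsis-alert", "version": "2.1.0",
         "stage": "development", "history": []}
model = transition(model, "validation")
model = transition(model, "production", approved_by="AI governance committee")
```

Because each transition is appended to `history` rather than overwriting it, the record of who approved what is preserved through retirement.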

For organizations using specialized healthcare risk platforms like Censinet RiskOps™, integration strengthens lifecycle management by aligning AI validation with ongoing cybersecurity assessments and vendor collaboration. This integration ensures AI systems remain secure and compliant throughout their lifecycle, creating a comprehensive approach to managing patient data, clinical applications, and connected medical devices.

Vendor Evaluation and Procurement Checklist

The final step in choosing the right AI validation tool involves conducting a thorough vendor review to ensure the solution aligns with clinical, technical, and operational requirements. Building on the validation and governance principles discussed earlier, this stage cements the groundwork for deploying AI solutions effectively and safely. Vendor evaluation should be treated as a collaborative effort, bringing together clinical leaders, data scientists, IT professionals, compliance officers, privacy experts, and supply chain teams. These stakeholders work together to score vendors using standardized criteria that assess clinical validity, security, and operational compatibility.

Healthcare Experience and Expertise

When evaluating vendors, it's critical to confirm they have real-world healthcare experience and not just general AI credentials. Ask for U.S.-based case studies and references from hospitals, integrated delivery networks (IDNs), or outpatient networks that illustrate measurable improvements in clinical outcomes. Make sure the vendor’s team includes clinicians (like MDs, RNs, or pharmacists) and healthcare data specialists who have been actively involved in model design, validation, and governance processes.

Look for evidence of participation in recognized U.S. healthcare initiatives, such as ONC programs, CMS pilots, or organizations like HIMSS, CHIME, or the Digital Medicine Society (DiMe). Vendors should also provide published validation studies to back up their claims. Additionally, confirm that their solutions support U.S. interoperability standards like HL7 v2, FHIR, and DICOM, and that they integrate seamlessly with widely used electronic health records (EHRs). It's also important to verify their understanding of Medicare, Medicaid, and commercial payer requirements, especially when AI outputs influence billing or quality metrics.

Risk Assessment, Contracts, and Legal Review

Vendor selection should hinge on completing a comprehensive third-party risk assessment. This process should cover cybersecurity, privacy, incident response, and development practices, with special attention to handling protected health information (PHI) and personally identifiable information (PII). Assessments must address data residency, access controls, logging, and monitoring, along with AI-specific risks like model drift and adversarial attacks. Many health systems rely on risk management platforms like Censinet, which offers healthcare-focused tools for cybersecurity and risk assessments. These platforms streamline evaluations, benchmarking, and remediation efforts across clinical applications, medical devices, and data workflows.

Contracts and business associate agreements (BAAs) should clearly state that your organization retains full ownership of PHI, limiting vendors to using only de-identified data under strict conditions. Agreements should explicitly prohibit secondary use of identifiable data for unrelated purposes, such as marketing or resale, and require transparency about any external data processors or cloud services. Define where data will be stored and processed, specify encryption standards for data in transit and at rest, and outline controls for training AI models with customer data.

Breach notification timelines must meet or exceed the HIPAA Breach Notification Rule, which requires a business associate to notify the covered entity without unreasonable delay and no later than 60 days after discovering a breach. Contracts typically set a much shorter window, often requiring vendor notification within 24–72 hours of discovering a security incident involving PHI. Contracts should also distinguish between security incidents and reportable breaches, detailing how investigations will be conducted and how evidence will be shared. For AI-specific issues - such as systematic model errors causing clinical harm or tampering - incident response plans should include steps like suspending affected functions, issuing safety notices, and deploying corrections or mitigations.

Pricing and Scalability

Once technical compatibility is confirmed, focus on the vendor’s pricing structure and scalability. Request a detailed pricing breakdown that includes core fees, implementation costs, training, support, and optional add-ons. Pricing should be transparent and tied to common healthcare metrics, such as the number of beds, covered lives, facilities, or the volume of AI models validated. This helps finance teams estimate the total cost of ownership (TCO) over multiple years. Ensure the pricing model - whether subscription-based, usage-based, or an enterprise license - aligns with your budget structure and allows for gradual deployment without rigid minimum commitments.
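
A multi-year TCO estimate is straightforward arithmetic once the pricing components are itemized. The sketch below assumes one-time implementation and training costs plus recurring subscription and support fees that may escalate annually; all figures are invented for illustration.

```python
def total_cost_of_ownership(annual_subscription, annual_support,
                            implementation, training,
                            years, annual_increase=0.0):
    """One-time costs plus recurring fees, escalated each year after the first."""
    recurring = sum(
        (annual_subscription + annual_support) * (1 + annual_increase) ** year
        for year in range(years)
    )
    return implementation + training + recurring


# Hypothetical quote: $200k subscription, $30k support, $75k implementation,
# $20k training, evaluated over three years.
flat = total_cost_of_ownership(200_000, 30_000, 75_000, 20_000, years=3)
escalating = total_cost_of_ownership(200_000, 30_000, 75_000, 20_000,
                                     years=3, annual_increase=0.05)
```

Comparing the flat and escalating cases shows how much an annual price increase clause adds over the contract term, which is worth surfacing before negotiation.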

Scalability is especially important for multi-site health systems. Technically, the platform should support increasing numbers of AI models, datasets, and users without performance issues. It should also enable multi-tenant or multi-entity configurations and integrate with various EHRs and data sources commonly used in large U.S. systems. Operationally, vendors should provide support for phased rollouts, such as pilot programs, regional expansions, and full enterprise deployments. They should also offer robust onboarding, training, and change management tailored to diverse user groups.

For governance, look for features like centralized dashboards, role-based access controls, standardized validation workflows, and customizable approval pathways. Vendors must demonstrate their ability to adapt to your organization’s evolving AI strategy, including support for new model types, clinical areas, and regulatory updates, without requiring expensive re-platforming. Service level agreements (SLAs) should guarantee high system uptime (99.9% or more in production environments), fast response and resolution times based on issue severity, and maintenance windows that minimize disruptions. Additionally, ensure vendors provide 24/7 or extended-hours support for critical incidents, assign a dedicated customer success manager, and establish clear escalation paths for safety-related concerns.
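
It can help to translate an uptime percentage into the downtime it actually permits. The short calculation below does this for a 99.9% commitment; the function name is an assumption, and whether scheduled maintenance counts against the budget depends on the contract.

```python
def allowed_downtime_minutes(uptime_percent, period_days):
    """Minutes of downtime permitted in a period at a given uptime level."""
    return (100 - uptime_percent) / 100 * period_days * 24 * 60


monthly = allowed_downtime_minutes(99.9, period_days=30)    # about 43 minutes
yearly = allowed_downtime_minutes(99.9, period_days=365)    # about 8.76 hours
```

At 99.9%, a single outage of an hour in a 30-day month would exceed the budget, which is useful context when a validation platform sits in the path of clinical alerts.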

Conclusion

How AI Validation Supports Healthcare Transformation

Effective AI validation is the backbone of safe and scalable AI adoption in healthcare. It plays a critical role in reducing clinical risks, ensuring cybersecurity, and fostering trust among clinicians. Through rigorous validation, healthcare organizations can confirm consistent clinical accuracy across diverse patient populations in the U.S. Simultaneously, systematic assessments for data protection, system integration, and vendor risks help maintain HIPAA compliance and minimize vulnerabilities.

By embedding validation into governance frameworks - complete with approval workflows, ongoing monitoring, and clear accountability - health systems can elevate AI from experimental pilot projects to essential operational tools. Validated AI applications can enhance areas like population health analytics, medical imaging, remote monitoring, and revenue-cycle management. The results? Shorter hospital stays, fewer redundant tests, and improved quality scores. This structured approach ensures AI initiatives align with organizational goals, regulatory standards, and community trust, paving the way for long-term digital transformation rather than isolated, one-off solutions.

Next Steps for Your Organization

Adopting these practices can drive operational efficiency and establish sustainable AI governance. Here’s how to get started:

- Within the next 30–90 days, form a cross-functional AI governance team that includes experts from clinical, IT, compliance, cybersecurity, legal, and quality departments.

- Use a comprehensive checklist to evaluate your current AI tools. Then, pilot two impactful use cases - like sepsis alerts, imaging triage, or remote patient monitoring - through a full validation process.

Incorporate validation steps into your existing workflows, such as capital requests, IT intake, and contracting, to ensure no AI technology bypasses review. Establish initial performance metrics and reporting schedules for AI dashboards, which should be shared with clinical leadership and the board. Many U.S. health systems are already embedding AI validation into broader risk management programs. Platforms like Censinet RiskOps™ can help standardize vendor assessments, benchmark cybersecurity measures, and manage risks related to PHI and clinical applications - all in one place.

Treat the checklist as a dynamic framework that evolves with your organization’s AI maturity, regulatory changes, and technological advancements. Review it annually to incorporate updates from the FDA, federal or state AI regulations, and emerging industry practices. Gather feedback from clinicians, IT teams, and compliance professionals who use the checklist in real-world projects. Adjust thresholds and criteria as needed to keep the process practical and aligned with your strategic goals.

FAQs

What regulatory standards should healthcare organizations consider when validating AI tools?

When evaluating AI tools in healthcare, it's critical to align with major regulatory standards to support both compliance and safety. Some of the key standards include:

- HIPAA: Protects the privacy and security of patient data.

- FDA regulations: Govern the clearance, approval, and use of AI-driven medical devices.

- CMS guidelines: Focus on upholding healthcare quality and safety.

These regulations are in place to safeguard sensitive information, verify the dependability of AI tools, and support improved outcomes for both patients and healthcare providers.

What steps can healthcare organizations take to ensure AI tools integrate smoothly with their existing systems?

Healthcare organizations can make the integration of AI tools more seamless by opting for solutions specifically designed for healthcare settings. These platforms are built to work effortlessly with existing systems, often featuring standardized APIs, real-time data sharing capabilities, and workflows tailored to healthcare needs. This reduces disruptions and ensures everything works together smoothly.

Getting IT and clinical teams involved early in the process is equally important. Their expertise ensures the AI tools are compatible with systems like electronic health records (EHRs), supply chain platforms, and cybersecurity measures. By collaborating from the start, organizations can simplify the implementation process, maintain strong data integrity, and address potential risks effectively.

How can healthcare organizations minimize bias in AI tools?

To reduce bias in AI tools, healthcare organizations need to rely on datasets that truly represent the diversity of patient populations. By using data that includes a wide range of demographics, conditions, and backgrounds, these tools can produce outcomes that are more equitable and accurate. But it doesn’t stop there - regular validation and close monitoring of AI models are crucial to catch and correct any biases that might emerge over time.

Collaboration plays a key role in this process. Bringing together multidisciplinary teams - like clinicians, data scientists, and ethicists - during the development and validation stages ensures that AI tools are examined from various angles. This teamwork minimizes the chances of bias influencing clinical decisions. On top of that, consistent updates and thorough audits of AI systems are essential. These steps help the tools stay aligned with evolving patient data and demographics, supporting fair and precise healthcare delivery.
