Clinical AI: Where Innovation Meets Patient Safety

Post Summary

Clinical AI improves care by sharpening diagnostics, accelerating workflows, supporting clinical decisions, and analyzing massive datasets.

Its main risks are algorithmic bias, diagnostic errors, data privacy threats, and cybersecurity vulnerabilities in cloud and connected systems.

Only 16% of hospitals have comprehensive AI governance, which increases exposure to biased algorithms, breaches, and unsafe clinical workflows.

Clinical AI is governed by FDA oversight, HIPAA privacy rules, NIST cybersecurity frameworks, and emerging state laws on AI transparency and safety.

Organizations can manage these risks through governance committees, strong cybersecurity controls, continuous model monitoring, bias evaluation, and human-in-the-loop oversight.

Censinet RiskOps™ supports this work by centralizing governance, automating assessments, routing findings to reviewers, and providing real-time dashboards for enterprise-wide AI risk.

AI in healthcare is transforming patient care but introduces challenges that demand attention. From improving diagnostics to streamlining workflows, AI tools are reshaping medicine. However, concerns like algorithmic bias, privacy risks, and cybersecurity threats require careful oversight.

AI offers great potential but must be implemented responsibly to protect patients and maintain trust.

Clinical AI in Healthcare: Key Statistics, Risks, and Governance Gaps

Clinical AI Use Cases and Associated Risks

Common AI Applications in Patient Care

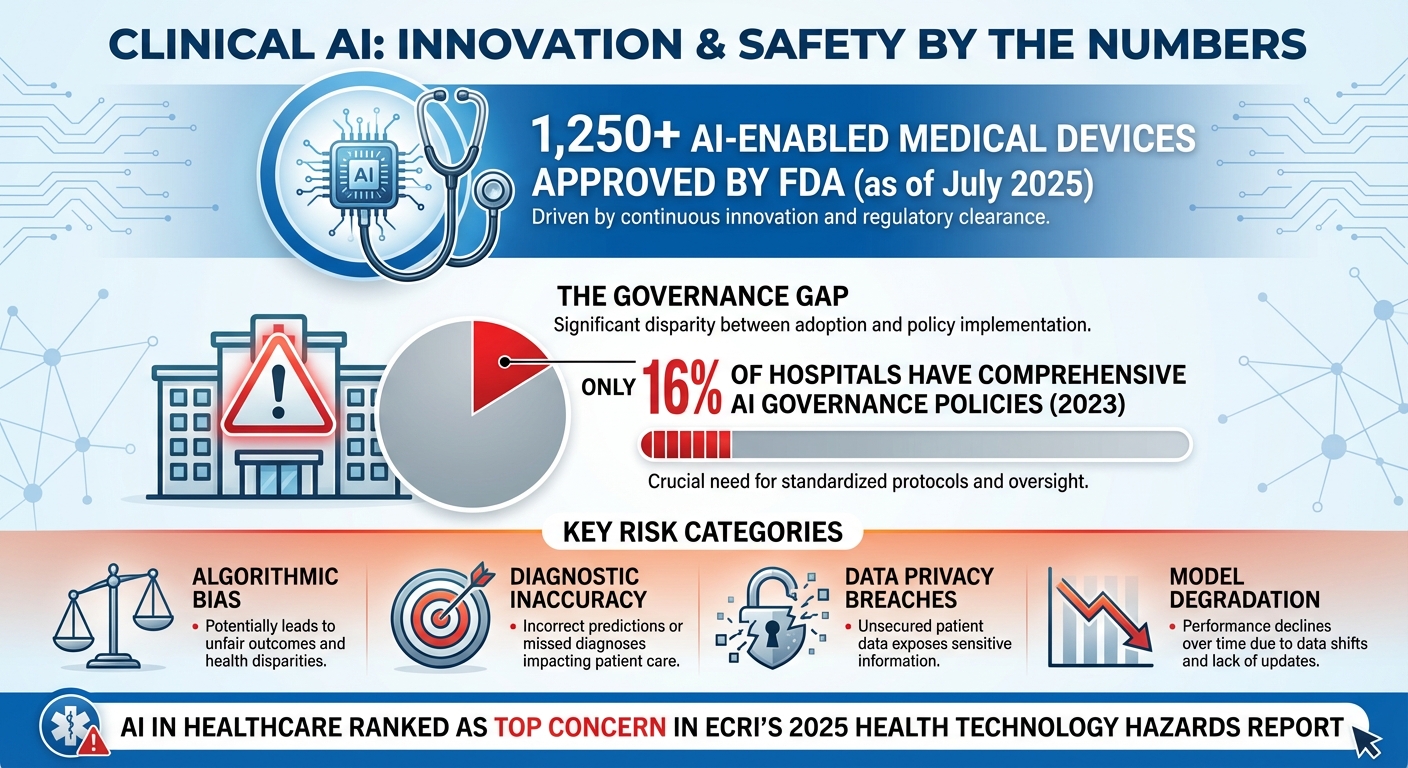

AI is becoming an essential part of patient care in the U.S., with more than 1,250 AI-enabled medical devices authorized by the FDA as of July 2025 [8]. These tools are reshaping healthcare by processing massive amounts of data to assist providers and improve patient outcomes [7].

Some of the most prominent applications include clinical decision support systems that recommend treatments, ambient listening tools for documenting patient interactions, and AI-driven drug discovery. AI also speeds up clinical trials, analyzes population health data, and creates realistic patient simulations for medical training [4][6]. According to the FDA, one of AI's strengths lies in its ability to learn and improve over time using real-world data [7].

While these advancements are promising, they also introduce risks that need careful management.

Safety and Security Risks of Clinical AI

The rapid integration of AI into healthcare brings several safety and security challenges that require immediate attention.

One major issue is algorithmic bias. When AI models are trained on flawed or incomplete data, they can produce unreliable results. This can lead to misdiagnoses, delayed treatments, and even worsen existing health disparities [9].

Another critical concern is diagnostic inaccuracy. AI models can "overfit" their training data, learning noise or incidental patterns that do not generalize to new patients. This can result in incorrect predictions and inappropriate treatment plans [2].
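One simple way to surface overfitting is to compare a model's performance on its training data with its performance on held-out data. The sketch below is illustrative only: the function names, the choice of accuracy as the metric, and the 0.10 gap threshold are assumptions made for this example, not values from any cited source.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def overfitting_gap(train_acc, holdout_acc, max_gap=0.10):
    """Return (gap, flagged); a large train/held-out gap suggests overfitting."""
    gap = train_acc - holdout_acc
    return gap, gap > max_gap


# Toy data: perfect on the training set, much weaker on held-out cases.
train_acc = accuracy([1, 0, 1, 1], [1, 0, 1, 1])
holdout_acc = accuracy([1, 0, 0, 1], [1, 1, 1, 1])
gap, flagged = overfitting_gap(train_acc, holdout_acc)
print(f"gap={gap:.2f} flagged={flagged}")
```

A flagged gap is a prompt for further review, such as validation on data from a different site or time period, rather than proof that the model is unsafe.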

Data privacy and security vulnerabilities are also significant risks. AI tools handle sensitive patient information, making them potential targets for data breaches, unauthorized access, and HIPAA violations. These risks are particularly pronounced in cloud-based systems [2].

Adding to these challenges is the lack of robust governance. A 2023 survey revealed that only 16% of hospitals have comprehensive AI governance policies in place [9]. Without clear oversight, healthcare organizations face increased risks from biased algorithms, security breaches, and medical errors.

Over-reliance on AI can also lead to critical errors and the gradual loss of clinical skills among healthcare professionals [2]. AI systems that aren’t regularly updated may experience model degradation, producing inaccurate results over time. Additionally, issues like alert fatigue and disruptions to clinical workflows can jeopardize patient safety [2].

US Regulations and Standards for Clinical AI

To address these risks, the U.S. has implemented regulatory frameworks to guide the development and use of clinical AI. The FDA plays a key role in overseeing AI systems classified as medical devices. It ensures safety and effectiveness through pathways like 510(k) clearance, De Novo classification, and Premarket Approval [7][11]. However, traditional FDA regulations weren’t originally designed to handle AI technologies that evolve after deployment.

To fill this gap, the FDA introduced the total product lifecycle (TPLC) framework, which emphasizes continuous oversight throughout an AI system’s operational life [3][6].

AI systems in healthcare must also comply with HIPAA privacy rules and align with NIST cybersecurity frameworks. However, these guidelines can be technically complex, making implementation a challenge for many organizations.

The regulatory landscape is still evolving. While the U.S. and other countries work on formal guidelines for AI safety, the lack of clear standards creates uncertainty for healthcare organizations [10]. On top of that, state tort laws add another layer of complexity, as liability for AI-related medical errors remains largely untested in courts [11]. These evolving measures aim to strike a balance between encouraging innovation and ensuring patient safety.

AI Governance and Risk Management

Creating an AI Governance Committee

Establishing a multidisciplinary committee is a crucial first step for overseeing the integration of AI in healthcare. This team should include clinical leaders, IT professionals, compliance officers, and risk management specialists. Their role is to handle policy development, ethical considerations, project evaluations, and communication with stakeholders. Without this structured oversight, AI systems risk misalignment with clinical workflows and regulatory requirements [12][13]. This governance framework also lays the groundwork for embedding strong cybersecurity measures throughout the AI lifecycle.

Connecting AI Governance to Cybersecurity Risk Management

Strong governance is the backbone of effective cybersecurity in AI systems. Beyond clinical oversight, governance must include a comprehensive approach to managing cybersecurity risks at every stage of the AI lifecycle [14][15]. Healthcare organizations should implement formal processes that clearly define roles and responsibilities, from development and deployment to ongoing monitoring. Key practices include maintaining a current inventory of AI tools, conducting threat modeling to uncover vulnerabilities, and monitoring system activity to quickly identify and address anomalies. By integrating these steps into existing cybersecurity frameworks, organizations ensure that security remains a priority throughout AI implementation.
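An AI inventory can start as a simple structured record per tool. The following is a minimal sketch, assuming a hypothetical `AIToolRecord` schema and an annual review cycle; the field names, tool names, and the 365-day default are all illustrative assumptions rather than a prescribed standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class AIToolRecord:
    """One entry in a hypothetical enterprise AI inventory."""
    name: str
    owner: str          # accountable clinical or IT owner
    handles_phi: bool   # whether the tool touches protected health information
    last_review: date   # date of the most recent governance review


def overdue_reviews(inventory, today, max_age_days=365):
    """Return tools whose last review is older than max_age_days, PHI tools first."""
    cutoff = today - timedelta(days=max_age_days)
    overdue = [tool for tool in inventory if tool.last_review < cutoff]
    # False sorts before True, so PHI-handling tools come first.
    return sorted(overdue, key=lambda t: (not t.handles_phi, t.last_review))


inventory = [
    AIToolRecord("sepsis-alert", "ICU informatics", True, date(2024, 1, 15)),
    AIToolRecord("scheduling-bot", "Operations", False, date(2023, 11, 1)),
    AIToolRecord("ambient-scribe", "Primary care", True, date(2025, 3, 1)),
]
for tool in overdue_reviews(inventory, today=date(2025, 6, 1)):
    print(tool.name, tool.last_review)
```

Sorting PHI-handling tools first is one possible prioritization; an organization might instead rank by clinical risk tier or vendor exposure.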

Managing AI Risk with Censinet RiskOps™

Censinet RiskOps™ simplifies AI risk management by consolidating policies, assessments, and oversight into a single platform. It streamlines risk assessments while retaining human oversight through customizable rules and review processes. Acting like an air traffic control system for AI, it directs critical findings to the appropriate stakeholders and presents data in an easy-to-understand risk dashboard. This approach allows organizations to scale their AI risk management efficiently, so that automation enhances human judgment rather than replacing it, while safeguarding patient safety and regulatory compliance.

Building Safe Clinical AI Systems

Creating clinical AI systems that prioritize patient safety starts with a focus on patient-centered design. These systems should aim to support clinical judgment, not replace it, ensuring that healthcare providers retain the ultimate authority in decision-making. Dennis Hancock, Head of Digital Health, Medicines, and AI at Pfizer, emphasizes this point:

"AI has truly transformative potential. AI is changing how drugs are developed and deployed. It can change how patients are diagnosed. And it can change the delivery of healthcare. The power and potential of AI to impact human health is why it's important to implement AI ethically and responsibly."

Core Principles for Safe AI Design

For AI systems to be safely implemented, transparency is key. Clinicians need to understand how AI-generated recommendations are formed, including the data sources and their limitations. This transparency fosters trust and equips providers to critically assess AI outputs. A strong example of this approach is the implementation of Abridge by Kaiser Permanente in March 2025. Abridge is a clinical documentation tool that summarizes medical conversations and drafts clinical notes. Importantly, doctors review and edit these notes before they are entered into electronic health records, ensuring that AI acts as a supportive tool rather than an independent decision-maker. Kaiser Permanente also collected feedback from thousands of doctors to confirm its effectiveness, including for non-English-speaking patients [16].

Another critical aspect of safe AI design is addressing bias and equity from the outset. Training data must accurately represent the patient population to avoid perpetuating disparities in healthcare. Additionally, robust safeguards against issues like overfitting, performance degradation, and security vulnerabilities should be embedded through meticulous software engineering and data quality assurance.

Once these foundational principles are in place, ongoing validation and monitoring become essential.

Model Testing and Lifecycle Management

Transparency in design must be paired with rigorous model testing. AI models require continuous validation throughout their lifecycle to ensure they remain effective and reliable. Data validation is vital to confirm that training datasets reflect real-world clinical scenarios. Testing protocols should assess performance across diverse patient groups before deployment. Version control practices are equally important, providing an audit trail that supports regulatory compliance and quality assurance.
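Assessing performance across patient groups can be as simple as computing the same metric per subgroup and comparing the results. The sketch below uses sensitivity (true positive rate) as the metric; the record layout, group labels, and the 0.05 disparity threshold are assumptions chosen for illustration.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity (true positive rate) per patient subgroup.

    Each record is a (group, true_label, predicted_label) tuple with 0/1 labels.
    Groups with no positive cases are omitted because sensitivity is undefined.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, truth, predicted in records:
        if truth == 1:
            positives[group] += 1
            if predicted == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


def groups_below_best(rates, max_gap=0.05):
    """List groups whose sensitivity trails the best group by more than max_gap."""
    if not rates:
        return []
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > max_gap)


# Toy validation set: the model misses half of the true cases in group B.
records = (
    [("A", 1, 1)] * 4
    + [("B", 1, 1)] * 2
    + [("B", 1, 0)] * 2
    + [("A", 0, 0)] * 3
)
rates = sensitivity_by_group(records)
print(rates, groups_below_best(rates))
```

In practice the same comparison would be repeated for other metrics (specificity, calibration) and with enough cases per group for the differences to be statistically meaningful.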

After deployment, organizations need monitoring mechanisms that detect problems such as bias or model drift quickly and resolve them promptly. In November 2025, the American Heart Association introduced a risk-based framework for evaluating and monitoring AI tools in cardiovascular and stroke care, aiming to bridge the gap between FDA clearance and comprehensive clinical evaluation [19].
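One common way to watch for data drift is the Population Stability Index (PSI), which compares the distribution of an input feature at validation time with its distribution in recent production data. PSI is not named in the sources cited here; it is offered as one widely used option. The sketch below is a plain-Python illustration, and the bin count, the smoothing constant `eps`, and the 0.25 alert level (a conventional rule of thumb) are assumptions.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each fraction at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = bin_fractions(expected), bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = list(range(100))              # feature values at validation time
recent = [v + 30 for v in baseline]      # same feature, shifted upward in production
psi = population_stability_index(baseline, recent)
print("drift alert" if psi > 0.25 else "stable")
```

A drift alert on inputs does not by itself show that model accuracy has fallen; it signals that the model is now seeing data unlike what it was validated on and should be re-evaluated.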

Integrating AI into Clinical Workflows

The final step in ensuring patient safety is seamlessly integrating AI tools into clinical workflows. Embedding AI into existing systems, such as electronic health records, can encourage adoption by clinicians. Training programs are also crucial, helping providers determine when to rely on AI recommendations and when to question them. As Henk van Houten, Former Chief Technology Officer at Royal Philips, explains:

"The data-crunching power of AI must go hand in hand with domain knowledge from human experts and established clinical science."

Organizations should start with pilot programs in controlled environments, gathering input from frontline staff before expanding to a larger rollout. This iterative process helps identify potential disruptions early, allowing for adjustments and the establishment of clear escalation paths when AI outputs are uncertain or contradictory. By taking these precautions, healthcare organizations can ensure that patient safety remains the top priority as AI technologies continue to evolve.
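An escalation path can be expressed as an explicit rule so that it is testable and auditable. The following is a minimal sketch, assuming the AI tool exposes a confidence score between 0 and 1 and that a separate check reports whether its output conflicts with the patient record; the names `Route` and `route_recommendation` and the 0.80 threshold are hypothetical.

```python
from enum import Enum


class Route(Enum):
    SHOW_TO_CLINICIAN = "show_to_clinician"
    ESCALATE = "escalate_for_review"


def route_recommendation(confidence, conflicts_with_record, min_confidence=0.80):
    """Escalate when the model is uncertain or contradicts the patient record."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence < min_confidence or conflicts_with_record:
        return Route.ESCALATE
    return Route.SHOW_TO_CLINICIAN


print(route_recommendation(0.95, conflicts_with_record=False))
print(route_recommendation(0.55, conflicts_with_record=False))
print(route_recommendation(0.95, conflicts_with_record=True))
```

Even the non-escalated route presents the output to a clinician rather than acting on it automatically, which keeps final authority with the provider.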


Cybersecurity Risk Assessment for Clinical AI

Clinical AI systems bring a unique set of cybersecurity challenges, going beyond the typical concerns of healthcare IT. As these technologies become more embedded in patient care processes, addressing AI-specific threats is essential to protect both sensitive data and patient safety. Notably, artificial intelligence in healthcare applications is ranked as a top concern in ECRI's 2025 report on health technology hazards [20]. Building on established AI governance practices, this section explores how to evaluate and address the distinct cybersecurity risks associated with clinical AI.

Cybersecurity Threats to Clinical AI

Clinical AI systems face several specialized threats that can jeopardize patient safety. One major risk is model manipulation, where attackers tamper with AI algorithms to produce flawed outputs. This could lead to misdiagnoses or inappropriate treatment plans. Another concern is inference attacks, where malicious actors repeatedly query AI model APIs to extract sensitive patient information.
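Because inference attacks depend on issuing many queries, limiting how often each client can call a model API is one partial mitigation. The sketch below shows a sliding-window limiter in plain Python; the class name, the per-client identifier, and the limits used are assumptions for illustration, and rate limiting alone would not stop a patient or slow attacker.

```python
from collections import defaultdict, deque


class QueryRateLimiter:
    """Sliding-window limit on how many model queries each client may make."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client_id -> timestamps of allowed queries

    def allow(self, client_id, now):
        """Return True if the query is allowed; `now` is a timestamp in seconds."""
        recent = self._history[client_id]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_queries:
            return False
        recent.append(now)
        return True


limiter = QueryRateLimiter(max_queries=3, window_seconds=60)
decisions = [limiter.allow("app-1", t) for t in (0, 1, 2, 3)]
print(decisions)
```

Denied requests are also worth logging, since a burst of rejections from one client is itself a useful anomaly signal for the monitoring described earlier.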

Adding to the complexity, the opaque nature of "black box" models often conceals breaches, making it harder to detect compromised systems or unreliable outputs. Over time, as data evolves, these systems can experience performance degradation, further complicating detection efforts. A particularly troubling issue is AI "hallucinations", where the system generates false or fabricated results with unwarranted confidence. This is especially dangerous in clinical settings, where decisions directly affect patient outcomes [1][2][5].

Cloud-hosted AI services and networked medical devices introduce additional vulnerabilities. These systems often handle protected health information (PHI) across various environments, increasing the number of potential entry points for cyberattacks.

Using NIST Frameworks for AI Cyber Risk Assessment

The NIST AI Risk Management Framework offers healthcare organizations a structured approach to identifying, addressing, and monitoring AI-related cybersecurity risks. The Health Sector Coordinating Council (HSCC) references this framework in its upcoming 2026 guidance for managing AI cybersecurity in healthcare. This guidance covers areas such as education, governance, cyber operations, secure-by-design principles, and third-party risk management [14].

By adopting this framework, healthcare organizations can formalize governance processes and implement AI-specific security measures. It is particularly useful for tackling known risks like model manipulation and system performance decline in medical devices. Additionally, it helps assess security, privacy, and bias risks when integrating third-party AI systems into clinical workflows. While the NIST framework provides high-level guidelines, platforms like Censinet RiskOps™ make it easier to apply these principles in day-to-day operations.

Censinet RiskOps™ for AI Cybersecurity Management

Censinet RiskOps™ acts as a centralized solution for managing AI-specific cybersecurity risks and benchmarking enterprise risk. Its Censinet AI™ feature streamlines vendor security assessments by summarizing evidence and identifying integration and fourth-party risks.

The platform ensures critical findings and AI-related tasks are routed to the appropriate stakeholders, such as AI governance committees, for review and approval. An intuitive AI risk dashboard consolidates real-time data, providing a comprehensive view of all AI-related policies, risks, and tasks across the organization.

What sets Censinet AI™ apart is its human-in-the-loop approach. Risk teams maintain control through customizable rules and review processes, ensuring that automation supports key decision-making rather than replacing it. This balance allows healthcare organizations to scale their risk management efforts efficiently while maintaining human oversight. With its focus on speed and precision, the platform helps healthcare leaders manage complex third-party and enterprise risks, align with industry standards, and prioritize patient safety and care quality.

Conclusion: Moving Forward with Clinical AI

Clinical AI has the potential to reshape patient care, but its safe and effective use hinges on strong governance that ensures accountability at every stage of its lifecycle [21].

To move forward, healthcare organizations must adopt thorough risk management strategies that balance innovation with safety. This requires a tailored approach that combines governance, performance monitoring, and ongoing evaluation [21]. Key challenges like data quality issues, algorithmic bias, model transparency, cybersecurity risks, and the need for human oversight must be addressed to ensure AI systems are both reliable and safe.

Censinet RiskOps™ offers a centralized solution for managing AI risks. It streamlines the process by routing critical findings to the right stakeholders through an easy-to-use risk dashboard, ensuring that human oversight remains a core part of decision-making.

The "human-in-the-loop" model reinforces the importance of human judgment by incorporating configurable rules and review processes. This approach enables organizations to scale their risk management efforts without compromising patient safety. Striking this balance is crucial for deploying clinical AI securely and effectively across healthcare systems.

As clinical AI advances, organizations that prioritize strong governance, adopt comprehensive risk management practices, and utilize specialized platforms will be better equipped to maximize AI's benefits while minimizing potential risks. By continuously improving these processes, healthcare leaders can confidently navigate the evolving landscape of clinical AI.

FAQs

How does bias in clinical AI algorithms affect patient safety?

Bias in clinical AI algorithms poses a serious threat to patient safety, potentially resulting in uneven treatment, misdiagnoses, or unsuitable medical advice. These problems often stem from AI systems being trained on datasets that are incomplete or fail to represent diverse populations, which can reinforce existing inequities in healthcare.

Addressing these challenges requires a proactive approach. Ensuring training datasets are diverse and representative is a critical first step. Additionally, AI systems must be evaluated for fairness and regularly monitored in real-world applications to catch and correct any issues. By emphasizing transparency and accountability in the design and deployment of these systems, we can work toward protecting patient safety and building trust in clinical AI solutions.

What are the key governance practices for ensuring the safe use of clinical AI?

To ensure the safe use of clinical AI, healthcare organizations must put strong governance policies in place that keep patient safety front and center. Regularly monitoring AI systems is key to spotting and addressing risks before they become serious problems. It's also important to make sure every AI-driven decision is traceable and aligns with the broader goals of healthcare, all while maintaining transparency in how decisions are made.

On top of that, oversight measures should be established to tackle bias, protect data security, and uphold accountability. These practices are essential for earning trust, staying compliant with healthcare regulations, and balancing progress with patient safety.

What steps can healthcare organizations take to manage cybersecurity risks in AI systems?

Healthcare organizations can tackle cybersecurity risks in AI systems by implementing a few essential strategies. One of the first steps is validating and filtering inputs to block malicious data that could compromise the system. Another key approach is applying the principle of least privilege, which restricts access to sensitive data, ensuring only those who need it can reach it. On top of that, regular security testing is critical for spotting potential vulnerabilities before they can be exploited.

To keep track of system behavior, maintaining detailed audit trails is invaluable. These records provide a clear log of activity, helping to identify and address any unusual behavior. Strengthening system instructions is another important measure, ensuring AI tools function as intended without leaving room for errors or misuse. Finally, using AI-specific security tools can help detect and neutralize suspicious activity or weaknesses in the system. By focusing on these areas, healthcare organizations can build stronger defenses for their AI-powered systems.
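An audit trail is more useful when tampering with it is detectable. One way to achieve that, shown here as a sketch rather than a recommended production design, is to chain entries together with hashes so that editing or reordering an earlier entry breaks every later link. The entry fields and function names are assumptions; timestamps are omitted to keep the example deterministic, and detecting deletion of the most recent entries would require storing the latest hash somewhere outside the log.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log, actor, action):
    """Append an audit entry whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"actor": actor, "action": action, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify_chain(log):
    """Return True if every entry matches its hash and links to the one before it."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: entry[k] for k in ("actor", "action", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, "dr.lee", "viewed AI risk score for encounter 1001")
append_entry(log, "svc-model", "generated draft note for encounter 1001")
print(verify_chain(log))
```

Altering the `action` text of the first entry after the fact would cause `verify_chain` to return `False`, because the stored hash no longer matches the entry's contents.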



Key Points:

What use cases are driving clinical AI adoption in healthcare?

- Clinical decision support that recommends treatments and predicts deterioration.

- Ambient listening tools that automate clinical documentation.

- AI-driven drug discovery accelerating R&D and trial design.

- Population health analytics and patient simulation tools for training.

- FDA-cleared medical devices that learn from real‑world data.

What risks arise from bias, inaccuracy, and model behavior?

- Algorithmic bias causes misdiagnoses and worsens disparities.

- Overfitting and diagnostic errors lead to unsafe recommendations.

- Opaque "black box" outputs harm transparency and trust.

- Model degradation reduces reliability over time.

- Alert fatigue disrupts clinical workflows.

What are the most serious privacy and cybersecurity threats?

- PHI exposure in cloud or vendor systems.

- Adversarial attacks altering imaging or diagnostic data.

- Unauthorized access and data breaches triggering HIPAA violations.

- Vendor supply‑chain weaknesses accounting for many healthcare breaches.

- Purpose creep when patient data is reused without consent.

What U.S. regulations and standards govern clinical AI?

- FDA pathways including 510(k), PMA, and De Novo classification.

- Total product lifecycle (TPLC) oversight for adaptive AI models.

- HIPAA requirements for privacy, storage, and secure access.

- NIST cybersecurity frameworks guiding secure AI deployment.

- Evolving state laws defining disclosure and clinician oversight.

How should healthcare organizations structure AI governance?

- Multidisciplinary AI governance committees spanning clinical, IT, legal, compliance, and risk.

- Clear role definitions for review, approval, and monitoring.

- AI inventory and performance tracking across the enterprise.

- Threat modeling to uncover vulnerabilities.

- Continuous monitoring for drift, bias, and failures.

How does Censinet RiskOps™ simplify AI risk management?

- Automates vendor questionnaires and summarizes evidence.

- Captures integration details including fourth‑party dependencies.

- Routes findings to governance committees for review.

- Delivers real‑time dashboards for AI policies, risks, and tasks.

- Supports human oversight with configurable rules and workflows.