Clinical Decision Support AI Vendors: Risk Management and Patient Safety Considerations

Post Summary

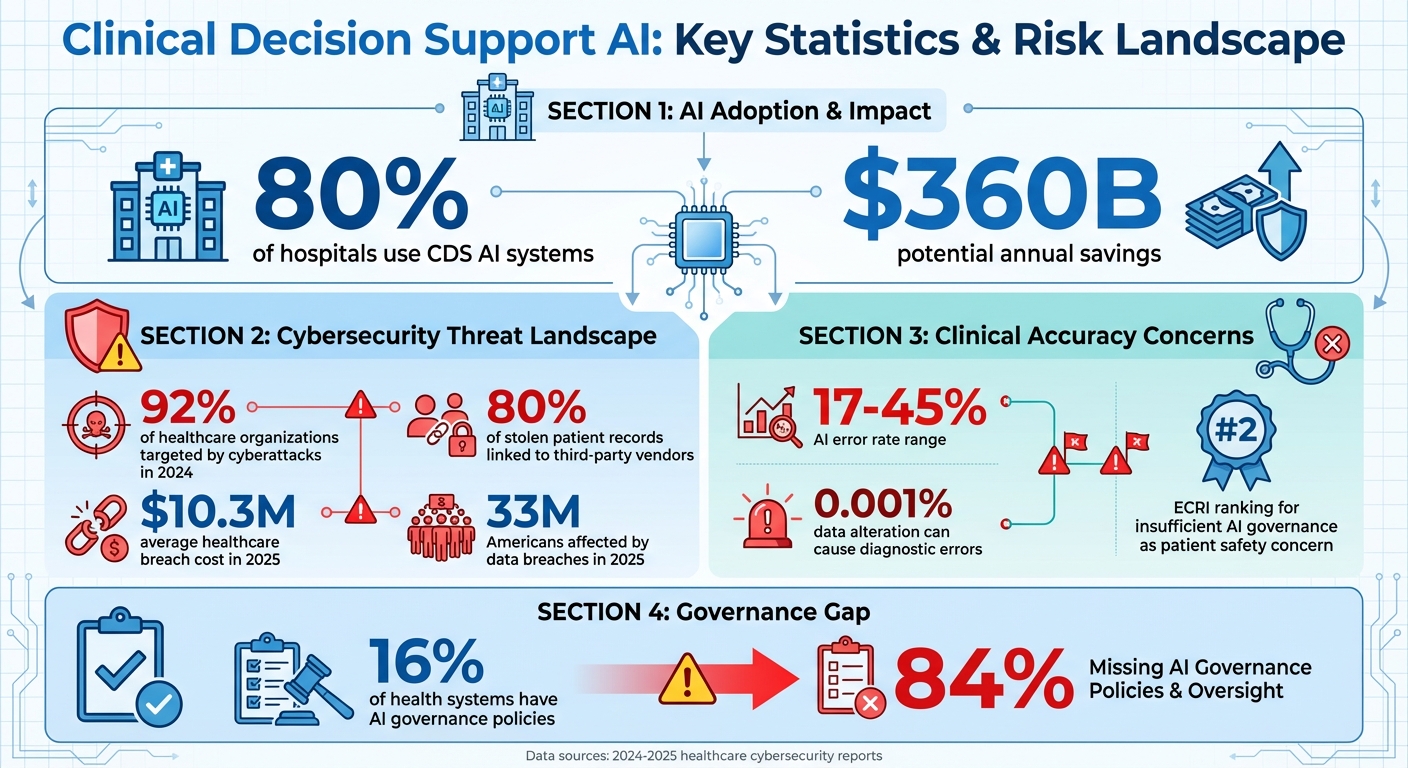

AI in healthcare is growing fast, but it comes with risks. Clinical Decision Support (CDS) AI systems are now used by 80% of hospitals, offering potential savings of $360 billion annually. However, these systems can lead to errors, cybersecurity issues, and patient safety concerns if not properly managed.

Key risks include:

- Data Privacy: Breaches of Protected Health Information (PHI) can disrupt operations and put patient data at risk.

- Opaque Algorithms: AI "black boxes" make it hard for clinicians to verify decisions, leading to trust and accuracy issues.

- Cybersecurity Weaknesses: Many vendors lack strong protections, exposing systems to attacks like data poisoning.

- Advanced Threats: Malicious actors can manipulate AI training data, leading to harmful recommendations.

How can these risks be managed?

- Vendor Evaluation: Review AI governance, security protocols, and compliance with laws like HIPAA and GDPR.

- Technical Reviews: Test for vulnerabilities like adversarial attacks and ensure robust encryption standards.

- Continuous Monitoring: Track risks, train staff, and maintain oversight to detect and address issues early.

- Collaboration: Involve IT, legal, and clinical teams to align AI systems with patient safety goals.

Tools like Censinet RiskOps™ can automate vendor risk assessments and centralize AI governance, helping healthcare organizations stay ahead of threats while ensuring patient safety.

[Figure: Clinical Decision Support AI Risks and Statistics in Healthcare, 2024-2025]

Primary Risks from CDS AI Vendors

When healthcare organizations collaborate with CDS AI vendors, they take on risks that go far beyond typical tech challenges. These risks can directly impact patient safety, compromise sensitive health data, and weaken the reliability of clinical decisions. Identifying these vulnerabilities is a critical step toward putting effective safeguards in place and ensuring patient safety.

Data Privacy and PHI Exposure

Protected Health Information (PHI) is at the core of every CDS AI system, which makes data breaches a serious concern. A health system’s cybersecurity is often only as strong as its weakest link, and that link can be a third-party vendor, an essential technology, or the broader digital supply chain [9]. If a vendor’s system is breached, cybercriminals could gain access to sensitive patient data, disrupt critical operations, and even put lives at risk. In an interconnected healthcare IT environment, a single breach can have widespread and devastating effects.

Clinical Decision-Making Vulnerabilities

AI models, especially those relying on large language models and deep learning, often operate as "black boxes", making their decision-making processes difficult to understand [8]. This lack of transparency can leave clinicians unable to explain the basis of AI-generated recommendations or validate their accuracy. Such opacity can erode patient trust and make it harder to trace and correct errors. In some instances, these systems may even produce fabricated or misleading information, leading to incorrect diagnoses, inappropriate treatments, or medication mistakes. These issues are compounded by algorithmic biases and potential malfunctions in automated systems, introducing entirely new categories of clinical errors [8].

Vendor Cybersecurity Weaknesses

Many CDS AI vendors fall short on the cybersecurity measures needed to protect data and maintain system integrity. Weak supply chain security and limited transparency about how models are trained leave major gaps in defense. The reliability of data and the accuracy of AI models are also at risk when training datasets and algorithms are not fully disclosed or rigorously validated [9]. Without clear insight into how vendors secure their systems, update their models, and respond to security breaches, healthcare organizations remain exposed to risks that can jeopardize both patient data and clinical outcomes.

Advanced Threats: Data Poisoning

CDS AI systems are also vulnerable to sophisticated threats like data poisoning. This occurs when malicious actors intentionally introduce corrupted or biased data into training datasets, causing AI models to learn flawed patterns and make dangerous recommendations [8]. The complexity of interactions between humans and these systems can make it difficult to identify unreliable outputs, allowing harmful errors to go unnoticed and persist within clinical workflows. These advanced threats pose a significant challenge to maintaining the safety and reliability of AI-driven healthcare solutions.

How to Assess Vendor Risks

For healthcare organizations, evaluating Clinical Decision Support (CDS) AI vendors is a critical step before granting them access to sensitive systems. The evaluation covers governance structures, technical security protocols, and compliance with healthcare regulations. Done well, it protects both patient data and patient safety, and it lays the groundwork for ongoing risk management.

Reviewing AI Governance and Compliance

Start by confirming that the vendor has a well-defined AI governance program. This should include clear accountability for decisions driven by AI. Look for evidence of policies tailored to AI, such as those governing pilot programs, approved technologies, bias mitigation, data validation, and regular audits [7]. These policies demonstrate a vendor’s commitment to responsible AI practices.

On the compliance side, ensure adherence to key regulatory frameworks like HIPAA, GDPR, and state-specific AI laws, such as California AB 489, AB 3030, or relevant rules in Illinois. These regulations are essential for maintaining both legal and ethical standards in AI deployment.

Once governance and compliance are reviewed, the next step is to assess technical vulnerabilities.

Performing Technical Security Reviews

A thorough technical review focuses on identifying AI-specific vulnerabilities. This includes risks like adversarial attacks, data poisoning, model drift, and prompt injection [10]. Vendors should test their AI models under simulated attack conditions to evaluate their resilience and clinical accuracy. Additionally, it’s important to verify data provenance and integrity controls to ensure that training data hasn’t been tampered with [10].
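One common integrity control for training data is to compare each dataset file against a cryptographic hash manifest recorded when the data was approved. The sketch below is a minimal Python illustration, assuming a flat directory of files and a hypothetical `{filename: sha256}` manifest delivered through a trusted channel; the function names are illustrative, not part of any vendor's tooling. A hash check only detects changes made after the manifest was created: data that was already poisoned when it was hashed will pass, so this complements data validation rather than replacing it.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_manifest(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Check files against a hypothetical {filename: expected_sha256} manifest.

    Returns a list of problems; an empty list means every declared file is
    present and unchanged, and no undeclared files were added.
    """
    problems = []
    for name, expected in manifest.items():
        path = data_dir / name
        if not path.is_file():
            problems.append(f"missing: {name}")
        elif sha256_of_file(path) != expected:
            problems.append(f"hash mismatch: {name}")
    # Files present in the directory but never declared are also suspicious.
    for path in sorted(data_dir.iterdir()):
        if path.is_file() and path.name not in manifest:
            problems.append(f"undeclared: {path.name}")
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data_dir = Path(tmp)
        (data_dir / "train.csv").write_text("age,dose_mg\n54,10\n")
        manifest = {"train.csv": sha256_of_file(data_dir / "train.csv")}
        print(verify_manifest(data_dir, manifest))  # []
        # Simulate tampering: one dose value is changed after approval.
        (data_dir / "train.csv").write_text("age,dose_mg\n54,100\n")
        print(verify_manifest(data_dir, manifest))  # ['hash mismatch: train.csv']
```

Reporting undeclared files as well as changed ones matters because poisoning can happen by adding records or files, not only by editing existing ones.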

Security standards are another crucial area. Confirm that vendors use cryptographic modules validated under FIPS 140-3 and have robust data security agreements in place, such as Information Technology Service Addenda [5]. Together, these measures strengthen the vendor's security posture.

Using Standardized Vendor Due Diligence

Once the governance and technical reviews are complete, standardized due diligence consolidates the findings into a complete view of vendor risk. Use established checklists and frameworks to evaluate governance across the solution's entire lifecycle, from initial concept through deployment and routine audits [5].

Pay special attention to the model selection phase, as strong governance here is crucial for implementing AI responsibly and achieving meaningful outcomes. A comprehensive pre-acquisition risk assessment, conducted in partnership with the vendor, can help flag potential issues early. Transparent processes and open collaboration set clear expectations for vendor performance and security measures before any contracts are signed [5]. This proactive approach minimizes surprises and ensures alignment between the organization and the vendor.
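The lifecycle view described above can be made concrete by keeping the due diligence checklist as data, grouped by phase, with some items marked as blockers that must be resolved before any contract is signed. This is a hypothetical Python sketch: the phase names follow the text (concept, model selection, pre-acquisition assessment, deployment, routine audits), but the `blocks_contract` flag and the sample questions are assumptions for illustration, not a published framework or any vendor's schema.

```python
from dataclasses import dataclass

# Hypothetical lifecycle phases, ordered as described in the text.
PHASES = ("concept", "model selection", "pre-acquisition", "deployment", "routine audit")


@dataclass
class ChecklistItem:
    phase: str
    question: str
    done: bool = False
    blocks_contract: bool = False  # must be resolved before any contract is signed

    def __post_init__(self) -> None:
        if self.phase not in PHASES:
            raise ValueError(f"unknown phase: {self.phase}")


def open_items_by_phase(items: list[ChecklistItem]) -> dict[str, list[str]]:
    """Group unresolved questions by phase, in lifecycle order (empty phases omitted)."""
    grouped: dict[str, list[str]] = {phase: [] for phase in PHASES}
    for item in items:
        if not item.done:
            grouped[item.phase].append(item.question)
    return {phase: qs for phase, qs in grouped.items() if qs}


def ready_to_sign(items: list[ChecklistItem]) -> bool:
    """True only when every contract-blocking item has been resolved."""
    return all(item.done for item in items if item.blocks_contract)


if __name__ == "__main__":
    checklist = [
        ChecklistItem("model selection", "Was the model validated on data like ours?", blocks_contract=True),
        ChecklistItem("pre-acquisition", "Is the joint risk assessment complete?", done=True, blocks_contract=True),
        ChecklistItem("routine audit", "Is an audit schedule defined in the contract?"),
    ]
    print(ready_to_sign(checklist))  # False
    print(open_items_by_phase(checklist))
```

Keeping the blockers explicit makes the "before any contracts are signed" expectation checkable rather than implicit.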

Risk Management with Censinet RiskOps™

Handling the risks tied to CDS AI vendors requires a platform that can keep up with the complexity of AI and the scale of third-party operations. With 80% of stolen patient records and 92% of healthcare organizations targeted by cyberattacks in 2024 [10], automating risk management is no longer optional - it's a necessity.

Censinet RiskOps™ steps up to meet these challenges, offering a platform tailored for healthcare cybersecurity and AI governance. By blending automation with human oversight, it empowers risk teams to expand their reach without sacrificing thoroughness or patient safety. Building on earlier risk assessments, Censinet RiskOps™ redefines how healthcare organizations handle risk through centralized, automated processes.

Automating Vendor Risk Assessments

Censinet Connect™ and Censinet AI™ streamline vendor risk assessments by automating time-consuming tasks. Vendors can complete security questionnaires in seconds, while the platform automatically compiles evidence, gathers integration details, identifies fourth-party risk exposures, and generates comprehensive risk summary reports.

This level of automation is especially critical for evaluating CDS AI vendors, where traditional manual methods often lag behind the rapid pace of technological advancements. By simplifying and accelerating assessments, healthcare organizations can address risks more efficiently. This is particularly important given that only 16% of health systems currently have governance policies in place to manage AI usage and data access across their operations [4].

Improving AI Governance and Oversight

Censinet AI acts as a centralized hub for managing AI-related risks and governance tasks. Key findings are routed to relevant stakeholders, such as AI governance committee members, ensuring timely reviews and decisions.

Risk teams maintain control by setting configurable rules that determine when automated assessments require human validation. A user-friendly AI risk dashboard consolidates real-time data, serving as a central repository for all AI-related policies, risks, and tasks. This approach ensures that the right people address the right issues at the right time, fostering accountability and continuous oversight. The platform also supports robust benchmarking, enabling organizations to monitor and evaluate risks effectively over time.
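The idea of configurable rules that decide when an automated assessment needs a human reviewer can be illustrated with a small rule function. This is a hypothetical Python sketch, not Censinet's configuration model or API: the fields (`risk_score`, `clinical_impact`, `confidence`) and the default thresholds are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class AssessmentResult:
    vendor: str
    risk_score: float      # hypothetical scale: 0.0 (low risk) to 1.0 (high risk)
    clinical_impact: bool  # does the tool influence diagnosis or treatment?
    confidence: float      # confidence of the automated assessment, 0.0 to 1.0


@dataclass
class ValidationRules:
    max_auto_risk: float = 0.4        # above this, a person must review
    min_auto_confidence: float = 0.8  # below this, a person must review
    always_review_clinical: bool = True


def route(result: AssessmentResult, rules: ValidationRules) -> tuple[str, list[str]]:
    """Decide whether an automated assessment needs human validation, and why."""
    reasons = []
    if result.risk_score > rules.max_auto_risk:
        reasons.append(f"risk score {result.risk_score:.2f} > {rules.max_auto_risk:.2f}")
    if result.confidence < rules.min_auto_confidence:
        reasons.append(f"confidence {result.confidence:.2f} < {rules.min_auto_confidence:.2f}")
    if rules.always_review_clinical and result.clinical_impact:
        reasons.append("tool influences clinical decisions")
    return ("human validation" if reasons else "automated", reasons)


if __name__ == "__main__":
    rules = ValidationRules()
    print(route(AssessmentResult("Scheduling vendor", 0.2, False, 0.95), rules))
    # ('automated', [])
    print(route(AssessmentResult("CDS vendor", 0.3, True, 0.9), rules))
    # ('human validation', ['tool influences clinical decisions'])
```

Returning the reasons alongside the decision keeps the routing auditable: a governance committee can see why an item was escalated, and tightening a threshold is a configuration change rather than a code change.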

Benchmarking and Tracking Risks

The platform’s command center includes visualization tools that help healthcare organizations identify, track, and address risks with greater precision. Through cybersecurity benchmarking, organizations can compare their vendor risk profiles against industry standards and peer groups, making it easier to spot vulnerabilities in their security strategies.
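Comparing a vendor against a peer group usually comes down to a percentile rank. The Python sketch below assumes hypothetical 0-100 security posture scores where higher means stronger, and uses the mid-rank convention (ties count as half); it illustrates the arithmetic only and is not Censinet's benchmarking method.

```python
from bisect import bisect_left, bisect_right


def percentile_rank(score: float, peer_scores: list[float]) -> float:
    """Percent of peer scores below `score`, counting ties as half (0 to 100)."""
    if not peer_scores:
        raise ValueError("peer_scores must not be empty")
    ordered = sorted(peer_scores)
    below = bisect_left(ordered, score)
    ties = bisect_right(ordered, score) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)


def flag_laggards(vendor_scores: dict[str, float], peer_scores: list[float],
                  cutoff: float = 25.0) -> list[str]:
    """Vendors whose posture score ranks below `cutoff` percent of the peer group."""
    return sorted(name for name, score in vendor_scores.items()
                  if percentile_rank(score, peer_scores) < cutoff)


if __name__ == "__main__":
    # Hypothetical posture scores (higher = stronger) for a peer group of vendors.
    peers = [55, 60, 62, 70, 75, 80, 85, 90]
    print(percentile_rank(72, peers))  # 50.0
    print(flag_laggards({"Vendor A": 72, "Vendor B": 58}, peers))  # ['Vendor B']
```

Re-running the same comparison each assessment cycle turns a single snapshot into the trend line the text describes.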

With healthcare breach costs averaging $10.3 million in 2025 and 33 million Americans affected by data breaches that same year [10], these tracking and benchmarking tools provide critical visibility into vendor risk trends. Organizations can monitor changes in vendor security postures over time, ensuring that CDS AI vendors remain compliant with healthcare regulations and security standards throughout their partnership. Continuous monitoring not only fortifies cybersecurity but also safeguards clinical decision-making, enhancing patient safety while meeting regulatory requirements. By building on earlier risk assessments, this integrated approach ensures sustained oversight across all vendor relationships.


Best Practices for Long-Term Risk Management

Ensuring patient safety and safeguarding cybersecurity when working with CDS AI vendors isn’t a one-and-done task. Third-party vendors remain an ongoing source of risk to healthcare systems and data. Beyond the initial evaluations, maintaining solid, long-term practices is key to ensuring both cybersecurity and clinical reliability over time. These efforts connect the dots between early assessments and proactive, sustained protection.

Continuous Monitoring and Staff Training

While initial technical reviews are critical, continuous monitoring provides the backbone for lasting security. This means staying vigilant for new threats by implementing network monitoring at vendor integration points, analyzing data flow for irregularities, and maintaining real-time oversight of security incidents[10]. Tracking issues such as algorithm malfunctions or unexpected outcomes can uncover patterns and help identify recurring problems like model drift or data misclassification[4].
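Model drift is often tracked by comparing the distribution of a model's inputs or output scores against a baseline captured at validation time. The sketch below computes the Population Stability Index (PSI), a common drift statistic, in plain Python. The bin edges, the sample data, and the 0.1 / 0.25 alert thresholds are illustrative assumptions; the thresholds are a widely used industry rule of thumb, not a clinical or regulatory standard.

```python
import math
from bisect import bisect_right


def bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Fraction of values in each bin; `edges` are sorted interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(baseline: list[float], current: list[float],
                               edges: list[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    psi = 0.0
    for expected, actual in zip(bin_shares(baseline, edges), bin_shares(current, edges)):
        expected, actual = max(expected, eps), max(actual, eps)  # avoid log(0) on empty bins
        psi += (actual - expected) * math.log(actual / expected)
    return psi


def drift_status(psi: float) -> str:
    # Rule-of-thumb cut-offs borrowed from credit-risk practice (an assumption here).
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift: investigate"
    return "significant shift: escalate"


if __name__ == "__main__":
    edges = [0.25, 0.5, 0.75]
    # Hypothetical model risk scores at validation time vs. this month.
    baseline = [0.1, 0.3, 0.6, 0.9] * 25
    current = [0.6] * 30 + [0.9] * 70
    psi = population_stability_index(baseline, current, edges)
    print(round(psi, 2), drift_status(psi))  # a PSI far above 0.25, "significant shift: escalate"
```

The `eps` floor keeps empty bins from producing log(0), but it also means a bin that empties completely contributes a large term, so PSI values are best read together with the per-bin shares.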

Real-time threat detection is especially important because even minor adversarial attacks - altering just 0.001% of input data - can lead to serious diagnostic errors or medication dosing mistakes[10]. To combat this, both clinical and IT staff need ongoing training to stay informed about emerging AI threats. Educating teams ensures they can spot warning signs early and act quickly to mitigate risks.

Cross-Functional Collaboration

Strong risk management thrives on breaking down silos between IT, compliance, and clinical teams. Collaborative efforts ensure that AI models are shaped with insights from a wide range of experts, including clinicians, industry professionals, academics, and regulators. This approach leads to safer recommendations and greater acceptance in clinical settings[2].

"Reputable AI clinical decision support systems aren't built in isolation, but rather, through the expertise and lived experience of clinicians, pharmacists, nurses, and other interdisciplinary teams, with clinician-in-the-loop feedback and oversight. This collaboration helps ensure algorithms, recommendations, and user workflows are clinically relevant, safe, and practical for real-world care delivery."

- Katherine Eisenberg, MD, PhD, FAAFP, Senior Medical Director, EBSCO Clinical Decisions[2]

Legal teams, IT professionals, and clinical staff should work together when evaluating vendor AI solutions, focusing on care quality, privacy, and potential liabilities[7]. This unified strategy ensures that technical safeguards align with clinical needs and meet regulatory standards.

Following Industry Standards

Adhering to established frameworks offers a reliable roadmap for long-term governance. The Coalition for Health AI (CHAI), for example, provides national guidance tailored to responsible AI use in healthcare[2]. Staying compliant with healthcare regulations and encryption standards not only fulfills legal obligations but also reinforces patient trust[2][3]. As technology evolves and new threats emerge, it’s essential to regularly review vendor risk management practices against updated industry benchmarks. This helps identify gaps and pinpoint areas for improvement.

Conclusion

Clinical decision support (CDS) AI vendors present serious challenges to patient safety and data security that demand immediate action. Data privacy risks, cybersecurity flaws, model drift, and bias in clinical recommendations all call for a structured, proactive approach to managing these risks. With reported error rates ranging from 17% to 45% [2], the potential for clinical mistakes is too high to ignore.

Addressing these risks requires an ongoing commitment to monitoring, collaboration across teams, and adherence to established frameworks. ECRI, a patient safety organization, even ranked "insufficient governance of artificial intelligence in healthcare" as the second most critical patient safety concern [2], underscoring the need for healthcare organizations to strengthen their oversight of AI systems.

A strong vendor risk management strategy is crucial. This includes thorough evaluations of AI governance, in-depth security reviews, and continuous updates to keep pace with evolving technology. It's also clear that human oversight is irreplaceable - AI can support healthcare decisions, but it cannot substitute for the expertise and judgment of medical professionals [1][6]. Organizations that integrate AI oversight into their culture, establish clear risk thresholds, and adapt their processes over time will be better equipped to safeguard patients while benefiting from AI-driven clinical tools.

Censinet RiskOps™ simplifies risk management by automating vendor assessments, centralizing AI governance, and providing real-time visibility into threats. Powered by Censinet AI™, the platform accelerates assessments without compromising the human oversight necessary for patient safety. Its AI risk dashboard acts as a central hub for managing policies, risks, and tasks, ensuring that critical issues are addressed by the right teams at the right time. This balance of automation and human involvement directly tackles the complex risks discussed here.

Moving forward, healthcare organizations need to stay vigilant, foster collaboration, and invest in the right technology to support comprehensive risk management. By addressing privacy concerns, technical vulnerabilities, and governance gaps, they can create a resilient framework for CDS AI adoption. Choosing vendor solutions that emphasize validation, transparency, and robust privacy protections will help organizations navigate the complexities of AI while keeping patient safety at the forefront.

FAQs

What are the key risks of using AI-powered clinical decision support systems in healthcare?

AI-powered clinical decision support (CDS) systems bring a host of challenges that healthcare organizations need to navigate with care. One major concern is the risk of inaccurate recommendations. These could range from incorrect diagnoses to dosing mistakes, both of which could have serious consequences for patient safety. Another pressing issue is bias in AI algorithms, which has the potential to create unequal treatment outcomes for different patient groups. On top of that, the lack of transparency in how AI systems make decisions can erode trust among both clinicians and patients.

There are also practical risks, like workflow disruptions when systems aren't seamlessly integrated into existing processes. Moreover, automation bias - where clinicians place too much trust in AI recommendations without sufficient scrutiny - poses a significant danger. To mitigate these risks, healthcare organizations must carefully assess vendors' governance structures, ensure compliance with regulations, and prioritize robust data security protocols.

What steps can healthcare organizations take to ensure AI-driven clinical decisions are accurate and transparent?

To ensure AI-driven clinical decisions are both accurate and transparent, healthcare organizations should implement strong validation processes. These processes help verify AI outputs and ensure they align with established clinical evidence. Regular performance monitoring and feedback from clinicians play a key role in maintaining reliability. Equally important is providing clear, straightforward explanations for AI recommendations, enabling clinicians to make well-informed decisions while preserving patient trust.

Key steps to consider:

- Validate AI systems against established, peer-reviewed clinical standards to ensure accuracy.

- Continuously monitor AI performance to detect and resolve potential issues promptly.

- Provide clear explanations for AI-generated recommendations to aid clinician understanding and decision-making.

By focusing on these practices, healthcare organizations can enhance patient safety and build trust in AI-powered clinical tools.
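The validation step in the list above can start with comparing the tool's flags against clinician-adjudicated reference labels on a local test set. The Python sketch below computes sensitivity, specificity, and positive predictive value (PPV) for a binary alert; the default acceptance thresholds in `meets_thresholds` are hypothetical placeholders that a clinical governance committee would need to set.

```python
import math
from dataclasses import dataclass


@dataclass
class ValidationReport:
    sensitivity: float  # share of true cases the tool flagged
    specificity: float  # share of non-cases the tool correctly left unflagged
    ppv: float          # share of the tool's flags that were correct


def validate(predicted: list[bool], reference: list[bool]) -> ValidationReport:
    """Compare binary CDS flags with reference (e.g., clinician-adjudicated) labels."""
    if not predicted or len(predicted) != len(reference):
        raise ValueError("need two non-empty lists of equal length")
    pairs = list(zip(predicted, reference))
    tp = sum(1 for p, r in pairs if p and r)
    tn = sum(1 for p, r in pairs if not p and not r)
    fp = sum(1 for p, r in pairs if p and not r)
    fn = sum(1 for p, r in pairs if not p and r)

    def ratio(num: int, den: int) -> float:
        return num / den if den else math.nan  # undefined when the denominator is 0

    return ValidationReport(ratio(tp, tp + fn), ratio(tn, tn + fp), ratio(tp, tp + fp))


def meets_thresholds(report: ValidationReport, min_sensitivity: float = 0.9,
                     min_specificity: float = 0.8) -> bool:
    # NaN never compares as >=, so an undefined metric fails the check.
    return report.sensitivity >= min_sensitivity and report.specificity >= min_specificity


if __name__ == "__main__":
    predicted = [True, True, False, False, True, False, False]
    reference = [True, False, False, True, True, False, False]
    report = validate(predicted, reference)
    print(report)
    print(meets_thresholds(report))  # False
```

Running the same function on each monitoring period's adjudicated cases also supports the continuous performance monitoring step.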

How can healthcare organizations evaluate and manage risks when working with CDS AI vendors?

To handle risks tied to CDS AI vendors, start by examining their governance practices. Look into how they validate their AI systems and tackle potential biases. Make sure their solutions meet healthcare regulations and emphasize patient safety. Perform detailed risk assessments before rolling out their systems and keep a close eye on the AI's performance over time.

Using strong incident reporting tools is crucial, as is maintaining transparency in how AI makes decisions. Confirm that the vendor delivers content backed by solid evidence. It's also a good idea to regularly review and update safety protocols to keep up with changing standards and address risks like data breaches or system glitches. Ultimately, a mix of collaboration and thorough vetting is essential to ensure both reliable technology and the safety of patients.