Does Your DR Plan Account for Region-Wide Cloud Failures? The Question Every Healthcare Board Should Ask

Post Summary

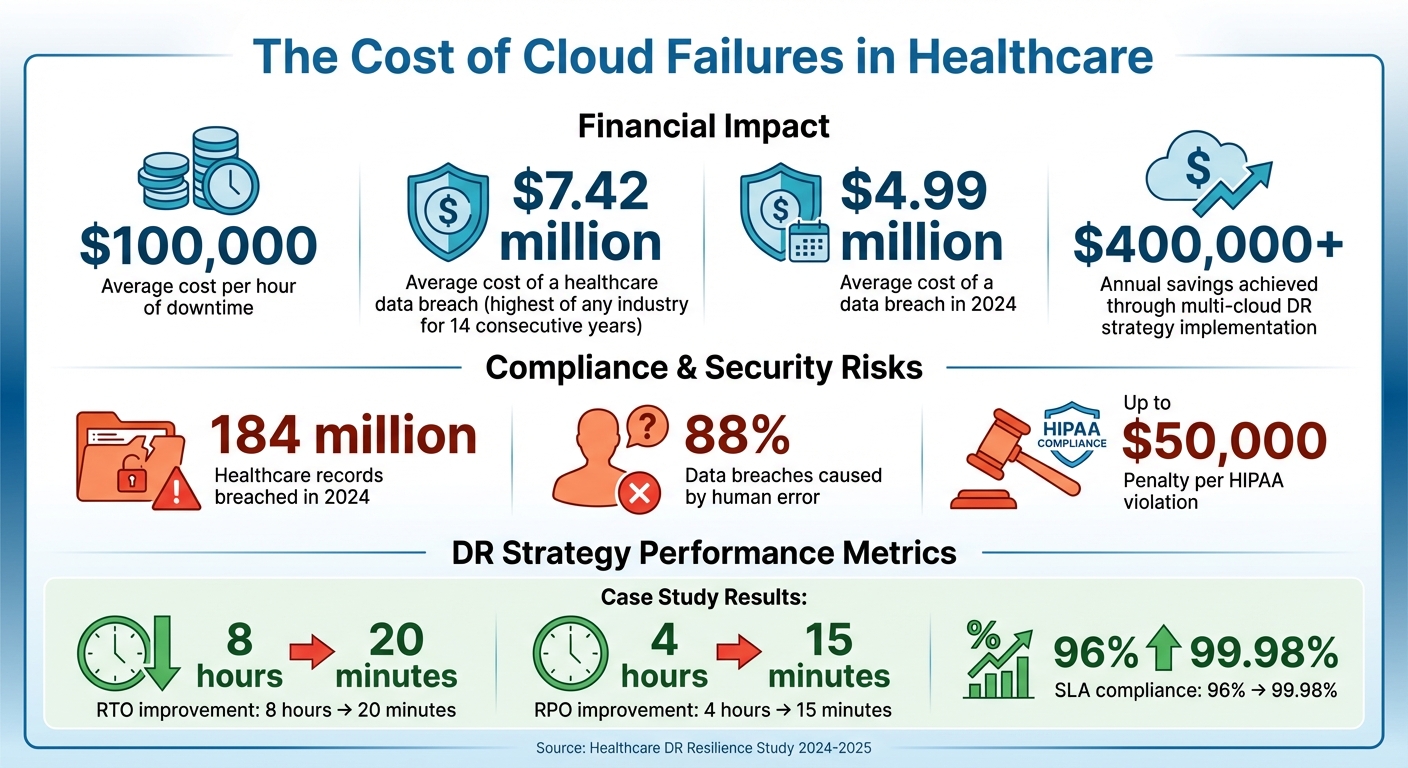

Healthcare organizations face a growing risk: region-wide cloud failures. These outages can disrupt critical systems like EHRs, telehealth, and medical imaging, putting patient safety and compliance at risk. With healthcare systems losing an average of $100,000 per hour of downtime, boards must ensure their disaster recovery (DR) plans are ready for such scenarios.

Key Takeaways:

- Major Risks: Patient care interruptions, HIPAA violations, and financial losses.

- Common Gaps: Overreliance on single cloud regions, insufficient testing, and unclear vendor dependencies.

- Action Steps for Boards:

- Assess cloud architecture for multi-region resilience.

- Define clear Recovery Time Objectives (RTOs) and Recovery Point Objectives (RPOs).

- Test DR plans regularly using tools like chaos engineering.

- Review vendor agreements for failover capabilities.

A robust DR plan is not optional - it’s a necessity to safeguard operations, protect patients, and ensure compliance. The article explores how boards can identify vulnerabilities and build resilience against region-wide cloud outages.

Healthcare Cloud Disaster Recovery: Key Statistics and Financial Impact

Why Region-Wide Cloud Failures Require Board Attention

Let’s dive into why these vulnerabilities demand the attention of healthcare boards.

How Cloud Outages Create System-Wide Vulnerabilities

Healthcare systems today rely heavily on interconnected digital platforms. This reliance means that a region-wide cloud failure can disrupt multiple critical functions simultaneously - like EHRs, imaging systems, telehealth services, and even research platforms[4]. Essentially, such an outage exposes weak links across facilities, turning single points of failure into widespread disruptions.

Patient Safety, Compliance, and Financial Consequences

Even short periods of downtime can have a serious impact on patient care. When clinicians lose access to vital information like patient histories, medication records, or lab results, they’re left making decisions based on incomplete data. This can delay treatments, lead to errors in care, and result in improper documentation and billing issues. These problems aren’t just operational headaches - they bring compliance risks as well. Healthcare organizations are legally responsible for safeguarding Protected Health Information (PHI). Extended outages can breach HIPAA and HITECH regulations, potentially leading to hefty fines and other regulatory consequences[6]. These scenarios highlight significant gaps in many disaster recovery (DR) plans.

Where Current DR Plans Fail

The reality is that many DR plans in healthcare weren’t built to handle the scale of region-wide cloud outages. While cloud outages are a known risk[5], they’re often dismissed as rare edge cases. A common issue is the overreliance on single-region cloud setups, where both primary and backup systems are located in the same geographic area. Additionally, there’s often insufficient testing for scenarios involving full regional failures. These shortcomings can leave boards unaware of the real vulnerabilities until a major outage forces them into the spotlight.

Assessing Your Disaster Recovery Plan for Gaps

Is your disaster recovery (DR) plan prepared to handle region-wide cloud failures? Many healthcare boards assume their plans are solid - until a major outage reveals critical oversights. To avoid being caught off guard, it’s crucial to ask the right questions beforehand. These questions are the first step in identifying potential vulnerabilities in your DR strategy.

Questions to Evaluate DR Readiness

Start by examining your cloud architecture. Are you relying on a single cloud provider or region? This is especially risky if you depend on regions with a history of outages, such as AWS US-East-1 [5]. Next, review your Recovery Time Objectives (RTO) and Recovery Point Objectives (RPO). Have you clearly defined your RTOs and RPOs to ensure your DR plan aligns with acceptable downtime and data loss thresholds? [2][7]. Lastly, consider your third-party vendors: Do you know which cloud providers they depend on, and how their services might be affected by regional failures?

Common Weaknesses in Healthcare DR Plans

One of the most significant gaps in healthcare DR plans is overreliance on a single cloud provider or region - a major vulnerability [5]. Simply being in the cloud doesn’t guarantee resilience; resilience must be intentionally designed [8]. Another frequent issue is insufficient redundancies for third-party vendors, such as payment processors or SaaS providers, that may rely on the same cloud infrastructure as your organization. Without understanding these dependencies, your DR plan could be undermined by external failures.

Understanding Vendor and Cloud Dependencies

To accurately assess risk, healthcare organizations need a clear view of their cloud and vendor dependencies. It’s not enough to maintain a vendor list - you need to know which cloud regions your vendors operate in, their failover capabilities, and how outages could disrupt your operations. Conducting a comprehensive risk assessment is the foundation of any effective DR plan, as it identifies threats, vulnerabilities, and their potential impact [2].

Boards must prioritize mapping these dependencies to understand how a regional cloud failure could ripple through their systems [9]. Tools like Censinet RiskOps can centralize risk tracking, offering a unified view of third-party and enterprise risks, including cloud dependencies. This visibility is crucial because you can’t manage risks you don’t see. With the average cost of a healthcare data breach reaching $7.42 million - the highest of any industry for 14 consecutive years - the financial consequences of inadequate DR planning are staggering [7]. Centralized risk tracking is a vital component of board-level oversight to ensure resilience.

Creating a Region-Resilient Cloud DR Plan

Building a strong disaster recovery (DR) strategy for your cloud infrastructure means addressing vulnerabilities and ensuring resilience. This involves setting up technical safeguards, managing risks in vendor relationships, and establishing clear governance. A well-crafted region-resilient DR plan layers protections to work seamlessly together. Let’s explore key strategies to strengthen your approach.

Multi-Region and Multi-Provider Strategies

Avoid single points of failure by implementing multi-region architectures that keep operations running even if an entire region goes offline. DR strategies generally range from Backup & Restore (lowest cost, highest RTO/RPO) to Multi-Site Active/Active (highest cost, lowest RTO/RPO) [10]. For regional disasters, approaches like Pilot Light, Warm Standby, or Multi-Site Active/Active are more effective [10].

For instance, Multi-Site Active/Active configurations allow workloads to operate simultaneously across regions, ensuring minimal downtime for critical systems like electronic health records [10]. Using continuous, asynchronous data replication helps reduce data loss, achieving low RPOs [10]. However, not all workloads require the same level of redundancy. A hybrid approach, tailored to the criticality of each system, can balance cost and resilience effectively.

Incorporating Vendor and Cyber Risk Management into DR

Your DR strategy shouldn’t stop at internal systems - it must also account for third-party vendors. When working with vendors, include specific DR requirements in contracts, such as uptime guarantees, failover procedures, and details about their cloud dependencies. During risk assessments, ask vendors to disclose the cloud regions they operate in and their backup strategies. Regularly monitor and update this information to stay ahead of potential risks.

By integrating vendor DR capabilities into your overall risk management framework, you can avoid hidden vulnerabilities. Vendors may face outages or change their cloud providers, so periodic reviews of their DR preparedness are essential to ensure they remain reliable partners.

Establishing Governance and Accountability

Even the most robust DR plan can falter without clear roles and accountability. Assign specific responsibilities for DR procedures, communication protocols, and escalation paths to ensure everyone knows their role during an incident [11]. Categorize workloads into tiers - like Mission Critical and Business Critical - based on their business impact, and set RTO and RPO targets accordingly. These targets should be regularly reviewed with stakeholders to align with evolving business needs [11].

Activating a DR plan requires clear criteria and executive authorization to avoid delays during emergencies [11]. Boards should also track key metrics, such as failover time, the percentage of systems with multi-region redundancy, and the frequency of DR testing. By tying these metrics to business goals, disaster recovery becomes a governance priority rather than just an IT concern [11].

sbb-itb-535baee

Testing and Improving DR Plans Over Time

A disaster recovery (DR) plan that’s never put to the test is just a theory. Testing brings your DR plan to life, ensuring it can handle real-world scenarios. This is especially important when addressing vulnerabilities exposed during large-scale cloud outages. Unfortunately, many healthcare organizations only test their DR plans once a year - or even less often - leaving critical gaps unaddressed [13]. Let’s explore effective ways to test and strengthen these plans.

DR Testing Best Practices

Annual cross-region failover tests are a must. These tests should cover your entire DR runbook, helping your team rehearse roles and practice seamless system transitions [11]. To simulate real-world conditions, use chaos engineering tools like Azure Chaos Studio or ChaosMonkey to inject controlled failures and observe how systems respond in real time [11][15]. For even better results, perform fault injection and chaos testing every 2 to 4 weeks. This regular testing helps uncover vulnerabilities before they escalate into crises [2].

It’s also important to involve clinical staff in downtime drills, ensuring that applications work as expected from the end-user’s perspective [11]. During these exercises, set up health checks to monitor application performance, system stability, and data accuracy [11]. Without regular testing, a DR plan remains unproven and unreliable, as industry standards often emphasize [11].

Using Test Results to Improve Resilience

Testing is only half the battle - what you do with the results makes all the difference. Each test highlights issues that need fixing. Document failures, bottlenecks, and unexpected challenges, then use this information to update your DR plan, vendor agreements, and risk management strategies [16][14]. For example, if a vendor’s failover process takes longer than promised, you might renegotiate service-level agreements (SLAs) or add redundancy. Similarly, if data integrity checks fail, adjust your replication methods and recovery point objectives (RPOs).

Keep your DR runbooks versioned in tools like Git, and make sure they’re available in multiple formats, including offline copies for use during outages [11]. To make resilience testing a continuous process, incorporate DR validation into your CI/CD pipelines with dedicated stages like dr-test and validate-dr [15]. This approach has proven transformative for organizations. Take the example of a healthcare SaaS platform serving over 1,000 clinics across the U.S. and Canada: after a major AWS us-east-1 outage in June 2025, they implemented a multi-cloud DR strategy that slashed their recovery time objective (RTO) from 8 hours to just 20 minutes and their RPO from 4 hours to 15 minutes. These changes boosted SLA compliance from 96% to 99.98% and saved over $400,000 annually [15].

How AI Improves DR Oversight

Artificial intelligence (AI) is a game-changer for monitoring and improving DR performance. AI tools can track DR metrics across regions and vendors, continuously assess risks, and flag configuration changes that could disrupt failover. By analyzing patterns in test results, AI helps pinpoint recurring issues and predicts potential failure points before they occur, reinforcing the vendor risk management strategies discussed earlier.

For governance, AI simplifies reporting by compiling key DR metrics - like failover times, redundancy coverage, and testing frequency - into easy-to-read dashboards. Tools like Censinet AI make it possible for healthcare boards to stay informed about their organization’s DR readiness without needing deep technical expertise. These dashboards translate complex infrastructure data into straightforward risk indicators, enabling executives to make smarter decisions about resources and vendor relationships. This ensures DR planning remains a strategic priority rather than just another checkbox for IT compliance.

Conclusion: Making DR Resilience a Governance Priority

Key Takeaways for Healthcare Boards

Region-wide cloud failures are not just theoretical - they’re a real and growing threat that healthcare boards must address. Relying solely on cloud providers for disaster recovery (DR) is not enough. DR plans need to assume that region-wide outages will happen and must account for every dependency, from SaaS vendors to infrastructure providers. The stakes are high: healthcare organizations lose an average of $100,000 for every hour of downtime, and in 2024, the average cost of a data breach hit $4.99 million [1]. Beyond financial losses, patient safety and regulatory compliance demand DR strategies that span multiple regions and providers.

Building an effective DR strategy isn’t a one-time task - it’s an ongoing process. It requires regular testing and updates [2]. A solid approach includes adopting a DR maturity model with practices like fault injection and chaos testing every two to four weeks, along with annual cross-region failover exercises [2]. The risks are clear: in 2024, 184 million healthcare records were breached [1], and HIPAA violations can result in penalties of up to $50,000 per offense [1]. Proposed updates to HIPAA now emphasize encryption, multifactor authentication, asset inventory tracking, and vulnerability management, making a robust DR strategy essential for compliance [1]. These realities demand immediate action at the board level.

Next Steps for Board Oversight

Disaster recovery resilience needs to be elevated as a strategic priority in governance. Start by conducting a thorough risk assessment and business impact analysis to identify vulnerabilities. Establish clear Recovery Time Objectives (RTOs) and Recovery Point Objectives (RPOs) for critical systems, and continuously monitor performance against these benchmarks [2][3]. Use the 4 C’s - Communication, Coordination, Continuity, and Collaboration - as guiding principles for governance [12].

Make quarterly DR readiness reviews a standard practice. These reviews should cover testing results, vendor dependencies, and failover performance. AI-powered tools can help by translating complex infrastructure data into actionable insights, giving boards a clearer picture of risks. Hold vendors accountable by renegotiating SLAs if they fail to meet expectations. Since human error is responsible for 88% of data breaches [1], prioritize staff training and downtime drills to ensure operational readiness.

FAQs

How can healthcare organizations prepare their disaster recovery (DR) plans for region-wide cloud outages?

Healthcare organizations can better prepare for region-wide cloud outages by adopting multi-region and multi-cloud strategies. These approaches build redundancy into their systems, making them more resilient to disruptions. Regular disaster recovery drills are equally important - they help uncover potential weak spots and confirm that recovery processes are effective.

Automating failover systems is another key step, as it significantly speeds up recovery when issues arise. Additionally, testing for regional isolation and working with multiple cloud service providers can reduce the risks tied to depending on a single vendor. These steps are essential for ensuring smooth operations and safeguarding sensitive patient data, even during unexpected cloud failures.

What are the key vulnerabilities in healthcare disaster recovery plans related to cloud reliance?

Many healthcare disaster recovery (DR) plans struggle to effectively address region-wide cloud failures. The typical pitfalls? Insufficient backup systems, inadequate redundancy measures, and a lack of rigorous testing for cloud outage scenarios. Overreliance on a single cloud provider further amplifies these risks, especially when regional disasters could disrupt cloud services entirely.

To build stronger DR plans, healthcare organizations should focus on diversifying backup solutions, routinely test for potential outages, and design contingency plans that consider geographic vulnerabilities. Taking these steps can help ensure critical data stays secure and patient care remains uninterrupted during cloud-related disruptions.

Why is it important for healthcare organizations to regularly test their disaster recovery (DR) plans?

Regularly testing disaster recovery (DR) plans is a must for healthcare organizations. It helps identify hidden vulnerabilities, ensures recovery steps work as intended, and confirms that critical systems can handle unexpected disruptions. Skipping these tests could leave gaps unnoticed until an actual emergency arises - putting both patient care and data security at serious risk.

By simulating real-life scenarios, healthcare providers can evaluate their DR strategies, train staff on how to respond effectively, and adjust plans to address new and emerging risks. This forward-thinking approach ensures smooth operations and protects sensitive patient data, even during widespread cloud outages or other significant incidents.

Related Blog Posts

- When Multi-AZ Isn't Enough: What the AWS US-EAST-1 Failure Taught Us About True Resilience

- Patient Care Can't Wait for Cloud Recovery: Healthcare's Business Continuity Crisis Exposed

- The AWS Outage Exposed Cloud Vulnerabilities - What It Means for Healthcare Business Continuity

- The 30-40% Problem: Why Healthcare's Over-Reliance on US-EAST-1 Is a Patient Safety Risk