Fail-Safe AI: Engineering Safety into Every Layer of Intelligent Systems

Post Summary

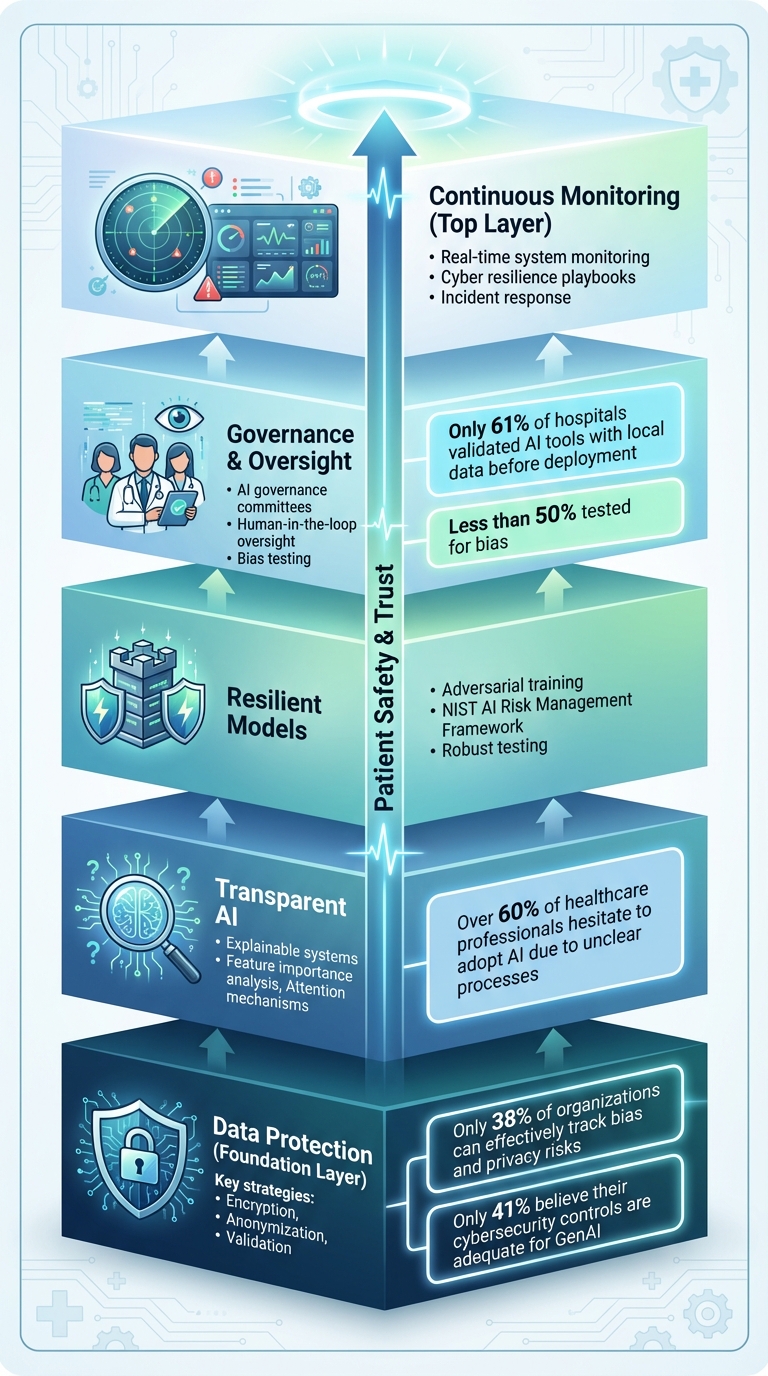

Artificial intelligence (AI) in healthcare is transforming patient care, but it comes with risks that can directly affect safety, privacy, and trust. From misdiagnoses to cybersecurity threats, poorly designed systems may harm patients or expose sensitive data. Creating safer AI involves securing data, making models transparent, and establishing strong oversight. Here's a quick summary of the key strategies:

- Data Protection: Use encryption, anonymization, and validation to safeguard patient information.

- Transparent AI: Build explainable systems so clinicians understand how decisions are made.

- Resilient Models: Train AI to resist attacks and errors for reliable performance.

- Governance & Oversight: Define clear roles, test for biases, and involve human judgment.

- Continuous Monitoring: Track system behavior in real time to detect and address issues early.

Five-Layer Framework for Fail-Safe AI Systems in Healthcare

Protecting the Data Layer from Breaches and Attacks

The data layer is the backbone of healthcare AI. If it's compromised, everything downstream - diagnostics, treatment recommendations, and patient care - becomes unreliable. AI's integration into healthcare raises the stakes for protecting patient health information (PHI) and other sensitive data [4][5]. The challenge is amplified by the sheer volume of sensitive information AI systems process, which creates numerous opportunities for breaches.

Unfortunately, current security measures often fall short of addressing AI-specific risks. Only 38% of organizations strongly agree they can effectively track bias and privacy risks, and just 41% believe their cybersecurity controls are adequate for GenAI applications [4]. This gap leaves healthcare systems vulnerable, especially at critical points where patient data enters the pipeline.

AI's complexity makes it even harder to identify vulnerabilities. Breaches or data manipulation may go unnoticed until they’ve already impacted patient care decisions.

To secure the data layer, healthcare organizations need a comprehensive strategy that prioritizes data quality, privacy, and accountability. This means implementing validation systems to catch corrupted or malicious data before it reaches AI models, deploying encryption and anonymization techniques to safeguard patient privacy, and maintaining immutable audit trails to track every interaction with sensitive information.

Without these safeguards, even the most advanced AI systems can pose risks instead of delivering value. The next sections explore how to strengthen data validation, encryption, and auditability.

Data Validation and Quality Checks

AI models are only as good as the data they process. Flawed or corrupted data can lead to misdiagnoses, incorrect treatments, and serious risks to patient safety. Data validation acts as a frontline defense, identifying issues before they influence clinical decisions.

Automated quality checks should continuously monitor for anomalies, such as missing values, out-of-range measurements, or inconsistent formats. For instance, if a patient’s temperature is recorded as 986°F instead of 98.6°F, the system should immediately flag this for review.
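The checks described above can be expressed as a small rule table. The following Python sketch is one way to do it. The field names (`temperature_f`, `heart_rate_bpm`, `systolic_bp_mmhg`) and the plausibility limits are hypothetical, and real limits would need clinical sign-off.

```python
from dataclasses import dataclass

# Hypothetical plausibility limits; real limits would be set with clinical input.
PLAUSIBLE_RANGES = {
    "temperature_f": (90.0, 110.0),
    "heart_rate_bpm": (20.0, 250.0),
    "systolic_bp_mmhg": (50.0, 260.0),
}


@dataclass
class ValidationIssue:
    field: str
    value: object
    reason: str


def validate_vitals(record: dict) -> list:
    """Check one record for missing values, wrong formats, and out-of-range measurements.

    An empty result means the record passed; anything else should be held for review.
    """
    issues = []
    for name, (low, high) in PLAUSIBLE_RANGES.items():
        value = record.get(name)
        if value is None:
            issues.append(ValidationIssue(name, value, "missing value"))
        elif isinstance(value, bool) or not isinstance(value, (int, float)):
            # Strings such as "98.6" indicate an upstream format problem.
            issues.append(ValidationIssue(name, value, "non-numeric format"))
        elif not low <= value <= high:
            issues.append(ValidationIssue(name, value, f"outside plausible range {low}-{high}"))
    return issues
```

A record with `temperature_f` set to 986.0 returns a single issue for that field, while a value of 98.6 passes. Keeping the limits in one table, rather than in scattered `if` statements, makes them easier for clinicians to review.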

Anomaly detection algorithms can take this a step further by learning normal data patterns and identifying subtle deviations. These algorithms can catch issues like gradual data drift or systematic errors in medical device readings - problems that manual reviews might overlook. Once flagged, questionable data should be quarantined until verified.
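A learned anomaly detector is too large for a short example. The flag-and-quarantine flow can still be sketched with a rolling z-score, assuming a single numeric stream such as heart rate from one device. The class name and thresholds are hypothetical. Note that a rolling test like this catches sudden spikes better than slow drift, because a gradual shift gets absorbed into the baseline.

```python
import statistics
from collections import deque


class ReadingMonitor:
    """Quarantine device readings that deviate sharply from a rolling baseline.

    A z-score test stands in for the learned anomaly-detection models described
    above; window, z_threshold, and min_history are illustrative values.
    """

    def __init__(self, window=50, z_threshold=4.0, min_history=10, min_stdev=1e-6):
        self.history = deque(maxlen=window)  # recent accepted readings
        self.z_threshold = z_threshold
        self.min_history = min_history
        self.min_stdev = min_stdev  # floor that avoids dividing by zero on a flat signal
        self.quarantine = []  # readings held until a person verifies them

    def ingest(self, reading: float) -> bool:
        """Return True if the reading is accepted, False if it was quarantined."""
        if len(self.history) >= self.min_history:
            values = list(self.history)
            mean = statistics.fmean(values)
            stdev = max(statistics.pstdev(values), self.min_stdev)
            if abs(reading - mean) / stdev > self.z_threshold:
                self.quarantine.append(reading)
                return False  # the outlier is also kept out of the baseline
        self.history.append(reading)
        return True
```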

Validation also plays a key role in addressing algorithmic bias. Skewed datasets can lead to AI models that perform poorly for certain groups, raising equity concerns. By monitoring data inputs, organizations can detect and correct imbalances, ensuring diverse and representative data feeds into the system.
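One simple input check for representation compares each group's share of incoming records with that group's share of the population the organization serves. This sketch assumes that each record carries a group label and that reference shares are available. The labels and the 0.8 ratio are hypothetical.

```python
from collections import Counter


def representation_gaps(batch_groups, reference_shares, min_ratio=0.8):
    """Return {group: observed share} for groups under-represented in a batch.

    batch_groups: iterable of group labels, one per incoming record.
    reference_shares: dict of group label -> expected share of the served population.
    A group is flagged when observed / expected falls below min_ratio.
    """
    counts = Counter(batch_groups)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if expected > 0 and observed / expected < min_ratio:
            gaps[group] = round(observed, 3)
    return gaps
```

Suppose a batch is 5% group `C` while the reference share is 15%. Then `C` is flagged, because 0.05 / 0.15 falls below 0.8.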

Encryption and Anonymization for Patient Privacy

Strong encryption is a cornerstone of securing healthcare AI. Patient data must be protected both in storage and during transmission, so even if attackers gain access, the information remains unreadable.

Encryption should cover data throughout its lifecycle, from collection to AI processing to storage. Data in transit is typically protected with TLS. For data at rest, AES-256 is a widely used cipher. Models generally need decrypted data to compute on, so the environments where processing happens must be locked down as well. Key management systems add an extra layer of security by controlling who can decrypt data and under what conditions.

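Anonymization and pseudonymization techniques further safeguard privacy by removing or obscuring personally identifiable information before data reaches AI models. Identifiers such as names or Social Security numbers can be replaced with consistent tokens, which lets AI systems analyze longitudinal data without exposing patient identities. A plain hash of a low-entropy identifier such as a Social Security number is not enough, because an attacker can hash every possible value and match the results. The tokens should therefore come from a keyed hash or a secured lookup table. Strictly speaking, this is pseudonymization rather than anonymization, since whoever holds the key can re-link records to patients.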
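A keyed hash (HMAC) is one common way to produce consistent pseudonyms. This sketch uses Python's standard `hmac` and `hashlib` modules with SHA-256. The function name is hypothetical, and key storage is deliberately left out.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Return a stable pseudonym for an identifier using HMAC-SHA256.

    The same identifier and key always produce the same token, so one patient's
    records can still be linked over time. Without the key, hashing every
    possible identifier and comparing digests does not reveal the mapping.
    """
    normalized = identifier.strip()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


# Illustration only: in production the key would be held in a key management system.
KEY = secrets.token_bytes(32)
```

Normalization matters. This sketch only trims whitespace, so `123-45-6789` and `123456789` would still receive different tokens unless formats are standardized upstream.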

For training AI systems, differential privacy adds carefully calibrated noise, either to query results or to the training process itself (for example, to gradients). Models can then learn aggregate patterns, while a mathematical bound limits how much any single patient's record can influence the output. This makes it very difficult to extract details about individuals. The noise can reduce model accuracy, but the trade-off is often worthwhile in healthcare, where privacy is paramount.
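The simplest differentially private release is a noisy count using the Laplace mechanism, sketched below with Python's standard `random` module. The record format and the epsilon value are illustrative. Training a model privately, for example with DP-SGD, follows the same principle but instead adds noise to clipped per-example gradients.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one patient changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon: smaller epsilon means more noise and more privacy.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Each noisy release spends part of the privacy budget, and epsilons add up across repeated queries. A real deployment would therefore track cumulative spending rather than answer the same question over and over.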

Using Blockchain for Secure Audit Trails

Blockchain technology offers a tamper-evident way to track every interaction with healthcare data. In traditional databases, records can be silently altered or deleted. A blockchain instead maintains an append-only ledger that logs who accessed what data, when, and why.

This transparency is particularly valuable for AI systems. If an AI model makes an unexpected recommendation, the ledger can help investigators establish exactly which data records were supplied to the model and when. It also reveals whether those records were changed without authorization along the way.

Blockchain's distributed nature strengthens security by eliminating single points of failure. Instead of storing audit logs in one central location, the system distributes copies across multiple nodes. To alter records undetected, an attacker would need to compromise a majority of these nodes at the same time, which is extremely difficult in a well-designed network.
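The tamper-evidence behind such a ledger comes from hash chaining. Each entry stores the hash of the previous one, so changing an old entry invalidates every hash after it. The Python sketch below shows only that mechanism, on a single machine, with hypothetical field names; there is no distribution or consensus. On its own, a chain like this could be recomputed end to end by anyone with write access, which is why replicated copies across nodes matter.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical fields always hash identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only access log where each entry commits to the hash of the previous one."""

    def __init__(self):
        self.entries = []

    def append(self, actor: str, record_id: str, purpose: str, timestamp: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {"actor": actor, "record_id": record_id, "purpose": purpose,
                "timestamp": timestamp, "prev_hash": prev_hash}
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every link.

        Editing or reordering entries, or removing any entry except the most
        recent one, makes this return False.
        """
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body.get("prev_hash") != prev_hash or entry.get("hash") != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```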

Healthcare organizations can also use blockchain to manage patient consent. For example, smart contracts - self-executing code stored on the blockchain - can enforce data usage policies automatically. This ensures that patient data is only used for approved purposes, reducing the risk of accidental or intentional misuse of sensitive information.
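The policy logic that such a contract enforces can be modeled in a few lines. This is plain Python, not a smart-contract language, and the class and purpose names are hypothetical. On a blockchain, `grant` and `revoke` would be recorded transactions, and `authorize` would run inside the contract.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    # patient pseudonym -> set of purposes the patient has approved
    grants: dict = field(default_factory=dict)

    def grant(self, patient: str, purpose: str) -> None:
        self.grants.setdefault(patient, set()).add(purpose)

    def revoke(self, patient: str, purpose: str) -> None:
        self.grants.get(patient, set()).discard(purpose)

    def authorize(self, patient: str, purpose: str) -> bool:
        # Default deny: data may be used only for purposes explicitly granted.
        return purpose in self.grants.get(patient, set())
```

The default-deny check is the important design choice. A purpose nobody anticipated, or a patient with no recorded consent, is refused rather than allowed.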

Building Transparent and Resilient AI Models

After securing data, the next big hurdle is ensuring that AI models are both trustworthy and resistant to tampering. For healthcare professionals to rely on AI, they need to understand how these systems arrive at their conclusions. At the same time, the models must be robust enough to withstand threats that could compromise patient safety. A survey revealed that over 60% of healthcare professionals hesitate to adopt AI due to unclear processes and concerns about data security [3]. This hesitation highlights a core issue: many AI systems operate like black boxes, leaving clinicians unable to verify their recommendations.

To address this, building resilient AI models requires a two-pronged approach. First, the models must be explainable, allowing clinicians to trace the reasoning behind decisions. Second, they need to be fortified against adversarial attacks, which could jeopardize patient care. The strategies discussed below aim to create AI systems that are both clear in their decision-making and resilient to manipulation.

Making AI Decisions Explainable

Explainability transforms AI from an enigmatic tool into a reliable clinical partner. When an AI system suggests a treatment or flags a diagnosis, clinicians need insight into the factors behind the recommendation. Without this clarity, they’re left with two bad options: blindly trusting the AI or disregarding its output altogether.

Explainable AI (XAI) techniques shed light on the decision-making process. For example, feature importance analysis can identify which patient data points, such as lab results or medical history, had the greatest influence on a recommendation. Similarly, attention maps and other saliency methods can highlight the regions of a medical image that contributed most to a detected abnormality. These tools enable clinicians to validate AI reasoning and catch potential errors before they affect patient care.
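Permutation importance is one model-agnostic form of feature importance analysis. It shuffles one input across patients and measures how much performance drops. The sketch below assumes a hypothetical rule-based `toy_model` and made-up feature names. Attention maps for images require a neural network and are not shown.

```python
import random


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, feature_names, repeats=5, seed=0):
    """Mean drop in accuracy when one feature is shuffled across patients.

    Shuffling breaks the link between that feature and the outcome while keeping
    its distribution; a large drop means the model leans heavily on the feature.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = {}
    for name in feature_names:
        drops = []
        for _ in range(repeats):
            column = [r[name] for r in rows]
            rng.shuffle(column)
            permuted = [{**r, name: v} for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances[name] = sum(drops) / repeats
    return importances


def toy_model(patient):
    # Hypothetical rule standing in for a trained model: flag elevated lactate.
    return int(patient["lactate"] > 2.0)
```

Consider synthetic data in which labels depend only on lactate. Shuffling `age` leaves accuracy unchanged (importance 0.0), while shuffling `lactate` causes a large drop.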

"Explainable AI (XAI) emerged as one of the significant developments. It made it possible for healthcare professionals to understand AI-driven recommendations, by this means increasing transparency and trust." - International Journal of Medical Informatics [3]

Transparency also fosters accountability across the AI lifecycle. By documenting the rationale behind AI outputs and defining appropriate use cases, developers create a foundation for ongoing improvements - essential for audits and investigations.

"AI system designers and deployers should be transparent about the evidence or reasons for all outputs, as well as when, where, and how they are best used. AI tools should be sufficiently explainable while protecting the economic value of underlying algorithms and relevant intellectual property. AI systems should also enable accountability by involving human input and review across the entire design, implementation, and monitoring process." - Kaiser Permanente Institute for Health Policy [6]

Striking a balance between explainability and safeguarding proprietary algorithms can be tricky. However, healthcare organizations can focus on ensuring that clinicians understand AI recommendations and their reasoning, while still protecting the underlying technology. Next, let’s explore how adversarial training strengthens AI defenses.

Adversarial Training for Model Defense

AI models face unique threats, including adversarial attacks where input data is subtly manipulated to cause errors. In healthcare, such attacks could, for instance, alter medical images in ways that are imperceptible to the human eye, potentially leading to incorrect diagnoses.

Adversarial training prepares models to handle these challenges by exposing them to manipulated examples during development. By learning to identify and resist these altered inputs, models become more robust - not just against deliberate attacks but also against everyday inconsistencies, like variations in imaging techniques or human errors during data entry.
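The training loop can be illustrated on a deliberately small model. This sketch uses logistic regression and the Fast Gradient Sign Method (FGSM) as stand-ins for an imaging model and a real attack. The data is synthetic, and `eps` (the maximum change per feature) is an illustrative value. During training, each example is paired with an FGSM-perturbed copy crafted against the current weights.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: nudge each feature by eps in the direction that raises the loss."""
    p = predict(w, b, x)
    # For logistic regression with cross-entropy loss, d(loss)/d(x_i) = (p - y) * w_i.
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def train(data, epochs=50, lr=0.1, eps=0.0, seed=0):
    """SGD logistic regression; eps > 0 also trains on an FGSM copy of every example."""
    rng = random.Random(seed)
    data = list(data)  # shuffle a copy, not the caller's list
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            batch = [(x, y)]
            if eps > 0:
                # Craft the perturbation against the current model, then learn from it.
                batch.append((fgsm(w, b, x, y, eps), y))
            for xb, yb in batch:
                err = predict(w, b, xb) - yb
                w = [wi - lr * err * xi for wi, xi in zip(w, xb)]
                b -= lr * err
    return w, b


def accuracy(w, b, data):
    return sum(int(predict(w, b, x) >= 0.5) == y for x, y in data) / len(data)


def make_data(n=200, margin=0.3, seed=1):
    """Toy two-feature data: label 1 when x0 + x1 > 0, with a gap around the boundary."""
    rng = random.Random(seed)
    data = []
    while len(data) < n:
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        s = x[0] + x[1]
        if abs(s) >= margin:
            data.append((x, int(s > 0)))
    return data
```

To check whether the extra robustness is real, the usual approach is to compare accuracy on FGSM-perturbed held-out inputs for a model trained with `eps=0` and one trained with `eps > 0`.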

Following the NIST AI Risk Management Framework

The NIST AI Risk Management Framework (AI RMF) offers healthcare organizations a structured way to address AI-related risks. Unlike traditional software, AI introduces unique challenges such as data poisoning, model drift, bias, and explainability issues [7].

This framework helps organizations establish governance processes that clarify roles and responsibilities throughout the AI lifecycle, ensuring accountability. For healthcare providers navigating complex regulations, the AI RMF can help align AI governance with requirements such as HIPAA and FDA regulations [7]. This alignment is particularly critical for AI-powered medical devices, where secure-by-design principles are essential.

"The NIST AI Risk Management Framework (AI RMF) is intended for voluntary use and to improve the ability to incorporate trustworthiness considerations into the design, development, use, and evaluation of AI products, services, and systems." - NIST [8]

The framework also addresses supply chain risks by providing criteria for evaluating third-party AI systems. This ensures that tools from external vendors meet standards for security, privacy, and bias mitigation [7]. As generative AI becomes more common in healthcare - from automating clinical documentation to enhancing patient communication - this guidance helps organizations identify and manage the unique risks tied to these technologies.

Governance and Oversight for Safe AI Deployment

Even the most advanced AI models can stumble without strong governance and human oversight in place. For healthcare organizations, this means creating structured frameworks that clearly define who is responsible for AI-driven decisions and establishing protocols to guide those decisions. This is especially critical in patient care, where the risks are far too high to rely solely on technology.

AI Governance Maturity Models

Governance maturity models are tools that help healthcare organizations evaluate their current oversight capabilities and pinpoint areas that need improvement. A solid governance framework brings together a multidisciplinary team, including experts in clinical practice, IT, legal, compliance, safety, data science, bioethics, and patient advocacy [9]. It’s not just about ticking off a checklist - it’s about embedding governance into the health system’s workflows at every stage of the AI lifecycle, from concept development to deployment and ongoing monitoring.

The need is clear. A 2025 survey found that only 61% of hospitals validated predictive AI tools using local data before deployment, and fewer than half tested for bias. The gaps were even larger in smaller, rural, and non-academic institutions [13]. To close them, organizations need clear processes for selecting, implementing, and managing AI tools while adhering to regulations like HIPAA and FDA requirements.
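A local bias test can start as simply as comparing one performance metric across patient subgroups. This sketch uses sensitivity (the true-positive rate) and assumes hypothetical group labels and a tolerance of 5 percentage points. Choosing the metric and the acceptable gap is a decision for the governance team.

```python
from collections import defaultdict


def sensitivity_by_group(y_true, y_pred, groups):
    """True-positive rate per subgroup: of patients who had the condition, how many were caught."""
    tp = defaultdict(int)
    pos = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            pos[g] += 1
            tp[g] += int(p == 1)
    return {g: tp[g] / pos[g] for g in pos}


def flag_gaps(rates, max_gap=0.05):
    """Return subgroups whose rate trails the best-performing subgroup by more than max_gap."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > max_gap)
```

Suppose a model catches every positive case in one group but only half in another. The second group is flagged, and that result should block deployment until it is explained.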

Human-in-the-Loop Oversight

Human oversight is essential to prevent over-reliance on AI outputs. Whether AI is optimizing surgical schedules or flagging potential diagnoses, clinicians must review these recommendations to ensure patient care remains the top priority [10]. The aim isn’t to slow down AI adoption but to make sure the technology complements clinical judgment rather than replacing it.

"Ultimately, AI should be viewed as a tool that enhances, rather than replaces, clinical judgment." – ECRI [11]

Providing role-specific training is another key step. This helps staff use AI tools effectively and spot potential errors or biases [9]. Human experts should also verify AI-generated content for accuracy and ensure it’s free of plagiarism or bias before publication [12].

"Voluntary, confidential reporting of AI-related adverse events fosters a learning health system and emphasizes the importance of keeping humans in the decision-making process." – Joint Commission and Coalition for Health AI (CHAI) Guidance on The Responsible Use of AI in Healthcare (RUAIH) [9]

Strengthening oversight doesn’t stop there - it also involves using integrated risk management tools.

Collaborative Risk Assessments with Censinet RiskOps™

Effectively managing AI-related risks requires close collaboration among clinical, IT, compliance, and cybersecurity teams. Censinet RiskOps™ provides a centralized platform for healthcare organizations to conduct risk assessments, monitor AI-related policies, and ensure accountability throughout the AI lifecycle. The system routes tasks and findings to the appropriate stakeholders, including members of the AI governance committee.

Before deployment, organizations should identify potential risks and biases, then continue monitoring throughout the AI tool’s use [9]. This includes gathering detailed information from vendors about how their AI tools were tested, validated, and evaluated for bias. Censinet AI simplifies this process by enabling vendors to quickly complete security questionnaires, summarize evidence, and generate risk reports based on the data.

The platform’s human-in-the-loop design ensures that automation supports decision-making rather than replacing it. With customizable rules, thorough review processes, and a real-time AI risk dashboard, healthcare leaders can scale their risk management efforts while maintaining the oversight needed to protect patient safety and meet regulatory standards.


Monitoring and Response for Continuous Resilience

After addressing data protection, resilient AI models, and governance, the final piece to ensuring safety in AI systems is continuous monitoring and swift response. In healthcare, where AI directly impacts patient safety, this constant vigilance is non-negotiable. Real-time monitoring paired with clear response protocols ensures these systems remain reliable and secure.

Real-Time AI System Monitoring

Real-time monitoring tools ingest vast amounts of data from IoT devices, sensors, electronic health records (EHRs), and imaging systems [14][15][16]. The goal? Spot anomalies before they escalate, including model drift, security risks, and unusual system behavior.

What sets these systems apart is their ability to catch early warning signs - subtle "near misses" that might escape human notice [2][16]. For instance, monitoring tools can detect inconsistent diagnostic results or flag unusual access patterns that might indicate a security breach. By identifying these risks early, healthcare teams can step in to prevent harm to patients or breaches of sensitive data.
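Model drift is often tracked by comparing the current distribution of a model input with its distribution at validation time. The Population Stability Index (PSI) is one common statistic for this. The sketch below assumes a single numeric feature and hypothetical bin edges. A frequently quoted rule of thumb treats a PSI above 0.25 as a significant shift, but that is a convention rather than a clinical standard and would need local calibration.

```python
import math


def psi(expected, actual, bins):
    """Population Stability Index between a reference sample and a recent sample.

    bins: ascending bin edges; values below the first edge or above the last
    fall into the two end buckets.
    """
    def shares(values):
        if not values:
            raise ValueError("PSI needs at least one value per sample")
        counts = [0] * (len(bins) + 1)
        for v in values:
            counts[sum(v >= edge for edge in bins)] += 1
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(values), 1e-4) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```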

The insights gained from monitoring lay the groundwork for well-structured and timely responses.

AI Cyber Resilience Playbooks

Real-time monitoring is only half the equation. Pre-defined resilience playbooks turn insights into action. These playbooks provide clear, step-by-step guidance for handling incidents, ensuring quick and effective responses when security issues arise. A strong governance framework is essential here, bringing together leaders from operations, finance, marketing, supply chain, and clinical teams to evaluate incidents from multiple perspectives [2]. Above all, patient safety remains the central focus in every response decision [17].

Developing these playbooks requires collaboration from a diverse group - data scientists, clinicians, ethicists, and regulatory experts. Together, they can map out specific actions for various scenarios, such as isolating compromised systems or activating backup protocols. This level of preparation ensures response teams can act swiftly and effectively, without compromising on thoroughness when incidents occur.
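Keeping playbooks as structured, version-controlled data makes them easier to review and test. The scenarios, steps, and owner roles below are hypothetical placeholders for what the cross-functional team would define. The key design choice is that an unrecognized incident escalates instead of doing nothing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    action: str
    owner: str  # role responsible for carrying out the step


# Hypothetical playbook entries; real content would come from the cross-functional team.
PLAYBOOKS = {
    "model_drift": [
        Step("Switch affected workflow to manual clinical review", "clinical lead"),
        Step("Freeze model version and capture recent inputs", "data science"),
        Step("Revalidate on local data before re-enabling", "data science"),
    ],
    "suspected_breach": [
        Step("Isolate compromised system from the network", "security"),
        Step("Activate backup protocols for affected services", "IT operations"),
        Step("Assess breach-notification obligations", "compliance"),
    ],
}

DEFAULT_ESCALATION = [Step("Escalate to AI governance committee", "incident commander")]


def steps_for(scenario: str) -> list:
    """Return the ordered response steps; unknown scenarios escalate rather than fail silently."""
    return PLAYBOOKS.get(scenario, DEFAULT_ESCALATION)
```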

Conclusion

Creating fail-safe AI systems in healthcare demands a multi-layered approach that tackles vulnerabilities from every angle. This means securing the data layer with robust encryption and validation protocols, while also crafting models that are transparent and resilient. Techniques like adversarial training and explainability frameworks play a key role in ensuring these models are not only effective but also trustworthy. Together, these layers turn AI safety into more than just a checklist - it becomes a guiding principle for every decision made [19][18]. In a field like healthcare, where sensitive patient data and strict regulations intersect, this approach isn’t optional - it’s essential.

Beyond these technical safeguards, the high stakes in healthcare call for strong governance. Weak oversight can lead to serious risks, including bias, data breaches, and even medical errors [11]. To address this, organizations need to adopt safety models that are dynamic and continuously evolving, rather than static systems designed only for the initial rollout [1]. This constant vigilance is critical - not just for meeting regulatory standards but also for improving clinical outcomes and safeguarding an organization’s reputation [2].

Platforms like Censinet RiskOps™ provide a centralized solution for managing these intricate safety requirements. By leveraging Censinet AI™, healthcare organizations can accelerate risk assessments while still keeping human oversight at the forefront. The platform’s real-time AI risk dashboard ensures that key findings are routed to the right teams at the right time, enabling a coordinated and effective response. This unified approach to AI governance helps organizations scale their risk management efforts without compromising safety.

FAQs

What steps can healthcare organizations take to ensure AI systems are fair and unbiased?

Healthcare organizations can take meaningful steps to reduce bias in AI systems by focusing on diverse and inclusive training data. Ensuring datasets represent a wide range of populations helps minimize the risk of skewed outcomes that could unfairly impact decision-making processes. Regularly reviewing and updating these datasets is key to identifying and addressing any hidden biases over time.

Another critical approach is using transparent AI models. These models should clearly outline how decisions are made, fostering accountability and trust. Pairing this with ongoing performance monitoring across various patient groups can highlight disparities, enabling adjustments that improve fairness and equity.

Incorporating these practices into their AI strategies allows healthcare organizations to create systems that are not only dependable but also fair for everyone they serve.

How can we make AI systems in healthcare more understandable and transparent?

Improving transparency in AI for healthcare requires a thoughtful approach. Start by prioritizing interpretable models that clearly show how decisions are made. This means developing algorithms that focus on explainability, allowing both clinicians and patients to understand the reasoning behind the outcomes.

Involve healthcare professionals during the design phase to ensure AI systems meet practical needs and expectations. Incorporate visualization tools that make complex decision-making processes easier to grasp. Finally, provide thorough documentation and perform extensive testing to verify the system's reliability, especially in high-stakes scenarios.

How does blockchain help protect patient data in AI systems?

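Blockchain adds a layer of security to patient data within AI systems by maintaining a tamper-evident, append-only ledger of how data is accessed and used. Each entry is cryptographically linked to the ones before it, so any attempt to alter past records can be detected, which provides strong traceability. It does not, however, guarantee that the data was accurate when it was first recorded.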

With blockchain, access to sensitive information is tightly regulated, minimizing the chances of unauthorized access or data misuse. This approach not only protects patient information but also helps build trust and ensures compliance with strict healthcare regulations.