Machine vs. Machine: The Future of AI-Powered Cybersecurity Defense

Post Summary

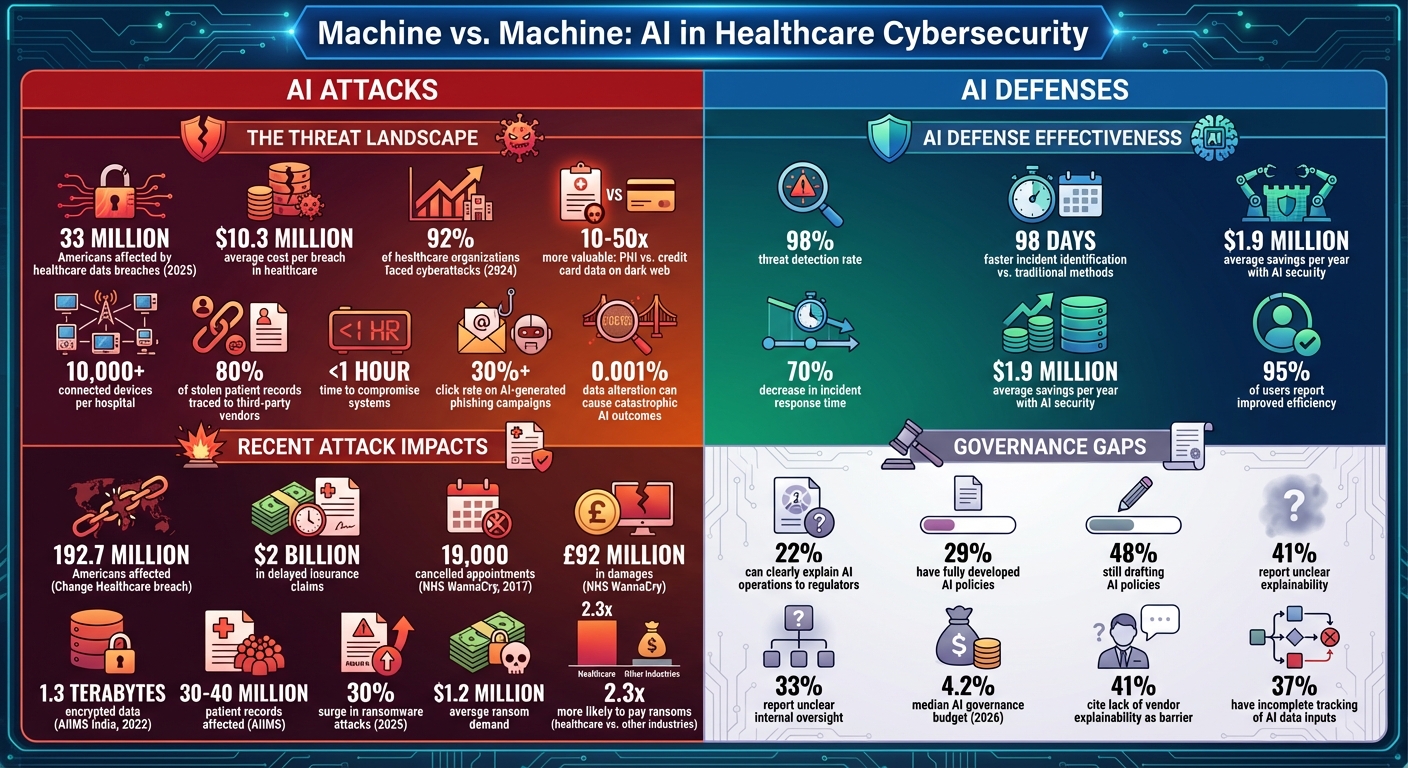

AI is reshaping cybersecurity in healthcare, but attackers are keeping pace. Cybercriminals now use AI to launch faster, smarter, and harder-to-detect attacks, targeting sensitive patient data and critical systems. Traditional defenses are falling behind, but AI-powered solutions are stepping in.

Key Takeaways:

- Why Healthcare is at Risk: Outdated systems, IoT devices, and sensitive patient data make healthcare a prime target. In 2025, 33 million Americans were affected by healthcare data breaches, with average costs hitting $10.3 million per breach.

- AI Attack Methods: Cybercriminals use tactics like data poisoning, adversarial manipulation, and supply chain attacks, exploiting vulnerabilities in under an hour.

- AI Defenses: Organizations using AI-led security report a 98% threat detection rate and a 70% drop in incident response time, and those deploying AI for cybersecurity save an average of $1.9 million.

- Real-World Examples: From the 2017 NHS WannaCry attack to the 2022 AIIMS breach in India, recent incidents highlight the devastating impact of AI-driven attacks.

- Solutions: Tools like Censinet AI™ streamline risk management, automate vendor assessments, and integrate human oversight to improve security.

AI is both the problem and the solution. To stay ahead, healthcare providers must embrace AI-driven defenses, strengthen governance, and demand transparency from vendors.

[Figure: AI-Powered Cybersecurity in Healthcare: Key Statistics and Impact]

AI-Powered Cyberattacks Targeting Healthcare

Healthcare organizations are increasingly vulnerable to cyberattacks powered by AI, largely due to the highly sensitive data they manage. Protected health information (PHI) is a prime target for cybercriminals, fetching 10 to 50 times more on dark web markets than credit card data [5]. In 2024, a staggering 92% of healthcare organizations faced cyberattacks, and by 2025, data breaches impacted 33 million Americans [5]. The financial toll is equally alarming, with the average cost of a breach in the healthcare sector reaching $10.3 million - making it the most expensive industry for data breaches for the 14th year in a row [5].

Adding to the challenge, hospitals operate vast networks of over 10,000 connected devices, many of which rely on outdated systems that can't keep up with modern threats [5]. When these systems are compromised, the consequences go far beyond stolen data. Attackers can manipulate medical devices like insulin pumps or diagnostic AI systems to deliver incorrect treatments or diagnoses, directly endangering patients' lives.

Common AI Attack Methods

AI has transformed the way cybercriminals operate, enabling them to deploy advanced methods like data poisoning. This tactic involves corrupting the training datasets that medical AI systems depend on, leading to dangerous diagnostic errors. Research shows that altering just 0.001% of input data can cause catastrophic outcomes [5].

Adversarial manipulation is another alarming method. Attackers craft subtle changes to inputs, such as medical images, to deceive AI systems. For instance, a chest X-ray could be altered in ways invisible to human radiologists, causing the AI to miss critical health issues. Supply chain attacks are also on the rise, with 80% of stolen patient records now traced back to third-party vendors rather than hospitals themselves [5]. These breaches can ripple across multiple organizations, amplifying their impact.

AI also allows cybercriminals to act with unprecedented speed and scale. Systems can be compromised in under an hour [4], as attackers automate reconnaissance and generate thousands of malware variants [5]. Vulnerabilities across vast networks are identified in minutes, and highly personalized phishing campaigns achieve click rates exceeding 30% among healthcare workers [5]. Adaptive malware further complicates defense efforts by constantly evolving to bypass detection faster than security teams can respond.

These sophisticated tactics highlight the growing difficulty of defending against AI-powered threats.

Recent AI-Driven Cyber Incidents in Healthcare

The dangers of AI-driven attacks are no longer theoretical - they're happening now. One such example is the Change Healthcare breach, where a single vendor compromise disrupted the entire healthcare system. This attack affected 192.7 million Americans, delayed over $2 billion in insurance claims, and interrupted prescription processing nationwide [5].

The 2017 WannaCry ransomware attack on the UK's National Health Service (NHS) is another stark example. It forced the cancellation of over 19,000 medical appointments and caused financial damages estimated at £92 million ($120 million) [7]. In a tragic incident in September 2020, a ransomware attack on a university hospital in Düsseldorf, Germany, blocked access to critical systems during an emergency. A patient had to be diverted to another hospital 19 miles away and died before receiving care, leading to a manslaughter investigation [6].

More recently, in November 2022, India's AIIMS hospital faced a crippling attack that encrypted 1.3 terabytes of data, forcing the facility to operate manually for two weeks and affecting 30–40 million patient records [7]. Similarly, the Medtronic insulin pump hack demonstrated how attackers could remotely manipulate medical devices, delivering incorrect doses and endangering patients [7].

These incidents underscore a disturbing trend: attackers are increasingly targeting not just data but also the systems that directly impact patient care. In 2025, ransomware attacks on healthcare surged by 30%, with ransom demands averaging $1.2 million. Alarmingly, healthcare organizations are 2.3 times more likely to pay ransoms compared to other industries [5].

How AI Defends Healthcare Systems

Healthcare organizations are increasingly relying on AI-driven defenses to safeguard their systems. These tools continuously analyze vast amounts of data to uncover anomalies, such as unusual login attempts, unauthorized access to electronic health records (EHRs), or irregular network activity.

AI’s ability to detect threats has significantly improved response times. Compared to traditional methods, AI reduces the time needed to identify incidents by a staggering 98 days. It doesn’t just spot known threats - it can also identify new, emerging risks, isolating compromised devices and halting suspicious activities in mere seconds.

The impact is undeniable. Healthcare organizations using AI-led security measures report a 98% threat detection rate and a 70% decrease in incident response time, even in high-risk scenarios. Financially, companies deploying AI for cybersecurity have saved an average of $1.9 million. Additionally, 95% of users agree that AI-powered solutions enhance the efficiency of prevention, detection, response, and recovery efforts[8].

Core Capabilities of AI Defense Systems

AI security systems don’t just stop at rapid detection - they also leverage advanced analytics to provide multi-layered defenses. These systems excel in three main areas: anomaly detection, predictive analytics, and threat intelligence.

- Anomaly detection: AI establishes a baseline for what "normal" activity looks like across a healthcare network. This includes typical user behaviors, standard data access patterns, and regular device communications. Any deviation from this baseline is flagged immediately.

- Predictive analytics: By analyzing past incidents and current threat data, AI can anticipate potential attacks and proactively address vulnerabilities[5].

- Threat intelligence: AI systems continuously learn from global attack data, adapting their defenses to counter new tactics as they arise[3][7].
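
The baseline idea behind anomaly detection can be illustrated with a minimal sketch. It assumes a simple z-score check over hypothetical hourly EHR access counts; production AI systems use far richer models, and the function names, sample data, and threshold here are illustrative assumptions rather than any vendor's method.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize past activity counts as (mean, sample standard deviation)."""
    return mean(history), stdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation in the history: any different value is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly counts of EHR record accesses for one user account.
ehr_access_history = [12, 15, 11, 14, 13, 16, 12, 15, 14, 13]
baseline = build_baseline(ehr_access_history)

print(is_anomalous(14, baseline))  # a typical hour
print(is_anomalous(90, baseline))  # a sudden bulk access
```

A fixed threshold is the simplest possible choice; a real deployment would tune it per user, device, or department to limit false alarms.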

Greg Surla, Senior Vice President and Chief Information Security Officer at FinThrive, highlights the importance of AI in healthcare security:

"AI's ability to analyze massive volumes of data, identify anomalies and respond instantly doesn't just shorten response times - it protects lives and builds trust in healthcare systems."

- Greg Surla[10]

The real strength of AI lies in its ability to work alongside human analysts. While AI handles routine monitoring and rapid responses to lower-risk issues, human experts focus on interpreting complex threats and making strategic decisions. This partnership not only reduces the workload for human teams but also allows them to concentrate on critical priorities like patient care[10][7].

AI and Zero Trust Security

AI also plays a vital role in supporting Zero Trust security frameworks. These frameworks operate on the principle of "never trust, always verify", requiring continuous monitoring and verification of all users and devices. AI enhances this approach by dynamically assessing risk based on factors like user behavior, device health, location, and access patterns. For instance, if a doctor’s account tries to access patient records from an unusual location at 3:00 AM, the system can immediately enforce additional authentication or block access altogether.
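
The 3:00 AM scenario above can be sketched as a small risk-scoring routine. This is an illustrative example only: the `AccessRequest` fields, the point weights, and the decision thresholds are assumptions made for the sketch, not values taken from any Zero Trust product or standard.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user_role: str
    known_location: bool   # has this user logged in from here before?
    device_healthy: bool   # e.g. patched and managed (hypothetical signal)
    hour: int              # local hour of day, 0-23


def risk_score(req: AccessRequest) -> int:
    """Add up hypothetical risk points for each suspicious signal."""
    score = 0
    if not req.known_location:
        score += 40
    if not req.device_healthy:
        score += 40
    if req.hour < 6 or req.hour >= 22:  # outside assumed normal hours
        score += 20
    return score


def decide(req: AccessRequest) -> str:
    """Map the score to allow, step-up authentication, or block."""
    score = risk_score(req)
    if score >= 80:
        return "block"
    if score >= 40:
        return "require_mfa"
    return "allow"


# A doctor's account requesting records from an unusual location at 3:00 AM.
night_request = AccessRequest("physician", known_location=False,
                              device_healthy=True, hour=3)
print(decide(night_request))
```

In practice the weights would be learned from behavior data rather than hard-coded, but the decision shape stays the same: every request is scored, and none is trusted by default.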

The recent updates to the HIPAA Security Rule align with this shift toward stricter controls. These changes eliminate "addressable" specifications and mandate the implementation of measures like multi-factor authentication (MFA) and encryption within 240 days[5]. AI platforms simplify compliance by automating monitoring and enforcement, helping organizations meet these requirements. Organizations must also revise their business associate agreements to include technical safeguards such as encryption, MFA, and network segmentation within a year and 60 days[5].

Censinet AI™: Managing Cybersecurity Risk in Healthcare

Healthcare organizations are under increasing pressure to manage cybersecurity risks tied to hundreds - or even thousands - of third-party vendors, all while ensuring patient safety remains uncompromised. Enter Censinet AI™, a solution designed to simplify vendor assessments within the Censinet RiskOps™ platform. This technology automates the completion of security questionnaires, reviews and summarizes evidence documentation, pinpoints critical product integrations and fourth-party risks, and generates concise risk summary reports. Just as AI-driven defenses can thwart cyber threats in real time, Censinet AI™ brings that speed to risk assessments across complex vendor ecosystems.

Faster Third-Party Risk Assessments

Traditional vendor risk assessments can be painfully slow, often dragging on for weeks or even months. This delay creates bottlenecks that leave organizations vulnerable. Censinet AI™ tackles this issue head-on by automating the most tedious parts of the process. Instead of manually combing through extensive security policies, compliance documents, or technical specs, the system does the heavy lifting. It also identifies critical integration points and highlights fourth-party dependencies that could pose additional risks, cutting down assessment times while reducing overall exposure for healthcare organizations.

Balancing Automation with Human Oversight

Speed is crucial, but not at the expense of accuracy or safety. That’s why Censinet AI™ incorporates a human-in-the-loop approach, ensuring oversight at key stages. Risk teams set rules and review processes to determine when automation can proceed on its own and when human intervention is necessary. This setup allows AI to handle repetitive tasks like validating evidence, drafting policies, and suggesting risk mitigation strategies, while leaving the final judgment to experienced security professionals. Team members review and approve findings before they’re finalized, creating a balance where automation enhances efficiency without replacing human expertise[11][7][12]. This approach ensures organizations can scale their risk management efforts while maintaining professional oversight.
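
One way to picture rule-based gating between automation and human review is the sketch below. It is not Censinet's implementation: the `Finding` fields, the task names, and the confidence cutoff are hypothetical, chosen only to show how a risk team's rules could decide when automation may proceed.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    task: str          # e.g. "evidence_validation", "policy_draft"
    risk_level: str    # "low", "medium", or "high"
    confidence: float  # automation's self-reported confidence, 0.0-1.0


# Hypothetical rules a risk team might configure.
AUTO_PROCEED_TASKS = {"evidence_validation"}
MIN_CONFIDENCE = 0.9


def next_step(finding: Finding) -> str:
    """Return whether automation may continue or a person must review."""
    if finding.risk_level != "low":
        return "hold_for_human_review"
    if finding.task not in AUTO_PROCEED_TASKS:
        return "hold_for_human_review"
    if finding.confidence < MIN_CONFIDENCE:
        return "hold_for_human_review"
    return "auto_proceed"


print(next_step(Finding("evidence_validation", "low", 0.95)))
print(next_step(Finding("policy_draft", "low", 0.99)))
```

The rules default to review: automation proceeds only when every condition is met, which keeps final judgment with the security professionals.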

Coordinating GRC Teams and AI Oversight

For automation to work seamlessly, coordination is essential. Censinet AI™ acts as a central hub, ensuring risk insights are effectively translated into actionable governance. From security analysts to compliance officers to executive leaders, the platform connects all relevant stakeholders. Think of it as air traffic control for risk management - it routes assessment findings and tasks to the right people for review and approval. If critical AI-related risks are flagged, the system immediately notifies the AI governance committee and tracks their responses. An intuitive dashboard provides a real-time overview of AI-related policies, risks, and tasks, serving as a one-stop hub for oversight and accountability. This unified system ensures that every issue is addressed by the appropriate team, promoting continuous governance and effective risk management across the organization.
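
The "air traffic control" routing described above can be sketched in a few lines. The routing table, role names, and `RiskRouter` class are hypothetical and serve only to illustrate the pattern of sending each finding to an owner and tracking its status; they do not describe the platform's internals.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from finding category to the responsible stakeholder.
ROUTING_TABLE = {
    "ai_risk": "ai_governance_committee",
    "compliance": "compliance_officer",
    "technical": "security_analyst",
}
DEFAULT_OWNER = "security_analyst"


@dataclass
class Task:
    finding: str
    category: str
    owner: str
    status: str = "open"


@dataclass
class RiskRouter:
    tasks: list = field(default_factory=list)

    def route(self, finding: str, category: str) -> Task:
        """Assign a finding to its owner and record it for tracking."""
        owner = ROUTING_TABLE.get(category, DEFAULT_OWNER)
        task = Task(finding, category, owner)
        self.tasks.append(task)
        return task

    def open_tasks_for(self, owner: str) -> list:
        """List unresolved tasks for one stakeholder (a dashboard-style view)."""
        return [t for t in self.tasks if t.owner == owner and t.status == "open"]


router = RiskRouter()
task = router.route("Vendor model lacks a drift report", "ai_risk")
print(task.owner)
```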

Building AI-Powered Cybersecurity Capabilities

Healthcare organizations can't afford to treat AI cybersecurity as an afterthought. The numbers paint a concerning picture: only 22% of hospitals can clearly explain their AI operations to regulators, 29% have fully developed AI policies, and 48% are still in the process of drafting them. These gaps leave them open to increasingly advanced AI-driven threats [13]. To address these vulnerabilities, modern solutions like Censinet RiskOps™ are crucial. The key lies in establishing strong AI governance, secure system design, and ongoing risk monitoring.

Developing AI Governance Frameworks

Accountability is non-negotiable. Yet, 33% of hospitals report unclear internal oversight, and the median AI governance budget for 2026 is projected to be just 4.2% [13]. To overcome this, organizations should form dedicated AI governance committees. These committees should include representatives from security, compliance, clinical operations, and IT. Their role? Establish clear approval processes, oversight mechanisms, and response protocols.

Governance isn't just about policies - it requires proper infrastructure. Tools that track model inventories, document data lineage, and maintain audit trails are essential. These investments help organizations move from theoretical discussions to actionable governance. With a strong foundation in place, healthcare providers can better manage AI risks while building secure systems.
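
As a rough illustration of what such infrastructure records, the sketch below models a model inventory entry with data lineage and an append-only audit trail. All field names, the in-memory `inventory` dictionary, and the sample model are assumptions for the example; a real tool would persist these records and control who can write to them.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelRecord:
    name: str
    version: str
    vendor: str
    training_data_sources: list  # data lineage: where the training data came from
    audit_trail: list = field(default_factory=list)

    def log(self, actor: str, action: str) -> None:
        """Append a timestamped entry; entries are never edited or removed."""
        self.audit_trail.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
        })


# In-memory inventory keyed by (name, version); hypothetical storage choice.
inventory = {}


def register(record: ModelRecord) -> None:
    """Add a model to the inventory and note the registration in its trail."""
    inventory[(record.name, record.version)] = record
    record.log("system", "registered")


example = ModelRecord("sepsis-predictor", "1.2.0", "ExampleVendor",
                      ["ehr_vitals_2023"])
register(example)
example.log("governance_committee", "approved_for_pilot")
print(len(example.audit_trail))
```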

Designing Secure AI Systems

Security must be baked in from the beginning. Recent guidance from the Health Sector Coordinating Council (HSCC) for 2026 underscores the importance of secure-by-design principles and rigorous third-party risk management [9]. This means addressing security risks early, such as data poisoning and model manipulation, which can compromise AI systems.

AI security challenges tend to fall into four main areas: data, AI models, applications, and infrastructure [1]. A major hurdle? Limited transparency from vendors. In fact, 41% of hospitals cite the lack of explainability artifacts - like model cards and drift reports - as a significant barrier during audits [13]. To counter this, healthcare organizations should demand detailed documentation from AI vendors. This level of transparency not only aids in decision-making but also strengthens long-term cybersecurity defenses.

Using Censinet RiskOps™ for Long-Term Preparedness

AI threats are evolving too quickly for static solutions. Cybersecurity programs must be dynamic and continuously improve. Censinet RiskOps™ provides a centralized platform for real-time risk visibility, helping organizations identify vulnerabilities and monitor remediation efforts effectively.

Currently, 37% of hospitals admit to incomplete tracking of data inputs and model versions - a critical weakness during audits or security investigations [13]. Censinet RiskOps™ addresses this by centralizing records of AI deployments, vendor relationships, and risk assessments. This streamlined approach ensures healthcare organizations can respond swiftly to emerging threats while maintaining a robust security posture.
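
Tracking data inputs and model versions can be as simple as storing a cryptographic fingerprint next to each deployment record. The sketch below uses SHA-256 from Python's standard `hashlib` module; the record layout and function names are hypothetical and are not a description of how Censinet RiskOps™ stores its data.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a dataset's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def deployment_record(model_name: str, model_version: str,
                      dataset_bytes: bytes) -> dict:
    """Capture which model version was built from which exact data."""
    return {
        "model": model_name,
        "version": model_version,
        "dataset_sha256": fingerprint(dataset_bytes),
    }


def dataset_unchanged(record: dict, dataset_bytes: bytes) -> bool:
    """Check whether the data still matches what was recorded at deployment."""
    return record["dataset_sha256"] == fingerprint(dataset_bytes)


# Hypothetical training data and a copy altered by a single character.
original = b"patient_id,label\n1,0\n2,1\n"
tampered = b"patient_id,label\n1,1\n2,1\n"

record = deployment_record("sepsis-predictor", "1.2.0", original)
print(dataset_unchanged(record, original))
print(dataset_unchanged(record, tampered))
```

Because even a one-character change produces a different digest, the same check helps an auditor confirm that a dataset was not silently altered after it was recorded.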

Conclusion

The healthcare industry is at a pivotal moment as cybercriminals increasingly leverage AI to automate attacks, from reconnaissance and phishing to deploying malware and evading detection [14][10][15]. At the same time, while AI offers incredible advantages in diagnosis and treatment, it also introduces risks like data breaches, lack of transparency in algorithms, and vulnerabilities in AI-controlled systems [6].

Relying on outdated, reactive security methods is no longer an option. Protecting electronic health records, ensuring patient privacy, and maintaining data integrity now require AI-driven cybersecurity solutions. In a world where threats evolve rapidly, proactive risk management has become a necessity.

To address these challenges, Censinet RiskOps™ offers a centralized approach to managing risks tied to emerging AI threats. By enabling real-time threat detection, simplifying third-party risk assessments, and maintaining comprehensive audit trails, the platform tackles critical vulnerabilities head-on. Meanwhile, Censinet AI™ enhances risk assessments with automation powered by configurable rules, ensuring human oversight remains integral to decision-making.

Strengthening defenses also means establishing strong AI governance, demanding transparency from vendors, and maintaining continuous risk monitoring. The future of healthcare cybersecurity lies in seamlessly integrating AI-powered tools that not only manage clinical risks but also improve patient safety [6], while optimizing resources through unified platforms [2]. These solutions represent the next phase in healthcare's cybersecurity evolution.

The battle between AI-driven threats and defenses has already begun. By adopting the strategies outlined here, healthcare organizations can protect patient data and ensure the secure delivery of care in the face of ever-growing challenges.

FAQs

How does AI help healthcare organizations detect and respond to cyber threats faster?

AI plays a crucial role in improving how quickly and accurately cyber threats are detected and addressed. By constantly monitoring networks for suspicious behavior, it processes massive amounts of data in real-time and pinpoints patterns that may signal potential attacks. This capability allows AI to take over many labor-intensive tasks, like analyzing threats and containing breaches, enabling faster responses that help limit harm.

In the healthcare sector, AI helps safeguard sensitive patient information, minimize disruptions caused by cyberattacks, and stay prepared for increasingly advanced threats.

What are the key AI-driven cyberattack methods targeting healthcare systems?

Healthcare systems have become a prime target for sophisticated AI-driven cyberattacks. These attacks leverage advanced techniques to exploit system vulnerabilities, making them particularly dangerous. Among these methods are adversarial attacks, where attackers subtly manipulate AI models to generate incorrect or misleading outputs. There's also generative AI-powered social engineering, which enables the creation of highly convincing phishing emails or messages designed to deceive individuals. On top of that, deepfake technology is being used to impersonate people or spread false information, adding another layer of complexity.

Cybercriminals also employ AI to create adaptive malware - malicious software that evolves to bypass security measures. They can perform rapid vulnerability scans to identify weak points in systems and automate exploitation processes to breach defenses quickly. These evolving tactics highlight the pressing need for healthcare organizations to implement advanced, AI-driven security measures to safeguard sensitive patient information and maintain the integrity of critical systems.

How does AI support Zero Trust security in protecting healthcare data?

AI is transforming Zero Trust security in healthcare by constantly verifying the identities of users and devices. It monitors behavior in real time, spotting anything unusual and allowing for quicker action against potential threats. With AI, healthcare organizations can use dynamic access controls that adjust permissions on the fly, ensuring that only the right people can access sensitive patient information.

Beyond that, AI helps to stop insider threats and prevent unauthorized lateral movement within networks. By analyzing activity patterns, it identifies risks early, stopping them before they grow into larger issues. This proactive approach strengthens the security of healthcare systems, keeping both internal and external threats at bay while protecting confidential patient data.