The Future of Medical Practice: AI-Augmented Clinicians and Risk Management

Post Summary

AI-augmented clinicians are healthcare professionals who use artificial intelligence tools to enhance decision-making, improve efficiency, and deliver better patient outcomes.

AI is revolutionizing medical practice by improving diagnostics, streamlining administrative tasks, and enabling personalized treatment plans.

AI enhances clinical decision-making, reduces physician burnout, and improves patient care through predictive analytics and automation.

Challenges include addressing algorithmic bias, ensuring data privacy, and integrating AI tools into clinical workflows effectively.

Clinicians can prepare by gaining AI literacy, understanding ethical implications, and participating in training programs focused on AI tools.

The future includes widespread adoption of AI tools, improved patient outcomes, and a shift toward collaborative decision-making between clinicians and AI systems.

AI is reshaping healthcare by assisting clinicians in diagnostics, treatment planning, and administrative tasks. It helps reduce workloads, improve efficiency, and enhance patient care. However, these advancements introduce risks like system errors, cybersecurity threats, and compliance challenges. For example, AI tools like Epic's sepsis predictor have faced accuracy issues, leading to missed diagnoses and alert fatigue. To safely integrate AI, healthcare organizations must focus on:

- Governance: Establish multidisciplinary teams to oversee AI usage and risks.

- Cybersecurity: Protect AI systems from data poisoning, adversarial attacks, and breaches.

- Clinician Oversight: Ensure human judgment remains central in decision-making.

- Vendor Management: Demand transparency, bias testing, and compliance from AI suppliers.

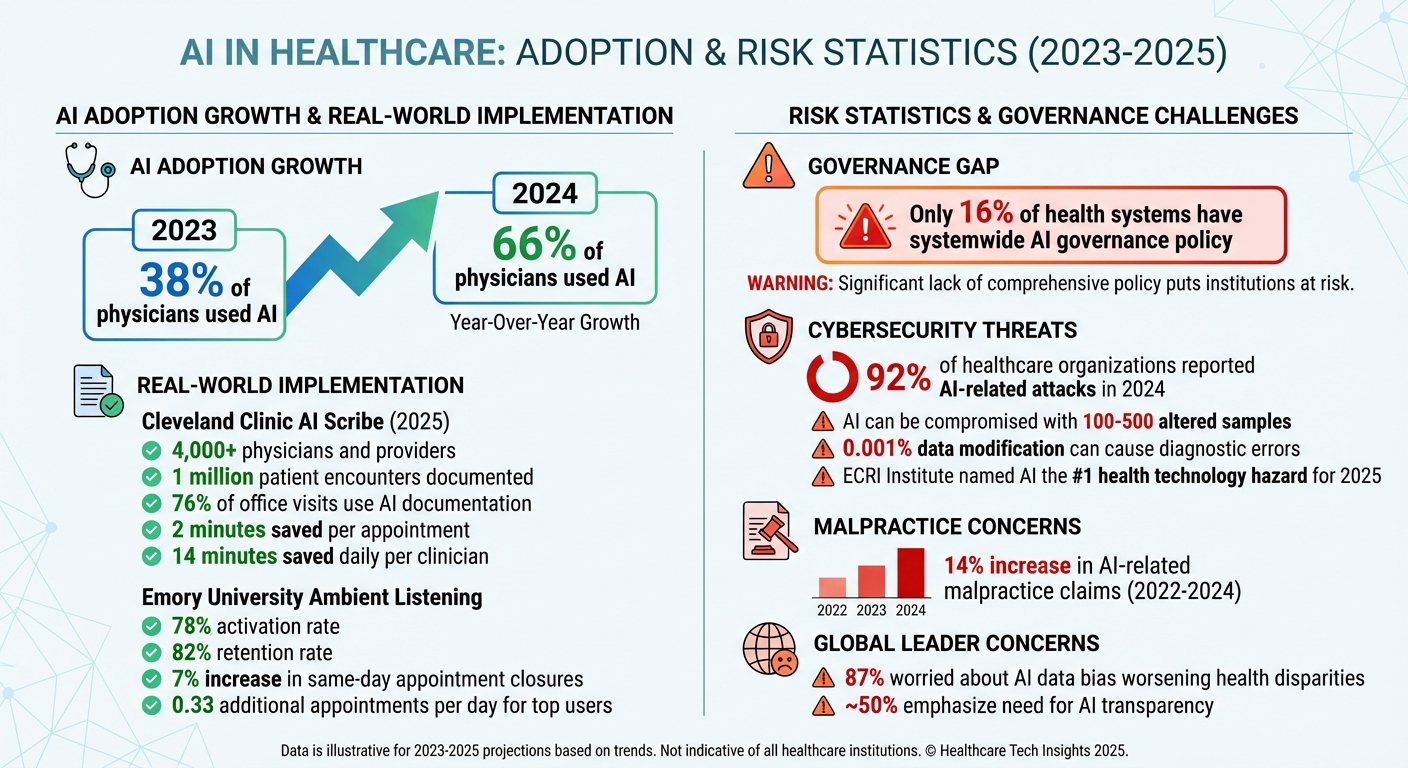

AI Adoption and Risk Statistics in Healthcare 2023-2025

How AI Augments Clinicians and Associated Risks

Common AI Applications in U.S. Healthcare

AI is changing how U.S. clinicians work. One standout application is ambient listening and clinical documentation software, which captures patient conversations and automatically creates structured notes for electronic health records (EHRs). For instance, Cleveland Clinic adopted Ambience Healthcare's AI Scribe in 2025, rolling it out to over 4,000 physicians and advanced practice providers. The tool has documented 1 million patient encounters, saving two minutes per appointment and 14 minutes per day, and 76% of scheduled office visits now rely on it for documentation [4]. Similarly, Emory University's Ambient Listening Program reports a 78% activation rate and an 82% retention rate, with top users seeing a 7% uptick in same-day appointment closures and fitting in 0.33 additional appointments per day [6].

AI’s benefits extend beyond documentation. Predictive analytics help clinicians foresee patient outcomes and take preventive measures. Clinical decision support systems provide real-time guidance during diagnoses and treatment planning. In medical imaging, AI is enhancing the detection of abnormalities in radiology and pathology, delivering increasingly precise results. By taking over repetitive tasks, AI transforms EHRs from static record-keeping systems into dynamic tools that enable proactive, personalized care [5]. However, while these advancements boost efficiency, they also bring risks that cannot be overlooked.

Types of Risks in AI-Driven Clinical Care

As AI becomes more integrated into clinical workflows, it introduces several risks that healthcare organizations must carefully manage. Clinical risks are among the most concerning, as they directly impact patient safety. AI tools can sometimes produce incorrect outputs, fail to recognize rare conditions, or degrade in performance over time, leading to misdiagnoses, delayed diagnoses, or improper treatment recommendations. For example, Epic's sepsis predictor at the University of Michigan missed critical cases and generated false alarms, contributing to clinician alert fatigue [6, 16, 9, 8]. Over-reliance on these systems - especially when their inner workings are opaque - can also erode clinician judgment [8, 20].

Cybersecurity risks are another major concern. AI systems often access sensitive patient data and are connected to broader healthcare networks, making them attractive targets for cyberattacks. Additionally, compliance risks arise as organizations navigate complex regulations. Alarmingly, only 16% of health systems have a systemwide governance policy for AI usage and data access, leaving significant gaps in their preparedness [2].

Maintaining Clinician Control Over AI Systems

Keeping clinicians in charge of AI systems is critical to ensuring their safe and effective use. Human-in-the-loop systems are designed to let AI assist clinicians without replacing their judgment, with physicians retaining the final say in decision-making [17, 18, 21]. Striking this balance is essential for managing risks while reaping AI's benefits. A 2024 survey revealed that 66% of nearly 1,200 physicians were using AI in their work - a significant jump from 38% in 2023 [18, 20]. Despite this increase, many healthcare providers still lack clear governance frameworks to guide AI use.

To address this, healthcare organizations need robust AI governance structures. This includes creating an AI Registry to track all AI/ML tools for traceability and explainability, conducting cross-functional risk audits with clinical, technical, legal, and compliance teams, and mapping data lineage to identify potential biases [8]. AI-generated documentation should clearly indicate its origin and be reviewed by a clinician, and patient consent for AI’s role in their care must be obtained [7]. Organizations should also demand that third-party AI vendors provide audit trails, performance tests, and documentation on how algorithms are maintained [2]. Early involvement from clinicians is crucial to ensure those using these tools have a say in their governance and oversight [9].
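As a rough illustration of the AI Registry idea above, the sketch below models one registry entry and flags common governance gaps. The class name `RegistryEntry`, the field names, the helper `governance_gaps`, and the 365-day audit interval are all hypothetical choices made for this example; they do not come from any standard or cited source.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class RegistryEntry:
    """One AI/ML tool tracked in a hypothetical AI Registry."""
    name: str
    vendor: str
    clinical_use: str
    touches_phi: bool
    data_sources: List[str] = field(default_factory=list)  # mapped data lineage
    last_audit: Optional[date] = None        # most recent cross-functional audit
    has_vendor_audit_trail: bool = False     # vendor supplied audit trail / tests


def governance_gaps(entry: RegistryEntry, today: date,
                    max_audit_age_days: int = 365) -> List[str]:
    """Return a list of open governance gaps for one registry entry.

    The 365-day default is an assumed policy value, not a regulatory one.
    """
    gaps = []
    if not entry.data_sources:
        gaps.append("data lineage not mapped")
    if entry.last_audit is None:
        gaps.append("never audited")
    elif (today - entry.last_audit).days > max_audit_age_days:
        gaps.append("audit overdue")
    if not entry.has_vendor_audit_trail:
        gaps.append("vendor audit trail missing")
    return gaps
```

A governance team could run `governance_gaps` over every entry before each review meeting, so that tools with missing lineage or stale audits surface automatically rather than relying on memory.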

Cybersecurity and Privacy Risks in AI Systems

Cybersecurity Threats Specific to AI

AI systems in clinical settings face risks that go beyond the usual IT vulnerabilities. One major issue is data poisoning, where attackers tamper with the data used to train AI models, leading to incorrect outputs. Another concern is adversarial attacks, which exploit weaknesses by introducing harmful inputs. Shockingly, healthcare AI can be compromised with as few as 100–500 altered samples, and even a tiny data modification (just 0.001%) can result in diagnostic errors or incorrect treatment decisions[10][11].

The fallout from such attacks is serious. They can cause diagnostic mistakes, improper medication dosages, or flawed treatment recommendations. Sensitive patient data may also be exposed or manipulated. AI-powered medical devices - like pacemakers, insulin pumps, and imaging systems - are particularly at risk. These devices could face unauthorized changes, ransomware attacks, or denial-of-service (DoS) disruptions, putting patient safety and care continuity in jeopardy. The ECRI Institute named AI the top health technology hazard for 2025, and 92% of healthcare organizations reported AI-related attacks in 2024[11]. Past incidents have caused treatment delays and, tragically, even patient deaths[11].

The complexity of AI models, especially large language models and deep learning algorithms, makes it harder to detect errors and increases exposure to prompt injection attacks, where malicious inputs manipulate the AI’s behavior[11]. The rapid integration of these models into patient care and clinical documentation introduces new risks, such as data breaches and clinical errors. These growing threats emphasize the need for strict regulatory oversight in healthcare AI.

U.S. Regulations for AI in Healthcare

Several U.S. regulations aim to secure AI in healthcare. HIPAA protects electronic protected health information (ePHI), while HITECH promotes secure health IT adoption. The FDA provides guidelines for Software as a Medical Device (SaMD) and Software in a Medical Device (SiMD) throughout the Total Product Life Cycle (TPLC)[13]. Additionally, the NIST AI Risk Management Framework (AI RMF) offers voluntary guidance to help organizations manage AI risks and build trust in these systems[15]. Looking ahead, the Health Sector Coordinating Council (HSCC) is preparing 2026 guidance on AI cybersecurity risks. This guidance will cover best practices in areas like education, cyber defense, governance, secure-by-design principles, and third-party risk management[14].

Data Governance for AI Systems

Strong data governance is essential for safeguarding AI workflows. Using trusted data sources and enforcing rigorous data validation can help prevent data poisoning. Ongoing monitoring for unusual activity is also crucial to detect tampering. Organizations should secure data pipelines with strict access controls, maintain cryptographic audit trails for any data changes, and regularly retrain AI models using clean, verified datasets to ensure their reliability[12][11].
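One way to picture a cryptographic audit trail for data changes is a hash chain, where each record's hash covers the previous record's hash, so a later edit to any earlier record breaks verification. The sketch below is a minimal illustration using Python's standard `hashlib` and `json` modules. The function names `append_record` and `verify_chain` and the record layout are assumptions for this example; a production system would also need signing, access control, and storage outside the system being audited.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, change: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON form of the change."""
    payload = json.dumps(change, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_record(log: list, change: dict) -> dict:
    """Append a data-change record that is chained to the previous one."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    record = {"change": change, "prev_hash": prev_hash,
              "hash": _digest(prev_hash, change)}
    log.append(record)
    return record


def verify_chain(log: list) -> bool:
    """Recompute every hash; return False if any record was altered or reordered."""
    prev_hash = GENESIS_HASH
    for record in log:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["change"]):
            return False
        prev_hash = record["hash"]
    return True
```

Running `verify_chain` before each retraining job is one possible checkpoint: if it returns `False`, the training data history has been modified outside the logged process and the dataset should not be trusted until reviewed.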

De-identifying patient data before using it to train AI models is another important step, ensuring compliance with HIPAA and state privacy laws. Healthcare organizations should also establish clear policies for AI usage, limiting access to trained personnel and implementing strict protocols for handling sensitive information[12]. Separating training environments from production systems adds another layer of protection against data poisoning attacks[11]. Finally, comprehensive validation frameworks are critical. These frameworks should test AI models for both routine errors and adversarial manipulation, including robustness testing and generating adversarial examples, to maintain system reliability[11].

Creating an AI Risk Management Program

Developing a strong AI risk management program is essential for protecting patient data and maintaining clinicians' confidence in AI-assisted practices. This involves creating governance structures, carefully evaluating risks, and managing vendor relationships to ensure AI is integrated safely into clinical workflows.

Setting Up AI Governance

Start by forming a multidisciplinary governance team. This group should include clinicians, IT leaders, compliance officers, data scientists, legal professionals, safety and quality experts, bioethics specialists, and patient advocates. Their role is to evaluate the clinical validity of AI systems, assess technical infrastructure and security measures, ensure compliance with regulations, and incorporate patient perspectives.

Define clear decision-making processes and establish charters that outline how AI tools are approved for use, how risks are escalated, and when systems should be decommissioned if safety concerns arise. These steps help create transparent and accountable AI practices [16][17][18]. Once governance structures are in place, the focus shifts to systematically assessing and monitoring AI risks.

Assessing and Monitoring AI Risks

AI systems should be categorized based on their function, level of criticality, and the extent to which they interact with protected health information (PHI). Differentiate between tools still in the research phase and those already deployed in clinical settings, as each requires unique compliance measures and validation processes [20].
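The categorization described above could be sketched as a simple scoring rule. Everything in this example is an assumption made for illustration: the `Stage` enum, the `risk_tier` function, the weights, and the tier cut-offs are hypothetical and would need to be set by the organization's own governance team.

```python
from enum import Enum


class Stage(Enum):
    RESEARCH = "research"
    DEPLOYED = "deployed"


def risk_tier(influences_clinical_decisions: bool,
              touches_phi: bool,
              stage: Stage) -> str:
    """Assign a coarse risk tier to an AI system.

    Assumed weights: clinical influence counts double because it bears
    directly on patient safety; PHI access and live deployment add one each.
    """
    score = 0
    if influences_clinical_decisions:
        score += 2
    if touches_phi:
        score += 1
    if stage is Stage.DEPLOYED:
        score += 1

    if score >= 3:
        return "high"
    if score == 2:
        return "medium"
    return "low"
```

Under these assumed weights, a deployed diagnostic tool that reads PHI lands in the "high" tier, while a research prototype that only touches PHI lands in "low". The tier can then drive how much validation and how frequent a review each tool receives.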

Continuous monitoring is crucial throughout the lifecycle of AI systems. This helps identify issues like model degradation, unexpected errors, or "hallucinations" before they can impact patient care [19][20][3].
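A minimal sketch of one monitoring check follows: it compares a model's sensitivity over a recent window of confirmed cases against the sensitivity measured at validation time. The function names `sensitivity` and `degraded`, and the 0.05 tolerance, are hypothetical values chosen for this example. Real monitoring would track several metrics, often broken down by patient subgroup.

```python
from typing import Optional, Sequence


def sensitivity(predictions: Sequence[int],
                outcomes: Sequence[int]) -> Optional[float]:
    """Fraction of confirmed cases the model flagged (true-positive rate).

    Returns None when the window contains no confirmed cases.
    """
    true_pos = sum(1 for p, o in zip(predictions, outcomes) if p and o)
    actual_pos = sum(1 for o in outcomes if o)
    if actual_pos == 0:
        return None
    return true_pos / actual_pos


def degraded(baseline: float,
             predictions: Sequence[int],
             outcomes: Sequence[int],
             tolerance: float = 0.05) -> bool:
    """Flag the model if recent sensitivity falls more than `tolerance`
    below the validation baseline (tolerance is an assumed policy value)."""
    current = sensitivity(predictions, outcomes)
    if current is None:
        return False  # nothing to judge in this window
    return current < baseline - tolerance
```

For instance, with a validation baseline of 0.80, a window where the model caught 2 of 3 confirmed cases would be flagged for review, since 2/3 falls below the 0.75 threshold.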

Managing AI Vendor and Supply Chain Risks

Beyond internal evaluations, overseeing vendors and the supply chain is vital to maintaining the integrity of AI systems. Develop strict vendor selection criteria that require transparency about training data, algorithm design, and bias testing [22]. Vendors must also demonstrate compliance with HIPAA, implement strong security measures, and have clear incident response protocols, especially for cloud-based platforms handling PHI [3][22].

Ensure that vendor practices align with your organization's data governance policies. Service level agreements (SLAs) should set clear performance benchmarks, and organizations should prioritize AI solutions specifically designed for healthcare tasks [21][22]. The governance committee should regularly review and oversee vendor-provided AI tools to ensure they meet regulatory standards and deliver unbiased performance [3].

Future of AI in Healthcare and Risk Management

As AI continues to evolve, healthcare organizations are tasked with refining their risk management practices to keep pace with emerging technologies and shifting regulations. The goal is to ensure patient safety while embracing innovation.

Emerging AI Technologies in Clinical Practice

AI is reshaping healthcare by introducing personalized, predictive, and portable care options [1][25]. Tools like generative AI, predictive analytics, and virtual care platforms are automating repetitive tasks, boosting clinician efficiency, and enabling new diagnostic and patient care methods [1][23][24]. But these advancements come with challenges, such as potential data inaccuracies and algorithmic bias. To mitigate these risks, clinician oversight remains essential as AI technologies continue to evolve.

Clinician Involvement in AI Governance

Clinicians play a pivotal role in shaping AI governance, starting from the early stages of identifying problems and selecting tools to their deployment and eventual retirement [16][18]. Their involvement ensures that AI systems perform effectively in real-world scenarios and across diverse patient populations [16]. A concerning trend highlights the importance of this oversight: between 2022 and 2024, AI-related malpractice claims rose by 14% [18]. To address this, healthcare professionals need to actively assess AI limitations, analyze workflows before implementation, and maintain their medical judgment rather than relying solely on AI [26]. A global survey of 3,000 leaders across 14 countries revealed that 87% are worried about AI data bias exacerbating health disparities, while nearly half stressed the importance of transparency in AI to build trust [27].

Improving AI Risk Management Over Time

Healthcare organizations are moving toward proactive, ongoing risk management by using AI-powered analytics [29][19]. Real-time dashboards and regularly updated governance frameworks allow for scalable oversight while preserving critical human judgment [28]. By learning from past AI-related incidents and adapting to new regulatory standards, healthcare providers can strike a balance between innovation and the human oversight necessary to ensure patient safety.

FAQs

What are the key risks of using AI in healthcare?

The integration of AI into healthcare brings a host of risks that demand thoughtful management. Among the most pressing concerns are privacy breaches, cybersecurity threats, and data security vulnerabilities - all of which could expose sensitive patient information. On top of that, challenges like algorithm bias, diagnostic errors, and a lack of transparency in AI decision-making can directly affect the quality of care patients receive.

There are also technical and operational risks to consider. For instance, system malfunctions or model degradation over time could lead to inaccuracies, while an overreliance on AI might undermine the critical judgment and oversight of healthcare professionals. Tackling these challenges calls for comprehensive risk management strategies, frequent system evaluations, and a strong emphasis on compliance and ethical standards.

What steps can healthcare organizations take to secure their AI systems?

Healthcare organizations can protect their AI systems by adopting strong cybersecurity practices. These include real-time threat detection, vulnerability scanning, and continuous monitoring of AI-powered devices and data flows. Adding strict access controls and routinely validating data are also key steps in safeguarding sensitive information.

Regular risk assessments using frameworks like the NIST Cybersecurity Framework 2.0 and the NIST AI RMF are essential for spotting and addressing potential weak points. Training staff on secure AI usage and using incident reporting tools can further enhance system security. Additionally, adhering to regulations such as HIPAA helps uphold both data privacy and safe operations.

Why is it essential for clinicians to oversee AI in healthcare?

Clinician oversight plays a crucial role when working with AI in healthcare. While AI has the ability to improve decision-making, it isn’t without flaws. These systems can sometimes make mistakes, provide incorrect information, or even reflect biases that could jeopardize patient safety if not carefully monitored.

By thoroughly reviewing AI-generated outputs, clinicians can ensure that critical decisions are informed by accurate and contextually appropriate insights. This hands-on approach not only safeguards patients but also reduces the legal risks tied to depending solely on AI recommendations.

Key Points:

What are AI-augmented clinicians, and how do they impact healthcare?

AI-augmented clinicians are healthcare professionals who leverage artificial intelligence tools to enhance their practice.

- AI assists clinicians in diagnostics, treatment planning, and administrative tasks, allowing them to focus more on patient care.

- These tools act as a complement to human intelligence, not a replacement, ensuring that clinicians remain at the center of decision-making.

- By integrating AI, clinicians can improve efficiency, accuracy, and patient outcomes.

How is AI transforming the future of medical practice?

AI is revolutionizing medical practice in several ways:

- Improved diagnostics: AI-powered tools analyze medical images and patient data to detect diseases earlier and more accurately.

- Personalized medicine: AI enables tailored treatment plans based on individual patient data and predictive analytics.

- Administrative efficiency: AI automates repetitive tasks, such as documentation and scheduling, reducing physician burnout.

- Enhanced decision-making: AI provides clinicians with real-time insights and recommendations, improving clinical outcomes.

What are the key benefits of AI in healthcare?

The integration of AI into healthcare offers numerous advantages:

- Enhanced clinical decision-making: AI tools analyze vast datasets to provide evidence-based recommendations.

- Reduced physician workload: Automation of administrative tasks allows clinicians to focus on patient care.

- Improved patient outcomes: AI enables early detection of diseases and more effective treatment strategies.

- Cost efficiency: AI streamlines operations, reducing healthcare costs over time.

What challenges do AI-augmented clinicians face?

Despite its potential, AI integration comes with challenges:

- Algorithmic bias: AI systems trained on biased datasets can lead to unequal treatment outcomes.

- Data privacy concerns: Protecting sensitive patient information is critical as AI systems handle large volumes of data.

- Workflow integration: Ensuring that AI tools fit seamlessly into existing clinical workflows can be complex.

- Ethical considerations: Clinicians must navigate issues related to transparency, accountability, and patient trust.

How can clinicians prepare for AI integration in their practice?

Clinicians can take several steps to prepare for AI adoption:

- Gain AI literacy: Understanding the basics of AI and its applications in healthcare is essential.

- Participate in training programs: Educational initiatives can help clinicians learn how to use AI tools effectively.

- Engage in ethical discussions: Clinicians should be aware of the ethical implications of AI and advocate for responsible use.

- Collaborate with AI developers: Providing feedback during the development of AI tools ensures they meet clinical needs.

What does the future hold for AI in medical practice?

The future of AI in healthcare is promising:

- Widespread adoption: AI tools will become a standard part of clinical practice, improving efficiency and outcomes.

- Collaborative decision-making: Clinicians and AI systems will work together to provide the best possible care.

- Continuous innovation: Advances in AI technology will lead to new applications, such as predictive analytics and precision medicine.

- Improved patient engagement: AI will empower patients with personalized health insights and recommendations.