Future-Ready Organizations: Aligning People, Process, and AI Technology

Post Summary

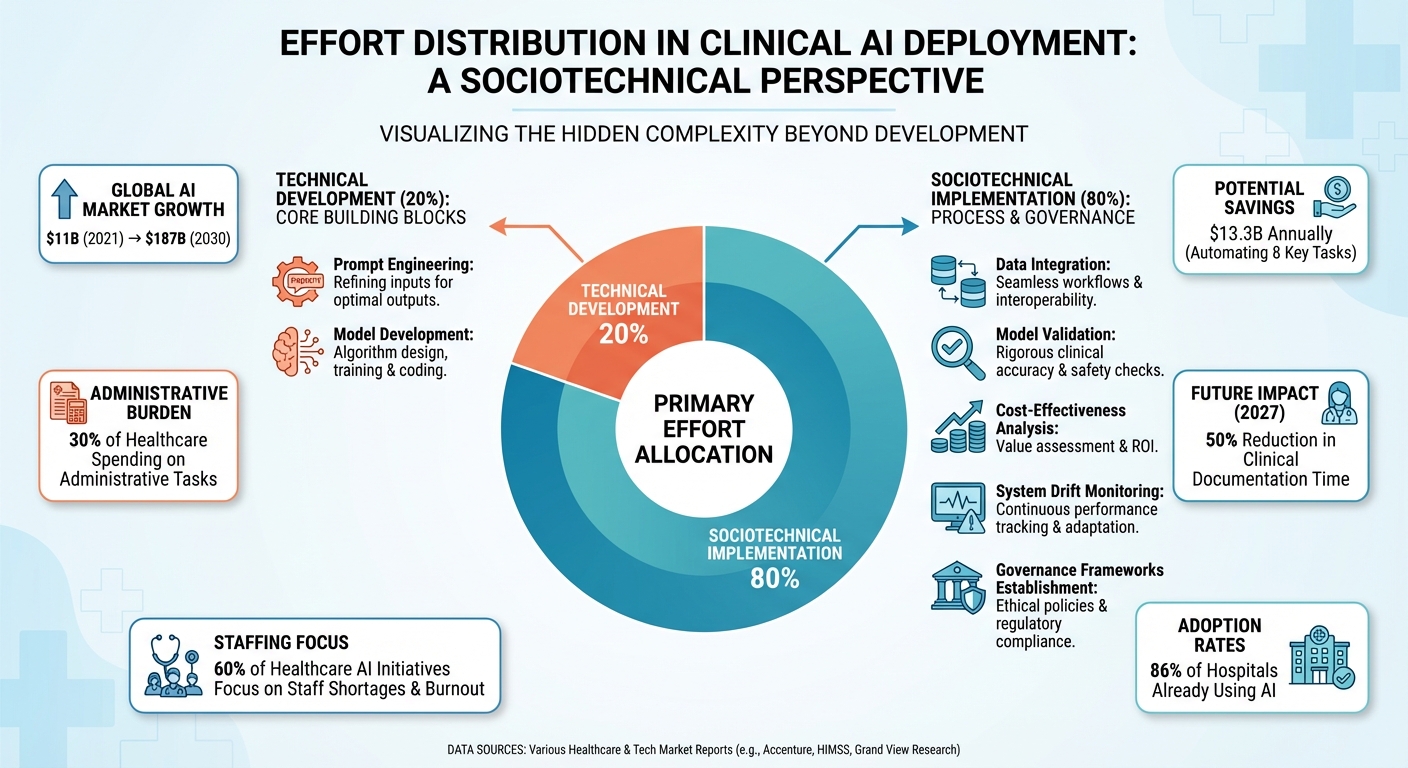

AI is transforming healthcare, but success hinges on aligning people, processes, and technology. The global health AI market is projected to grow from $11 billion in 2021 to over $187 billion by 2030, underscoring its potential. However, without proper integration, AI can lead to inefficiencies, poor outcomes, and lost trust. To truly improve patient care and operations, organizations must focus on:

- Outcome-driven AI adoption: Prioritize tools that enhance care and streamline workflows.

- Collaboration: Engage healthcare professionals and AI specialists to bridge expertise gaps.

- Workflow optimization: Automate repetitive tasks like documentation and approvals to save time and resources.

- Training: Provide role-specific education to ensure effective AI usage.

- Governance: Implement ethical and transparent frameworks to manage risks and compliance.

Strategies for Aligning People, Process, and AI

Clinical AI Deployment: 80% Sociotechnical Implementation vs 20% Technical Development

Integrating AI into healthcare isn't just about installing cutting-edge technology - it's about bridging the expertise gap between AI specialists and healthcare professionals. Both groups bring unique skills to the table, and successful implementation depends on thoughtful collaboration [2]. Introducing AI reshapes how tasks are distributed, alters professional roles, and shifts resource allocation within organizations [2]. A structured, well-planned approach helps reduce risks and ensures effective adoption [1].

Interestingly, less than 20% of the effort in clinical AI deployment is spent on tasks like prompt engineering and model development. The bulk - over 80% - focuses on sociotechnical implementation. This includes integrating data, validating models, ensuring cost-effectiveness, addressing system drift, and establishing governance frameworks [8]. This breakdown highlights the need to focus on people and processes as much as on the technology itself. When done right, these strategies foster collaboration, streamline workflows, and provide targeted training, creating a foundation for safe and effective AI integration.

Building Collaboration Between AI Tools and Healthcare Professionals

Collaboration begins by engaging all relevant stakeholders - patients, caregivers, clinicians, and support staff - early in the design and implementation process [3][5][6]. Transparent communication about what AI can and cannot do builds trust and secures support from everyone involved [3][5][6]. A good example comes from Ochsner LSU Shreveport, where AI-powered stroke triage tools significantly reduced door-to-puncture and door-to-CT times by simplifying complex workflows [4].

Human oversight remains essential when using AI for critical decisions. AI tools should complement clinical judgment, not replace it. For instance, platforms like Regard and Pieces integrate with electronic health records to offer real-time diagnostic suggestions, treatment recommendations, and alerts about drug interactions. However, the final decisions always rest with clinicians [5]. This balance ensures that AI enhances decision-making efficiency without compromising the nuanced expertise healthcare professionals bring to patient care.

Optimizing Workflows for AI Adoption

Before rolling out AI, organizations need to evaluate existing workflows, including tasks, technologies, and the roles of personnel involved. Automation will inevitably alter how work gets done, so the focus should be on improving safety, efficiency, and value while ensuring the technology serves all patient groups fairly [3][5].

Start by identifying repetitive, manual processes that consume significant time and resources - like prior authorizations, clinical documentation, and reimbursement activities [3][7]. These administrative tasks account for nearly 30% of healthcare spending, so automating them offers substantial savings: automating just eight key administrative tasks could save $13.3 billion annually in the U.S. [5]. Gartner projects that by 2027, generative AI could cut clinical documentation time by 50%, with 60% of healthcare AI initiatives focusing on addressing staff shortages and burnout [5].
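One way to act on "identify repetitive, manual processes" is to rank candidate tasks by the staff time automation might free up. The sketch below is illustrative and does not come from the source. The `CandidateTask` fields are hypothetical, and so are all the numbers: monthly volume, minutes per instance, and the "automatable share." In practice those inputs would come from your own time studies.

```python
from dataclasses import dataclass


@dataclass
class CandidateTask:
    name: str
    monthly_volume: int          # times the task is performed per month
    minutes_per_instance: float  # average manual handling time, in minutes
    automatable_share: float     # assumed fraction of that time automation removes (0-1)

    def annual_hours_saved(self) -> float:
        # volume x minutes x automatable share, scaled to a year and converted to hours
        return self.monthly_volume * 12 * self.minutes_per_instance * self.automatable_share / 60


def rank_candidates(tasks: list[CandidateTask]) -> list[tuple[str, float]]:
    """Return (task name, estimated annual hours saved), largest first."""
    scored = [(t.name, round(t.annual_hours_saved(), 1)) for t in tasks]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical inputs for illustration only; replace with local time-study data.
tasks = [
    CandidateTask("prior authorization", monthly_volume=400, minutes_per_instance=20, automatable_share=0.5),
    CandidateTask("clinical documentation", monthly_volume=3000, minutes_per_instance=10, automatable_share=0.5),
    CandidateTask("claims follow-up", monthly_volume=800, minutes_per_instance=15, automatable_share=0.3),
]

for name, hours in rank_candidates(tasks):
    print(f"{name}: ~{hours} staff hours/year")
```

This ranking estimates time only. A real prioritization would also weigh clinical risk, integration effort, and cost.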

AI-powered tools like Nuance Dragon Medical One, Augmedix, DeepScribe, Abridge, and Charting Hero can automatically transcribe patient interactions, extract key details, and create structured notes for electronic health records. These tools significantly reduce documentation time and alleviate clinician burnout [5]. Similarly, platforms such as CoverMyMeds and Cohere Health streamline prior authorization processes by analyzing clinical data, compiling necessary documentation, and submitting requests electronically. This speeds up approvals and reduces delays in care [5].

Integration with existing IT systems is critical. AI solutions must work seamlessly with electronic health records and other infrastructure, using APIs and FHIR standards to avoid data silos and disruptions [5][7]. Reliable data is the backbone of AI effectiveness, so investing in data cleansing, standardization, and ongoing maintenance is essential [5].
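To make the FHIR point concrete: a FHIR R4 search returns a `Bundle` whose `entry` items each wrap a `resource`. The minimal sketch below flattens the `Patient` resources in such a Bundle into simple records that a downstream AI tool could consume. It has two assumptions worth stating up front. First, it uses a hard-coded sample response instead of calling a live server. Second, the output record shape (`id`, `family`, `given`, `birthDate`) is a hypothetical choice, not any vendor's interface.

```python
import json

# Trimmed example of a FHIR R4 searchset Bundle; a real server response carries more fields.
SAMPLE_BUNDLE = """
{
  "resourceType": "Bundle",
  "type": "searchset",
  "entry": [
    {"resource": {"resourceType": "Patient", "id": "p1",
                  "name": [{"family": "Rivera", "given": ["Ana"]}],
                  "birthDate": "1980-04-12"}},
    {"resource": {"resourceType": "OperationOutcome", "id": "warn1"}},
    {"resource": {"resourceType": "Patient", "id": "p2",
                  "name": [{"family": "Chen", "given": ["Li", "Wei"]}]}}
  ]
}
"""


def patients_from_bundle(bundle_json: str) -> list[dict]:
    """Flatten Patient resources in a FHIR Bundle into simple records."""
    bundle = json.loads(bundle_json)
    if bundle.get("resourceType") != "Bundle":
        raise ValueError("expected a FHIR Bundle")
    records = []
    for entry in bundle.get("entry", []):
        resource = entry.get("resource", {})
        if resource.get("resourceType") != "Patient":
            continue  # skip other resource types, e.g. an OperationOutcome returned with the search
        name = (resource.get("name") or [{}])[0]  # use the first listed name, if any
        records.append({
            "id": resource.get("id"),
            "family": name.get("family"),
            "given": " ".join(name.get("given", [])),
            "birthDate": resource.get("birthDate"),  # None when the server omits it
        })
    return records


print(patients_from_bundle(SAMPLE_BUNDLE))
```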

To ensure AI tools deliver on their promises, organizations should implement feedback loops for continuous monitoring. Track quantitative metrics such as ROI, cost savings, and error rates alongside qualitative measures such as staff and patient satisfaction [5][7]. Begin with pilot projects or smaller-scale implementations to demonstrate early wins, build trust, and gain momentum before scaling up to more transformative applications [3][6][7]. These workflow improvements not only boost efficiency but also create a solid foundation for managing risks and achieving operational goals. Once workflows are optimized, the next step is to prepare staff with the right skills for AI integration.
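A pilot's feedback loop can start as a simple gate that combines the quantitative and qualitative measures mentioned above. The sketch below is a hedged illustration, not a standard method. It assumes ROI is computed as (savings - cost) / cost. It also assumes a staff survey scored on a 1-5 scale. The gate thresholds are hypothetical placeholders: ROI at least 0, error rate at most 5%, and mean satisfaction at least 3.5.

```python
from dataclasses import dataclass


@dataclass
class PilotResults:
    total_cost: float          # licences, integration and training for the pilot period
    estimated_savings: float   # e.g. staff hours saved x loaded hourly rate
    outputs_reviewed: int      # AI outputs audited by staff
    outputs_with_errors: int   # audited outputs that needed correction
    staff_satisfaction: float  # mean survey score on an assumed 1-5 scale

    def roi(self) -> float:
        """Simple ROI: (savings - cost) / cost."""
        if self.total_cost <= 0:
            raise ValueError("total_cost must be positive")
        return (self.estimated_savings - self.total_cost) / self.total_cost

    def error_rate(self) -> float:
        return self.outputs_with_errors / self.outputs_reviewed if self.outputs_reviewed else 0.0


def unmet_criteria(r: PilotResults, min_roi: float = 0.0,
                   max_error_rate: float = 0.05, min_satisfaction: float = 3.5) -> list[str]:
    """Return the unmet scale-up criteria; an empty list means every gate passed."""
    gaps = []
    if r.roi() < min_roi:
        gaps.append(f"ROI {r.roi():.2f} below {min_roi:.2f}")
    if r.error_rate() > max_error_rate:
        gaps.append(f"error rate {r.error_rate():.1%} above {max_error_rate:.1%}")
    if r.staff_satisfaction < min_satisfaction:
        gaps.append(f"staff satisfaction {r.staff_satisfaction} below {min_satisfaction}")
    return gaps


pilot = PilotResults(total_cost=100_000, estimated_savings=150_000,
                     outputs_reviewed=1000, outputs_with_errors=30, staff_satisfaction=4.1)
print(unmet_criteria(pilot) or "ready to scale")
```

The function returns the list of gaps rather than a bare yes/no. That makes the output directly usable in a review discussion.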

Training Healthcare Staff for AI Implementation

Effective AI adoption hinges on role-specific training. While all clinicians need a basic understanding of AI - how it works, its role in healthcare, and its strengths and limitations - generic training won't cut it. Tailored programs ensure each team member knows how to use AI tools effectively in their specific tasks, whether it's optimizing dictation, retrieving data, or interpreting AI-generated insights [9][10][11][12].

Hands-on, scenario-based training is particularly effective. Simulating real-world situations allows staff to practice using AI tools in a controlled environment [5][6][7]. It's also essential to communicate a clear vision: AI is here to enhance staff capabilities, not replace them. Gathering feedback during the training process can address concerns and build enthusiasm [5][6][7]. This approach transforms potential resistance into advocacy, turning healthcare professionals into champions of AI who understand its capabilities and limitations. Striking the right balance between technology and human oversight is key to achieving success in healthcare AI integration.

AI Governance Frameworks in Healthcare

As AI continues to weave itself into the fabric of healthcare operations, governance frameworks play a critical role in ensuring these tools are used ethically, securely, and effectively. Without proper oversight, AI systems can pose risks such as patient harm, compliance breaches, and erosion of trust. A well-structured governance framework applies ethical and regulatory standards to everyday operations, striking a balance between embracing new technologies and maintaining safety [18].

Healthcare organizations face numerous challenges when it comes to adopting AI responsibly. From navigating complex regulations to the absence of practical governance models, the path is anything but straightforward [17]. Effective governance goes beyond compliance - it also involves selecting appropriate use cases, validating AI tools, training personnel, and continuously monitoring performance [16]. These challenges underscore the importance of foundational governance principles.

Core Principles of AI Governance

The foundation of effective AI governance in healthcare lies in principles that guide the ethical, secure, and responsible use of AI systems [13][15]. Transparency, accountability, and ethical integrity are essential for building trust among patients, clinicians, healthcare organizations, and regulatory bodies - a trust that is crucial for AI's broader adoption [16].

On December 4, 2025, the Department of Health and Human Services (HHS) introduced an AI strategy that emphasized robust oversight, risk management, adherence to ethical standards, and a steadfast commitment to civil rights and privacy laws. This initiative is supported by the creation of a dedicated AI Governance Board [19].

These principles are not just theoretical - they directly influence day-to-day practices. For example, transparency ensures that clinicians and patients clearly understand how AI tools arrive at their recommendations. Accountability establishes clear ownership for addressing errors or unexpected outcomes from AI systems. Ethical standards safeguard patient privacy, mitigate bias, and promote fairness. Together, these principles help align AI efforts with healthcare organizations' operational and security priorities.

Tools for Managing AI Risk and Oversight

Managing AI-related risks effectively requires tools that can evaluate potential risks before deployment, monitor system performance in real time, and trigger alerts when issues arise. These tools help maintain alignment with clinical needs and regulatory standards.

The HHS AI strategy highlights the importance of strong risk controls and oversight mechanisms to sustain public trust [19]. This includes implementing robust security protocols, conducting regular audits, and ensuring compliance with regulations like HIPAA. Additionally, organizations must address risks related to data quality, algorithmic bias, system drift, and the integration of AI tools with existing clinical workflows.
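For system drift, one widely used check is the Population Stability Index (PSI). The source does not name a method, so PSI is swapped in here purely as an example. PSI compares the distribution of recent model scores with a baseline, such as the scores seen during validation. With e_i and a_i as the baseline and recent shares in bin i, PSI is the sum of (a_i - e_i) * ln(a_i / e_i). This minimal sketch makes three assumptions: equal-width bins over the baseline's range, the informal warn/alert levels of 0.1 and 0.25, and hypothetical score samples.

```python
import math


def population_stability_index(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i).

    Bin edges are equal-width over the baseline's range; a small floor avoids log(0).
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(psi: float, warn: float = 0.1, alert: float = 0.25) -> str:
    # 0.1 / 0.25 are informal rules of thumb, not clinical or regulatory thresholds
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "ok"


# Hypothetical score samples: baseline from validation, recent from production.
baseline = [i / 100 for i in range(100)]
recent_same = list(baseline)
recent_shifted = [min(i / 100 + 0.5, 0.99) for i in range(100)]

print(drift_status(population_stability_index(baseline, recent_same)))
print(drift_status(population_stability_index(baseline, recent_shifted)))
```

A PSI alert indicates that inputs or scores have shifted. It does not by itself show that clinical accuracy has dropped, so the alert should trigger a human review rather than an automatic change.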

The most effective strategies are those embedded in centralized platforms. These platforms automate workflows and provide a clear, organization-wide view of AI-related risks, simplifying oversight and ensuring continuous alignment with healthcare objectives.

Real Examples of AI Governance Implementation

Organizations are translating these principles and tools into real-world governance practices. Many healthcare institutions are actively establishing governance frameworks to manage AI responsibly while meeting compliance standards [16]. Governance committees - comprising clinical leaders, IT specialists, compliance officers, and risk management experts - play a pivotal role. These committees review AI use cases, approve vendor partnerships, set validation protocols, and monitor system performance post-deployment. By bringing together diverse expertise, they ensure AI initiatives align with organizational goals, ethical considerations, and regulatory demands.

Clear policies and procedures are essential for effective governance. These should outline roles, responsibilities, and decision-making processes. For instance, organizations need criteria for selecting AI tools, performance thresholds, and escalation protocols for addressing emerging risks. Detailed documentation and audit trails further enhance transparency and simplify compliance reviews. By turning governance principles into actionable strategies, healthcare organizations can confidently adopt AI while safeguarding patient welfare and maintaining trust.
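The combination of selection criteria, performance thresholds, escalation, and audit trails can be sketched as a single review step. In the minimal illustration below, every criterion name and the sensitivity threshold of 0.85 are hypothetical placeholders. A committee would define its own. Every decision, approval or escalation, is appended to the trail with a UTC timestamp so compliance reviews can reconstruct what was decided and why.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical selection criteria and threshold a governance committee might adopt.
REQUIRED_CRITERIA = ("validated_on_local_data", "bias_tested", "vendor_disclosed_training_data")
MIN_SENSITIVITY = 0.85


@dataclass
class AuditTrail:
    entries: list[dict] = field(default_factory=list)

    def record(self, tool: str, decision: str, reason: str, reviewer: str) -> None:
        # Append-only: entries are added, never edited or deleted.
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "tool": tool, "decision": decision, "reason": reason, "reviewer": reviewer,
        })


def review_tool(tool: str, evidence: dict, trail: AuditTrail, reviewer: str) -> str:
    """Approve a tool that meets every criterion; otherwise escalate with the reason."""
    missing = [c for c in REQUIRED_CRITERIA if not evidence.get(c, False)]
    sensitivity = evidence.get("sensitivity", 0.0)
    if missing:
        decision, reason = "escalate", "missing: " + ", ".join(missing)
    elif sensitivity < MIN_SENSITIVITY:
        decision, reason = "escalate", f"sensitivity {sensitivity:.2f} < {MIN_SENSITIVITY:.2f}"
    else:
        decision, reason = "approve", "all criteria met"
    trail.record(tool, decision, reason, reviewer)
    return decision


trail = AuditTrail()
review_tool("sepsis alert", {"validated_on_local_data": True, "bias_tested": True,
                             "vendor_disclosed_training_data": True, "sensitivity": 0.9},
            trail, "AI governance committee")
review_tool("triage assistant", {"validated_on_local_data": True, "sensitivity": 0.95},
            trail, "AI governance committee")
for entry in trail.entries:
    print(entry["tool"], entry["decision"], entry["reason"])
```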

Using Censinet RiskOps™ for People-Process-AI Alignment

Healthcare organizations face the challenge of aligning AI capabilities with human expertise and operational workflows. Censinet RiskOps™ steps in as a centralized platform, bringing together risk data from clinical, operational, financial, and workforce systems. By eliminating data silos, it provides real-time, cross-departmental visibility, enabling faster and more informed risk decisions. This unified perspective lays the groundwork for a closer look at the platform’s features, cybersecurity tools, and scalable risk management capabilities.

Censinet RiskOps™ Platform Features

At its core, the platform excels by automating and simplifying risk assessments while keeping human oversight intact. Censinet AI speeds up third-party risk evaluations with tools like rapid security questionnaires, automated evidence summaries, integration detail tracking, and detailed risk reporting.

The platform acts as a centralized hub, routing key findings and tasks to the appropriate stakeholders - such as members of AI governance committees - for review and approval. An intuitive AI risk dashboard aggregates real-time data, ensuring that the right teams address the right issues at the right time. This streamlined approach promotes consistent oversight and accountability across the organization, creating a strong framework for managing AI-related risks.

Improving Cybersecurity with Censinet AI

Censinet AI offers healthcare organizations a way to scale their cyber risk management efforts without sacrificing control. It applies human-guided automation to essential tasks, such as evidence validation, policy creation, and risk mitigation, while configurable rules and review processes keep human decision-making central to operations. This balance strengthens cybersecurity while maintaining operational integrity.

This "human-in-the-loop" model is especially critical for healthcare AI adoption. As highlighted in the Holland & Knight Healthcare Blog:

AI will be harnessed to augment the workforce, not replace it, improving how HHS employees do their jobs. [19]

By adopting this approach, healthcare professionals can safely engage with AI tools, assess their strengths and limitations, critically analyze outputs, and report anomalies through well-designed incident reporting systems.

Managing Risk at Scale

Censinet RiskOps™ also enables seamless integration with existing systems, supporting healthcare interoperability standards and offering APIs and connectors for tools like electronic health records, HR systems, and incident reporting platforms. This smooth data exchange helps ensure that AI models are trained and monitored on clean, structured data, while real-time pipelines enable continuous monitoring and governance. The result? Greater transparency and trust in AI outputs.

The platform’s incident reporting features further bolster risk management by documenting and categorizing AI-related issues, identifying patterns, tracking response times, and feeding insights back into process improvements. By closely monitoring AI algorithm performance, healthcare organizations can ensure their systems rely on datasets that accurately reflect their target populations, reducing the risk of bias or clinical errors.

This integrated approach allows healthcare leaders to scale risk management operations, tackle complex third-party and organizational risks with precision, and align with industry standards. By combining automation and human expertise, Censinet RiskOps™ achieves the ideal balance of people, processes, and AI - ultimately safeguarding patient care and enhancing operational efficiency.


Steps to Develop AI Governance Maturity in Healthcare

Building strong AI governance in healthcare requires more than just adopting the latest tools - it’s about creating a system that aligns people, processes, and technology to manage risks effectively. This isn’t a one-and-done task; it’s an ongoing effort that demands thoughtful planning, collaboration across departments, and constant refinement. Healthcare organizations need a clear plan to progress from basic AI use to advanced, responsible integration that prioritizes patient safety and improves care delivery.

Evaluating Current AI Capabilities and Risks

The first step is to establish a formal AI governance structure with clear policies on how AI will be implemented, monitored, and utilized across the organization [20]. This involves assembling a diverse team of professionals - clinical leaders, IT specialists, compliance officers, ethicists, patient advocates, and data scientists - dedicated to assessing current AI tools and identifying potential risks [14][21][15].

Begin by conducting a thorough review of all existing AI systems. Resources like the AMA STEPS Forward® "Governance for Augmented Intelligence" toolkit can help lay the groundwork for this process [22]. Catalog every AI tool in use, from clinical decision support systems to tools for administrative automation. As Dr. Margaret Lozovatsky, Vice President of Digital Health Innovations at the AMA, explains:

AI is becoming integrated into the way that we deliver care. The technology is moving very, very quickly. It's moving much faster than we are able to actually implement these tools, so setting up an appropriate governance structure now is more important than it's ever been because we have never seen such quick rates of adoption. [22]

Next, systematically evaluate the risks and biases associated with each tool. This includes auditing training data for demographic representation, testing models across diverse patient groups, and ensuring algorithms perform equitably for all populations. Alarmingly, a recent survey found that only 61% of hospitals using predictive AI tools validated them against local data before deployment, and fewer than half tested for bias - leaving significant gaps that could jeopardize patient safety [27].
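The first two checks, demographic representation and performance across patient groups, can begin with a simple per-group report. The sketch below is a basic screen, not a full fairness audit. It uses sensitivity (true-positive rate) as an example metric. The group labels and the 5% share and 0.05 gap thresholds are all hypothetical.

```python
from collections import defaultdict


def subgroup_report(records, min_share: float = 0.05, max_gap: float = 0.05) -> dict:
    """records: iterable of (group, y_true, y_pred) with binary labels (1 = condition present).

    For each group, report its share of the dataset and its sensitivity (true-positive rate),
    flagging groups that are under-represented or whose sensitivity trails the best group.
    """
    counts, positives, true_pos = defaultdict(int), defaultdict(int), defaultdict(int)
    for group, y_true, y_pred in records:
        counts[group] += 1
        if y_true == 1:
            positives[group] += 1
            true_pos[group] += int(y_pred == 1)
    total = sum(counts.values())
    sensitivity = {g: true_pos[g] / positives[g] for g in counts if positives[g]}
    best = max(sensitivity.values(), default=0.0)

    report = {}
    for g in counts:
        flags = []
        share = counts[g] / total
        if share < min_share:
            flags.append("under-represented")
        if g not in sensitivity:
            flags.append("no positive cases")
        elif best - sensitivity[g] > max_gap:
            flags.append("sensitivity gap")
        report[g] = {"share": share, "sensitivity": sensitivity.get(g), "flags": flags}
    return report


# Hypothetical local validation results for three patient groups.
records = ([("A", 1, 1)] * 9 + [("A", 1, 0)] + [("A", 0, 0)] * 10
           + [("B", 1, 1)] * 6 + [("B", 1, 0)] * 4 + [("B", 0, 0)] * 10
           + [("C", 1, 0)])
for group, row in subgroup_report(records).items():
    print(group, round(row["share"], 3), row["sensitivity"], row["flags"])
```

A group flagged both for size and for a gap, like "C" here, usually needs more local data before its performance can be judged reliably.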

This initial assessment isn’t just about identifying risks; it sets the stage for ongoing improvements and alignment with industry standards.

Creating Continuous Improvement Processes

Once the evaluation is complete, the focus shifts to creating processes that keep your AI governance framework responsive to new technological developments. AI governance must be adaptable to remain effective [23][26]. Establish workflows that cover every stage of an AI tool’s lifecycle, from pre-implementation validation to post-deployment monitoring [16]. Keeping an updated inventory of all AI tools and implementing structured evaluation processes are key steps.
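An AI tool inventory that tracks lifecycle stage can double as a review scheduler. The minimal sketch below assumes that each tool has an accountable owner, a lifecycle stage, and a review cadence. The stage names, the 180-day default, and the example tools are hypothetical. Retired tools stay in the inventory for the record but drop out of the review queue.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum


class Stage(Enum):
    PROPOSED = "proposed"
    VALIDATION = "pre-implementation validation"
    PILOT = "pilot"
    PRODUCTION = "production"
    RETIRED = "retired"


@dataclass
class AIToolRecord:
    name: str
    category: str                    # e.g. "clinical decision support", "administrative automation"
    owner: str                       # accountable person or team
    stage: Stage
    last_reviewed: date
    review_interval_days: int = 180  # hypothetical cadence; higher-risk tools may need shorter intervals

    def review_due(self, today: date) -> bool:
        if self.stage is Stage.RETIRED:
            return False  # kept for the record, no further reviews
        return today >= self.last_reviewed + timedelta(days=self.review_interval_days)


def overdue_reviews(inventory: list[AIToolRecord], today: date) -> list[str]:
    """Names of tools whose scheduled review date has passed, sorted alphabetically."""
    return sorted(t.name for t in inventory if t.review_due(today))


inventory = [
    AIToolRecord("ambient scribe", "administrative automation", "CMIO office", Stage.PRODUCTION, date(2025, 1, 1)),
    AIToolRecord("sepsis model", "clinical decision support", "quality team", Stage.PILOT, date(2025, 6, 1), 90),
    AIToolRecord("legacy triage rules", "clinical decision support", "emergency dept", Stage.RETIRED, date(2023, 1, 1)),
]
print(overdue_reviews(inventory, date(2025, 7, 15)))
```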

Regular monitoring and auditing of AI systems are critical to ensure they meet safety, ethical, and performance standards [16][26][22]. Governance processes should be designed with flexibility in mind, allowing for periodic reviews and updates [25]. For instance, if risks or biases are identified, retrain models with updated data that better reflect real-world scenarios, and make necessary adjustments to improve fairness [26].

Additionally, create mechanisms for reporting issues internally, with clear escalation pathways for addressing AI performance problems [16]. This feedback loop should focus not just on technical accuracy but also on real-world outcomes for patients [16]. The stakes are high: between 2022 and 2024, AI-related malpractice claims rose by 14%, underscoring the urgency of robust governance processes [23]. These steps not only protect patients but also enhance operational efficiency.
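An internal reporting mechanism with escalation pathways can be sketched as a severity-based router plus a summary of incidents. The summary gives counts by category, median time to resolution, and the number of incidents still open. In the sketch below, the severity levels, escalation recipients, categories, and incidents are all hypothetical. The summary covers only the technical side of the feedback loop, so patient-outcome follow-up would still need its own process.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional

# Hypothetical escalation pathway; each organization defines its own.
ESCALATION = {
    "low": "tool owner",
    "moderate": "AI governance committee",
    "high": "patient safety officer and governance committee chair",
}


@dataclass
class Incident:
    tool: str
    category: str                        # e.g. "incorrect output", "bias concern", "downtime"
    severity: str                        # one of the ESCALATION keys
    reported: datetime
    resolved: Optional[datetime] = None  # None while the incident is still open


def route(incident: Incident) -> str:
    """Return who should receive the incident, based on severity."""
    return ESCALATION[incident.severity]


def summarize(incidents: list[Incident]) -> dict:
    """Counts by category, median hours to resolution, and number still open."""
    hours = [(i.resolved - i.reported).total_seconds() / 3600
             for i in incidents if i.resolved is not None]
    return {
        "by_category": dict(Counter(i.category for i in incidents)),
        "median_hours_to_resolve": median(hours) if hours else None,
        "open": sum(1 for i in incidents if i.resolved is None),
    }


incidents = [
    Incident("ambient scribe", "incorrect output", "low", datetime(2025, 3, 1, 9), datetime(2025, 3, 1, 13)),
    Incident("ambient scribe", "incorrect output", "moderate", datetime(2025, 3, 2, 9), datetime(2025, 3, 3, 9)),
    Incident("sepsis model", "bias concern", "high", datetime(2025, 3, 4, 9)),
]
print(route(incidents[2]))
print(summarize(incidents))
```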

Matching AI Practices with Industry Standards

To ensure compliance and maintain patient trust, align your AI governance practices with regulatory requirements and best practices. For example, the U.S. Department of Health and Human Services (HHS) released a 21-page AI strategy on December 4, 2025, requiring divisions to implement minimum risk management practices for high-impact AI systems by April 3, 2026. This includes annual public reporting of AI use cases and risk assessments, overseen by the HHS AI Governance Board [19].

Rather than creating separate silos, integrate AI governance into existing clinical, operational, and data governance processes [25]. Build accountability into your AI strategy, with leadership regularly reviewing progress and ensuring alignment with organizational goals [19]. As Sunil Dadlani, EVP and Chief Information & Digital Transformation Officer at Atlantic Health System, notes:

The challenge [with governance] is to find the right balance of enough regulation and compliance framework without inhibiting or slowing down the innovation potential that comes with these technologies. [25]

Consider joining collaborative efforts like the Trustworthy & Responsible AI Network (TRAIN)™, which allows organizations to share insights on procuring and implementing AI solutions [24]. For instance, Duke Health and Vanderbilt University Medical Center (VUMC) are leading the Health AI Maturity Model Project, supported by a $1.25 million grant from the Gordon and Betty Moore Foundation. This project aims to help health systems evaluate their AI readiness, including governance, data quality, workforce skills, and ongoing monitoring [24].

Transparency from vendors is also crucial. Ensure they provide information about their training data, bias testing, and compliance with regulations. Define clear criteria for when AI tools should be retrained or retired if their performance declines. This reinforces the commitment to patient safety while maintaining operational excellence [14][27].
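"Clear criteria for when AI tools should be retrained or retired" can be written down as an explicit decision rule tied to a monitored metric. The sketch below is illustrative. It uses a decline from the validated baseline in a metric such as AUROC. The drop thresholds (0.03 to retrain, 0.08 to retire) and the limit of two retraining attempts are hypothetical placeholders that a governance committee would set.

```python
def lifecycle_action(baseline_score: float, recent_score: float, retrains_so_far: int = 0,
                     retrain_drop: float = 0.03, retire_drop: float = 0.08,
                     max_retrains: int = 2) -> str:
    """Map a decline in a monitored performance metric (e.g. AUROC) to keep / retrain / retire.

    All thresholds are hypothetical placeholders, not published standards.
    """
    drop = baseline_score - recent_score
    if drop >= retire_drop:
        return "retire"  # decline beyond the retirement threshold
    if drop >= retrain_drop:
        # retraining that has already been tried repeatedly is itself a signal to retire
        return "retire" if retrains_so_far >= max_retrains else "retrain"
    return "keep"


print(lifecycle_action(0.85, 0.84))
print(lifecycle_action(0.85, 0.80))
print(lifecycle_action(0.85, 0.80, retrains_so_far=2))
print(lifecycle_action(0.85, 0.75))
```

Writing the rule as code makes the thresholds explicit and reviewable. It also lets a vendor be asked to report against the same metric.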

Conclusion: Building Future-Ready Healthcare Organizations

Creating a healthcare organization that’s ready for the future means blending advanced technology with the expertise of people. With 86% of hospitals and health systems already using AI [29] and nearly two-thirds of physicians expected to rely on AI tools by 2024 [30], the focus isn’t on whether to adopt AI but on how to do it in a thoughtful and responsible way. This echoes the importance of aligning people, processes, and technology, as discussed earlier.

The potential of AI in healthcare is immense. It’s reshaping how diagnoses are made, tailoring treatments to individual patients, forecasting outcomes, and simplifying administrative tasks [20]. But realizing these benefits takes more than just technology - it requires strong governance, ongoing monitoring, targeted training, and open communication with patients [28][20][29].

Dr. Michael E. Matheny highlights the importance of a measured approach that prioritizes the needs of patients and communities [29]. This involves setting clear accountability, weaving AI governance into existing clinical and operational workflows, and staying adaptable as technology and regulations evolve. These steps build a foundation for long-term success.

Organizations that thrive in this space treat AI governance as a continuous effort. They establish feedback loops, conduct regular validations, and align closely with regulatory standards. As Stanford’s Drs. Christian Rose and Jonathan H. Chen point out:

The effective integration of AI in medicine depends not on algorithms alone, but on our ability to learn, adapt and thoughtfully incorporate these tools into the complex, human-driven healthcare system [29].

When thoughtfully implemented, AI doesn’t just enhance healthcare; it transforms it into a system that is safer, more efficient, and ready to meet future challenges.

FAQs

How can healthcare organizations promote effective teamwork between AI specialists and healthcare professionals?

Healthcare organizations can create strong teamwork between AI specialists and healthcare professionals by involving clinicians right from the start of AI development. Early engagement allows clinicians to offer insights that shape tools to meet real-world needs. At the same time, providing hands-on training helps healthcare staff learn how to use AI tools effectively while boosting their confidence in applying the technology.

Open communication plays a key role in this process. Regular feedback sessions can help close the gap between technical and clinical perspectives, ensuring both sides are on the same page. Encouraging collaboration across disciplines and being upfront about what AI can and cannot do builds trust. This approach ensures that AI serves as a valuable partner to human expertise, rather than being seen as a replacement.

What are the essential elements of an effective AI governance framework for healthcare?

An effective approach to AI governance in healthcare hinges on a few critical components to ensure its use is safe, ethical, and efficient. Start by forming a multidisciplinary AI governance committee. This group will take charge of overseeing policies, managing risks, and ensuring continuous monitoring of AI systems. Next, establish well-defined policies and procedures that adhere to legal, ethical, and clinical requirements. Equally important is providing comprehensive training tailored to different roles, helping staff understand how to use AI responsibly and mitigate risks. Lastly, make regular audits and monitoring a priority to uphold compliance, safety, and ethical practices.

With these elements in place, healthcare organizations can confidently integrate AI into their workflows while safeguarding trust and accountability.

How can AI automation of administrative tasks make healthcare operations more efficient?

AI automation is transforming healthcare by reducing human errors, speeding up tasks like billing, claims processing, and documentation, and simplifying workflows such as appointment scheduling and eligibility checks. These improvements free up staff to focus more on patient care, boosting both efficiency and productivity.

By optimizing these processes, healthcare providers can experience quicker reimbursements, better use of resources, and smoother daily operations. The result? A better experience for both patients and healthcare professionals.
