The Global AI Risk Landscape: Regional Differences in Managing Machine Intelligence

Post Summary

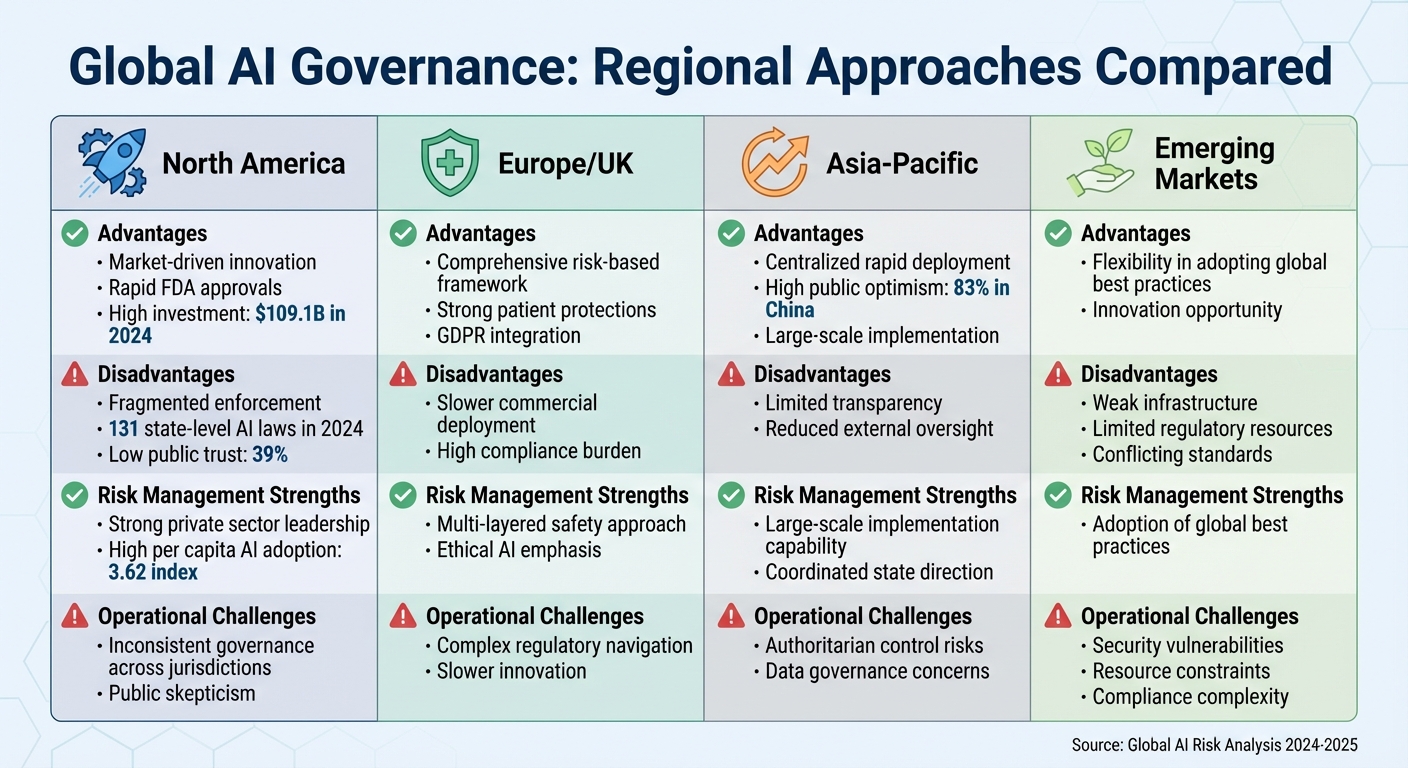

AI governance is evolving rapidly, but there's no universal approach. Different regions tackle AI risks in ways that reflect their unique priorities:

- North America: Innovation thrives, but inconsistent regulations and public skepticism pose challenges.

- Europe/UK: Strict rules like the EU AI Act prioritize safety but slow down AI adoption.

- Asia-Pacific: Rapid AI growth, especially in China, contrasts with concerns about transparency and governance.

- Emerging Markets: Limited infrastructure and resources make AI governance tough, but there's room to learn from global models.

For healthcare, navigating these varied frameworks is especially tough. Tools like Censinet RiskOps™ help centralize compliance and AI risk management, ensuring organizations meet diverse regional requirements while maintaining patient safety. With AI adoption accelerating globally, tailored strategies are key to managing risks effectively.

1. North America (United States and Canada)

Regulatory Frameworks

In North America, AI regulations are anything but uniform. As of December 2025, Canada has no federal AI statute: the Artificial Intelligence and Data Act (AIDA) died on the order paper when Parliament was prorogued in January 2025 [5][6][7]. Meanwhile, the United States operates under a mix of federal regulations, executive orders, and guidelines from agencies like the FDA. The U.S. government's AI Action Plan aims to encourage secure AI adoption by reducing regulatory hurdles while still maintaining oversight [8][9]. These differences in regulatory strategies directly influence how healthcare organizations manage risks.

AI Risk Management Practices

Healthcare organizations in North America are grappling with a range of challenges tied to compliance, liability, and intellectual property [9][10][11]. The adoption of AI in this sector has been slow [8][9], with hurdles such as model degradation, bias, and drift. Other pressing issues include data privacy and security risks, ethical concerns, limited AI literacy among staff, shadow AI deployments, model sprawl, observability gaps, and a noticeable skills gap between academic experts and industry professionals [12][13][14].
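Drift, one of the hurdles listed above, is usually caught by comparing recent model inputs or scores against a baseline window. The sketch below uses the Population Stability Index (PSI) as one possible check; it is not drawn from the cited sources. The function name `psi`, the bin count, and the 0.2 alert threshold (a commonly cited rule of thumb, not a regulatory requirement) are all assumptions chosen for illustration.

```python
import math


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of a numeric feature.

    Bins are equal-width over the baseline's range; values in `current`
    that fall outside that range are clamped into the first or last bin.
    """
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")

    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    b, c = shares(baseline), shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


# Hypothetical data: last quarter's risk scores vs. this week's scores.
baseline_scores = [i / 100 for i in range(100)]
shifted_scores = [s + 0.3 for s in baseline_scores]

ALERT_THRESHOLD = 0.2  # assumed rule of thumb; tune per model and feature
if psi(baseline_scores, shifted_scores) > ALERT_THRESHOLD:
    print("drift alert: route model for human review")
```

A check like this only flags a shift in the data; deciding whether the shift matters clinically still needs human review.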

Healthcare-Specific Implications

To navigate these challenges, healthcare organizations need to prioritize strong compliance frameworks. Tools like Censinet RiskOps™ help centralize AI policy and risk oversight, while Censinet AI supports vendor assessments, evidence validation, and risk reporting. By keeping a human in the loop, these tools assist - rather than replace - human decision-making, so critical decisions in the highly regulated healthcare sector stay with informed reviewers.

2. Europe and United Kingdom

Regulatory Frameworks

Europe has taken a pioneering step with the EU AI Act (Regulation (EU) 2024/1689), the world's first comprehensive, legally binding framework for AI[15][16][18]. This legislation adopts a risk-based model, sorting AI systems into four categories: unacceptable, high, limited, and minimal risk. Each category comes with specific obligations that organizations must follow[15][16][17][19]. While the Act creates a standardized approach across EU member states, businesses must also account for individual country regulations alongside the overarching EU requirements[2].

Meanwhile, the United Kingdom has taken a different path post-Brexit, developing its own framework. This divergence means organizations operating across both regions need to adapt their compliance strategies to meet distinct regulatory demands. As a result, businesses are pushed to develop more nuanced and flexible approaches to managing AI risks.

AI Risk Management Practices

The regulatory frameworks in Europe and the UK demand rigorous AI risk management practices. Under the EU AI Act, many AI tools used in healthcare are classified as "high-risk", which triggers a series of stringent requirements[20][21]. These include implementing robust risk management systems, maintaining comprehensive documentation, and ensuring strong data governance. High-risk AI systems are also required to meet strict standards for accuracy, resilience, and security. This includes safeguards to prevent issues like data poisoning, model corruption, and adversarial attacks[20][21].
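The four risk categories and the high-risk obligations described above map naturally onto a small lookup structure. The sketch below is illustrative only: the names `RiskTier`, `obligations_for`, and `open_items` are hypothetical, and the obligation list is simply the set named in this article, not the Act's full legal text. Assigning a tier to a real system is a legal determination that code cannot make.

```python
from enum import Enum


class RiskTier(Enum):
    """The four EU AI Act categories as listed in this article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations this article names for high-risk systems (not exhaustive).
HIGH_RISK_OBLIGATIONS = [
    "risk management system",
    "comprehensive documentation",
    "data governance",
    "accuracy, resilience, and security safeguards",
]


def obligations_for(tier):
    """Return an illustrative checklist for a tier.

    Only the high-risk list comes from the article; the other branches
    are placeholders and deliberately left minimal.
    """
    if tier is RiskTier.HIGH:
        return list(HIGH_RISK_OBLIGATIONS)
    if tier is RiskTier.UNACCEPTABLE:
        return ["do not deploy"]  # simplified placeholder
    return []  # limited/minimal tiers are not detailed in this article


def open_items(tier, completed):
    """List obligations for `tier` that are not yet in `completed`."""
    return [item for item in obligations_for(tier) if item not in completed]


# Hypothetical inventory entry: a clinical triage tool tagged by counsel.
done = {"data governance", "comprehensive documentation"}
for item in open_items(RiskTier.HIGH, done):
    print("outstanding:", item)
```

Keeping the tier as an explicit field on each inventory entry makes it easier for legal, technical, and clinical teams to work from the same checklist.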

In addition to the EU AI Act, healthcare organizations in Europe must comply with GDPR, which enforces stringent rules around patient data privacy and protection. Managing both the AI Act and GDPR requires seamless collaboration between legal, technical, and clinical teams. This alignment is essential for navigating the overlapping demands of global AI risk management. However, the complexity of these requirements often creates operational challenges.

Operational Challenges

The regulatory environment in Europe brings with it several operational hurdles. Many organizations face reduced AI investments, fragmented leadership when it comes to AI strategies, and the difficulty of aligning with overlapping international frameworks such as those from the OECD, UNESCO, and NIST[4][2]. These challenges make it harder to balance broad governance principles with the practicalities of compliance, which in turn slows the pace of AI adoption across the region.

3. Asia-Pacific (China, Japan, Singapore, Australia)

Regulatory Frameworks

The Asia-Pacific region plays a pivotal role in the global AI landscape, with more than half of the world’s AI users based here[1]. However, the regulatory approaches across countries like China, Japan, Singapore, and Australia differ significantly. China’s governance model relies on centralized directives, allowing for swift implementation but often limiting public transparency and external scrutiny. On the other hand, nations like Japan, Singapore, and Australia are crafting strategies that aim to strike a balance between encouraging innovation and maintaining effective oversight. These differences create unique challenges in managing risks across the region.

AI Risk Management Practices

Organizations in the Asia-Pacific region are making bold investments in generative AI, outpacing counterparts in North America and Europe[4]. However, their risk management capabilities often struggle to keep up with the rapid pace of AI advancements. Differences in digital readiness and governance structures across countries[1] mean businesses must adopt risk management strategies tailored to local conditions.

Healthcare-Specific Implications

The healthcare sector highlights the pressing need for responsive AI risk management in the Asia-Pacific. While AI has the potential to bring transformative benefits to healthcare in this region, it also faces hurdles like data privacy concerns, algorithmic bias, and public trust issues. The diverse regulatory landscapes across these countries mean healthcare providers must develop strategies that align with the specific requirements of each market.


4. Emerging Markets

Regulatory Frameworks

Emerging markets face distinct hurdles when it comes to shaping AI regulatory frameworks. Many of these regions operate without well-established governance systems and must navigate conflicting global standards [22][11][13]. The rapid pace of AI advancements often surpasses the development of regulations, leaving organizations to make critical decisions without clear guidance [22]. This issue is especially evident in emerging economies, where regulatory bodies may lack the resources and technical expertise that are more common in developed nations. These gaps in regulation create the need for innovative approaches to managing AI-related risks in these markets.

AI Risk Management Practices

As AI adoption grows in emerging markets, so do the associated risks, making strong governance essential [23]. Companies must find ways to align international standards with local practices, a task made even more challenging for those operating in multiple jurisdictions. Varying - and sometimes conflicting - regulatory requirements across countries add layers of complexity to compliance efforts [22][11][13].

Healthcare-Specific Implications

The healthcare sector in emerging markets faces unique challenges when integrating AI technologies. Unlike more developed regions, these markets often struggle with limited digital infrastructure, which makes implementing AI solutions even harder. Large multi-modal models are anticipated to play a significant role in areas like healthcare, scientific research, public health, and drug development [24]. However, inadequate infrastructure in many of these regions raises serious concerns about data security and patient privacy. Weak digital systems exacerbate these risks, highlighting the pressing need for effective AI governance on a global scale [23]. For healthcare providers in these markets, the challenge lies in harnessing AI's transformative potential while navigating infrastructure and resource constraints.

Pros and Cons

Global AI Governance: Regional Approaches to Managing AI Risks

AI policies vary significantly across regions, each bringing its own set of strengths and challenges. Here's a breakdown of how these differences shape regulatory and operational landscapes.

In North America, a decentralized system encourages innovation, thanks to rapid FDA approvals and significant private sector investment. For instance, AI investment reached $109.1 billion in 2024 [3]. However, this fragmented approach leads to inconsistent enforcement, with 131 state-level AI laws in 2024, and public trust in AI is low (just 39%) [3]. While the private sector drives adoption - reflected in a high per capita AI adoption index of 3.62 - governance inconsistencies and public skepticism remain key hurdles.

Europe takes a more structured route, employing a risk-based framework under the EU AI Act, while the UK pursues its own framework post-Brexit. The EU model prioritizes patient safety and ethical standards, particularly in high-risk healthcare applications. It also layers on top of existing regulations like GDPR and the Medical Device Regulation (MDR), creating robust safety layers. However, these benefits come at a cost: slower commercial deployment and a heavier compliance burden, which can hinder the pace of innovation compared to North America.

In the Asia-Pacific region, centralized directives drive rapid implementation. For example, China's state-led model enables swift deployment across healthcare systems, while countries like Singapore and Japan strike a balance between innovation and oversight. Yet, these approaches often compromise transparency and accountability, raising concerns about data governance and potential misuse. Public optimism about AI remains high in countries like China, where 83% of people express confidence in its use [3].

Emerging markets face unique challenges. Limited digital infrastructure, scarce regulatory resources, and conflicting global standards create vulnerabilities, particularly in managing sensitive patient data. While these regions have the flexibility to adopt best practices from more developed nations, resource constraints make consistent AI governance a difficult goal.

Regional Overview

| Region | Advantages | Disadvantages | Risk Management Strengths | Operational Challenges |
|---|---|---|---|---|
| North America | Market-driven innovation; rapid FDA approvals; high investment ($109.1B in 2024 [3]) | Fragmented enforcement; 131 state-level AI laws in 2024; low public trust (39%) [3] | Strong private sector leadership; high per capita AI adoption (3.62 index) | Inconsistent governance across jurisdictions; public skepticism |
| Europe/UK | Comprehensive risk-based framework; strong patient protections; GDPR integration | Slower commercial deployment; high compliance burden | Multi-layered safety approach; ethical AI emphasis | Complex regulatory navigation; slower innovation |
| Asia-Pacific | Centralized rapid deployment; high public optimism (83% in China) [3]; large-scale implementation | Limited transparency; reduced external oversight | Large-scale implementation capability; coordinated state direction | Authoritarian control risks; data governance concerns |
| Emerging Markets | Flexibility in adopting global best practices; innovation opportunity | Weak infrastructure; limited regulatory resources; conflicting standards | Adoption of global best practices | Security vulnerabilities; resource constraints; compliance complexity |

While emerging markets have the opportunity to learn from established models, their limited resources pose significant challenges, especially for healthcare providers handling sensitive data. By adopting tailored approaches, these regions could potentially bridge the gap, but the road ahead is far from straightforward.

Conclusion

The global AI risk landscape reveals a complex tapestry of approaches, with no universal solution for managing machine intelligence. North America leans on a decentralized, innovation-driven model, while Europe adopts a detailed risk-based framework. Meanwhile, the Asia-Pacific region and emerging markets bring their own distinct challenges. With 72% of businesses worldwide already incorporating AI in some capacity [25], it’s clear that a one-size-fits-all strategy simply won’t cut it.

This variation in strategies comes with tangible consequences. In 2024, 73% of organizations reported at least one AI-related security incident, with the average cost of resolving such breaches surpassing $4.5 million [26]. On the flip side, organizations with well-established AI governance frameworks saw a 23% faster time-to-market for AI projects and a 31% boost in stakeholder confidence [26]. The key difference? A focus on adaptive compliance backed by proactive regional engagement.

Healthcare organizations, in particular, face an uphill battle when operating across multiple regions. The diverse regulatory requirements demand a centralized approach to policy enforcement, ensuring that AI applications consistently meet security, access control, and compliance standards - no matter the jurisdiction.
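One way to picture centralized policy enforcement is a single registry that combines baseline controls with region-specific ones and reports gaps per jurisdiction. The sketch below is a minimal illustration under stated assumptions: every control name, the `Deployment` class, and the `missing_controls` function are hypothetical, and the regional entries only echo frameworks mentioned in this article. It does not describe how any particular product works.

```python
from dataclasses import dataclass, field

# Controls applied everywhere, mirroring the areas named in the text above.
BASELINE_CONTROLS = {"security_review", "access_control", "compliance_signoff"}

# Region-specific additions; names are illustrative placeholders only.
REGIONAL_CONTROLS = {
    "EU": {"eu_ai_act_risk_classification", "gdpr_data_protection"},
    "US": {"fda_guidance_review"},
}


@dataclass
class Deployment:
    """A hypothetical AI application and the regions it runs in."""
    name: str
    regions: list
    completed: set = field(default_factory=set)


def missing_controls(dep):
    """Map each region to the sorted list of controls still outstanding."""
    gaps = {}
    for region in dep.regions:
        required = BASELINE_CONTROLS | REGIONAL_CONTROLS.get(region, set())
        outstanding = required - dep.completed
        if outstanding:
            gaps[region] = sorted(outstanding)
    return gaps


# Example: a tool cleared on baseline controls and GDPR, nothing else yet.
tool = Deployment(
    name="radiology-assistant",
    regions=["EU", "US"],
    completed=BASELINE_CONTROLS | {"gdpr_data_protection"},
)
for region, items in missing_controls(tool).items():
    print(region, "->", ", ".join(items))
```

Regions without an entry in the registry fall back to the baseline controls, which keeps new markets from silently bypassing review.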

Censinet RiskOps™ steps in as a powerful ally for healthcare organizations navigating these complexities. Acting as a centralized hub for AI governance, the platform simplifies risk management with automated workflows, role-based access controls, and real-time dashboards that highlight critical findings for stakeholders. With Censinet AI™, risk assessments are faster and more efficient while retaining essential human oversight. This allows healthcare providers to scale their cyber risk management efforts without compromising the safety and precision required in patient care. By integrating diverse regulatory requirements into a unified system, this platform showcases the kind of adaptability needed for effective global AI risk management.

These challenges highlight the growing demand for flexible governance solutions. Alarmingly, 62% of AI experts believe that organizations are not scaling their risk management capabilities quickly enough to address AI-related threats [27]. As regulations evolve and AI adoption accelerates, healthcare organizations must rely on platforms that can keep pace with both advancing technologies and shifting compliance demands.

FAQs

How do approaches to AI risk management vary around the world?

Different parts of the world tackle AI risks in ways that reflect their unique priorities, governance styles, and societal values.

The European Union (EU) takes a comprehensive, risk-based approach, prioritizing transparency and enforcing strict conformity assessments. This framework aims to ensure that AI systems operate safely and ethically within its member states.

In contrast, the United States (US) opts for a more decentralized, sector-specific strategy. This approach encourages innovation but can result in a patchwork of regulations that vary across industries and regions.

The United Kingdom (UK) leans on a flexible, sector-focused model. While this strategy allows for adaptability, it can create challenges when trying to maintain consistency across different industries.

China, on the other hand, relies on a centralized model. This enables swift implementation of AI regulations but often comes at the expense of transparency and external oversight.

These diverse approaches underline the need to adapt AI risk management strategies to fit local policies and societal expectations, particularly in critical fields like healthcare and technology, where accuracy and regulatory compliance are non-negotiable.

What challenges do healthcare organizations face in managing AI risks?

Healthcare organizations are grappling with numerous challenges when it comes to managing AI risks. One of the biggest hurdles is dealing with ever-changing regulations, especially those focused on data privacy and AI accountability. On top of that, they need to ensure compliance across different regions, which can be a daunting task.

Operational risks add another layer of complexity. These include issues like biases in algorithms, low-quality data, and unexpected outcomes - all of which can undermine trust and effectiveness.

Equally important is the need to uphold transparency and accountability in AI systems. This is especially crucial in healthcare, where decisions can directly impact lives. To tackle these challenges, organizations must establish strong governance frameworks that align with both local and international standards. This helps ensure AI tools are ethical, reliable, and capable of delivering better patient care.

Why is Censinet RiskOps™ essential for ensuring AI compliance across regions?

A tool like Censinet RiskOps™ plays a crucial role in navigating AI compliance by making the complicated task of handling regulatory requirements across various regions much more manageable. By bringing risk assessments into one centralized system, it allows organizations to align with diverse laws and standards while ensuring a consistent approach to compliance.

With capabilities such as continuous monitoring and audit-ready documentation, Censinet RiskOps™ enables businesses to tackle risks proactively and improve operational efficiency. This is particularly important for sectors like healthcare and technology, where responsible management of machine intelligence is key to maintaining trust and adhering to legal requirements.
