Healthcare AI Ethics and Vendor Selection: Fairness, Transparency, and Patient Rights

Post Summary

Artificial Intelligence (AI) is transforming healthcare by improving diagnostics, streamlining operations, and enabling real-time patient care. But with these advancements come serious ethical concerns. Bias in algorithms, lack of transparency, and risks to patient rights can harm trust and safety.

Key points:

- Ethical challenges: AI risks include biased decisions, privacy violations, and misuse of patient data.

- Vendor selection matters: Choosing AI vendors who prioritize ethics, transparency, and compliance is crucial for safe and fair healthcare.

- Safeguards: Organizations must assess vendors for bias mitigation, explainable AI, and data privacy protections to meet ethical and regulatory standards.

The article provides a framework for evaluating AI vendors, focusing on reducing risks, ensuring compliance, and protecting patient rights. Ethical decision-making in AI adoption is essential to building trust and delivering equitable care.

Ethical Principles for Healthcare AI

For healthcare AI to truly serve patients, it must rest on a strong ethical foundation. The American Medical Association (AMA) stresses the importance of ethics, evidence, and equity to establish trust in augmented intelligence within healthcare. Similarly, the World Health Organization (WHO) insists that ethics and human rights must guide the design, deployment, and use of AI systems in healthcare settings [5][7]. These aren't just lofty ideals - they are practical safeguards that shape how AI integrates into clinical environments, forming the backbone of a robust bioethical framework.

The traditional bioethical framework identifies four core principles: justice, non-maleficence, autonomy, and beneficence [6]. Justice ensures fair access to care and equitable outcomes for all patients. Non-maleficence requires AI systems to minimize harm and avoid biased results. Autonomy respects patients' rights to control their health data and decisions, while beneficence focuses on improving patient care and outcomes. When these principles conflict, justice and non-maleficence take precedence over beneficence and autonomy [6].

Regulatory Oversight and Risks

Regulatory frameworks reinforce the need for these ethical principles. For example, the EU AI Act, which took effect in August 2024, classifies medical AI as "high-risk", requiring strict compliance from developers and users [4][6]. Yet, despite such measures, a surprising number of healthcare providers rely on general-purpose large language models, like ChatGPT, without fully understanding the risks. A survey in Dutch hospitals revealed that while 57% of facilities used generative AI, only 29% retested, trained, or calibrated their AI models for errors. Alarmingly, 52% of respondents did not know whether such practices were even in place [6].

Addressing Algorithmic Bias

One of the most pressing ethical challenges in healthcare AI is tackling algorithmic bias. Bias occurs when AI systems amplify existing disparities in healthcare, often stemming from datasets that fail to represent diverse populations. This can happen during data collection, algorithm design, deployment, or even due to a lack of diversity in development teams. The consequences? Misdiagnoses, unequal treatment recommendations, and unfair resource allocation.

To promote fairness, healthcare AI must address both distributive justice (ensuring equitable distribution of resources) and procedural justice (ensuring unbiased decision-making). Organizations can reduce bias by using diverse, representative datasets and continuously monitoring their AI systems for emerging disparities [8].
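As a rough illustration of what checking for a "representative dataset" can look like in practice, the sketch below compares each group's share of a dataset with a reference population share. The function name, the field `age_band`, the sample records, the reference shares, and the 10-percentage-point threshold are all hypothetical and chosen only for the example; a real review would use the vendor's data dictionary and population figures relevant to your patients.

```python
from collections import Counter

def representation_gaps(records, group_key, reference_shares):
    """Compare each group's share of the dataset with a reference share.

    Returns {group: dataset_share - reference_share}. Negative values mean
    the group is underrepresented relative to the reference population.
    """
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no records to analyze")
    return {
        group: counts.get(group, 0) / total - ref_share
        for group, ref_share in reference_shares.items()
    }

# Hypothetical training records and hypothetical reference shares.
records = (
    [{"age_band": "18-39"}] * 50
    + [{"age_band": "40-64"}] * 40
    + [{"age_band": "65+"}] * 10
)
reference = {"18-39": 0.35, "40-64": 0.35, "65+": 0.30}

gaps = representation_gaps(records, "age_band", reference)

# Flag groups whose share falls more than 10 percentage points short
# (an arbitrary threshold for this example).
underrepresented = [g for g, gap in gaps.items() if gap < -0.10]
print("Underrepresented groups:", underrepresented)
```

In this toy data, patients aged 65+ make up 10% of the records against a 30% reference share, so they are the only group flagged. Rerunning the same comparison on each new data refresh is one simple form of the continuous monitoring mentioned above.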

Transparency and Explainability in AI

Transparency is another critical aspect of ethical AI in healthcare. Without clear insights into how AI systems make decisions, trust and patient safety are at risk. As Charles E. Binkley and his team point out:

"If a solution lacks transparency, clinicians may struggle to trust or appropriately act on its recommendations, undermining effective clinical decision-making and potentially endangering patient safety." [3]

To address this, interpretable AI tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) can help clinicians understand how specific data points influence AI predictions. This clarity allows healthcare professionals to validate AI outputs and use them with confidence. However, human oversight remains indispensable - clinicians must always review AI-generated recommendations before applying them in patient care [3].
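To make the idea of "how specific data points influence AI predictions" concrete, here is a minimal sketch of the quantity that SHAP is built on: the Shapley value of each feature for a single prediction. This is not the SHAP library's API. It is an exact, brute-force calculation that only works for a handful of features, and the toy `risk_score` model, the feature names, and the baseline patient are assumptions made up for illustration.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, instance, baseline):
    """Exact Shapley attributions for one prediction.

    Features outside a coalition are set to their baseline value. The cost
    grows exponentially with the number of features, so this only suits
    tiny examples.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Prediction when only the coalition's features take the patient's values.
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return predict(x)

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi

# Hypothetical toy risk score, not a clinical tool.
def risk_score(x):
    return 0.02 * x["age"] + 0.5 * x["prior_admissions"] + 0.1 * x["hba1c"]

patient = {"age": 70, "prior_admissions": 2, "hba1c": 9.0}
baseline = {"age": 50, "prior_admissions": 0, "hba1c": 6.0}

attributions = shapley_values(risk_score, patient, baseline)
for feature, contribution in attributions.items():
    print(f"{feature}: {contribution:+.2f}")
```

The attributions add up to the difference between this patient's score and the baseline score, which is the property that lets a clinician read them as "how much each input moved the prediction". Production tools approximate this calculation for models with many features.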

Safeguarding Patient Rights and Data Privacy

Protecting patient autonomy and data privacy is non-negotiable in healthcare AI. Organizations must ensure AI vendors comply with HIPAA regulations and implement strong security protocols for data collection, transmission, and storage [1]. Patients also have the right to know how their data is being used and where it’s stored [1].

As healthcare becomes increasingly digital, traditional patient rights are evolving into what some are calling "digital patient rights" [6]. These rights include the ability to question AI recommendations, access auditable logic, and ensure fairness in AI-driven decisions. Medical associations, patient organizations, and other stakeholders must actively participate in shaping these regulations to protect patients and maintain the trust that underpins the patient-provider relationship [4][1]. Without these safeguards, AI risks undermining the very trust it aims to enhance.

How to Evaluate AI Vendors for Ethical Practices

Choosing an AI vendor in healthcare requires more than just assessing technical capabilities. It calls for a comprehensive approach that evaluates their ethical standards, adherence to regulations, and commitment to safeguarding patient data throughout the AI lifecycle [3][9]. This process not only minimizes hidden risks but also ensures that the AI systems align with critical ethical principles. A key area to focus on is how vendors address and mitigate bias.

Reviewing Vendor Bias Mitigation Efforts

Begin by asking vendors for detailed documentation on fairness metrics. Specifically, inquire about how they evaluate demographic parity, equalized odds, and disparate impact across diverse patient groups [10]. Avoid vague reassurances - insist on concrete evidence, such as bias audit reports that analyze false positive and false negative rates for different demographics [10].
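When a vendor hands over a bias audit, it helps to know how the headline numbers are derived. The sketch below computes per-group selection, false positive, and false negative rates, then summarizes them as a demographic parity difference, a disparate impact ratio, and an equalized odds difference. The function names and the tiny labeled sample are hypothetical, and vendors may define these metrics slightly differently, so treat this as one common formulation, not a standard.

```python
def rate(numerator, denominator):
    # Groups with an empty denominator yield NaN rather than an error.
    return numerator / denominator if denominator else float("nan")

def group_metrics(y_true, y_pred, groups):
    """Per-group selection rate, false positive rate, and false negative rate."""
    stats = {}
    for yt, yp, g in zip(y_true, y_pred, groups):
        s = stats.setdefault(g, {"n": 0, "pred_pos": 0, "pos": 0, "neg": 0, "fp": 0, "fn": 0})
        s["n"] += 1
        s["pred_pos"] += int(yp == 1)
        if yt == 1:
            s["pos"] += 1
            s["fn"] += int(yp == 0)
        else:
            s["neg"] += 1
            s["fp"] += int(yp == 1)
    return {
        g: {
            "selection_rate": rate(s["pred_pos"], s["n"]),
            "fpr": rate(s["fp"], s["neg"]),
            "fnr": rate(s["fn"], s["pos"]),
        }
        for g, s in stats.items()
    }

def fairness_summary(metrics):
    sel = [m["selection_rate"] for m in metrics.values()]
    fpr = [m["fpr"] for m in metrics.values()]
    fnr = [m["fnr"] for m in metrics.values()]
    return {
        # Largest gap in the share of patients flagged positive.
        "demographic_parity_difference": max(sel) - min(sel),
        # Lowest selection rate divided by the highest.
        "disparate_impact_ratio": rate(min(sel), max(sel)),
        # Largest gap in error rates (an FNR gap equals a TPR gap).
        "equalized_odds_difference": max(max(fpr) - min(fpr), max(fnr) - min(fnr)),
    }

# Hypothetical audit sample: true outcomes, model predictions, group labels.
y_true = [1, 1, 1, 1, 0, 0, 0, 0] * 2
y_pred = [1, 1, 1, 0, 1, 0, 0, 0] + [1, 1, 0, 0, 0, 0, 0, 0]
groups = ["A"] * 8 + ["B"] * 8

metrics = group_metrics(y_true, y_pred, groups)
summary = fairness_summary(metrics)
print(metrics)
print(summary)
```

In this made-up sample, group B is flagged half as often as group A and has twice the false negative rate, which is exactly the kind of gap a concrete audit report should surface and a vague reassurance would hide.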

Take a close look at their training datasets and data governance practices. Request detailed statistics, data dictionaries, information on storage locations, access controls, and reuse policies to verify that the datasets are diverse and representative [3][1]. Confirm that the vendor employs privacy-preserving methods like differential privacy and federated learning, and that human auditors regularly review the AI models for potential biases [10]. Additionally, ensure the vendor complies with HIPAA regulations, particularly in how they handle Protected Health Information (PHI) during model training [1][2]. Once bias mitigation strategies are reviewed, shift your attention to how transparently vendors explain their model’s decision-making processes.

Checking Transparency and Explainability Features

Transparency builds clinical trust and ensures patient safety. Request thorough documentation of the AI’s decision-making processes, along with the mechanisms in place to audit and challenge those decisions [1]. Ask for demonstrations of interpretability tools like SHAP and LIME, as well as feature importance analyses that clearly show which inputs influence the AI’s recommendations [10][3].

Ensure the vendor provides performance reports and validation studies conducted on independent, representative datasets [3]. The AI system should adhere to fairness and non-discrimination principles while following established guidelines such as TRIPOD+AI for reporting prediction models and PROBAST for assessing risk of bias [3][11].

Verifying Patient Rights and Data Privacy Protections

After reviewing bias and transparency, dive into the vendor’s protocols for protecting patient rights and privacy. Confirm their use of strong, HIPAA-compliant security measures for data collection, transmission, and storage [1]. Scrutinize their data governance practices to understand where patient data is stored, who has access to it, and under what conditions [1]. It’s crucial to prioritize patient autonomy - patients should be fully informed about how their data will be used and retain control over their health information [1].

Using Censinet RiskOps for AI Vendor Risk Management

Once healthcare organizations evaluate vendors on factors like bias mitigation, transparency, and patient privacy, the next step is finding a platform that simplifies these assessments. That’s where Censinet RiskOps comes in, offering a centralized solution to streamline vendor risk management.

Censinet RiskOps™ for Vendor Risk Assessment

Censinet RiskOps™ serves as a one-stop platform for managing vendor risks. It brings all assessment data together in one place, making it easier to systematically review how vendors align with ethical and regulatory standards. Instead of juggling fragmented processes, organizations can conduct coordinated reviews of vendor performance. This unified method also lays the groundwork for incorporating advanced tools that combine the power of technology with the insight of human oversight.

Balancing Automation and Human Oversight with Censinet AI

In addition to centralizing assessments, Censinet AI ensures that automation doesn’t replace critical human judgment. By keeping experts involved in the review process, the platform allows risk teams to scale their evaluations without sacrificing thoroughness. This "human-in-the-loop" approach helps healthcare leaders maintain ethical standards while managing vendor assessments efficiently.

Improving GRC Team Collaboration with Censinet

Vendor risk management isn’t a solo effort - it requires strong collaboration across Governance, Risk, and Compliance (GRC) teams. Censinet boosts teamwork by streamlining review processes, making it easier for clinical leaders, compliance officers, and IT security teams to work together. With real-time follow-ups on identified risks, this coordinated effort ensures ongoing oversight and accountability throughout the vendor management process.


Checklist for Assessing AI Vendors

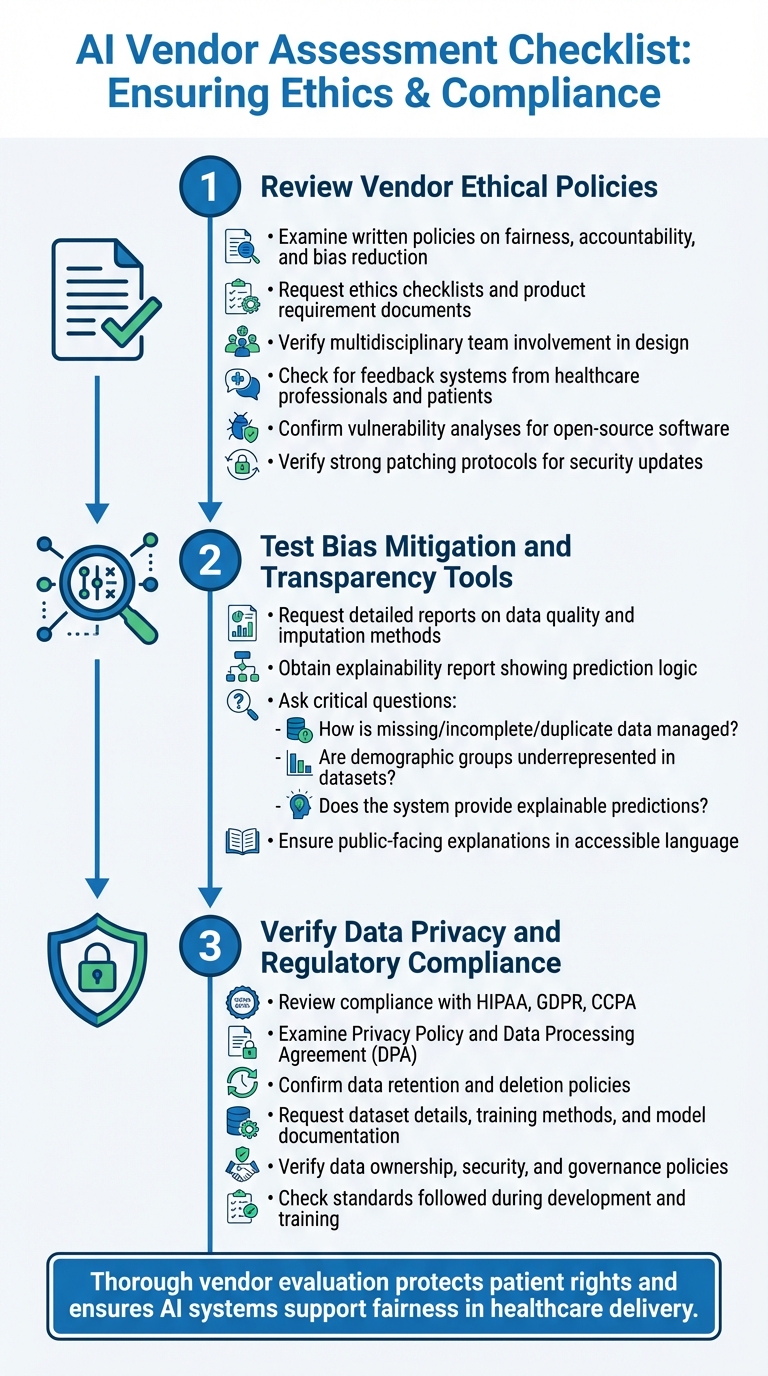

3-Step Checklist for Evaluating Healthcare AI Vendors on Ethics and Compliance

When evaluating AI vendors, it's essential to take a structured approach. Focus on their commitment to ethical practices, transparency, and safeguarding patient rights. Use this checklist to guide your assessment before making a decision.

Step 1: Review Vendor Ethical Policies

Start by examining the vendor's ethical commitments. Look for written policies or frameworks that address fairness, accountability, and strategies for reducing bias. Request documentation such as ethics checklists or product requirement documents created during the development process. Ensure that multidisciplinary teams were involved in designing the solution.

Check if the vendor has systems in place to gather feedback from healthcare professionals and patients. Additionally, confirm they perform vulnerability analyses for any open-source software they use and have a strong patching protocol to handle security updates.

Step 2: Test Bias Mitigation and Transparency Tools

Ask the vendor for a detailed report that outlines data quality, imputation methods, and the key assumptions made during the model's design. Request an explainability report to understand the logic behind the AI's predictions and the variables it relies on.

Key questions to ask include:

- How are issues like missing, incomplete, or duplicate data managed?

- Are certain demographic groups underrepresented or missing in the datasets?

- Does the system provide explainable predictions?
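The first two questions above can be partly answered with a quick profile of any sample data the vendor shares. The sketch below counts missing values, duplicate patient identifiers, and records per demographic group. The function name, the field names (`patient_id`, `sex`, `creatinine`), and the sample rows are hypothetical and stand in for whatever the vendor's data dictionary actually defines.

```python
from collections import Counter

def data_quality_report(rows, required_fields, id_field, group_field):
    """Count missing values, duplicate IDs, and group sizes in a list of dict rows."""
    missing = {
        field: sum(1 for r in rows if r.get(field) in (None, ""))
        for field in required_fields
    }
    id_counts = Counter(r[id_field] for r in rows)
    duplicate_ids = sorted(i for i, c in id_counts.items() if c > 1)
    # Rows with no recorded group are counted under "unknown".
    group_counts = Counter(r.get(group_field) or "unknown" for r in rows)
    return {
        "missing": missing,
        "duplicate_ids": duplicate_ids,
        "group_counts": dict(group_counts),
    }

# Hypothetical sample rows.
rows = [
    {"patient_id": "p1", "sex": "F", "creatinine": 0.9},
    {"patient_id": "p2", "sex": "M", "creatinine": None},
    {"patient_id": "p2", "sex": "M", "creatinine": None},
    {"patient_id": "p3", "sex": "", "creatinine": 1.4},
    {"patient_id": "p4", "sex": "F", "creatinine": 1.1},
]

report = data_quality_report(rows, ["sex", "creatinine"], "patient_id", "sex")
print(report)
```

A report like this does not settle whether the vendor's imputation choices are sound, but it gives you specific counts to ask about instead of a general question.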

Ensure the vendor offers clear, public-facing explanations of the AI's purpose and functionality in simple, accessible language.

Michael Bennett, a researcher at Northeastern University, highlights the evolving regulatory landscape:

"You'll almost certainly see more AI regulation, whether it's city ordinance, state law or new federal legislation. The US is not going to be like the EU; there's probably not going to be one overarching framework in a near-term timeframe. Instead, we can anticipate a growing regulatory thicket that will be very complex for folks to navigate." [12]

Step 3: Verify Data Privacy and Regulatory Compliance

Review the vendor's compliance with regulations like HIPAA, GDPR, or CCPA. Carefully examine their Privacy Policy and Data Processing Agreement (DPA). Confirm they have clear data retention and deletion policies to uphold patient rights.

Ask for detailed information about their datasets, training methods, and model documentation. This helps ensure their solution is built on high-quality, reliable data. Also, verify their policies on data ownership, security, and governance. Request documentation on the standards followed during development and training to reduce potential legal and operational risks.

Conclusion

Choosing AI vendors with a strong ethical foundation goes beyond simply meeting regulatory requirements - it’s about creating a healthcare system that upholds fairness and safeguards patients' rights. Skipping thorough evaluations can lead to systems that reinforce existing inequalities and jeopardize patient safety. This underscores the importance of effective risk management tools like Censinet RiskOps™.

Censinet RiskOps™ offers healthcare organizations a streamlined platform to thoroughly assess AI vendors and handle the risks these technologies bring. It simplifies evaluations while maintaining critical human oversight. Acting as a central hub for AI governance, the platform directs key findings and risk insights to stakeholders, such as members of AI governance committees. With real-time data presented in an easy-to-navigate dashboard, Censinet RiskOps™ consolidates all AI-related policies, risks, and tasks, ensuring a cohesive approach across the organization.

Focusing on ethical practices and solid risk management when selecting AI vendors delivers more than regulatory compliance. It ensures AI systems are responsibly designed and deployed, paving the way for a safer, more equitable healthcare landscape. By tackling algorithmic bias through diverse data collection and rigorous testing, healthcare organizations can avoid reinforcing disparities and work toward genuine fairness in care delivery.

As regulations grow increasingly complex at federal, state, and local levels, robust vendor evaluations today can help organizations stay ahead while maintaining patient trust. Ethical vendor selection isn’t just a legal requirement - it’s a fundamental step toward building a healthcare system that respects and protects patient rights.

FAQs

How can healthcare organizations address and prevent bias in AI systems?

To tackle and prevent bias in AI systems, healthcare organizations need to take a proactive approach, starting with a close look at their AI vendors. It's important to assess how committed these vendors are to ethical practices. This means checking how transparent their decision-making processes are, ensuring they have solid data governance in place, and verifying that they comply with standards like HIPAA.

Conducting regular audits and risk assessments can also play a big role. Frameworks such as the NIST AI Risk Management Framework offer valuable guidance for identifying and addressing potential biases. Additionally, involving a diverse group of stakeholders throughout the development and implementation phases ensures a variety of perspectives are considered, which can help minimize the risk of unintentional bias.

Another key step is to work with vendors who hold certifications like HITRUST or ISO 27001. These certifications attest to audited information security controls. They support data protection but do not by themselves measure algorithmic fairness, so treat them as a complement to bias audits, not a substitute. By following these practices, healthcare organizations can build more trust in AI technologies while safeguarding patient rights.

What should I consider when choosing an ethical AI vendor for healthcare?

When choosing an AI vendor for healthcare, it's crucial to examine their expertise in healthcare-specific applications and their dedication to transparency in how AI decisions are explained and implemented. Make sure the vendor complies with data privacy laws like HIPAA and has solid data governance practices in place to protect sensitive patient information.

You’ll also want to focus on vendors that meet all necessary regulatory requirements and perform detailed risk assessments to identify and address potential effects on patient care and outcomes. Equally important is selecting vendors who implement clear accountability frameworks and offer ongoing AI lifecycle management to uphold ethical practices consistently over time.

Why is transparency in AI systems important for patient trust and safety?

Transparency in AI systems plays a crucial role in earning patient trust and maintaining safety in healthcare. When both patients and providers have a clear understanding of how AI systems make decisions, it builds confidence, minimizes uncertainty, and encourages accountability. Open communication about how AI operates also makes it easier to spot and correct any errors or biases, ultimately leading to safer and more dependable care.

By enabling audits and allowing challenges to AI-generated outcomes, transparency ensures these systems function in a fair and ethical manner. This approach not only safeguards patient rights but also reinforces trust in AI-powered healthcare, positioning these tools as key contributors to better care and improved results.