Why Healthcare Boards Should Care About GRC AI: ESG, Patient Safety, and Enterprise Risk

Post Summary

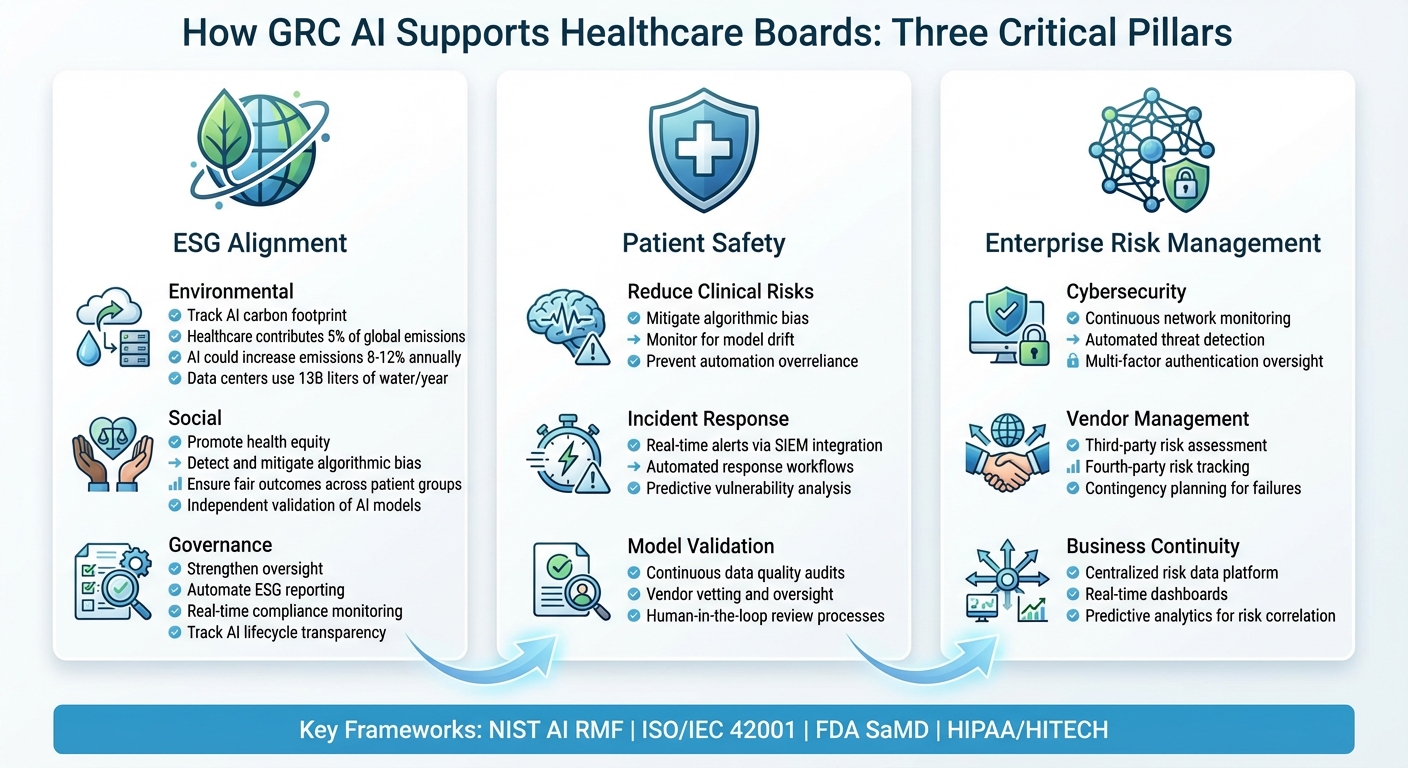

Healthcare boards face growing scrutiny to ensure AI technologies are safe, ethical, and compliant. Governance, Risk, and Compliance (GRC) AI helps organizations manage risks tied to AI adoption while aligning with regulatory demands, ethical standards, and business goals. Here's why it matters:

- Patient Safety: GRC AI mitigates risks like algorithmic bias, model drift, and overreliance on automation, ensuring AI systems improve care without introducing harm.

- Enterprise Risk Management: It consolidates risk data, automates cybersecurity monitoring, and strengthens vendor oversight to protect against threats like ransomware and system failures.

- ESG Alignment: GRC AI supports sustainability efforts by tracking AI's carbon footprint, promoting health equity, and ensuring fair outcomes for all patient groups.

Healthcare boards play a key role in overseeing AI systems, from ensuring compliance with frameworks like NIST AI RMF and FDA regulations to establishing cross-functional governance committees. Tools like Censinet RiskOps™ streamline risk assessments, automate compliance, and provide real-time insights, enabling boards to manage AI risks effectively while prioritizing patient safety and accountability.

Three Pillars of GRC AI in Healthcare: ESG, Patient Safety, and Enterprise Risk Management

How GRC AI Supports ESG Goals in Healthcare

As healthcare organizations adopt artificial intelligence, boards face a dual challenge: ensuring rigorous oversight while aligning these technologies with broader Environmental, Social, and Governance (ESG) objectives. GRC AI (Governance, Risk, and Compliance AI) goes beyond compliance, offering a structured way to integrate sustainability, equity, and accountability into healthcare's rapidly evolving AI strategies. With AI's role in healthcare expanding, the need to incorporate ESG principles has never been more pressing. GRC AI provides the tools to measure, monitor, and improve ESG performance, addressing challenges across environmental, social, and governance dimensions in one cohesive framework.

Environmental: Reducing AI's Carbon Footprint

AI's environmental impact in healthcare is hard to ignore. The healthcare sector contributes nearly 5% of global carbon emissions[5], and AI's increasing computational demands could push those emissions higher - by 8%–12% annually - if data centers continue to rely on fossil fuels[5]. For example, even a lightweight AI tool can have a notable environmental cost. Data centers, essential for AI operations, consume nearly 13 billion liters of water annually, with over 62% lost to evaporation. Additionally, the demand for critical minerals like lithium and cobalt for AI hardware is expected to surge by 300% by 2030[5].

GRC AI tools allow healthcare organizations to track and improve their environmental metrics. By adopting frameworks such as Sustainably Advancing Health AI (SAHAI)[4], boards can incorporate sustainability factors - like greenhouse gas emissions and water usage - into their AI deployment decisions.
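As a rough illustration of how one such metric might be tracked, the sketch below estimates the emissions of an AI workload from its energy use, a data-center overhead multiplier (PUE), and the carbon intensity of the local grid. The function name and every number are hypothetical placeholders, not figures from SAHAI or any cited source.

```python
def estimate_emissions_kg(it_energy_kwh: float, pue: float,
                          grid_kg_co2e_per_kwh: float) -> float:
    """Estimate kg CO2e as IT energy x facility overhead (PUE) x grid carbon intensity."""
    if it_energy_kwh < 0 or grid_kg_co2e_per_kwh < 0 or pue < 1.0:
        raise ValueError("energy and intensity must be >= 0 and PUE must be >= 1.0")
    return it_energy_kwh * pue * grid_kg_co2e_per_kwh


# Hypothetical monthly figures for a single AI workload (assumed values).
monthly_kg = estimate_emissions_kg(it_energy_kwh=1000.0, pue=1.5,
                                   grid_kg_co2e_per_kwh=0.4)
print(f"Estimated emissions: {monthly_kg:.1f} kg CO2e")
```

Reporting the same calculation per workload and per month gives a board a consistent baseline for comparing deployment options, provided the inputs come from measured energy data rather than estimates like these.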

Dr. Chethan Sarabu, Director of Clinical Innovation at Cornell Tech’s Health Tech Hub, emphasizes, "We're really in the early days of AI being implemented in health care... and what happens in the next three years or so, that's going to get baked into the system. And so if we make energy conscious decisions right now, we'll have a more efficient system."[4]

Healthcare organizations can use GRC AI to prioritize renewable energy for data centers, design energy-efficient algorithms and hardware, and implement smarter data archiving systems to reduce storage waste[4][5]. For instance, telecardiology services have already demonstrated significant reductions in carbon emissions[5]. By leveraging GRC AI, boards can track these efforts in real time, ensuring that AI adoption supports environmental goals without compromising operational efficiency.

Social: Promoting Health Equity and Fair Outcomes

Beyond environmental concerns, GRC AI plays a critical role in ensuring fair clinical outcomes and addressing bias. AI systems trained on incomplete or skewed data can unintentionally worsen healthcare disparities, leading to inaccurate diagnoses or inappropriate treatments for underrepresented patient groups[1][2]. Without proper governance, predictive models might even prioritize healthcare access unfairly[2].

GRC AI frameworks help set standards for data quality, completeness, and fairness during both training and deployment[2]. These systems enable continuous monitoring to detect and mitigate biases and protect against data-related issues like poisoning. For added assurance, healthcare organizations should independently validate vendor AI models to ensure they perform equitably across their specific patient populations[2].
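To make independent validation concrete, here is a minimal sketch of a subgroup check: it computes sensitivity (the share of true cases the model catches) for each patient group and flags groups that trail the best-performing one. The record layout, group labels, and the 10-point gap threshold are assumptions chosen for illustration; a real validation would use the organization's own metrics and thresholds.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, y_true, y_pred) with binary 0/1 labels."""
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    # Only groups with at least one positive case have a defined sensitivity.
    return {g: true_pos[g] / positives[g] for g in positives}


def flag_gaps(per_group, max_gap=0.10):
    """Return groups whose sensitivity trails the best group by more than max_gap."""
    if not per_group:
        return []
    best = max(per_group.values())
    return sorted(g for g, s in per_group.items() if best - s > max_gap)


# Hypothetical validation set: group A has 4 of 4 cases caught, group B only 2 of 4.
records = (
    [("A", 1, 1)] * 4
    + [("B", 1, 1)] * 2 + [("B", 1, 0)] * 2
)
per_group = sensitivity_by_group(records)
print(per_group)
print("Groups needing review:", flag_gaps(per_group))
```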

Cross-disciplinary AI governance committees - bringing together data scientists, clinicians, compliance officers, and ethics experts - play a crucial oversight role throughout the AI lifecycle. These teams conduct audits and use red-teaming strategies to test AI systems under challenging scenarios.

Andreea Bodnari and John Travis of ALIGNMT AI Inc. note, "By establishing clear fairness standards, transparency, and accountability measures, governance reassures end users, clinicians, and regulators that AI innovations are safe, equitable, and aligned with the overarching mission of improving healthcare outcomes."[2]

This approach not only builds trust within communities but also ensures that AI systems are designed to serve all patients fairly and effectively.

Governance: Strengthening AI Oversight and Transparency

Effective governance is the backbone of ESG integration, ensuring AI systems remain transparent and accountable. Traditional oversight methods often struggle to keep up with the speed and complexity of modern AI technologies. GRC AI addresses this gap by automating ESG reporting, allowing boards to monitor AI performance, compliance, and ethical considerations in real time[2].

With robust documentation requirements, GRC AI ensures transparency throughout the AI lifecycle. This includes tracking data origins, feature selection processes, validation methods, and bias mitigation strategies[2]. Additionally, GRC AI platforms streamline compliance workflows, conduct ongoing regulatory checks, and use predictive analytics to identify potential risks. These capabilities help boards demonstrate accountability to regulators, investors, and patients, while staying agile in the face of evolving technologies and regulations.

Improving Patient Safety with GRC AI

Patient safety is a top priority in healthcare, and while AI offers considerable potential, it also brings risks that require thoughtful management. GRC AI tools provide a structured approach to keep clinical AI systems in check, ensuring they improve patient outcomes rather than jeopardize them. Without effective oversight, AI systems can deviate from their intended performance or introduce harmful biases. Technology failures can reach the bedside quickly: a 2020 ransomware attack, for instance, delayed critical patient care, underscoring the importance of governance. Below, we’ll explore strategies to mitigate risks, respond swiftly to incidents, and validate AI models to protect patients.

Reducing Clinical AI Risks

Clinical AI systems face three main challenges: algorithmic bias, model drift, and overreliance on automation. Bias arises when AI is trained on incomplete data, leading to less accurate results for underrepresented groups. Model drift occurs as AI performance declines over time due to changes in clinical practices or patient demographics. Overreliance happens when clinicians overly trust AI recommendations without applying their judgment, especially in critical situations.

To address these challenges, healthcare organizations should establish formal AI governance committees. These groups - comprising clinicians, compliance officers, IT, and cybersecurity experts - evaluate new AI applications through detailed risk assessments. They examine issues like data privacy, algorithmic bias, cybersecurity vulnerabilities, and potential patient harm before deployment[7].

Continuous monitoring is equally important. Regular audits can identify bias, data integrity issues, and compliance gaps[6][7]. Maintaining clean and representative datasets, coupled with ongoing data audits, helps ensure AI systems remain reliable and safe over time[7].
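One way a monitoring job might detect drift in patient demographics is the Population Stability Index (PSI), which compares how a feature is distributed at validation time against how it is distributed today. The sketch below is an assumption about how such a check could look, not a description of any particular GRC platform; the bin edge, sample values, and the 0.25 alert level (a commonly cited rule of thumb) are all illustrative.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples, binned by sorted edges."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges at or below the value.
            counts[sum(v >= e for e in edges)] += 1
        # Floor each share at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical patient ages at validation time vs. in current use.
baseline_ages = [30, 40, 50, 60]
current_ages = [50, 60, 70, 80]
score = psi(baseline_ages, current_ages, edges=[45])
print(f"PSI = {score:.2f}",
      "-> investigate drift" if score > 0.25 else "-> stable")
```

A check like this only signals that the input population has shifted; whether model accuracy has actually suffered still needs to be confirmed against labeled outcomes.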

Jeffrey B. Miller, Esq., Director-in-Charge at Granite GRC Consulting, highlights: "The healthcare organizations that avoid the big headlines aren't lucky – they're intentional. They've made AI governance part of their everyday risk and compliance program."[8]

AI-Driven Incident Detection and Response

When AI systems malfunction or make flawed recommendations, acting quickly is essential. GRC AI platforms can integrate with Security Information and Event Management (SIEM) systems to provide real-time alerts and automate incident response workflows, minimizing delays in detection and action[9]. These systems monitor networks and devices, flagging any deviations from normal patterns[9].

Machine learning tools within these platforms analyze historical data to predict vulnerabilities, allowing organizations to prioritize and address risks before they escalate[9]. They also aggregate data from incident logs, IT systems, and clinical workflows to uncover hidden patterns[9]. Effective incident response plans should clearly define the types of incidents - like data breaches or system outages - and outline steps for containment, investigation, and escalation. Regular simulations and tabletop exercises ensure these plans are ready to be deployed when needed[9].

Validating AI Models for Patient Safety

Thorough clinical validation is crucial to distinguish safe AI systems from those that could pose risks. GRC AI platforms support this by maintaining high data quality and integrity throughout the AI lifecycle. Continuous audits check for accuracy, completeness, and bias in the systems[7].

Healthcare organizations should also vet AI vendors carefully, reviewing their data practices, storage policies, and model training methods. This ensures that the vendors meet security, compliance, and ethical standards[7][8]. For high-risk decisions, human oversight remains vital. Incorporating manual review processes - such as accuracy testing and escalation procedures - ensures that AI supports, rather than replaces, clinical judgment[7].

The World Health Organization has recently cautioned against generative AI risks, emphasizing the need for strict oversight to prevent errors, patient harm, and a loss of public trust[10].

Clivetty Martinez, PhD, Director of Compliance and Privacy Services at Granite GRC Consulting, underscores: "Risk and compliance leaders can't be brought in after the fact. An AI risk assessment isn't a rubber stamp – it's a structured process that ensures decisions about AI are aligned with ethical, legal, and operational standards from day one."[8]

To stay ahead, organizations should perform risk assessments regularly - ideally quarterly or whenever significant changes occur. Tools like vulnerability scanners and penetration tests can help identify potential issues[8][9]. This ongoing validation process aligns with broader AI governance strategies, ensuring compliance and patient safety for all users[10].

Managing Enterprise and Cyber Risks with GRC AI

Healthcare organizations face the critical challenge of protecting patient data, managing vendor relationships, and ensuring operational continuity. GRC AI simplifies this by consolidating risk data, automating threat detection, and enabling proactive decision-making. This integrated approach equips organizations to respond effectively to cybersecurity threats.

AI and Cybersecurity Risk Management

Expanding traditional GRC practices to include enterprise and cyber risks allows healthcare organizations to tackle threats like ransomware, phishing, and insider attacks more effectively. GRC AI tools continuously monitor networks, endpoints, and medical devices for vulnerabilities, flagging unusual configurations or access patterns to provide early warnings. By integrating with SIEM systems, these tools deliver real-time alerts, automate responses to incidents, and oversee critical controls such as multi-factor authentication, firewall settings, endpoint security policies, and privileged access management. For example, they can detect anomalies like repeated failed login attempts or unauthorized system access [9] [11].
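The repeated-failed-login example can be sketched as a simple sliding-window rule. This is a minimal illustration under assumed inputs (a time-ordered list of login events and a threshold of five failures in ten minutes), not the detection logic of any specific SIEM or GRC product.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def flag_repeated_failures(events, threshold=5, window=timedelta(minutes=10)):
    """events: (timestamp, user, success) tuples sorted by timestamp.

    Returns the set of users with at least `threshold` failed logins
    inside any span of length `window`.
    """
    recent = defaultdict(deque)
    flagged = set()
    for ts, user, success in events:
        if success:
            continue
        failures = recent[user]
        failures.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while ts - failures[0] > window:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(user)
    return flagged


# Hypothetical log: "alice" fails five times in five minutes, "bob" fails twice.
start = datetime(2024, 1, 1, 9, 0)
events = [(start + timedelta(minutes=i), "alice", False) for i in range(5)]
events += [(start + timedelta(minutes=6), "bob", False),
           (start + timedelta(minutes=7), "bob", False),
           (start + timedelta(minutes=8), "bob", True)]
events.sort(key=lambda e: e[0])
print(flag_repeated_failures(events))
```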

Agentic GRC takes this a step further by using generative AI to contextualize risks and suggest actionable next steps. Beyond automating tasks, it provides insights into why specific incidents are significant and what actions teams should prioritize, helping organizations achieve more informed compliance outcomes [11].

Third-Party and Fourth-Party AI Risks

Third-party vendors and their AI systems introduce additional layers of risk that healthcare organizations must address. Vendor contracts should include requirements for audit trails, performance testing results, and detailed documentation of algorithm updates and maintenance. Evaluating vendors' adherence to ethical and regulatory standards is also essential. Regular vulnerability assessments should focus on issues like weak access controls, unpatched software, and insecure data transmission in third-party integrations. GRC platforms equipped with cybersecurity monitoring can automate the tracking of these controls and promptly detect anomalies.

Healthcare organizations must also prepare for potential failures in third-party AI systems, whether due to vendor shutdowns, cyberattacks, or model drift. Comprehensive contingency plans should outline data access protocols, alternative workflows, and communication strategies to ensure operations can continue smoothly if disruptions occur [9] [12].

Effective vendor risk management also hinges on integrating risk data across systems, so that third-party and fourth-party risks are managed through one cohesive process.

Business Continuity and Risk Correlation

To strengthen risk mitigation efforts, GRC AI unifies risk data across previously siloed systems. Many healthcare institutions still rely on separate platforms for managing clinical, operational, financial, and workforce risks, which makes it difficult to gain a complete view of enterprise-wide threats. GRC AI addresses this by centralizing information into a single platform, offering a comprehensive perspective. Using predictive analytics and machine learning, these tools can identify potential risk areas - such as patient safety issues, compliance breaches, or financial shortfalls - by analyzing both historical and real-time data.

Real-time dashboards further enhance decision-making by providing executives with clear visibility into key risk indicators. This consolidated approach streamlines compliance reporting, reduces redundancies, and ensures organizations can swiftly adapt to regulatory changes or unexpected incidents [9] [13] [2].
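To show what unifying siloed risk data can mean in practice, the sketch below merges entries from separate risk registers and surfaces assets that appear in more than one domain. The register format, field names, asset names, and 1-5 severity scale are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def correlate_risks(*registers):
    """Merge risk registers (lists of dicts with 'asset', 'domain', 'severity')."""
    by_asset = defaultdict(list)
    for register in registers:
        for item in register:
            by_asset[item["asset"]].append(item)

    summary = []
    for asset, items in by_asset.items():
        summary.append({
            "asset": asset,
            "domains": sorted({i["domain"] for i in items}),
            "max_severity": max(i["severity"] for i in items),
        })
    # Assets touching the most domains come first, then the most severe.
    summary.sort(key=lambda s: (-len(s["domains"]), -s["max_severity"], s["asset"]))
    return summary


# Hypothetical entries from two separately maintained registers.
clinical = [{"asset": "infusion-pump", "domain": "clinical", "severity": 4}]
cyber = [{"asset": "infusion-pump", "domain": "cyber", "severity": 5},
         {"asset": "billing-portal", "domain": "cyber", "severity": 3}]

for row in correlate_risks(clinical, cyber):
    print(row)
```

Here the infusion pump rises to the top because it carries both a clinical and a cybersecurity risk, a connection that neither register would reveal on its own.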

Clivetty Martinez, Ph.D., Senior Advisor at Granite GRC, highlights the value of this approach: "When you operationalize AI oversight through a GRC lens, you move from firefighting to foresight." [6]


Building Effective AI Governance Structures

Once key AI risks are identified, the next step is creating governance structures that effectively manage these risks. In healthcare, boards play a critical role in overseeing AI systems, ensuring a balance between innovation and adherence to ethical and regulatory standards [16]. The challenge lies in designing governance frameworks that integrate smoothly into existing workflows while maintaining comprehensive oversight throughout the AI system's lifecycle.

Board and Committee Roles in AI Governance

Effective AI governance thrives on cross-functional collaboration. Committees tasked with AI oversight should bring together experts from various departments, including Legal, Ethics and Compliance, Privacy, Information Security, Research and Development, and Product Management [14]. In healthcare, this collaboration extends to senior leaders with expertise in clinical, operational, and technical areas, forming a well-rounded team for overseeing AI systems [15].

Organizations can either incorporate AI governance into existing board committees or establish dedicated groups, depending on their size and the level of AI integration. The key is to ensure board-level attention to AI governance, with clear accountability across departments. This approach helps align governance practices with regulatory requirements while fostering organizational responsibility.

Using Standards and Frameworks for AI GRC

Aligning AI governance with established standards and frameworks is essential for healthcare organizations. Some of the most relevant frameworks include:

- NIST AI Risk Management Framework (AI RMF): This framework categorizes governance activities into four core functions - GOVERN, MAP, MEASURE, and MANAGE. It’s widely adopted by U.S. federal agencies, making it a practical choice for organizations working with federal programs like CMS, VA, and DoD health systems [17].

- ISO/IEC 42001: The first international standard for AI management systems, this emphasizes ongoing risk evaluation and seamless integration with existing quality management systems [17].

- FDA Regulations for Software as a Medical Device (SaMD): These regulations require clinical validation, software verification, and cybersecurity measures, building on the Good Machine Learning Practice (GMLP) Guiding Principles [17][3].

- HIPAA and HITECH: These U.S. federal laws require patient data to be protected across all AI implementations, a critical requirement for healthcare organizations.

Adopting these frameworks helps mitigate the risks associated with AI while ensuring compliance with industry expectations.

Continuous Monitoring and Compliance

Establishing governance structures and adopting standards are just the first steps. Continuous oversight is vital to maintaining compliance and addressing new risks as they emerge. AI governance doesn’t stop at deployment - it demands ongoing monitoring and post-deployment audits to stay ahead of regulatory changes and unexpected challenges [2][18].

Centralized compliance reporting systems play a crucial role here, allowing real-time tracking of AI implementations and enabling quick responses to incidents or updates [2][19].

As Andreea Bodnari and John Travis from ALIGNMT AI Inc. note, "Ongoing oversight over AI implementations must focus on the monitoring of predefined Key Performance Indicators (KPIs). Such performance metrics can be defined during the initial evaluation of the AI product and historically logged to track potential model degradation" [2].

Organizations should continuously monitor data quality, model performance across different demographic groups, and potential biases. Regular manual audits, red-teaming exercises, and post-marketing surveillance - similar to pharmacovigilance practices - are also essential [2][18]. Vendors must provide evidence of bias testing and performance monitoring [20]. For example, if a chest X-ray interpretation system begins producing inaccurate results, strong governance frameworks ensure immediate corrective actions, such as suspending the system, identifying gaps in training data, retraining with diverse datasets, and redeploying the system under stricter monitoring [20].
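The KPI logging described above can be sketched as a comparison between a baseline recorded at initial evaluation and a rolling average of recent measurements. The metric, tolerance, window size, and history values below are assumptions for illustration only.

```python
from statistics import mean


def degraded(history, baseline, tolerance=0.05, window=3):
    """True if the mean of the last `window` KPI readings falls more than
    `tolerance` below the baseline set at initial evaluation."""
    if len(history) < window:
        return False  # not enough logged readings to judge
    return mean(history[-window:]) < baseline - tolerance


# Hypothetical monthly accuracy log for an imaging model (baseline 0.90).
accuracy_log = [0.91, 0.90, 0.89, 0.84, 0.83, 0.82]
if degraded(accuracy_log, baseline=0.90):
    print("KPI below tolerance: suspend the model and escalate for review")
else:
    print("KPI within tolerance")
```

Averaging over a short window rather than reacting to a single reading reduces false alarms from one noisy month, at the cost of a slightly slower response.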

Using Censinet for AI-Driven GRC in Healthcare

Healthcare organizations face the challenge of managing complex risks while maintaining effective oversight, especially in the fast-evolving world of AI. Tools like Censinet RiskOps™ are designed to simplify this process. By centralizing vendor risk, enterprise risk, and AI oversight, Censinet helps streamline compliance and tackle emerging risks across clinical, operational, and cybersecurity domains. Here’s how Censinet RiskOps and Censinet AI™ enable healthcare boards to efficiently manage risks and oversee AI implementations.

Streamlining Risk Assessments with Censinet RiskOps

Censinet RiskOps takes the hassle out of vendor risk assessments by automating key processes. Instead of being bogged down by administrative tasks, healthcare organizations can rely on the platform to handle evidence reviews and assessments. It creates a collaborative network where organizations can share assessments, compare their cybersecurity standing with peers, and monitor risks across their vendor ecosystem.

The platform’s automated workflows ensure findings are sent to the right stakeholders, while a centralized dashboard provides real-time insights into risk exposure. This includes monitoring risks tied to medical devices, clinical applications, supply chains, and patient data systems. By automating the repetitive parts, risk teams can focus their energy on deeper analysis and more strategic decision-making.

Scaling AI Oversight with Censinet AI™

Managing multiple AI tools and vendors can be overwhelming, but Censinet AI™ makes it faster and more manageable. It slashes the time needed for vendor questionnaire completion - from weeks to just seconds - by automatically summarizing vendor evidence, capturing integration details, and identifying fourth-party risks that might otherwise slip through the cracks.

The platform also generates detailed risk summary reports, pulling together all relevant assessment data. This efficiency allows healthcare boards to evaluate AI tools and vendors more quickly while maintaining a thorough review process. For organizations juggling several AI implementations, this means they can address more risks in less time - without cutting corners.

Human-Guided AI for Safe and Scalable Solutions

Censinet combines automation with human oversight through a human-in-the-loop approach, ensuring that expert judgment remains central to the decision-making process. Risk teams can configure rules to guide automation, keeping control firmly in their hands.

The platform acts as a command center for AI governance, directing assessment findings and tasks to the right stakeholders, such as members of the AI governance committee. With an intuitive AI risk dashboard, healthcare organizations gain a real-time view of policies, risks, and tasks related to AI. This setup allows for continuous oversight and helps scale risk management efforts, all while prioritizing patient safety and organizational accountability.

Conclusion: Building a Resilient Future with GRC AI

A unified GRC AI approach, supported by tools like Censinet RiskOps and Censinet AI™, strengthens an organization's foundation. For healthcare boards, implementing AI governance isn't just a choice - it's a necessity to ensure patient safety and secure long-term resilience. Integrating GRC AI advances environmental, social, and governance (ESG) initiatives, patient safety, and enterprise risk management by reducing carbon footprints, advancing health equity, mitigating algorithmic bias, managing safety risks, and tackling cybersecurity and third-party challenges.

A well-structured GRC AI strategy ensures that AI systems are designed, developed, and deployed responsibly, preventing them from becoming safety risks and fostering trust across the organization [2]. To accomplish this, healthcare organizations must adopt modern GRC strategies that combine AI risk management with cybersecurity, vendor oversight, and operational risk controls [21].

These strategies empower organizations to make faster, more informed decisions by integrating board oversight with real-time monitoring capabilities. The risks AI introduces - like data privacy concerns, algorithmic bias, transparency challenges, and unintended errors that could jeopardize patient safety - must be addressed head-on [2]. This alignment between governance and operations lays the groundwork for effective, real-time risk management.

Centralizing risk data simplifies decision-making across compliance, cybersecurity, and clinical operations. Platforms like Censinet RiskOps provide healthcare organizations with the tools needed to manage these complexities efficiently. By consolidating risk and compliance information, boards gain a clear, real-time view of AI implementations, vendor relationships, and overall enterprise risks [22]. These platforms also ensure that AI systems are monitored and retired when necessary, preventing them from becoming liabilities [21][2].

The future of healthcare rests on the decisions made today. Organizations that integrate GRC AI into their strategic plans will be better equipped to safeguard patients, meet regulatory standards, and build the resilient systems needed to thrive in an AI-driven world.

FAQs

How does AI-powered GRC improve patient safety in healthcare?

AI-driven Governance, Risk, and Compliance (GRC) tools play a critical role in enhancing patient safety by spotting risks early, such as biased algorithms or data security gaps, that could compromise care quality. These tools streamline compliance processes, helping healthcare organizations adhere to regulatory standards while prioritizing top-notch patient care.

With real-time monitoring that surfaces safety concerns early, AI-powered GRC systems help reduce medical errors. Beyond automation, these tools provide actionable insights that support human oversight, uphold data integrity, and ensure transparency in decision-making. Together, these features work to deliver safer and more reliable healthcare outcomes.

How does GRC AI help healthcare organizations meet ESG goals?

GRC AI supports healthcare organizations in meeting Environmental, Social, and Governance (ESG) objectives by weaving sustainability, ethical practices, and social responsibility into their risk management and compliance strategies. It helps organizations anticipate potential risks, enhance transparency, and foster trust among stakeholders.

With AI-powered insights, healthcare leaders can fine-tune their operations to reflect ESG priorities while maintaining a strong focus on patient safety and regulatory requirements. This dual focus not only reduces risks but also reinforces the organization's ability to adapt and remain accountable.

How can healthcare boards oversee AI systems to ensure compliance and manage risks effectively?

Healthcare boards can take charge of overseeing AI systems by putting in place robust governance structures. This means setting clear policies, conducting regular risk assessments, and performing routine audits. Staying aligned with federal guidelines, such as the NIST AI Risk Management Framework, is key to meeting regulatory standards.

To strengthen their oversight, boards might consider forming specialized AI committees, ensuring transparency with detailed documentation, and adhering to ethical principles like accountability, privacy, and fairness. Incorporating AI risk management into the organization's broader risk strategies, keeping a close eye on AI system performance, and encouraging collaboration across departments are all essential steps to reduce risks and protect patient care.
