The Healthcare Cyber Storm: How AI Creates New Attack Vectors in Medicine

Post Summary

AI is transforming healthcare, but it’s also introducing serious cybersecurity risks. From data poisoning to AI-enabled device vulnerabilities, attackers are finding new ways to exploit these systems. Sensitive patient information, critical medical devices, and even diagnostic tools are at risk. The adoption of AI expands the attack surface, making traditional security measures insufficient.

Key takeaways:

- AI systems are vulnerable to manipulated data, adversarial attacks, and supply chain breaches.

- IoMT devices often lack proper security, exposing hospital networks and patient safety.

- Generative AI misuse can lead to data leaks, voice cloning, and deepfake scams.

- Governance and continuous monitoring are vital to managing these risks effectively.

This article highlights the challenges and offers practical strategies to secure AI systems, protect patient data, and strengthen healthcare cybersecurity.

AI Cybersecurity Risks in Healthcare: Key Statistics and Attack Vectors 2024-2025

How AI Creates New Attack Vectors in Healthcare

AI Data Pipeline and Model Vulnerabilities

AI systems in healthcare rely heavily on massive data sets, but this dependency opens the door to various risks. For example, data poisoning involves injecting harmful inputs into these systems, which can distort diagnostic or treatment algorithms. Similarly, model manipulation targets weaknesses in the algorithms themselves, potentially altering their outputs. These issues are especially alarming when AI systems are used to make critical patient-care decisions. The "black box" nature of many AI models further complicates matters, as healthcare professionals often can't fully understand how these systems reach their conclusions.

Adding to the complexity, many healthcare organizations work with third-party AI vendors. These vendors often have extensive access to patient data and healthcare systems, introducing supply chain risks. A vulnerability in one vendor's system could ripple through multiple organizations. These risks aren't limited to data alone - they can also affect connected devices and user interactions, creating a broad attack surface.

IoMT and AI-Enabled Medical Device Risks

AI's integration into medical devices brings its own set of cybersecurity challenges. From smart infusion pumps to sophisticated imaging equipment, AI-powered medical devices are transforming healthcare delivery. However, they also expand the potential for cyberattacks. These devices connect to hospital networks, transmit sensitive patient data, and often operate autonomously, making them attractive targets.

Unfortunately, many Internet of Medical Things (IoMT) devices are designed with functionality in mind, often at the expense of security. Common issues include weak encryption, limited capacity for security updates, and vulnerabilities that can compromise entire fleets of devices. The interconnected nature of these systems means that a breach in one device could have cascading effects. For instance, if an AI-enabled insulin pump is hacked, attackers could gain access to broader hospital systems, exposing patient records or disrupting other critical equipment. This interconnectedness amplifies the potential risks across the entire healthcare network.

Generative AI and Human Error Risks

Generative AI introduces another layer of risks, particularly through human error and misuse.

One major concern is the accidental exposure of sensitive patient data. Healthcare staff might unknowingly upload confidential information into AI chatbots, not realizing that this data could be stored or accessed by unauthorized parties. At the same time, malicious actors are leveraging generative AI to develop more sophisticated attacks.

For example, voice cloning technology allows attackers to impersonate executives or medical professionals, enabling unauthorized access to systems or fraudulent transactions. Similarly, deepfake technology can create fake identities, which might be used for insurance fraud or to fabricate synthetic patient records for billing scams. These tactics exploit the trust that healthcare staff naturally place in their colleagues and supervisors, making organizations especially vulnerable to these advanced threats.

How to Reduce AI Cyber Risks in Healthcare

Now that we've explored how AI introduces new vulnerabilities, let’s dive into practical steps to tackle these risks. By addressing these challenges head-on, healthcare organizations can better protect themselves from AI-specific cyber threats.

Building AI Governance and Accountability

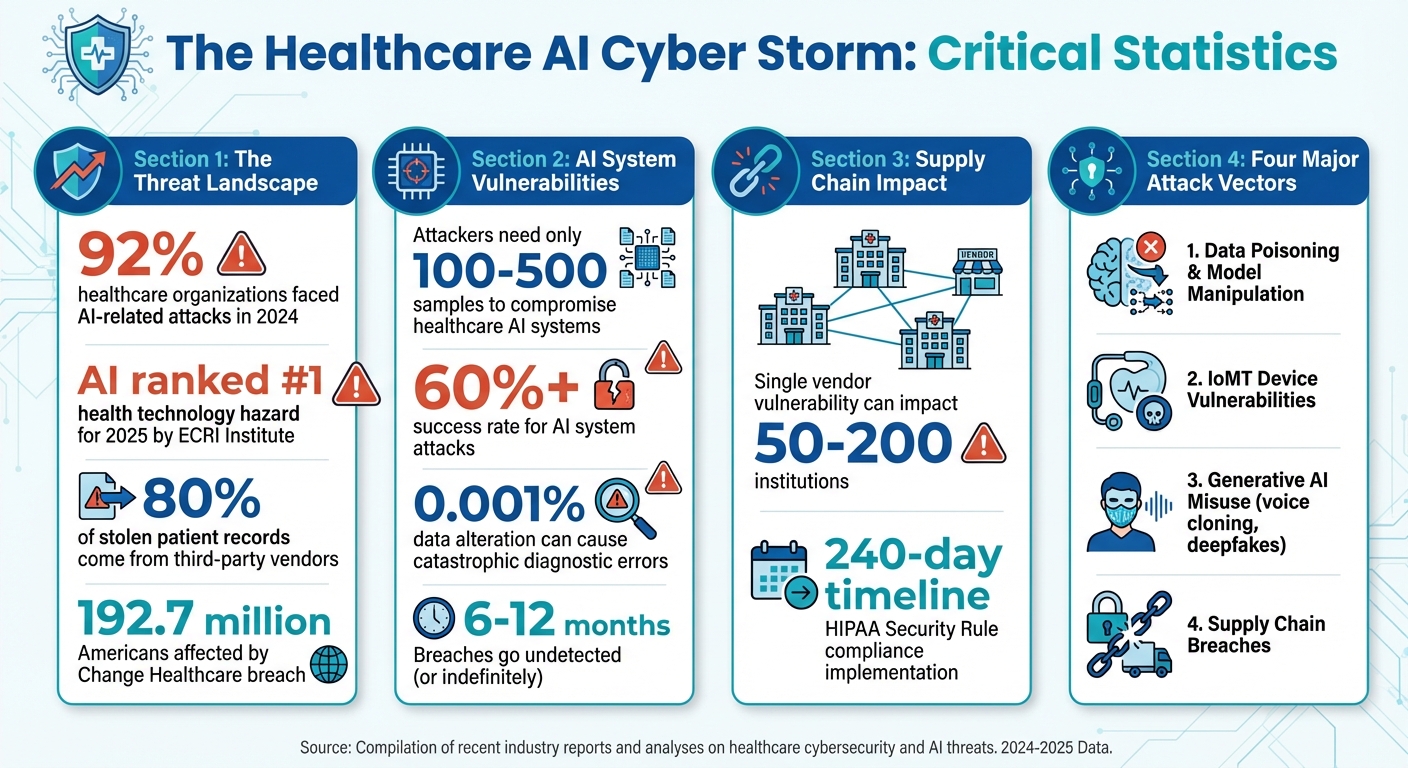

The ECRI Institute has identified AI as the #1 health technology hazard for 2025, and with a staggering 92% of healthcare organizations facing AI-related attacks in 2024, strong governance is no longer optional - it’s essential [2]. Establishing AI governance committees is a critical first step. These committees should include leaders from clinical, IT security, compliance, and risk management teams. Their primary role? To maintain a centralized inventory of all AI systems in use. This includes everything from diagnostic tools to administrative chatbots, with detailed tracking of where these systems are deployed, the data they access, and the individuals responsible for their security.
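As a rough illustration of what a centralized inventory could track, here is a minimal Python sketch. The class names, field names, and sample entries are hypothetical and not taken from any specific product; a real inventory would live in a governed system of record rather than in memory.

```python
from dataclasses import dataclass, field


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI system inventory."""
    name: str
    deployed_in: str            # department or site where the system runs
    data_accessed: list[str]    # categories of data the system can read
    security_owner: str = ""    # person accountable for its security


@dataclass
class AIInventory:
    records: dict[str, AISystemRecord] = field(default_factory=dict)

    def register(self, record: AISystemRecord) -> None:
        """Add or replace the entry for a system, keyed by its name."""
        self.records[record.name] = record

    def missing_owner(self) -> list[str]:
        """Names of systems with no accountable security owner."""
        return sorted(n for n, r in self.records.items() if not r.security_owner)

    def touching(self, data_category: str) -> list[str]:
        """Names of systems that can read the given data category."""
        return sorted(
            n for n, r in self.records.items() if data_category in r.data_accessed
        )


# Illustrative entries only.
inventory = AIInventory()
inventory.register(
    AISystemRecord("radiology-triage", "Imaging", ["dicom_images"], "j.doe")
)
inventory.register(
    AISystemRecord("scheduling-chatbot", "Front desk", ["appointment_data"])
)

print(inventory.missing_owner())           # systems the committee must assign
print(inventory.touching("dicom_images"))  # systems that read imaging data
```

Queries like `missing_owner()` give a governance committee a quick way to spot systems that nobody is accountable for.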

The regulatory environment is evolving quickly. For example, proposed updates to the HIPAA Security Rule would require specific technical controls in business associate agreements. Staying proactive about these changes is key to avoiding compliance pitfalls [2][3]. A robust governance framework should also define precise data access boundaries for every AI application. This means clearly specifying what information each system can access and under what circumstances. Why is this so critical? Studies reveal that attackers need access to as few as 100-500 samples to compromise healthcare AI systems, achieving success rates of over 60%. Worse yet, breaches often go undetected for 6-12 months - or indefinitely [4].
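One way to picture a data access boundary is as a deny-by-default allowlist checked before any AI application reads data. The sketch below is illustrative only: the policy structure, application names, data categories, and purposes are all assumptions made for the example.

```python
# Hypothetical policy: each AI application maps to the data categories it may
# read and the purposes for which that access is permitted.
ACCESS_POLICY = {
    "radiology-triage": {"data": {"dicom_images"}, "purposes": {"diagnosis"}},
    "scheduling-chatbot": {"data": {"appointment_data"}, "purposes": {"scheduling"}},
}


def is_access_allowed(app: str, data_category: str, purpose: str) -> bool:
    """Deny by default: unknown apps, categories, or purposes are refused."""
    policy = ACCESS_POLICY.get(app)
    if policy is None:
        return False
    return data_category in policy["data"] and purpose in policy["purposes"]


print(is_access_allowed("radiology-triage", "dicom_images", "diagnosis"))
print(is_access_allowed("scheduling-chatbot", "dicom_images", "scheduling"))
print(is_access_allowed("unregistered-tool", "dicom_images", "diagnosis"))
```

Keeping the policy explicit means that a new AI tool gets no access until someone deliberately grants it.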

Securing Data Pipelines, Models, and Infrastructure

AI systems are particularly vulnerable to stealthy attacks like data poisoning, which can lie dormant until activated. This makes securing your AI infrastructure an ongoing effort, not a one-and-done task [2]. One effective approach is implementing zero-trust principles. This means no user or system is trusted by default - authentication and authorization are required at every stage, from data collection to model deployment.
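Zero trust covers far more than any single control, but one small piece of it can be sketched: each pipeline stage verifies that incoming data really came from the expected upstream stage before using it. The example below uses Python's standard `hmac` module. The stage names and hard-coded demo keys are assumptions for illustration; real keys would come from a secrets manager.

```python
import hashlib
import hmac

# Demo keys only; in practice each stage's key is issued and rotated by a
# secrets manager, never hard-coded.
STAGE_KEYS = {
    "ingest": b"demo-key-ingest",
    "training": b"demo-key-training",
}


def sign_artifact(stage: str, payload: bytes) -> str:
    """Produce a tag binding the payload to the stage that emitted it."""
    message = stage.encode() + b"\x00" + payload
    return hmac.new(STAGE_KEYS[stage], message, hashlib.sha256).hexdigest()


def verify_artifact(stage: str, payload: bytes, tag: str) -> bool:
    """Accept the payload only if the tag matches the claimed stage."""
    if stage not in STAGE_KEYS:
        return False
    expected = sign_artifact(stage, payload)
    return hmac.compare_digest(expected, tag)


batch = b"patient_id,label\n001,benign\n"
tag = sign_artifact("ingest", batch)

print(verify_artifact("ingest", batch, tag))                       # untouched batch
print(verify_artifact("ingest", batch + b"002,malignant\n", tag))  # altered batch
```

A batch that was modified after signing, or that claims to come from a different stage, fails verification and can be rejected before it reaches training.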

The management of AI models throughout their lifecycle demands extra vigilance. Adversarial attacks on medical AI systems can be devastating; altering just 0.001% of input data can lead to catastrophic diagnostic errors [2]. To mitigate this, train and test AI models in secure, isolated environments. Use strict version control to track changes, keep detailed records of training data and parameters, and validate outcomes rigorously. Simulate adversarial attacks during testing to identify potential vulnerabilities before clinical deployment. Regular audits are also essential to detect signs of data poisoning or manipulation, focusing on sudden shifts in model behavior or performance.
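Keeping detailed records of training data can start with something as simple as a hash manifest. The following sketch records a SHA-256 digest per file at approval time and later reports anything changed, removed, or added. The file names and contents are made up for the example, and this detects tampering after approval only; it cannot tell whether the approved data was already poisoned.

```python
import hashlib


def build_manifest(files: dict[str, bytes]) -> dict[str, str]:
    """Map each file name to the SHA-256 digest of its contents."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in files.items()}


def diff_manifest(recorded: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare a recorded manifest with the current state of the dataset."""
    return {
        "changed": sorted(
            n for n in recorded if n in current and recorded[n] != current[n]
        ),
        "removed": sorted(n for n in recorded if n not in current),
        "added": sorted(n for n in current if n not in recorded),
    }


# Manifest captured when the training set was approved (illustrative data).
approved = build_manifest({
    "scan_001.csv": b"id,label\n1,benign\n",
    "scan_002.csv": b"id,label\n2,malignant\n",
})

# State of the dataset at audit time: one label flipped, one file added.
at_audit = build_manifest({
    "scan_001.csv": b"id,label\n1,benign\n",
    "scan_002.csv": b"id,label\n2,benign\n",
    "scan_003.csv": b"id,label\n3,benign\n",
})

print(diff_manifest(approved, at_audit))
```

Storing the approved manifest alongside the model version gives auditors a fixed reference point for each training run.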

Once your AI systems are secure, the next challenge is to ensure the safety of AI-enabled devices and clinical workflows.

Protecting AI-Enabled Devices and Clinical Workflows

AI-enabled devices must be integrated into your broader medical device security strategy. Start with pre-procurement assessments. Before purchasing any AI-enabled equipment, require vendors to provide comprehensive documentation, including details on how their AI models were trained, results from security testing, and their processes for delivering updates.

Monitoring AI-enabled devices poses unique challenges. Beyond traditional cybersecurity threats, you also need to watch for AI-specific vulnerabilities. Establish baseline behavior patterns for each device and set up automated alerts for any deviations that might signal a compromise or malfunction. Your incident response plans must include specific protocols for AI-related issues. For instance, if an AI diagnostic tool begins producing unusual results, your team should have clear steps for isolating the system, preserving evidence, and ensuring patient safety during the investigation. This includes knowing when to switch to manual processes and effectively communicating the status of the AI system to clinical staff.
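A baseline-and-alert check can be sketched with a simple z-score test. This is a deliberately minimal example: the metric (hourly count of positive findings from a diagnostic tool), the sample values, and the threshold of 3 standard deviations are all assumptions, and production monitoring would typically use richer statistics and several signals.

```python
from statistics import mean, stdev


def build_baseline(samples: list[float]) -> tuple[float, float]:
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return mean(samples), stdev(samples)


def is_anomalous(value: float, baseline: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical metric: positive findings per hour during normal operation.
normal_hours = [10, 11, 9, 10, 12, 10, 11, 9]
baseline = build_baseline(normal_hours)

for observed in (10.5, 25):
    status = "ALERT" if is_anomalous(observed, baseline) else "ok"
    print(f"{observed}: {status}")
```

An alert from a check like this would be the trigger for the incident response steps described above: isolate the system, preserve evidence, and fall back to manual processes while the cause is investigated.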


Managing AI Risk with Censinet

Censinet provides solutions to tackle the cybersecurity risks associated with third-party vendors, a critical issue given that these vendors account for 80% of stolen patient records [2]. Supply chain attacks can exploit vulnerabilities in AI models, impacting anywhere from 50 to 200 institutions through a single vendor [4]. With these interconnected risks, healthcare organizations require a scalable approach, as traditional methods often fall short in addressing the complexities of modern AI threats. Here's how Censinet's tools are designed to meet these challenges head-on.

Censinet RiskOps™ for Centralized AI Risk Management

Censinet RiskOps™ simplifies AI risk management by unifying assessment data, governance decisions, and security tasks within a single platform. This eliminates the need for manual spreadsheets and fragmented workflows, offering real-time oversight - an essential feature for AI governance committees. These committees need to know which AI systems are in use, where patient data is accessed, and who is responsible for ensuring their security.

The platform also features automated risk routing, which directs identified risks to the appropriate stakeholders for immediate action. For instance, if a vendor's AI diagnostic tool exhibits unusual behavior, the system flags the issue and ensures the right team members are notified to address the concern promptly.

Censinet AI™ for Automated Risk Assessment

Censinet AI™ transforms the traditionally time-consuming vendor risk assessment process. By automating these assessments, it reduces the time needed for vendor questionnaires from weeks to mere seconds. The platform summarizes vendor evidence, highlights critical integration details, and identifies fourth-party risk exposures, generating comprehensive risk reports in the process.

While automation speeds up the process, it doesn't replace human oversight where it matters most. Risk teams can configure rules and review processes to validate evidence, draft policies, and tailor risk mitigation strategies to their organization's needs. This balance ensures that operations can scale efficiently without compromising the depth of analysis. Additionally, automated assessments feed into continuous monitoring, ensuring ongoing compliance with security standards across all vendor layers.

Improving Third-Party Risk Management with Censinet

Managing third-party risks is particularly challenging, as vendor incidents often involve multiple organizations. Censinet addresses this issue with its AI-powered platform, which streamlines third-party assessments and supports compliance with the proposed HIPAA Security Rule updates. These proposed mandates would require technical controls like encryption, multi-factor authentication, and network segmentation, and healthcare organizations would be expected to implement them within a 240-day timeline [2].

Censinet AI™ goes beyond periodic assessments by enabling continuous monitoring of emerging vulnerabilities and active threats across the vendor ecosystem. This capability is especially critical for managing fourth-party risks, where subcontractors working with primary vendors might otherwise evade scrutiny. By maintaining visibility into these downstream relationships, the platform enforces consistent security standards throughout the supply chain. This approach helps mitigate vulnerabilities like those seen in the Change Healthcare breach, which affected 192.7 million Americans [2].

Conclusion

Key Takeaways for AI Risk Mitigation

AI in healthcare is a double-edged sword - offering transformative potential while introducing serious risks. To navigate this, healthcare organizations need a solid plan. This includes setting up governance structures with clear accountability, applying technical measures to tackle AI-specific vulnerabilities like adversarial attacks and data poisoning, and reinforcing third-party risk management to guard against supply chain threats [2][5].

Third-party vendors are a major weak point, making supply chain security a critical focus area [2]. Instead of relying on one-time assessments, healthcare organizations should adopt continuous monitoring to keep AI systems secure and resilient [5].

These steps provide a foundation for strengthening cybersecurity in healthcare as AI continues to evolve.

The Future of AI Cybersecurity in Healthcare

As AI adoption grows, healthcare cybersecurity must keep pace. This means moving beyond static checklists to embrace ongoing risk management. Regular evaluations, staying aligned with new regulatory requirements, and adapting to sector-specific guidance are all essential to counter increasingly sophisticated threats [5].

Traditional security models fall short when addressing AI-specific risks like model drift and prompt injection attacks [1][2][4]. Centralized risk management will be key. Healthcare leaders need to understand that proactive risk management isn't optional - it's the only way to safely harness AI's potential. By implementing centralized platforms that unify governance, streamline vendor assessments, and provide real-time system visibility, organizations can safeguard patient data while driving innovation forward. The real challenge is how quickly these tools can be put in place to protect systems and patient care.

FAQs

What steps can healthcare organizations take to reduce AI-related cybersecurity risks?

Healthcare organizations can tackle AI-related cybersecurity risks by taking a proactive, multi-layered approach. Start with regular risk assessments to pinpoint vulnerabilities and follow up with continuous monitoring to catch any unusual activity early. Ensuring data integrity through validation processes is another critical step to safeguard sensitive information.

Additionally, enforcing strict access controls helps prevent unauthorized usage, while staying updated on new and evolving threats keeps defenses sharp. Having a detailed incident response plan in place ensures swift action in the event of a breach. Together, these steps provide a stronger shield for patient data, medical devices, and IT systems against AI-driven cyber risks.

What risks do AI-powered medical devices pose in hospitals?

AI-powered medical devices come with serious risks if they aren't well-protected. These risks include unauthorized access to sensitive patient information, manipulation of device functions, and system breaches that could endanger patients or disrupt hospital workflows. Cyberattacks, such as data poisoning, adversarial inputs, or prompt injection exploits, can target these devices, putting their reliability and safety at risk.

To address these challenges, healthcare organizations need to prioritize strong cybersecurity protocols, routinely evaluate vulnerabilities, and design AI systems with security and patient safety as core principles.

Why is it important to continuously monitor AI systems in healthcare?

Continuous monitoring of AI systems in healthcare plays a key role in identifying and addressing cyber threats swiftly. This proactive approach helps protect sensitive patient data and ensures the safety of medical devices and IT systems. By detecting vulnerabilities and malicious activities in real time, it becomes possible to prevent breaches and minimize disruptions.

When healthcare organizations actively monitor their AI systems, they can stay ahead of emerging risks, reduce potential harm, and reinforce trust in their ability to use AI responsibly - all while safeguarding their critical operations.