HIPAA Compliance for AI Model Encryption

Post Summary

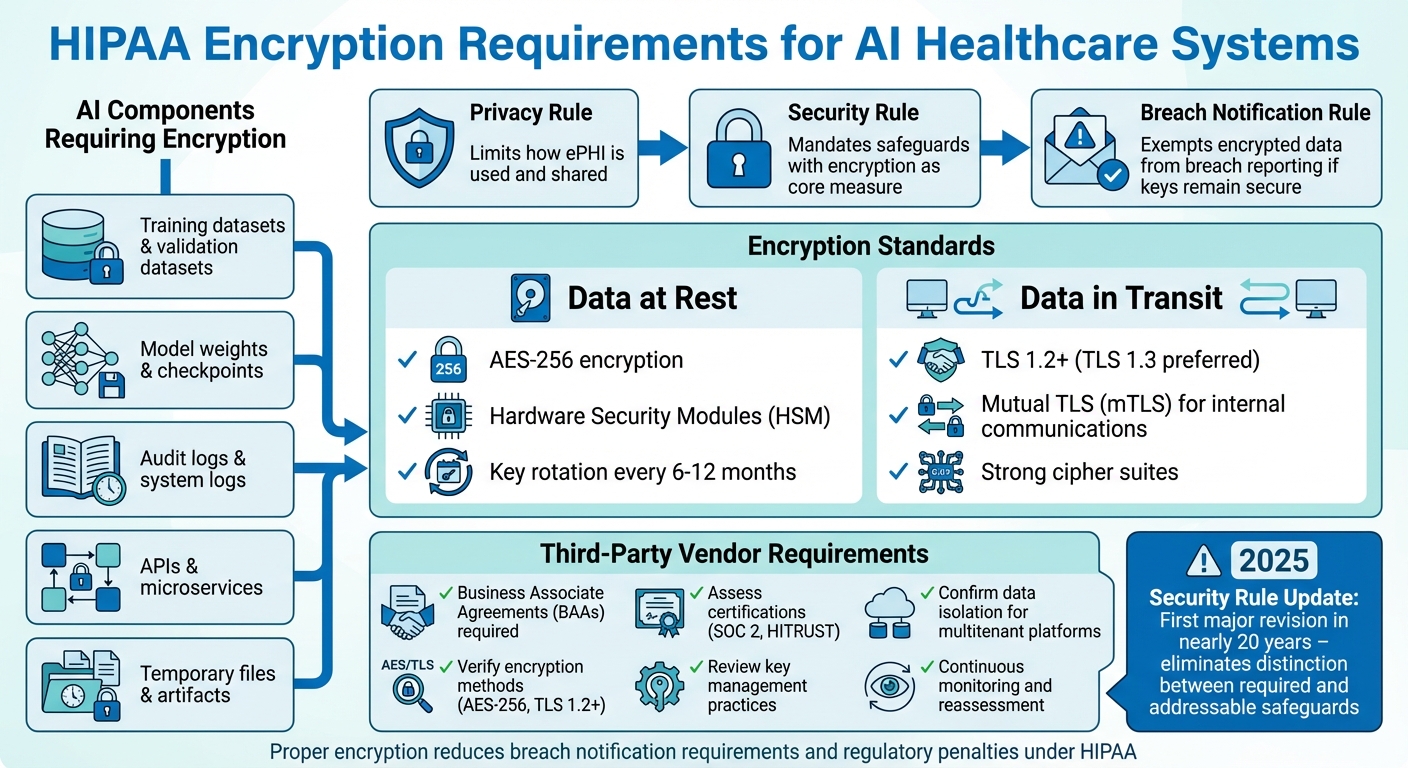

When AI systems handle sensitive healthcare data, encryption is more than good practice: HIPAA expects it, and proposed rule changes would make it an explicit requirement. AI tools in healthcare process electronic protected health information (ePHI), such as medical histories and insurance details, which makes them a prime target for cyberattacks. Encryption protects this data by rendering it unreadable to unauthorized parties, reducing both security risk and exposure to regulatory penalties.

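Key points include:

- HIPAA Rules for AI and Encryption:
  - The Privacy Rule limits how ePHI is used and shared.
  - The Security Rule mandates safeguards, with encryption as a core measure.
  - The Breach Notification Rule exempts PHI encrypted according to HHS guidance from breach reporting, provided the keys remain secure.
- AI Components Requiring Encryption:
  - Training datasets, model weights, logs, and APIs.
- Encryption Standards:
  - AES-256 for data at rest and TLS 1.2 or higher (TLS 1.3 preferred) for data in transit, with keys managed in an HSM or KMS.
- Third-Party Vendor Oversight:
  - AI vendors handling PHI must sign Business Associate Agreements (BAAs) with explicit encryption requirements and support their controls with evidence such as SOC 2 Type II reports or HITRUST certifications.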


HIPAA Encryption Requirements for AI Systems

Security Rule Standards for Encryption

The HIPAA Security Rule lays out safeguards for electronic protected health information (ePHI) in any AI system that creates, receives, maintains, or transmits such data. Its general requirements (45 C.F.R. §164.306) oblige organizations to protect the confidentiality, integrity, and availability of ePHI and to defend it against reasonably anticipated threats, while the technical safeguards in 45 C.F.R. §164.312 spell out specific controls [7]. For AI platforms managing PHI, this means assigning unique user IDs for system access, encrypting data so it is unreadable to unauthorized users, and deploying integrity checks that detect unauthorized changes to PHI [1][6].
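The integrity checks mentioned above can be implemented in several ways. Here is a minimal sketch that assumes records are JSON-serializable dictionaries: it stores a keyed hash (HMAC-SHA256) next to each record and recomputes it on read. `sign_record`, `verify_record`, and `INTEGRITY_KEY` are hypothetical names. In practice the key would be fetched from a KMS or HSM rather than generated in process.

```python
import hashlib
import hmac
import json
import secrets

# Hypothetical integrity key. A real system would fetch this from a KMS/HSM,
# never hard-code it or generate it per process.
INTEGRITY_KEY = secrets.token_bytes(32)


def canonical_bytes(record: dict) -> bytes:
    """Serialize a record deterministically so identical content always hashes the same."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_record(record: dict, key: bytes = INTEGRITY_KEY) -> str:
    """Return a hex HMAC-SHA256 tag to store alongside the record."""
    return hmac.new(key, canonical_bytes(record), hashlib.sha256).hexdigest()


def verify_record(record: dict, tag: str, key: bytes = INTEGRITY_KEY) -> bool:
    """Recompute the tag and compare in constant time to detect unauthorized changes."""
    return hmac.compare_digest(sign_record(record, key), tag)


if __name__ == "__main__":
    record = {"patient_token": "tok_8f3a", "model_output": "low risk"}
    tag = sign_record(record)
    print(verify_record(record, tag))  # True
    record["model_output"] = "high risk"
    print(verify_record(record, tag))  # False
```

Because the tag depends on a secret key, someone who can modify a stored record cannot produce a matching tag unless they have also compromised the key.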

Under the current Security Rule, encryption is an "addressable" implementation specification. An organization may implement an equivalent alternative measure instead, provided it documents why encryption is not reasonable and appropriate. Even so, the Department of Health and Human Services (HHS) has consistently encouraged encryption as a best practice for systems handling ePHI, and regulatory guidance increasingly treats it as a standard expectation [7][4]. A proposed update to the Security Rule, published in the Federal Register on January 6, 2025, would be the first major revision in nearly two decades. It would eliminate the distinction between "required" and "addressable" safeguards, making encryption of ePHI a firm requirement, with limited exceptions, for systems that process PHI, including AI platforms [5]. The change reflects growing concern over ransomware and the risks introduced by advanced technologies like AI [5]. Encryption also directly affects how breaches are reported, as discussed below.

How Encryption Prevents Breach Notifications

Under the HIPAA Breach Notification Rule, covered entities and business associates must notify affected individuals, HHS, and in some cases the media when unsecured PHI is breached. PHI encrypted in line with HHS guidance, however, counts as "secured" because it is unreadable to unauthorized users [4][2]. If an attacker accesses encrypted AI data or logs while the decryption keys remain secure, and the encryption meets HHS guidelines, the incident generally isn't considered a reportable breach under HIPAA [4].

This means organizations that encrypt their PHI and maintain strong key management practices can often sidestep the financial and reputational fallout of breach notifications [2][4]. For AI systems handling large volumes of PHI across training pipelines, inference processes, and logs, encryption combined with sound key management reduces legal risks and helps maintain patient trust [2][4]. Beyond avoiding breach reports, encryption is a cornerstone for securing all parts of AI systems that interact with sensitive data.

AI Components That Require Encryption

HIPAA’s encryption requirements extend to all components of AI systems that process or store ePHI. These rules go beyond traditional databases and include:

- Training datasets, validation datasets, and feature stores: These often contain sensitive patient attributes and must be encrypted [3].

- Model weights and checkpoints: Encryption is critical here, especially when models are trained on PHI or could be reverse-engineered to uncover patient data [3].

- Temporary files and artifacts: Files shared between development, testing, and production environments must also be encrypted at rest [3].

Audit logs, frequently overlooked, are another critical area. These logs can include patient identifiers, clinical prompts, model outputs, and error traces tied to PHI [3][6]. Despite their sensitivity, logs are sometimes treated as secondary data, leading to gaps in encryption coverage. To ensure compliance, healthcare organizations must encrypt all logging infrastructure - including system logs, training logs, and monitoring data - both at rest and in transit [3][6]. This comprehensive approach ensures that every layer of AI systems handling ePHI meets HIPAA’s stringent encryption standards.

Encryption Methods for AI Models That Handle PHI

Encrypting Data at Rest and in Transit

When AI systems process Protected Health Information (PHI), healthcare organizations must apply robust encryption. For stored data, AES-256 is the go-to standard. It is widely supported across cloud platforms and on-premises systems, and it aligns with the U.S. Department of Health and Human Services (HHS) guidelines for making PHI "unusable, unreadable, or indecipherable" to unauthorized users [4].

For data in transit, such as API calls, microservice communications, or inference requests, TLS 1.2 should be treated as the minimum, with TLS 1.3 preferred. Internal communications between AI components should use mutual TLS (mTLS) so that both ends of each connection authenticate each other, which reduces the risk of interception or spoofing. Likewise, external connections, such as those to third-party AI APIs or imaging systems, need TLS with strong cipher suites and proper certificate management.
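To illustrate the transport settings above, the following sketch uses Python's standard `ssl` module to build contexts for an internal service that requires TLS 1.2 or higher and client certificates (mTLS). The function names and the certificate, key, and CA file parameters are hypothetical. Each side trusts only the CA file it is given, which is assumed to be an internal CA.

```python
import ssl
from typing import Optional


def build_mtls_server_context(
    certfile: Optional[str] = None,
    keyfile: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Server side of an internal AI service: TLS 1.2+ and mandatory client certificates."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse TLS 1.0 / 1.1
    ctx.verify_mode = ssl.CERT_REQUIRED  # mutual TLS: clients must present a valid cert
    if client_ca_file:
        ctx.load_verify_locations(cafile=client_ca_file)  # trust only the internal CA
    if certfile:
        ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return ctx


def build_mtls_client_context(
    certfile: Optional[str] = None,
    keyfile: Optional[str] = None,
    server_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Client side: verifies the server's certificate and hostname, presents its own cert."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)  # check_hostname and CERT_REQUIRED by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if server_ca_file:
        ctx.load_verify_locations(cafile=server_ca_file)
    if certfile:
        ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return ctx
```

Setting `minimum_version` to `TLSv1_2` still allows TLS 1.3 to be negotiated when both peers support it. Setting it to `ssl.TLSVersion.TLSv1_3` would enforce 1.3 only. You would then pass the contexts to `wrap_socket` or to an HTTP server or client library.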

Encryption keys should always be stored separately from the data they secure. Using a Hardware Security Module (HSM) or a cloud-based Key Management Service (KMS) is ideal. Access to these keys must be tightly restricted through role-based permissions, with automatic key rotation scheduled every six to 12 months. Additionally, all key usage and administrative actions should be logged for auditing purposes. Special care is also needed to secure AI model weights and training datasets, as these can inadvertently expose sensitive information.
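Managed KMS offerings can often rotate keys automatically, but checking a key inventory against policy is still useful. The sketch below assumes a hypothetical inventory exported as `KeyRecord` entries and a 365-day maximum key age, which is the upper end of the six-to-12-month window above.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List

# Hypothetical policy value: the upper end of the 6-12 month rotation window.
MAX_KEY_AGE = timedelta(days=365)


@dataclass
class KeyRecord:
    key_id: str
    purpose: str   # e.g. "training-data", "model-weights", "logs"
    created: date  # creation date of the key's current version


def keys_due_for_rotation(keys: List[KeyRecord], today: date,
                          max_age: timedelta = MAX_KEY_AGE) -> List[str]:
    """Return IDs of keys whose current version is at or past the maximum allowed age."""
    return [k.key_id for k in keys if today - k.created >= max_age]


if __name__ == "__main__":
    inventory = [
        KeyRecord("kms-train-01", "training-data", date(2024, 1, 15)),
        KeyRecord("kms-logs-02", "logs", date(2024, 11, 1)),
    ]
    print(keys_due_for_rotation(inventory, today=date(2025, 3, 1)))  # ['kms-train-01']
```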

Securing Model Weights and Training Data

AI model weights and checkpoints can inadvertently retain PHI, especially when trained on clinical datasets. These model artifacts should be treated as sensitive assets and encrypted using AES‑256. Store them in segregated repositories with access limited to authorized engineers and automated systems.

For training datasets containing PHI, encryption should be applied throughout the entire lifecycle - from initial ingestion to long-term storage. During labeling or curation, tools should only reveal the minimum necessary data fields, and any exported subsets should also remain encrypted. When data is transferred to training clusters, it must be transmitted over a secure TLS connection and stored on encrypted volumes. Once training is complete, archive raw PHI datasets with encrypted backups, enforce clear retention policies, and review training logs to ensure PHI isn’t accidentally exposed in plaintext.
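A pattern scan is a lightweight way to carry out the last step above: reviewing training logs for plaintext PHI. This sketch is illustrative only. The regular expressions are assumptions (the MRN format in particular is hypothetical), and real PHI detection needs much broader coverage, including names, dates, and free-text notes.

```python
import re
from typing import Dict, Iterable, List, Tuple

# Illustrative patterns only; the MRN format is hypothetical.
PHI_PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "mrn": re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def scan_log_lines(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (line_number, pattern_name) for each log line that looks like plaintext PHI."""
    findings = []
    for lineno, line in enumerate(lines, start=1):
        for name, pattern in PHI_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


if __name__ == "__main__":
    log = [
        "epoch 3 loss=0.412",
        "sample failed: MRN: 00482913 input too long",
        "batch 12 ok",
    ]
    print(scan_log_lines(log))  # [(2, 'mrn')]
```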

Advanced Encryption Methods for Healthcare AI

For added security, advanced encryption techniques can further reduce the risk of PHI exposure in complex AI workflows.

- Tokenization replaces sensitive identifiers, such as patient IDs, with secure random tokens. These tokens map back to the original data through a secure vault, enabling AI models to process consistent identifiers without storing the actual PHI.

- Format-preserving encryption (FPE) encrypts data while maintaining its original structure. This is particularly useful for legacy systems or AI models that require specific data formats to function correctly.

- Homomorphic encryption enables computations on encrypted data without requiring decryption. This ensures that servers never handle plaintext information during processing.

- Confidential computing uses trusted execution environments (TEEs) to securely run AI training or inference within hardware-isolated enclaves. This ensures that data and model weights remain encrypted in memory, with decryption occurring only within the secure enclave.

- Encrypted search techniques allow indexing and querying of encrypted clinical documents without exposing sensitive information in plaintext.
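The tokenization approach in the list above can be sketched with an in-memory vault. This minimal illustration assumes string identifiers. The `TokenVault` class and the `tok_` prefix are hypothetical, and a real vault would be a separate, encrypted, access-controlled service.

```python
import secrets
from typing import Dict


class TokenVault:
    """Minimal in-memory tokenization vault (illustrative only)."""

    def __init__(self) -> None:
        # Plaintext IDs appear as keys here; a real vault would encrypt this table
        # or key it by an HMAC of the value instead.
        self._token_by_value: Dict[str, str] = {}
        self._value_by_token: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        """Return a stable random token for a value, creating one on first use."""
        existing = self._token_by_value.get(value)
        if existing is not None:
            return existing  # same patient ID -> same token, so AI features stay consistent
        token = "tok_" + secrets.token_hex(16)  # random, not derived from the value
        self._token_by_value[value] = token
        self._value_by_token[token] = value
        return token

    def detokenize(self, token: str) -> str:
        """Map a token back to the original value; access to this must be tightly restricted."""
        return self._value_by_token[token]


if __name__ == "__main__":
    vault = TokenVault()
    t1 = vault.tokenize("MRN-0048291")
    print(t1 == vault.tokenize("MRN-0048291"))  # True
    print(vault.detokenize(t1))  # MRN-0048291
```

Because the vault returns the same token for repeated values, models see consistent identifiers. Only callers allowed to use `detokenize` can recover the original value.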
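Format-preserving encryption can be illustrated with a small Feistel network over decimal digits, a structure similar to the one NIST's FF1 mode uses (alternating halves, 10 rounds, modular addition). This is a toy sketch, not FF1. It substitutes HMAC-SHA256 for FF1's AES-based round function and omits tweaks, and small domains such as short identifiers are weak under any FPE scheme. The function names are hypothetical, and production systems should use a vetted FF1 implementation.

```python
import hashlib
import hmac

ROUNDS = 10  # FF1 also uses 10 rounds; the round function here is a stand-in
DIGITS = set("0123456789")


def _round_value(key: bytes, round_index: int, half: str, modulus: int) -> int:
    """Round function: HMAC-SHA256 over (round index, half), reduced modulo 10^m."""
    digest = hmac.new(key, f"{round_index}|{half}".encode(), hashlib.sha256).digest()
    return int.from_bytes(digest, "big") % modulus


def _split(digits: str):
    if len(digits) < 2 or not set(digits) <= DIGITS:
        raise ValueError("expected a string of at least two ASCII digits")
    u = len(digits) // 2
    return digits[:u], digits[u:], u, len(digits) - u


def fpe_encrypt(key: bytes, digits: str) -> str:
    """Encrypt a decimal string into another decimal string of the same length."""
    a, b, u, v = _split(digits)
    for i in range(ROUNDS):
        m = u if i % 2 == 0 else v  # width of the half being replaced this round
        c = (int(a) + _round_value(key, i, b, 10 ** m)) % 10 ** m
        a, b = b, str(c).zfill(m)
    return a + b


def fpe_decrypt(key: bytes, digits: str) -> str:
    """Invert fpe_encrypt by undoing the rounds in reverse order."""
    a, b, u, v = _split(digits)
    for i in reversed(range(ROUNDS)):
        m = u if i % 2 == 0 else v
        c, b = b, a  # the previous right half was moved into a; the new half sits in b
        a = str((int(c) - _round_value(key, i, b, 10 ** m)) % 10 ** m).zfill(m)
    return a + b
```

For example, `fpe_encrypt(key, "482913507")` returns another nine-digit string, and a legacy field that expects nine digits accepts it unchanged. `fpe_decrypt` with the same key recovers the original.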

When combined with robust key management and strict access controls, these methods not only help meet HIPAA compliance requirements but also provide an extra layer of protection for PHI in intricate AI workflows.
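The Paillier cryptosystem is an easy way to see the homomorphic encryption item from the list above in action. Paillier is additively (partially) homomorphic: multiplying two ciphertexts produces an encryption of the sum of their plaintexts. The sketch below is a toy with tiny hard-coded primes, chosen only so it runs instantly. It is not one of the fully homomorphic schemes (such as CKKS or BFV) used for general AI inference, and real deployments use a modulus of at least 2048 bits.

```python
import math
import secrets

# Toy parameters for illustration only; far too small to be secure.
P, Q = 1009, 1013
N = P * Q
N_SQ = N * N
G = N + 1                                          # common simplified generator choice
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)                               # valid because G = N + 1


def encrypt(m: int) -> int:
    """Paillier encryption: c = g^m * r^n mod n^2, with random r coprime to n."""
    if not 0 <= m < N:
        raise ValueError("plaintext out of range")
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Paillier decryption: m = L(c^lambda mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    x = pow(c, LAM, N_SQ)
    return ((x - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return (c1 * c2) % N_SQ


if __name__ == "__main__":
    readings = [72, 88, 95]  # e.g. heart-rate values encrypted on the client
    total = encrypt(readings[0])
    for value in readings[1:]:
        total = add_encrypted(total, encrypt(value))
    print(decrypt(total))  # 255
```

In this pattern a server can total encrypted readings without seeing any individual value. Only the holder of the private values (`LAM`, `MU`) can decrypt the sum.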
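One simple way to implement the encrypted search item from the list above is a blind index. Keywords are replaced by keyed HMAC tokens, so the index can be queried without storing plaintext terms. This minimal sketch assumes the documents themselves are encrypted and stored elsewhere. `BlindIndex` and `INDEX_KEY` are hypothetical names. Deterministic tokens still reveal which documents share a keyword and how often terms are queried, and more advanced searchable encryption schemes aim to reduce that leakage.

```python
import hashlib
import hmac
import re
from collections import defaultdict
from typing import Dict, Set

# Hypothetical placeholder; a real index key would be KMS-managed and separate
# from the key that encrypts the documents.
INDEX_KEY = b"hypothetical-index-key"


def keyword_token(word: str, key: bytes = INDEX_KEY) -> str:
    """Deterministic keyed token for a normalized keyword (one blind-index entry)."""
    return hmac.new(key, word.strip().lower().encode("utf-8"), hashlib.sha256).hexdigest()


class BlindIndex:
    """Maps keyword tokens to document IDs; plaintext keywords are never stored."""

    def __init__(self) -> None:
        self._postings: Dict[str, Set[str]] = defaultdict(set)

    def add_document(self, doc_id: str, plaintext: str) -> None:
        # Index at ingestion time, before the plaintext is encrypted and discarded.
        for word in re.findall(r"[a-z0-9]+", plaintext.lower()):
            self._postings[keyword_token(word)].add(doc_id)

    def search(self, word: str) -> Set[str]:
        """Return IDs of documents containing the keyword (exact match only)."""
        return set(self._postings.get(keyword_token(word), set()))


if __name__ == "__main__":
    idx = BlindIndex()
    idx.add_document("note-001", "Patient reports chest pain; troponin ordered.")
    idx.add_document("note-002", "Follow-up for diabetes; no chest complaints.")
    print(sorted(idx.search("chest")))  # ['note-001', 'note-002']
    print(idx.search("Troponin"))       # {'note-001'}
```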

Managing Third-Party AI Vendor Encryption

After establishing strict internal encryption standards, it's equally important to extend these safeguards to third-party vendors.

How to Assess Vendor Encryption Practices

Before granting a vendor access to PHI, evaluate its encryption controls. HIPAA requires covered entities to obtain satisfactory assurances that vendors will appropriately safeguard ePHI. HHS guidance treats PHI as secured only when encryption renders it "unusable, unreadable, or indecipherable" to unauthorized individuals.

Start by requesting detailed documentation of the vendor's encryption practices. It should cover all AI components, including training datasets, model weights, inference logs, backups, and temporary storage. Ask which algorithms and key lengths protect data at rest (such as databases and model artifacts) and data in transit (such as API calls or microservice traffic). Also review the vendor's TLS configurations and certificate management processes.

Once you've reviewed the documentation, shift your focus to key management and data isolation. Confirm that the vendor uses a dedicated Key Management Service (KMS) or Hardware Security Module (HSM), has defined key rotation schedules, limits access to encryption keys, and maintains audit logs. For multitenant platforms, ensure logical or physical isolation of PHI. Additionally, clarify how the vendor securely deletes PHI and related artifacts if the partnership ends.

To validate these practices, collect evidence such as SOC 2 Type II reports, HITRUST certifications, or summaries of recent penetration tests. Screenshots showing encryption configurations for storage volumes and data flows can provide tangible proof that these controls are in place and functioning.

Business Associate Agreements and Encryption Requirements

Once you've assessed a vendor's encryption practices, formalize these standards in a contract. Any AI vendor handling PHI must sign a Business Associate Agreement (BAA) before accessing ePHI. The BAA should go beyond generic terms by explicitly requiring encryption as a technical safeguard for all ePHI.

Set clear minimum standards, such as AES-256 for data at rest and TLS 1.2 or higher for data in transit. The agreement should mandate the use of strong cryptographic methods, the removal of weak cipher suites, and prompt notification of any significant changes to encryption controls. For AI-specific workloads, include requirements to encrypt model weights, embeddings, and training datasets containing PHI.
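You can also check the minimum standards above mechanically against a vendor's self-attestation. The sketch below assumes a hypothetical `VendorAttestation` record filled in from questionnaire answers. The field names and vendor are illustrative, and cipher suites are omitted. A check like this supplements review of evidence such as SOC 2 reports; it does not replace it.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Minimums taken from the BAA terms above; the representation is hypothetical.
REQUIRED_AT_REST_BITS = 256
ALLOWED_TLS_VERSIONS = {"TLSv1.2", "TLSv1.3"}
REQUIRED_ENCRYPTED_ASSETS = {"model_weights", "embeddings", "training_datasets"}


@dataclass
class VendorAttestation:
    vendor: str
    at_rest_algorithm: str          # e.g. "AES"
    at_rest_key_bits: int           # e.g. 256
    tls_versions_enabled: Set[str]  # every protocol version the vendor still accepts
    encrypted_assets: Set[str] = field(default_factory=set)


def baa_gaps(att: VendorAttestation) -> List[str]:
    """List the ways a vendor's attested controls fall short of the BAA minimums."""
    gaps = []
    if att.at_rest_algorithm.upper() != "AES" or att.at_rest_key_bits < REQUIRED_AT_REST_BITS:
        gaps.append(f"data at rest: {att.at_rest_algorithm}-{att.at_rest_key_bits} below AES-256")
    weak = att.tls_versions_enabled - ALLOWED_TLS_VERSIONS
    if weak:
        gaps.append("weak TLS versions still enabled: " + ", ".join(sorted(weak)))
    if not att.tls_versions_enabled & ALLOWED_TLS_VERSIONS:
        gaps.append("no TLS 1.2+ support")
    missing = REQUIRED_ENCRYPTED_ASSETS - att.encrypted_assets
    if missing:
        gaps.append("unencrypted AI assets: " + ", ".join(sorted(missing)))
    return gaps


if __name__ == "__main__":
    att = VendorAttestation("ExampleAI", "AES", 256, {"TLSv1.1", "TLSv1.2"},
                            {"training_datasets", "model_weights"})
    print(baa_gaps(att))
    # ['weak TLS versions still enabled: TLSv1.1', 'unencrypted AI assets: embeddings']
```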

The BAA should also link encryption to breach notification obligations. PHI encrypted according to HHS guidance is considered "secured", reducing the likelihood of reportable breaches if encrypted data is compromised but the keys remain safe. Additionally, the agreement should restrict secondary uses of PHI, such as training generalized models without explicit consent. Require vendors to provide regular attestations, audit reports, and immediate updates about any changes to their encryption architecture.

Using Censinet for AI Vendor Risk Management

Tracking the encryption practices of many vendors can be overwhelming without the right tools. This is where platforms like Censinet RiskOps™ come into play. Designed for healthcare organizations, Censinet offers a centralized way to assess, track, and monitor third-party AI vendors' encryption controls and overall HIPAA compliance.

The platform simplifies the process by distributing standardized security questionnaires that examine vendors' encryption algorithms, key management practices, and data isolation controls. If gaps are identified - such as incomplete encryption for data at rest or weak key rotation policies - Censinet facilitates collaborative workflows to address these issues. Both parties can agree on corrective actions, set deadlines, and track progress to ensure compliance.

As Terry Grogan, CISO of Tower Health, explains:

"Censinet RiskOps allowed 3 FTEs to go back to their real jobs! Now we do a lot more risk assessments with only 2 FTEs required."

Beyond the initial onboarding process, Censinet supports continuous oversight by scheduling periodic reassessments and tracking whether vendors have implemented agreed-upon improvements. It centralizes critical evidence, such as updated certifications, revised security policies, and incident reports. The platform also generates risk dashboards and reports that show which vendors handle PHI, what encryption measures are in place, and where risks remain. This comprehensive view helps compliance teams and leadership maintain a clear understanding of their AI vendor encryption landscape over time, ensuring ongoing due diligence under HIPAA's Security Rule.


Governance and Testing for AI Encryption Compliance

Strong encryption controls are just one part of the equation for maintaining HIPAA compliance in healthcare. Effective governance and regular testing are equally critical to ensure encryption measures are functioning as intended and continue to meet security standards over time.

Governance Practices for AI Encryption

Establishing a multidisciplinary committee is key to managing AI-specific encryption risks. This team should include compliance officers, security experts, IT personnel, data scientists, and clinical stakeholders. Their responsibilities should cover everything from risk analysis to overseeing encryption controls. A detailed risk management plan should outline how each AI system handles electronic protected health information (ePHI), including storage, processing, and transmission. It should also address how encryption is applied and how any residual risks are mitigated. Clearly defined roles, such as AI system owner, data protection officer, and model security lead, ensure accountability for tasks like selecting encryption methods, managing keys, and approving vendors, all in alignment with HIPAA's administrative safeguards.

Governance should span the entire AI lifecycle. That means specifying encryption requirements during design, verifying configurations during implementation, and ensuring secure key revocation and deletion of encrypted data when systems are decommissioned. The principle of least privilege is crucial. Encrypted data, whether training data, model artifacts, or operational logs, must be segregated, and access to decryption keys should be limited to specific service accounts and authorized microservices. For instance, data scientists should access production PHI only with explicit approval, support staff should work with masked or de-identified logs, and automated AI processes should operate under narrowly defined permissions.
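Least-privilege access to decryption keys is normally enforced through KMS key policies or IAM, but the deny-by-default logic fits in a few lines. The principals, key aliases, and approval mechanism below are hypothetical examples that mirror the roles described above.

```python
from typing import Dict, Set, Tuple

# Hypothetical grants: which principals may use which key aliases to decrypt.
# Anything not listed is denied (deny by default).
DECRYPT_GRANTS: Dict[str, Set[str]] = {
    "svc-inference": {"key/model-weights"},
    "svc-training-pipeline": {"key/training-data", "key/model-weights"},
    "role-support": set(),  # works only with masked or de-identified logs
    "role-data-scientist": {"key/deidentified-data"},
}

# Explicit approvals (e.g. from an approved access request) for production PHI.
# Expiry of approvals is not modeled in this sketch.
APPROVED_EXCEPTIONS: Set[Tuple[str, str]] = set()


def can_decrypt(principal: str, key_alias: str) -> bool:
    """Allow decryption only via a standing grant or a recorded approval."""
    if key_alias in DECRYPT_GRANTS.get(principal, set()):
        return True
    return (principal, key_alias) in APPROVED_EXCEPTIONS


if __name__ == "__main__":
    print(can_decrypt("svc-inference", "key/model-weights"))        # True
    print(can_decrypt("role-data-scientist", "key/training-data"))  # False
    APPROVED_EXCEPTIONS.add(("role-data-scientist", "key/training-data"))
    print(can_decrypt("role-data-scientist", "key/training-data"))  # True
```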

Audit logging plays a vital role in governance. These logs should capture details like who accessed encrypted resources, when, from where, and why. Making these logs tamper-resistant, securely stored, and regularly reviewed ensures they can be linked to incident-response workflows, enabling quick investigations into any suspicious activity.
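One way to make audit logs tamper-evident is to chain entries with a keyed hash, so that each entry's MAC also covers the previous entry's MAC. In this minimal sketch the names are hypothetical and the key is a placeholder (a real key would live in a KMS). Each entry records who, when, from where, what, and why. The chain would complement write-once storage, not replace it.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone
from typing import Dict, List

# Hypothetical placeholder key held by the logging service.
CHAIN_KEY = b"hypothetical-audit-chain-key"
GENESIS = "0" * 64


def _mac(body: Dict) -> str:
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hmac.new(CHAIN_KEY, payload, hashlib.sha256).hexdigest()


class AuditLog:
    """Append-only log in which each entry's MAC covers the previous entry's MAC.

    Editing, reordering, or deleting an entry breaks verification of what follows.
    Truncating the tail is detectable only if the latest MAC is also stored elsewhere.
    """

    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def append(self, who: str, resource: str, action: str, source_ip: str, reason: str) -> None:
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "who": who, "resource": resource, "action": action,
            "source_ip": source_ip, "reason": reason,
            "prev_mac": self.entries[-1]["mac"] if self.entries else GENESIS,
        }
        body["mac"] = _mac(body)
        self.entries.append(body)

    def verify(self) -> bool:
        prev_mac = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "mac"}
            if body["prev_mac"] != prev_mac or not hmac.compare_digest(_mac(body), entry["mac"]):
                return False
            prev_mac = entry["mac"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("svc-inference", "key/model-weights", "decrypt", "10.0.4.12", "scheduled inference")
    log.append("jdoe", "bucket/training-data", "read", "10.0.7.3", "approved access request")
    print(log.verify())  # True
    log.entries[0]["reason"] = "edited"
    print(log.verify())  # False
```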

Testing and Validating Encryption Controls

Encryption controls need ongoing validation through both technical testing and procedural reviews. This includes penetration testing, configuration reviews, and continuous monitoring to ensure encryption policies and access controls remain effective. Regular checks should confirm that databases, storage systems, message queues, and AI orchestration tools enforce strong encryption by default. Keys should be stored securely in hardware security modules or cloud-based key management services, and no test or shadow environment should be left unencrypted through an oversight.

Automated tools and SIEM platforms can monitor the encryption status of AI data stores, services, and vendor connections. Baseline policies should guide the evaluation of both cloud-based and on-premises AI resources. Integrating these tools with vulnerability scanners and configuration management systems helps detect issues such as expired certificates, weak cipher suites, or missing encryption on new services, supporting HIPAA's ongoing risk management requirements.
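A baseline check of the kind described above might evaluate an inventory of AI data stores and endpoints. This sketch assumes a hypothetical `Resource` inventory (for example, exported from a configuration management system) and a 30-day certificate warning threshold. It uses the standard library's `ssl.cert_time_to_seconds` to parse expiry dates in the format that `getpeercert()` returns.

```python
import ssl
import time
from dataclasses import dataclass
from typing import List, Optional

WEAK_PROTOCOLS = {"SSLv3", "TLSv1", "TLSv1.1"}
CERT_WARNING_DAYS = 30  # hypothetical alerting threshold


@dataclass
class Resource:
    name: str
    environment: str               # "prod", "test", "shadow", ...
    encrypted_at_rest: bool
    tls_protocols: List[str]       # protocol versions the endpoint accepts
    cert_not_after: Optional[str]  # e.g. "Jun  1 00:00:00 2025 GMT"


def check_resource(res: Resource, now: Optional[float] = None) -> List[str]:
    """Flag baseline violations for one AI data store or service."""
    now = time.time() if now is None else now
    findings = []
    if not res.encrypted_at_rest:
        findings.append(f"{res.name} ({res.environment}): encryption at rest disabled")
    weak = WEAK_PROTOCOLS.intersection(res.tls_protocols)
    if weak:
        findings.append(f"{res.name}: weak protocols enabled: {', '.join(sorted(weak))}")
    if res.cert_not_after:
        days_left = (ssl.cert_time_to_seconds(res.cert_not_after) - now) / 86400
        if days_left < 0:
            findings.append(f"{res.name}: certificate expired")
        elif days_left < CERT_WARNING_DAYS:
            findings.append(f"{res.name}: certificate expires in {int(days_left)} days")
    return findings


if __name__ == "__main__":
    shadow = Resource("feature-store-shadow", "shadow", False,
                      ["TLSv1.1", "TLSv1.2"], "Jan 11 00:00:00 2025 GMT")
    for finding in check_resource(shadow, now=ssl.cert_time_to_seconds("Jan  1 00:00:00 2025 GMT")):
        print(finding)
```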

A structured evidence repository is essential for audits or investigations. This repository should include AI-specific risk analyses, encryption policies, architectural diagrams of encrypted data flows, preserved audit logs, test reports, copies of Business Associate Agreements (BAAs) with AI vendors, and vendor security attestations like SOC 2 or HITRUST certifications.

Censinet's Role in Ongoing Encryption Oversight

Managing third-party risks is another critical piece of the puzzle. Platforms like Censinet RiskOps™ help healthcare organizations maintain oversight by centralizing risk data for both internal and vendor-hosted AI systems that handle PHI. Censinet allows organizations to embed encryption-specific questions and evidence requests into vendor risk assessments. This ensures that partners use strong encryption for data at rest and in transit, implement secure key management practices, and meet HIPAA’s requirement to make PHI inaccessible to unauthorized users.

Censinet's dashboards and workflows address many of the governance and testing challenges healthcare organizations face. The platform enables tracking of remediation tasks when vendors or internal AI projects fall short and supports ongoing reassessments with exportable documentation for audits. Organizations can also use Censinet to create a centralized record for AI-related encryption risks, linking each AI application or vendor to its risk assessment, encryption control questionnaire, remediation plan, and supporting evidence like penetration test summaries or configuration snapshots.

Conclusion and Key Takeaways

Encryption is no longer something organizations can treat as optional for AI systems that handle PHI. The days of relying on its status as an "addressable" safeguard are coming to an end: OCR's proposed 2025 Security Rule updates would make encryption an explicit requirement and signal stricter expectations overall [5]. Healthcare organizations therefore need to ensure that every AI component interacting with PHI uses encryption that meets industry standards [4].

Why is this so critical? Proper encryption minimizes both financial and regulatory risk. If PHI is encrypted correctly and the keys are kept secure, organizations can often avoid the costly fallout of a data breach, including breach notifications, credit monitoring expenses, and hefty penalties [4]. But encryption alone won't cut it. To stay compliant, organizations must also implement strong key management, enforce least-privilege access controls, maintain detailed audit logs, and keep testing as new AI use cases emerge.

Adding to the challenge, third-party vendors introduce additional layers of complexity. Every vendor working with PHI must sign a Business Associate Agreement (BAA) that clearly outlines encryption requirements. Beyond that, they need to prove their security measures through independent certifications like SOC 2 or HITRUST [8]. Given the constant evolution of AI supply chains and model deployments, continuous monitoring and verification of these vendors are non-negotiable.

Tools like Censinet RiskOps™ can help simplify this process. By centralizing vendor assessments, tracking remediation efforts, and maintaining visibility over encryption risks, platforms like this align with the governance and ongoing risk testing needed for compliance. As Matt Christensen, Sr. Director GRC at Intermountain Health, points out:

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare."

FAQs

What are the best encryption practices for AI systems that process PHI?

The Advanced Encryption Standard (AES), typically with 256-bit keys, is the go-to choice for securing AI systems that process Protected Health Information (PHI) in line with HIPAA expectations. It is known for its strong security and is widely trusted in the healthcare sector.

Solid key management practices are equally important. These include generating unique keys from a cryptographically secure random source and storing them securely, separate from the data they protect, to block unauthorized access. Regularly updating encryption methods and performing risk assessments are also critical steps to protect sensitive patient information and maintain compliance.

How does encryption affect HIPAA breach notification requirements?

Under HIPAA, encryption is a key factor in determining whether a breach needs to be reported. If protected health information (PHI) is encrypted in line with HHS guidance and a security incident occurs, the incident typically isn't considered a reportable breach, as long as the encryption key remains secure. Encryption makes the data unreadable to anyone without the key, which significantly reduces the risk that the PHI is actually compromised.

On the other hand, if the encryption key is stolen or accessed along with the encrypted PHI, the data no longer counts as secured, and the breach generally must be reported under HIPAA's breach notification rules. By implementing strong encryption and keeping keys protected, healthcare organizations can reduce risk and simplify compliance with breach notification requirements.

What encryption requirements should be included in a Business Associate Agreement (BAA)?

A Business Associate Agreement (BAA) needs to spell out encryption requirements to ensure sensitive data is well protected. It should state that Protected Health Information (PHI) must be encrypted both at rest and in transit, using methods consistent with HHS guidance, such as AES-256 for stored data and TLS 1.2 or higher for data in transit. It should also clarify who is responsible for encryption key management and mandate regular audits to confirm adherence to encryption policies.

By including these specifics, healthcare organizations can strengthen patient data security and stay in line with HIPAA rules.