Intelligent Defense: Building AI-Native Cybersecurity Programs

Post Summary

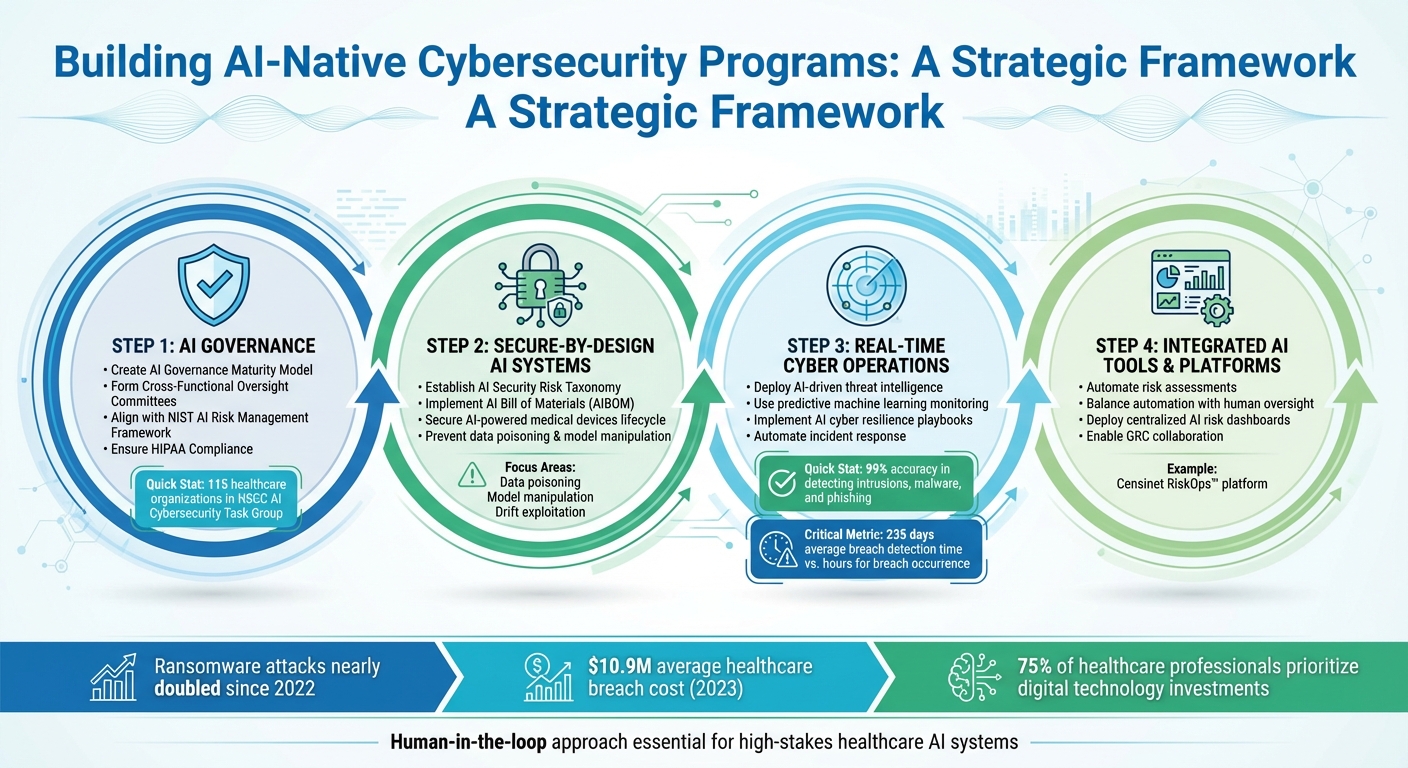

In healthcare, cyber threats are escalating due to outdated systems, connected devices, and the critical nature of patient care. Traditional defenses are no longer enough. AI offers faster responses, predictive analytics, and anomaly detection to secure sensitive data and prevent breaches. This guide breaks down how to integrate AI into healthcare cybersecurity, covering governance, risk management, secure system design, and real-time threat detection.

Key Takeaways:

- AI Governance: Establish frameworks with oversight committees and align with standards like NIST and HIPAA.

- Secure AI Design: Address risks like data poisoning and model manipulation with structured frameworks and AI-specific safeguards.

- Real-Time Threat Response: Use AI for predictive monitoring, rapid incident recovery, and automated defenses.

- Tools Like Censinet RiskOps™: Combine automation with human oversight to manage risks effectively while ensuring compliance.

AI-driven cybersecurity is reshaping how healthcare systems protect patient data and counter threats. Leaders must act now to implement these strategies and stay ahead of evolving risks.

4-Step Framework for Building AI-Native Healthcare Cybersecurity Programs

Setting Up AI Governance Frameworks

Using AI in cybersecurity without proper oversight can leave organizations vulnerable to unexpected risks. For healthcare providers, this is especially critical, as protecting patient data and meeting regulatory requirements are non-negotiable. A well-structured governance framework establishes accountability, decision-making processes, and safeguards to prevent AI systems from creating new vulnerabilities. Below are some key steps to building an effective AI governance structure.

Creating an AI Governance Maturity Model

Start by evaluating your current governance capabilities with a maturity model to pinpoint areas needing improvement. This means cataloging all AI systems, detailing their purposes, the data they access, and the risks they carry. Each AI tool should be classified on an autonomy scale, which matches the required level of human oversight to the system’s risk. For instance, an AI tool that flags unusual login attempts might need minimal supervision, while one automating decisions about patient access controls would demand closer monitoring.

Forming Cross-Functional AI Oversight Committees

AI oversight requires input from diverse perspectives. Cross-functional committees should include IT security experts, compliance officers, legal advisors, and clinical staff to ensure AI implementation is examined from all angles. A notable example is the AI Cybersecurity Task Group formed by the Health Sector Coordinating Council (HSCC) Cybersecurity Working Group in October 2024. Representing 115 healthcare organizations, this group split into five specialized subgroups: Education and Enablement, Cyber Operations and Defense, Governance, Secure by Design Medical, and Third-Party AI Risk and Supply Chain Transparency. These teams worked collaboratively to develop actionable strategies for managing AI-related cybersecurity risks [3]. This model highlights the importance of combining technical expertise with insights from those familiar with patient care and regulatory demands.

Aligning with NIST AI Risk Management Framework and HIPAA

Once internal oversight structures are in place, aligning with established external standards strengthens your governance framework. The NIST AI Risk Management Framework provides a systematic method for identifying and addressing AI-specific risks, while HIPAA ensures strict protection of patient data. On December 4, 2025, the U.S. Department of Health and Human Services (HHS) released its AI Strategy, emphasizing governance and risk management as one of its five central pillars [5]. This reflects the growing regulatory emphasis on AI oversight. Your governance plan should clearly assign roles and responsibilities for every phase of the AI lifecycle, from deployment to ongoing monitoring and updates. It should also document data handling protocols and enforce security measures like multi-factor authentication and encryption for data both in transit and at rest [1].

Building Secure-by-Design AI Systems

When it comes to developing AI systems for healthcare, security needs to be baked in from the start. Treating cybersecurity as an afterthought can leave systems vulnerable to breaches that jeopardize patient data or disrupt critical medical services. A secure-by-design approach ensures that security measures are integrated into every stage of the AI lifecycle - development, deployment, and even retirement. The first step in this process? Establishing a clear framework to identify and address risks effectively.

Creating an AI Security Risk Taxonomy

Healthcare organizations need a structured way to pinpoint and categorize security risks unique to AI systems. That’s where an AI Security Risk Taxonomy comes in. This framework breaks down risks into specific categories, making it easier to evaluate threats and prioritize defenses. For example, it should cover issues like:

- Data poisoning: When attackers corrupt training datasets to manipulate how an AI system behaves.

- Model manipulation: Direct tampering with algorithms to alter their functioning.

- Drift exploitation: Exploiting performance degradation that can occur over time.

By defining these risks upfront, organizations can craft targeted defenses rather than relying on generic cybersecurity measures that might overlook these AI-specific vulnerabilities. This taxonomy also guides the continuous security protocols needed for AI-powered medical devices.

Securing AI-Powered Medical Devices Throughout Their Lifecycle

AI-enabled medical devices demand constant security attention, starting from their design phase all the way to their eventual retirement. This requires collaboration across departments to ensure AI-specific risks are factored into device management processes. One effective strategy is adopting AI Bill of Materials (AIBOM) and Trusted AI BOM (TAIBOM) frameworks. These tools provide greater transparency and traceability across the AI supply chain, helping to build trust in the system’s integrity.

Another layer of protection comes from input filtering, which screens incoming prompts to detect and block malicious intent before it can affect the system. Together, these measures create a more secure environment for AI-powered healthcare solutions.

Preventing Data Poisoning and Model Manipulation

Keeping AI models safe from tampering involves a mix of technical safeguards and ongoing oversight. Following established guidelines, such as those from the FDA, NIST AI Risk Management Framework, and CISA, can help organizations align their security practices with industry standards. At the same time, maintaining rigorous human oversight is essential, particularly for high-stakes systems. This oversight helps address risks like bias, ensures accurate outcomes, and strengthens overall security.

Using AI for Real-Time Cyber Operations

Once AI systems are secured, the next step is deploying them to counter active threats. Hackers can breach data within hours, yet it takes organizations an average of 235 days to detect such intrusions [6]. A real-time approach to cyber defense is essential, and this is where specialized AI applications in threat detection come into play.

AI-Driven Threat Intelligence for Healthcare

AI has proven to be a powerful tool for processing threat feeds, logs, and network data to identify emerging risks [6]. By leveraging natural language processing (NLP), AI can analyze unstructured data from sources like security bulletins, dark web discussions, and vulnerability reports. This analysis helps inform strategies, such as those developed by the Health Sector Coordinating Council (HSCC), enabling a shift from reactive to proactive defense measures [3][6].

Commercial cybersecurity models powered by AI have achieved over 99% accuracy in detecting intrusions, malware, and phishing attempts [6]. The HSCC is also working on AI-Driven Clinical Workflow Threat Intelligence Playbooks. These playbooks provide structured methods to identify and respond to AI-related cyber threats, including rapid containment of compromised AI models [3].

Proactive Monitoring with Predictive Machine Learning

Predictive machine learning models take a forward-looking approach by analyzing historical data and current trends to anticipate potential security breaches before they occur. These models establish baselines for users, systems, and IoT/OT devices, flagging anomalies that could signal insider threats or system compromises. Automated vulnerability management tools then prioritize critical patches to address these risks [2].

The HSCC's Cyber Operations and Defense subgroup is developing guidance for predictive machine learning systems and embedded device AI, with plans to release it by 2026. This guidance will include operational safeguards and address potential risk factors [3]. Because these models continuously learn from new data, they enable real-time updates to security protocols, laying the foundation for swift recovery strategies [2].

Accelerating Incident Recovery with AI Cyber Resilience Playbooks

Quick action is vital during a cyber incident. Delayed responses can cost millions, while rapid containment can significantly reduce damage [4]. AI resilience playbooks are designed to automate critical containment actions, such as quarantining compromised systems, halting ransomware attacks, and disabling suspicious accounts [2].

The HSCC has assembled an AI Cybersecurity Task Group, comprising 115 healthcare organizations, to create an AI Cyber Resilience and Incident Recovery Playbook [3]. These playbooks integrate AI-specific risk assessments into existing frameworks and outline tailored responses for threats like model poisoning, data corruption, and adversarial attacks. As Tapan Mehta, Healthcare and Pharma Life Sciences Executive, Strategy and GTM, notes:

"Security needs to be automated and real-time in the era of AI. As we face new challenges and zero-day threats, we need to innovate new solutions at a much faster pace." [2]

These playbooks emphasize continuous monitoring of AI systems, recovery of compromised models, and maintaining secure, verifiable backups. They also ensure compliance with regulations like HIPAA, critical for the healthcare sector [3].

sbb-itb-535baee

Integrating Censinet AI Tools into Cybersecurity Programs

Healthcare organizations today need AI-powered solutions that can genuinely lower risks. The Censinet RiskOps™ platform answers this call by blending automation with human judgment, creating a foundation for scalable and secure AI-driven cybersecurity programs. This combination enables organizations to streamline risk assessments while ensuring expert oversight remains central.

Automating Risk Assessments with Censinet RiskOps™

Traditional third-party risk assessments are time-consuming and resource-intensive. Enter Censinet AI™, which allows vendors to complete security questionnaires in just seconds. Beyond that, it efficiently summarizes vendor-provided evidence, logs integration details, tracks fourth-party exposures, and generates concise, actionable risk reports. This speed and efficiency help healthcare organizations manage risks more effectively, even when dealing with numerous vendors. While automation takes care of repetitive tasks, human oversight remains key to ensuring accuracy and trust.

Balancing Automation and Human Oversight with Censinet AI

Censinet RiskOps employs a "human-in-the-loop" approach, ensuring that while automation handles routine processes, critical decisions remain in human hands. Risk teams can configure rules, validate evidence, draft policies, and oversee risk mitigation plans. This balance is especially crucial in healthcare, where compromised medical devices or systems can have severe consequences. As Tapan Mehta, Healthcare and Pharma Life Sciences Executive, Strategy and GTM, explains:

"For example, when a medical device or system is hacked, it can lead to data loss and disrupt critical operations, potentially endangering lives. Therefore, the accuracy of AI is extremely crucial" [2].

By combining human oversight with autonomous automation, the platform helps healthcare organizations scale operations while adhering to HIPAA, the NIST AI Risk Management Framework, and other regulatory standards, ensuring governance remains robust and compliant.

Centralized AI Risk Dashboards for GRC Collaboration

Censinet RiskOps acts as a centralized hub for AI governance, streamlining collaboration across governance, risk, and compliance (GRC) teams. Think of it as air traffic control for AI risk: the platform routes assessment findings and tasks to the appropriate stakeholders for review and approval. Through an intuitive AI risk dashboard, it consolidates real-time data, policies, risks, and tasks into one easily accessible location. This unified system promotes continuous oversight, accountability, and coordination, eliminating the silos that often hinder effective AI governance.

Key Takeaways for Building AI-Native Cybersecurity Programs

How AI Improves Healthcare Cybersecurity

AI is reshaping healthcare cybersecurity by introducing advanced threat detection, automated responses, and predictive tools that align with a proactive, AI-driven defense approach [2]. These technologies process massive amounts of data in real time, uncovering anomalies and enabling adaptive security measures to counter evolving threats. With AI, healthcare organizations can strengthen identity verification processes, enhance encryption methods, and improve how they manage third-party risks [1][3]. These advancements provide a solid foundation for actionable strategies healthcare leaders need to adopt.

Action Steps for Healthcare Leaders

To begin, healthcare leaders should establish governance frameworks that combine strong oversight, cutting-edge technology, and ongoing monitoring [2]. Compliance with HIPAA regulations and emerging AI-specific standards is essential to build trust and avoid penalties. The data paints a concerning picture: ransomware attacks have nearly doubled since 2022, healthcare breach costs hit $10.9 million in 2023, and while 75% of healthcare professionals prioritize digital technology investments, many lack the resources to prevent breaches [1]. Leaders should focus on continuous risk monitoring and training staff to use AI-enabled security tools effectively. These steps will need to evolve as new AI innovations continue to shape healthcare cybersecurity.

Future Trends in AI and Healthcare Cybersecurity

AI is driving significant advancements in healthcare cybersecurity, with organizations increasingly adopting proactive defense strategies and integrating AI seamlessly into their systems [1]. However, challenges remain, such as protecting sensitive medical data, addressing outdated systems, meeting regulatory requirements, and ensuring patient privacy [2][7]. The future promises advancements in predictive threat intelligence, automated incident response, and scalable AI-powered risk assessment tools. Organizations that invest in AI-focused security measures now will be better equipped to tackle sophisticated cyber threats targeting healthcare systems in the years to come.

FAQs

How does AI enhance cybersecurity in healthcare?

AI is transforming cybersecurity in healthcare by monitoring network traffic, user behavior, and system activity in real time to spot unusual patterns that could indicate a cyberattack. This means it can catch threats that traditional methods often overlook, like advanced malware or insider breaches.

With the help of predictive analytics, AI can anticipate weaknesses before they become problems, allowing organizations to address them ahead of time. It also automates threat responses, making containment quicker and reducing potential damage. By pulling data from various sources, AI offers deeper insights into risks, helping healthcare providers bolster their defenses and safeguard sensitive patient data more effectively.

What are the essential elements of a strong AI governance framework for cybersecurity?

A solid AI governance framework is essential for ensuring the responsible and effective use of AI in cybersecurity. Key elements include keeping a comprehensive inventory of all AI systems, clearly outlining roles and responsibilities, and establishing policies that encourage ethical and compliant AI practices. Regular risk assessments, ongoing monitoring for performance and biases, and well-prepared incident response plans are also critical.

When it comes to healthcare, the framework must tackle unique challenges like safeguarding sensitive patient data. This requires collaboration across IT, clinical, and legal teams to address these complexities. Staying aligned with regulations such as HIPAA and routinely reviewing AI tools to ensure they meet these standards are crucial steps for maintaining both trust and security.

What steps can healthcare organizations take to protect AI models from manipulation?

Healthcare organizations can protect AI models from manipulation by prioritizing high-quality data and ensuring data integrity throughout the AI's lifecycle. Keeping a close eye on AI systems and using tools for anomaly detection can help spot unusual activity or potential threats early on.

Additional safeguards include implementing adversarial training techniques to strengthen models against attacks and establishing strict governance and validation protocols based on frameworks like NIST AI RMF. Leveraging explainable AI (XAI) promotes transparency, while layered security measures such as encryption, network segmentation, and access controls help mitigate risks like model poisoning or data tampering.