ISO 14971 and AI in Medical Device Risk Management

Post Summary

AI in healthcare is transforming patient care, but it also introduces complex risks. ISO 14971:2019 provides a framework for managing these risks, but AI-enabled devices require additional considerations due to challenges like data bias, model drift, and cybersecurity vulnerabilities. Here's what you need to know:

- ISO 14971 Overview: A global standard for identifying, assessing, and controlling risks in medical devices, including AI-driven tools.

- AI-Specific Challenges: Issues like evolving models, biased training data, and unclear decision-making require tailored risk management.

- Key Stakeholders: IT/security leaders, compliance officers, and clinical teams must collaborate to ensure safe AI implementation.

- Regulatory Alignment: The FDA and Health Canada emphasize ISO 14971 compliance for AI devices, alongside Good Machine Learning Practice (GMLP) principles.

- Risk Controls: Include robust data governance, continuous monitoring, and human oversight to address AI-specific hazards.

To manage AI risks effectively, healthcare organizations should integrate ISO 14971 principles with AI-focused guidance like AAMI TIR 34971:2023. Platforms like Censinet RiskOps™ can streamline this process by automating risk assessments, tracking updates, and ensuring compliance.

How ISO 14971 Applies to AI Medical Devices

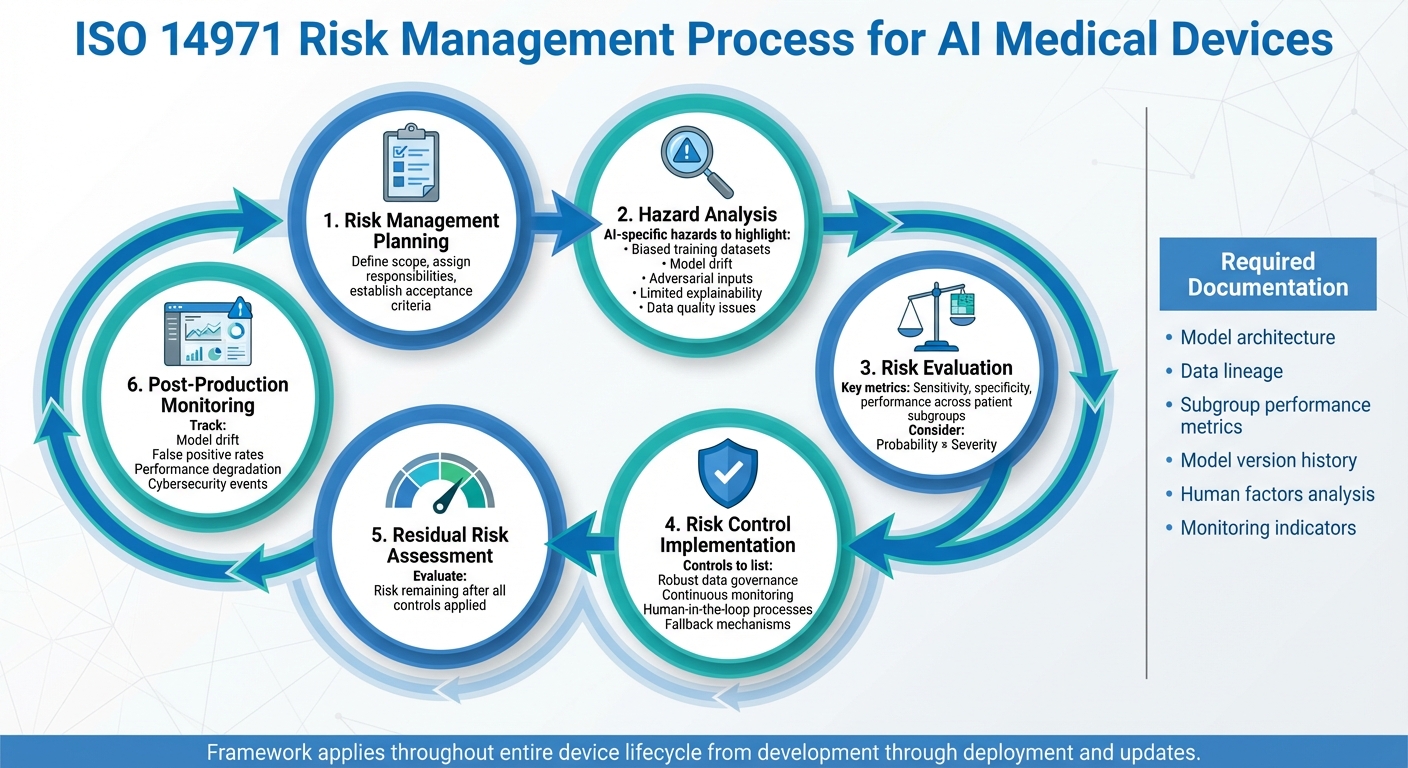

ISO 14971 Risk Management Process for AI Medical Devices

ISO 14971:2019 addresses software as a medical device (SaMD) and in vitro diagnostic tools, making it applicable to AI-driven medical devices as well [4]. While the standard doesn't outline specific hazards or controls for AI, its lifecycle approach provides a structured framework. This allows manufacturers and healthcare providers to adapt the process to tackle challenges unique to machine learning systems, such as bias or model drift.

The ISO 14971 Risk Management Process

ISO 14971 follows a lifecycle approach: planning, hazard analysis (which, for AI devices, must cover hazards such as biased datasets and model drift), risk evaluation, control implementation, residual risk assessment, and post-production monitoring [4][5].

For AI-based devices, every step must account for risks tied to data, algorithms, and systems. During hazard analysis, manufacturers identify AI-specific issues such as biased training data, adversarial inputs, model drift, or limited explainability. Risk evaluation should factor in probabilistic metrics like sensitivity and specificity, which may vary across U.S. patient groups. Controls might include robust data governance, continuous monitoring, fallback mechanisms for low-confidence scenarios, or human-in-the-loop processes [1][2][7].
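
To make subgroup-level risk evaluation concrete, the sketch below computes sensitivity and specificity separately for each patient subgroup from labeled validation results. It assumes binary labels (1 = condition present) and a hypothetical `(subgroup, y_true, y_pred)` record format. A real evaluation would also report confidence intervals and sample sizes, because small subgroups produce unstable estimates.

```python
from collections import defaultdict


def subgroup_metrics(records):
    """Compute sensitivity and specificity for each subgroup.

    records: iterable of (subgroup, y_true, y_pred) with binary labels,
    where 1 means the condition is present (or predicted present).
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        if y_true == 1:
            c["tp" if y_pred == 1 else "fn"] += 1
        else:
            c["tn" if y_pred == 0 else "fp"] += 1

    results = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        results[group] = {
            # None signals an undefined metric (no positive or negative cases)
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
            "n": positives + negatives,
        }
    return results


# Illustrative records only; not real validation data.
records = [
    ("pediatric", 1, 1), ("pediatric", 1, 0), ("pediatric", 0, 0),
    ("adult", 1, 1), ("adult", 0, 1), ("adult", 0, 0),
]
metrics = subgroup_metrics(records)
```

In this toy data the pediatric subgroup has a sensitivity of 0.5 versus 1.0 for adults; a gap like that would feed directly into hazard identification and risk evaluation.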

ISO 14971 also requires a comprehensive risk management file. For AI devices, this includes documentation on architecture, data lineage, subgroup performance metrics, model version history, assumptions, human factors, and monitoring indicators like drift or false positive rates [1][2][7].

Key Terms in ISO 14971

ISO 14971 defines several terms that are crucial for AI systems. A hazard is a potential source of harm, while harm is injury or damage to the health of people, or damage to property or the environment. Risk is the combination of the probability of occurrence of harm and the severity of that harm, and residual risk is the risk remaining after risk control measures have been implemented [4][5].
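
ISO 14971 leaves the criteria for risk acceptability to the manufacturer's risk management plan, and many teams encode those criteria as a severity-by-probability matrix. The sketch below assumes hypothetical five-level scales and illustrative score thresholds. Multiplying ordinal ranks is a common convention but a simplification, and none of these values are prescribed by the standard.

```python
from enum import IntEnum


class Severity(IntEnum):
    NEGLIGIBLE = 1
    MINOR = 2
    SERIOUS = 3
    CRITICAL = 4
    CATASTROPHIC = 5


class Probability(IntEnum):
    IMPROBABLE = 1
    REMOTE = 2
    OCCASIONAL = 3
    PROBABLE = 4
    FREQUENT = 5


# Hypothetical thresholds; real criteria come from the risk management plan.
ACCEPTABLE_MAX_SCORE = 4
UNACCEPTABLE_MIN_SCORE = 12


def evaluate_risk(severity: Severity, probability: Probability) -> str:
    """Classify a risk by its severity x probability score."""
    score = int(severity) * int(probability)
    if score <= ACCEPTABLE_MAX_SCORE:
        return "acceptable"
    if score >= UNACCEPTABLE_MIN_SCORE:
        return "unacceptable"
    # Middle band: further risk control or a documented benefit-risk analysis
    return "further review"


# Initial risk of a missed finding, then the residual risk after a control
# (for example, mandatory human review) lowers the probability of harm.
initial = evaluate_risk(Severity.CRITICAL, Probability.OCCASIONAL)
residual = evaluate_risk(Severity.CRITICAL, Probability.IMPROBABLE)
```

Here the initial risk scores 12 ("unacceptable") and the residual risk scores 4 ("acceptable"): the control changes the probability, not the severity, of the harm.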

For AI systems, these concepts stay the same, but the range of hazards widens to include algorithmic bias and data drift. Hazards might stem from:

- Data-level issues: mislabeled training data, non-representative datasets, or data drift.

- Model-level challenges: overfitting, poor generalizability across clinical sites, sensitivity to small input changes, or lack of explainability.

- System-level risks: integration problems with electronic health records, unclear user interfaces, cybersecurity vulnerabilities, or software update failures [1][2][7].

A hazardous situation arises when people are exposed to one or more of these hazards. For example, a clinician relying solely on an AI recommendation that is biased against certain populations might delay a cancer diagnosis or prescribe incorrect medication. Risk estimation must consider how often such failures occur in U.S. clinical settings and how severe their impact could be. After applying controls like mandatory human review, decision support with clear explanations, and ongoing monitoring, the residual risk reflects the remaining likelihood and severity of AI-related errors [1][2][7].
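
The scenario above can be quantified with the P1 x P2 decomposition often used with ISO 14971 (see its informative annex on risk concepts and ISO/TR 24971): P1 is the probability that a hazardous situation occurs, and P2 is the probability that the situation leads to harm. All numbers below are hypothetical, chosen only to show how a human-review control lowers P2.

```python
def probability_of_harm(p1: float, p2: float) -> float:
    """P(harm) = P1 x P2.

    p1: probability that the hazardous situation occurs
        (e.g. a clinician receives a false-negative AI output).
    p2: probability that the hazardous situation leads to harm.
    """
    for p in (p1, p2):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p1 * p2


# Hypothetical inputs for illustration only.
p1 = 0.02                # share of cases where the model misses the finding
p2_uncontrolled = 0.5    # chance the miss goes unnoticed and causes harm
review_catch_rate = 0.9  # share of misses caught by mandatory human review

before = probability_of_harm(p1, p2_uncontrolled)
after = probability_of_harm(p1, p2_uncontrolled * (1 - review_catch_rate))
```

With these assumed inputs the probability of harm falls from about 0.01 to about 0.001. The estimate is only as credible as the evidence behind the catch rate, which is why human-review controls themselves need verification.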

Using ISO 14971 for Software and AI-Based Devices

The risk management process outlined in ISO 14971 applies equally to software-based technologies and hardware devices [4]. For traditional SaMD, risks often involve specification errors, bugs, data integrity issues, cybersecurity threats, and usability problems [1][5].

AI-integrated software, however, introduces unique challenges due to evolving model behavior and context-dependent performance. Effective controls must include robust data curation, retraining protocols, and continuous monitoring [1][2][7]. Communicating uncertainty and confidence levels to clinicians also becomes critical, and those confidence levels are only trustworthy when the model is built on high-quality, representative data and watched for performance degradation.

Manufacturers need to embed AI lifecycle controls - spanning data curation, training, validation, deployment, monitoring, and change management - into their ISO 14971 processes. This aligns with FDA expectations for AI/ML SaMD, which emphasize predefined change control plans and post-market surveillance [3][6]. The FDA's draft guidance on AI-enabled devices references ANSI/AAMI/ISO 14971 as the standard for risk management throughout the device lifecycle [6]. Additionally, the FDA's 2024 Quality Management System Regulation (QMSR) harmonized U.S. QMS requirements with ISO 13485, further aligning risk management practices with ISO 14971:2019 [8].

Two guidance documents help tailor ISO 14971 for AI systems. AAMI TIR 34971:2023 (also published as BS/AAMI 34971:2023) provides methods for identifying machine learning-specific hazards and integrating them into risk management [1][2][9]. Similarly, ISO/TS 24971-2, still in development, is intended to offer structured advice on adapting activities such as data management, training, validation, and monitoring for ML-enabled devices [7]. By mapping ISO 14971 steps to these AI-focused recommendations, manufacturers can create risk tables, define acceptance criteria, and develop post-market monitoring plans that meet FDA expectations.

Healthcare organizations can also apply ISO 14971 principles when assessing AI tools for clinical use. While primarily aimed at manufacturers, the standard's concepts can guide hospital vendor risk assessments and procurement. Identifying hazards in clinical workflows, evaluating residual risk, and monitoring real-world performance can ensure safer AI adoption. Tools like Censinet RiskOps™ help streamline these practices by standardizing risk assessments for protected health information (PHI), clinical applications, and medical devices, including AI.

Risks Specific to AI Medical Devices

AI medical devices come with a unique set of risks that go beyond the typical hardware and software concerns. These risks arise from how machine learning systems handle data, generate predictions, and fit into clinical workflows. While ISO 14971 provides a foundational framework for managing these hazards, addressing the specific challenges tied to AI technology requires additional focus. Manufacturers and healthcare organizations must proactively identify and mitigate these AI-related risks to ensure patient safety.

Data Quality and Bias Risks

One of the biggest challenges with AI medical devices lies in the quality of the training data. If the datasets used to train these systems contain inaccurate or incomplete information, the resulting predictions may be flawed - leading to consequences like missed diagnoses or false alarms. What makes this issue even trickier is that these flaws might not show up during limited testing, only becoming apparent when the device is in actual clinical use.

Another major concern is non-representative training data, which can result in uneven performance across different patient populations. For example, an AI diagnostic tool trained primarily on data from academic medical centers might perform well for those specific demographics but falter when applied to patients with different ages, ethnicities, or medical conditions commonly found in U.S. community hospitals. Studies in radiology and dermatology have shown that AI tools often perform worse when tested on external datasets compared to internal ones, underscoring how dataset bias can directly jeopardize patient safety.

To address these risks, manufacturers should implement rigorous data curation practices, measure performance across demographic subgroups, conduct independent audits, and regularly revalidate the system to identify gaps. Healthcare organizations should also demand evidence from vendors showing that the AI device performs consistently across diverse U.S. patient populations and that the training data was carefully curated to reduce bias.
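
One simple way to act on the data-curation advice above is to compare each subgroup's share of the training data with its share of the intended U.S. patient population and to flag large shortfalls. The sketch below assumes hypothetical age bands, target shares, and a 0.8 ratio cutoff. The appropriate reference population and tolerance are judgment calls that belong in the risk management file.

```python
def underrepresented_groups(train_counts, target_shares, min_ratio=0.8):
    """Return {group: ratio} for groups whose training share falls below
    min_ratio times their share of the intended patient population.

    train_counts: {group: number of training examples}
    target_shares: {group: expected fraction of the target population}
    """
    total = sum(train_counts.values())
    flagged = {}
    for group, expected in target_shares.items():
        if expected <= 0:
            continue  # groups absent from the target population are not checked
        observed = train_counts.get(group, 0) / total if total else 0.0
        ratio = observed / expected
        if ratio < min_ratio:
            flagged[group] = round(ratio, 2)
    return flagged


# Hypothetical training counts and target-population shares.
train_counts = {"18-39": 500, "40-64": 400, "65+": 100}
target_shares = {"18-39": 0.30, "40-64": 0.40, "65+": 0.30}
flagged = underrepresented_groups(train_counts, target_shares)
```

In this example only the 65+ band is flagged, at about a third of its expected share. Over-representation, as with the 18-39 band here, is not flagged, although it may still warrant review.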

Algorithm Performance Risks

AI models face challenges like overfitting, drifting accuracy, and misinterpreting changes in input data. Overfitting happens when a model excels on the training data but struggles to generalize to new clinical environments, imaging devices, or patient groups. Model drift occurs when the model's accuracy declines over time due to shifts in clinical practices, patient demographics, or technology. Similarly, data drift refers to changes in input data - like updated imaging protocols or new electronic health record templates - that the model wasn’t trained to handle.

ISO 14971 emphasizes translating these performance risks into specific hazards. For example, a potential hazard might be "reduced sensitivity in detecting pneumonia in pediatric patients at new clinical sites" or "increased false positives after switching to a different CT scanner model." To manage these risks, manufacturers can use strategies like cross-site validation, locked models with controlled update policies, predefined protocols for model changes, and continuous performance monitoring dashboards. For adaptive or self-learning systems, any post-deployment updates must include a thorough reassessment of risks, updated documentation, and compliance with FDA guidelines for AI/ML-based Software as a Medical Device (SaMD).
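
Continuous monitoring for data drift can start with a simple statistic such as the population stability index (PSI). PSI compares the distribution of an input feature at deployment with its distribution in the validation data. This minimal sketch assumes fixed, shared bin edges and hypothetical feature values. The thresholds often quoted (below about 0.1 is stable, above about 0.25 is significant) are industry rules of thumb, not regulatory criteria.

```python
import bisect
import math


def _bin_shares(values, edges, eps=1e-6):
    """Fraction of values in each bin; out-of-range values go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), len(counts) - 1)
        counts[idx] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no values to bin")
    # eps keeps empty bins from producing log(0) or division by zero
    return [max(c / total, eps) for c in counts]


def population_stability_index(reference, current, edges):
    """PSI = sum over bins of (cur - ref) * ln(cur / ref)."""
    ref = _bin_shares(reference, edges)
    cur = _bin_shares(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical feature values (e.g. a normalized image-intensity statistic).
edges = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
reference = [0.1, 0.3, 0.5, 0.7, 0.9] * 20
shifted = [0.7, 0.9] * 50

psi_same = population_stability_index(reference, reference, edges)
psi_shifted = population_stability_index(reference, shifted, edges)
```

Identical distributions give a PSI of zero. The shifted sample, which has moved entirely into the upper bins, scores far above 0.25, a signal to trigger the cross-site validation and risk reassessment described above.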

Usability and Cybersecurity Risks

AI medical devices also introduce usability challenges, particularly in how clinicians interpret and act on the system's outputs. Automation bias, where clinicians over-rely on AI recommendations, can lead to missed diagnoses if they fail to question incorrect predictions. On the flip side, unclear or overly complex interfaces can result in under-trust or alert fatigue, causing clinicians to ignore valuable insights. Poor workflow integration further increases the likelihood of misuse.

Cybersecurity threats also present significant risks to patient safety. Adversarial attacks, where malicious inputs trick the AI into making incorrect predictions, and data poisoning, where training or update data is intentionally manipulated to produce unsafe behavior, are serious concerns. Traditional cybersecurity issues, like unauthorized access to protected health information (PHI) or system outages disrupting clinical workflows, also apply. Regulators increasingly expect these cybersecurity threats to be treated as safety hazards within ISO 14971 risk management, not just as IT issues.

To mitigate these risks, manufacturers should implement controls like secure data pipelines, robust authentication systems, input validation, anomaly detection, and protections against adversarial inputs. Secure logging can also aid forensic investigations. Healthcare organizations can incorporate AI device cybersecurity into broader risk management efforts, conducting third-party assessments and leveraging tools like Censinet RiskOps™ to streamline vendor risk evaluations.
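
Two of the controls listed above, input validation and protection of the model itself against tampering, can be sketched in a few lines. The field names and limits in `INPUT_LIMITS` are hypothetical, and the digest check assumes that the manufacturer records a SHA-256 hash of each released model artifact. Range checks catch malformed or out-of-scope inputs, but on their own they do not stop adversarial examples, which can look perfectly valid.

```python
import hashlib
import hmac

# Hypothetical input limits derived from the device's validated input space.
INPUT_LIMITS = {
    "patient_age_years": (0, 120),
    "pixel_spacing_mm": (0.1, 5.0),
}


def verify_model_artifact(artifact: bytes, expected_sha256: str) -> bool:
    """Return True only if the model bytes match the digest recorded at release."""
    actual = hashlib.sha256(artifact).hexdigest()
    # Constant-time comparison of the two hex digests
    return hmac.compare_digest(actual, expected_sha256)


def validate_input(features: dict) -> list:
    """Return a list of problems; an empty list means the input may be scored."""
    problems = []
    for name, (low, high) in INPUT_LIMITS.items():
        value = features.get(name)
        if value is None:
            problems.append(f"missing {name}")
        elif not low <= value <= high:
            problems.append(f"{name}={value} outside [{low}, {high}]")
    return problems


released = b"model-weights-v1"  # stand-in for the real artifact bytes
digest = hashlib.sha256(released).hexdigest()
```

A deployment would refuse to load a model that fails `verify_model_artifact` and would route inputs with a non-empty `validate_input` result away from automated scoring. Logging those rejections also supports the forensic investigations mentioned above.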

These considerations highlight the need for tailored AI-specific guidance within the ISO 14971 framework to address the unique challenges posed by AI medical devices effectively.

Adding AI-Specific Guidance to ISO 14971

Building on the risk management principles of ISO 14971, this section covers how the framework can be adapted for AI-enabled medical devices. While ISO 14971:2019 provides a solid foundation for managing risks in medical devices, AI systems introduce unique challenges that require additional standards, updated regulations, and more detailed documentation, particularly around data quality, model behavior, and the dynamic nature of AI systems.

AI Standards and Technical Reports

New technical reports and standards now help apply ISO 14971 to AI-driven medical devices. For instance, AAMI TIR 34971:2023 (also published as BS/AAMI 34971:2023) is regarded as a leading resource for identifying and classifying risks in machine learning (ML)-enabled devices [1]. It provides practical checklists to address AI-specific hazards like dataset shifts, model drift, bias, adversarial inputs, and explainability issues, and it ties these hazards to controls such as data governance protocols, fairness evaluations, and human oversight mechanisms.

Another important development is ISO/TS 24971-2, which is being developed to guide the application of ISO 14971:2019 specifically to ML-enabled devices. This technical specification emphasizes structured approaches, such as validating training, validation, and test datasets as part of risk control verification. It also highlights the need to update post-market surveillance processes to monitor model performance over time and across diverse patient groups.

Complementary standards like ISO/IEC 23053 (a framework for ML-based AI systems) and ISO/IEC 5259-2 (defining data quality attributes like accuracy and completeness) further support the integration of AI-specific activities into traditional risk management workflows. Incorporating these standards into templates, procedures, and documentation ensures AI-specific requirements are seamlessly managed alongside those for conventional devices.
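
As an illustration of measuring a data quality attribute, the sketch below computes a simple completeness ratio (the share of records with a non-empty value) for each field and lists the fields that fall short of a required level. It is a minimal example with hypothetical field names and a hypothetical 0.95 threshold. It does not reproduce the measure definitions in ISO/IEC 5259-2.

```python
def field_completeness(rows, fields):
    """Share of rows with a non-missing, non-empty value for each field."""
    n = len(rows)
    return {
        f: (sum(1 for r in rows if r.get(f) not in (None, "")) / n if n else 0.0)
        for f in fields
    }


def incomplete_fields(rows, fields, required=0.95):
    """Fields whose completeness is below the required level, sorted by name."""
    scores = field_completeness(rows, fields)
    return sorted(f for f, score in scores.items() if score < required)


# Hypothetical de-identified training records.
rows = [
    {"age": 50, "sex": "F", "label": 1},
    {"age": None, "sex": "M", "label": 0},
    {"age": 61, "sex": "", "label": 1},
    {"age": 70, "label": 0},
]
scores = field_completeness(rows, ["age", "sex", "label"])
```

A label value of 0 counts as present, so only genuinely missing or empty entries lower the score. Results like these can be stored with the dataset lineage records as evidence for data quality controls.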

FDA and Health Canada Requirements for AI Medical Devices

Regulatory bodies, particularly in the U.S. and Canada, have introduced guidance to enhance risk management for AI-enabled devices. The FDA's Good Machine Learning Practice (GMLP) principles emphasize the importance of a robust quality management system, risk-based planning, and ongoing performance monitoring throughout a product's lifecycle [3]. The FDA's draft guidance, "Artificial Intelligence-Enabled Device Software Functions: Lifecycle Management and Marketing Submission Recommendations," explicitly incorporates ANSI/AAMI/ISO 14971 as part of its expectations.

These guidelines stress the need to identify AI-specific hazards early, evaluate safety and performance using representative clinical data, and document risk control measures like human-in-the-loop mechanisms. Post-market monitoring is also critical to detect performance drift and manage model updates. Key checkpoints include GMLP design reviews, pre-deployment risk–benefit evaluations, formal change control processes for model updates, and regular performance audits using localized U.S. data.

Similarly, Health Canada's guidance for ML-enabled devices underscores the importance of lifecycle risk management. Every stage - from data collection and model design to clinical validation and post-market surveillance - should include AI-specific hazard analyses and documented controls. By aligning documentation practices between the FDA and Health Canada, organizations can streamline the creation of a single ISO 14971 risk management file for regulatory submissions and ongoing compliance in both regions.

Documentation and Traceability for AI Devices

Thorough documentation is critical for AI-enabled medical devices, ensuring traceability throughout the model's lifecycle. Beyond standard ISO 14971 requirements, AI devices demand additional records, such as:

- Dataset lineage, including sources, collection dates, inclusion/exclusion criteria, and labeling processes.

- Assessments of dataset quality and bias.

- Details of model architecture, training configurations, and version histories.

- Change logs that connect updates to corresponding risk analyses and validation reports.

In U.S. healthcare settings, documentation should also include deployment-specific details, like which model versions are active at each site, decision thresholds, integration points with electronic health records, and records of local retraining or adaptations. This level of traceability is essential for linking safety issues to specific data or model configurations, aiding both regulatory compliance and internal investigations.
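
The traceability described above amounts to linking each model version to its data, risk analysis, and validation evidence, and linking each site to the version it runs. The record types, field names, and identifiers below are hypothetical. They show how a safety signal at one site can be traced back to a specific model version and its risk documentation in a single lookup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    version: str
    dataset_id: str             # points to dataset lineage records
    risk_analysis_ref: str      # risk management file entry for this version
    validation_report_ref: str


@dataclass(frozen=True)
class SiteDeployment:
    site: str
    model_version: str
    decision_threshold: float
    ehr_integration: str


def trace_issue(site, deployments, versions):
    """Given the site where an issue surfaced, return the linked records."""
    deployment = next((d for d in deployments if d.site == site), None)
    if deployment is None:
        raise KeyError(site)
    mv = versions[deployment.model_version]
    other_sites = sorted(
        d.site for d in deployments
        if d.model_version == mv.version and d.site != site
    )
    return {
        "model_version": mv.version,
        "dataset_id": mv.dataset_id,
        "risk_analysis_ref": mv.risk_analysis_ref,
        "validation_report_ref": mv.validation_report_ref,
        "decision_threshold": deployment.decision_threshold,
        "other_sites_on_version": other_sites,
    }


versions = {"2.1.0": ModelVersion("2.1.0", "DS-014", "RMF-2.1.0", "VAL-2.1.0")}
deployments = [
    SiteDeployment("Site A", "2.1.0", 0.42, "EHR order panel"),
    SiteDeployment("Site B", "2.1.0", 0.50, "PACS worklist"),
]
record = trace_issue("Site A", deployments, versions)
```

Listing the other sites on the same version makes it easy to check whether an issue is local, for example a site-specific threshold, or tied to the model version itself.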

Benefit–risk analyses for AI devices must consider factors such as potential disparities across demographic groups, risks from opaque models, and how performance may shift over time due to data drift or updates. For example, organizations might quantify benefits and risks separately for key U.S. subpopulations (e.g., by race, age, or comorbidities) and evaluate whether human-in-the-loop controls mitigate misinterpretations of AI outputs in clinical workflows. Tools like Censinet RiskOps™ can help standardize AI-specific questionnaires, centralize vendor evidence, and benchmark risks, ensuring alignment with ISO 14971 and AI-focused guidance.


Implementing ISO 14971 for AI Medical Devices

Healthcare organizations need to weave ISO 14971 into the fabric of their processes for procuring, deploying, and monitoring AI medical devices. This involves clearly defining actions, assigning responsibilities, and documenting hazard controls specific to AI. The aim? To create a scalable, repeatable system that meets U.S. regulatory demands while managing dozens - or even hundreds - of AI tools. Everything starts with procurement, where ensuring compliance with ISO 14971-based risk criteria sets the tone for the entire lifecycle.

Evaluating AI Devices During Procurement

Procurement is more than just choosing the right device - it’s about conducting a thorough risk review aligned with ISO 14971. To do this, organizations should update their procurement processes to include a documented risk evaluation for every AI-enabled device. This means involving key stakeholders like clinical leaders, IT/security professionals, biomedical engineers, compliance/privacy teams, and supply chain managers. Together, they must establish risk-acceptance criteria for safety, cybersecurity, and protected health information (PHI) or personally identifiable information (PII). Vendors should be required to provide a risk management file (or equivalent documentation) that outlines hazard identification, risk estimation, risk control measures, and post-market monitoring for the AI component [4][5]. Many U.S. health systems streamline this process with tools like Censinet RiskOps™, which centralize and automate vendor evaluations for medical devices and digital health tools.

The vendor's risk management file should also cover:

- Data sources (training, validation, and test data origins, including U.S. vs. non-U.S. populations)

- Data quality controls and bias assessments

- PHI handling procedures

- Cybersecurity architecture

- A software bill of materials (SBOM)

- AI lifecycle change control strategies [1][2][7]
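
During intake, a procurement team could check each vendor submission against the items listed above before routing it to clinical and security reviewers. The keys below are hypothetical labels for those items. A real review would assess the content of each document, not just its presence.

```python
REQUIRED_EVIDENCE = (
    "data_sources",
    "data_quality_and_bias",
    "phi_handling",
    "cybersecurity_architecture",
    "sbom",
    "change_control",
)


def missing_evidence(submission: dict) -> list:
    """Return required items that are absent or empty in a vendor submission."""
    return [item for item in REQUIRED_EVIDENCE if not submission.get(item)]


def ready_for_review(submission: dict) -> bool:
    """A submission moves to expert review only when nothing is missing."""
    return not missing_evidence(submission)


# Hypothetical submission: document references keyed by evidence item.
submission = {
    "data_sources": "training-data-summary.pdf",
    "data_quality_and_bias": "bias-assessment.pdf",
    "phi_handling": "privacy-controls.pdf",
    "cybersecurity_architecture": "security-architecture.pdf",
    "sbom": "",
}
```

Here the empty SBOM entry and the absent change control strategy would both be returned to the vendor before any reviewer time is spent.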

Additionally, U.S. buyers should inquire about FDA submission status and how the vendor manages model updates and validations [3][6]. Beyond technical details, it’s crucial to assess whether the vendor operates under a quality management system aligned with ISO 13485 and uses ISO 14971 as the foundation for managing risks in software and AI components [1][5]. Signs of a mature vendor include documented risk management practices, ongoing post-market surveillance, and robust processes for addressing field issues and vulnerabilities [7][9]. Platforms like Censinet RiskOps™ can also help compare vendors using standardized risk scoring and shared evidence repositories, making it easier to identify high-risk vendors and prioritize mitigations.

Deploying AI Devices in Clinical Settings

Before deployment, it’s essential to establish cybersecurity baselines for AI devices, including:

- Network segmentation

- Authentication and authorization protocols

- Encryption for data in transit and at rest

- Logging and audit trail requirements

- Patch and update policies

- Integration with incident response programs aligned with NIST or HIPAA guidelines [3][5]

Organizations should validate that the device functions safely within the clinical network, considering factors like bandwidth, latency, and interoperability with systems such as EHR, RIS, and PACS. Additionally, they should agree with vendors on protocols for vulnerability disclosure and emergency patching [3][6]. These controls should be documented in a checklist consistent with ISO 14971 [4].

Risk controls should translate into actionable workflow elements, such as clinical use protocols, eligibility criteria, human oversight requirements, alarm/alert designs, and clinician training [1][3]. If risk analysis highlights potential misclassification in specific subpopulations, workflows can mandate human review or secondary confirmation for those cases. User interfaces should display model confidence levels with clear warnings where necessary [2]. Usability engineering principles from IEC 62366 and emerging AI usability standards can ensure that controls like warnings and guardrails are practical and usable in busy clinical environments [3].
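
The workflow controls above (mandatory review for subpopulations flagged in the risk analysis, plus extra scrutiny for low-confidence outputs) can be expressed as a small routing rule. The threshold and subgroup names are hypothetical. The rule is only as good as the model's confidence calibration, which should itself be validated.

```python
from dataclasses import dataclass

# Hypothetical values that would come from the device's risk analysis.
CONFIDENCE_REVIEW_THRESHOLD = 0.85
MANDATORY_REVIEW_SUBGROUPS = frozenset({"pediatric"})


@dataclass(frozen=True)
class Routing:
    requires_human_review: bool
    reason: str


def route_output(confidence: float, subgroup: str) -> Routing:
    """Decide how an AI output is presented to the clinician."""
    if subgroup in MANDATORY_REVIEW_SUBGROUPS:
        return Routing(True, "subgroup flagged in risk analysis")
    if confidence < CONFIDENCE_REVIEW_THRESHOLD:
        return Routing(True, f"confidence {confidence:.2f} below threshold")
    return Routing(False, "display result with confidence level")
```

Checking the subgroup rule first means a high-confidence output for a flagged population still goes to review, which matches the intent of a control derived from known subgroup weaknesses.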

An incident response plan is also critical. It should define what qualifies as an AI-related incident (e.g., safety events, performance issues, cybersecurity breaches, or data leaks) and outline how these events are detected, classified, and managed. Immediate actions could include disabling auto-acceptance, limiting the system to advisory use, or even taking it offline. The plan should also include communication pathways to clinical leaders, IT/security teams, risk management, and vendors, as well as procedures for updating the risk management file, conducting root-cause analyses, and implementing corrective actions [3][6].
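
The first step of such a plan, classifying an event and choosing an immediate containment action, can be written down as an explicit rule so that responders do not have to improvise under pressure. The incident types follow the list above. The mapping to actions, the notification list, and the follow-up steps are a hypothetical policy that each organization would set for itself.

```python
from enum import Enum


class IncidentType(Enum):
    SAFETY_EVENT = "safety event"
    PERFORMANCE_ISSUE = "performance issue"
    CYBERSECURITY_BREACH = "cybersecurity breach"
    DATA_LEAK = "data leak"


class Containment(Enum):
    DISABLE_AUTO_ACCEPT = "disable auto-acceptance"
    ADVISORY_ONLY = "limit to advisory use"
    TAKE_OFFLINE = "take offline"


NOTIFY = ("clinical leadership", "IT/security", "risk management", "vendor")
FOLLOW_UP = ("root-cause analysis", "update risk management file", "corrective action")


def containment_action(kind: IncidentType, patient_harm: bool) -> Containment:
    """Hypothetical policy mapping an incident to an immediate action."""
    if kind in (IncidentType.CYBERSECURITY_BREACH, IncidentType.DATA_LEAK):
        return Containment.TAKE_OFFLINE
    if kind is IncidentType.SAFETY_EVENT:
        return Containment.TAKE_OFFLINE if patient_harm else Containment.ADVISORY_ONLY
    return Containment.DISABLE_AUTO_ACCEPT


def response_plan(kind: IncidentType, patient_harm: bool) -> dict:
    """Bundle the containment action with notification and follow-up steps."""
    return {
        "action": containment_action(kind, patient_harm),
        "notify": NOTIFY,
        "follow_up": FOLLOW_UP,
    }
```

Keeping the policy in one place also makes it reviewable: the governance group can approve changes to the mapping the same way it approves changes to other risk controls.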

Monitoring AI Devices After Deployment

Once in use, continuous monitoring is vital to ensure compliance and address new risks. Healthcare delivery organizations (HDOs) should track clinical performance metrics like sensitivity, specificity, false positive/negative rates, usage patterns, subgroup performance (e.g., by age, sex, or race), system availability, and security events [2][3]. These metrics fall under ISO 14971’s requirements for production and post-production information and must feed into regular risk reviews to determine if residual risks remain acceptable or if new hazards have surfaced [4]. Monitoring methods may include automated dashboards, periodic audits, user feedback channels, and safety rounds focused on AI workflows. Aggregated data can also be shared via benchmarking platforms like Censinet RiskOps™ to identify systemic issues across institutions and vendors.
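
Feeding monitoring data into risk review works best when each metric has a documented acceptance limit. The sketch below compares a monitoring snapshot against hypothetical limits and returns any breaches, each of which would trigger a review of whether residual risk is still acceptable. Missing metrics are treated as breaches so that gaps in monitoring are not silently ignored.

```python
# Hypothetical acceptance criteria: (direction, limit) per metric.
ACCEPTANCE_CRITERIA = {
    "sensitivity": ("min", 0.90),
    "specificity": ("min", 0.85),
    "false_positive_rate": ("max", 0.15),
    "availability": ("min", 0.995),
}


def criteria_breaches(snapshot: dict) -> list:
    """Return (metric, observed, limit) for each criterion that is not met."""
    breaches = []
    for metric, (direction, limit) in ACCEPTANCE_CRITERIA.items():
        value = snapshot.get(metric)
        if value is None:
            breaches.append((metric, None, limit))  # metric was not monitored
        elif direction == "min" and value < limit:
            breaches.append((metric, value, limit))
        elif direction == "max" and value > limit:
            breaches.append((metric, value, limit))
    return breaches


# Hypothetical monthly snapshot for one device.
snapshot = {"sensitivity": 0.87, "specificity": 0.91, "false_positive_rate": 0.09}
```

Running the same check per subgroup, not only on pooled data, keeps a decline confined to one population from being averaged away.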

When a model update or configuration change is proposed, the HDO and vendor should jointly evaluate its impact. This includes assessing whether it’s a minor fix, a performance tweak, or a major retraining effort, and determining its effect on existing hazards and controls. Any significant changes may require new validation efforts, additional user training, or updated labeling [3][6]. A structured change control process can categorize updates, ensure pre-deployment testing for higher-risk changes, and document risk evaluations in line with ISO 14971 [2][4]. The FDA’s evolving frameworks for AI/ML SaMD modifications emphasize having a lifecycle plan, predefined change categories, and ongoing monitoring, which HDOs should mirror in their governance [3][6].
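
A structured change control process can start by sorting each proposed update into the three categories named above and attaching the verification work that each one requires. The decision inputs and required-activity lists below are hypothetical. An organization would align them with the vendor's predefined change control plan and with any change categories the FDA has authorized for the device.

```python
from enum import Enum


class ChangeCategory(Enum):
    MINOR_FIX = "minor fix"
    PERFORMANCE_TWEAK = "performance tweak"
    MAJOR_RETRAINING = "major retraining"


# Hypothetical activities required before each category of change is deployed.
REQUIRED_ACTIVITIES = {
    ChangeCategory.MINOR_FIX: ("regression testing", "risk file update"),
    ChangeCategory.PERFORMANCE_TWEAK: (
        "regression testing", "subgroup revalidation", "risk file update",
    ),
    ChangeCategory.MAJOR_RETRAINING: (
        "hazard analysis review", "full clinical revalidation",
        "user training", "labeling review", "risk file update",
    ),
}


def categorize_change(retrained: bool, outputs_or_thresholds_changed: bool) -> ChangeCategory:
    """Classify a proposed model update (hypothetical decision rule)."""
    if retrained:
        return ChangeCategory.MAJOR_RETRAINING
    if outputs_or_thresholds_changed:
        return ChangeCategory.PERFORMANCE_TWEAK
    return ChangeCategory.MINOR_FIX


def change_plan(retrained: bool, outputs_or_thresholds_changed: bool):
    """Return the change category and the activities it requires."""
    category = categorize_change(retrained, outputs_or_thresholds_changed)
    return category, REQUIRED_ACTIVITIES[category]
```

Every category ends with a risk file update, which preserves the link between each deployed version and its documented risk evaluation.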

To scale ISO 14971 implementation, HDOs can establish an enterprise AI governance committee to oversee all AI technologies. Standardized risk assessment templates, a central risk register for AI devices, and clear policies for procurement, deployment, monitoring, and decommissioning are also essential [1][2]. Documentation should include a living inventory of AI devices, their intended use, clinical owners, risk ratings, controls, and monitoring plans. Tools like Censinet RiskOps™ can centralize this documentation, automate reviews, and facilitate collaboration between clinical, IT/security, and supply chain teams, ensuring a consistent ISO 14971-aligned approach across a wide range of AI tools.

How Censinet RiskOps™ Supports AI Medical Device Risk Management

Healthcare organizations face a tough task: managing ISO 14971–aligned risk assessments for numerous AI-enabled medical devices. Censinet RiskOps™ steps in with a cloud-based platform tailored specifically for healthcare. It streamlines the entire ISO 14971 risk management process - from identifying hazards to post-market monitoring - using workflows designed with AI and machine learning technologies in mind.

The platform standardizes risk assessments, offers secure evidence sharing, and provides benchmarking tools. This not only speeds up AI device evaluations but also maintains the rigorous standards required by ISO 14971. By centralizing these processes, Censinet RiskOps™ creates a solid foundation for effective collaboration with vendors.

Terry Grogan, CISO at Tower Health, shared: "Censinet RiskOps allowed 3 FTEs to go back to their real jobs! Now we do a lot more risk assessments with only 2 FTEs required."

James Case, VP & CISO at Baptist Health, added: "Not only did we get rid of spreadsheets, but we have that larger community [of hospitals] to partner and work with."

Conducting Risk Assessments for AI Devices

Censinet RiskOps™ enables healthcare organizations to design AI-specific third-party risk assessments that align with ISO 14971 and emerging guidance like AAMI TIR 34971 (BS/AAMI 34971) for machine learning medical devices. Its structured questionnaires gather key AI-related details, such as data provenance, labeling, population representation in the U.S., bias mitigation, and handling incomplete data. The platform evaluates and categorizes these risks, flagging issues like missing bias analysis or limited dataset diversity. These flagged risks are then routed to clinical leaders or data science teams for review before purchase decisions are made.

For lifecycle management, the platform tracks each AI device's update model - whether fixed, periodically updated, or continuously learning. It captures vendor change control processes, testing protocols, and regulatory commitments, such as predefined change control plans submitted to the FDA for AI/ML software as a medical device. When vendors submit model updates, the system logs the changes, requests validation evidence, and identifies new or modified hazards, such as performance degradation in specific subpopulations. It then triggers re-approval workflows involving clinical, IT, and cybersecurity reviewers. This creates a traceable record linking updates to risk assessments and residual risk decisions, which supports internal governance and FDA audits. By keeping risk assessments current as models change, the platform supports the lifecycle approach of ISO 14971.

Additionally, the platform integrates cybersecurity, privacy, and interoperability risks into a unified framework. For example, it links technical findings - like weak authentication in an AI clinical decision support tool - to specific harms, such as unauthorized access to patient data, model manipulation, or incorrect treatment recommendations. It also documents controls like network segmentation and multi-factor authentication. This approach ensures that cybersecurity and privacy risks are treated as integral parts of overall medical device risk, rather than isolated IT concerns.

Automating AI Risk Management with Censinet AI

Censinet AI takes automation to the next level by handling evidence collection and validation from AI device vendors, cutting down on manual work while maintaining the rigor of ISO 14971. The system automatically analyzes vendor-submitted documentation, categorizes it according to required evidence - such as data management, model validation, and cybersecurity - and checks for completeness and consistency with ISO 14971-aligned templates. For instance, if a vendor claims performance parity across demographic groups, the platform verifies the inclusion of subgroup performance tables, sample sizes, and statistical comparisons. Missing elements are flagged for manual follow-up, ensuring thorough evaluations.

The platform also compiles detailed assessment results into role-specific risk summaries. It highlights clinical performance risks and bias issues for physicians and nursing leaders, cybersecurity vulnerabilities for CISOs and IT teams, and overall risk ratings and remediation timelines for executives. Dashboards offer a quick overview of AI devices in use, their risk categories, open corrective actions, and trends in incidents or near-misses. Governance workflows are automated too, routing new AI device requests, assessments, and vendor documentation to the right reviewers - whether clinical, legal, privacy, IT, or cybersecurity - based on pre-set policies. This ensures no high-risk AI device is used without proper approvals, documented justifications, and monitoring plans, creating an auditable trail for regulatory inspections or accreditation reviews.

Improving Risk Management Through Collaboration and Benchmarking

Beyond automation, Censinet RiskOps™ enhances risk management through collaboration. Its ecosystem allows health systems to share anonymized risk assessment results, common findings, and remediation strategies for specific AI products or vendors. This helps organizations save time and learn from each other’s experiences. Benchmarking reports compare a vendor's risk posture to others in areas like data governance, bias management, cybersecurity, and change control, using aggregated data. These insights help hospitals negotiate stronger vendor commitments, prioritize fixes, and adopt best practices more efficiently.

Brian Sterud, CIO at Faith Regional Health, explained: "Benchmarking against industry standards helps us advocate for the right resources and ensures we are leading where it matters."

Healthcare organizations can track metrics such as reduced high risks, faster AI onboarding, and improved vendor compliance. These key performance indicators help leaders in risk management, clinical engineering, and IT security demonstrate program progress, justify investments, and align their practices with evolving guidance for AI and machine learning in medical devices. According to Censinet, customers have reduced third-party risk assessment cycle times by up to 85% through standardized questionnaires, automation, and evidence reuse. Some healthcare organizations report cutting manual assessment efforts by 50–70% for vendors already onboarded to the platform.

Conclusion

Key Points to Remember

ISO 14971:2019 offers a detailed framework for managing risks throughout the lifecycle of AI-enabled medical devices. Although the standard does not list AI-specific hazards, applying its process with AI's unique challenges in mind ensures that those risks are thoroughly documented in hazard analyses. When paired with AI-focused guidance like AAMI TIR34971:2023 and ISO/TS 24971-2, healthcare organizations can tackle critical concerns such as data quality, algorithm performance, usability, and cybersecurity in alignment with FDA and Health Canada expectations.

Some of the most pressing risks include the use of non-representative training data, which can lead to inconsistent performance across different demographic groups, algorithm overfitting that might cause failures in new clinical environments, and cybersecurity vulnerabilities that could expose devices to unauthorized access or manipulation. ISO 14971’s structured approach - covering planning, hazard identification, risk evaluation, control implementation, and post-market monitoring - ensures that these risks are systematically managed and documented throughout the lifecycle of AI devices. This methodical process helps mitigate the ever-evolving challenges associated with AI technology.

Platforms such as Censinet RiskOps™ can streamline risk management for AI systems. By centralizing risk assessments, applying AI-powered workflows, and enabling collaborative benchmarking, they give clinical, IT, and cybersecurity teams a shared framework suited to healthcare's regulatory landscape.

Next Steps for Healthcare Organizations

To adopt these practices, healthcare organizations should begin by cataloging all existing and planned AI tools. Classify each tool by its clinical impact, its data flows involving protected health information (PHI), and its integration points with electronic health records (EHRs) and medical devices. Next, revise risk management policies to reference ISO 14971, establish clear risk acceptance criteria, and assign specific responsibilities for risk oversight.
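The cataloging and classification step can be sketched as a simple inventory with a scoring rule. This Python example assumes a hypothetical three-level clinical impact scale and a point system that adds weight for PHI handling and for EHR or device integrations. The tool names, point values, and tier cut-offs are illustrative and would need to come from your own policy.

```python
from dataclasses import dataclass, field

# Hypothetical point values for clinical impact; tune to your own policy.
IMPACT_POINTS = {"low": 0, "moderate": 1, "high": 2}


@dataclass
class AITool:
    name: str
    clinical_impact: str   # "low", "moderate", or "high"
    handles_phi: bool      # does the tool process protected health information?
    integrations: list[str] = field(default_factory=list)  # e.g. ["EHR", "infusion pump"]
    planned: bool = False  # True for tools not yet deployed


def review_tier(tool: AITool) -> int:
    """Return a hypothetical review tier: 1 = most scrutiny, 3 = least."""
    points = IMPACT_POINTS[tool.clinical_impact]
    points += 1 if tool.handles_phi else 0
    points += 1 if tool.integrations else 0
    if points >= 3:
        return 1
    if points == 2:
        return 2
    return 3


# Example entries are invented for illustration.
inventory = [
    AITool("Sepsis early-warning model", "high", True, ["EHR"]),
    AITool("Radiology triage assistant", "moderate", True, [], planned=True),
    AITool("Staff scheduling optimizer", "low", False),
]

for tool in sorted(inventory, key=review_tier):
    status = "planned" if tool.planned else "in use"
    print(f"Tier {review_tier(tool)}: {tool.name} ({status})")
```

Sorting by tier puts the tools that warrant the closest review, such as a high-impact model writing into the EHR, at the top of the pilot list.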

Then pilot a standardized AI risk assessment process, using a platform like Censinet RiskOps™, on a high-impact AI device. The pilot should include vendor questionnaires, risk scoring, and detailed mitigation planning. Also implement monitoring and feedback loops, such as regular performance evaluations and cybersecurity log reviews, so that new insights flow back into risk files and future procurement decisions. These steps improve risk management now and lay the groundwork for stronger AI governance as standards and regulations continue to evolve.
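One way to structure the performance side of this feedback loop is a periodic check that compares recent results against the baseline established during validation and records the outcome for the risk file. In this minimal Python sketch, the metric, the tolerance, and the `periodic_review` helper are hypothetical; a real program would also define how scores are collected and who reviews each finding.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class ReviewFinding:
    """One entry to file with the device's risk management records."""
    review_date: date
    metric: str
    baseline: float
    observed: float
    action: str


def periodic_review(metric: str, baseline: float, recent_scores: list[float],
                    tolerance: float, review_date: date) -> ReviewFinding:
    """Compare the mean of recent scores against the validated baseline."""
    if not recent_scores:
        raise ValueError("no recent scores to review")
    observed = mean(recent_scores)
    if baseline - observed > tolerance:
        action = "update risk file: possible performance drift, re-evaluate controls"
    else:
        action = "no action: within tolerance"
    return ReviewFinding(review_date, metric, baseline, observed, action)


# Illustrative values: validated sensitivity 0.90, allowed drop 0.03.
finding = periodic_review("sensitivity", 0.90, [0.84, 0.85, 0.83],
                          tolerance=0.03, review_date=date(2024, 6, 30))
print(finding.action)
```

Here the mean of the recent scores is 0.84, a drop of 0.06 that exceeds the assumed tolerance, so the finding asks for a risk file update.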

FAQs

How does ISO 14971 manage risks specific to AI in medical devices?

ISO 14971 provides a structured approach to identifying, evaluating, and controlling risks associated with AI-powered medical devices throughout their lifecycle. The standard does not list AI-specific hazards, but its process can be applied to them: algorithm bias, data quality problems, and unpredictable behavior are identified as hazards, evaluated, and controlled, and the analysis is revisited as the device is changed or updated.

This proactive approach is particularly relevant for AI-based devices, whose behavior can change over time through retraining or updates. Following the framework helps manufacturers anticipate safety problems, meet regulatory expectations, and protect patients.

How do data quality and bias affect risk management for AI-driven medical devices?

Data quality and bias are major sources of risk in AI-driven medical devices. Inaccurate or low-quality data can produce unreliable AI outputs, which may lead to inaccurate assessments and, ultimately, safety issues. Biased data can cause a device to perform inconsistently across patient groups, raising concerns about both fairness and safety.

Under ISO 14971, data quality problems and bias should be treated as hazards to be identified, evaluated, and controlled, for example by using reliable, representative data. Doing so improves the dependability of AI systems, reduces regulatory risk, and puts patient safety first.
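A common way to check for inconsistent performance across patient groups is to compute a metric such as sensitivity separately for each demographic group and compare the results. The Python sketch below does this on synthetic records. The record format, the `max_gap` threshold of 0.10, and the helper names are assumptions for illustration; a real analysis would also consider per-group sample sizes and confidence intervals.

```python
from collections import defaultdict
from collections.abc import Iterable


def sensitivity_by_group(records: Iterable[tuple[str, int, int]]) -> dict[str, float]:
    """records: (group, y_true, y_pred) with binary labels (1 = condition present)."""
    positives: dict[str, int] = defaultdict(int)
    true_positives: dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    # Groups with no positive cases are omitted, since sensitivity is undefined for them.
    return {g: true_positives[g] / positives[g] for g in positives}


def sensitivity_gap(rates: dict[str, float], max_gap: float) -> tuple[bool, float]:
    """Flag when best-to-worst subgroup sensitivity differs by more than max_gap."""
    if not rates:
        raise ValueError("no subgroup has positive cases")
    gap = max(rates.values()) - min(rates.values())
    return gap > max_gap, gap


# Synthetic example: group A detects 4 of 4 positives, group B detects 2 of 4.
records = [("A", 1, 1)] * 4 + [("B", 1, 1)] * 2 + [("B", 1, 0)] * 2 + [("A", 0, 0)] * 3
rates = sensitivity_by_group(records)
flagged, gap = sensitivity_gap(rates, max_gap=0.10)
print(rates, flagged, round(gap, 2))
```

A flagged gap like this one would be recorded as evidence for the bias hazard in the risk file and would typically prompt a risk control, such as collecting more data for the under-performing group.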

How can healthcare organizations apply ISO 14971 to manage risks for AI-driven medical devices?

Healthcare organizations can use ISO 14971 to manage risks associated with AI-driven medical devices by creating a detailed risk management process that addresses the unique challenges AI presents. This involves pinpointing AI-specific risks, assessing their potential consequences, and putting measures in place to minimize or control them.

Essential practices include regularly monitoring AI performance, maintaining transparency in how AI systems reach their outputs, and keeping thorough records of all risk management activities. Tools designed specifically for healthcare risk management can simplify evaluations, improve collaboration, and support compliance with ISO 14971.