The Regulated Future: How AI Governance Will Shape Business Strategy

Post Summary

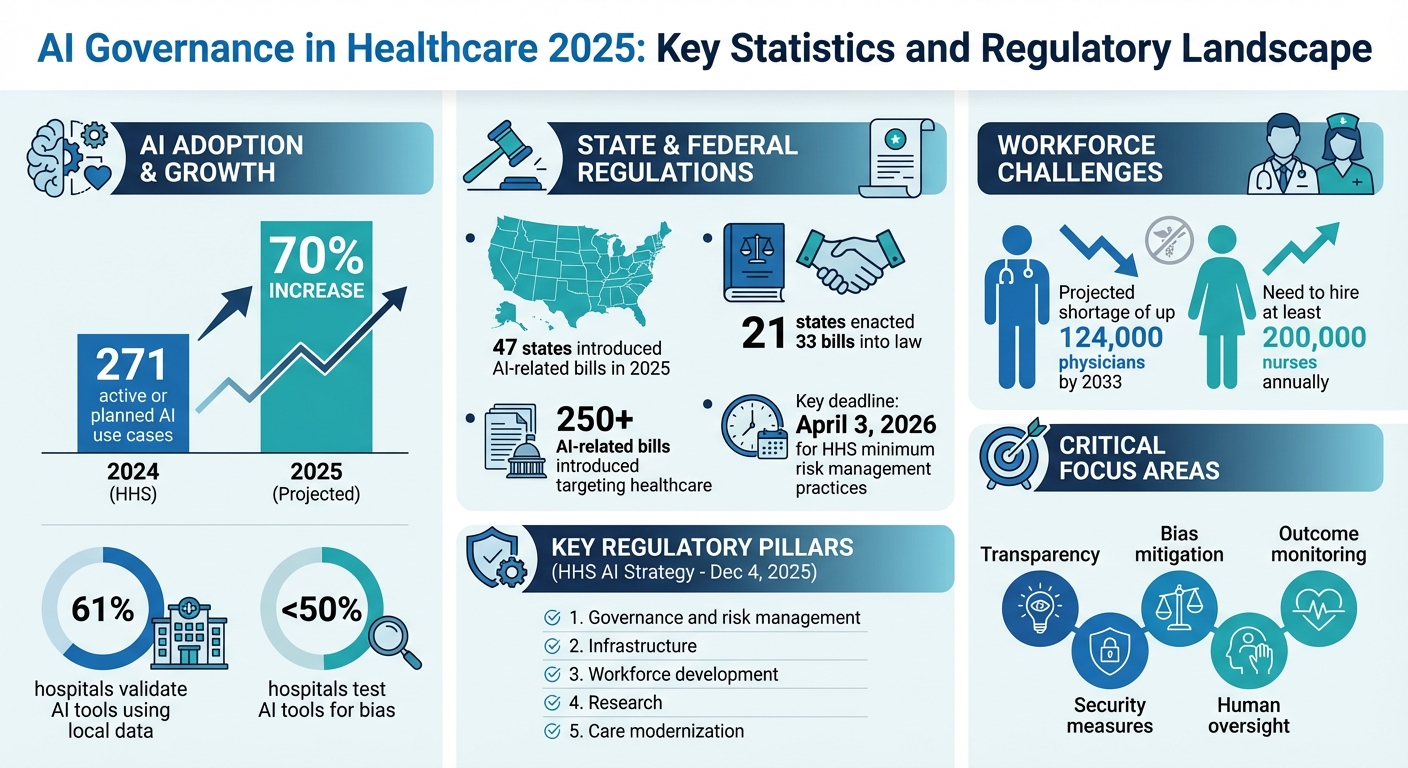

AI governance is now essential for healthcare organizations in 2025. With over 271 active or planned AI use cases reported by the U.S. Department of Health and Human Services (HHS) in 2024 and a projected 70% increase in 2025, the need for oversight, risk management, and compliance has never been greater. However, only 61% of hospitals validate AI tools using local data, and fewer than half test for bias, highlighting critical gaps.

Key points include:

- State & Federal Regulations: 47 states introduced over 250 AI-related bills in 2025, with 21 states enacting 33 into law.

- HHS AI Strategy: Released December 4, 2025, focusing on transparency, bias mitigation, and compliance.

- Critical Risks: Misdiagnoses, unequal treatment, and regulatory penalties from poorly governed AI systems.

- New State Laws: Examples include California’s mandatory AI disclosures and Texas’ Responsible Artificial Intelligence Governance Act.

Strong AI governance frameworks, such as cross-functional teams and risk management tools, are crucial for compliance and patient safety. Organizations must align governance with business goals, prioritize transparency, and ensure continuous monitoring to meet evolving regulations.

AI Governance in Healthcare 2025: Key Statistics and Regulatory Landscape

The AI Regulatory Landscape in 2025

State and Federal AI Laws You Need to Know

As the importance of AI governance grows, the healthcare industry is experiencing a wave of new regulations that are reshaping the way technology is used.

On December 4, 2025, the Department of Health and Human Services (HHS) unveiled a 21-page AI strategy built around five key pillars: governance and risk management, infrastructure, workforce development, research, and care modernization. The strategy requires divisions like the FDA, CDC, CMS, and NIH to adopt minimum risk management practices for high-impact AI systems by April 3, 2026. These practices include bias mitigation, outcome monitoring, security measures, and human oversight[1][9].

The FDA has also stepped up, releasing draft guidance on January 6, 2025, that classifies many AI and machine learning (ML) technologies as medical devices. This means they’ll need formal approval under the Federal Food, Drug, and Cosmetic Act (FDCA)[8]. Meanwhile, HIPAA continues to play a crucial role in protecting data privacy and security, ensuring that AI models handling patient data address risks like data interception and re-identification[8].

At the state level, regulations have been evolving rapidly. By October 11, 2025, 47 states had introduced over 250 AI-related bills targeting healthcare, with 21 states enacting 33 of those into law[3]. For instance:

- California’s AB 3030 (effective January 1, 2025) requires healthcare providers to disclose AI use in clinical communications, including disclaimers and contact information for a licensed professional[7].

- California’s AB 489 (effective October 1, 2025) bans AI systems from using professional titles like "M.D." or "R.N." unless overseen by licensed professionals[7].

- Illinois’ HB 1806, known as the Wellness and Oversight for Psychological Resources Act, prohibits AI systems from making independent therapeutic decisions or directly interacting with clients in therapy without review by a licensed professional (effective August 4, 2025)[7].

- Nevada’s AB 406 (effective July 1, 2025) restricts AI providers from offering services that involve professional mental or behavioral healthcare, including the use of misleading titles like "therapist" or "psychiatrist"[7].

- Texas’ HB 149, the Responsible Artificial Intelligence Governance Act, effective January 1, 2026, requires healthcare providers to disclose AI use in diagnosis or treatment at the point of care[7].
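For teams operating in several states, the list above can be kept as a small lookup that answers "which of these laws apply to us on a given date?" The following is a minimal sketch, not legal advice: the `StateAILaw` record and `laws_in_effect` helper are hypothetical names, and the dates and summaries are simply those given in this article.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class StateAILaw:
    state: str
    bill: str
    effective: date
    summary: str


# Effective dates and summaries as listed in this article (illustrative only).
LAWS = [
    StateAILaw("CA", "AB 3030", date(2025, 1, 1), "Disclose AI use in clinical communications"),
    StateAILaw("CA", "AB 489", date(2025, 10, 1), "No professional titles for AI without licensed oversight"),
    StateAILaw("IL", "HB 1806", date(2025, 8, 4), "No independent AI therapeutic decisions"),
    StateAILaw("NV", "AB 406", date(2025, 7, 1), "Restricts AI mental and behavioral health services"),
    StateAILaw("TX", "HB 149", date(2026, 1, 1), "Disclose AI use in diagnosis or treatment"),
]


def laws_in_effect(states, as_of):
    """Return the listed laws for the given states that are effective on as_of."""
    wanted = set(states)
    return [law for law in LAWS if law.state in wanted and law.effective <= as_of]


if __name__ == "__main__":
    for law in laws_in_effect({"CA", "TX"}, date(2025, 12, 1)):
        print(law.state, law.bill, law.summary)
```

Keeping the effective date on each record lets the same lookup answer both "what applies today" and "what will apply at go-live."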

These laws are not just shaping how AI is used; they’re also driving significant changes in how healthcare organizations approach their business strategies.

How Regulations Have Changed Business Decisions

The growing number of federal and state regulations is forcing healthcare organizations to rethink how they adopt AI technologies. For example, the HHS mandate requiring minimum risk management practices by April 3, 2026, has created a hard deadline that’s influencing procurement decisions across the industry[1]. Many organizations are now focusing on AI vendors that meet standards like the NIST AI Risk Management Framework, which emphasizes bias mitigation, outcome monitoring, and strong security protocols.

State-specific laws are adding another layer of complexity, especially for healthcare systems operating across multiple states. Take California’s AB 489 as an example: it treats each prohibited term as a separate offense and gives state licensing boards the authority to investigate violations. This has prompted healthcare providers to conduct thorough audits of their AI systems to ensure compliance[7]. The financial and reputational risks of non-compliance have become so significant that many healthcare leaders are delaying AI deployments until they can establish comprehensive governance frameworks. This cautious approach is slowing down the pace of AI integration while reshaping its scope across the industry.

How to Build an AI Governance Framework

An AI governance framework combines rules, processes, and technology to ensure AI is deployed responsibly in healthcare settings [4]. For healthcare organizations, this framework must prioritize transparency, fairness, and adherence to ethical and regulatory standards, all while managing risks and protecting patient safety [4]. It serves as the foundation for both operational success and risk management across AI systems in healthcare.

The People, Process, Technology, and Operations (PPTO) framework is a practical approach to establishing AI governance in healthcare [4]. Achieving success requires the right mix of skilled individuals, clear oversight processes, and strong operational structures.

Creating Cross-Functional AI Governance Teams

Healthcare organizations should form cross-functional AI governance teams that bring together a variety of expertise [10]. These teams can either operate independently or be integrated into existing committees, but they must be led by individuals with deep knowledge of AI.

The team should include a mix of stakeholders: executive leaders, regulatory and ethical compliance officers, IT specialists, safety and incident reporting experts, clinical and operational professionals, cybersecurity and data privacy experts, and representatives of affected groups such as staff, patients, and caregivers [10]. This diversity ensures that AI-related decisions are evaluated from multiple angles, including clinical effectiveness, patient safety, data security, legal compliance, and ethical considerations.

These teams oversee AI risks throughout the technology's lifecycle [10]. By embedding AI governance into existing quality assurance programs and enterprise risk management strategies, organizations can maintain consistent care delivery while addressing potential risks [2][6]. Additionally, providing ongoing education and training on AI literacy, tool usage, and organizational policies ensures that healthcare providers and staff can safely and effectively integrate AI into their workflows [10]. This integrated approach ties AI governance to broader organizational strategies.

How to Manage AI-Related Risks

Managing risks associated with AI requires a proactive strategy to identify and address potential issues before they affect patient care or violate regulatory requirements. Key areas of focus include reducing bias, monitoring outcomes, implementing security measures, and maintaining human oversight.

The first step is understanding potential risks, such as biased algorithms, data breaches, or inaccurate predictions. Your governance framework should outline clear processes for identifying, assessing, and mitigating these risks at every stage of the AI lifecycle. Using centralized tools to monitor risks can provide better visibility and control. Incorporating these risks into your organization's broader enterprise risk management strategy ensures that AI governance aligns with overall risk tolerance and compliance goals.
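One way to picture a centralized view of AI risks is a simple risk register. The sketch below assumes a likelihood-times-impact score on 1 to 5 scales and a review threshold of 12; both the scoring scheme and the `AIRisk` and `prioritize` names are illustrative assumptions, not something prescribed by the regulations discussed here.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    system: str
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    mitigation: str = ""

    @property
    def score(self) -> int:
        # Simple likelihood x impact product, a common but not mandated convention.
        return self.likelihood * self.impact


def prioritize(risks, threshold=12):
    """Return risks scoring at or above the threshold, highest score first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [
        AIRisk("triage-model", "Biased predictions for under-represented groups", 3, 5),
        AIRisk("scheduling-bot", "Inaccurate appointment suggestions", 2, 2),
        AIRisk("imaging-assist", "Patient data exposed in transit", 4, 5),
    ]
    for risk in prioritize(register):
        print(risk.score, risk.system, risk.description)
```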

Aligning AI Use with Ethical Standards

To ensure ethical AI use, healthcare organizations must build on existing regulatory frameworks while incorporating medical ethics and clinical standards [11]. Four guiding principles (accountability, transparency, fairness, and safety) should inform every AI-related decision [11].

AI governance in healthcare extends established IT governance practices but also addresses the unique challenges posed by machine learning technologies. It must comply with existing safety-critical regulations, such as medical device rules, clinical trial standards, GxP controls, HIPAA, and GDPR [12]. Your framework should ensure that AI systems meet these standards while addressing the specific risks of machine learning.

Transparency is especially critical. Patients and providers need to know when AI is being used and how it influences care decisions. Clear disclosure protocols should be established, ensuring that AI recommendations can be explained in clinical terms. Human oversight remains essential for critical decisions, so the governance framework should define when human review is required, who can override AI recommendations, and how these actions are documented for compliance and quality improvement.
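The three oversight questions above (when review is required, who can override, and how overrides are documented) can be sketched as a small policy. Everything here is hypothetical: the role names, the 0.90 confidence cutoff, and the `Recommendation` fields are placeholders that a governance committee would define for itself.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical role names; substitute your organization's credentialed roles.
OVERRIDE_ROLES = {"attending_physician", "clinical_pharmacist"}


@dataclass(frozen=True)
class Recommendation:
    system: str
    high_impact: bool  # e.g. influences diagnosis or treatment
    confidence: float  # model-reported score in [0, 1]


def requires_human_review(rec: Recommendation, min_confidence: float = 0.90) -> bool:
    """High-impact or low-confidence output always goes to a human reviewer."""
    return rec.high_impact or rec.confidence < min_confidence


def record_override(log: list, rec: Recommendation, reviewer_role: str, reason: str) -> dict:
    """Append an audit entry for an override, rejecting unauthorized roles."""
    if reviewer_role not in OVERRIDE_ROLES:
        raise PermissionError(f"{reviewer_role} may not override AI recommendations")
    entry = {
        "system": rec.system,
        "reviewer_role": reviewer_role,
        "reason": reason,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    log.append(entry)
    return entry
```

Writing every override to an append-only log gives compliance and quality teams a single record to audit.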

How Censinet Supports AI Risk Management and Compliance

Censinet RiskOps™ streamlines AI risk management while ensuring compliance with regulations. Powered by Censinet AI™, the platform speeds up risk assessments without sacrificing oversight. Here's how Censinet is reshaping AI risk management.

Faster AI Risk Assessments with Censinet AI™

Censinet AI™ revolutionizes third-party risk assessments by enabling vendors to complete security questionnaires in mere seconds instead of weeks. The platform automatically processes vendor evidence and documentation, highlights key integration details and external risk factors, and generates comprehensive risk summary reports. This level of automation significantly reduces the time required for risk management.

Balancing Automation with Human Oversight

Censinet AI™ strikes a balance between automation and human involvement in essential risk assessment tasks such as evidence validation, policy creation, and risk mitigation. Customizable rules and review steps ensure that risk teams maintain control, allowing automation to assist rather than replace critical decision-making. This combination enables healthcare leaders to scale up their risk management efforts and tackle complex risks with efficiency and accuracy.

Managing AI Risks from a Single Dashboard

Censinet RiskOps™ acts as a centralized hub for AI governance, integrating automated assessments with guided oversight. The platform routes key findings and tasks to the appropriate stakeholders, including AI governance committee members, for review and approval. An easy-to-navigate, real-time dashboard ensures that the right teams address critical issues promptly. This cohesive system supports ongoing AI oversight, accountability, and governance across the organization, making AI risk management more efficient and organized.

Preparing Your Business for Future AI Regulations

As AI regulations continue to evolve, healthcare organizations must proactively prepare for these changes. Relying on static governance strategies won’t cut it in this fast-moving environment. Instead, creating governance frameworks that can adapt to regulatory shifts will help organizations stay ahead and respond efficiently as new requirements emerge.

Connecting AI Governance to Business Goals

AI governance works best when it’s integrated into your overall business strategy. Rather than creating isolated processes, align AI oversight with your existing operational frameworks. Established references such as the NIST AI RMF and ISO 42001 can guide you in building a unified governance structure that complies with federal, state, and industry-specific standards [12].

This integrated approach is particularly important in addressing workforce challenges. For example, the American Hospital Association projects a shortage of up to 124,000 physicians by 2033 and the need to hire at least 200,000 nurses annually [15]. By ensuring AI systems meet clinical and regulatory standards, healthcare organizations can better support their workforce and improve patient care.

Effective governance should also prioritize patient outcomes over purely technical metrics. Real-world clinical performance doesn’t always match test results, so it’s crucial to establish mechanisms that flag underperformance in real-life settings [5].

Why Continuous Monitoring Matters

Once your governance aligns with business goals, continuous oversight becomes critical. AI systems are dynamic: they evolve, adapt their algorithms, and improve based on real-world data. This adaptability, while beneficial, also introduces risks. As researchers from Duke-NUS Medical School's Centre of Regulatory Excellence observed:

"The current regulations may not suffice as AI-based technologies are capable of working autonomously, adapting their algorithms, and improving their performance over time based on the new real-world data that they have encountered" [14].

Without ongoing monitoring, AI systems can unintentionally amplify biases, disrupt internal processes, and create regulatory risks. This can lead to operational setbacks, reputational harm, and even legal trouble [16]. Regulatory frameworks like the EU AI Act, FDA Software as a Medical Device guidelines, and ISO 42001 now require post-market surveillance and continuous performance evaluations for high-risk AI systems [12][14].
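As a rough illustration of what ongoing monitoring might check, the sketch below compares recent real-world accuracy against a validation baseline and looks for gaps between patient subgroups. The thresholds (`max_drop`, `max_gap`), the use of plain accuracy as the metric, and the function names are all assumptions chosen for brevity; a real program would pick clinically meaningful metrics.

```python
def accuracy(outcomes):
    """outcomes: list of (predicted, actual) pairs."""
    if not outcomes:
        raise ValueError("no outcomes to evaluate")
    return sum(p == a for p, a in outcomes) / len(outcomes)


def monitor(baseline_accuracy, recent_by_group, max_drop=0.05, max_gap=0.10):
    """Flag a drop versus baseline and a performance gap between subgroups.

    recent_by_group maps a subgroup label to its recent (predicted, actual) pairs.
    Returns (overall_accuracy, per_group_accuracy, alerts).
    """
    per_group = {group: accuracy(pairs) for group, pairs in recent_by_group.items()}
    all_pairs = [pair for pairs in recent_by_group.values() for pair in pairs]
    overall = accuracy(all_pairs)

    alerts = []
    if baseline_accuracy - overall > max_drop:
        alerts.append("performance_drop")
    if max(per_group.values()) - min(per_group.values()) > max_gap:
        alerts.append("subgroup_gap")
    return overall, per_group, alerts


if __name__ == "__main__":
    recent = {
        "group_a": [(1, 1), (0, 0), (1, 1), (0, 0)],
        "group_b": [(1, 0), (0, 0), (1, 1), (0, 1)],
    }
    print(monitor(0.90, recent))
```

Alerts like these would typically be routed to the governance team for review rather than acted on automatically.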

To prepare for audits and meet regulatory expectations, document early and thoroughly. This includes detailing where and how AI systems are deployed, why they’re being used, and how they’re performing. Such documentation is essential in high-risk regulatory environments [12].

Action Steps for Healthcare Leaders

Turning strategy into action requires decisive leadership. Start by forming a multidisciplinary AI governance team that includes experts from various fields such as medical informatics, clinical leadership, legal, compliance, safety and quality, data science, bioethics, and patient advocacy [5]. This diverse team ensures your governance framework addresses technical, clinical, and regulatory needs comprehensively.

Create clear pathways for internal harm reporting and escalation, along with well-defined go/no-go criteria and pre-implementation validations [12][5]. Healthcare organizations bear the ultimate responsibility for ensuring the safety, effectiveness, and appropriateness of their AI systems [5].
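Go/no-go criteria are easiest to enforce when they are written down as an explicit checklist. The sketch below is one possible shape: the check names are hypothetical examples drawn from themes in this article (local validation, bias testing, oversight, harm reporting), and a missing check is treated as a failure.

```python
# Hypothetical check names; each organization defines its own criteria.
REQUIRED_CHECKS = (
    "validated_on_local_data",
    "bias_testing_completed",
    "security_review_passed",
    "human_oversight_defined",
    "harm_reporting_path_defined",
)


def go_no_go(results: dict) -> tuple:
    """Return ("go", []) only if every required check is True.

    Otherwise return ("no-go", [names of missing or failed checks]).
    """
    failed = [name for name in REQUIRED_CHECKS if not results.get(name, False)]
    return ("go" if not failed else "no-go", failed)


if __name__ == "__main__":
    results = {name: True for name in REQUIRED_CHECKS}
    results["bias_testing_completed"] = False
    print(go_no_go(results))
```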

Your governance framework should also be flexible enough to handle varying state-level requirements while maintaining consistent risk management practices. States like Utah, Colorado, California, and Virginia have already enacted laws addressing data privacy, security, transparency, bias mitigation, and accountability in AI [15][13]. As more states introduce similar regulations, your framework should adapt without requiring a complete overhaul of core processes.

FAQs

What are the essential elements of an effective AI governance framework in healthcare?

An AI governance framework for healthcare must address several critical elements to ensure AI technologies are used ethically, safely, and in compliance with regulations.

- Accountability: Establish clear roles and responsibilities for oversight and decision-making to ensure every action has a responsible party.

- Transparency: Design AI systems so their processes and decisions are easy to understand and explain, making them accessible to healthcare professionals and patients alike.

- Fairness: Actively work to identify and reduce biases, ensuring that AI-driven outcomes are equitable for all patients, regardless of their background.

- Safety: Make patient safety a top priority by thoroughly testing AI systems and continuously monitoring their performance in real-world settings.

- Data Governance: Develop strong policies to protect data privacy, ensure security, and maintain data quality throughout the AI lifecycle.

- Continuous Monitoring: Regularly review AI systems to spot and address emerging risks, adapting as technology and healthcare needs evolve.

By focusing on these principles, healthcare organizations can responsibly integrate AI technologies, building trust while reducing potential risks.

How do varying state AI laws affect healthcare organizations operating across multiple states?

State-specific AI laws present a complex web of regulations that healthcare organizations must manage, especially when they operate across multiple states. These laws vary widely, covering areas like transparency requirements, bias reduction, human oversight, and even restrictions on specific AI applications.

To remain compliant, organizations must craft governance strategies that cater to the unique requirements of each state. While this adds layers of complexity to operations, careful planning and strong AI governance frameworks can help minimize risks and maintain seamless functionality across different jurisdictions.

Why is it important to continuously monitor AI systems in healthcare?

Continuous monitoring plays a key role in keeping healthcare AI systems accurate, fair, and aligned with patient safety standards. As clinical practices shift and patient demographics change over time, AI tools can experience performance issues or develop biases.

Regular evaluations allow healthcare organizations to address these challenges, minimize risks, and stay compliant with regulatory guidelines. This constant oversight not only ensures these systems deliver dependable and ethical results but also strengthens trust in AI technologies within the ever-evolving healthcare landscape.
