Risk Management Renaissance: How AI is Transforming Enterprise Risk Programs

Post Summary

AI is changing how organizations handle risks, especially in healthcare, where cyberattacks and data breaches are skyrocketing. In 2025, the average cost of a healthcare data breach hit $10.3 million, and third-party vendors were responsible for 80% of stolen patient records. Traditional, manual risk management methods are proving too slow to keep pace with this evolving landscape.

AI tools now enable faster threat detection, automate compliance tasks, and provide real-time risk assessments. For example, AI can reduce incident identification time by 98 days and streamline third-party vendor evaluations. However, integrating AI requires navigating strict regulations like HIPAA and ensuring strong governance frameworks to avoid issues like algorithmic bias or privacy violations.

Key takeaways:

- Cybersecurity risks: Healthcare breaches cost an average of $9.8 million per incident, and ransomware attacks rose 30% in 2025.

- AI solutions: Predictive analytics, anomaly detection, and centralized platforms improve response times and decision-making.

- Governance: Cross-functional teams and AI risk policies ensure ethical and compliant AI use.

AI isn't just automating processes; it's reshaping risk management into a proactive, data-driven discipline. With tools like Censinet RiskOps™, healthcare organizations can manage risks more efficiently while maintaining compliance and patient trust.

AI-Driven Risk Management in Healthcare

Healthcare organizations face challenges that demand tailored risk management strategies, and the sector is adopting AI at more than twice the pace of the broader economy. This year alone, healthcare AI spending has surged to $1.4 billion, nearly triple the investment projected for 2024 [9]. This rapid growth underscores both the urgency of addressing healthcare-specific risks and the promise AI holds in tackling them. Let’s look at the key risk areas in this field.

Key Risk Areas in Healthcare

Healthcare providers grapple with several critical risks, each with serious implications:

- Cybersecurity threats: Breaches cost healthcare organizations an average of $9.8 million per incident [6]. Beyond the financial toll, they disrupt patient care and erode trust in the system.

- Operational risks: Failures in medical devices or supply chain disruptions can jeopardize patient safety and create system-wide vulnerabilities.

- Privacy concerns: Under HIPAA, healthcare organizations must carefully protect patient data as it moves through increasingly intricate digital networks.

- Third-party vendor risks: Many healthcare providers rely on numerous external partners, each introducing their own security challenges and potential weaknesses.

Matching AI Technologies to Risk Activities

AI tools are proving invaluable in mitigating these risks. Here’s how:

- Anomaly detection and predictive analytics: These technologies identify unusual patterns, such as suspicious login attempts or unexpected spikes in prescription orders. By analyzing historical and real-time data, AI can forecast and address risks before they escalate [5].

- Automation: By automating routine compliance tasks, AI reduces human error and allows healthcare staff to focus on more critical responsibilities [5].

- Centralized risk platforms: AI-powered systems consolidate risk data, providing real-time insights and enabling faster, more informed decision-making [5].

- Natural Language Processing (NLP): NLP tools can analyze complex vendor contracts and regulatory documents, making it easier to identify potential risks.

- Continuous control monitoring: AI monitors security controls around the clock, helping teams catch and address vulnerabilities promptly [4][7].
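To make the anomaly-detection idea concrete, here is a minimal sketch that flags unusual spikes in daily prescription orders using a trailing-window z-score. It assumes orders have already been aggregated into daily counts; the function name `flag_spikes`, the 14-day window, the 3-standard-deviation threshold, and the sample data are illustrative assumptions. Production tools typically use richer models that account for seasonality and day-of-week effects.

```python
from statistics import mean, stdev


def flag_spikes(daily_counts, window=14, threshold=3.0):
    """Return indices of days whose count sits more than `threshold`
    standard deviations above the mean of the preceding `window` days.
    Window and threshold are illustrative assumptions, not recommendations."""
    flagged = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]  # trailing baseline only
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat baseline has no spread; treat any rise as unusual.
            if daily_counts[i] > mu:
                flagged.append(i)
            continue
        z_score = (daily_counts[i] - mu) / sigma
        if z_score > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily prescription-order counts for one pharmacy
orders = [40, 42, 38, 41, 39, 43, 40, 41, 39, 42, 40, 38, 41, 40, 95, 41]
print(flag_spikes(orders))  # → [14]
```

Because each day is scored only against the days before it, a spike cannot inflate its own baseline and hide itself.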

These technologies are reshaping how healthcare organizations approach risk, but their use must align with strict regulatory standards.

Regulatory and Standards Requirements

Implementing AI in healthcare risk management requires navigating a maze of regulations:

- HIPAA: Sets the foundation for safeguarding patient health information through its Privacy, Security, and Breach Notification Rules, which call for access controls, encryption where reasonable and appropriate, and timely breach notifications.

- HITECH Act: Expands on HIPAA with stricter enforcement and higher penalties for non-compliance.

- FDA cybersecurity guidance: Focuses on medical devices and health IT systems, emphasizing security considerations during both premarket and postmarket phases.

- NIST frameworks: The NIST Cybersecurity Framework (CSF) and the NIST AI Risk Management Framework (AI RMF) offer structured methodologies for managing cybersecurity and AI risks.

- CMS regulations: Govern risk adjustment processes, including the Hierarchical Condition Category (HCC) models CMS uses to adjust payments [8].

Additionally, healthcare organizations are increasingly establishing internal guidelines for emerging technologies like generative AI (GenAI). For example, 64% of organizations have enacted data privacy rules for GenAI, 55% have implemented transparency measures, 51% have developed ethical guidelines, and 42% have created integration processes [6]. These internal policies help ensure that AI tools strengthen security and compliance without compromising ethical standards.

Evaluating Your Current Risk Management Program

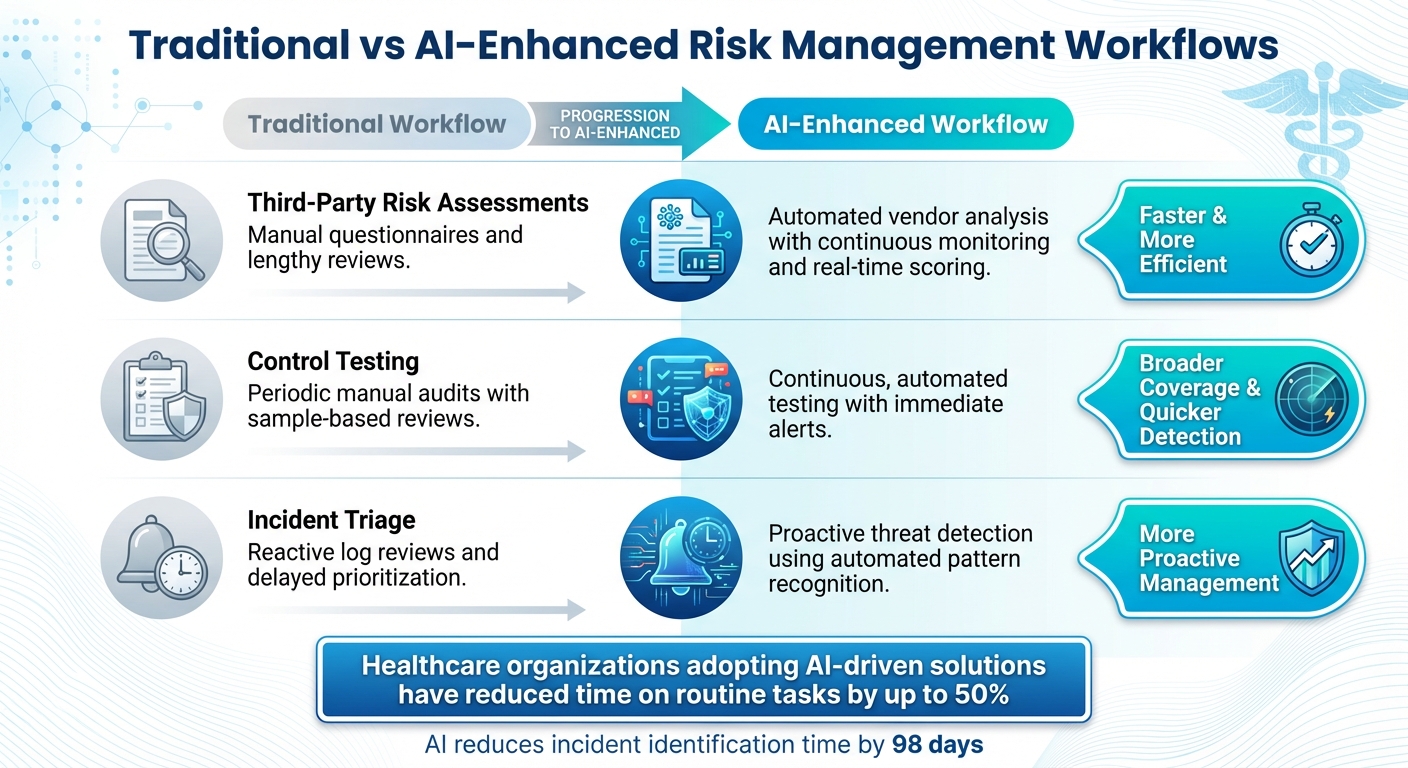

Traditional vs AI-Enhanced Risk Management Workflows in Healthcare

Start by taking a close look at where your risk management efforts currently stand. First, inventory your risk areas and assets - list cybersecurity threats, operational weaknesses, and the systems they affect. Next, map out your existing processes: document how your organization identifies, evaluates, monitors, and addresses risks. Many organizations find they’re still relying on outdated tools like spreadsheets, manual audits, and static reports that can't keep up with today’s rapidly changing threats [2]. This initial review helps pinpoint where AI can make a real difference.

From there, dig into the gaps. Are your current approaches too slow to respond to cybersecurity breaches or financial fraud? Check for weaknesses in governance, especially when it comes to accountability or staying compliant [2]. Data quality is another critical area to examine - poor or biased data can derail any AI-driven solution you try to implement [14].

"It's making sure that everyone understands the AI risk appetite for the organization" [13].

Assessing Risk Program Maturity

Once you’ve identified the gaps, the next step is to evaluate how mature your risk management program is. This helps you figure out how well AI can be integrated into your processes. Whether you’re building a new risk management program from scratch or improving an existing one, a thorough risk assessment is key. Focus on identifying the data and controls that will shape your AI strategy. These insights will guide the development of the necessary safeguards [13].

You’ll also need to assess your organization’s internal AI expertise. Many leadership teams lack the technical knowledge to implement AI effectively, which can create significant risks [12].

"There needs to be collaboration between risk and the business, vertically up and down but then also horizontally across the organization. It is absolutely essential - collaboration across risk departments. The problem is there are silos. Risk and audit are interconnected and interdependent" [12].

Creating an AI Risk Register

Building on your maturity assessment, establish a detailed AI risk register to maintain continuous oversight. This register centralizes all identified risks, outlining their likelihood, impact, controls, ownership, and mitigation strategies [15]. AI can simplify much of this process by analyzing assessments and frameworks, suggesting relevant risks, and calculating exposure levels as conditions evolve.
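A register entry can be sketched as a small data structure that scores inherent exposure as likelihood times impact and discounts it by control effectiveness to estimate residual exposure. This is a minimal sketch: the 1-5 scales, the proportional control adjustment, the `above_appetite` helper, and the sample risks are hypothetical assumptions, not a prescribed scoring method.

```python
from dataclasses import dataclass


@dataclass
class RiskEntry:
    risk_id: str
    description: str
    owner: str
    likelihood: int               # 1 (rare) to 5 (almost certain); assumed scale
    impact: int                   # 1 (minor) to 5 (severe); assumed scale
    control_effectiveness: float  # 0.0 (no effect) to 1.0 (fully mitigates)
    mitigation: str

    def __post_init__(self):
        if not (1 <= self.likelihood <= 5 and 1 <= self.impact <= 5):
            raise ValueError("likelihood and impact must be on a 1-5 scale")
        if not 0.0 <= self.control_effectiveness <= 1.0:
            raise ValueError("control_effectiveness must be between 0 and 1")

    @property
    def inherent_exposure(self) -> int:
        return self.likelihood * self.impact

    @property
    def residual_exposure(self) -> float:
        # Assumption: controls reduce exposure in proportion to their effectiveness.
        return self.inherent_exposure * (1 - self.control_effectiveness)


def above_appetite(register, appetite):
    """Entries whose residual exposure exceeds the risk appetite, worst first."""
    over = [r for r in register if r.residual_exposure > appetite]
    return sorted(over, key=lambda r: r.residual_exposure, reverse=True)


# Hypothetical register entries
register = [
    RiskEntry("R1", "Vendor with PHI access lacks MFA", "CISO", 4, 5, 0.25, "Contractual MFA requirement"),
    RiskEntry("R2", "Sepsis model performance drift", "CMIO", 3, 4, 0.5, "Quarterly revalidation"),
    RiskEntry("R3", "Chatbot discloses PHI", "Privacy Officer", 2, 5, 0.8, "Output filtering"),
]
for r in above_appetite(register, appetite=5):
    print(r.risk_id, r.owner, r.residual_exposure)
```

Recomputing residual exposure whenever a likelihood, impact, or control rating changes is what lets the register reflect conditions as they evolve.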

Catalog how AI is used in your organization - identify use cases, systems, and data flows. Align these elements with the NIST AI Risk Management Framework’s key functions: Govern, Map, Measure, and Manage. This ensures that your AI initiatives not only meet established standards but also reflect your organization’s specific risk tolerance.
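The cataloging step can be sketched as an inventory that records each AI use case with its system and data flows, then reports which of the AI RMF's four core functions (Govern, Map, Measure, Manage) have no documented activity yet. The class, field, and function names and the sample use cases below are hypothetical; the framework itself does not prescribe this data model.

```python
from dataclasses import dataclass, field

# The four core functions of the NIST AI Risk Management Framework
RMF_FUNCTIONS = ("Govern", "Map", "Measure", "Manage")


@dataclass
class AIUseCase:
    name: str
    system: str
    data_flows: list[str]
    # Documented activities keyed by AI RMF function (hypothetical structure)
    activities: dict[str, list[str]] = field(default_factory=dict)


def coverage_gaps(inventory):
    """Map each use case to the AI RMF functions with no documented activity."""
    gaps = {}
    for use_case in inventory:
        missing = [fn for fn in RMF_FUNCTIONS if not use_case.activities.get(fn)]
        if missing:
            gaps[use_case.name] = missing
    return gaps


# Hypothetical inventory entries
inventory = [
    AIUseCase(
        "Sepsis early warning", "EHR-integrated model", ["EHR vitals -> model -> nurse alert"],
        {"Govern": ["committee approval"], "Map": ["clinical context review"],
         "Measure": ["quarterly AUROC check"]},
    ),
    AIUseCase(
        "Vendor questionnaire summarization", "LLM service", ["vendor uploads -> LLM -> analyst"],
        {"Govern": ["acceptable use policy"], "Map": ["data classification"],
         "Measure": ["spot-check accuracy"], "Manage": ["human sign-off"]},
    ),
]
print(coverage_gaps(inventory))
```

Here the sepsis model would surface as lacking any documented Manage activity, which is the kind of gap the register should then assign to an owner.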

"Assess and enhance your risk framework's readiness for generative AI, including acceptable use guidelines and clear accountabilities" [11].

Comparing Current vs. AI-Enhanced Workflows

The benefits of AI in risk management become evident when comparing traditional methods to AI-driven workflows. Here’s how they differ:

| Workflow | Traditional | AI-Enhanced | Improvement |
|---|---|---|---|
| Third-Party Risk Assessments | Manual questionnaires and lengthy reviews | Automated vendor analysis with continuous monitoring and real-time scoring | Faster and more efficient assessments |
| Control Testing | Periodic manual audits with sample-based reviews | Continuous, automated testing with immediate alerts | Broader coverage and quicker detection |
| Incident Triage | Reactive log reviews and delayed prioritization | Proactive threat detection using automated pattern recognition | More proactive incident management |

"Have a candid assessment of what your board's capabilities are… The board needs to apply an appropriate level of governance pressure to someone who's going to oversee the AI landscape, the risk exposure, the disruption, and the opportunity" [12].

Implementing AI for Cybersecurity and Third-Party Risk Management

Once you've evaluated your risk program, the next step is to leverage AI to tackle cyber threats and manage third-party risks. In healthcare, these challenges are especially pressing - protecting sensitive patient data like protected health information (PHI) and keeping a close eye on vendor security are critical. AI takes these time-consuming, manual tasks and turns them into automated, continuous processes that can identify threats faster and evaluate vendors more effectively. Let’s explore how AI is reshaping threat detection and vendor risk management.

AI for Threat Detection and Monitoring

AI tools excel at analyzing massive amounts of network data, user activity, and system logs in real time - something human teams simply can’t do at the same speed or scale. These tools spot patterns that might signal a breach, such as unusual access to PHI systems or suspicious behavior on clinical networks. Using advanced machine learning, they can even predict potential risk areas by combining historical and real-time data. This helps healthcare organizations avoid patient safety issues, regulatory troubles, and unexpected financial costs [5][18].
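As a simple illustration of spotting unusual access to PHI systems, the sketch below compares each user's PHI record accesses today with that user's own history, using a median-based "modified z-score" (the 0.6745 constant and 3.5 cutoff follow Iglewicz and Hoaglin's convention). This is a swapped-in statistical baseline rather than the machine learning models the text describes; the function names, usernames, and counts are hypothetical.

```python
import math
from statistics import median


def robust_z(value, history):
    """Modified z-score based on the median absolute deviation (MAD)."""
    med = median(history)
    mad = median([abs(x - med) for x in history])
    if mad == 0:
        # No spread in the baseline: any departure from the median is extreme.
        return 0.0 if value == med else math.copysign(math.inf, value - med)
    return 0.6745 * (value - med) / mad


def flag_unusual_phi_access(today, baselines, threshold=3.5):
    """today: {user: PHI records accessed today}; baselines: {user: [daily counts]}.
    Hypothetical input format; real feeds would come from audit logs or a SIEM."""
    flagged = []
    for user, count in today.items():
        history = baselines.get(user)
        if not history:
            flagged.append((user, "no baseline"))  # new or unknown account
        elif robust_z(count, history) > threshold:
            flagged.append((user, "volume"))
    return flagged


# Hypothetical per-user history of daily PHI record accesses
baselines = {
    "nurse_a": [20, 22, 19, 21, 20, 23, 18],
    "clerk_b": [5, 7, 4, 6, 5, 8, 3],
}
today = {"nurse_a": 21, "clerk_b": 60, "temp_c": 3}
print(flag_unusual_phi_access(today, baselines))
```

Medians are used instead of means so that a few past spikes in a user's history do not inflate the baseline and mask the next one.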

To ensure AI systems remain secure, it's essential to extend the software development lifecycle (SDLC) to include monitoring inputs, system stress, and potential data leaks [17]. This involves implementing AI-driven threat intelligence processes with built-in safeguards that align with clinical workflows while maintaining constant oversight. The Health Sector Coordinating Council's Cybersecurity Working Group is set to release guidance in 2026, offering practical playbooks for handling AI-related cybersecurity threats [16].

AI-Driven Third-Party Risk Management

Traditional vendor assessments are often slow, and their findings can be outdated before the review is even complete. AI streamlines this process by automating critical tasks - analyzing vendor responses, validating documentation, and keeping tabs on external threat intelligence feeds. AI-enabled platforms can process security questionnaires, summarize evidence, track integration details, and uncover risks from fourth-party relationships much faster than manual approaches.

These platforms also consolidate all vendor risk data into a single, unified view, eliminating silos and offering real-time insights through user-friendly dashboards [5].
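The triage portion of an automated vendor assessment can be sketched as follows: weight each questionnaire answer, give credit only for controls backed by evidence, and surface claims without evidence and fourth parties that handle PHI for human review. This is a generic illustration, not how Censinet or any other platform scores vendors; the weights, scoring rule, class names, and vendor names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Answer:
    control: str
    implemented: bool
    evidence: Optional[str] = None  # e.g. a reference to an uploaded SOC 2 report
    weight: int = 1                 # hypothetical relative importance of the control


@dataclass
class VendorAssessment:
    vendor: str
    answers: list[Answer]
    # Subcontractors disclosed by the vendor, mapped to whether they handle PHI
    fourth_parties: dict[str, bool] = field(default_factory=dict)


def triage(assessment):
    """Return a 0-100 score (share of weight with evidenced controls) and review findings."""
    total = sum(a.weight for a in assessment.answers)
    met = sum(a.weight for a in assessment.answers if a.implemented and a.evidence)
    score = round(100 * met / total) if total else 0
    findings = []
    for a in assessment.answers:
        if not a.implemented:
            findings.append(f"{a.control}: not implemented")
        elif not a.evidence:
            findings.append(f"{a.control}: claimed without evidence")
    findings += [f"fourth party {name} handles PHI"
                 for name, handles_phi in assessment.fourth_parties.items() if handles_phi]
    return score, findings


# Hypothetical vendor submission
assessment = VendorAssessment(
    "ImagingCo",
    [Answer("MFA for remote access", True, "soc2-2024.pdf", weight=3),
     Answer("Encryption at rest", True, None, weight=2),
     Answer("Incident response plan", False)],
    {"CloudHostCo": True, "PrintShop": False},
)
score, findings = triage(assessment)
print(score, findings)
```

Crediting only evidenced controls keeps the score from rewarding unverified claims, while the findings list gives reviewers a short queue instead of the full questionnaire.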

Censinet RiskOps™ and Censinet AI Capabilities

Censinet RiskOps™ is designed specifically to address these healthcare challenges. It combines third-party risk management, enterprise risk assessments, and cybersecurity benchmarking into one integrated platform. With Censinet AI, the entire assessment process becomes faster and more efficient. Vendors can complete questionnaires quickly, while the platform auto-summarizes evidence, captures integration details, identifies fourth-party risks, and generates detailed risk reports.

The platform uses a mix of human oversight and autonomous automation for tasks like evidence validation, policy drafting, and risk mitigation, allowing risk teams to scale their operations without compromising safety. Teams can set up configurable rules and review processes, ensuring automation supports - not replaces - key decision-making. Censinet AI also enhances governance by routing critical AI-related risks and tasks to the appropriate stakeholders. A centralized AI risk dashboard provides real-time updates, serving as the go-to hub for managing policies, risks, and tasks. This ensures that the right teams are always focused on the most pressing issues.
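Configurable routing with a human-review step can be illustrated generically. The sketch below is not Censinet's API or rule syntax; it assumes a hypothetical set of rules, each with a match condition, a list of recipients, and a flag that requires human sign-off, and it sends unmatched findings to a fallback queue. Team names are placeholders.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Finding:
    title: str
    severity: str  # "low", "medium", "high", or "critical"
    involves_ai: bool
    involves_phi: bool


@dataclass
class Rule:
    name: str
    matches: Callable[[Finding], bool]
    recipients: tuple[str, ...]
    needs_human_review: bool


# Hypothetical rule set; team names are placeholders, not a product's defaults.
RULES = [
    Rule("High-severity AI risk",
         lambda f: f.involves_ai and f.severity in ("high", "critical"),
         ("AI Governance Committee", "CISO"), True),
    Rule("PHI exposure", lambda f: f.involves_phi, ("Privacy Officer",), True),
]
FALLBACK = ("Risk Analyst Queue",)


def route(finding, rules=RULES):
    """Collect recipients from every matching rule; use the fallback if none match."""
    recipients, review = [], False
    for rule in rules:
        if rule.matches(finding):
            for person in rule.recipients:
                if person not in recipients:
                    recipients.append(person)
            review = review or rule.needs_human_review
    return (recipients or list(FALLBACK)), review


print(route(Finding("Model trained on PHI without a BAA", "high", True, True)))
print(route(Finding("Expired TLS certificate on intranet", "low", False, False)))
```

Because every matching rule contributes recipients, a finding that is both an AI risk and a PHI exposure reaches both owners, and the review flag keeps automation in a supporting role rather than a deciding one.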


Establishing AI Governance and Oversight

As AI continues to transform industries, having a solid governance framework in place is critical to ensure safety, ethics, and compliance. AI governance refers to the structured frameworks, policies, and processes that guide the ethical and responsible design, development, and use of artificial intelligence [10][19]. Without proper oversight, risks such as algorithmic bias, privacy violations, and errors in AI systems can erode trust and negatively impact healthcare outcomes [10]. Below, we’ll explore how to create an effective governance structure, develop risk policies, and centralize oversight to manage AI responsibly.

Building an AI Governance Structure

The first step is forming a cross-functional AI governance committee. This team should include representatives from key areas like cybersecurity, compliance, clinical leadership, IT, legal, and business operations. Together, they’ll define policies and assign responsibilities for each stage of the AI lifecycle - starting from identifying the problem to deployment and ongoing monitoring.

A well-defined problem statement is essential. The committee must evaluate whether AI is the most appropriate solution, consider alternatives, and ensure AI offers distinct value before proceeding [10]. This approach not only minimizes unnecessary risks but also ensures resources are allocated effectively.

Developing AI Risk Policies

AI risk policies should prioritize transparency, safety, fairness, and compliance with relevant regulations [20]. For instance, the European Union’s Artificial Intelligence Act (AI Act), which entered into force in August 2024 and phases in its requirements from 2025 onward, imposes mandatory governance obligations on organizations that develop or deploy high-risk AI systems, a category that covers many healthcare applications [20].

Effective policies should outline acceptable AI use cases, implement strict data access controls, and establish robust model validation procedures to confirm that AI systems function as intended. Applying the HHS Office of Inspector General’s seven elements of an effective compliance program (including written policies and procedures, auditing and monitoring, and prompt response to detected problems) to AI use can further strengthen these policies.
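A model validation procedure can include an automated gate that a model must pass before approval. The sketch below checks one possible pair of criteria: overall sensitivity on held-out data, and the sensitivity gap between patient subgroups as a simple fairness signal. The metric choice, the 0.8 and 0.1 thresholds, the two-group split, and the data are hypothetical assumptions; a governance committee would choose its own metrics and use far larger validation sets.

```python
import math


def sensitivity(y_true, y_pred):
    """True positive rate; NaN when there are no positive cases."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp / (tp + fn) if tp + fn else math.nan


def validation_gate(y_true, y_pred, groups, min_sensitivity=0.8, max_gap=0.1):
    """Check overall sensitivity and the largest gap between subgroups.
    Thresholds are illustrative assumptions, not clinical standards."""
    overall = sensitivity(y_true, y_pred)
    by_group = {}
    for g in sorted(set(groups)):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        by_group[g] = sensitivity([y_true[i] for i in idx], [y_pred[i] for i in idx])
    scored = [s for s in by_group.values() if not math.isnan(s)]
    gap = max(scored) - min(scored) if scored else 0.0
    passed = (not math.isnan(overall)) and overall >= min_sensitivity and gap <= max_gap
    return {"overall": overall, "by_group": by_group, "gap": gap, "passed": passed}


# Hypothetical held-out validation labels (1 = condition present)
y_true = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5 + ["A", "B"]
print(validation_gate(y_true, y_pred, groups))
```

In this example the model meets the overall threshold but fails the gate because it misses far more positive cases in group B, which is exactly the kind of bias an aggregate metric can hide.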

Centralizing Oversight with Censinet RiskOps™

Fragmented governance across different teams can lead to oversight gaps. This is where a platform like Censinet RiskOps™ becomes invaluable. Acting as a central hub, it routes AI-related risks and tasks to the appropriate stakeholders, including members of the AI governance committee.

The platform provides a centralized dashboard that consolidates real-time data, tracks the ownership, purpose, status, and version history of AI models, and ensures risks are addressed promptly. By centralizing oversight, healthcare organizations can maintain accountability, streamline AI monitoring, and ensure governance processes keep up with rapid advancements in AI technology.

Continuous Monitoring and Improvement with AI

Once you've established an AI governance framework, the next step is ensuring it stays relevant and effective. Continuous monitoring allows healthcare organizations to respond quickly to new risks and make informed adjustments. Unlike traditional assessments done at intervals, AI enables real-time detection of threats, integration of insights into decisions, and performance comparisons with industry standards.

Real-Time Risk Monitoring

AI-powered systems can detect threats far faster than manual methods, which is crucial in a landscape where 92% of healthcare organizations faced cyberattacks in 2024[1]. The ability to respond in real time can make all the difference.

Continuous monitoring should extend beyond internal systems. It’s essential to keep an eye on vendor security practices, unusual data flow patterns at integration points, and unexpected access to Protected Health Information (PHI). Deploy tools like Security Information and Event Management (SIEM) and Security Orchestration, Automation, and Response (SOAR) to monitor network activity, detect signs of system stress, and flag potential data breaches[17].

AI systems themselves also need protection. To defend medical AI systems against adversarial attacks such as data poisoning, implement data provenance controls, verify data sources cryptographically, and retrain models only on verified datasets. Ongoing monitoring of these systems also helps surface issues like model drift and bias[1].
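One way to verify data sources cryptographically is a signed manifest: record a SHA-256 digest for each approved training file, protect the manifest with an HMAC, and refuse to retrain if the manifest or any file has changed. This is a minimal sketch using only Python's standard library; key management is out of scope, the file names and key are hypothetical, and organizations that need others to verify without being able to sign would use asymmetric digital signatures instead of a shared HMAC key.

```python
import hashlib
import hmac
import json
import tempfile
from pathlib import Path


def sha256_file(path):
    """Stream a file through SHA-256 so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def _sign(entries, key):
    # Canonical JSON (sorted keys) so the same entries always produce the same MAC.
    payload = json.dumps(entries, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def build_manifest(paths, key):
    entries = {str(p): sha256_file(p) for p in paths}
    return {"entries": entries, "signature": _sign(entries, key)}


def verify_manifest(manifest, key):
    """Raise if the manifest was altered; otherwise return files that changed or vanished."""
    if not hmac.compare_digest(_sign(manifest["entries"], key), manifest["signature"]):
        raise ValueError("manifest signature mismatch")
    return [p for p, expected in manifest["entries"].items()
            if not Path(p).is_file() or sha256_file(p) != expected]


# Demo with a hypothetical dataset and key (load real keys from a secrets manager)
key = b"example-key-for-illustration-only"
with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "training_labs.csv"
    data_file.write_text("patient_id,lactate\n1,1.8\n")
    manifest = build_manifest([data_file], key)
    print(verify_manifest(manifest, key))  # → []
    data_file.write_text("patient_id,lactate\n1,9.9\n")  # simulated tampering
    print(verify_manifest(manifest, key) == [str(data_file)])  # → True
```

A retraining pipeline would call `verify_manifest` first and stop on any exception or non-empty result, so only the approved, unmodified data reaches the model.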

Integrating AI Insights into Risk Reporting

AI analytics are reshaping how risk information is presented to decision-makers. Real-time, centralized dashboards provide leadership with actionable insights at a glance[5]. Predictive analytics, powered by specialized healthcare machine learning models, can forecast risks based on historical and current data. This helps avoid compliance issues and financial surprises[5].

"AI-driven analytics and modern technology are redefining what's possible in healthcare risk management. These tools go beyond addressing traditional pain points - they empower hospital leaders to proactively identify, mitigate, and monitor risk in real time." – Health Catalyst[5]

Incorporate AI-generated Key Risk Indicators (KRIs) into your dashboards and reports. These metrics highlight potential obstacles to achieving organizational goals, delivering actionable intelligence rather than just raw data[3].
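Turning KRIs into dashboard signals usually means comparing each indicator against agreed thresholds. The sketch below assigns a green, amber, or red status and handles indicators where a lower value is worse. The KRI names, values, and thresholds are hypothetical examples, not recommended targets.

```python
from dataclasses import dataclass


@dataclass
class KRI:
    name: str
    value: float
    amber: float  # threshold where the indicator needs attention
    red: float    # threshold where escalation is required
    higher_is_worse: bool = True

    def status(self) -> str:
        # Flip signs for "lower is worse" indicators so one comparison works for both.
        sign = 1 if self.higher_is_worse else -1
        v, a, r = sign * self.value, sign * self.amber, sign * self.red
        if v >= r:
            return "red"
        if v >= a:
            return "amber"
        return "green"


# Hypothetical KRIs with illustrative thresholds
kris = [
    KRI("Critical vendors overdue for reassessment (%)", 12, amber=5, red=10),
    KRI("Median days to patch critical vulnerabilities", 9, amber=14, red=30),
    KRI("AI models with current validation (%)", 85, amber=90, red=75, higher_is_worse=False),
]
for k in kris:
    print(f"{k.status():>5}  {k.name}: {k.value}")
```

Reporting the status alongside the raw value is what turns a metric into the kind of actionable signal the text describes.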

Scenario Analysis and Benchmarking

Beyond monitoring, AI enables scenario analysis, allowing organizations to test and refine risk models. With AI, healthcare providers can simulate potential risks, conduct stress tests, and map out how hazards could lead to harm. This approach quantifies both the likelihood and severity of risks, making it easier to improve risk models over time[21].
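Quantifying both likelihood and severity can be sketched with a Monte Carlo simulation: each scenario gets an assumed annual probability and a triangular loss distribution, and many simulated years yield an expected annual loss and a tail estimate. The scenarios and dollar figures below are hypothetical, and the model simplifies by treating scenarios as independent and allowing each to occur at most once per year.

```python
import random
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class Scenario:
    name: str
    annual_probability: float  # assumed chance the event occurs in a given year
    loss_low: float            # triangular loss distribution parameters (USD)
    loss_mode: float
    loss_high: float


def simulate(scenarios, trials=10_000, seed=7):
    """Monte Carlo estimate of annual loss across independent scenarios."""
    rng = random.Random(seed)  # seeded so results are reproducible
    totals = []
    for _ in range(trials):
        total = 0.0
        for s in scenarios:
            if rng.random() < s.annual_probability:
                total += rng.triangular(s.loss_low, s.loss_high, s.loss_mode)
        totals.append(total)
    return {
        "expected_annual_loss": sum(totals) / trials,
        "p95_annual_loss": quantiles(totals, n=20)[-1],  # 95th percentile cut point
        "prob_any_loss": sum(t > 0 for t in totals) / trials,
    }


# Hypothetical scenarios for illustration only
scenarios = [
    Scenario("Ransomware on EHR", 0.10, 1e6, 4e6, 12e6),
    Scenario("Vendor PHI breach", 0.25, 2e5, 8e5, 3e6),
]
print(simulate(scenarios))
```

Stress tests then become a matter of changing inputs, for example raising a scenario's probability or loss range and comparing the resulting expected and 95th-percentile losses.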

Benchmarking is another powerful tool. By comparing performance with industry peers, organizations can set clear targets for improvement. For example, AI-powered solutions have helped reduce patient wait times by 22%, improve early cancer detection rates by 18%, close readmission rate gaps by 5%, and cut post-operative infection rates by 30%[22]. Platforms like Censinet RiskOps™ aggregate real-time risk data across healthcare organizations, making it easier to assess and enhance cybersecurity readiness.
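A basic benchmarking calculation is a percentile rank: the share of peers an organization outperforms on a given measure. The sketch below counts ties as half and supports measures where lower is better, such as infection rates. The function name and peer data are hypothetical; real peer data would come from a benchmarking program.

```python
from bisect import bisect_left, bisect_right


def percentile_rank(score, peer_scores, higher_is_better=True):
    """Percent of peers this organization outperforms, counting ties as half."""
    if not peer_scores:
        raise ValueError("need at least one peer score")
    ordered = sorted(peer_scores)
    n_below = bisect_left(ordered, score)
    n_equal = bisect_right(ordered, score) - n_below
    n_above = len(ordered) - n_below - n_equal
    better_than = n_below if higher_is_better else n_above
    return 100.0 * (better_than + 0.5 * n_equal) / len(ordered)


# Hypothetical peer cybersecurity-readiness scores (higher is better)
peers = [55, 60, 62, 70, 72, 75, 80, 85]
print(percentile_rank(70, peers))  # → 43.75

# Hypothetical post-operative infection rates per 100 procedures (lower is better)
rates = [1.2, 2.0, 2.4, 3.1, 3.5]
print(percentile_rank(2.0, rates, higher_is_better=False))  # → 70.0
```

Tracking this rank over time shows whether improvement targets are moving an organization relative to its peers, not just in absolute terms.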

To stay ahead, maintain a detailed inventory of all AI systems, including their functions, data dependencies, and security implications[16]. Use methods like Failure Mode and Effects Analysis (FMEA) to test AI processes for safety[23]. Additionally, align your AI governance practices with regulations like HIPAA and FDA requirements to ensure compliance[16]. These steps ensure your AI-driven risk management strategies adapt to new challenges and align with industry standards.
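In a conventional FMEA, each failure mode is rated 1-10 for severity, occurrence, and detection, and the product of the three ratings (the Risk Priority Number, or RPN) helps rank where to act. The sketch below applies that to hypothetical steps in an AI-assisted clinical workflow. The RPN threshold of 100, the rule that severity 9 or above always needs action, and the sample failure modes are illustrative assumptions; teams set their own criteria.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    step: str
    failure: str
    severity: int    # 1-10: harm to patients or operations if it occurs
    occurrence: int  # 1-10: how often it is expected to occur
    detection: int   # 1-10: 10 means least likely to be caught before harm

    def __post_init__(self):
        for rating in (self.severity, self.occurrence, self.detection):
            if not 1 <= rating <= 10:
                raise ValueError("FMEA ratings must be between 1 and 10")

    @property
    def rpn(self) -> int:
        # Risk Priority Number: severity x occurrence x detection
        return self.severity * self.occurrence * self.detection


def needs_action(modes, rpn_threshold=100, severity_floor=9):
    """Failure modes to address, highest RPN first. Hypothetical policy:
    high-severity modes are included regardless of RPN."""
    selected = [m for m in modes if m.rpn >= rpn_threshold or m.severity >= severity_floor]
    return sorted(selected, key=lambda m: m.rpn, reverse=True)


# Hypothetical failure modes for an AI-assisted sepsis alert
modes = [
    FailureMode("Data ingestion", "Lab values arrive in the wrong units", 8, 4, 6),
    FailureMode("Model inference", "Alert silently suppressed during downtime", 9, 2, 3),
    FailureMode("Display", "Risk score truncated in the EHR banner", 4, 3, 2),
]
for m in needs_action(modes):
    print(m.rpn, m.step, "-", m.failure)
```

The severity override reflects a common criticism of RPN alone: a rare but catastrophic failure can score lower than a frequent nuisance, so teams often flag high-severity modes separately.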

Conclusion: The Future of Risk Management with AI

AI is reshaping risk management by processing massive amounts of data at speeds no human could match, uncovering patterns, and offering predictive insights that enable proactive decision-making. Traditional methods simply can't keep up with the fast-evolving threat landscape: fewer than 20% of enterprise risk owners meet expectations for mitigating risks[2].

Take healthcare organizations as an example - those adopting AI-driven risk management solutions are already seeing tangible results. Automated processes have slashed time spent on routine tasks by as much as 50%. Frameworks that once took months to implement can now be deployed in just seven days[24][12]. Real-time detection and response capabilities are becoming indispensable in combating emerging cyber threats.

These efficiencies are paving the way for unified platforms that streamline risk oversight. Censinet RiskOps™ is one such solution, consolidating risk data by connecting insights across departments and vendors, effectively breaking down silos[25]. With the added power of Censinet AI, healthcare organizations can complete security questionnaires in seconds, automatically generate summaries of vendor evidence, and direct critical findings to the right stakeholders - scaling operations while maintaining human oversight[12].

However, automation alone isn't enough. A strong governance framework is crucial to ensure long-term success. This includes establishing AI ethics guidelines, implementing robust data standards, and defining clear governance structures[12]. Start small with high-quality data sources, even if limited, and expand as capabilities grow. By allowing AI to handle data-heavy tasks, risk professionals can dedicate their time to strategic priorities[2]. The organizations that marry AI's analytical power with human expertise will be best equipped to navigate the increasingly intricate risk landscape of the future.

FAQs

How does AI enhance threat detection and response in healthcare risk management?

AI plays a crucial role in improving threat detection and response within healthcare risk management. By processing massive amounts of data in real time, it can pinpoint unusual patterns or anomalies that might otherwise go unnoticed. This means potential risks can be identified early - before they develop into serious problems.

With automated alerts and the ability to prioritize threats, AI helps healthcare organizations act quickly and respond to incidents more effectively. This proactive method not only reduces risks but also safeguards sensitive information and ensures compliance with strict industry regulations.

What are the main regulatory hurdles when using AI in healthcare risk management?

Integrating AI into healthcare risk management presents several hurdles on the regulatory front. First and foremost, compliance with HIPAA is essential to safeguard patient data privacy and security. Another pressing issue is tackling algorithmic bias, which can lead to unfair or inaccurate outcomes if left unchecked. Ensuring transparency in how AI systems make decisions is equally vital, as it helps build trust and accountability.

Organizations also face the challenge of keeping up with shifting federal and state laws, all while ensuring there's clear accountability for any errors AI systems might cause. On top of this, maintaining ethical standards that align with healthcare regulations is critical - not only for fostering trust but also for prioritizing patient safety at every step.

What steps can healthcare organizations take to use AI ethically and stay compliant in risk management?

Healthcare organizations can encourage responsible AI use in risk management by implementing well-defined policies and setting up oversight mechanisms like model registries and approval workflows. Adding human-in-the-loop controls and performing frequent risk assessments are crucial steps to ensure systems remain accountable and reliable.

Following established industry guidelines such as the NIST AI Risk Management Framework (AI RMF) and complying with data privacy regulations further strengthens compliance efforts. To build trust and minimize risks, organizations should focus on transparency, proactively tackle biases, and consistently evaluate AI systems for fairness and accuracy.