AI Algorithm Transparency in Healthcare: Vendor Selection and Risk Assessment Criteria

Post Summary

AI transparency in healthcare is more than a buzzword - it's about understanding how AI systems make decisions, ensuring patient safety, and managing risks. Here's what you need to know:

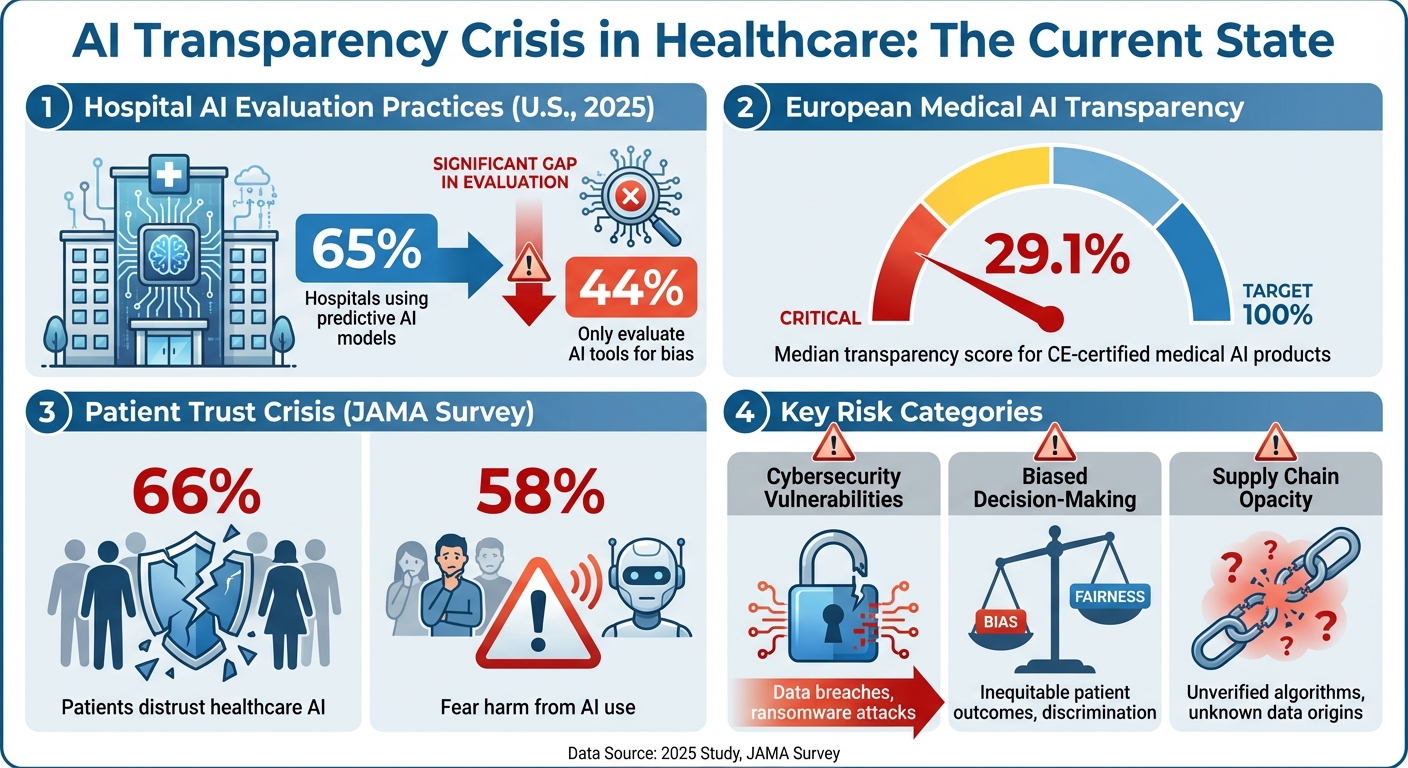

- Transparency is lacking: A 2025 study found that while 65% of U.S. hospitals use predictive models, only 44% evaluate AI tools for bias. In Europe, CE-certified medical AI products had a median transparency score of just 29.1%.

- Patient trust is low: In a survey published in JAMA, 66% of patients reported distrust of healthcare AI, and 58% feared harm from its use.

- Key risks include: Cybersecurity vulnerabilities, biased decision-making, and supply chain issues due to opaque vendor practices.

To address these challenges, healthcare organizations should prioritize vendors with:

- Explainable AI (XAI): Clear, interpretable outputs.

- Bias mitigation: Documented efforts to detect and reduce bias.

- Regulatory compliance: Audit readiness and adherence to laws like HIPAA and GDPR.

- Human oversight: Systems that allow clinicians to review and approve decisions.

- Risk management tools: Integration with platforms like Censinet RiskOps™ for ongoing monitoring.

The focus should be on structured evaluation frameworks, transparency metrics, and continuous oversight to ensure safe, reliable, and ethical AI use in healthcare.

AI Transparency Crisis in Healthcare: Key Statistics and Patient Trust Data

Risks of Non-Transparent AI Algorithms in Healthcare

Cybersecurity Vulnerabilities and Data Breaches

Transparency plays a crucial role in identifying and addressing cybersecurity risks in AI systems. When AI algorithms lack openness, healthcare organizations face heightened vulnerabilities. Without clear insights into how these algorithms work or the data they were trained on, it becomes nearly impossible to spot weaknesses before implementation. This lack of transparency opens the door to threats like data breaches, model inversion, and adversarial attacks [2].

The use of advanced AI tools often increases the potential attack surface, making it harder to track how sensitive data is managed and stored. This becomes especially alarming when vendors utilize Protected Health Information (PHI) for model training without explicit contractual agreements, such as an Information Technology Service Addendum (ITSA) [2]. Such practices not only risk violating HIPAA regulations but also expose organizations to penalties and erode patient trust.

To reduce these risks, vendors should protect data with cryptographic modules validated under FIPS 140-3, which supports both data security and HIPAA's encryption safeguards [2]. However, cloud-based AI platforms introduce additional challenges. Without proper oversight, these systems may inadvertently violate HIPAA or data sovereignty laws and create opportunities for unauthorized access [3]. Moreover, the lack of transparency regarding training data can lead to inaccuracies, jeopardizing both data security and clinical outcomes [2].

These cybersecurity weaknesses not only endanger sensitive information but also undermine the reliability of AI-driven clinical decisions - an issue explored further below.

Bias and Errors in Clinical Decision-Making

When clinicians can’t understand how an AI system arrives at its conclusions, they lose the ability to identify errors, which can directly harm patients [2]. Generative AI models, in particular, are prone to producing misleading or entirely false outputs - often referred to as hallucinations - that may appear credible but are fundamentally incorrect [2][1].

The situation worsens when the training data itself is flawed. If datasets are biased or of poor quality, the AI can perpetuate discriminatory patterns that disproportionately affect certain patient groups. Non-transparent systems make these biases difficult to detect until harm has already occurred. Without visibility into the decision-making process, it's impossible to determine whether an AI recommendation is grounded in sound clinical evidence or in flawed assumptions. That uncertainty undermines the quality of care and puts patient safety at serious risk.

But the risks don’t stop there. The lack of transparency also amplifies concerns within the vendor supply chain.

Third-Party and Supply Chain Risks

AI algorithms provided by third-party vendors bring unique challenges that traditional Third-Party Risk Management (TPRM) frameworks are ill-equipped to handle. Without full disclosure from vendors, healthcare organizations can’t verify how AI systems are being used, how sensitive data is secured, or whether it’s being misused [4].

This issue extends beyond individual vendors to the broader supply chain. When vendors fail to provide clear information about their AI practices, organizations are left in the dark about whether patient data is being shared with downstream partners, used to train external models, or exposed to other risks. Traditional TPRM strategies often overlook these complexities, leaving significant gaps in an organization’s ability to manage and mitigate risks tied to opaque AI models [4].

Vendor Selection Criteria for Transparent AI Algorithms

When assessing AI vendors, it's essential to dig into their design, training, and deployment practices. The goal? To ensure transparency, safeguard patient safety, and minimize liability. Here's what to look for:

Explainable AI (XAI) Capabilities

AI models should come with clear, understandable explanations of how they work. Whether it's through visual tools, decision trees, or plain-language summaries, vendors need to show how their models arrive at conclusions. This level of interpretability is key to understanding the factors driving specific outputs. Without it, verifying the clinical soundness of recommendations becomes a challenge.
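To make the idea concrete, here is a minimal Python sketch of one kind of explanation a vendor might surface: a per-feature breakdown of a linear (logistic) risk score, ranked by how strongly each factor pushed the prediction. The feature names, weights, and intercept are hypothetical. This exact additive breakdown only holds for linear models; for more complex models, vendors typically rely on attribution methods such as SHAP, which this sketch does not implement.

```python
import math

# Hypothetical logistic risk model: feature names, weights, and intercept are illustrative only.
WEIGHTS = {"age_over_65": 0.8, "prior_admissions": 0.5, "hba1c_above_9": 1.1}
INTERCEPT = -2.0


def explain(patient: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the predicted probability and each feature's contribution to the log-odds."""
    # For a linear model, each feature contributes weight * value to the log-odds.
    contributions = {name: w * patient.get(name, 0.0) for name, w in WEIGHTS.items()}
    logit = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Rank features by absolute contribution so the strongest drivers are listed first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked
```

For a patient with `{"age_over_65": 1, "prior_admissions": 2, "hba1c_above_9": 0}`, the sketch returns a probability of about 0.45 and lists prior admissions as the largest driver, which is the kind of output a clinician can sanity-check against the chart.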

Bias Detection and Mitigation Strategies

Bias can sneak into an algorithm at any stage - starting from the initial concept and data collection to development, validation, and even during ongoing monitoring [5]. Vendors should provide proof of their efforts to detect and reduce bias throughout the AI lifecycle. This includes documented training datasets and results from fairness testing. A solid bias detection framework is non-negotiable [5].
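Fairness testing results are easier to evaluate when you know what was measured. As one illustrative check (not necessarily the method described in [5]), the sketch below compares true positive rates, or sensitivity, across patient subgroups, a criterion often called equal opportunity. The labels, predictions, and group names are hypothetical.

```python
from collections import defaultdict


def tpr_by_group(y_true, y_pred, groups):
    """True positive rate (sensitivity) per subgroup; groups with no actual positives are skipped."""
    positives = defaultdict(int)
    hits = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                hits[group] += 1
    return {g: hits[g] / positives[g] for g in positives}


def equal_opportunity_gap(y_true, y_pred, groups):
    """Largest difference in true positive rate between any two subgroups."""
    rates = tpr_by_group(y_true, y_pred, groups)
    if not rates:
        raise ValueError("no positive cases to evaluate")
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical validation results for two patient subgroups.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
groups = ["A"] * 5 + ["B"] * 5
```

With this example data, the model detects 75% of true cases in group A but only 50% in group B, a gap of 0.25. An organization might set a tolerance (for example, 0.1) above which the vendor must explain or remediate the disparity; that threshold is an assumption, not a standard.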

Regulatory Compliance and Audit Readiness

Compliance with applicable regulations, such as FDA requirements for Software as a Medical Device (SaMD), HIPAA, and the EU AI Act (for products used in the EU), is a must. Vendors should offer complete audit trails covering data lineage, model updates, and decision logs. This helps demonstrate audit readiness and compliance with the relevant standards [6].
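One way a vendor can make decision logs tamper-evident is to hash-chain them: each entry stores the hash of the entry before it, so editing any past record causes verification to fail. The sketch below assumes a simple in-memory log with illustrative field names and records a reference to the input rather than raw PHI. A production system would also need durable storage, access controls, and retention policies.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class DecisionLog:
    """Append-only decision log in which each entry is chained to the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash every field except the stored hash itself, with sorted keys for determinism.
        body = {k: v for k, v in record.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, model_version: str, input_ref: str, output: str,
               reviewer: Optional[str] = None) -> dict:
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "input_ref": input_ref,  # pointer to the input record, not raw PHI
            "output": output,
            "reviewer": reviewer,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute the chain; return False if any entry was altered or reordered."""
        prev_hash = GENESIS_HASH
        for record in self.entries:
            if record["prev_hash"] != prev_hash or record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True
```

An auditor who recomputes the chain can confirm that no logged decision was silently changed after the fact, which is the property a "complete audit trail" is meant to provide.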

Human-in-the-Loop Oversight

AI systems should allow for configurable human review. This means clinicians or other stakeholders can step in to review and approve outputs, especially for high-risk decisions. Having escalation protocols in place ensures that human expertise remains central to the decision-making process.
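Configurable review can be as simple as a routing rule that escalates certain outputs to a clinician. The sketch below is a hypothetical policy, not any vendor's actual logic: outputs in designated high-risk categories always go to clinician review, and any other output below a confidence threshold is escalated as well. The category names and the 0.85 threshold are placeholders that a clinical governance committee would set.

```python
from dataclasses import dataclass


@dataclass
class ReviewPolicy:
    """Hypothetical, configurable escalation settings."""
    min_confidence: float = 0.85
    high_risk_categories: frozenset[str] = frozenset({"oncology", "sepsis", "medication_dosing"})


def route(category: str, confidence: float, policy: ReviewPolicy) -> str:
    """Return 'clinician_review' when a human must approve the output, else 'standard_workflow'."""
    if category in policy.high_risk_categories:
        return "clinician_review"  # high-risk decisions always escalate
    if confidence < policy.min_confidence:
        return "clinician_review"  # low-confidence outputs escalate
    return "standard_workflow"
```

Keeping the thresholds in a policy object, rather than hard-coding them, is what makes the oversight "configurable": governance teams can tighten the rules for a new deployment without changing the model.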

Risk Management Platform Integration

To streamline oversight and reduce administrative headaches, vendors should integrate with risk management platforms like Censinet RiskOps™. Such integration helps centralize monitoring, track compliance, and improve overall oversight by breaking down operational silos.

Risk Assessment Frameworks for AI Vendors

Once you've established vendor criteria, the next step is to implement a structured framework to evaluate transparency, monitor performance, and adapt to the ever-changing risks associated with AI. For healthcare organizations, having a repeatable system that spans from initial vetting to ongoing oversight is critical. This involves capturing transparency metrics, tracking vendor performance over time, and staying responsive to the dynamic nature of AI systems.

Transparency Metrics and Scoring

Transparency metrics turn subjective impressions into measurable assessments. Built on the vendor selection criteria, they let you compare vendors on consistent, objective terms.

One effective framework identifies 12 risk domains, with three being especially critical for healthcare: algorithm explainability, data handling practices, and bias mitigation. Other domains include regulatory readiness and incident response protocols [2].

Creating a scoring system can standardize vendor comparisons. Assigning weighted scores to each domain based on your organization's priorities can make evaluations more relevant. For instance, clinical decision support tools might place greater emphasis on explainability and audit readiness, while administrative AI tools could prioritize other factors. Key metrics to track might include:

- Completeness of algorithm documentation

- Availability of validation studies using independent datasets

- Frequency of bias audits

- Turnaround time for addressing security incidents
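A weighted score takes only a few lines to compute. This Python sketch assumes each domain is rated on a 0 to 5 scale and uses hypothetical weights for a clinical decision support tool that emphasize explainability and audit readiness. The domain names, weights, and vendor ratings are illustrative and are not taken from the framework cited in [2].

```python
# Hypothetical domain weights for a clinical decision support tool (they sum to 1.0).
CDS_WEIGHTS = {
    "explainability": 0.30,
    "audit_readiness": 0.25,
    "bias_mitigation": 0.20,
    "data_handling": 0.15,
    "incident_response": 0.10,
}


def weighted_score(domain_scores: dict[str, float], weights: dict[str, float]) -> float:
    """Combine 0-5 domain ratings into one 0-5 score; unrated domains count as 0."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(w * domain_scores.get(d, 0.0) for d, w in weights.items()) / total_weight


# Hypothetical ratings from a reviewer's assessment of one vendor.
vendor_a = {
    "explainability": 4,
    "audit_readiness": 3,
    "bias_mitigation": 5,
    "data_handling": 4,
    "incident_response": 2,
}
```

With these ratings, vendor A scores roughly 3.75 out of 5. Scoring the same ratings against a different weight set, such as one for an administrative AI tool, shows how organizational priorities shift the ranking without re-rating any vendor.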

This quantitative approach reduces guesswork and provides a consistent baseline for both initial vendor selection and ongoing evaluations.

"If the documentation doesn't prove the decision, CMS assumes the decision cannot be trusted." – Mizzeto [9]

This audit philosophy highlights why scoring documentation quality is essential. Vendors should provide detailed evidence, including algorithm design specifics, performance reports, fairness audit results, data governance policies, regulatory compliance certifications, and drift management protocols [2]. The more comprehensive and accessible this documentation, the better equipped your organization will be to validate vendor claims and mitigate risks throughout the AI lifecycle.
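The "completeness of algorithm documentation" metric can start as a simple checklist against the evidence categories listed above. This sketch assumes submitted evidence is tracked as a set of category labels (the labels are hypothetical). It only checks that each artifact was provided, not whether its contents are adequate, which still requires reviewer judgment.

```python
# Evidence categories from the text; the identifiers themselves are illustrative.
REQUIRED_EVIDENCE = (
    "algorithm_design",
    "performance_reports",
    "fairness_audit_results",
    "data_governance_policies",
    "compliance_certifications",
    "drift_management_protocols",
)


def documentation_completeness(submitted: set[str]) -> tuple[float, list[str]]:
    """Return the share of required evidence received and the items still missing."""
    missing = [item for item in REQUIRED_EVIDENCE if item not in submitted]
    completeness = 1 - len(missing) / len(REQUIRED_EVIDENCE)
    return completeness, missing
```

A vendor that submits only the algorithm design, performance reports, and data governance policies would score 0.5, and the returned list of missing items doubles as the follow-up request to send back.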

Continuous Monitoring and Evidence Collection

Initial scoring is only the beginning - continuous oversight is vital to address risks as they evolve.

AI models operate in dynamic environments where data patterns can shift over time. Without regular monitoring, this can lead to outdated or biased predictions [7]. Continuous oversight transforms vendor evaluation from a one-time task into an ongoing process that tracks performance and risk [8].
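Drift monitoring needs a concrete signal. One widely used option, shown here as an assumption rather than a method prescribed by [7] or [8], is the population stability index (PSI), which compares the distribution of a model input or output score in a recent period against a baseline. The sketch takes pre-binned proportions; the bins and example numbers are hypothetical.

```python
import math


def population_stability_index(expected: list[float], actual: list[float],
                               eps: float = 1e-6) -> float:
    """PSI between baseline (expected) and recent (actual) bin proportions."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical share of patients in each risk-score bin at validation vs. last quarter.
baseline = [0.25, 0.25, 0.25, 0.25]
current = [0.10, 0.20, 0.30, 0.40]
```

A common rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift worth investigating. With the example numbers the index is about 0.23, which would warrant a closer look. These cutoffs are industry conventions, not regulatory thresholds.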

Platforms like Censinet AITM streamline this process by automating risk assessments. Vendors can complete security questionnaires in seconds, while the system automatically compiles evidence, summarizes documentation, and tracks product integration details and fourth-party risks. Risk summary reports are then generated from all relevant data, ensuring consistency and reducing human bias. This automation accelerates workflows, making it easier to manage large-scale AI vendor portfolios efficiently [7].

However, automation doesn't replace human judgment. Tools like Censinet RiskOps act as centralized hubs for managing AI-related policies, risks, and tasks. They route critical findings to designated stakeholders for review and approval, while real-time dashboards provide a clear view of emerging issues. This human-in-the-loop approach ensures that critical decisions remain in the hands of experts, while also enabling the scale needed to manage multiple vendors effectively.


Implementing AI Transparency with Censinet RiskOps™

Healthcare organizations face the challenge of managing multiple AI vendors, each with unique documentation and risk profiles. Without the right tools, these tasks can overwhelm internal processes. That's where Censinet RiskOps™ comes in, offering a centralized platform to simplify and streamline AI transparency efforts.

By building on the criteria covered earlier, Censinet RiskOps™ turns transparency into actionable steps through integrated risk assessments.

Streamlined Vendor Risk Assessments

Vendor evaluations can be time-consuming, but Censinet RiskOps™ changes that. The platform leverages Censinet AITM to automate the entire vendor risk assessment process: it collects critical evidence, organizes integration details, identifies third-party exposures, and generates detailed risk summary reports. This automation speeds up assessments and supports compliance with regulatory standards. The result? Healthcare organizations can identify and address risks faster.

AI Governance and Oversight Dashboards

Censinet RiskOps™ acts as a central hub for managing AI-related policies, risks, and tasks. Its intuitive dashboard provides real-time data, giving stakeholders - from risk managers to AI governance committees - a clear view of key findings and tasks. By routing updates and responsibilities to the right people, the platform ensures timely oversight and accountability. This approach aligns with the broader goal of integrating AI cybersecurity and vendor risk management into a cohesive framework.

Human-Guided Automation for Risk Management

While automation is a core feature, Censinet RiskOps™ emphasizes the importance of human expertise. Its human-in-the-loop design ensures that automation supports, rather than replaces, critical decision-making. Automated processes handle tasks like evidence validation, policy creation, and risk mitigation, while risk teams maintain control through configurable rules and review stages. This balance allows healthcare leaders to scale their operations, manage complex risks, and protect patient safety - all with greater speed and accuracy.

Conclusion and Key Takeaways

The discussion above highlights the critical role of AI algorithm transparency in healthcare, emphasizing its importance for both patient safety and trust. As AI becomes a more integral part of clinical workflows, healthcare organizations need to prioritize vendors that demonstrate explainability, bias reduction, and adherence to regulatory standards. Without these elements in place, organizations risk exposing themselves to cybersecurity threats, clinical errors, and compliance failures - all of which can jeopardize patient care.

To address these challenges, adopting structured frameworks for vendor evaluation is key. By concentrating on explainable AI capabilities, audit readiness, and human-in-the-loop oversight, healthcare leaders can select vendors that meet regulatory demands and align with their organizational values. These criteria not only make transparency more actionable but also help reduce risks related to cybersecurity, clinical operations, and compliance.

Censinet RiskOps™ offers a practical application of these frameworks. The platform streamlines AI risk management by combining automated assessments with human oversight. By centralizing AI-related policies, risks, and tasks, it ensures continuous monitoring and accountability across teams - all within a single, unified system.

Strong AI governance in healthcare strengthens both patient trust and data security. Transparent risk management and rigorous vendor oversight signal a clear commitment to protecting sensitive health information. Moreover, this approach equips organizations to adapt to evolving regulatory landscapes, ensuring long-term compliance and operational stability.

Moving forward, healthcare organizations must remain vigilant and leverage the right tools. By embedding comprehensive transparency criteria into their vendor selection processes - and utilizing platforms tailored to healthcare's specific needs - they can maximize the benefits of AI while minimizing its associated risks.

FAQs

What should healthcare organizations consider when selecting an AI vendor?

When selecting an AI vendor for healthcare, it's important to focus on a few critical factors that ensure the system meets your organization's specific needs. Start by assessing accuracy and automation capabilities - these are crucial for achieving both clinical and operational objectives. You'll also want to check for compatibility with your current systems to make integration smoother and more efficient. Don't overlook scalability, as it ensures the system can handle future growth and increasing demands. Equally important is how well the solution can adapt to your unique workflows.

Another key consideration is the vendor's support services. Strong implementation assistance and reliable ongoing maintenance are essential for ensuring the system's long-term success. By focusing on these priorities, healthcare organizations can reduce risks, protect sensitive data, and strengthen patient confidence in AI-powered tools.

What steps can healthcare organizations take to ensure transparency in AI algorithms?

Healthcare organizations can strengthen trust in AI algorithms by being transparent about their development and usage. This starts with providing clear documentation that explains how these models are created, trained, and validated. Key details like the data sources, performance metrics, and the logic behind the AI's decisions should be outlined in a straightforward manner.

Equally important is ensuring that AI systems produce outputs that are easy for both clinicians and patients to understand. When the reasoning behind an AI's recommendations is clear, it builds confidence and helps everyone involved feel more comfortable with the technology.

Additionally, adhering to regulatory standards and following industry best practices is crucial. This not only ensures compliance but also minimizes risks tied to opaque AI models, protecting patient data and maintaining the trust of all stakeholders.

What are the risks of using non-transparent AI in healthcare?

Non-transparent AI in healthcare poses serious risks that can jeopardize patient safety and diminish trust in healthcare organizations. Key concerns include misuse of AI systems, biased or inaccurate results, and model degradation over time, which may lead to incorrect diagnoses or inappropriate treatment plans.

Another major challenge is insufficient monitoring and oversight, which can allow mistakes to go unnoticed. Additionally, the lack of transparency in many AI systems makes it hard for healthcare professionals to fully understand or verify the reasoning behind AI-driven decisions. These issues not only undermine patient trust but also expose organizations to increased liability and potential regulatory violations.

To address these challenges, healthcare organizations need to focus on transparency and accountability when selecting AI vendors. Systems should be designed to be reliable, easy to interpret, and tailored to meet the specific demands of the healthcare industry.