The AI Cyber Risk Time Bomb: Why Your Security Team Isn't Ready

Post Summary

AI expands attack surfaces, introduces new vulnerabilities, and powers faster, more precise cyberattacks.

Data poisoning, model drift, API exploitation, prompt injection, and attacks on AI‑controlled medical devices.

Skills gaps, outdated frameworks, siloed teams, and limited understanding of AI systems.

Compromised AI outputs that directly impact clinical decisions and patient safety.

By integrating NIST AI RMF, HSCC guidance, AI‑specific controls, and continuous monitoring.

It automates AI risk assessments, monitors third‑party vulnerabilities, and centralizes AI governance.

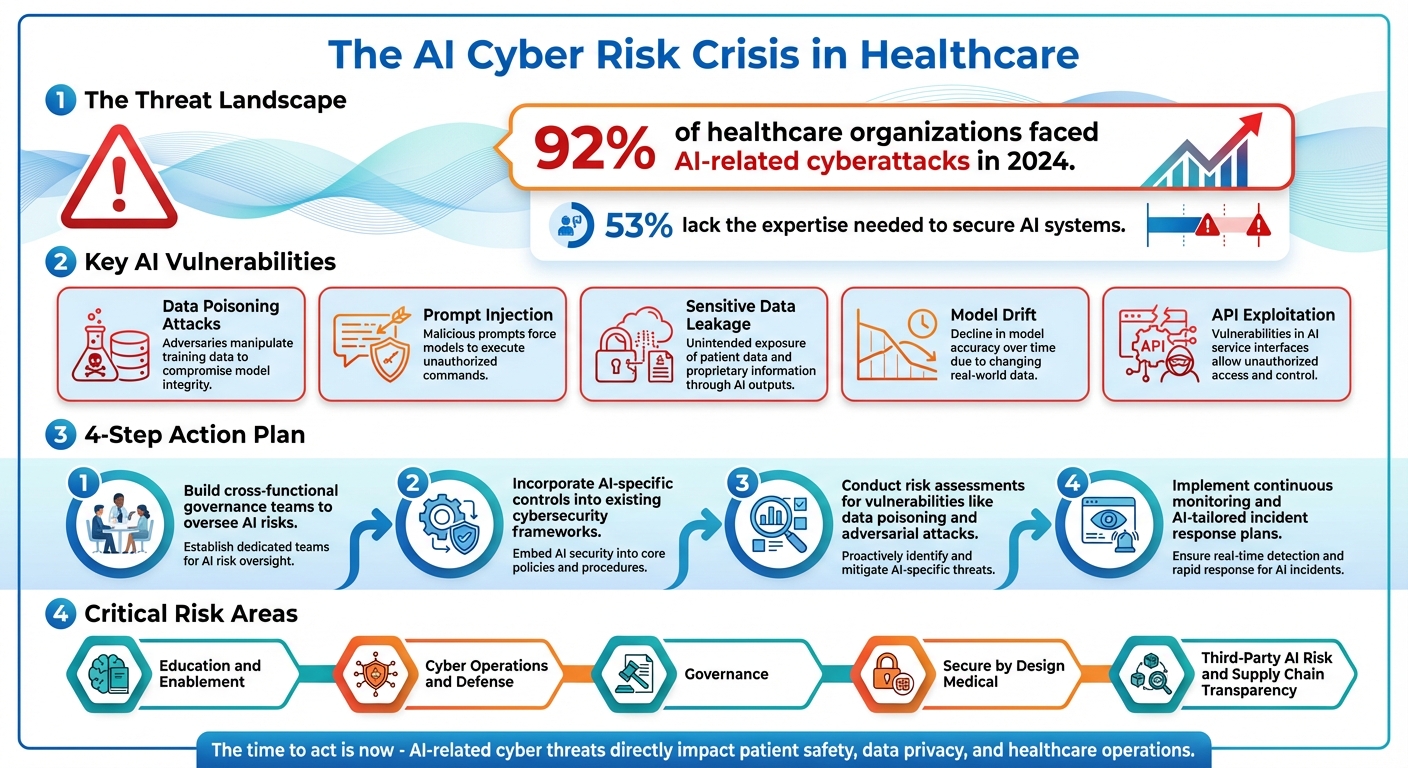

Artificial intelligence (AI) is transforming healthcare, but it’s also creating serious cybersecurity risks. In 2024, 92% of healthcare organizations faced AI-related cyberattacks, and the trend continues to grow. These threats - like adversarial attacks and data poisoning - can compromise patient safety, disrupt workflows, and expose sensitive data. Despite these dangers, most healthcare security teams lack the tools and expertise to manage AI-specific vulnerabilities.

Key takeaways:

To address these challenges, healthcare organizations must:

The risks are clear, and the time to act is now. Without proactive measures, AI-related cyber threats could severely impact patient safety, data privacy, and healthcare operations.

AI Cybersecurity Crisis in Healthcare: Key Statistics and Action Steps

The AI Attack Surface in Healthcare

How Healthcare Uses AI

AI is now woven into the fabric of healthcare operations, playing a pivotal role in both patient care and administrative functions. Algorithms are employed to process massive amounts of patient data, enabling faster diagnoses, analyzing medical imaging, and even suggesting treatment plans. Tools like clinical decision support systems use machine learning to flag potential drug interactions, predict when a patient’s condition might worsen, and assist doctors in navigating complex cases. On the patient side, AI powers tools such as chatbots that handle symptom triage, schedule appointments, and answer basic questions.

Behind the scenes, AI is just as active. It manages backend operations like claims processing, optimizing supply chains, and streamlining staffing. Many healthcare providers also lean on third-party AI services for specialized tasks, such as analyzing radiology images or running predictive analytics. AI isn’t just a standalone tool - it’s a critical part of a larger transformation that influences clinical workflows, patient interactions, and the handling of sensitive data. However, this deep integration comes with a downside: AI systems now interact with protected health information (PHI), essential medical devices, and even life-or-death decisions, creating a broader attack surface and introducing new security vulnerabilities.

AI-Specific Vulnerabilities

The rise of AI in healthcare has brought unique risks that traditional security measures often can’t address. For instance, data poisoning attacks occur when bad actors tamper with training datasets, leading AI models to learn incorrect or harmful patterns. This could result in faulty clinical recommendations. Another issue is prompt injection, where attackers manipulate AI chatbots or language models to either expose sensitive patient data or provide harmful advice.

There’s also the risk of sensitive data leakage, where AI models inadvertently reveal PHI because they’ve memorized parts of their training data. Over time, model drift can occur, where changes in input data patterns degrade an AI system’s accuracy, potentially leading to diagnostic errors without raising any alarms. API exploitation is another concern, as attackers can target the interfaces connecting AI systems to electronic health records or medical devices, opening doors to unauthorized access. Complicating matters further is the "black box" nature of AI algorithms, which makes it difficult to detect or explain when a model has been compromised[3].

Why Healthcare Organizations Are at Risk

As AI becomes embedded in nearly every aspect of healthcare - from clinical decision-making to logistics - the risks grow exponentially. The healthcare sector’s reliance on highly interconnected systems only amplifies these vulnerabilities. AI models often integrate with electronic health records, medical devices, lab systems, and billing platforms. A breach in just one AI component could disrupt multiple critical functions at once. Adding to the stakes, PHI is highly valuable on the black market, making healthcare an attractive target for cybercriminals.

The reliance on third-party vendors further complicates security. Many healthcare providers depend on external AI services for specialized tasks, creating potential weak points at every integration. These vendors often access sensitive patient data and clinical systems, yet healthcare organizations may lack insight into how these external systems are secured or monitored. When AI systems are responsible for life-critical tasks - like determining medication dosages, interpreting diagnostic images, or predicting patient decline - a successful attack could go beyond data breaches, posing direct threats to patient safety.

Why Current Security Teams Are Unprepared

Skills and Organizational Gaps

Healthcare security teams often lack the necessary understanding of AI, struggling with the basics like evaluating machine learning models, datasets, or algorithmic outputs. Without a shared vocabulary or foundational training, they find it hard to pinpoint what actually needs protection. On top of that, responsibilities for AI oversight in healthcare organizations are often scattered. Clinical, IT, and security teams tend to work in isolation, with little collaboration. Security teams usually step in only when a problem has already surfaced. This fragmented approach and lack of AI governance make it harder to classify threats and respond effectively. Combined with outdated frameworks that don’t address the unique challenges AI presents, these gaps leave organizations vulnerable.

Framework and Process Limitations

Most existing security frameworks aren’t built to handle the complexities of AI. They fall short when it comes to AI-specific evaluations, continuous monitoring, or tailored incident response plans [1][3][2]. Traditional security models focus on protecting networks and endpoints, but they don’t account for newer threats like data leakage, model inversion, or prompt injection [6]. AI systems, often described as "black boxes", complicate traditional auditing and data tracking methods. This lack of transparency makes it tough to identify when a model has been compromised or is behaving unpredictably. Meanwhile, manual assessment methods that worked for older systems simply can’t keep pace with the speed and complexity of AI deployments.

Impact on Patient Safety and Operations

These operational shortcomings have far-reaching consequences, especially in healthcare. Poorly managed AI risks don’t just lead to data breaches - they can directly harm patients. For instance, AI systems generating incorrect outputs could result in flawed therapeutic decisions, putting lives at risk [3]. Biases or malfunctions in automated systems can introduce errors that traditional clinical risk management methods are unequipped to catch [3]. Compromised AI models can also act as gateways for cyberattacks, spreading through interconnected systems like electronic health records or medical devices. Devices such as pacemakers, insulin pumps, or imaging equipment could be manipulated, potentially altering their operations or disrupting care entirely.

Beyond immediate safety concerns, AI-related incidents can cause regulatory headaches. Failure to demonstrate proper AI governance and risk management could lead to violations of HIPAA or FDA requirements, even though these regulations offer limited guidance on AI security. All of this highlights why today’s security teams are unprepared to tackle the challenges posed by AI.

Adapting Risk Frameworks for AI in Healthcare

Extending Cybersecurity Frameworks for AI

Healthcare organizations face a growing need to address AI-specific risks within their cybersecurity strategies. Traditional frameworks, while robust in many areas, often lack provisions tailored to the complexities of AI. To bridge this gap, organizations should incorporate AI-specific controls into their existing security measures. The NIST AI Risk Management Framework (AI RMF) offers a strong starting point, outlining principles to guide the design, development, and use of trustworthy AI systems [7][8]. By integrating these principles, healthcare providers can better manage AI security through enhanced assessment protocols, continuous monitoring, and dedicated incident response strategies - areas where existing regulations like HIPAA and FDA guidance currently fall short [1].

The Health Sector Coordinating Council (HSCC) has identified five critical workstreams to manage AI-related cybersecurity risks: Education and Enablement, Cyber Operations and Defense, Governance, Secure by Design Medical, and Third-Party AI Risk and Supply Chain Transparency [4]. These workstreams serve as a practical guide for incorporating AI considerations into the NIST Cybersecurity Framework 2.0, allowing security teams to build on their existing processes rather than starting from scratch. By layering AI-specific controls onto current frameworks, organizations can more effectively address the unique challenges posed by AI technologies.

AI Risk Control Taxonomy for Healthcare

A well-structured approach to managing AI risks in healthcare involves three essential layers:

By addressing these layers, organizations can create a comprehensive defense against AI-specific risks.

Where Current Frameworks Fall Short

Despite the progress made, current cybersecurity frameworks still struggle to address the unique challenges of AI. Most were designed for static systems and do not account for AI’s dynamic nature, such as continuous learning and evolution. This creates significant gaps, including:

Without clear guidance on what constitutes "secure AI", security teams are left navigating uncharted territory. Addressing these shortcomings is essential to building frameworks that can keep pace with AI's rapid advancements and ensure its safe integration into healthcare environments.

These steps set the stage for tackling AI-specific threats head-on, equipping organizations with the tools they need to secure their systems effectively.

sbb-itb-535baee

Preparing Security Teams for AI Cyber Risk

Building AI Governance Structures

Addressing AI-related cyber risks starts with creating a solid governance framework that covers every stage of the AI lifecycle. Back in November 2025, the Health Sector Coordinating Council (HSCC) offered a sneak peek into its 2026 AI cybersecurity guidance. This guidance reflected the collective efforts of 115 healthcare organizations working together to design practical frameworks. As part of this initiative, the HSCC's Governance subgroup has been developing an AI Governance Maturity Model to help organizations evaluate their current capabilities and identify areas for improvement [4].

To ensure AI decisions prioritize patient safety, comply with regulations, and secure technical operations, organizations should establish a cross-functional governance committee. This committee should include representatives from security, clinical, IT, legal, and compliance teams. Their duties involve maintaining a comprehensive inventory of all AI systems, categorizing tools by their autonomy levels, and aligning operations with frameworks like the NIST AI Risk Management Framework. Senior leadership plays a critical role by setting clear policies and establishing reporting structures for AI-related risks [11].

Once a governance framework is in place, the next step is turning policy into actionable risk assessments.

Operationalizing AI Risk Assessments

Traditional risk assessments often overlook vulnerabilities specific to AI, such as data poisoning, model drift, or adversarial attacks. To address these gaps, organizations must update their evaluation processes to test for both clinical accuracy and resilience [1].

Tools like Censinet RiskOps™ and Censinet AI™ can simplify this process by automating risk assessments. These platforms summarize critical evidence, document integration details, and flag potential risks from third-party vendors. Their AI risk dashboard enables the governance committee to receive real-time alerts, ensuring that critical issues are addressed promptly and with human oversight. This streamlined approach ensures that risks are managed effectively and in a timely manner.

After assessing risks, continuous monitoring becomes essential to detect and respond to anomalies quickly.

Continuous Monitoring and Incident Response for AI

Because AI systems evolve through continuous learning, monitoring efforts must focus on identifying changes in model behavior, unexpected outputs, and signs of adversarial manipulation [4].

"The

– Health Sector Coordinating Council Cybersecurity Working Group

Organizations should create AI-specific incident response playbooks tailored to the unique challenges of machine learning systems. These playbooks should outline procedures for securing model backups, rolling back compromised systems, and ensuring patient safety during incidents [4]. Training programs need to cover AI basics, risk identification, and mitigation strategies. Clear usage policies should limit AI tool access to trained personnel and include measures for handling sensitive information securely [9][10].

Regularly conducting simulated attacks can help teams stay sharp and adapt to evolving AI-based threats. Encouraging a "no-blame culture" during incident reviews fosters a focus on systems-based analysis, ultimately strengthening the organization’s overall resilience [3][10].

Conclusion

The year 2025 brings with it a cybersecurity crisis in healthcare, threatening patient safety, data privacy, and the functionality of critical systems [1][12]. The ECRI Institute has flagged AI as the top health technology hazard for 2025, highlighting the urgency of addressing these challenges.

To tackle these risks, security teams must rethink their strategies. The shift from reactive to proactive measures is essential, requiring robust governance frameworks, the expansion of Clinical Risk Management to address AI-specific vulnerabilities, and the integration of secure-by-design principles into every stage of AI deployment [4]. Traditional cybersecurity methods simply aren’t equipped to handle the fast-evolving threats posed by AI.

A strong, multi-layered defense is key. This includes forming cross-functional governance committees, updating risk assessments to account for AI-driven challenges, and creating incident response playbooks tailored specifically for AI scenarios. Additionally, maintaining strict oversight of third-party vendors is crucial to safeguarding systems.

Equally important is fostering a culture of security awareness. Operationalizing AI risk assessments and establishing a no-blame incident review process can significantly improve preparedness. The clock is ticking, and the time to act is now - before the risks posed by AI become unmanageable.

FAQs

What steps can healthcare organizations take to strengthen their expertise in securing AI systems?

Healthcare organizations can strengthen their ability to protect AI systems by investing in specialized training programs. These programs should focus on both the basics of AI and key cybersecurity practices. This dual approach ensures that teams are well-equipped to handle the unique challenges that come with securing AI in healthcare.

Another critical step is creating interdisciplinary teams. By bringing together IT security specialists, clinical staff, and data scientists, organizations can address AI-related risks from multiple perspectives, ensuring a more comprehensive strategy.

Organizations should also implement governance frameworks specifically designed for AI systems, such as those outlined by NIST. These models can help identify and address vulnerabilities early. By establishing clear policies and tailored frameworks, healthcare providers can build a strong, scalable system to manage potential threats effectively.

What are the biggest AI-related security risks in healthcare?

AI's role in healthcare brings with it some distinct security challenges. Among the most pressing concerns are data breaches and unauthorized access to confidential patient records. There's also the threat of adversarial attacks, where bad actors manipulate AI models to deliver incorrect or misleading results. Another issue is prompt injection exploits, where harmful inputs can disrupt the system's functionality. Beyond these, attackers might tamper with AI-guided clinical decisions or interfere with the operation of medical devices, creating serious risks for patient safety.

These issues underscore the importance of developing targeted strategies to counter AI-specific threats, as standard cybersecurity approaches often struggle to address these newer, more complex vulnerabilities.

Why aren't traditional cybersecurity measures enough to handle AI-related threats in healthcare?

Traditional cybersecurity approaches often fall short when it comes to handling the unique risks posed by AI. These risks include issues like data poisoning, where attackers deliberately tamper with training data to compromise AI systems, and adversarial attacks that exploit vulnerabilities in AI models to manipulate their outputs. On top of that, problems such as model manipulation and system drift add another layer of complexity, requiring targeted strategies for detection, prevention, and oversight.

The healthcare sector is especially at risk. With its heavy reliance on sensitive data and the growing adoption of AI technologies, it faces heightened exposure to these threats. Tackling these challenges calls for a forward-thinking approach, equipped with customized tools and frameworks that go beyond the capabilities of standard cybersecurity measures.

Related Blog Posts

- AI Cyber Risk: When Your Smart Defense Becomes the Attack Vector

- The Healthcare Cyber Storm: How AI Creates New Attack Vectors in Medicine

- The Cyber Diagnosis: How Hackers Target Medical AI Systems

- The Process Optimization Paradox: When AI Efficiency Creates New Risks

{"@context":"https://schema.org","@type":"FAQPage","mainEntity":[{"@type":"Question","name":"What steps can healthcare organizations take to strengthen their expertise in securing AI systems?","acceptedAnswer":{"@type":"Answer","text":"<p>Healthcare organizations can strengthen their ability to protect AI systems by investing in specialized training programs. These programs should focus on both the <strong>basics of AI</strong> and <strong>key cybersecurity practices</strong>. This dual approach ensures that teams are well-equipped to handle the unique challenges that come with securing AI in healthcare.</p> <p>Another critical step is creating interdisciplinary teams. By bringing together IT security specialists, clinical staff, and data scientists, organizations can address AI-related risks from multiple perspectives, ensuring a more comprehensive strategy.</p> <p>Organizations should also implement governance frameworks specifically designed for AI systems, such as those outlined by NIST. These models can help identify and address vulnerabilities early. By establishing clear policies and tailored frameworks, healthcare providers can build a strong, scalable system to manage potential threats effectively.</p>"}},{"@type":"Question","name":"What are the biggest AI-related security risks in healthcare?","acceptedAnswer":{"@type":"Answer","text":"<p>AI's role in healthcare brings with it some distinct security challenges. Among the most pressing concerns are <strong>data breaches</strong> and <strong>unauthorized access</strong> to confidential patient records. There's also the threat of <strong>adversarial attacks</strong>, where bad actors manipulate AI models to deliver incorrect or misleading results. Another issue is <strong>prompt injection exploits</strong>, where harmful inputs can disrupt the system's functionality. Beyond these, attackers might tamper with AI-guided clinical decisions or interfere with the operation of medical devices, creating serious risks for patient safety.</p> <p>These issues underscore the importance of developing targeted strategies to counter AI-specific threats, as standard cybersecurity approaches often struggle to address these newer, more complex vulnerabilities.</p>"}},{"@type":"Question","name":"Why aren't traditional cybersecurity measures enough to handle AI-related threats in healthcare?","acceptedAnswer":{"@type":"Answer","text":"<p>Traditional cybersecurity approaches often fall short when it comes to handling the <strong>unique risks posed by AI</strong>. These risks include issues like <em>data poisoning</em>, where attackers deliberately tamper with training data to compromise AI systems, and <em>adversarial attacks</em> that exploit vulnerabilities in AI models to manipulate their outputs. On top of that, problems such as model manipulation and system drift add another layer of complexity, requiring <strong>targeted strategies</strong> for detection, prevention, and oversight.</p> <p>The healthcare sector is especially at risk. With its heavy reliance on sensitive data and the growing adoption of AI technologies, it faces heightened exposure to these threats. Tackling these challenges calls for a forward-thinking approach, equipped with customized tools and frameworks that go beyond the capabilities of standard cybersecurity measures.</p>"}}]}

Key Points:

How does AI expand the attack surface in healthcare?

- Deep integration with EHRs, IoMT devices, and cloud platforms

- AI models interacting with PHI and patient‑care decisions

- New entry points through APIs, data pipelines, and web‑connected devices

- Lateral spread potential, where one compromised model affects multiple systems

- Dependence on third‑party AI vendors, increasing supply‑chain exposure

What AI‑specific vulnerabilities are most dangerous?

- Data poisoning, corrupting training datasets

- Prompt injection, manipulating chatbot‑style AI

- Model inversion and leakage, exposing PHI

- Model drift, degrading accuracy over time

- API exploitation, granting unauthorized access to integrated systems

Why are current healthcare security teams unprepared?

- Lack of AI literacy across IT and security teams

- Fragmented oversight, with clinical, IT, and cybersecurity teams working in isolation

- Outdated frameworks missing AI controls

- Manual processes that cannot match AI‑driven attack speed

- Little visibility into AI model logic and data flows

How does AI threaten patient safety and clinical operations?

- Incorrect AI outputs leading to misdiagnosis or unsafe treatments

- Manipulated models altering dosing, lab interpretation, or patient triage

- Compromised medical devices, such as infusion pumps or imaging systems

- Workflow disruption across EHRs, labs, and scheduling platforms

- Regulatory exposure from mishandled PHI or unsafe AI behavior

How should healthcare adapt existing cybersecurity frameworks for AI?

- Integrate NIST AI RMF into security and governance workflows

- Adopt HSCC workstreams (education, governance, secure AI design, supply‑chain oversight)

- Build AI‑specific incident response playbooks

- Monitor AI for drift, bias, and adversarial manipulation

- Add AI‑focused controls to vendor risk assessments and procurement workflows

How does Censinet RiskOps™ support healthcare AI risk management?

- Automated AI risk assessments with healthcare‑specific controls

- Centralized AI risk registers across vendors, devices, and applications

- Continuous monitoring for new vulnerabilities or supply‑chain changes

- Configurable workflows that route AI findings to governance committees

- Real‑time dashboards integrating cybersecurity, compliance, and clinical risk data