AI-Enhanced Phishing: The Evolution of Social Engineering Attacks

Post Summary

AI-enhanced phishing is reshaping cyber threats by using generative AI to create highly personalized, convincing attacks. These methods exploit public data, mimic writing styles, and bypass traditional security tools. In healthcare, where sensitive patient data is abundant, these attacks pose critical risks, including compromised systems, delayed treatments, and regulatory penalties.

Key takeaways:

- Healthcare as a target: Sensitive patient data and decentralized systems make healthcare vulnerable.

- Advanced tactics: AI enables tailored phishing emails, deepfake audio/video, and fake websites.

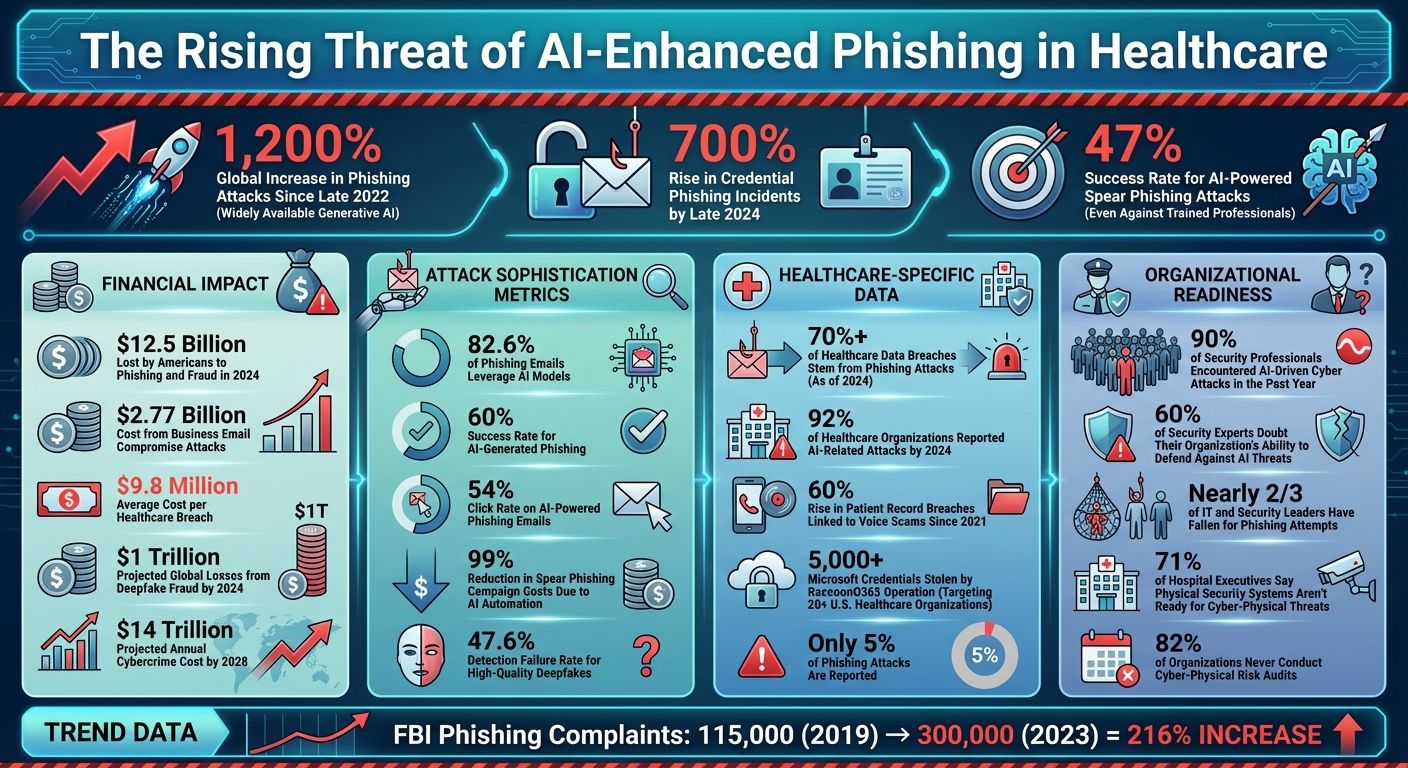

- Impact: Credential phishing incidents rose 700% by late 2024; global phishing attacks increased 1,200% since 2022.

- Recent example: The RaccoonO365 operation targeted U.S. healthcare, stealing credentials and enabling ransomware attacks.

To counter these threats, organizations must strengthen email security, implement multi-factor authentication, and train staff to recognize AI-driven phishing attempts. Combining advanced tools with regular training is essential to safeguard against these evolving attacks.

AI-Enhanced Phishing Attack Statistics in Healthcare 2022-2024

How Social Engineering Attacks Have Changed in Healthcare

From Basic Phishing to AI-Powered Attacks

Phishing attacks used to be fairly easy to spot. They often had glaring spelling errors, generic greetings like "Dear User", and clunky designs. These tactics relied on sending out massive amounts of emails, hoping someone would slip up. For trained staff, identifying and reporting these attempts was straightforward.

But AI has completely changed the game. Attackers can now create highly convincing emails, fake login pages, and messages almost instantly, which makes phishing campaigns cheaper and faster to launch. AI essentially acts as a digital assistant, helping attackers build websites, generate malicious code, and craft phishing emails with alarming efficiency [2][6].

"We've reached the point where I am concerned. With very few prompts, an AI model can write a phishing message meant just for me. That's terrifying" [6]

- Stephanie Carruthers, Chief People Hacker for IBM X-Force Red

This evolution has paved the way for even more targeted and personalized attacks, powered by AI's ability to mimic human communication.

How AI Increases Attack Sophistication

AI doesn't just make phishing faster - it makes it smarter. Machine learning algorithms can sift through enormous amounts of data, like social media profiles, corporate websites, and public records, to craft hyper-personalized messages. These messages can even adjust in real time based on how a target responds [5][8]. The result? Emails and messages that are nearly impossible to distinguish from legitimate ones, mimicking specific tones and writing styles.

The numbers are staggering. According to McKinsey & Company, there has been a 1,200% global increase in phishing attacks since generative AI became widely available in late 2022 [8]. Even more troubling, AI-powered spear phishing attacks have a 47% success rate, even against trained security professionals [8]. Nearly two-thirds of IT and security leaders have admitted to falling for phishing attempts themselves [8].

"The AI models are really helping attackers clean up their messages. Making them more succinct, making them more urgent - making them into something that more people fall for" [6]

- Stephanie Carruthers, Chief People Hacker for IBM X-Force Red

AI-driven automation has also slashed the costs of creating spear phishing campaigns by up to 99% [7]. This means that even individuals with minimal resources can now launch highly sophisticated attacks. For healthcare organizations, this growing threat is especially concerning.

Recent Attacks on U.S. Healthcare Organizations

These threats are far from hypothetical. In September 2025, Microsoft disrupted a phishing operation called RaccoonO365, which had targeted at least 20 healthcare organizations in the U.S. [4][8]. This operation used phishing kits designed to look like official Microsoft communications to steal credentials. Since July 2024, these kits have stolen at least 5,000 Microsoft credentials across 94 countries. Cybercriminals then used these stolen credentials to carry out ransomware attacks on hospitals, putting patient safety at risk. Microsoft ultimately shut down 338 websites linked to this group through a court order [4].

"Credentials stolen through RaccoonO365 enabled ransomware attacks against hospitals, posing a direct threat to patient and community safety. This operation also highlights a disturbing trend - cybercriminals' increased use of 'initial access brokers' to steal credentials and AI to accelerate the effectiveness, sophistication and impact of cyberattacks." [4]

- John Riggi, AHA national advisor for cybersecurity and risk

In one case, attackers used AI-generated code to hide a phishing payload inside an SVG file, disguising it as a file-sharing notification [4][8]. The broader impact is equally alarming. Americans lost $12.5 billion to phishing and other fraud in 2024 [6], while Business Email Compromise attacks alone cost companies a combined $2.77 billion [8]. Phishing complaints to the FBI’s Internet Crime Complaint Center climbed from about 115,000 in 2019 to 300,000 in 2023, an increase of roughly 161% [7].
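The SVG case above points to one attachment check that defenders can automate: looking for script-like content inside an image file before it reaches an inbox. The following is a minimal Python sketch, not a vetted scanner. The function name `find_active_content` and the list of tags are illustrative assumptions, and the standard-library parser used here is not hardened against maliciously constructed XML, so a production tool would likely swap in a hardened parser such as `defusedxml`.

```python
import xml.etree.ElementTree as ET

# Element names that can run or embed active content in an SVG.
# Illustrative assumption only; this list is not exhaustive.
ACTIVE_TAGS = {"script", "foreignobject", "iframe", "embed", "object"}


def local_name(tag: str) -> str:
    """Strip an XML namespace such as '{http://www.w3.org/2000/svg}script'."""
    return tag.rsplit("}", 1)[-1].lower()


def find_active_content(svg_text: str) -> list[str]:
    """Return human-readable findings for script-like content in an SVG."""
    try:
        root = ET.fromstring(svg_text)
    except ET.ParseError:
        # Treat anything that does not parse as suspicious rather than safe.
        return ["unparseable SVG"]

    findings = []
    for element in root.iter():
        name = local_name(element.tag)
        if name in ACTIVE_TAGS:
            findings.append(f"active element <{name}>")
        for attr, value in element.attrib.items():
            attr_name = local_name(attr)
            if attr_name.startswith("on"):
                # Event handlers such as onload or onclick can execute script.
                findings.append(f"event handler {attr_name} on <{name}>")
            elif attr_name == "href" and value.strip().lower().startswith("javascript:"):
                findings.append(f"javascript: link on <{name}>")
    return findings
```

An empty result means only that none of these specific patterns were found. It does not show that the file is safe, so a check like this belongs alongside sandboxing rather than in place of it.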

Common Techniques in AI-Enhanced Phishing

AI-Generated Spear-Phishing Emails

Cybercriminals are now leveraging generative AI to craft phishing emails that are nearly indistinguishable from legitimate messages. Unlike the clumsy, error-ridden phishing attempts of the past, these emails are polished, grammatically correct, and tailored to specific recipients. They often include precise details - like internal job titles, medical terminology, or references to ongoing projects - which makes them blend effortlessly into the daily flow of communication in healthcare settings. The challenge of verifying email authenticity is further compounded by decentralized workforces and the involvement of numerous third-party vendors.

"AI-powered scams exploit machine learning to generate thousands of personalized phishing emails within minutes, each tailored to the recipient's behavior and vulnerabilities. Unlike manual scams, these bypass traditional detection by mimicking legitimate communications with alarming accuracy."

But phishing emails are just one piece of the puzzle. Attackers are increasingly turning to other advanced methods to boost their schemes.

Deepfake Audio and Video

Voice cloning has emerged as a serious concern for healthcare organizations. With as little as 60 seconds of recorded audio, AI can replicate someone’s voice, enabling attackers to convincingly impersonate doctors, administrators, or IT personnel during phone calls. Under the pressure of urgent requests, staff are often more likely to comply - studies show that clinicians are three times more likely to respond to video requests than to text-based ones [3]. This vulnerability has contributed to a 60% rise in patient record breaches linked to voice scams since 2021 [3].

The scale of this threat is staggering. High-quality deepfakes fool people nearly half the time, with a detection failure rate of 47.6% [3]. The financial toll is equally daunting: global losses from deepfake fraud were projected to hit $1 trillion in 2024 [3]. While healthcare is a prime target, the ripple effects of these attacks are being felt across industries worldwide.

Voice and video deepfakes undermine trust in direct communication, but attackers are also exploiting digital platforms to deceive users in other ways.

Fake Websites and Chatbots

Generative AI has made it easier than ever to create convincing fake websites. These fraudulent pages often mimic authentic healthcare portals, complete with accurate staff names, logos, and organizational language. Their goal? To steal login credentials that can grant access to sensitive patient records, financial information, and critical clinical systems.
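One partial countermeasure to lookalike portals is to compare the domains in incoming links against the domains the organization actually uses, flagging near misses. The sketch below is a simplified illustration in Python using edit distance. The `TRUSTED_DOMAINS` entries, the function names, and the distance threshold are all hypothetical, and real deployments would also need to handle homoglyphs, subdomain tricks, and newly registered domains.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a rolling row."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete a character from a
                current[j - 1] + 1,      # insert a character into a
                previous[j - 1] + cost,  # substitute (or keep) a character
            ))
        previous = current
    return previous[-1]


# Hypothetical allow-list of domains the organization really uses.
TRUSTED_DOMAINS = {"examplehealth.org", "portal.examplehealth.org"}


def is_lookalike(domain: str, max_distance: int = 2) -> bool:
    """True when a domain is close to, but not exactly, a trusted domain."""
    domain = domain.lower().rstrip(".")
    if domain in TRUSTED_DOMAINS:
        return False
    return any(edit_distance(domain, trusted) <= max_distance
               for trusted in TRUSTED_DOMAINS)
```

With this allow-list, `is_lookalike("examp1ehealth.org")` is flagged because a single character was swapped, while the genuine domain and unrelated domains are not.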

AI-powered chatbots, widely used in healthcare for tasks like patient communication and clinical decision support, are also becoming a target. Through prompt injection attacks, malicious actors can manipulate these chatbots into providing unsafe medical advice, exposing confidential patient data, or generating flawed clinical documentation. Even minor tweaks in input can lead to serious errors, putting patient safety at risk [9]. Alarmingly, 92% of healthcare organizations reported AI-related attacks by 2024, highlighting the vulnerabilities in traditional security measures [9].

How to Reduce AI-Enhanced Phishing Risks

The rise of AI-driven cyber attacks is a growing concern, with nearly 90% of security professionals encountering such incidents in the past year. Even more troubling, 60% of these experts doubt their organization's ability to fend off these threats. And the stakes are high - cybercrime is projected to cost the global economy a staggering $14 trillion annually by 2028 [13]. To tackle this, organizations need a layered defense strategy that starts with understanding risks, strengthening email security, and ensuring employees are well-trained.

Conduct Threat Modeling and Risk Assessments

Start by identifying individuals within your organization who are most at risk - think finance teams, HR personnel, and executives. These roles often have access to sensitive data or the authority to approve financial transactions [13][8]. Then, map out the critical assets and workflows that, if compromised, could jeopardize patient safety or regulatory compliance.

It's also essential to think like an attacker. Audit the public information that AI tools might exploit to craft convincing phishing messages or deepfakes [13][6][8]. Carruthers, who is also IBM's Global Lead of Cyber Range, offers this advice:

"It's really important for both individuals and organizations to be cognizant about what you put online" [6].

Setting baselines for normal activity and behavior is another crucial step. These baselines allow AI-powered anomaly detection systems to identify unusual patterns that could signal an attack [13][8]. Tools like Censinet RiskOps™ can help by mapping potential AI-driven phishing threats to your critical assets, offering continuous monitoring and intrusion detection. For high-risk activities - such as wire transfers or access changes - implement strict verification protocols. Ensure these verifications happen through secure, human-only channels, not through links or instructions in suspicious emails [8].
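To make the idea of a behavioral baseline concrete, here is a minimal Python sketch that flags a measurement, such as one user's daily count of outbound emails, when it sits far outside that user's recent history. The function name `is_anomalous` and the three-standard-deviation threshold are assumptions chosen for illustration. Commercial anomaly detection uses far richer models, and nothing here describes how any particular product works.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag a value more than `threshold` standard deviations from the baseline mean."""
    if len(history) < 2:
        return False  # not enough data to form a baseline yet
    baseline_mean = mean(history)
    baseline_std = stdev(history)
    if baseline_std == 0:
        # A perfectly flat history: any change at all is a deviation.
        return observed != baseline_mean
    z_score = abs(observed - baseline_mean) / baseline_std
    return z_score > threshold


# Hypothetical week of daily outbound email counts for one mailbox.
recent_counts = [20, 22, 19, 21, 23, 20, 21]
print(is_anomalous(recent_counts, 22))  # an ordinary day
print(is_anomalous(recent_counts, 95))  # a sudden burst worth a second look
```

A flag from a check like this is a prompt for human review, not proof of compromise. Legitimate spikes happen, which is why the out-of-band verification step described above still matters.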

Deploy Email and Identity Security Controls

Traditional email filters are no match for AI-generated phishing attempts, which are designed to mimic human communication with uncanny accuracy [11][12]. To counter this, healthcare organizations should consider AI-powered email security systems that use natural language processing (NLP) and semantic analysis. These tools can detect manipulative language or unusual urgency, even when it's subtle [11].
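Real email security products rely on trained language models for this kind of analysis. The Python sketch below substitutes a crude keyword heuristic purely to illustrate what "scoring a message for urgency and manipulation" can look like in code. The cue phrases, weights, threshold, and function names are all hypothetical, and polished AI-written phishing may contain none of these phrases.

```python
import re

# Hypothetical cue patterns and weights; a production system would learn
# signals like these from labeled data instead of hard-coding them.
URGENCY_CUES = {
    r"\burgent(ly)?\b": 2.0,
    r"\bimmediately\b": 2.0,
    r"\bwithin \d+ (minutes?|hours?)\b": 1.5,
    r"\baccount (will be )?(suspended|locked|disabled)\b": 2.5,
    r"\bverify your (password|credentials|account)\b": 2.5,
    r"\bwire transfer\b": 1.5,
}


def urgency_score(text: str) -> float:
    """Sum the weights of every cue pattern that appears at least once."""
    lowered = text.lower()
    return sum(weight for pattern, weight in URGENCY_CUES.items()
               if re.search(pattern, lowered))


def needs_review(text: str, threshold: float = 4.0) -> bool:
    """True when the combined urgency cues reach the review threshold."""
    return urgency_score(text) >= threshold
```

A message such as "URGENT: your account will be suspended within 2 hours. Verify your password immediately." trips several cues at once, while a routine meeting reminder scores zero. The design point is that several weak signals are combined into one score rather than any single word triggering a block.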

Advanced systems can also build profiles of normal communication patterns, flagging anomalies like unexpected sender-recipient pairings or logins from unfamiliar locations [11]. By analyzing behavior in real time and adapting over time, these technologies enhance threat detection while minimizing false alarms. Multi-layered scans for malicious links, spoofed domains, and harmful attachments provide an additional safety net.
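As a rough illustration of communication profiling, the Python sketch below counts how often each sender-recipient pair has been seen and treats rarely seen pairs as unusual. The class name `PairProfile`, its methods, the `min_seen` cutoff, and the addresses are assumptions made for this example. Real systems weigh many more signals, such as time of day, device, and location.

```python
from collections import Counter


class PairProfile:
    """Tracks how often each (sender, recipient) pair has been observed."""

    def __init__(self, min_seen: int = 3):
        # Pairs seen fewer than min_seen times count as unusual.
        self.min_seen = min_seen
        self.counts = Counter()

    def observe(self, sender: str, recipient: str) -> None:
        """Record one legitimate message between the two addresses."""
        self.counts[(sender.lower(), recipient.lower())] += 1

    def is_unusual(self, sender: str, recipient: str) -> bool:
        """True when this pairing is absent or rare in the recorded history."""
        return self.counts[(sender.lower(), recipient.lower())] < self.min_seen


# Hypothetical addresses used only for the example.
profile = PairProfile(min_seen=2)
profile.observe("billing@examplehealth.org", "cfo@examplehealth.org")
profile.observe("billing@examplehealth.org", "cfo@examplehealth.org")
print(profile.is_unusual("billing@examplehealth.org", "cfo@examplehealth.org"))
print(profile.is_unusual("unknown@vendor.example", "cfo@examplehealth.org"))
```

An unusual pairing is common in normal business, so on its own it is a weak signal. It becomes more useful when combined with other indicators, such as an urgent payment request.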

On top of that, multi-factor authentication (MFA) should be mandatory across all systems. Keeping software and hardware patched and up to date is equally crucial [1][15][16]. Network detection tools that monitor for suspicious activity around the clock are a must, especially since over 70% of healthcare data breaches as of 2024 stemmed from phishing attacks [11]. Automated responses, like quarantining suspicious emails or sandboxing attachments, add another layer of protection and give security teams actionable alerts [11].

Train Staff to Recognize AI-Driven Phishing

No matter how advanced your technology is, it can't fully replace human vigilance. As noted earlier, AI-powered spear phishing succeeds 47% of the time, even against trained security professionals [8], and AI-generated emails often lack the obvious giveaways, such as grammatical errors, that marked traditional phishing [16]. This makes ongoing training critical.

Simulate real-world scenarios, including deepfake audio, fake websites, and tailored spear-phishing emails, to prepare staff for AI-driven threats [1][15][16]. Training should go beyond a one-size-fits-all approach. Tailor programs to address the specific risks faced by different departments. Stephanie Carruthers highlights the importance of continuous learning:

"If we're giving them one hour of training once a year, is that really enough?" [6].

It's not. Training should be regular and evolve to keep pace with attackers' tactics.

Establish clear policies for the use of AI tools, restricting access to trained personnel and implementing safeguards for handling sensitive information [1][15]. This minimizes the risk of employees unintentionally exposing data through public AI platforms. As emphasized during a HIMSS21 presentation:

"Implementation of preventive, detective and corrective controls aligned with the enterprise risk assessment is the only way any health system can prepare" [14].

Ultimately, educating your workforce remains the most effective defense against social engineering attacks. While technology can help, it can't fully shield against threats that rely on human error [15].

Conclusion: Protecting Healthcare from AI-Driven Phishing

AI-powered phishing is a growing challenge, and the numbers paint a stark picture. By late 2024, credential phishing incidents skyrocketed by over 700% [2], with 82.6% of phishing emails leveraging AI models. These attacks boast a 60% success rate and a 54% click rate [18]. Considering that healthcare breaches now average $9.8 million per incident [3], the risks are immense.

To tackle this, healthcare organizations need to rethink their approach to security. An identity-first model - which continuously verifies users - is essential. This strategy moves away from outdated perimeter defenses and directly counters AI's ability to mimic legitimate communications. Key measures include robust multi-factor authentication, AI-driven threat detection, and strict out-of-band verification for sensitive actions. Tools like Censinet RiskOps™ support this approach by providing continuous monitoring and rapid anomaly detection to address threats swiftly.

However, technology alone isn’t enough. Limor Kessem, IBM Consulting's global lead for cyber crisis management, emphasizes:

"It's a big deal, and it's just getting a lot harder to recognize a phishing attempt from attackers" [17].

Given that only 5% of phishing attacks are reported [19], educating healthcare staff is critical. Training must go beyond one-time sessions, incorporating regular simulations that mirror real AI-generated phishing attempts. This helps employees learn to verify suspicious communications rather than just identify them.

The challenge doesn’t stop at digital threats. Cyber-physical vulnerabilities, like deepfake badges and sensor spoofing, are a growing concern. With 71% of hospital executives acknowledging that their physical security systems aren’t ready for these threats, and 82% of organizations never having conducted a cyber-physical risk audit [10], there’s a clear need for action. Integrated security teams, unified response plans, and red-team exercises simulating AI-driven attacks are vital steps to address these gaps.

As attackers refine their methods, healthcare organizations must stay ahead. By combining advanced tools like Censinet RiskOps™, strong identity verification protocols, and ongoing staff education, they can build a resilient defense. This not only protects patient safety but also ensures regulatory compliance and operational stability in an ever-evolving threat landscape.

FAQs

What steps can healthcare organizations take to protect against AI-driven phishing attacks?

Healthcare organizations can guard against AI-driven phishing attacks by using phishing-resistant multi-factor authentication (MFA) and passkeys to confirm user identities. These methods add an extra layer of security, making it harder for attackers to gain access. Adopting a zero-trust security model is another critical step. This approach continuously checks user behavior and device health, minimizing the chances of unauthorized access.

Regular training for staff, including phishing simulations and awareness programs, plays a key role in preparing employees to identify and handle advanced threats. Beyond training, organizations should enforce strict access controls, keep a close watch for unusual activity, and promote a strong culture of security awareness. These steps help ensure they stay ahead of the ever-changing tactics used in social engineering attacks.

Why are AI-generated phishing emails more difficult to spot than traditional ones?

AI-generated phishing emails are becoming increasingly difficult to identify because they use natural language processing (NLP) to craft messages that mimic human communication. These emails can be customized with publicly accessible information, incorporating personal details to make the messages feel highly specific and convincing to the recipient.

On top of that, AI can imitate an organization’s tone and branding, even producing realistic deepfake content like fake voice recordings or videos. These sophisticated methods make AI-driven phishing attempts nearly impossible to differentiate from genuine communications, boosting their success rate significantly.

Why are deepfake audio and video a serious threat to healthcare security?

Deepfake audio and video present a serious challenge to healthcare security by convincingly imitating the voices and appearances of staff, patients, or trusted officials. This uncanny level of detail makes it easier for attackers to execute social engineering schemes, such as deceiving employees into granting access to sensitive systems or confidential data.

By enabling identity fraud, deepfake technology can sidestep traditional security protocols, increasing the likelihood of data breaches and endangering patient safety. In an industry where trust and precision are paramount, the misuse of deepfakes can lead to devastating consequences for both healthcare organizations and the individuals they serve.