The AI Governance Playbook: Practical Steps for Risk-Aware Organizations

Post Summary

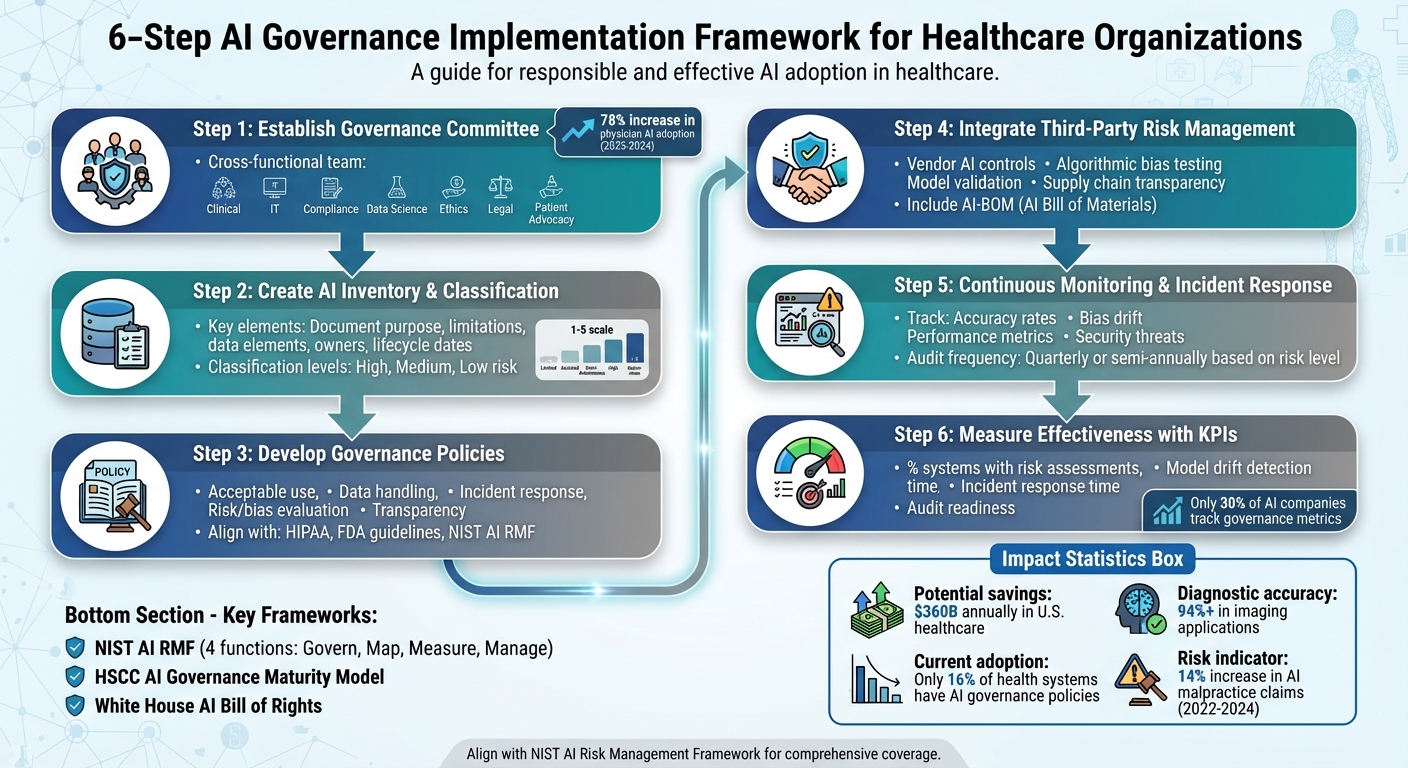

AI governance is critical for healthcare organizations to manage risks tied to artificial intelligence. It supports patient safety, data security, and compliance with regulations like HIPAA, and it addresses AI-specific challenges such as algorithmic bias and system transparency. This guide outlines actionable strategies for implementing AI governance, including:

- Establishing governance committees with clear roles and responsibilities.

- Creating policies for managing AI risks across its lifecycle.

- Maintaining an AI inventory to classify and track systems.

- Integrating AI oversight into third-party risk management.

- Continuous monitoring and incident response planning.

- Measuring governance effectiveness using key performance indicators (KPIs).

AI governance frameworks, like the voluntary NIST AI Risk Management Framework, help organizations align with U.S. regulations while managing risks tied to data quality, bias, and system malfunctions. Following these steps supports safer AI deployment and builds trust in healthcare systems.

Figure: 6-Step AI Governance Implementation Framework for Healthcare Organizations

Core Principles and Frameworks of AI Governance

Building on the discussion of AI risks, the principles of AI governance serve as the foundation for managing these challenges. Effective governance relies on ethical, legal, and societal guidelines to ensure AI systems are used responsibly. For healthcare organizations, these principles act as a guide to navigating regulatory requirements while managing AI systems thoughtfully.

Key Principles of AI Governance

AI governance is shaped by four main principles: transparency, accountability, fairness, and ethics [3]. Transparency involves making the workings of AI systems and their data usage clear and understandable [3]. Accountability emphasizes that organizations must take responsibility for the outcomes and decisions of their AI tools. For instance, if an AI system impacts patient care, a designated individual or group must be accountable for the results. Fairness and ethics focus on minimizing algorithmic bias, ensuring that AI systems do not discriminate or harm vulnerable groups.

The National Institute of Standards and Technology (NIST) AI Risk Management Framework (AI RMF) outlines four core functions to guide risk assessment: Govern, Map, Measure, and Manage [6][7]. The "Map" function helps organizations place AI systems within their specific operational context, considering technical, social, and ethical factors [6][7]. Meanwhile, the "Measure" function assesses the likelihood and potential impact of AI-related risks [6][7]. The Health Sector Coordinating Council (HSCC) also references the NIST AI RMF, offering healthcare organizations a way to align governance practices across the sector [1].

Aligning with U.S. Regulations and Frameworks

AI governance in the U.S. operates within a complex web of federal, state, and sector-specific regulations, including executive orders, agency guidance, and privacy laws [4]. The NIST AI RMF provides a structured approach to address issues like fairness, bias, and security throughout the AI lifecycle [7][8]. Additionally, the White House Office of Science and Technology Policy (OSTP) has introduced the Blueprint for an AI Bill of Rights, which offers guidance on safeguarding individual rights in the AI era [4].

To ensure effective governance, organizations should integrate AI risk assessments into existing cybersecurity frameworks. This involves adapting traditional compliance measures to cover new challenges, such as algorithmic biases, lack of transparency in predictive models, system malfunctions, and the complexities of human-technology interactions [5]. In healthcare, these cybersecurity risks typically fall into three categories: data access risks, device operation risks, and system-level risks [5].

Identifying and Classifying AI Systems

Maintaining an up-to-date inventory of AI systems is crucial for understanding their functions, data dependencies, and security risks [1]. This inventory should classify AI tools based on their level of autonomy and associated risk. The HSCC guidance suggests using a multi-level autonomy scale - such as a five-level scale - to match the required human oversight to the system's risk level [1]. For example, a fully autonomous system making treatment decisions would demand stricter oversight than a scheduling tool used for administrative purposes.
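As a rough illustration of matching oversight to autonomy, the sketch below maps a five-level scale to an oversight tier. The level names, the tier descriptions, and the rule that clinical systems move up one tier are all assumptions made for this example; they are not taken from the HSCC guidance.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Hypothetical five-level scale; the labels are illustrative only."""
    INFORMATIONAL = 1      # surfaces data, makes no recommendation
    ADVISORY = 2           # recommends, a human decides
    HUMAN_APPROVED = 3     # acts only after explicit human sign-off
    HUMAN_MONITORED = 4    # acts on its own, a human can intervene
    FULLY_AUTONOMOUS = 5   # acts without routine human involvement


# Assumed oversight tiers, ordered from lightest to strictest.
OVERSIGHT_TIERS = [
    "annual review",
    "semi-annual audit",
    "quarterly audit",
    "continuous monitoring with human sign-off",
    "continuous monitoring with committee approval",
]


def required_oversight(level: AutonomyLevel, clinical: bool) -> str:
    """Return an oversight tier; clinical systems are bumped one tier up."""
    index = int(level) - 1
    if clinical:
        # Cap at the strictest tier so the index never runs off the list.
        index = min(index + 1, len(OVERSIGHT_TIERS) - 1)
    return OVERSIGHT_TIERS[index]


# An administrative scheduling tool versus an autonomous treatment system.
print(required_oversight(AutonomyLevel.ADVISORY, clinical=False))
print(required_oversight(AutonomyLevel.FULLY_AUTONOMOUS, clinical=True))
```

In practice the tiers would come from your own policy documents; the point is only that the mapping is explicit and reviewable.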

Organizations should also conduct security risk analyses and impact assessments tailored to each AI system's operational environment [6][7]. This includes determining whether an AI system serves as a clinical decision support tool, a medical device, or an administrative application. Each category comes with unique regulatory requirements and risk considerations. By situating AI systems within their specific operational contexts, organizations can better grasp potential impacts and establish appropriate governance measures [6][7]. Proper classification enables organizations to set up effective governance committees and craft policies tailored to their needs.

Building an AI Governance Structure

After identifying and categorizing your AI systems, the next logical step is setting up a governance structure to ensure oversight and accountability. This structure is essential for responsible AI deployment across your organization, providing clarity in decision-making and reinforcing accountability. It builds directly on earlier efforts around AI risk management and inventory tracking.

Forming an AI Governance Committee

Once your AI systems are classified, creating a dedicated AI governance committee becomes a top priority. This committee acts as the central oversight body for all AI-related initiatives. To ensure diverse perspectives, it should be a cross-functional team that includes members from various areas of the organization, such as clinical leadership, IT, compliance, data science, ethics, legal, and patient advocacy [10][11]. By integrating the committee within existing governance frameworks, you can avoid isolating AI governance as a separate entity [13].

The committee's makeup is critical, and so is timing. Physician adoption of health AI nearly doubled between 2023 and 2024, a 78% rise, which underscores the urgency of establishing proper oversight now [12]. The committee should focus on key responsibilities like developing policies, conducting ethical reviews of AI projects, approving deployments, and ensuring smooth communication with stakeholders [10]. While the committee oversees governance, operational responsibilities such as clinical workflows, business strategies, and budgeting remain with individual departments [9].

To make the committee effective, secure early support from the CEO and board [12]. Clearly define expectations around time commitments, staffing, and compensation [9], and review the committee’s membership annually to ensure it stays relevant and effective [9].

Defining Roles and Responsibilities

Clearly defined roles are essential to prevent confusion and ensure accountability throughout the AI lifecycle. Governance processes should explicitly outline responsibilities at every stage, from initial planning to system decommissioning [1][16].

Start by appointing executive sponsors who can champion AI governance and allocate necessary resources. Assign model risk owners to handle ongoing monitoring and risk assessments of deployed AI systems. The Chief Information Security Officer (CISO) should develop expertise in AI vulnerabilities and collaborate closely with data scientists to ensure continuous security validation [16].

"AI's ability to analyze massive volumes of data, identify anomalies and respond instantly doesn't just shorten response times - it protects lives and builds trust in healthcare systems."

– Greg Surla, Senior Vice President and Chief Information Security Officer, FinThrive [15]

Committee members should have role-specific knowledge to contribute effectively. For instance, clinical representatives should understand basic technical concepts like Electronic Health Records (EHRs), while technical experts should familiarize themselves with clinical workflows [9]. It’s also essential to distinguish between the committee’s oversight role and the implementation duties of individual departments [9][10]. Similarly, third-party engagements require clear governance policies and approval pathways to ensure accountability for external systems [1].

Creating AI Governance Policies

Policies are the bridge between governance principles and day-to-day operations. These should cover areas like acceptable AI use, data handling, incident response, risk and bias evaluation, and transparency [18][3]. Align your policies with legal and regulatory requirements, such as HIPAA and FDA guidelines, and refer to established frameworks like the NIST AI Risk Management Framework [1][17][18].

An acceptable use policy should define permitted AI applications, authorized users, and usage conditions. Data handling policies must specify how AI systems can access, process, and store sensitive information, especially when dealing with protected health information under HIPAA.

For AI-specific threats like model poisoning, data corruption, or adversarial attacks, your incident response policy should outline clear procedures. Define who is responsible for responding, how incidents are escalated, and what documentation is required. Risk and bias assessment policies should mandate regular evaluations of AI systems to ensure fairness, accuracy, and the absence of discriminatory impacts.

Develop policies that prioritize safety and ethics while encouraging innovation [9]. Involve clinicians, patients, and operational teams in the policy-making process to ensure the guidelines are transparent, practical, and aligned with real-world needs [14].

Managing AI Risks Across the Lifecycle

Once governance structures and policies are in place, the next step is actively managing risk across each AI system's lifecycle, from deployment to retirement. This process involves keeping a close eye on all AI systems, factoring AI-specific concerns into vendor relationships, and setting up monitoring protocols to catch issues before they escalate.

Creating an AI Inventory and Risk Classification

An AI inventory is the backbone of effective lifecycle risk management, helping track each system and its associated risks. Start by defining what qualifies as an AI system within your organization [19].

For every system, document its purpose, limitations, key data elements, feeder systems or models, owners, developers, validators, and lifecycle dates [19]. Assign dedicated teams, such as Data Science and ML Operations, to maintain the inventory’s accuracy and handle version control.

"The purpose of an inventory is to allow the organization to identify and track the AI/ML systems it has deployed and monitor associated risk(s) (if any). Such an inventory might describe the purpose for which the system is designed, its intended use, and any restrictions on such use. Inventories might also list key data elements for each AI/ML system, including any feeder systems/models, the owners, developers, validators, and key dates associated with the AI/ML lifecycle."

– Artificial Intelligence/Machine Learning Risk & Security Working Group (AIRS) [19]
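The inventory fields described above could be captured in a simple record type. This is a minimal sketch: the class name, field names, and the `missing_fields` helper are hypothetical, chosen to mirror the elements listed in the text rather than any particular tool's schema.

```python
from dataclasses import dataclass, field, fields
from datetime import date
from typing import Optional


@dataclass
class AISystemRecord:
    """One inventory entry; field names are illustrative assumptions."""
    name: str
    purpose: str = ""
    limitations: str = ""
    key_data_elements: list[str] = field(default_factory=list)
    feeder_systems: list[str] = field(default_factory=list)
    owner: str = ""
    developer: str = ""
    validator: str = ""
    deployed_on: Optional[date] = None
    next_review_on: Optional[date] = None


def missing_fields(record: AISystemRecord) -> list[str]:
    """List fields that are still empty so the inventory owner can follow up.

    An empty list or string is treated as "not yet documented".
    """
    return [f.name for f in fields(record) if not getattr(record, f.name)]


record = AISystemRecord(
    name="sepsis-alert",
    purpose="flag patients at risk of sepsis",
    owner="Clinical Informatics",
)
print(missing_fields(record))
```

A completeness check like this is one way to keep the inventory from drifting into a list of names with no usable detail behind them.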

AI Security Posture Management (AI-SPM) tools can simplify this process by creating an AI Bill of Materials (AI-BOM), which provides detailed insights into system components and flags unauthorized or unknown AI deployments [20].

Risk classification is another critical step, where systems are prioritized based on potential impact and organizational risk tolerance. Group AI systems into categories like high, medium, or low risk, considering factors such as data sensitivity, patient safety, and regulatory obligations. Tools like Censinet RiskOps can centralize this inventory, ensuring it stays up-to-date in real time.
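To make the high/medium/low grouping concrete, here is a deliberately simple rule. The three inputs and the way they combine are assumptions for illustration; a real classification would reflect your organization's own risk tolerance.

```python
def classify_risk(handles_phi: bool, affects_patient_safety: bool, regulated: bool) -> str:
    """Return 'high', 'medium', or 'low' using an assumed, simplified rule."""
    if affects_patient_safety:
        return "high"      # any patient-safety impact dominates
    if handles_phi and regulated:
        return "high"      # sensitive data plus a regulatory obligation
    if handles_phi or regulated:
        return "medium"
    return "low"


# A clinical decision tool versus an internal scheduling assistant.
print(classify_risk(handles_phi=True, affects_patient_safety=True, regulated=True))
print(classify_risk(handles_phi=False, affects_patient_safety=False, regulated=False))
```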

Ongoing monitoring is key. Regular audits, data cleansing, and automated data management ensure the inventory remains accurate. For example, Wiz Research uncovered a case where Microsoft AI researchers accidentally exposed 38 TB of sensitive data through a misconfigured storage access token while sharing open-source training data, underscoring the importance of strong data risk management [20].

Once your internal inventory is solid, it’s time to extend these governance practices to external partners.

Integrating AI Governance into Third-Party Risk Management

Internal risk classification principles should also guide how you manage vendors. A vendor with weak AI controls can undermine your entire cybersecurity framework [21]. Traditional Third-Party Risk Management (TPRM) programs need to evolve to address AI-specific challenges like algorithmic bias, data misuse, lack of explainability, and poor model governance [22]. The Health Sector Coordinating Council (HSCC) is currently working on 2026 guidance to address AI cybersecurity risks, including a focus on third-party AI risks and supply chain transparency [1].

When assessing vendors that use AI, go beyond standard security checklists. Ask detailed questions about their AI training data sources, model validation practices, bias testing protocols, and incident response plans for AI-related threats. Include AI-specific governance requirements in contracts and Business Associate Agreements (BAAs) to ensure transparency, performance tracking, and secure data handling.
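One lightweight way to enforce those questions is to check each vendor response for gaps before an assessment is closed. The topic keys and function name below are hypothetical and simply mirror the four areas named above.

```python
# Topics every AI vendor questionnaire should cover (keys are illustrative).
REQUIRED_TOPICS = (
    "training_data_sources",
    "model_validation",
    "bias_testing",
    "ai_incident_response",
)


def unanswered_topics(responses: dict[str, str]) -> list[str]:
    """Return required topics the vendor skipped or left blank."""
    return [t for t in REQUIRED_TOPICS if not responses.get(t, "").strip()]


vendor_response = {
    "training_data_sources": "Licensed, de-identified clinical notes",
    "bias_testing": "   ",  # whitespace-only answers count as unanswered
}
print(unanswered_topics(vendor_response))
```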

Demand that vendors disclose all AI components in their solutions, similar to requesting a software bill of materials. This level of transparency helps uncover fourth-party risks. For instance, Wiz Research identified a vulnerability in Hugging Face’s architecture that allowed attackers to manipulate hosted models, potentially compromising integrity and enabling remote code execution [20].

Platforms like Censinet RiskOps streamline this process, integrating AI-specific risk assessments into existing TPRM workflows. With features like Censinet AITM™, you can automate vendor questionnaires, summarize evidence, document integration details, and flag fourth-party risks. This approach can cut assessment times from weeks to days without sacrificing thoroughness.

Monitoring AI Systems and Responding to Incidents

Continuous monitoring is essential to ensure AI systems perform as expected and to identify emerging issues like performance degradation, bias drift, or security threats. Track key performance metrics - such as accuracy rates, prediction confidence, processing speeds, and error rates - and set thresholds to trigger alerts when anomalies occur. Regularly review outputs across demographics to catch any signs of bias. Depending on the system’s risk level, schedule audits quarterly or semi-annually to reassess fairness and accuracy as data evolves.
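The threshold and demographic checks can be sketched in a few lines. The metric names and function names are assumptions, and this version only handles metrics where higher is better; error rates would need a maximum instead of a minimum.

```python
def threshold_alerts(metrics: dict[str, float], minimums: dict[str, float]) -> list[str]:
    """Return the names of metrics that fell below their configured minimum.

    A metric that is missing from `metrics` is also reported, since a gap in
    reporting is itself worth an alert.
    """
    return [
        name
        for name, floor in minimums.items()
        if metrics.get(name, float("-inf")) < floor
    ]


def accuracy_gap(correct_by_group: dict[str, tuple[int, int]]) -> float:
    """Largest difference in accuracy between any two groups.

    Each value is (correct_predictions, total_predictions) for one group;
    groups with no predictions are ignored.
    """
    rates = [correct / total for correct, total in correct_by_group.values() if total]
    return max(rates) - min(rates) if rates else 0.0


latest = {"accuracy": 0.91, "mean_confidence": 0.60}
floors = {"accuracy": 0.90, "mean_confidence": 0.70}
print(threshold_alerts(latest, floors))
print(accuracy_gap({"group_a": (90, 100), "group_b": (80, 100)}))
```

A widening accuracy gap between groups is one possible signal of bias drift; what counts as an acceptable gap is a policy decision for the governance committee, not something the code can decide.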

Incident response plans must also account for AI-specific scenarios. Clearly define escalation paths for issues like model accuracy drops, detected bias in clinical decision-making tools, or suspected model poisoning attacks. Document the steps, responsibilities, and reporting protocols for each scenario.
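Escalation paths are easier to audit when they are written down as data rather than buried in prose. The scenarios below come from the paragraph above; the responder roles, escalation targets, and the choice to route unknown scenarios down the strictest path are assumptions for illustration.

```python
# scenario -> (first responder, escalate to, required record); all values assumed
ESCALATION = {
    "accuracy_drop": ("model risk owner", "AI governance committee", "performance report"),
    "bias_detected": ("model risk owner", "AI governance committee", "bias assessment"),
    "model_poisoning": ("security operations", "CISO", "security incident report"),
}


def escalation_for(scenario: str) -> tuple[str, str, str]:
    """Look up the escalation path; unknown scenarios take the strictest route."""
    return ESCALATION.get(scenario, ESCALATION["model_poisoning"])


responder, escalate_to, record = escalation_for("bias_detected")
print(f"{responder} -> {escalate_to} (document: {record})")
```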

Censinet RiskOps can serve as a centralized hub for AI oversight. Its risk dashboard aggregates real-time data from all systems, functioning like an air traffic control center for AI governance. The platform routes critical findings and tasks to the appropriate stakeholders - such as members of the AI governance committee - ensuring issues are addressed quickly and accountability is maintained across the organization.

Measuring and Improving AI Governance

Setting up governance structures is just the first step; measuring how well they work is what ensures your AI systems are operating safely. Evaluating effectiveness not only highlights weak areas but also proves compliance and builds trust in your AI systems. However, only 30% of companies using AI currently track governance performance through formal metrics [23]. This is where maturity models come into play, offering a structured way to measure and improve governance outcomes.

AI governance maturity models build on traditional risk management practices, providing clear benchmarks for progress. These models evaluate essential capabilities like policy implementation, risk assessments, monitoring, and incident response readiness. For example, healthcare organizations can use frameworks like the Health Sector Coordinating Council (HSCC) AI Governance Maturity Model or the OWASP AI Maturity Assessment (AIMA) as starting points. Begin with a baseline evaluation, then reassess periodically - quarterly or after significant changes, such as deploying new models or responding to updated regulations.

Key performance indicators (KPIs) turn governance goals into measurable outcomes. Metrics to track might include the percentage of AI systems with completed risk assessments, time taken to detect model drift, incident response times, explainability coverage, and audit readiness (measured through up-to-date documentation and version control). Assigning ownership for each KPI is crucial, and automating tracking through MLOps dashboards can streamline the process. Visualizing these metrics for executives and compliance teams ensures everyone stays aligned [23].
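Two of these KPIs are straightforward to compute from inventory and monitoring data. The sketch below assumes each inventory entry is a dictionary with a `risk_assessed` flag and that drift detection times are logged in hours; both are hypothetical conventions, not a standard.

```python
from statistics import median
from typing import Optional


def assessment_coverage(systems: list[dict]) -> float:
    """Percentage of inventoried systems with a completed risk assessment."""
    if not systems:
        return 0.0
    done = sum(1 for s in systems if s.get("risk_assessed"))
    return 100.0 * done / len(systems)


def median_hours_to_detect(detection_hours: list[float]) -> Optional[float]:
    """Median time from drift onset to detection, or None when nothing is logged."""
    return median(detection_hours) if detection_hours else None


inventory = [
    {"name": "sepsis-alert", "risk_assessed": True},
    {"name": "scheduling-bot", "risk_assessed": False},
    {"name": "imaging-triage", "risk_assessed": True},
    {"name": "chat-intake"},  # flag never set, counts as not assessed
]
print(assessment_coverage(inventory))
print(median_hours_to_detect([4, 10, 6]))
```

The median is used here instead of the mean so that a single slow detection does not mask otherwise good performance; either choice is defensible as long as it is applied consistently.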

The need for strong oversight is underscored by a 14% rise in AI-related malpractice claims between 2022 and 2024 [14]. To address this, success metrics should cover areas like accuracy, bias, drift, and hallucinations. Regular manual red-teaming exercises can help test fairness, while enterprise audit teams should periodically evaluate AI model performance in production [2]. Aligning your KPIs with frameworks like ISO/IEC 42001 can further demonstrate adherence to industry standards [23].

Embedding these KPIs into daily operations offers actionable insights for ongoing improvement. Tools like Censinet RiskOps can centralize AI policies, risks, and KPIs to support maturity assessments. The AI risk dashboard consolidates real-time data, acting as a central hub for governance oversight. Additionally, platforms like Censinet AITM™ speed up assessments by automating evidence validation and summarizing vendor documentation. These tools route critical findings to key stakeholders, such as members of the AI governance committee, ensuring accountability and fostering continuous improvement across the organization.

Conclusion

AI governance is no longer optional for healthcare and cybersecurity organizations - it’s a must for deploying AI responsibly. As Alliant Cyber explains, "AI governance is a necessity for organizations looking to harness the power of AI while mitigating risks" [24]. This guide has outlined the key steps: forming governance committees, maintaining detailed AI inventories, aligning with established frameworks, and ensuring continuous monitoring throughout the AI lifecycle. These practices are essential for protecting compliance and safeguarding patient care.

The stakes couldn’t be higher. In September 2020, a ransomware attack on a university hospital in Düsseldorf, Germany, disrupted critical systems. Tragically, a patient had to be transferred 19 miles to another facility and died before receiving treatment. German authorities even launched a manslaughter investigation, highlighting the severe consequences of IT vulnerabilities [5].

At the same time, the potential benefits of AI are enormous. It’s estimated that AI could save the U.S. healthcare system up to $360 billion annually while boosting diagnostic accuracy to over 94% in certain imaging applications. Yet, only 16% of health systems currently have system-wide AI governance policies in place [25]. Organizations that take action now stand to unlock these advantages while managing risks effectively.

To get started, focus on these steps: assemble your AI governance committee, build a thorough inventory of all AI systems, create incident response playbooks, and define clear key performance indicators. Use centralized AI oversight tools to simplify policy management, track risks, and evaluate vendors efficiently.

FAQs

What are the key principles of AI governance in healthcare organizations?

The main principles guiding AI governance in healthcare aim to ensure the ethical, transparent, and responsible use of AI technologies, with patient safety and data security at the forefront. These principles include:

- Strategic alignment: AI initiatives should support the organization's goals and healthcare priorities, ensuring they're integrated into the broader mission.

- Ethics and fairness: AI systems must produce equitable outcomes and actively work to minimize bias, ensuring fairness across all patient groups.

- Transparency and accountability: AI decisions need to be clearly explained, with stakeholders held responsible for the outcomes of these systems.

- Privacy and safety: Protecting sensitive patient information and maintaining strict safety protocols are non-negotiable.

- Continuous monitoring: Regular evaluations are essential to confirm that AI systems remain compliant, effective, and safe while addressing potential risks.

By following these principles, healthcare organizations can embrace technological advancements while ensuring patient trust and safety remain central.

How can healthcare organizations integrate AI governance into their existing cybersecurity practices?

Healthcare organizations can strengthen their cybersecurity strategies by weaving AI governance into their existing frameworks, such as the NIST Cybersecurity Framework 2.0 and the NIST AI Risk Management Framework. This means performing regular risk assessments, keeping a close eye on systems through continuous monitoring, and incorporating AI-specific checks into their cybersecurity policies.

To tackle AI-related challenges like algorithmic bias and data privacy issues, collaboration among IT, clinical, and compliance teams is essential. Using tools that automate vulnerability detection and manage threats can bolster system defenses, making AI deployments more secure, transparent, and compliant with regulations like HIPAA. These efforts help build a cohesive, risk-conscious approach to cybersecurity in healthcare.

How can organizations set up an effective AI governance committee?

To set up a strong AI governance committee, begin by assembling a diverse team of stakeholders. This group should include members from legal, compliance, data science, and executive leadership to ensure a well-rounded approach. Clearly outline each member’s roles and responsibilities to maintain accountability and align efforts with the organization's goals.

Create policies that prioritize ethical AI usage, thorough risk evaluations, and adherence to regulations. It’s also important to regularly monitor AI systems and perform audits to identify and address risks while staying in step with changing regulatory landscapes. Encourage collaboration across departments to maintain transparency and build a culture rooted in trust and adaptability when tackling AI-related challenges.
