AI Model Security Audits: Checklist for HDOs

Post Summary

Healthcare delivery organizations (HDOs) using AI systems face critical security challenges. These systems handle sensitive patient data and influence clinical decisions, making them high-risk targets for breaches. Regular AI model security audits are essential to safeguard patient privacy, ensure compliance with regulations like HIPAA, and mitigate risks such as data leaks, model inversion, and membership inference attacks.

Key Takeaways:

- Audit Scope: Focus on AI tools handling Protected Health Information (PHI) and clinical workflows. Include cloud, on-premises, and vendor-managed systems.

- Core Steps:

  - Build an inventory of AI assets (datasets, models, APIs).

  - Map PHI data flows and identify trust boundaries.

  - Ensure encryption, access controls, and de-identification measures.

  - Test for vulnerabilities (e.g., prompt injection, data poisoning).

  - Monitor logs and metrics to detect issues like model drift or PHI exposure.

- Vendor Oversight: Secure HIPAA-compliant agreements, review security measures, and continuously monitor vendor risks.

- Continuous Improvement: Conduct audits annually or after major system changes, and prioritize remediation of identified vulnerabilities.

Quick Tip:

Tools like Censinet RiskOps™ can streamline asset management, vendor oversight, and risk assessments, reducing administrative burdens while maintaining compliance.

By integrating these practices into governance and maintaining ongoing monitoring, HDOs can better protect sensitive data and reduce risks associated with AI systems.

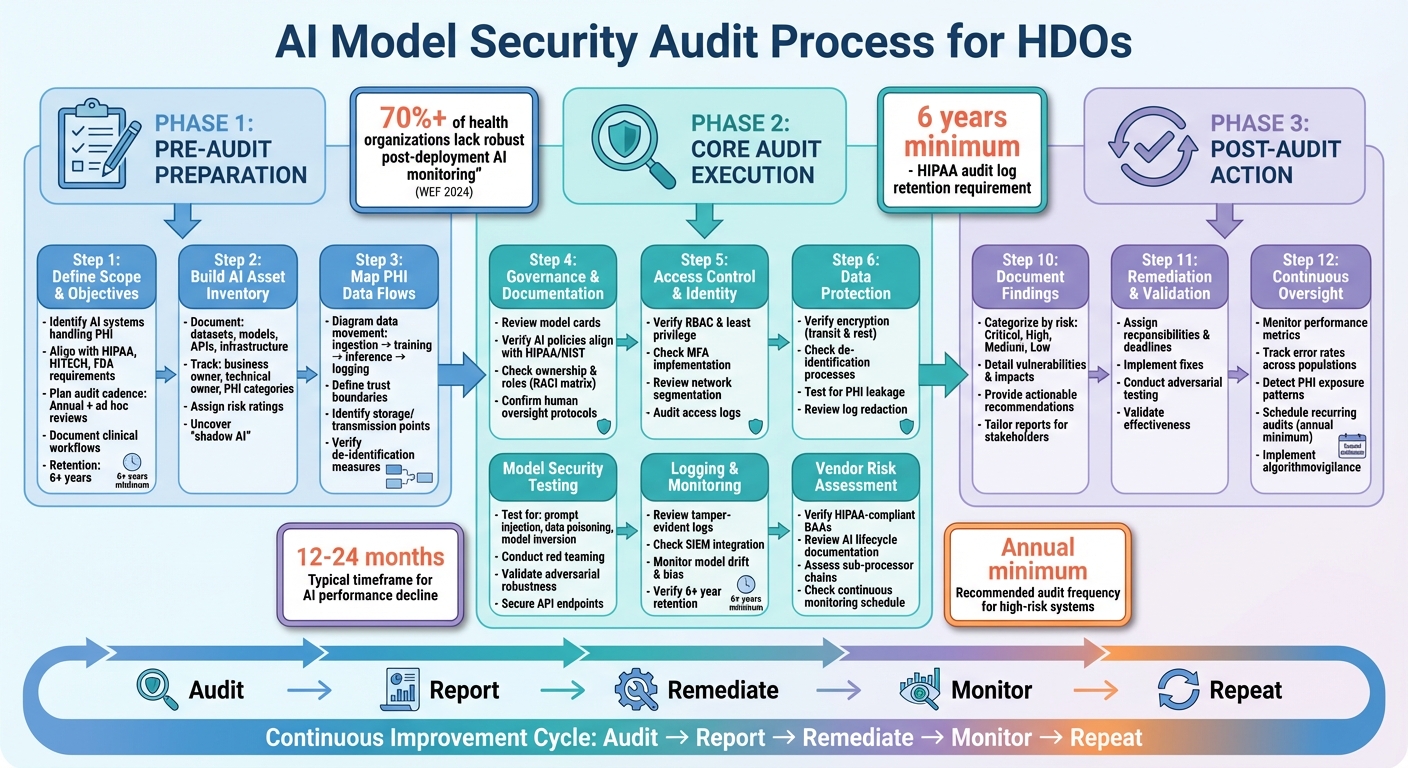

AI Model Security Audit Process for Healthcare Organizations

Pre-Audit Preparation Checklist

Define Audit Scope and Objectives

The first step in preparing for an audit is to define which AI systems will be audited and how often. Your scope should name the specific AI tools and environments involved - whether they’re cloud-based, on-premises, or vendor-managed - and align with the relevant regulatory frameworks, including HIPAA, HITECH, and any FDA requirements for systems supporting clinical decision-making. Plan for record keeping at the same time: HIPAA's Security Rule requires documentation to be retained for at least six years, so audit records should be kept at least that long [2].

Establish the audit cadence. Annual reviews are standard, but you should also plan for ad hoc audits following major system changes, security incidents, or the onboarding of new vendors [2]. Document the business processes and clinical workflows that rely on AI systems, focusing on tools that directly handle PHI or influence patient care. This approach helps prioritize high-risk systems, ensuring they receive the necessary attention to protect sensitive information and meet compliance requirements. A well-defined scope reduces the risk of overlooking critical areas.
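The cadence rule above can be written down as a small check. This is a minimal sketch, not a prescribed method: the event labels and the `audit_due` function are hypothetical names chosen for illustration, and the 365-day interval simply encodes "annual".

```python
from datetime import date, timedelta

# Hypothetical event labels; the triggers themselves come from the text above.
AD_HOC_TRIGGERS = {"major_system_change", "security_incident", "new_vendor_onboarded"}
ANNUAL_INTERVAL = timedelta(days=365)


def audit_due(last_audit: date, today: date, events_since_audit: set[str]) -> bool:
    """Return True when an annual or ad hoc audit should be scheduled."""
    # Any trigger event since the last audit calls for an ad hoc review.
    if events_since_audit & AD_HOC_TRIGGERS:
        return True
    # Otherwise fall back to the annual cadence.
    return today - last_audit >= ANNUAL_INTERVAL
```

For example, `audit_due(date(2024, 1, 1), date(2024, 6, 1), {"security_incident"})` returns `True` even though less than a year has passed.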

Once the scope is set, the next step is to create an accurate and comprehensive inventory of your AI assets.

Build and Verify an AI Asset Inventory

Develop an inventory of all AI components that interact with PHI, including datasets, models, APIs, and supporting infrastructure [2]. During this process, many healthcare delivery organizations (HDOs) uncover "shadow AI" - systems operating without formal oversight.

For each asset, document details such as the business owner, technical owner, intended use, PHI categories handled, data sources, and deployment environment [2]. Assign risk ratings to reflect each asset’s impact on patient safety and data security. This ensures every component’s role in protecting PHI is thoroughly evaluated. Platforms like Censinet RiskOps™ can simplify this process by centralizing information and automating risk assessments for vendors, patient data, medical devices, and supply chain components [1].
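One way to picture an inventory record is as a small data structure. The sketch below is illustrative only: the field names mirror the details listed above, while the `AIAsset` class, the `influences_care` flag, and the rating rule are assumptions rather than a standard scheme.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AIAsset:
    name: str
    asset_type: str                  # e.g. "dataset", "model", "api"
    business_owner: str
    technical_owner: str
    intended_use: str
    phi_categories: list[str] = field(default_factory=list)
    data_sources: list[str] = field(default_factory=list)
    environment: str = "on-premises"  # or "cloud", "vendor-managed"
    influences_care: bool = False

    def risk_rating(self) -> Risk:
        # Hypothetical rule: PHI plus clinical influence is HIGH,
        # either one alone is MEDIUM, neither is LOW.
        handles_phi = bool(self.phi_categories)
        if handles_phi and self.influences_care:
            return Risk.HIGH
        if handles_phi or self.influences_care:
            return Risk.MEDIUM
        return Risk.LOW


def missing_fields(asset: AIAsset) -> list[str]:
    """Return the names of required ownership fields that were left blank."""
    required = ("business_owner", "technical_owner", "intended_use")
    return [name for name in required if not getattr(asset, name).strip()]


inventory = [
    AIAsset("sepsis-predictor", "model", "Chief Nursing Officer", "ML Platform Team",
            "Early sepsis warning", ["vitals", "lab results"], ["EHR"], "cloud", True),
    AIAsset("scheduling-bot", "api", "", "IT Ops", "Appointment scheduling"),
]
```

Running `missing_fields` over the inventory is a quick way to surface records with no documented owner, which is often how shadow AI shows up.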

Keeping the inventory up to date is critical. Outdated or incomplete records are a common issue in AI security reviews and can lead to vulnerabilities. With a detailed and current inventory, you can move on to mapping PHI data flows and trust boundaries.

Map PHI Data Flows and Trust Boundaries

Create detailed diagrams showing how PHI moves through each AI system - from ingestion and training to inference, logging, and archival [2]. This step is essential for identifying potential vulnerabilities, particularly at interfaces and integrations, where issues are more likely to occur than within the model itself [2].

Define trust boundaries, which mark points where data transitions between components with varying levels of trust or control. Typical boundaries include the connections between internal networks and cloud environments, between HDO systems and third-party vendors, and between clinical systems like EHRs or PACS and AI tools [2]. For each boundary, document who controls each side, the authentication and authorization mechanisms in place, the data (especially PHI) that crosses it, and the safeguards used - like encryption, tokenization, or network controls [2][5].

Additionally, pinpoint where PHI is stored, transmitted, or transformed, and verify the effectiveness of any de-identification measures. Address weak points in these boundaries before the audit and document all fixes to demonstrate compliance and preparedness.
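The per-boundary documentation described above lends itself to a simple automated gap check. The following is a sketch under stated assumptions: `TrustBoundary` and `boundary_gaps` are hypothetical names, and the two rules shown are examples, not a complete control set.

```python
from dataclasses import dataclass


@dataclass
class TrustBoundary:
    name: str
    side_a_owner: str
    side_b_owner: str
    auth_mechanism: str      # empty string means nothing is documented
    phi_crosses: bool
    safeguards: set[str]     # e.g. {"encryption", "tokenization"}


def boundary_gaps(boundary: TrustBoundary) -> list[str]:
    """List documentation or safeguard gaps for one trust boundary."""
    gaps = []
    if not boundary.auth_mechanism:
        gaps.append("no authentication documented")
    if boundary.phi_crosses and "encryption" not in boundary.safeguards:
        gaps.append("PHI crosses without encryption")
    return gaps


ehr_to_vendor = TrustBoundary(
    name="EHR to vendor inference API",
    side_a_owner="HDO integration team",
    side_b_owner="AI vendor",
    auth_mechanism="",
    phi_crosses=True,
    safeguards={"network controls"},
)
```

Here `boundary_gaps(ehr_to_vendor)` reports both gaps, which gives the team a concrete list to fix and document before the audit.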

Core AI Model Security Audit Checklist

Governance, Policies, and Documentation

Start by ensuring that every AI system handling protected health information (PHI) has a clear governance structure. This includes documented ownership, a defined clinical use case, and an assigned risk rating.

Each system should also include a model card that outlines details like its clinical purpose, data sources, performance metrics, limitations, and risks [7][8]. The European Data Protection Board emphasizes the importance of such documentation to help auditors quickly understand a system's purpose and associated risks. Without this, it’s tough to verify if the safeguards match the system’s risk profile.

Review internal AI-specific policies to confirm they align with HIPAA’s Security Rule and frameworks like NIST. These policies should detail how new AI deployments are approved, how human oversight of clinical decisions is maintained, and what happens if a model’s performance declines. Additionally, assigning clear roles and responsibilities - such as who monitors models, responds to incidents, and authorizes changes - is crucial. Tools like a RACI matrix can help organize these responsibilities. Strong governance provides the foundation for the access controls and data protection measures covered next.

Access Control and Identity Management

Once governance is established, the next step is to tightly control access to AI systems and PHI. Use role-based access control (RBAC) and follow the principle of least privilege to limit access to only what’s necessary [2][4]. For example, restrict PHI access to authorized personnel and ensure only specific roles can retrain models, modify parameters, or deploy new versions. Research shows that misconfigured cloud storage and poor access control are frequent issues in HIPAA risk assessments for AI workflows [2].
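A deny-by-default permission check illustrates how RBAC and least privilege fit together. The role and action names below are hypothetical examples; a real deployment would normally rely on the identity provider or platform's own policy engine rather than an in-code table.

```python
# Hypothetical role-to-permission mapping for an AI platform.
ROLE_PERMISSIONS = {
    "clinician": {"run_inference"},
    "data_scientist": {"run_inference", "retrain_model", "modify_parameters"},
    "ml_release_manager": {"deploy_model"},
    "auditor": {"read_audit_logs"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())
```

With this table, `is_allowed("clinician", "retrain_model")` is `False`, and a role that is not listed at all gets no access.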

Implement multi-factor authentication (MFA) for all administrative access [2][5]. Additionally, segment networks to isolate the environments used for AI training and inference, especially when they process PHI, and allow only the connections each environment needs [2][5]. During audits, confirm that administrative access logs are detailed and regularly reviewed, and that access rights are re-certified after role changes or staff departures [2][4].

Data Protection and PHI Safeguards

Encrypt all data, both in transit (using TLS) and at rest. Store encryption keys in a protected system such as a hardware security module (HSM) and rotate them regularly [2][5]. Avoid using raw PHI for training unless absolutely necessary and formally approved. Instead, prioritize de-identified, pseudonymized, or synthetic data and ensure the de-identification process is well-documented [2].
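As an illustration of pseudonymization, the sketch below replaces a patient identifier with a keyed hash using Python's standard `hmac` module. The `pseudonymize` function and the sample key are hypothetical. Keyed hashing of identifiers is pseudonymization only; it does not by itself make a dataset de-identified under HIPAA, which is a separate determination.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Map an identifier to a stable token using HMAC-SHA256.

    The same identifier and key always yield the same token, so records can
    still be linked, but the token cannot be reversed without the key.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Illustrative key only; in practice the key would be held in an HSM or
# key management system, never in source code.
demo_key = b"example-key-do-not-use"
token = pseudonymize("MRN-0012345", demo_key)
```

Because the mapping depends on the key, rotating or destroying the key changes or severs the link between tokens and patients, which is worth recording in the de-identification documentation.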

To prevent PHI leakage, deploy input and output filters, especially for applications like generative AI or chatbots. Regularly test models to identify unintended memorization or data leaks [2][3]. Logs used for monitoring and debugging should either exclude PHI or include redaction measures to avoid secondary exposure [2]. Beyond encryption, protecting the AI model itself is critical to prevent targeted attacks.
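Log redaction can be sketched with a filter attached to Python's standard `logging` module. The two patterns below (a U.S. Social Security number format and a hypothetical `MRN` label followed by digits) are assumptions for illustration; pattern matching alone will miss many forms of PHI, so it should be treated as one layer, not a complete control.

```python
import logging
import re

# Illustrative patterns only; real PHI takes many more forms.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
MRN_RE = re.compile(r"\bMRN[:\s]*\d+\b", re.IGNORECASE)


def redact(text: str) -> str:
    """Replace matched identifiers with fixed placeholders."""
    text = SSN_RE.sub("[REDACTED-SSN]", text)
    return MRN_RE.sub("[REDACTED-MRN]", text)


class RedactingFilter(logging.Filter):
    """Redact the fully formatted message before any handler sees it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = ()  # message is already formatted
        return True


logger = logging.getLogger("ai_inference")
logger.addFilter(RedactingFilter())
```

Formatting the message before redacting matters: identifiers passed as logging arguments would otherwise slip past a filter that only inspects the message template.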

Model Security and Robustness

Incorporate AI-specific risks into threat modeling. Address scenarios like prompt injection, data poisoning, model inversion, membership inference, adversarial examples, and insecure third-party integrations [2][5]. Secure AI pipelines by protecting endpoints, applying API rate limits, sanitizing inputs, and enforcing rigorous code reviews and change management for updates [2][5].
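API rate limiting is often implemented with a token bucket. The source does not name a specific algorithm, so the sketch below is one common choice, and the `TokenBucket` class and its parameters are illustrative. The injectable `clock` argument is there only to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Keeping one bucket per API key or client limits how quickly any single caller can probe an inference endpoint, which also slows extraction-style attacks that depend on large query volumes.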

Conduct red teaming and adversarial testing to uncover vulnerabilities like PHI leakage, unsafe instructions, or bias [3][7]. Keep training environments isolated from production systems, and validate new models in non-production settings using safe, realistic data before deployment [2][3]. During audits, ensure these testing procedures are well-documented and that any identified issues are resolved before the system goes live. Once robustness is verified, continuous monitoring and logging are key to maintaining security.

Logging and Monitoring for AI Systems

Use tamper-evident logs to track user access, administrative actions, data ingestion, training runs, inference requests, and model outputs. Synchronize system clocks to support forensic investigations [2][5]. Centralize logs in a security information and event management (SIEM) platform and set up real-time alerts for behaviors like unexpected PHI access, data exfiltration, or model drift [2][3][5].
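One way to make logs tamper-evident is to chain entries by hash, so that changing any earlier entry invalidates every hash after it. This is a minimal sketch with hypothetical function names. A chain like this detects edits only if its latest hash is also anchored somewhere an attacker cannot rewrite, such as a separate system.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(chain: list[dict], event: dict) -> None:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev_hash": prev_hash,
                  "hash": _digest(prev_hash, event)})


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash and confirm the links are unbroken."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, {"user": "admin1", "action": "deploy_model", "ts": "2025-01-01T00:00:00Z"})
append_entry(audit_log, {"user": "svc-train", "action": "training_run", "ts": "2025-01-01T01:00:00Z"})
```

Using UTC timestamps from synchronized clocks, as in the sample events, keeps entries from different systems comparable during an investigation.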

Monitor critical AI metrics, including performance degradation, drift, PHI leakage, and bias indicators. Integrate these metrics into your existing security alert systems [3]. Establish clear log retention policies that satisfy HIPAA and internal guidelines. For example, many organizations retain logs for at least six years, in line with HIPAA's documentation retention period, and then use secure deletion methods to make PHI irretrievable [2]. Regularly review logs, promptly address alerts, and monitor both technical security events and clinical safety signals to maintain comprehensive oversight.
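Drift can be quantified in several ways. The sketch below uses the Population Stability Index (PSI), a common statistic that the source does not specifically name, to compare the binned distribution of an input feature at training time against recent production data. The alert threshold shown is a widely used rule of thumb and should be treated as an assumption to tune.

```python
import math


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Both inputs are per-bin proportions over the same bins.
    PSI = sum over bins of (actual - expected) * ln(actual / expected).
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e = max(e, eps)  # avoid log(0) and division by zero for empty bins
        a = max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


# Rule-of-thumb threshold (assumption): above 0.25 suggests a significant shift.
DRIFT_ALERT_THRESHOLD = 0.25

training_bins = [0.25, 0.25, 0.25, 0.25]
recent_bins = [0.10, 0.20, 0.30, 0.40]
drift_detected = psi(training_bins, recent_bins) > DRIFT_ALERT_THRESHOLD
```

A value crossing the threshold is a prompt for human review, not proof of a problem; it fits naturally as one of the metrics forwarded to existing alerting.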

Third-Party and Vendor Risk Management for AI Systems

Vendor Risk Assessments and BAAs

When working with AI vendors handling Protected Health Information (PHI), treat them as Business Associates under HIPAA and secure a HIPAA-compliant Business Associate Agreement (BAA). However, standard BAAs often fall short for AI systems. You'll need to include clauses that address AI-specific concerns, such as how PHI is used, logging practices, data retention policies, subcontractor oversight, and breach notification procedures [2][6].

During the risk assessment process, ask vendors to provide detailed documentation of their AI lifecycle. This should include information on training data sources, methods for evaluating bias and reliability, and their security measures [7][9]. Vendors should also clarify whether PHI is used for model training and, if so, explain their de-identification, encryption, deletion, and access control processes. Look for specifics on multi-factor authentication (MFA) and network segmentation [2][5]. Be cautious of claims that data is "de-identified", as combining outputs or logs with other data sources can often lead to re-identification risks [6]. These details must be clearly outlined in contracts and supported by technical safeguards.

Once you have robust AI-specific BAAs in place, the focus should shift to ongoing evaluation of vendor performance and associated risks.

Continuous Monitoring of Vendor Risks

Managing vendor risks isn’t something you can set and forget. Develop a routine schedule - at least once a year - and conduct additional reviews when events like product updates, new features, or security incidents occur [2][5]. Keep tabs on vendor performance metrics, their incident history, and any updates to their AI systems or third-party dependencies. Security checklists frequently point out that expanding third-party integrations often introduces new vulnerabilities, making periodic reassessments and monitoring essential [5].

It’s also important to understand your vendor's sub-processor chains, such as their use of large language model (LLM) providers or cloud services. These dependencies should be included in your AI asset inventory and trust-boundary diagrams [2]. Each layer of this chain introduces potential risks, so you need clear visibility into how these relationships are managed and secured.

Using Platforms for Vendor Oversight

In addition to regular reviews, healthcare risk management platforms can simplify vendor oversight. For example, Censinet RiskOps™ is specifically designed for healthcare organizations to manage risks from third-party vendors, including those related to AI. This platform connects healthcare delivery organizations (HDOs) with over 50,000 vendors and products, replacing manual tools like spreadsheets with automated workflows, centralized evidence tracking, and secure data exchange [1].

The platform’s companion tool, Censinet AITM™, speeds up the risk assessment process by allowing vendors to complete security questionnaires quickly. It automatically summarizes vendor evidence, captures integration details, identifies fourth-party risks, and generates risk summary reports based on the collected data [1]. While automation handles much of the heavy lifting, human oversight remains critical. Risk teams can configure rules and review processes, ensuring that automation supports decision-making rather than replacing it [1].

Censinet also serves as a centralized hub for managing AI-related policies, risks, and tasks. Key findings from assessments are routed to relevant stakeholders, such as members of the AI governance committee, for review and approval [1]. The platform’s real-time AI risk dashboard pulls everything together, helping teams address issues efficiently and stay on top of their AI risk management responsibilities.

"Not only did we get rid of spreadsheets, but we have that larger community [of hospitals] to partner and work with, and Censinet RiskOps allowed 3 FTEs to go back to their real jobs - now we do a lot more risk assessments with only 2 FTEs required." [1]

For vendors, tools like Censinet Connect™ make it easier to share completed questionnaires and evidence with prospective HDO customers, streamlining the assessment process and demonstrating compliance early [1]. This collaborative approach allows healthcare organizations to perform thorough risk assessments, compare against industry benchmarks, and maintain continuous oversight of AI vendor risks. At the same time, it reduces the administrative burden on internal teams while ensuring compliance with HIPAA and other regulations.


Audit Reporting, Remediation, and Continuous Improvement

Documenting and Reporting Audit Findings

When preparing an audit report, tailor it for executives, compliance officers, security teams, and clinical leaders. Start with the audit scope, detailing which AI systems were reviewed, the PHI data flows examined, and the security controls tested. Next, include a findings section that organizes issues into categories like governance, access control, data protection, model security, and logging. Assign each finding a risk rating - Critical, High, Medium, or Low - based on its potential impact on HIPAA compliance or patient safety.

Each finding should clearly identify the vulnerability, explain its potential impact on clinical operations or PHI protection, and offer actionable recommendations. For instance, if an AI system logs PHI in plaintext, this would warrant a Critical rating, while missing documentation for a model card might be categorized as Medium.
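A findings register along these lines can be kept as structured data so that reports and remediation queues are sorted consistently. The `Finding` class and its fields are hypothetical; the two sample entries reuse the examples from the text above.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass
class Finding:
    title: str
    category: str        # e.g. "governance", "logging", "access control"
    severity: Severity
    recommendation: str


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings from most to least severe; ties keep their input order."""
    return sorted(findings, key=lambda f: f.severity, reverse=True)


findings = [
    Finding("Model card missing", "governance", Severity.MEDIUM,
            "Document purpose, data sources, metrics, and limitations."),
    Finding("PHI logged in plaintext", "logging", Severity.CRITICAL,
            "Redact PHI at the logging layer and purge affected logs."),
]
report_order = prioritize(findings)
```

Storing the category alongside the severity makes it easy to produce both the executive view (by severity) and the team view (by category) from the same records.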

Planning and Validating Remediation

Turn audit findings into a detailed remediation plan. Assign responsibilities, set clear deadlines, and allocate the necessary resources. For example, the CISO might address access control issues, the Data Science lead could handle model drift concerns, and the Clinical Informatics lead might focus on reducing bias. For AI-specific findings, outline technical validation steps to ensure the effectiveness of corrective actions. For instance, retest models for demographic bias and document the results.

Before closing any remediation task, validate fixes with adversarial testing. For example, after patching a prompt injection vulnerability in an LLM-based system, simulate potential attack scenarios to verify the patch's resilience. Use a centralized tracking system to monitor progress and maintain accountability across teams like IT, security, data science, and clinical operations. Platforms such as Censinet RiskOps™ can simplify this process and promote collaboration. Once fixes are validated, shift your focus to ongoing oversight to address new vulnerabilities as they arise.

Establishing Continuous Oversight

A one-time audit isn’t enough to ensure long-term security. After remediation, implement continuous monitoring to stay ahead of potential risks. According to a 2024 global survey by the World Economic Forum, over 70% of health organizations using AI lack robust frameworks for post-deployment monitoring of AI safety and bias [7][9]. Your organization should monitor model performance metrics, track error rates across different population segments, and detect any PHI exposure or unusual patterns in inputs and outputs. Research highlights that AI performance can decline significantly within 12–24 months due to shifts in patient demographics and clinical practices [7].
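Tracking error rates across population segments can start with a simple grouped calculation. In this sketch the record layout `(segment, actual, predicted)` and the function names are assumptions, and the gap between the best and worst segment is just one possible disparity signal.

```python
from collections import defaultdict
from typing import Hashable, Iterable


def error_rate_by_segment(
    records: Iterable[tuple[str, Hashable, Hashable]],
) -> dict[str, float]:
    """Compute the misclassification rate per segment.

    Each record is (segment, actual_label, predicted_label).
    """
    totals: dict[str, int] = defaultdict(int)
    errors: dict[str, int] = defaultdict(int)
    for segment, actual, predicted in records:
        totals[segment] += 1
        if actual != predicted:
            errors[segment] += 1
    return {segment: errors[segment] / totals[segment] for segment in totals}


def max_gap(rates: dict[str, float]) -> float:
    """Difference between the highest and lowest segment error rates."""
    return max(rates.values()) - min(rates.values())


sample = [("age_65_plus", 1, 1), ("age_65_plus", 0, 1),
          ("age_under_65", 1, 1), ("age_under_65", 0, 0)]
rates = error_rate_by_segment(sample)
```

Small segments produce noisy rates, so a widening gap is best read as a signal to investigate rather than a conclusion about bias.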

Schedule AI audits regularly, prioritizing high-risk clinical AI systems for annual reviews or whenever significant changes occur in data, models, or workflows. Use drift detection tools to catch performance issues early. Additionally, document lessons from AI-related security incidents or near misses and incorporate them into updated policies, training, and technical controls. Adopting an algorithmovigilance approach - continuous monitoring of AI safety and performance, similar to pharmacovigilance in medicine - will help ensure your AI systems remain secure, reliable, and effective over time [4][7].

Conclusion: Building Better AI Model Security in HDOs

AI model security audits are an essential part of ongoing risk management: they help safeguard patient data and support HIPAA compliance. As AI systems evolve through retraining, updates, and new integrations, vulnerabilities can emerge - even in systems that previously passed security checks. To stay ahead, healthcare delivery organizations (HDOs) should conduct formal audits of AI systems handling protected health information (PHI) at least once a year. High-risk clinical applications or significant system changes may require even more frequent reviews.

Taking audit findings into account, HDOs should weave AI security measures into every aspect of their operations. Treating AI security as an integral part of governance - not just a standalone technical issue - sets successful organizations apart. This means incorporating AI asset inventories and PHI flow documentation into governance frameworks, alongside implementing strong access controls, encryption, detailed audit logs, and continuous monitoring to detect model drift, bias, or potential PHI exposure.

External vendor management is just as critical. Tools like Censinet RiskOps™ can help HDOs simplify vendor risk assessments, ensure Business Associate Agreements are in place, and monitor remediation efforts across their AI ecosystems.

Shifting from sporadic checks to a fully developed AI security program takes time but follows a clear roadmap. Start by inventorying your AI systems and defining the scope of audits within the first 90 days. Over the next three to six months, complete initial audits, document the results, and start addressing any vulnerabilities. By the end of the first year, establish recurring audit schedules, implement continuous monitoring, and build a solid track record of regulatory compliance. As Matt Christensen, Sr. Director GRC at Intermountain Health, points out:

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare." [1]

The healthcare sector's unique challenges - ranging from medical devices to supply chains, clinical applications, and sensitive patient data - demand tailored solutions. By using healthcare-specific risk management platforms and committing to regular audits, HDOs can transform AI security from a compliance hurdle into a strategic advantage that protects patients while fostering innovation.

FAQs

What are the essential steps to ensure AI model security during audits for healthcare delivery organizations?

To verify AI model security during an audit, healthcare delivery organizations (HDOs) should take several key steps:

- Pinpoint and evaluate risks tied to AI models, focusing on areas like data privacy, compliance, and integrity.

- Examine data security protocols to protect sensitive information, such as PHI, during the training and testing phases.

- Analyze potential weaknesses in the models to guard against threats like adversarial attacks.

- Ensure compliance with regulations such as HIPAA, verifying that all processes align with legal standards.

- Conduct regular security tests, including penetration testing and vulnerability scans, to uncover and fix any gaps.

- Implement ongoing monitoring to quickly identify and address new threats as they arise.

Platforms like Censinet RiskOps™ can simplify these tasks, helping HDOs maintain compliance while strengthening security measures.

What steps can HDOs take to continuously enhance AI security after an audit?

To keep AI security robust, healthcare delivery organizations (HDOs) need to prioritize consistent risk monitoring and adjust security measures based on audit results. Tools like Censinet RiskOps™ can simplify this process by automating risk assessments, comparing performance to industry benchmarks, and staying ahead of new threats.

It's equally important to routinely evaluate and enhance security protocols, use audit feedback to make improvements, and promote a strong cybersecurity mindset across the organization. These steps are key to maintaining resilience and meeting compliance standards over time.

What challenges do AI systems create for managing vendor risks in healthcare organizations?

AI systems bring distinct challenges to vendor risk management for healthcare delivery organizations (HDOs). These challenges stem from their intricate nature, the risk of algorithmic bias, and the struggle to maintain security and regulatory compliance in a landscape of constantly changing rules.

To tackle these issues, HDOs should adopt continuous monitoring practices and use advanced tools designed to simplify risk assessments and address potential weaknesses. This approach helps safeguard patient data, protect clinical applications, and secure other essential systems while staying aligned with industry regulations.