Checklist: AI Model Security Testing

Post Summary

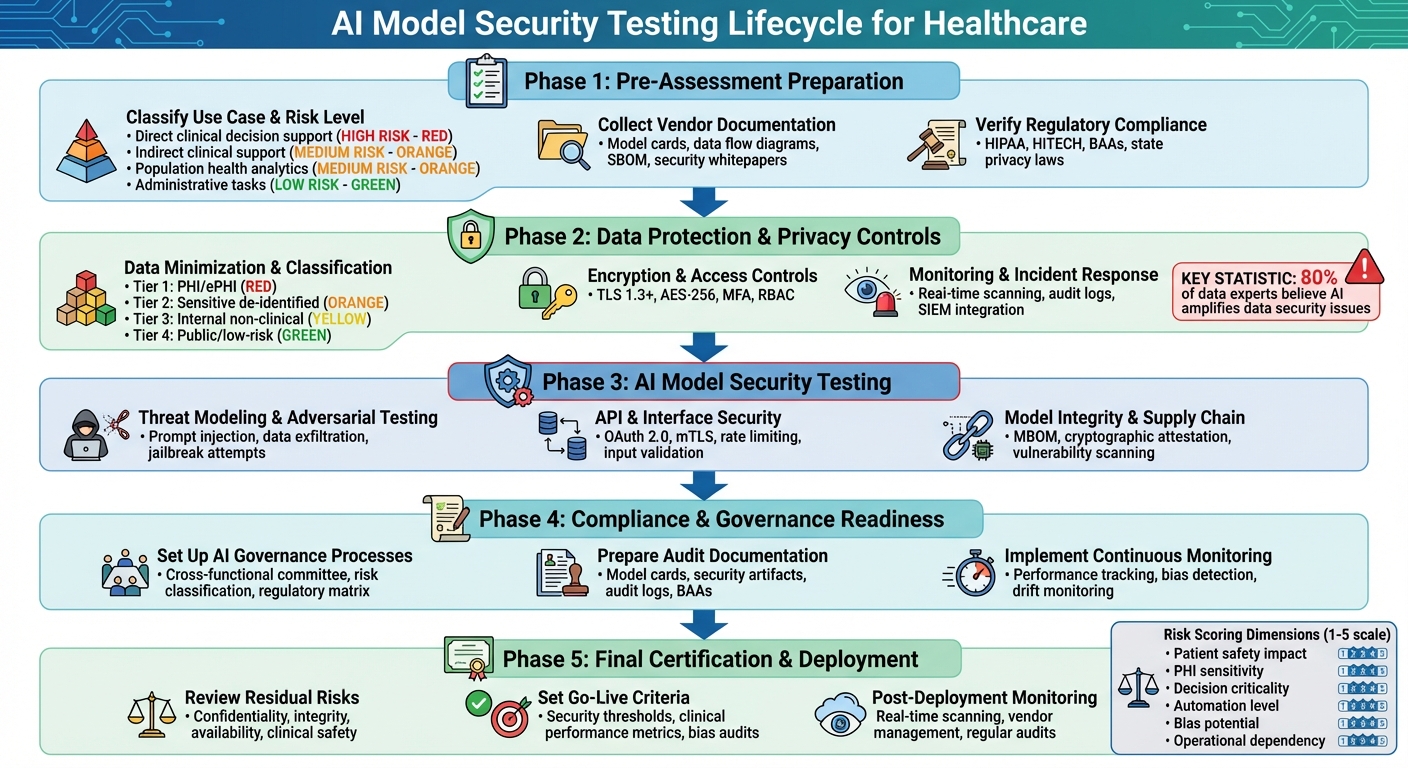

When integrating AI into healthcare, security testing isn't optional - it’s a must. AI systems often handle sensitive health data and influence patient care, making vulnerabilities a serious risk. Traditional security approaches don't cut it for AI. Here's what you need to know:

- Key Risks: AI systems can expose patient data, generate biased results, or fail to handle adversarial attacks like prompt injections or data poisoning.

- Regulatory Compliance: HIPAA and HITECH require healthcare organizations to ensure AI vendors meet strict security and privacy standards.

- Security Testing Steps: Evaluate training data, model architecture, and API security. Test for adversarial robustness, encryption, and access controls.

- Governance: Build a risk management framework, enforce vendor accountability, and maintain audit-ready documentation.

- Ongoing Monitoring: Track model performance, detect anomalies, and conduct regular audits to address evolving threats.

This checklist provides a step-by-step guide to assess risks, secure AI systems, and ensure compliance while safeguarding patient safety and data privacy.

AI Model Security Testing Framework for Healthcare Organizations

Pre-Assessment Preparation

Before diving into technical testing, pre-assessment lays the groundwork for securely integrating AI systems.

Classify Use Case and Risk Level

Start by defining the AI model's purpose and assessing its risk level. Categorize the model based on its function:

- Direct clinical decision support: Tasks like diagnosis, treatment selection, or medication ordering.

- Indirect clinical support: Workflow triage, documentation assistance, or patient routing.

- Population health and analytics: Broader data-driven healthcare insights.

- Administrative tasks: Non-clinical activities like billing or scheduling.

Each category carries a different level of risk. For example, models that influence diagnoses or treatments are high-risk, as errors could harm patients or compromise protected health information (PHI). Medium-risk models might handle tasks like prioritization or documentation, where mistakes disrupt workflows but don’t directly endanger patients. Low-risk models, such as scheduling automation, involve minimal clinical decision-making and limited PHI exposure.

To evaluate risk, create an internal scoring system that rates the model on several dimensions:

- Patient safety impact: Could errors directly harm patients?

- PHI sensitivity: Does the model process sensitive data or send PHI to external vendors?

- Decision criticality: Are outputs used automatically, or do clinicians review them?

- Automation level: Is the system fully automated or human-supervised?

- Bias potential: Could the model produce discriminatory results?

- Operational dependency: Would system downtime disrupt clinical workflows?

Use a scale (e.g., 1–5 for each factor) to calculate an overall risk score. This score will guide the depth of security testing, authentication requirements, logging protocols, and contingency planning. Document the risk classification using a standardized template, including details like intended users (e.g., clinicians or administrative staff), patient populations, data types (including PHI categories), and decision criticality.

Maintain an inventory of all AI systems - active, potential, or pilot projects - with information about their purpose, data sources, integrations, vendors, and model types. This documentation will serve as a key reference for future security, privacy, and validation efforts.

Collect Vendor Documentation

Gather detailed documentation from vendors to understand the model's architecture, data flows, and security measures. Request the following:

- Model cards: Information on training data sources, intended use cases, limitations, known biases, and performance metrics.

- Data flow diagrams: Details on PHI entry points, processing, storage, transmission, outputs, logging practices, and third-party subprocessors.

- Software Bill of Materials (SBOM): A list of all open-source and third-party components, libraries, frameworks, runtime environments, and infrastructure dependencies.

- Security whitepapers: Information on encryption methods, access controls (e.g., role-based access control or multi-factor authentication), logging capabilities, and secure deployment practices.

- Vulnerability reports: Results of recent penetration tests, remediation actions, secure development lifecycle practices, and incident response plans.

- Compliance documentation: Business Associate Agreements (BAAs) specific to AI data use, certifications (e.g., SOC 2 Type II, ISO 27001, HITRUST), and privacy or risk assessments.

Evaluate the quality of these documents by checking their completeness (all critical artifacts included), specificity (clear details instead of vague statements), timeliness (recent versions), and healthcare relevance (explicit references to HIPAA, PHI handling, and breach protocols). Be cautious of red flags like missing security details, lack of a vulnerability disclosure process, inconsistent information, or no healthcare-specific references.

Platforms like Censinet RiskOps™ can simplify this process by centralizing vendor questionnaires, SBOMs, certifications, and remediation tracking - especially helpful for organizations managing multiple AI vendors. Once the documentation is complete, move on to verifying regulatory compliance.

Verify Regulatory Compliance

Ensure the AI model complies with HIPAA, HITECH, and your organization's internal policies. Map the model's use case to key regulatory standards:

- HIPAA Privacy and Security Rules: Confirm safeguards like access controls, audit trails, integrity protections, transmission security, and workforce training.

- HITECH breach notification: Verify that vendors can detect incidents quickly, provide logs, support forensic analysis, and export data when needed.

- Minimum necessary standard: Make sure the AI processes only the minimum required PHI, with all access logged.

- HIPAA-compliant BAA: Check that the agreement explicitly covers issues like data retention, deletion commitments, breach notifications, and whether data (e.g., prompts or outputs) is used to train or improve the model.

Standard BAAs often lack clauses addressing AI-specific risks, such as the reuse of training data or long-term storage of prompts and responses. Tailor BAAs to address these concerns during pre-assessment.

Additionally, align the AI system with your organization's AI usage policies, which might outline permitted use cases, prohibited content, and clinical oversight requirements. Determine whether the AI qualifies as Software as a Medical Device (SaMD) or regulated clinical decision support, and, if so, collaborate with regulatory affairs to address FDA requirements before deployment.

For organizations operating in multiple states, review state-specific privacy laws that may impose stricter rules on health or biometric data.

To ensure thorough compliance, create a traceability matrix that maps regulatory requirements - such as those from the NIST Cybersecurity Framework, the NIST AI Risk Management Framework, or HITRUST - to vendor controls and internal safeguards. This matrix will help identify gaps to address or accept as residual risks. Align your documentation with established guidelines like the OWASP AI Testing Guide and emerging AI audit checklists. This preparation ensures that the next phase of testing will cover critical areas such as adversarial robustness, bias, explainability, and data protection, safeguarding both PHI and patient safety.

Data Protection and Privacy Controls

Once risks are classified and compliance is verified, the next step is ensuring secure handling of sensitive data, such as PHI and ePHI, throughout the AI system. This foundation supports the technical safeguards detailed below.

Data Minimization and Classification

After identifying risks and reviewing vendor documentation, it's crucial to manage and classify data carefully to limit exposure. Start by creating an inventory of all data processed by the AI model. This includes PHI/ePHI (like patient identifiers and clinical data), de-identified clinical information, and administrative records. Organize these into tiers:

- Tier 1: PHI/ePHI

- Tier 2: Sensitive de-identified data

- Tier 3: Internal non-clinical data

- Tier 4: Public or low-risk data

This tiered system aligns with HIPAA's "minimum necessary" standard. For each AI use case, document the data elements the model will handle - whether they include PHI/ePHI, de-identified, or pseudonymized data - and note applicable regulations, such as HIPAA or state privacy laws. Store this documentation in a risk management platform like Censinet RiskOps™ to maintain a clear record of data flows and their justifications.

To apply data minimization, analyze each use case to identify only the essential data needed for the model’s performance. For instance:

- Use tokens or pseudonyms instead of direct identifiers in prompts and API payloads.

- Trim or mask free-text notes to transmit only clinically relevant details.

- Replace exact timestamps with approximate date ranges (e.g., "within the last 30 days").

- Remove identifiers at integration points or ETL layers before data enters the model.

These minimization rules should be encoded as technical controls, such as input filters or middleware services, and validated during pre-deployment testing to confirm the model still meets its goals with reduced data.

Where possible, rely on de-identified or synthetic data for training, tuning, and testing. HIPAA outlines two de-identification methods: Safe Harbor, which removes 18 specific identifiers, and Expert Determination, which uses statistical techniques to minimize re-identification risks. Synthetic datasets, created using methods like generative adversarial networks (GANs) or variational autoencoders (VAEs), can retain statistical properties crucial for model performance while reducing exposure to PHI. Use identified PHI only in production inference when absolutely necessary, and document the reasons for doing so. Always ensure de-identified or synthetic data cannot be easily re-linked to individuals, even when combined with external datasets.

Encryption and Access Controls

Encrypting PHI/ePHI both in transit and at rest is non-negotiable. For data in transit, use TLS 1.3 or higher with strong cipher suites and certificate pinning. For data at rest, deploy FIPS-validated cryptographic modules and AES-256 encryption for databases, storage, and backups, alongside secure key management practices.

Contracts and security questionnaires should address how training data, prompts, outputs, logs, and system metrics are stored and encrypted. These elements, particularly logs and interaction histories, may contain incidental PHI and must adhere to the same encryption and retention standards as primary data. Confirm through documentation - such as SOC 2 or HITRUST reports - that the vendor applies encryption controls across all environments, including staging, testing, analytics, and backups.

Access controls are equally critical. Implement least-privilege access, role-based access control (RBAC), and multi-factor authentication (MFA) while adopting zero-trust principles. Define specific AI-related roles (e.g., clinician user, data scientist, or vendor support) and ensure each role has access only to the data and model capabilities they require. Require MFA for accessing AI consoles, configuration portals, and logs, and integrate authentication with corporate identity providers using protocols like SAML or OIDC.

For vendor access, contracts should enforce RBAC for support staff and limit administrator access to PHI. Access should be granted only for specific tasks, on a time-limited basis, and with full activity logging. Regular audits and simulated attacks (e.g., red teaming) can help validate these controls.

Network segmentation is another key layer of defense. Isolate AI systems and PHI repositories from corporate networks to limit lateral movement during security incidents. A zero-trust approach ensures all users and devices are continuously verified, with micro-segmentation enforcing dynamic access controls.

Monitoring and Incident Response

Encryption and access controls are critical, but continuous monitoring ensures anomalies are detected and addressed promptly. Maintain tamper-resistant audit logs that track user access, data inputs and outputs, and applied policies. These logs should include details like user IDs, roles, source applications, timestamps, and metadata, while ensuring PHI is managed per established policies.

Use real-time scanning tools to monitor inputs and outputs for PHI patterns, prompt injection attempts, or data exfiltration behaviors. These tools, whether developed in-house or provided by vendors, should integrate with your existing security information and event management (SIEM) systems. Set clear thresholds for anomalies, such as unusual query volumes or unexpected data patterns, and regularly review metrics like PHI leak rates or blocked prompt injections.

Your incident response plan should address AI-specific risks, such as:

- PHI exposure through prompts or outputs

- Compromised AI API keys

- Leakage of training data containing PHI

- Adversarial prompts leading to unsafe recommendations

- Cross-tenant data exposure

Develop detailed playbooks for detecting, containing, and addressing these scenarios. For example, you might disable integrations, revoke API keys, or follow forensic protocols to investigate incidents. Conduct tabletop exercises and simulated incidents - like test prompts mimicking PHI exfiltration - to ensure your monitoring systems are effective and your response teams are prepared. Lessons from these exercises should guide updates to controls, vendor requirements, and training protocols.

Platforms like Censinet RiskOps™ can consolidate vendor logs and alerts into your SIEM and risk management workflows, providing unified oversight of third-party AI tools and aligning AI data protection with broader risk management strategies.

AI Model Security Testing

This phase builds upon existing data protection measures, focusing on actively testing AI models for vulnerabilities that might compromise patient safety or data integrity. The goal is to identify and address potential security risks, such as exposing PHI, jeopardizing clinical safety, or allowing unauthorized changes.

Threat Modeling and Adversarial Testing

Start by mapping out the entire AI system architecture. This includes data sources like EHR, PACS, and claims databases, preprocessing pipelines, third-party models, hosting environments, APIs, and downstream clinical applications. Identify critical assets such as PHI/PII, model weights, prompts, API keys, and training data. Then, pinpoint all access points to these assets, whether through internal staff or external users. Clearly define trust boundaries, including network segments, VPNs, VPCs, and tenant boundaries in multi-tenant SaaS platforms.

Use established threat modeling frameworks, such as STRIDE adapted for AI or the OWASP AI Testing Guide, to identify specific risks. These might include prompt injection, adversarial examples, model theft, data exfiltration, inversion attacks, model poisoning, and API abuse. For each threat, document its likelihood and potential clinical impact - like misdiagnosing stroke patients or unauthorized PHI exposure - and map out existing or needed controls, such as RBAC, network segmentation, PHI de-identification, and input/output firewalls.

Conduct adversarial testing and red-teaming exercises to identify vulnerabilities. For generative or conversational models, test for prompt injection by embedding instructions to bypass safety rules, attempt data exfiltration by extracting PHI or internal details, and try jailbreak methods to override clinical restrictions. Additionally, test whether the model can be manipulated into providing unsafe medical advice that contradicts clinical guidelines. For predictive or diagnostic models, create adversarial examples - small input changes (e.g., imaging data or lab values) that lead to clinically significant misclassifications - and test fraud or anomaly detection evasion. Stress-test the model with malformed, extreme, or out-of-distribution inputs to observe how it behaves under unexpected conditions.

Red-teaming simulates real-world attackers. Assemble teams to manually and automatically test the model’s security and safety defenses. Document all attack paths, implement fixes - such as updated policies, reinforced guardrails, or enhanced monitoring - and retest to ensure vulnerabilities are resolved. These findings, along with remediation steps, should inform go/no-go deployment decisions and residual risk documentation for clinical governance.

After completing threat modeling, ensure API endpoints are equally fortified against these attacks.

API and Interface Security

Since third-party AI models are often accessed via APIs, securing these endpoints is critical. Treat AI APIs as high-risk and enforce strong authentication and authorization protocols, such as OAuth 2.0, mutual TLS (mTLS), or signed requests. Use granular role-based access control (RBAC) to ensure only authorized users and applications can send PHI to models or retrieve sensitive outputs. Apply the principle of least privilege - for instance, a clinician querying a diagnostic model shouldn’t have access to administrative functions or training data.

To protect against injection attacks and malformed payloads, implement strict input validation and schema enforcement. Use rate limiting and throttling to prevent denial-of-service attacks, adversarial probing, or automated abuse of model endpoints. Isolate AI model APIs from core EHR and clinical systems through network segmentation, minimizing lateral movement risks if an endpoint is compromised. Monitor and log all API activity, capturing key details like caller IDs, timestamps, data volumes, error codes, and unusual patterns. Feed these logs into a SIEM system for correlation with other security events.

For third-party hosted models, evaluate the vendor’s API security measures through documentation and testing. Confirm encryption in transit (TLS 1.3 or higher), authentication protocols, and incident response SLAs. Ensure a Business Associate Agreement (BAA) is in place for any PHI transmitted to the vendor’s API. Conduct penetration testing on APIs, including attempts to bypass authentication, replay tokens, escalate privileges, or exploit injection flaws. Test for edge cases by fuzzing prompts and parameters to identify scenarios where the API might inadvertently reveal sensitive system details or PHI.

Securing APIs is just one part of the equation; maintaining the integrity of the model and its supply chain is equally important.

Model Integrity and Supply Chain Security

Ensure the authenticity of AI models by creating a model bill of materials (MBOM) that lists details like the model name, version, training data sources, frameworks, libraries, and dependencies. Verify the model’s origin by obtaining developer identities, training data source details, and licensing information. Use cryptographic attestations or checksums to validate model artifacts, containers, or images and compare them against secure, out-of-band values. Only pull images from trusted registries with vulnerability scanning enabled.

Integrate supply chain scanning into your CI/CD pipeline. Use tools like SCA and vulnerability scanners to check AI libraries, data processing components, and container images. Regularly apply patches and updates to AI frameworks when new vulnerabilities are identified, and re-scan after updates. Secure model artifacts in storage and deployment with access-controlled repositories and encryption at rest. Enforce version control and rollback options to revert to trusted models if a new version is found to be compromised, biased, or unsafe. Consider using model watermarking or fingerprinting to detect tampering or unauthorized reuse.

Extend these reviews to the vendor’s development and delivery pipeline. Assess their DevSecOps practices, including code review, environment segregation, access controls, and incident response plans for model or data breaches. Identify all dependencies - such as libraries, frameworks, and ML toolchains - and verify them against vulnerability databases. Ensure timely patching and secure configurations. Platforms like Censinet RiskOps™ can help integrate vendor assessments into your broader risk management workflows, applying the same rigorous standards used for other clinical applications and medical devices.

sbb-itb-535baee

Compliance and Governance Readiness

After conducting thorough security testing, the next step is ensuring that AI models adhere to both regulatory and internal governance standards. For healthcare organizations, this means transforming test results into a governance framework that meets U.S. regulatory requirements and is prepared for audits. This process ensures that third-party AI models comply with regulations like HIPAA, HITECH, state privacy laws, and new AI-specific guidance from agencies such as the FDA and ONC. The focus is on creating accountability, maintaining audit-ready documentation, and establishing ongoing oversight to keep AI deployments compliant over time. Once these compliance measures are in place, the next priority is to develop governance processes.

Set Up AI Governance Processes

Establish a cross-functional AI Governance Committee that includes representatives from clinical leadership, information security, privacy, compliance, legal, risk management, and data science. This committee will review AI use cases, approve deployment decisions, and oversee risk management. Develop formal policies for AI usage and risk management, detailing:

- Permitted use cases (e.g., clinical decision support versus direct diagnosis)

- Human-in-the-loop requirements

- Risk assessment procedures

- Incident response protocols

- Decommissioning standards

Classify third-party AI models based on risk levels. For example, diagnostic tools handling PHI require more rigorous validation and monitoring than back-office automation tools. Assign clear roles for oversight, such as a business owner for each application, a model steward for technical management, and a risk owner for documenting residual risks.[6][7]

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare." - Matt Christensen, Sr. Director GRC, Intermountain Health[1]

To ensure compliance, map regulatory requirements to specific AI controls using a regulatory-to-control matrix. For instance, HIPAA §164.312 technical safeguards can be linked to measures like role-based access to model APIs, PHI masking in prompts, and immutable interaction logs.[2][6] This matrix serves as the foundation for demonstrating compliance during audits and supports repeatable approval workflows for new AI integrations.

Prepare Audit Documentation

Strong audit documentation is essential for verifying compliance. Start by maintaining detailed data governance records, including:

- Classification of all data used (e.g., PHI, PII, or de-identified)

- Documentation of de-identification methods

- Data lineage tracing from source systems through training and inference[6][8]

Gather model documentation such as model cards, which should outline intended use, limitations, training data characteristics, performance metrics across patient groups, and known biases. Link these to internal risk ratings and approval records.[4][7]

Preserve security testing artifacts like vulnerability assessments, penetration test reports, adversarial testing results, and red-team exercise summaries. For AI models in clinical workflows, store validation reports that document test designs, performance metrics, and clinical leader approvals before deployment.[6] Keep policy documents and SOPs related to AI usage, along with training records showing staff competence in safe AI practices.[6]

Audit logs should capture every PHI access event, AI inference request, administrative change, and model version update in a tamper-proof format. Regularly review these logs and document the process.[2][8] The Telehealth Resource Center’s 2024 Health Care Artificial Intelligence Toolkit emphasizes the importance of "routine audits", "performance reviews", and continuous security testing as part of compliance efforts.[6]

| Governance Area | What To Implement | Evidence for Audits |

|---|---|---|

| AI Governance Structure | Oversight committee, usage and risk policies | Committee charters, approved policies, risk register[6][7] |

| Vendor Management | BAAs, security questionnaires, compliance attestations | Signed BAAs, vendor certifications, risk assessments[2][6][8][7] |

| Model Documentation | Model cards, intended-use statements, bias analysis | Versioned model cards, validation summaries, bias audits[6][4][7] |

| Security Controls | Encryption, RBAC, MFA, network segmentation | Access matrices, network diagrams, encryption records[2][6][8] |

| Testing & Validation | Clinical validation, adversarial testing | Test plans, validation results, penetration test reports[2][6][4] |

| Continuous Monitoring | Performance, PHI leakage, drift monitoring | Dashboards, incident tickets, audit reports[2][3][6] |

Platforms like Censinet RiskOps™ simplify vendor assessments, automate evidence collection (e.g., BAAs, SOC reports, security questionnaires), and maintain audit trails for third-party AI models.

Implement Continuous Monitoring

Continuous monitoring strengthens risk management by building on earlier security and data protection efforts. Use real-time AI observability tools to track model performance, PHI leakage, bias, drift, and anomalies. Integrate alerts into your security operations center (SIEM) so AI-related incidents are handled like other security events.[2][3] Conduct regular audits to confirm adherence to usage policies, data privacy rules, and security standards, documenting any remediation steps.[6] Schedule performance reviews against clinical and operational metrics, and retrain or adjust models when necessary.[6][3]

Perform recurring security tests, including vulnerability assessments and penetration tests, especially after model updates or infrastructure changes.[6][4] Reassess vendor risk periodically, reviewing certifications, incident history, and any updates to models or infrastructure. Tools like Censinet RiskOps™ streamline these assessments and provide ongoing monitoring tailored to healthcare needs.

"Not only did we get rid of spreadsheets, but we have that larger community [of hospitals] to partner and work with." - James Case, VP & CISO, Baptist Health[1]

Establish policies for testing schedules, such as annual comprehensive reassessments and more frequent checks after major updates or changes. Use centralized platforms to track assessments, findings, remediation tasks, and approvals, ensuring all documentation is versioned and accessible for regulators, payers, or internal audits.[6]

Final Certification and Deployment

Securing formal approval and establishing operational controls are the final steps before deploying an AI model. This process turns all the prior testing, documentation, and risk evaluations into a clear decision to proceed - or not. The focus here is on documenting any remaining risks, obtaining stakeholder sign-offs, setting clear deployment conditions, and implementing robust monitoring to ensure the AI model operates safely and securely over time. These steps build on earlier compliance efforts to ensure the deployment is both secure and controlled.

Review Residual Risks and Secure Approvals

Residual risks must be carefully reviewed and documented, building on earlier assessments of compliance and risk categories. These risks typically fall into several areas:

- Confidentiality: Risks such as PHI leakage through prompts or outputs.

- Integrity: Issues like data poisoning or unauthorized model changes.

- Availability: Concerns around vendor dependencies or service outages.

- Clinical Safety: Risks including hallucinations, biases, or potential misdiagnoses.

Each risk should be quantified using measurable metrics - likelihood (e.g., rare, possible, likely) and impact (e.g., low, moderate, high). These metrics help assess risks in terms of patient safety, financial exposure, compliance with HIPAA, and organizational reputation. Once quantified, map these risks into your enterprise risk management framework and summarize them in a one-page briefing for executive review.

Risks should also be categorized by type - security, clinical quality, operational workflow, and compliance. Then, decide how to handle each: accept, transfer (e.g., via insurance), mitigate further, or address through changes to the model before deployment.

Formal approval must come from key stakeholders, including:

- Clinical Leadership: For patient safety and clinical efficacy.

- Information Security Team: To address cybersecurity and PHI protection.

- Compliance and Privacy Officers: For adherence to HIPAA and internal policies.

- Business or Operations Owners: To manage workflow and liability concerns.

All approvals should be recorded in a standardized template, stored in your organization’s governance system, and include renewal dates and re-approval conditions, supported by contractual documentation.

Set Go-Live Criteria

Before deploying the AI model, establish measurable, objective thresholds that must be met. These criteria should be testable in pre-production or pilot environments and cover key areas:

- Security and Privacy: Ensure no critical vulnerabilities remain, access controls are validated, and HIPAA-compliant data practices are in place.

- Clinical Safety and Performance: Verify minimum accuracy, sensitivity, and specificity metrics are met, with acceptable error rates and clear escalation workflows.

- Bias and Fairness: Confirm bias audits show acceptable disparities across demographic groups.

- Reliability and Availability: Meet SLA targets for uptime and error rates, with tested failover mechanisms.

- Observability: Set up dashboards and alerts for key metrics like model drift or potential PHI leakage.

- User Readiness: Complete staff training, update SOPs, and provide clear usage guidance.

Start with a limited deployment scope, such as specific departments or patient groups, and define criteria for expansion based on monitored performance. Establish rollback conditions - situations where the AI model would be disabled or reverted - and conduct a structured go/no-go review meeting. This meeting should recap the use case, present testing results, evaluate residual risks, and confirm that all go-live criteria have been met. Any exceptions must be documented with compensating controls and time limits.

Once the model is live, shift focus to ongoing monitoring and vendor oversight.

Post-Deployment Monitoring and Vendor Management

After deployment, continuous monitoring is essential to identify issues with security, privacy, or clinical performance. Implement real-time scanning of inputs and outputs to detect PHI leaks, biased content, or prompt injection attempts. Use anomaly detection to track usage patterns, latency, token consumption, and error rates, which can help spot potential attacks, misuse, or performance drops. Monitor the model’s performance and drift using ground-truth samples or retrospective reviews, particularly for clinical outcomes.

Provide a feedback channel for clinicians to report unsafe outputs. Set up alerts for critical issues like PHI exfiltration, repeated jailbreak attempts, or sudden performance declines. Display these metrics on dashboards accessible to security, clinical safety, and operations teams, and ensure thresholds trigger automatic incident response or temporary throttling and rollback if needed.

Schedule regular audits - at least annually or more frequently for higher-risk models. These should cover security testing, performance, bias, and compliance. Triggers for additional reviews may include:

- Vendor updates to the model or major configuration changes.

- Significant shifts in training data or detection of model drift.

- Security incidents or near misses.

- Expansion to new patient groups or regions.

- Changes in regulatory requirements or organizational policies.

Vendor management is critical. Formalize contracts that outline security and privacy controls, breach notification timelines, and rights to audit or obtain third-party attestations (e.g., SOC 2). Specify SLAs for uptime, response times, and support, with clear escalation paths and penalties for non-compliance. Vendors should notify you promptly about model changes, new training data, or architecture modifications that could affect risks. Require vendors to participate in joint incident response exercises, periodic reviews, and provide logs and metrics for your monitoring tools.

"Not only did we get rid of spreadsheets, but we have that larger community [of hospitals] to partner and work with." - James Case, VP & CISO, Baptist Health

Platforms like Censinet RiskOps™ can simplify vendor risk management by maintaining updated vendor profiles, supporting regular risk assessments, and facilitating collaboration between healthcare organizations and vendors to address vulnerabilities.

Conclusion

This checklist outlines a step-by-step approach to managing the entire lifecycle of third-party AI models - from initial assessment to ongoing post-deployment monitoring. Conducting thorough security testing is not just a recommendation; it’s a necessity to safeguard patients and protect PHI within the U.S. healthcare system. By following these steps, organizations can ensure AI systems comply with HIPAA regulations, prioritize patient safety, and uphold the trust that is vital to effective healthcare delivery.

AI security testing needs to go beyond traditional IT practices. Healthcare providers must evaluate adversarial risks and verify the integrity of AI models. A study by Mindgard highlights this challenge, revealing that 80% of data experts believe AI amplifies data security issues[9]. This statistic underscores the importance of implementing AI-specific testing protocols.

Once deployed, continuous monitoring becomes essential to address evolving risks. AI systems adapt over time as they interact with new data, integrate with other systems, and face emerging threats. To stay ahead, organizations need clear governance structures, including documented policies, business associate agreements, audit trails, and incident response plans. These measures not only ensure compliance but also simplify audits and regulatory reviews.

To put this into practice, start by applying the checklist to a high-impact AI use case. Once the controls prove effective, scale the process to other areas. Many of these measures build on existing cybersecurity, privacy, and clinical safety frameworks, reducing the need for entirely new systems. Collaboration is key - security, IT, clinical, legal, and vendor management teams must work together to make these efforts manageable. Tools like Censinet RiskOps™ can help streamline the process by simplifying AI risk assessments, keeping vendor profiles current, and fostering continuous collaboration to address vulnerabilities in PHI, clinical applications, and medical devices[5].

FAQs

What are the main security threats AI models face in healthcare?

AI models in healthcare face distinct security challenges that could compromise patient safety, privacy, and the reliability of systems. Among these threats is data poisoning, where attackers inject harmful data during training, effectively corrupting the AI's learning process. Another concern is adversarial attacks, where inputs are subtly altered to trick the AI into making incorrect or misleading predictions.

Additionally, there's the threat of model inversion, where hackers attempt to extract sensitive patient information from the AI system. Bias exploitation is another pressing issue, as it can result in unfair or skewed outcomes. Tackling these vulnerabilities is essential to preserve trust and ensure AI technologies are used safely and effectively in healthcare.

What steps can healthcare organizations take to ensure their AI systems meet HIPAA and HITECH requirements?

Healthcare organizations can maintain compliance with HIPAA and HITECH by conducting thorough security tests on their AI systems. This involves checking data privacy measures, ensuring encryption protocols are up to standard, and identifying any potential weaknesses.

To make this process smoother, organizations can use specialized risk management tools like Censinet RiskOps™. These platforms are designed to help manage risks tied to sensitive patient information, clinical applications, and medical devices. Keeping risk assessments up to date and staying aware of regulatory updates are also key steps in ensuring ongoing compliance.

What are the best practices for ongoing AI model security in healthcare?

To keep AI models secure in healthcare, it's crucial to stay ahead of potential risks. Start with regular risk assessments to pinpoint vulnerabilities that could compromise the system. Use real-time monitoring to keep an eye on the model's performance and quickly spot any anomalies. Make it a habit to run vulnerability scans and update security measures to tackle new threats as they emerge. Adding automated alerts for unusual activity can also provide a fast response to potential issues. These steps play a key role in protecting sensitive patient information and ensuring confidence in AI-driven healthcare solutions.